Best AI tools for Generating Synthetic Data

20 - AI tool Sites

Bifrost AI

Bifrost AI is a data generation engine designed for AI and robotics applications. It enables users to train and validate AI models faster by generating physically accurate synthetic datasets in 3D simulations, eliminating the need for real-world data. The platform offers pixel-perfect labels, scenario metadata, and a simulated 3D world to enhance AI understanding. Bifrost AI empowers users to create new scenarios and datasets rapidly, stress test AI perception, and improve model performance. It is built for teams at every stage of AI development, offering features like automated labeling, class imbalance correction, and performance enhancement.

Synthesis AI

Synthesis AI is a synthetic data platform that enables more capable and ethical computer vision AI. It provides on-demand labeled images and videos, photorealistic images, and 3D generative AI to help developers build better models faster. Synthesis AI's products include Synthesis Humans, which allows users to create detailed images and videos of digital humans with rich annotations; Synthesis Scenarios, which enables users to craft complex multi-human simulations across a variety of environments; and a range of applications for industries such as ID verification, automotive, avatar creation, virtual fashion, AI fitness, teleconferencing, visual effects, and security.

Syntho

Syntho is a self-service AI-generated synthetic data platform that offers a comprehensive solution for generating synthetic data for various purposes. It provides tools for de-identification, test data management, rule-based synthetic data generation, data masking, and more. With a focus on privacy and accuracy, Syntho enables users to create synthetic data that mirrors real production data while ensuring compliance with regulations and data privacy standards. The platform offers a range of features and use cases tailored to different industries, including healthcare, finance, and public organizations.

Gretel.ai

Gretel.ai is a synthetic data platform purpose-built for AI applications. It allows users to generate artificial, synthetic datasets with the same characteristics as real data, enabling the improvement of AI models without compromising privacy. The platform offers APIs for generating anonymized and safe synthetic data, training generative AI models, and validating models with quality and privacy scores. Users can deploy Gretel for enterprise use cases and run it on various cloud platforms or in their own environment.

MOSTLY AI Platform

MOSTLY AI offers a synthetic data generation platform that is available for free and that the vendor promotes as highly accurate. The website provides detailed information on synthetic data and data anonymization, and the platform includes a Python client for data generation. It is built around privacy and security, allowing users to create fully anonymous synthetic data from original data. It supports various AI/ML use cases, self-service analytics, testing and QA, and data sharing. The platform is designed for enterprise organizations, offering scalability, privacy by design, and what the vendor calls the world's most accurate synthetic data.

Incribo

Incribo is a company that provides synthetic data for training machine learning models. Synthetic data is artificially generated data that is designed to mimic real-world data. This data can be used to train machine learning models without the need for real-world data, which can be expensive and difficult to obtain. Incribo's synthetic data is high quality and affordable, making it a valuable resource for machine learning developers.
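
The idea that synthetic data "mimics" real data can be illustrated with a very small sketch. This is not Incribo's method or any vendor's API: it simply fits a Gaussian to one numeric column and samples new values from it. The `real_ages` values and the function names are made up for illustration.

```python
import random
import statistics

def fit_gaussian(values):
    """Estimate the mean and sample standard deviation of a numeric column."""
    return statistics.mean(values), statistics.stdev(values)

def sample_synthetic(values, n, seed=0):
    """Draw n synthetic values from a Gaussian fitted to the real column."""
    mu, sigma = fit_gaussian(values)
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    return [rng.gauss(mu, sigma) for _ in range(n)]

# Hypothetical "real" measurements, invented for this example only.
real_ages = [23, 35, 41, 29, 52, 38, 47, 31]
synthetic_ages = sample_synthetic(real_ages, n=1000)

# The synthetic column should have a similar mean to the real one.
print(round(statistics.mean(real_ages), 1), round(statistics.mean(synthetic_ages), 1))
```

Production tools model joint distributions across many columns and add privacy safeguards; a single fitted Gaussian only conveys the basic principle.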

Gretel.ai

Gretel.ai is an AI tool that helps users incorporate generative AI into their data by generating synthetic data that is as good as or better than the existing data. Users can fine-tune custom AI models and use Gretel's APIs to generate unlimited synthesized datasets, perform privacy-preserving transformations on sensitive data, and identify PII with advanced NLP detection. Gretel's APIs make it simple to generate anonymized and safe synthetic data, allowing users to innovate faster and preserve privacy while doing it. Gretel's platform includes Synthetics, Transform, and Classify APIs that provide users with a complete set of tools to create safe data. Gretel also offers a range of resources, including documentation, tutorials, GitHub projects, and open-source SDKs for developers. Gretel Cloud runners allow users to keep data contained by running Gretel containers in their environment or scaling out workloads to the cloud in seconds. Overall, Gretel.ai is a powerful AI tool for generating synthetic data that can help users unlock innovation and achieve more with safe access to the right data.

Marvin

Marvin is a lightweight toolkit for building natural language interfaces that are reliable, scalable, and easy to trust. It provides a variety of AI functions for text, images, audio, and video, as well as interactive tools and utilities. Marvin is designed to be easy to use and integrate, and it can be used to build a wide range of applications, from simple chatbots to complex AI-powered systems.

DeepEval

DeepEval by Confident AI is a comprehensive LLM Evaluation Framework used by leading AI companies. It enables users to build reliable evaluation pipelines to test any AI system. With 50+ research-backed metrics, native multi-modal support, and auto-optimization of prompts, DeepEval offers a sophisticated evaluation ecosystem for AI applications. The framework covers unit-testing for LLMs, single and multi-turn evaluations, generation & simulation of test data, and state-of-the-art evaluation techniques like G-Eval and DAG. DeepEval is integrated with Pytest and supports various system architectures, making it a versatile tool for AI testing.

RagaAI Catalyst

RagaAI Catalyst is a sophisticated AI observability, monitoring, and evaluation platform designed to help users observe, evaluate, and debug AI agents at all stages of Agentic AI workflows. It offers features like visualizing trace data, instrumenting and monitoring tools and agents, enhancing AI performance, agentic testing, comprehensive trace logging, evaluation for each step of the agent, enterprise-grade experiment management, secure and reliable LLM outputs, finetuning with human feedback integration, defining custom evaluation logic, generating synthetic data, and optimizing LLM testing with speed and precision. The platform is trusted by AI leaders globally and provides a comprehensive suite of tools for AI developers and enterprises.

QuarkIQL

QuarkIQL is a generative testing tool for computer vision APIs. It allows users to create custom test images and requests with just a few clicks. QuarkIQL also keeps a log of queries, so users can run more experiments without starting from scratch.

OpinioAI

OpinioAI is an AI-powered market research tool that allows users to gain business critical insights from data without the need for costly polls, surveys, or interviews. With OpinioAI, users can create AI personas and market segments to understand customer preferences, affinities, and opinions. The platform democratizes research by providing efficient, effective, and budget-friendly solutions for businesses, students, and individuals seeking valuable insights. OpinioAI leverages Large Language Models to simulate humans and extract opinions in detail, enabling users to analyze existing data, synthesize new insights, and evaluate content from the perspective of their target audience.

Datagen

Datagen is a platform that provides synthetic data for computer vision. Synthetic data is artificially generated data that can be used to train machine learning models. Datagen's data is generated using a variety of techniques, including 3D modeling, computer graphics, and machine learning. The company's data is used by a variety of industries, including automotive, security, smart office, fitness, cosmetics, and facial applications.

Rendered.ai

Rendered.ai is a platform that provides unlimited synthetic data for AI and ML applications, specifically focusing on computer vision. It helps in generating low-cost physically-accurate data to overcome bias and power innovation in AI and ML. The platform allows users to capture rare events and edge cases, acquire data that is difficult to obtain, overcome data labeling challenges, and simulate restricted or high-risk scenarios. Rendered.ai aims to revolutionize the use of synthetic data in AI and data analytics projects, with a vision that by 2030, synthetic data will surpass real data in AI models.

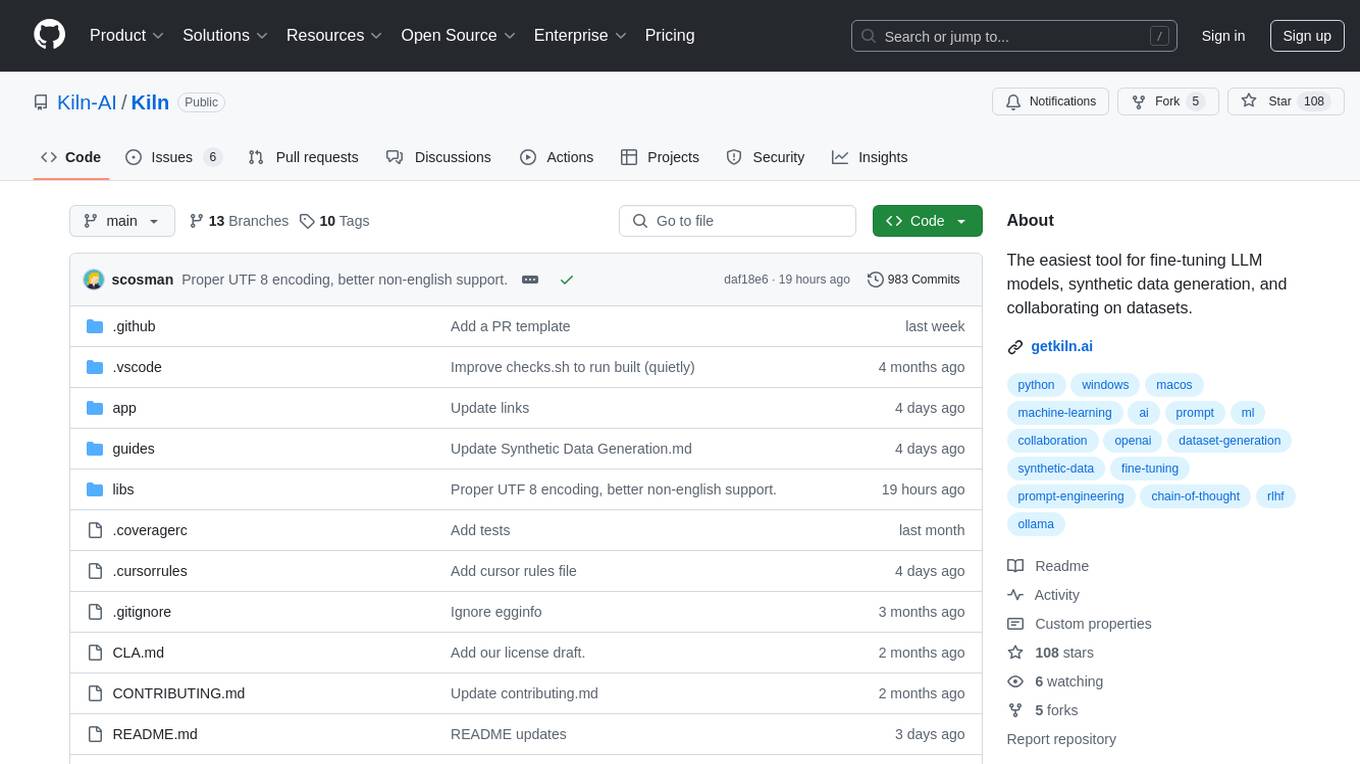

Kiln

Kiln is an AI tool designed for fine-tuning LLM models, generating synthetic data, and facilitating collaboration on datasets. It offers intuitive desktop apps, zero-code fine-tuning for various models, interactive visual tools for data generation, Git-based version control for datasets, and the ability to generate various prompts from data. Kiln supports a wide range of models and providers, provides an open-source library and API, prioritizes privacy, and allows structured data tasks in JSON format. The tool is free to use and focuses on rapid AI prototyping and dataset collaboration.

STELLARWITS

STELLARWITS is an AI solutions and software platform that empowers users to explore cutting-edge technology and innovation. The platform offers AI models with versatile capabilities, ranging from content generation to data analysis to problem-solving. Users can engage directly with the technology, experiencing its power in real-time. With a focus on transforming ideas into technology, STELLARWITS provides tailored solutions in software and AI development, delivering intelligent systems and machine learning models for innovative and efficient solutions. The platform also features a download hub with a curated selection of solutions to enhance the digital experience. Through blogs and company information, users can delve deeper into the narrative of STELLARWITS, exploring its mission, vision, and commitment to reshaping the tech landscape.

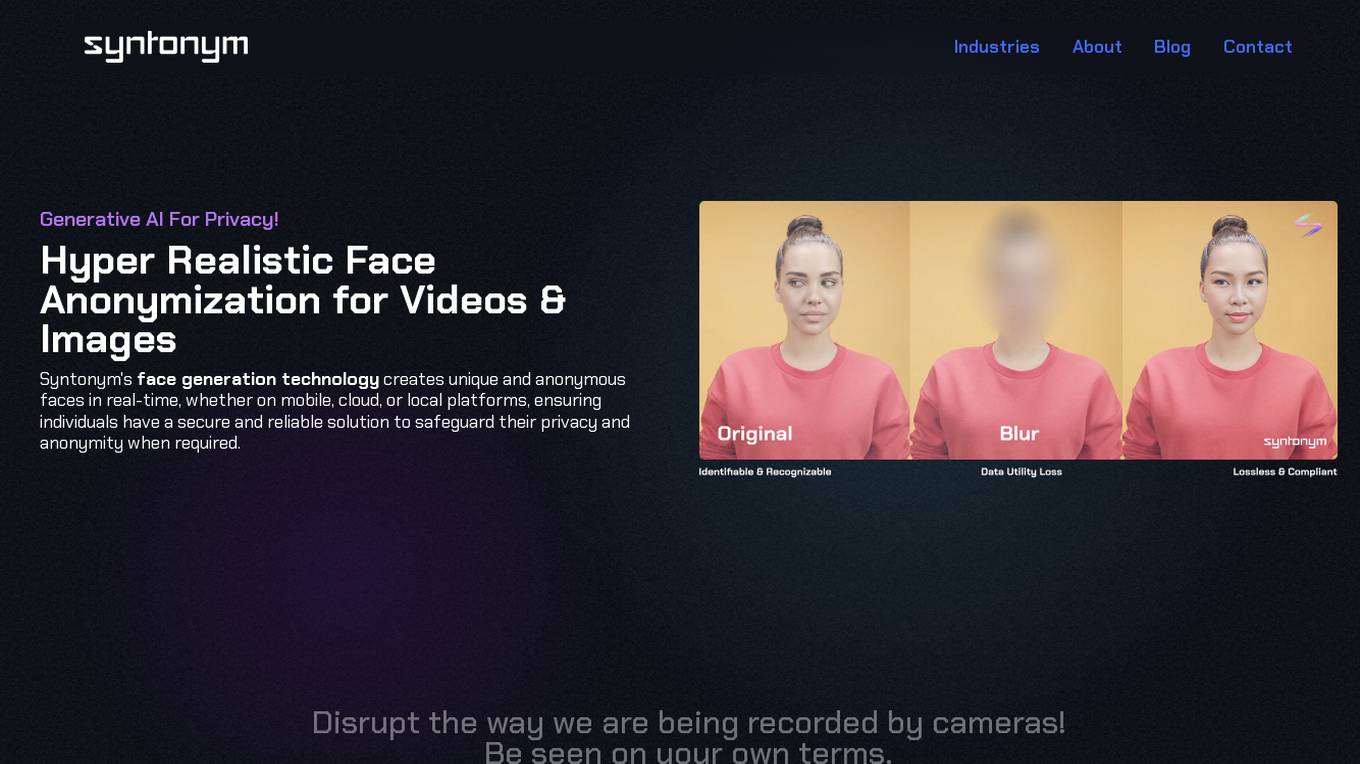

Syntonym

Syntonym is a generative AI tool focused on privacy, specifically offering hyper-realistic face anonymization for videos and images. The technology creates unique and anonymous faces in real-time, ensuring individuals have a secure and reliable solution to safeguard their privacy and anonymity. Syntonym disrupts the way individuals are recorded by cameras, allowing them to be seen on their own terms and integrating privacy layers into video platforms for interactive communication. The tool removes biometrics to unlock unique video processing potential with real-time, lossless anonymization in compliance with privacy regulations.

Tensoic AI

Tensoic AI is an AI tool designed for custom Large Language Models (LLMs) fine-tuning and inference. It offers ultra-fast fine-tuning and inference capabilities for enterprise-grade LLMs, with a focus on use case-specific tasks. The tool is efficient, cost-effective, and easy to use, enabling users to outperform general-purpose LLMs using synthetic data. Tensoic AI generates small, powerful models that can run on consumer-grade hardware, making it ideal for a wide range of applications.

This Beach Does Not Exist

This Beach Does Not Exist is an AI application powered by StyleGAN2-ADA network, capable of generating realistic beach images. The website showcases AI-generated beach landscapes created from a dataset of approximately 20,000 images. Users can explore the training progress of the network, generate random images, utilize K-Means Clustering for image grouping, and download the network for experimentation or retraining purposes. Detailed technical information about the network architecture, dataset, training steps, and metrics is provided. The application is based on the GAN architecture developed by NVIDIA Labs and offers a unique experience of creating virtual beach scenes through AI technology.

Blackshark.ai

Blackshark.ai is an AI-based platform that generates an accurate, semantic, photorealistic 3D digital twin of the entire planet in real time. The platform extracts insights about the planet's infrastructure from satellite and aerial imagery via machine learning at a global scale. It uses AI to fill in missing attributes and provide a photorealistic, geo-typical, or asset-specific digital twin. The results can be used for visualization, simulation, mapping, mixed reality environments, and other enterprise solutions. The platform offers end-to-end geospatial solutions, including globe data input sources, no-code data labeling, geointelligence at scale, a 3D semantic map, and synthetic environments.

23 - Open Source AI Tools

qgate-model

QGate-Model is a machine learning meta-model with synthetic data, designed for MLOps and feature store. It is independent of machine learning solutions, with definitions in JSON and data in CSV/parquet formats. This meta-model is useful for comparing capabilities and functions of machine learning solutions, independently testing new versions of machine learning solutions, and conducting various types of tests (unit, sanity, smoke, system, regression, function, acceptance, performance, shadow, etc.). It can also be used for external test coverage when internal test coverage is not available or weak.

llm-datasets

LLM Datasets is a repository containing high-quality datasets, tools, and concepts for LLM fine-tuning. It provides datasets with characteristics like accuracy, diversity, and complexity to train large language models for various tasks. The repository includes datasets for general-purpose, math & logic, code, conversation & role-play, and agent & function calling domains. It also offers guidance on creating high-quality datasets through data deduplication, data quality assessment, data exploration, and data generation techniques.

LLM4IR-Survey

LLM4IR-Survey is a collection of papers related to large language models for information retrieval, organized according to the survey paper 'Large Language Models for Information Retrieval: A Survey'. It covers various aspects such as query rewriting, retrievers, rerankers, readers, search agents, and more, providing insights into the integration of large language models with information retrieval systems.

Main

This repository contains material related to the new book _Synthetic Data and Generative AI_ by the author, including code for NoGAN, DeepResampling, and NoGAN_Hellinger. NoGAN is a tabular data synthesizer that outperforms GenAI methods in terms of speed and results, utilizing state-of-the-art quality metrics. DeepResampling is a fast NoGAN based on resampling and Bayesian Models with hyperparameter auto-tuning. NoGAN_Hellinger combines NoGAN and DeepResampling with the Hellinger model evaluation metric.

continuous-eval

Open-Source Evaluation for LLM Applications. `continuous-eval` is an open-source package created for granular and holistic evaluation of GenAI application pipelines. It offers modularized evaluation, a comprehensive metric library covering various LLM use cases, the ability to leverage user feedback in evaluation, and synthetic dataset generation for testing pipelines. Users can define their own metrics by extending the Metric class. The tool allows running evaluation on a pipeline defined with modules and corresponding metrics. Additionally, it provides synthetic data generation capabilities to create user interaction data for evaluation or training purposes.

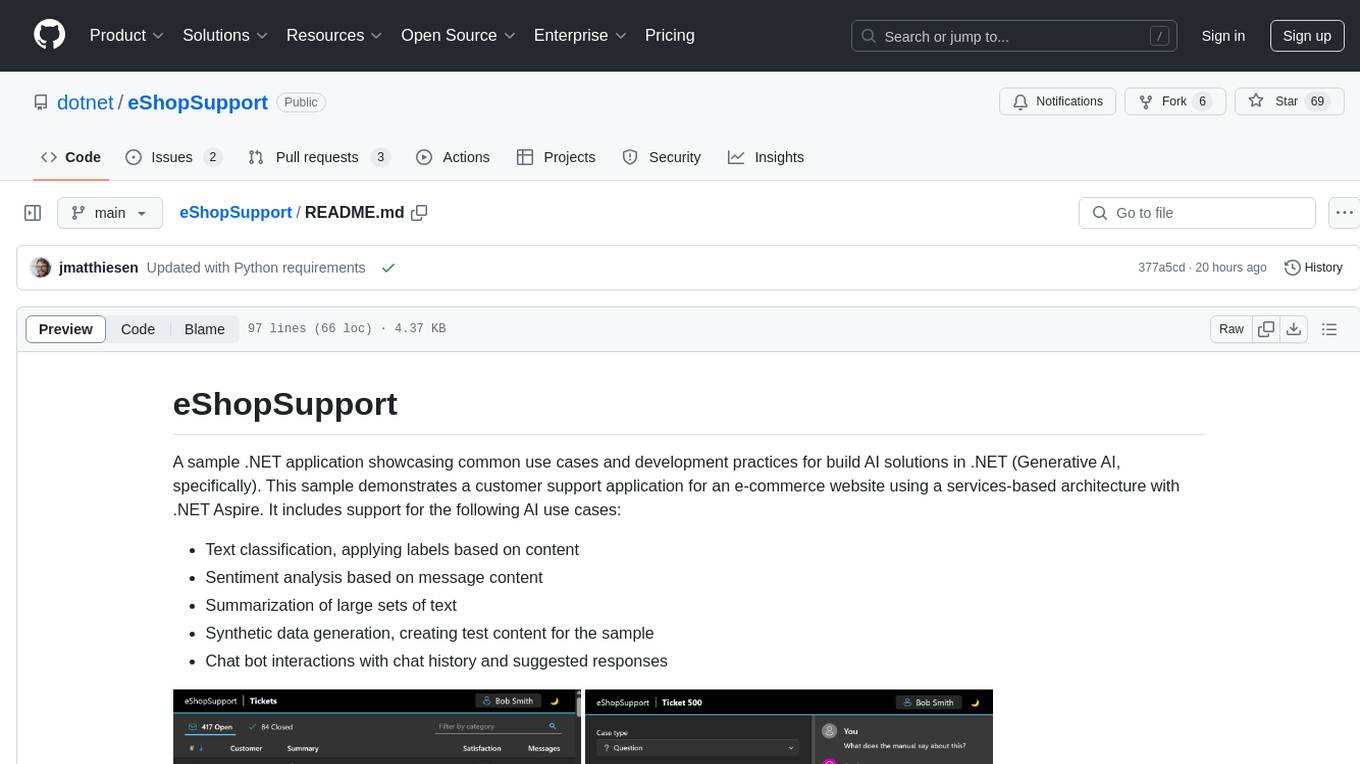

eShopSupport

eShopSupport is a sample .NET application showcasing common use cases and development practices for building AI solutions in .NET, specifically Generative AI. It demonstrates a customer support application for an e-commerce website using a services-based architecture with .NET Aspire. The application includes support for text classification, sentiment analysis, text summarization, synthetic data generation, and chat bot interactions. It also showcases development practices such as developing solutions locally, evaluating AI responses, leveraging Python projects, and deploying applications to the Cloud.

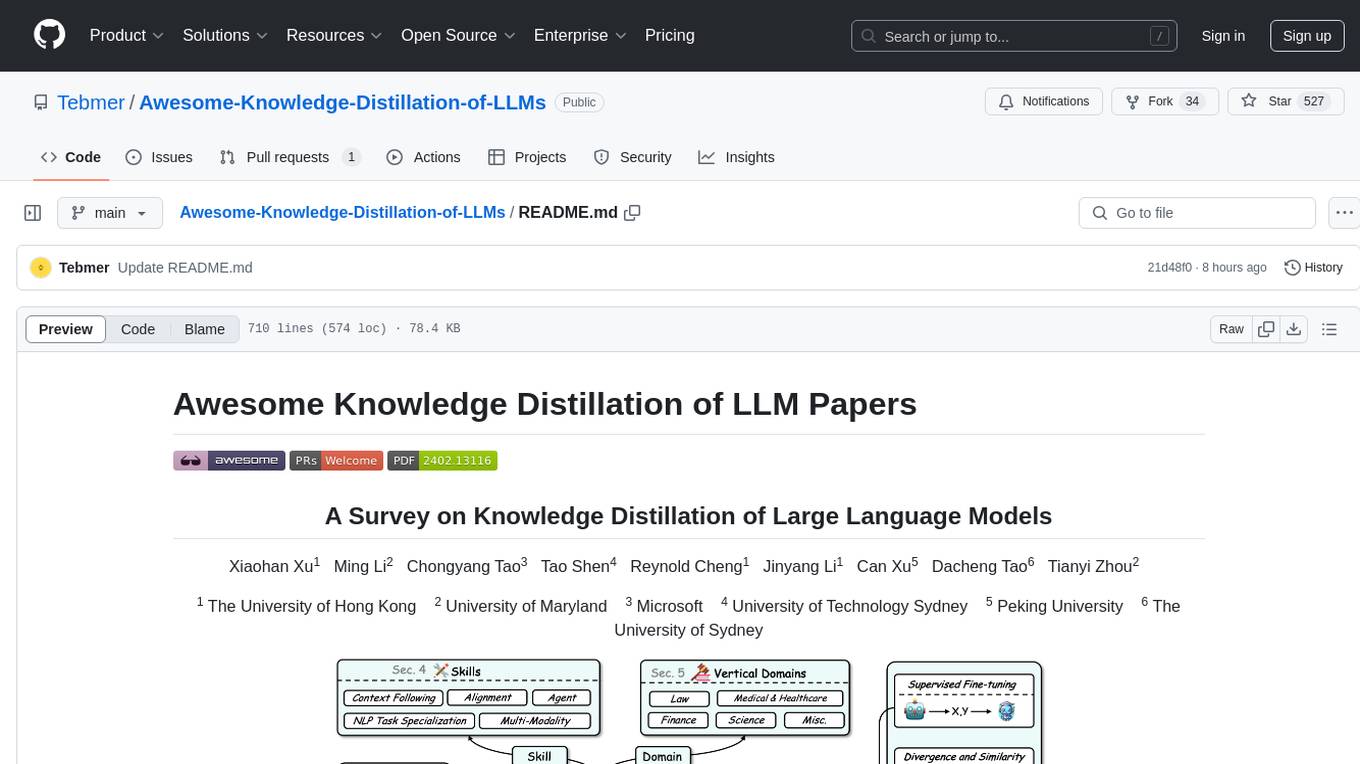

Awesome-Knowledge-Distillation-of-LLMs

A collection of papers related to knowledge distillation of large language models (LLMs). The repository focuses on techniques to transfer advanced capabilities from proprietary LLMs to smaller models, compress open-source LLMs, and refine their performance. It covers various aspects of knowledge distillation, including algorithms, skill distillation, verticalization distillation in fields like law, medical & healthcare, finance, science, and miscellaneous domains. The repository provides a comprehensive overview of the research in the area of knowledge distillation of LLMs.

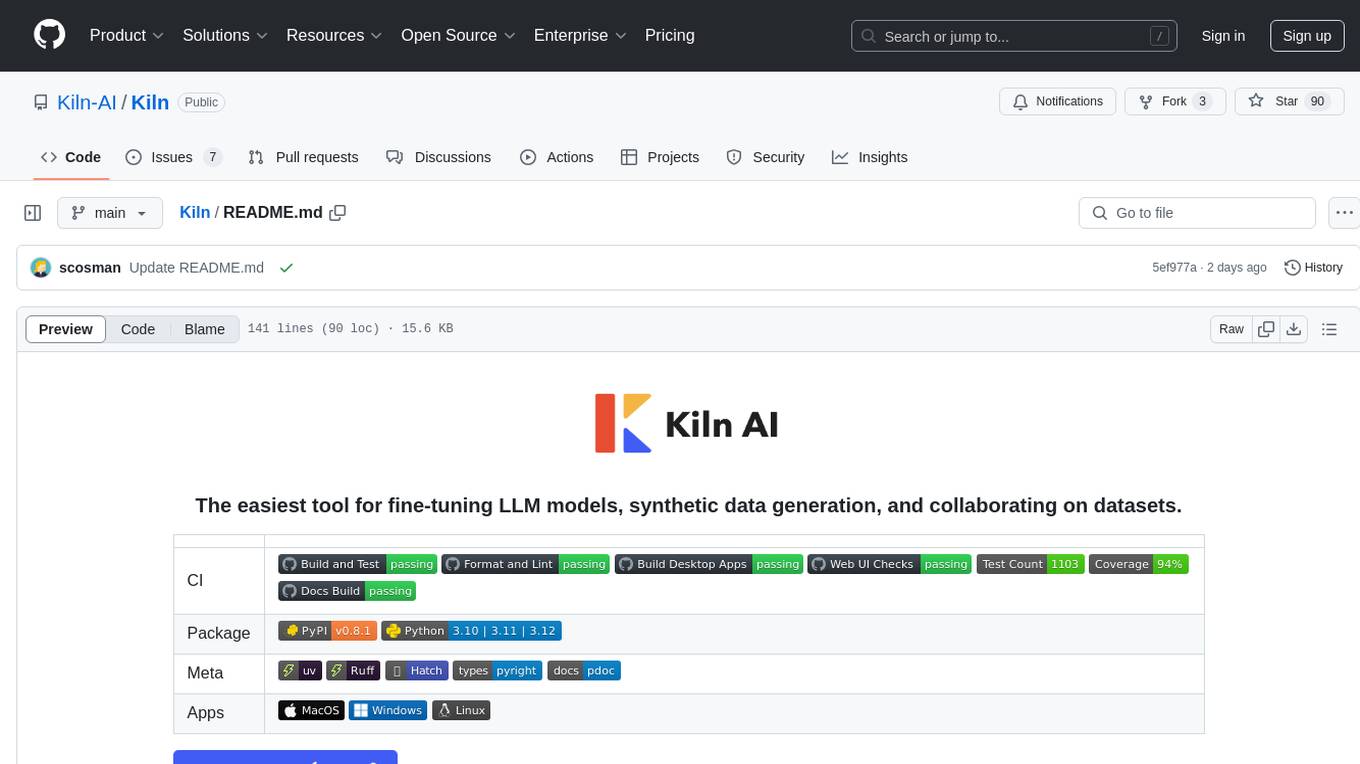

Kiln

Kiln is an intuitive tool for fine-tuning LLM models, generating synthetic data, and collaborating on datasets. It offers desktop apps for Windows, MacOS, and Linux, zero-code fine-tuning for various models, interactive data generation, and Git-based version control. Users can easily collaborate with QA, PM, and subject matter experts, generate auto-prompts, and work with a wide range of models and providers. The tool is open-source, privacy-first, and supports structured data tasks in JSON format. Kiln is free to use and helps build high-quality AI products with datasets, facilitates collaboration between technical and non-technical teams, allows comparison of models and techniques without code, ensures structured data integrity, and prioritizes user privacy.

LLM-Synthetic-Data

LLM-Synthetic-Data is a repository focused on real-time, fine-grained LLM-Synthetic-Data generation. It includes methods, surveys, and application areas related to synthetic data for language models. The repository covers topics like pre-training, instruction tuning, model collapse, LLM benchmarking, evaluation, and distillation. It also explores application areas such as mathematical reasoning, code generation, text-to-SQL, alignment, reward modeling, long context, weak-to-strong generalization, agent and tool use, vision and language, factuality, federated learning, generative design, and safety.

TinyTroupe

TinyTroupe is an experimental Python library that leverages Large Language Models (LLMs) to simulate artificial agents called TinyPersons with specific personalities, interests, and goals in simulated environments. The focus is on understanding human behavior through convincing interactions and customizable personas for various applications like advertisement evaluation, software testing, data generation, project management, and brainstorming. The tool aims to enhance human imagination and provide insights for better decision-making in business and productivity scenarios.

promptwright

Promptwright is a Python library designed for generating large synthetic datasets using a local LLM and various LLM service providers. It offers flexible interfaces for generating prompt-led synthetic datasets. The library supports multiple providers, configurable instructions and prompts, YAML configuration for tasks, command line interface for running tasks, push to Hugging Face Hub for dataset upload, and system message control. Users can define generation tasks using YAML configuration or Python code. Promptwright integrates with LiteLLM to interface with LLM providers and supports automatic dataset upload to Hugging Face Hub.

curator

Bespoke Curator is an open-source tool for data curation and structured data extraction. It provides a Python library for generating synthetic data at scale, with features like programmability, performance optimization, caching, and integration with HuggingFace Datasets. The tool includes a Curator Viewer for dataset visualization and offers a rich set of functionalities for creating and refining data generation strategies.

RagaAI-Catalyst

RagaAI Catalyst is a comprehensive platform designed to enhance the management and optimization of LLM projects. It offers features such as project management, dataset management, evaluation management, trace management, prompt management, synthetic data generation, and guardrail management. These functionalities enable efficient evaluation and safeguarding of LLM applications.

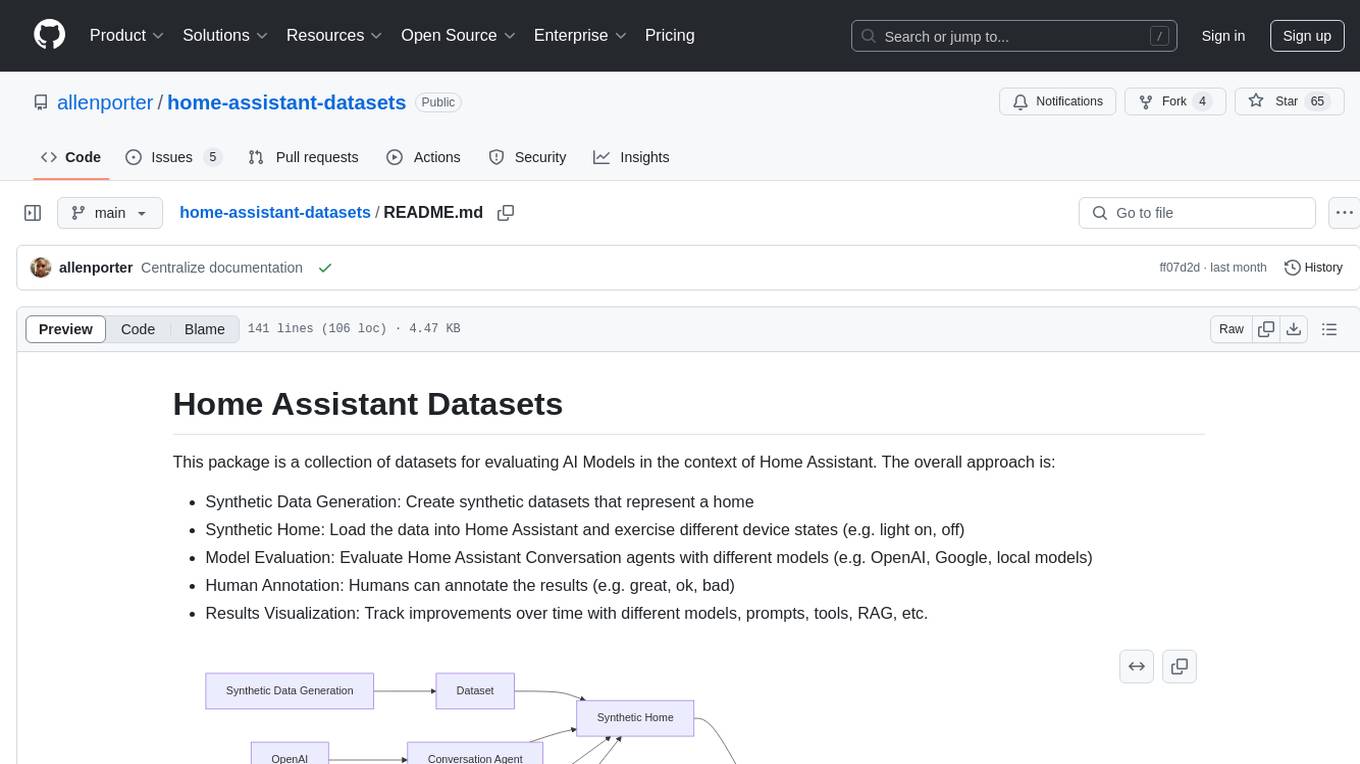

home-assistant-datasets

This package provides a collection of datasets for evaluating AI Models in the context of Home Assistant. It includes synthetic data generation, loading data into Home Assistant, model evaluation with different conversation agents, human annotation of results, and visualization of improvements over time. The datasets cover home descriptions, area descriptions, device descriptions, and summaries that can be performed on a home. The tool aims to build datasets for future training purposes.

promptwright

Promptwright is a Python library designed for generating large synthetic datasets using local LLM and various LLM service providers. It offers flexible interfaces for generating prompt-led synthetic datasets. The library supports multiple providers, configurable instructions and prompts, YAML configuration, command line interface, push to Hugging Face Hub, and system message control. Users can define generation tasks using YAML configuration files or programmatically using Python code. Promptwright integrates with LiteLLM for LLM providers and supports automatic dataset upload to Hugging Face Hub. The library is not responsible for the content generated by models and advises users to review the data before using it in production environments.

dlio_benchmark

DLIO is an I/O benchmark tool designed for Deep Learning applications. It emulates modern deep learning applications using Benchmark Runner, Data Generator, Format Handler, and I/O Profiler modules. Users can configure various I/O patterns, data loaders, data formats, datasets, and parameters. The tool is aimed at emulating the I/O behavior of deep learning applications and provides a modular design for flexibility and customization.

ArcticTraining

ArcticTraining is a framework designed to simplify and accelerate the post-training process for large language models (LLMs). It offers modular trainer designs, simplified code structures, and integrated pipelines for creating and cleaning synthetic data, enabling users to enhance LLM capabilities like code generation and complex reasoning with greater efficiency and flexibility.

mem-kk-logic

This repository provides a PyTorch implementation of the paper 'On Memorization of Large Language Models in Logical Reasoning'. The work investigates memorization of Large Language Models (LLMs) in reasoning tasks, proposing a memorization metric and a logical reasoning benchmark based on Knights and Knaves puzzles. It shows that LLMs heavily rely on memorization to solve training puzzles but also improve generalization performance through fine-tuning. The repository includes code, data, and tools for evaluation, fine-tuning, probing model internals, and sample classification.
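
For readers unfamiliar with Knights and Knaves puzzles, the following sketch shows how one can be checked by brute force. It is not code from the repository: the `solve` function and the two-person puzzle are illustrative assumptions. Knights always tell the truth and knaves always lie, so a statement must be true exactly when its speaker is a knight.

```python
from itertools import product

def solve(names, statements):
    """Return every knight/knave assignment consistent with all statements.

    `statements` maps a speaker to a function that takes an assignment
    (name -> True if knight, False if knave) and returns whether the
    speaker's claim is true under that assignment.
    """
    solutions = []
    for values in product([True, False], repeat=len(names)):
        world = dict(zip(names, values))
        # A knight's claim must be true; a knave's claim must be false.
        if all(claim(world) == world[speaker]
               for speaker, claim in statements.items()):
            solutions.append(world)
    return solutions

# Hypothetical puzzle, invented for this example.
puzzle = {
    "A": lambda w: not w["B"],         # A says: "B is a knave."
    "B": lambda w: w["A"] and w["B"],  # B says: "We are both knights."
}
solutions = solve(["A", "B"], puzzle)
print(solutions)  # [{'A': True, 'B': False}]
```

Because the search enumerates all 2^n assignments, it is only practical for small puzzles, which is enough to verify a model's answer on benchmark-sized instances.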

stochastic-rs

stochastic-rs is a high-performance Rust library for simulating and analyzing stochastic processes. It is designed for applications in quantitative finance, AI training, and statistical modeling, providing efficient tools to generate synthetic data and analyze complex stochastic systems. The library is actively developed and welcomes contributions such as bug reports, feature suggestions, and documentation improvements. It is licensed under the MIT License.
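
As an illustration of what simulating a stochastic process to produce synthetic data looks like, here is a minimal Python sketch of geometric Brownian motion. It does not use or mirror the stochastic-rs API; the function name and the parameter values are assumptions chosen for the example.

```python
import math
import random

def simulate_gbm(s0, mu, sigma, steps, dt, seed=0):
    """Simulate one geometric Brownian motion path with `steps` increments."""
    rng = random.Random(seed)  # seeded for reproducibility
    path = [s0]
    for _ in range(steps):
        z = rng.gauss(0.0, 1.0)  # standard normal shock
        # Exact log-normal update of GBM over one time step of length dt.
        growth = math.exp((mu - 0.5 * sigma**2) * dt + sigma * math.sqrt(dt) * z)
        path.append(path[-1] * growth)
    return path

# One hypothetical year of daily prices: 5% drift, 20% volatility.
prices = simulate_gbm(s0=100.0, mu=0.05, sigma=0.2, steps=252, dt=1 / 252)
print(len(prices), round(prices[0], 2))  # 253 100.0
```

Varying the seed yields as many independent synthetic price paths as needed, for example to stress test a pricing model.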

eval-assist

EvalAssist is an LLM-as-a-Judge framework built on top of the Unitxt open source evaluation library for large language models. It provides users with a convenient way of iteratively testing and refining LLM-as-a-judge criteria, supporting both direct (rubric-based) and pairwise assessment paradigms. EvalAssist is model-agnostic, supporting a rich set of off-the-shelf judge models that can be extended. Users can auto-generate a Notebook with Unitxt code to run bulk evaluations and save their own test cases. The tool is designed for evaluating text data using language models.

GraphGen

GraphGen is a framework for synthetic data generation guided by knowledge graphs. It enhances supervised fine-tuning for large language models (LLMs) by generating synthetic data based on a fine-grained knowledge graph. The tool identifies knowledge gaps in LLMs, prioritizes generating QA pairs targeting high-value knowledge, incorporates multi-hop neighborhood sampling, and employs style-controlled generation to diversify QA data. Users can use LLaMA-Factory and xtuner for fine-tuning LLMs after data generation.
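
The description does not say how GraphGen implements multi-hop neighborhood sampling, so the following is only a generic sketch of the idea: collect every node within k hops of a seed entity using breadth-first search. The function name and the toy graph are assumptions made for this example.

```python
from collections import deque

def k_hop_neighborhood(graph, start, k):
    """Return {node: hop distance} for all nodes within k hops of `start`."""
    distances = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if distances[node] == k:
            continue  # do not expand beyond k hops
        for neighbor in graph.get(node, []):
            if neighbor not in distances:
                distances[neighbor] = distances[node] + 1
                queue.append(neighbor)
    return distances

# Toy undirected knowledge graph stored as adjacency lists.
toy_graph = {
    "Paris": ["France", "Seine"],
    "France": ["Paris", "Europe"],
    "Seine": ["Paris"],
    "Europe": ["France"],
}
print(k_hop_neighborhood(toy_graph, "Paris", 1))
# {'Paris': 0, 'France': 1, 'Seine': 1}
```

A QA generator could then prompt an LLM with the facts in the sampled subgraph, so that questions for k greater than 1 require combining several linked facts.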

sdg_hub

sdg_hub is a modular Python framework designed for building synthetic data generation pipelines using composable blocks and flows. Users can mix and match LLM-powered and traditional processing blocks to create sophisticated data generation workflows. The toolkit offers features such as modular composability, async performance, built-in validation, auto-discovery, rich monitoring, dataset schema discovery, and easy extensibility. sdg_hub provides detailed documentation and supports high-throughput processing with error handling. It simplifies the process of transforming datasets by allowing users to chain blocks together in YAML-configured flows, enabling the creation of complex data generation pipelines.

PINNeAPPle

Pinneaple is an open-source Python platform that unifies physical data, geometry, numerical solvers, and machine learning models for Physics-Informed AI. It emphasizes physical consistency, scalability, auditability, and interoperability with CFD/CAD/scientific data formats. It provides tools for unified physical dataset representation, data pipelines, geometry and mesh operations, a model zoo, physics loss factory, solvers, synthetic data generation, training and evaluation. The platform is designed for physics AI researchers, CFD/FEA/climate ML teams, industrial R&D groups, scientific ML practitioners, and anyone building surrogates, inverse models, or hybrid solvers.

20 - OpenAI GPTs

Synthetic Work (Re)Search Assistant

Search data on the impact of AI on jobs, productivity and operations published by Synthetic Work (https://synthetic.work)

Chemistry Companion

Professional chemistry assistant, SMILES/SMARTS-supported molecule and reaction diagrams, and more!

PANˈDÔRƏ

Pandora is a Posthuman Prompt Engineer powered by the MANNS engine. Surpass human creative limitations by synthesizing diverse knowledge, advanced pattern recognition, and algorithmic creativity.

Angular Architect AI: Generate Angular Components

Generates Angular components based on requirements, with a focus on code-first responses.

🖌️ Line to Image: Generate The Evolved Prompt!

Transforms lines into detailed prompts for visual storytelling.

Generate text imperceptible to detectors.

Discover how your writing can shine with a unique and human style. This prompt guides you to create rich and varied texts, surprising with original twists and maintaining coherence and originality. Transform your writing and challenge AI detection tools!