Awesome-Knowledge-Distillation-of-LLMs

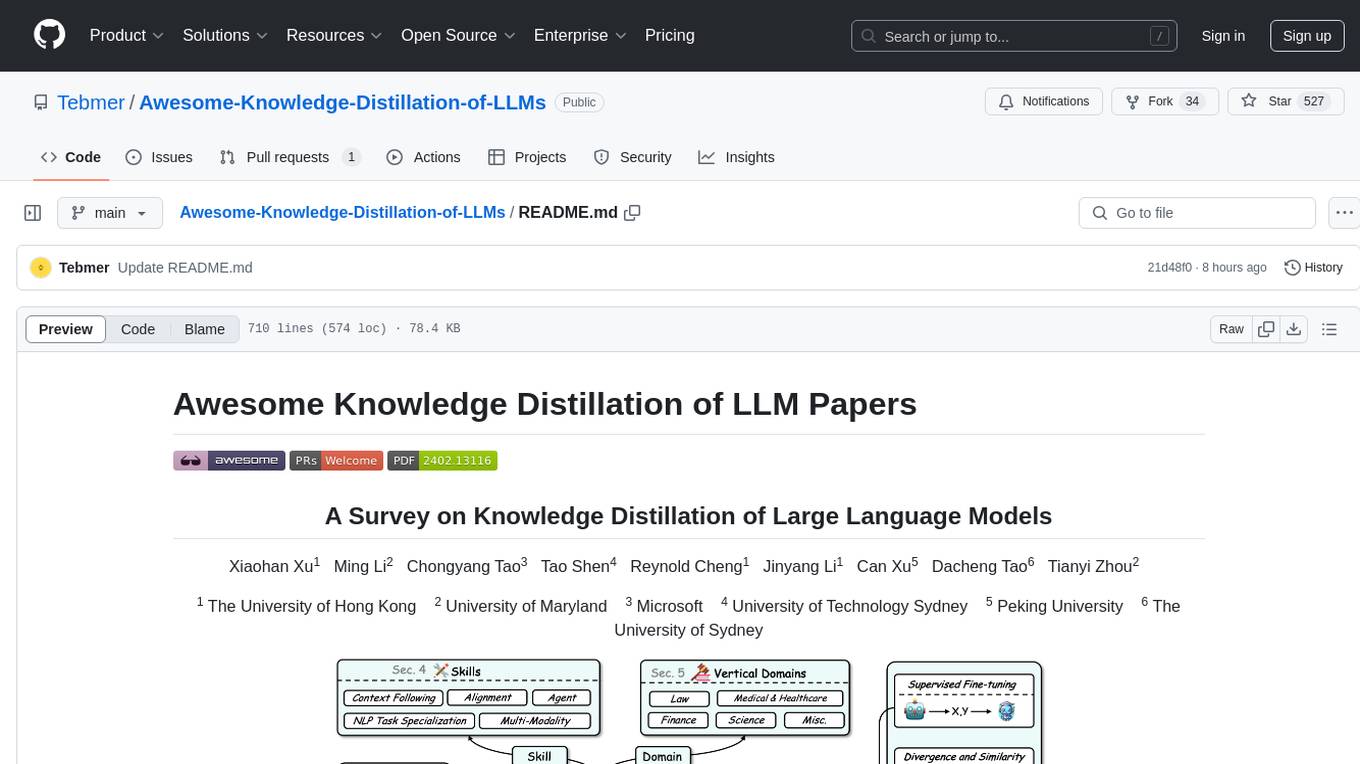

This repository collects papers for "A Survey on Knowledge Distillation of Large Language Models". We break down KD into Knowledge Elicitation and Distillation Algorithms, and explore the Skill & Vertical Distillation of LLMs.

Stars: 890

A collection of papers related to knowledge distillation of large language models (LLMs). The repository focuses on techniques to transfer advanced capabilities from proprietary LLMs to smaller models, compress open-source LLMs, and refine their performance. It covers various aspects of knowledge distillation, including algorithms, skill distillation, verticalization distillation in fields like law, medical & healthcare, finance, science, and miscellaneous domains. The repository provides a comprehensive overview of the research in the area of knowledge distillation of LLMs.

README:

Xiaohan Xu1   Ming Li2   Chongyang Tao3   Tao Shen4   Reynold Cheng1   Jinyang Li1   Can Xu5   Dacheng Tao6   Tianyi Zhou2

1 The University of Hong Kong    2 University of Maryland    3 Microsoft    4 University of Technology Sydney    5 Peking University    6 The University of Sydney

A collection of papers related to knowledge distillation of large language models (LLMs). If you want to use LLMs for benefitting your own smaller models training, or use self-generated knowledge to achieve the self-improvement, just take a look at this collection.

We will update this collection every week. Welcome to star ⭐️ this repo to keep track of the updates.

❗️Legal Consideration: It's crucial to note the legal implications of utilizing LLM outputs, such as those from ChatGPT (Restrictions), Llama (License), etc. We strongly advise users to adhere to the terms of use specified by the model providers, such as the restrictions on developing competitive products, and so on.

-

2024-2-20: 📃 We released a survey paper "A Survey on Knowledge Distillation of Large Language Models". Welcome to read and cite it. We are looking forward to your feedback and suggestions.

-

Update Log

- 2024-3-19: Add 14 papers.

Feel free to open an issue/PR or e-mail [email protected], [email protected], [email protected] and [email protected] if you find any missing taxonomies or papers. We will keep updating this collection and survey.

KD of LLMs: This survey delves into knowledge distillation (KD) techniques in Large Language Models (LLMs), highlighting KD's crucial role in transferring advanced capabilities from proprietary LLMs like GPT-4 to open-source counterparts such as LLaMA and Mistral. We also explore how KD enables the compression and self-improvement of open-source LLMs by using them as teachers.

KD and Data Augmentation: Crucially, the survey navigates the intricate interplay between data augmentation (DA) and KD, illustrating how DA emerges as a powerful paradigm within the KD framework to bolster LLMs' performance. By leveraging DA to generate context-rich, skill-specific training data, KD transcends traditional boundaries, enabling open-source models to approximate the contextual adeptness, ethical alignment, and deep semantic insights characteristic of their proprietary counterparts.

Taxonomy: Our analysis is meticulously structured around three foundational pillars: algorithm, skill, and verticalization -- providing a comprehensive examination of KD mechanisms, the enhancement of specific cognitive abilities, and their practical implications across diverse fields.

KD Algorithms: For KD algorithms, we categorize it into two principal steps: "Knowledge Elicitation" focusing on eliciting knowledge from teacher LLMs, and "Distillation Algorithms" centered on injecting this knowledge into student models.

Skill Distillation: We delve into the enhancement of specific cognitive abilities, such as context following, alignment, agent, NLP task specialization, and multi-modality.

Verticalization Distillation: We explore the practical implications of KD across diverse fields, including law, medical & healthcare, finance, science, and miscellaneous domains.

Note that both Skill Distillation and Verticalization Distillation employ Knowledge Elicitation and Distillation Algorithms in KD Algorithms to achieve their KD. Thus, there are overlaps between them. However, this could also provide different perspectives for the papers.

In the era of LLMs, KD of LLMs plays the following crucial roles:

| Role | Description | Trend |

|---|---|---|

| ① Advancing SLMs | Transferring advanced capabilities from proprietary LLMs to smaller SLMs, such as open source LLMs or other smaller models. | Most common |

| ② Compression | Compressing LLMs to make them more efficient and practical. | More popular with the prosperity of open-source LLMs |

| ③ Self-Improvement | Refining open-source LLMs' performance by leveraging their own knowledge, i.e. self-knowledge. | New trend to make open-source LLMs more competitive |

Due to the large number of works applying supervised fine-tuning, we only list the most representative ones here.

| Title | Venue | Date | Code | Data |

|---|---|---|---|---|

| Evidence-Focused Fact Summarization for Knowledge-Augmented Zero-Shot Question Answering | arXiv | 2024-03 | ||

| KnowTuning: Knowledge-aware Fine-tuning for Large Language Models | arXiv | 2024-02 | Github | |

| Self-Rewarding Language Models | arXiv | 2024-01 | Github | |

| Self-Play Fine-Tuning Converts Weak Language Models to Strong Language Models | arXiv | 2024-01 | Github | Data |

| Zephyr: Direct Distillation of Language Model Alignment | arXiv | 2023-10 | Github | Data |

| CycleAlign: Iterative Distillation from Black-box LLM to White-box Models for Better Human Alignment | arXiv | 2023-10 |

| Title | Venue | Date | Code | Data |

|---|---|---|---|---|

| Zephyr: Direct Distillation of LM Alignment | arXiv | 2023-10 | Github | Data |

| OPENCHAT: ADVANCING OPEN-SOURCE LANGUAGE MODELS WITH MIXED-QUALITY DATA | ICLR | 2023-09 | Github | Data |

| Enhancing Chat Language Models by Scaling High-quality Instructional Conversations | arXiv | 2023-05 | Github | Data |

| Baize: An Open-Source Chat Model with Parameter-Efficient Tuning on Self-Chat Data | EMNLP | 2023-04 | Github | Data |

| Vicuna: An Open-Source Chatbot Impressing GPT-4 with 90% ChatGPT Quality* | - | 2023-03 | Github | Data |

| Title | Venue | Date | Code | Data |

|---|---|---|---|---|

| Self-RAG: Learning to Retrieve, Generate, and Critique through Self-Reflection | NIPS | 2023-10 | Github | Data |

| SAIL: Search-Augmented Instruction Learning | arXiv | 2023-05 | Github | Data |

| Knowledge-Augmented Reasoning Distillation for Small Language Models in Knowledge-Intensive Tasks | NIPS | 2023-05 | Github | Data |

| Title | Venue | Date | Code | Data |

|---|---|---|---|---|

| Aligning Large and Small Language Models via Chain-of-Thought Reasoning | EACL | 2024-03 | Github | |

| Divide-or-Conquer? Which Part Should You Distill Your LLM? | arXiv | 2024-02 | ||

| Selective Reflection-Tuning: Student-Selected Data Recycling for LLM Instruction-Tuning | arXiv | 2024-02 | Github | Data |

| Can LLMs Speak For Diverse People? Tuning LLMs via Debate to Generate Controllable Controversial Statements | arXiv | 2024-02 | Github | Data |

| Knowledgeable Preference Alignment for LLMs in Domain-specific Question Answering | arXiv | 2023-11 | Github | |

| Orca 2: Teaching Small Language Models How to Reason | arXiv | 2023-11 | ||

| Reflection-Tuning: Data Recycling Improves LLM Instruction-Tuning | NIPS Workshop | 2023-10 | Github | Data |

| Orca: Progressive Learning from Complex Explanation Traces of GPT-4 | arXiv | 2023-06 | ||

| SelFee: Iterative Self-Revising LLM Empowered by Self-Feedback Generation | arXiv | 2023-05 |

| Title | Venue | Date | Code | Data |

|---|---|---|---|---|

| Ultrafeedback: Boosting language models with high-quality feedback | arXiv | 2023-10 | Github | Data |

| Zephyr: Direct Distillation of LM Alignment | arXiv | 2023-10 | Github | Data |

| Rlaif: Scaling Reinforcement Learning from Human Feedback with AI Feedback | arXiv | 2023-09 | ||

| OPENCHAT: ADVANCING OPEN-SOURCE LANGUAGE MODELS WITH MIXED-QUALITY DATA | ICLR | 2023-09 | Github | Data |

| RLCD: Reinforcement Learning from Contrast Distillation for Language Model Alignment | arXiv | 2023-07 | Github | |

| Aligning Large Language Models through Synthetic Feedbacks | EMNLP | 2023-05 | Github | Data |

| Reward Design with Language Models | ICLR | 2023-03 | Github | |

| Training Language Models with Language Feedback at Scale | arXiv | 2023-03 | ||

| Constitutional AI: Harmlessness from AI Feedback | arXiv | 2022-12 |

| Title | Venue | Date | Code | Data |

|---|---|---|---|---|

| Ultrafeedback: Boosting language models with high-quality feedback | arXiv | 2023-10 | Github | Data |

| RLCD: Reinforcement Learning from Contrast Distillation for Language Model Alignment | arXiv | 2023-07 | Github | |

| Principle-Driven Self-Alignment of Language Models from Scratch with Minimal Human Supervision | NeurIPS | 2023-05 | Github | Data |

| Training Socially Aligned Language Models on Simulated Social Interactions | arXiv | 2023-05 | ||

| Constitutional AI: Harmlessness from AI Feedback | arXiv | 2022-12 |

| Title | Venue | Date | Code | Data |

|---|---|---|---|---|

| Tailoring Self-Rationalizers with Multi-Reward Distillation | arXiv | 2023-11 | Github | Data |

| RECOMP: Improving Retrieval-Augmented LMs with Compression and Selective Augmentation | arXiv | 2023-10 | Github | |

| Neural Machine Translation Data Generation and Augmentation using ChatGPT | arXiv | 2023-07 | ||

| On-Policy Distillation of Language Models: Learning from Self-Generated Mistakes | ICLR | 2023-06 | ||

| Can LLMs generate high-quality synthetic note-oriented doctor-patient conversations? | arXiv | 2023-06 | Github | Data |

| InheritSumm: A General, Versatile and Compact Summarizer by Distilling from GPT | EMNLP | 2023-05 | ||

| Impossible Distillation: from Low-Quality Model to High-Quality Dataset & Model for Summarization and Paraphrasing | arXiv | 2023-05 | Github | |

| Data Augmentation for Radiology Report Simplification | Findings of EACL | 2023-04 | Github | |

| Want To Reduce Labeling Cost? GPT-3 Can Help | Findings of EMNLP | 2021-08 |

| Title | Venue | Date | Code | Data |

|---|---|---|---|---|

| Can Small Language Models be Good Reasoners for Sequential Recommendation? | arXiv | 2024-03 | ||

| Large Language Model Augmented Narrative Driven Recommendations | arXiv | 2023-06 | ||

| Recommendation as Instruction Following: A Large Language Model Empowered Recommendation Approach | arXiv | 2023-05 | ||

| ONCE: Boosting Content-based Recommendation with Both Open- and Closed-source Large Language Models | WSDM | 2023-05 | Github | Data |

| Title | Venue | Date | Code | Data |

|---|---|---|---|---|

| Prometheus: Inducing Fine-grained Evaluation Capability in Language Models | ICLR | 2023-10 | Github | Data |

| TIGERScore: Towards Building Explainable Metric for All Text Generation Tasks | arXiv | 2023-10 | Github | Data |

| Generative Judge for Evaluating Alignment | ICLR | 2023-10 | Github | Data |

| PandaLM: An Automatic Evaluation Benchmark for LLM Instruction Tuning Optimization | arXiv | 2023-06 | Github | Data |

| INSTRUCTSCORE: Explainable Text Generation Evaluation with Fine-grained Feedback | EMNLP | 2023-05 | Github | Data |

| Title | Venue | Date | Code | Data |

|---|---|---|---|---|

| Magicoder: Source Code Is All You Need | arXiv | 2023-12 | Github |

Data Data |

| WaveCoder: Widespread And Versatile Enhanced Instruction Tuning with Refined Data Generation | arXiv | 2023-12 | ||

| Instruction Fusion: Advancing Prompt Evolution through Hybridization | arXiv | 2023-12 | ||

| MFTCoder: Boosting Code LLMs with Multitask Fine-Tuning | arXiv | 2023-11 | Github |

Data Data |

| LLM-Assisted Code Cleaning For Training Accurate Code Generators | arXiv | 2023-11 | ||

| Personalised Distillation: Empowering Open-Sourced LLMs with Adaptive Learning for Code Generation | EMNLP | 2023-10 | Github | |

| Code Llama: Open Foundation Models for Code | arXiv | 2023-08 | Github | |

| Distilled GPT for Source Code Summarization | arXiv | 2023-08 | Github | Data |

| Textbooks Are All You Need: A Large-Scale Instructional Text Data Set for Language Models | arXiv | 2023-06 | ||

| Code Alpaca: An Instruction-following LLaMA model for code generation | - | 2023-03 | Github | Data |

| Title | Venue | Date | Code | Data |

|---|---|---|---|---|

| Fuzi | - | 2023-08 | Github | |

| ChatLaw: Open-Source Legal Large Language Model with Integrated External Knowledge Bases | arXiv | 2023-06 | Github | |

| Lawyer LLaMA Technical Report | arXiv | 2023-05 | Github | Data |

| Title | Venue | Date | Code | Data |

|---|---|---|---|---|

| XuanYuan 2.0: A Large Chinese Financial Chat Model with Hundreds of Billions Parameters | CIKM | 2023-05 |

| Title | Venue | Date | Code | Data |

|---|---|---|---|---|

| OWL: A Large Language Model for IT Operations | arXiv | 2023-09 | Github | Data |

| EduChat: A Large-Scale Language Model-based Chatbot System for Intelligent Education | arXiv | 2023-08 | Github | Data |

Note: Our survey mainly focuses on generative LLMs, and thus the encoder-based KD is not included in the survey. However, we are also interested in this topic and continue to update the latest works in this area.

| Title | Venue | Date | Code | Data |

|---|---|---|---|---|

| Masked Latent Semantic Modeling: an Efficient Pre-training Alternative to Masked Language Modeling | Findings of ACL | 2023-08 | ||

| Better Together: Jointly Using Masked Latent Semantic Modeling and Masked Language Modeling for Sample Efficient Pre-training | CoNLL | 2023-08 |

- [ ] Add works about O1-like distillation. Stay tuned!

If you find this repository helpful, please consider citing the following paper:

@misc{xu2024survey,

title={A Survey on Knowledge Distillation of Large Language Models},

author={Xiaohan Xu and Ming Li and Chongyang Tao and Tao Shen and Reynold Cheng and Jinyang Li and Can Xu and Dacheng Tao and Tianyi Zhou},

year={2024},

eprint={2402.13116},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Awesome-Knowledge-Distillation-of-LLMs

Similar Open Source Tools

Awesome-Knowledge-Distillation-of-LLMs

A collection of papers related to knowledge distillation of large language models (LLMs). The repository focuses on techniques to transfer advanced capabilities from proprietary LLMs to smaller models, compress open-source LLMs, and refine their performance. It covers various aspects of knowledge distillation, including algorithms, skill distillation, verticalization distillation in fields like law, medical & healthcare, finance, science, and miscellaneous domains. The repository provides a comprehensive overview of the research in the area of knowledge distillation of LLMs.

Awesome-LLMs-for-Video-Understanding

Awesome-LLMs-for-Video-Understanding is a repository dedicated to exploring Video Understanding with Large Language Models. It provides a comprehensive survey of the field, covering models, pretraining, instruction tuning, and hybrid methods. The repository also includes information on tasks, datasets, and benchmarks related to video understanding. Contributors are encouraged to add new papers, projects, and materials to enhance the repository.

Awesome-Interpretability-in-Large-Language-Models

This repository is a collection of resources focused on interpretability in large language models (LLMs). It aims to help beginners get started in the area and keep researchers updated on the latest progress. It includes libraries, blogs, tutorials, forums, tools, programs, papers, and more related to interpretability in LLMs.

Awesome-Model-Merging-Methods-Theories-Applications

A comprehensive repository focusing on 'Model Merging in LLMs, MLLMs, and Beyond', providing an exhaustive overview of model merging methods, theories, applications, and future research directions. The repository covers various advanced methods, applications in foundation models, different machine learning subfields, and tasks like pre-merging methods, architecture transformation, weight alignment, basic merging methods, and more.

Awesome-Jailbreak-on-LLMs

Awesome-Jailbreak-on-LLMs is a collection of state-of-the-art, novel, and exciting jailbreak methods on Large Language Models (LLMs). The repository contains papers, codes, datasets, evaluations, and analyses related to jailbreak attacks on LLMs. It serves as a comprehensive resource for researchers and practitioners interested in exploring various jailbreak techniques and defenses in the context of LLMs. Contributions such as additional jailbreak-related content, pull requests, and issue reports are welcome, and contributors are acknowledged. For any inquiries or issues, contact [email protected]. If you find this repository useful for your research or work, consider starring it to show appreciation.

MOSS-TTS

MOSS-TTS Family is an open-source speech and sound generation model family designed for high-fidelity, high-expressiveness, and complex real-world scenarios. It includes five production-ready models: MOSS-TTS, MOSS-TTSD, MOSS-VoiceGenerator, MOSS-TTS-Realtime, and MOSS-SoundEffect, each serving specific purposes in speech generation, dialogue, voice design, real-time interactions, and sound effect generation. The models offer features like long-speech generation, fine-grained control over phonemes and duration, multilingual synthesis, voice cloning, and real-time voice agents.

DataFlow

DataFlow is a data preparation and training system designed to parse, generate, process, and evaluate high-quality data from noisy sources, improving the performance of large language models in specific domains. It constructs diverse operators and pipelines, validated to enhance domain-oriented LLM's performance in fields like healthcare, finance, and law. DataFlow also features an intelligent DataFlow-agent capable of dynamically assembling new pipelines by recombining existing operators on demand.

Awesome-Tabular-LLMs

This repository is a collection of papers on Tabular Large Language Models (LLMs) specialized for processing tabular data. It includes surveys, models, and applications related to table understanding tasks such as Table Question Answering, Table-to-Text, Text-to-SQL, and more. The repository categorizes the papers based on key ideas and provides insights into the advancements in using LLMs for processing diverse tables and fulfilling various tabular tasks based on natural language instructions.

MMOS

MMOS (Mix of Minimal Optimal Sets) is a dataset designed for math reasoning tasks, offering higher performance and lower construction costs. It includes various models and data subsets for tasks like arithmetic reasoning and math word problem solving. The dataset is used to identify minimal optimal sets through reasoning paths and statistical analysis, with a focus on QA-pairs generated from open-source datasets. MMOS also provides an auto problem generator for testing model robustness and scripts for training and inference.

VoiceBench

VoiceBench is a repository containing code and data for benchmarking LLM-Based Voice Assistants. It includes a leaderboard with rankings of various voice assistant models based on different evaluation metrics. The repository provides setup instructions, datasets, evaluation procedures, and a curated list of awesome voice assistants. Users can submit new voice assistant results through the issue tracker for updates on the ranking list.

Awesome-LLM-Constrained-Decoding

Awesome-LLM-Constrained-Decoding is a curated list of papers, code, and resources related to constrained decoding of Large Language Models (LLMs). The repository aims to facilitate reliable, controllable, and efficient generation with LLMs by providing a comprehensive collection of materials in this domain.

AI-Competition-Collections

AI-Competition-Collections is a repository that collects and curates various experiences and tips from AI competitions. It includes posts on competition experiences in computer vision, NLP, speech, and other AI-related fields. The repository aims to provide valuable insights and techniques for individuals participating in AI competitions, covering topics such as image classification, object detection, OCR, adversarial attacks, and more.

ZhiLight

ZhiLight is a highly optimized large language model (LLM) inference engine developed by Zhihu and ModelBest Inc. It accelerates the inference of models like Llama and its variants, especially on PCIe-based GPUs. ZhiLight offers significant performance advantages compared to mainstream open-source inference engines. It supports various features such as custom defined tensor and unified global memory management, optimized fused kernels, support for dynamic batch, flash attention prefill, prefix cache, and different quantization techniques like INT8, SmoothQuant, FP8, AWQ, and GPTQ. ZhiLight is compatible with OpenAI interface and provides high performance on mainstream NVIDIA GPUs with different model sizes and precisions.

CogVLM2

CogVLM2 is a new generation of open source models that offer significant improvements in benchmarks such as TextVQA and DocVQA. It supports 8K content length, image resolution up to 1344 * 1344, and both Chinese and English languages. The project provides basic calling methods, fine-tuning examples, and OpenAI API format calling examples to help developers quickly get started with the model.

END-TO-END-GENERATIVE-AI-PROJECTS

The 'END TO END GENERATIVE AI PROJECTS' repository is a collection of awesome industry projects utilizing Large Language Models (LLM) for various tasks such as chat applications with PDFs, image to speech generation, video transcribing and summarizing, resume tracking, text to SQL conversion, invoice extraction, medical chatbot, financial stock analysis, and more. The projects showcase the deployment of LLM models like Google Gemini Pro, HuggingFace Models, OpenAI GPT, and technologies such as Langchain, Streamlit, LLaMA2, LLaMAindex, and more. The repository aims to provide end-to-end solutions for different AI applications.

Prompt-Engineering-Holy-Grail

The Prompt Engineering Holy Grail repository is a curated resource for prompt engineering enthusiasts, providing essential resources, tools, templates, and best practices to support learning and working in prompt engineering. It covers a wide range of topics related to prompt engineering, from beginner fundamentals to advanced techniques, and includes sections on learning resources, online courses, books, prompt generation tools, prompt management platforms, prompt testing and experimentation, prompt crafting libraries, prompt libraries and datasets, prompt engineering communities, freelance and job opportunities, contributing guidelines, code of conduct, support for the project, and contact information.

For similar tasks

Awesome-Knowledge-Distillation-of-LLMs

A collection of papers related to knowledge distillation of large language models (LLMs). The repository focuses on techniques to transfer advanced capabilities from proprietary LLMs to smaller models, compress open-source LLMs, and refine their performance. It covers various aspects of knowledge distillation, including algorithms, skill distillation, verticalization distillation in fields like law, medical & healthcare, finance, science, and miscellaneous domains. The repository provides a comprehensive overview of the research in the area of knowledge distillation of LLMs.

rlhf_thinking_model

This repository is a collection of research notes and resources focusing on training large language models (LLMs) and Reinforcement Learning from Human Feedback (RLHF). It includes methodologies, techniques, and state-of-the-art approaches for optimizing preferences and model alignment in LLM training. The purpose is to serve as a reference for researchers and engineers interested in reinforcement learning, large language models, model alignment, and alternative RL-based methods.

aimet

AIMET is a library that provides advanced model quantization and compression techniques for trained neural network models. It provides features that have been proven to improve run-time performance of deep learning neural network models with lower compute and memory requirements and minimal impact to task accuracy. AIMET is designed to work with PyTorch, TensorFlow and ONNX models. We also host the AIMET Model Zoo - a collection of popular neural network models optimized for 8-bit inference. We also provide recipes for users to quantize floating point models using AIMET.

hqq

HQQ is a fast and accurate model quantizer that skips the need for calibration data. It's super simple to implement (just a few lines of code for the optimizer). It can crunch through quantizing the Llama2-70B model in only 4 minutes! 🚀

llm-resource

llm-resource is a comprehensive collection of high-quality resources for Large Language Models (LLM). It covers various aspects of LLM including algorithms, training, fine-tuning, alignment, inference, data engineering, compression, evaluation, prompt engineering, AI frameworks, AI basics, AI infrastructure, AI compilers, LLM application development, LLM operations, AI systems, and practical implementations. The repository aims to gather and share valuable resources related to LLM for the community to benefit from.

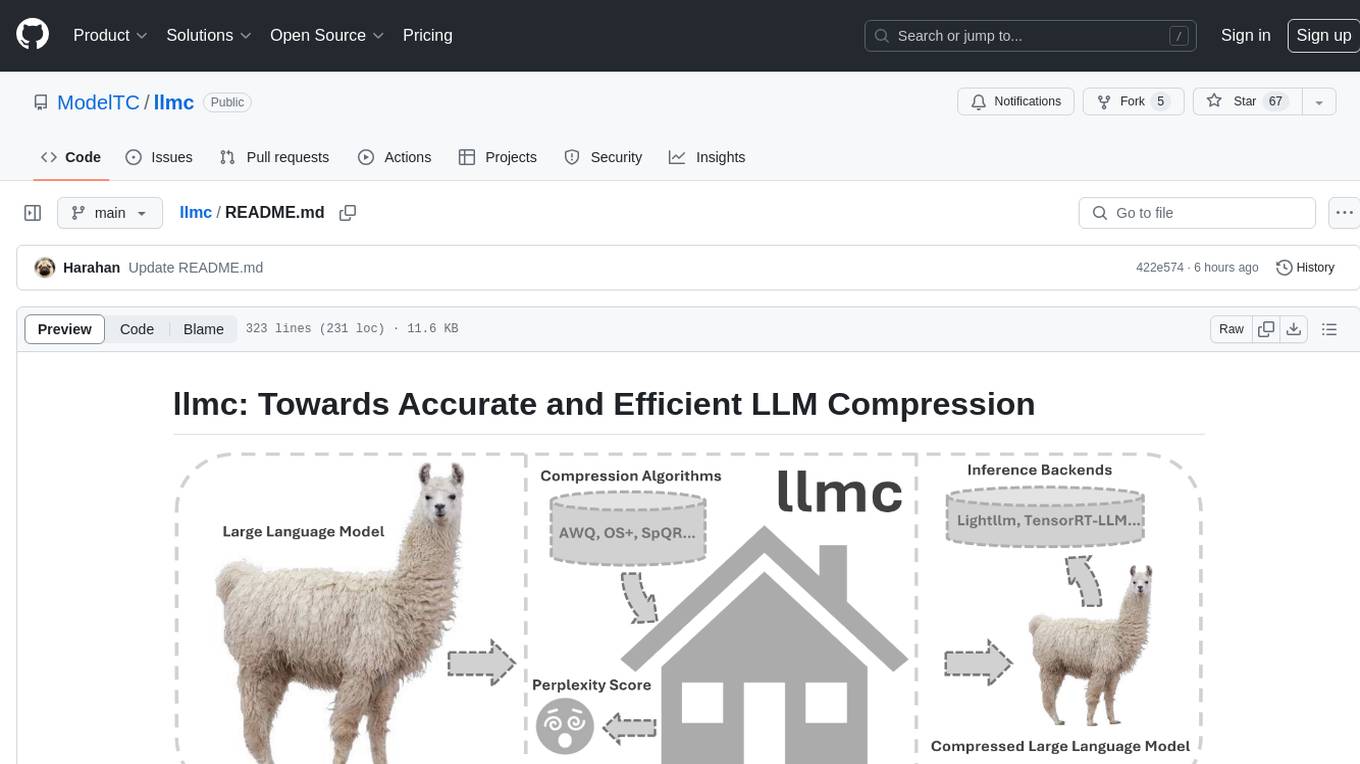

llmc

llmc is an off-the-shell tool designed for compressing LLM, leveraging state-of-the-art compression algorithms to enhance efficiency and reduce model size without compromising performance. It provides users with the ability to quantize LLMs, choose from various compression algorithms, export transformed models for further optimization, and directly infer compressed models with a shallow memory footprint. The tool supports a range of model types and quantization algorithms, with ongoing development to include pruning techniques. Users can design their configurations for quantization and evaluation, with documentation and examples planned for future updates. llmc is a valuable resource for researchers working on post-training quantization of large language models.

Awesome-Efficient-LLM

Awesome-Efficient-LLM is a curated list focusing on efficient large language models. It includes topics such as knowledge distillation, network pruning, quantization, inference acceleration, efficient MOE, efficient architecture of LLM, KV cache compression, text compression, low-rank decomposition, hardware/system, tuning, and survey. The repository provides a collection of papers and projects related to improving the efficiency of large language models through various techniques like sparsity, quantization, and compression.

TensorRT-Model-Optimizer

The NVIDIA TensorRT Model Optimizer is a library designed to quantize and compress deep learning models for optimized inference on GPUs. It offers state-of-the-art model optimization techniques including quantization and sparsity to reduce inference costs for generative AI models. Users can easily stack different optimization techniques to produce quantized checkpoints from torch or ONNX models. The quantized checkpoints are ready for deployment in inference frameworks like TensorRT-LLM or TensorRT, with planned integrations for NVIDIA NeMo and Megatron-LM. The tool also supports 8-bit quantization with Stable Diffusion for enterprise users on NVIDIA NIM. Model Optimizer is available for free on NVIDIA PyPI, and this repository serves as a platform for sharing examples, GPU-optimized recipes, and collecting community feedback.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.