GPULlama3.java

GPU-accelerated Llama3.java inference in pure Java using TornadoVM.

Stars: 164

GPULlama3.java powered by TornadoVM is a Java-native implementation of Llama3 that automatically compiles and executes Java code on GPUs via TornadoVM. It supports Llama3, Mistral, Qwen2.5, Qwen3, and Phi3 models in the GGUF format. The repository aims to provide GPU acceleration for Java code, enabling faster execution and high-performance access to off-heap memory. It offers features like interactive and instruction modes, flexible backend switching between OpenCL and PTX, and cross-platform compatibility with NVIDIA, Intel, and Apple GPUs.

README:


Llama3 models written in native Java automatically accelerated on GPUs with TornadoVM.

Runs Llama3 inference efficiently using TornadoVM's GPU acceleration.

Currently supports Llama3, Mistral, Qwen2.5, Qwen3, and Phi3 models in the GGUF format. Builds on Llama3.java by Alfonso² Peterssen. The previous integration of TornadoVM and Llama2 can be found in llama2.tornadovm.

[Interactive mode] Running on an RTX 5090, with nvtop at the bottom tracking GPU utilization and memory usage.

We are at the early stages of Java entering the AI world with features added to the JVM that enable faster execution such as GPU acceleration, Vector acceleration, high-performance access to off-heap memory and others.

This repository provides the first Java-native implementation of Llama3 that automatically compiles and executes Java code on GPUs via TornadoVM.

The baseline numbers presented below provide a solid starting point for achieving more competitive performance compared to llama.cpp or native CUDA implementations.

Our roadmap provides the upcoming set of features that will dramatically improve the numbers below with the clear target being to achieve performance parity with the fastest implementations.

If you collect additional performance data points (e.g., on new hardware or platforms), please let us know so we can add them below.

In addition, if you are interested in learning more about the challenges of managed programming languages and GPU acceleration, you can read our book or consult the TornadoVM educational pages.

| Vendor / Backend | Hardware | Llama-3.2-1B-Instruct (FP16) | Llama-3.2-3B-Instruct (FP16) | Optimizations Support |
|---|---|---|---|---|
| NVIDIA / OpenCL-PTX | RTX 3070 | 52 tokens/s | 22.96 tokens/s | ✅ |
| | RTX 4090 | 66.07 tokens/s | 35.51 tokens/s | ✅ |
| | RTX 5090 | 96.65 tokens/s | 47.68 tokens/s | ✅ |
| | L4 Tensor | 52.96 tokens/s | 22.68 tokens/s | ✅ |
| Intel / OpenCL | Arc A770 | 15.65 tokens/s | 7.02 tokens/s | (WIP) |
| Apple Silicon / OpenCL | M3 Pro | 14.04 tokens/s | 6.78 tokens/s | (WIP) |
| | M4 Pro | 16.77 tokens/s | 8.56 tokens/s | (WIP) |
| AMD / OpenCL | Radeon RX | (WIP) | (WIP) | (WIP) |
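To put the table in practical terms, the following minimal sketch converts a throughput figure into an approximate generation time and compares two devices. The figures are copied from the Llama-3.2-1B-Instruct column above; the helper names are hypothetical, and the estimate assumes a steady decode rate, ignoring model loading, TornadoVM initialization, and prompt processing.

```python
# Throughput figures (tokens/s) copied from the Llama-3.2-1B-Instruct FP16 column above.
TOKENS_PER_SECOND_1B = {
    "RTX 3070": 52.0,
    "RTX 4090": 66.07,
    "RTX 5090": 96.65,
    "L4 Tensor": 52.96,
    "Arc A770": 15.65,
    "M3 Pro": 14.04,
    "M4 Pro": 16.77,
}


def seconds_to_generate(tokens_per_second: float, n_tokens: int = 512) -> float:
    """Approximate time to generate n_tokens at a steady decode rate.

    512 matches the default --max-tokens value of llama-tornado.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return n_tokens / tokens_per_second


def relative_speedup(fast_device: str, slow_device: str) -> float:
    """Ratio of the two devices' 1B throughput figures."""
    return TOKENS_PER_SECOND_1B[fast_device] / TOKENS_PER_SECOND_1B[slow_device]


if __name__ == "__main__":
    for device, rate in TOKENS_PER_SECOND_1B.items():
        print(f"{device}: ~{seconds_to_generate(rate):.1f} s for 512 tokens")
    print(f"RTX 5090 vs M4 Pro: {relative_speedup('RTX 5090', 'M4 Pro'):.2f}x")
```

These are back-of-the-envelope numbers only; measured end-to-end latency will be higher because of startup and prompt evaluation.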

TornadoVM currently runs on Apple Silicon via OpenCL, which has been officially deprecated since macOS 10.14.

Despite being deprecated, OpenCL still runs on Apple Silicon, albeit with older drivers that do not support all TornadoVM optimizations. Performance is therefore not optimal, since TornadoVM does not yet have a Metal backend (it currently has OpenCL, PTX, and SPIR-V backends). For the time being, we recommend using Apple Silicon for development and OpenCL/PTX-compatible NVIDIA GPUs for performance testing, until a Metal backend is added to TornadoVM and optimized.

Ensure you have the following installed and configured:

- Java 21: Required for Vector API support & TornadoVM.

- TornadoVM with OpenCL or PTX backends.

- Maven: For building the Java project.
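A quick way to check the prerequisites above before building is sketched below. It only looks for `java` and `mvn` on the PATH and parses a `java -version` style string; the function names are hypothetical, and the `TORNADO_SDK` environment variable is an assumption about what TornadoVM's setvars script exports, so adjust it if your installation differs.

```python
import os
import re
import shutil


def missing_tools(tools=("java", "mvn")):
    """Return the entries of `tools` that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


def java_major_version(version_output: str):
    """Extract the major version from `java -version` style output.

    Returns None when no version string is found. Legacy "1.8"-style
    versions yield 1, which is fine here because only 21 is of interest.
    """
    match = re.search(r'version "(\d+)', version_output)
    return int(match.group(1)) if match else None


if __name__ == "__main__":
    print("Missing tools:", missing_tools() or "none")
    # Assumption: TORNADO_SDK is exported after sourcing TornadoVM's setvars script.
    print("TORNADO_SDK set:", "TORNADO_SDK" in os.environ)
```

The output of `java -version` can be passed to `java_major_version` to confirm that the major version is 21.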

When cloning this repository, use the --recursive flag to ensure that TornadoVM is properly included as a submodule:

# Clone the repository with all submodules

git clone --recursive https://github.com/beehive-lab/GPULlama3.java.git

# Navigate to the project directory

cd GPULlama3.java

# Update the submodules to match the exact commit point recorded in this repository

git submodule update --recursive

Linux and macOS:

# Enter the TornadoVM submodule directory

cd external/tornadovm

# Optional: Create and activate a Python virtual environment if needed

python3 -m venv venv

source ./venv/bin/activate

# Install TornadoVM with a supported JDK 21 and select the backends (--backend opencl,ptx).

# To see the compatible JDKs run: ./bin/tornadovm-installer --listJDKs

# For example, to install with OpenJDK 21 and build the OpenCL backend, run:

./bin/tornadovm-installer --jdk jdk21 --backend opencl

# Source the TornadoVM environment variables

source setvars.sh

# Navigate back to the project root directory

cd ../../

# Source the project-specific environment paths -> this will ensure the correct paths are set for the project and the TornadoVM SDK

# Expect to see: [INFO] Environment configured for Llama3 with TornadoVM at: /home/YOUR_PATH_TO_TORNADOVM

source set_paths

# Build the project using Maven (skip tests for faster build)

# mvn clean package -DskipTests or just make

make

# Run the model (make sure you have downloaded the model file first - see below)

./llama-tornado --gpu --verbose-init --opencl --model beehive-llama-3.2-1b-instruct-fp16.gguf --prompt "tell me a joke"

Windows:

# Enter the TornadoVM submodule directory

cd external/tornadovm

# Optional: Create and activate a Python virtual environment if needed

python -m venv .venv

.venv\Scripts\activate.bat

.\bin\windowsMicrosoftStudioTools2022.cmd

# Install TornadoVM with a supported JDK 21 and select the backends (--backend opencl,ptx).

# To see the compatible JDKs run: ./bin/tornadovm-installer --listJDKs

# For example, to install with OpenJDK 21 and build the OpenCL backend, run:

python bin\tornadovm-installer --jdk jdk21 --backend opencl

# Source the TornadoVM environment variables

setvars.cmd

# Navigate back to the project root directory

cd ../../

# Source the project-specific environment paths -> this will ensure the correct paths are set for the project and the TornadoVM SDK

# Expect to see: [INFO] Environment configured for Llama3 with TornadoVM at: C:\Users\YOUR_PATH_TO_TORNADOVM

set_paths.cmd

# Build the project using Maven (skip tests for faster build)

# mvn clean package -DskipTests or just make

make

# Run the model (make sure you have downloaded the model file first - see below)

python llama-tornado --gpu --verbose-init --opencl --model beehive-llama-3.2-1b-instruct-fp16.gguf --prompt "tell me a joke"

To integrate it into your codebase, IDE (e.g., IntelliJ), or custom build system (e.g., Maven or Gradle), use the --show-command flag.

This flag shows the exact Java command, including all JVM flags, that is invoked under the hood to enable seamless execution on GPUs with TornadoVM.

This makes it simple to replicate or embed those flags in any external tool or codebase.
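If you want to capture that command from another tool, one possible approach is sketched here. It assembles the launcher invocation with the flags documented in this README and, optionally, runs it and returns what was printed. The helper names are hypothetical, and the sketch assumes the launcher writes the Java command to standard output, which has not been verified here.

```python
import shlex
import subprocess


def build_show_command_argv(model_path, prompt, launcher="./llama-tornado"):
    """Assemble the launcher invocation using flags documented in this README."""
    return [
        launcher,
        "--gpu",
        "--model", model_path,
        "--prompt", prompt,
        "--show-command",
    ]


def capture_java_command(argv):
    """Run the launcher and return its standard output as text.

    Assumption: the Java command is printed to stdout; check stderr too
    if the returned text is empty.
    """
    result = subprocess.run(argv, capture_output=True, text=True, check=True)
    return result.stdout


if __name__ == "__main__":
    argv = build_show_command_argv(
        "beehive-llama-3.2-1b-instruct-fp16.gguf", "tell me a joke"
    )
    # Print the shell-quoted command line instead of executing it.
    print(shlex.join(argv))
```

Passing the arguments as a list avoids shell-quoting problems with prompts that contain spaces or quotes.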

llama-tornado --gpu --model beehive-llama-3.2-1b-instruct-fp16.gguf --prompt "tell me a joke" --show-command

Resulting JVM configuration:

/home/mikepapadim/.sdkman/candidates/java/current/bin/java \

-server \

-XX:+UnlockExperimentalVMOptions \

-XX:+EnableJVMCI \

-Xms20g -Xmx20g \

--enable-preview \

-Djava.library.path=/home/mikepapadim/manchester/TornadoVM/bin/sdk/lib \

-Djdk.module.showModuleResolution=false \

--module-path .:/home/mikepapadim/manchester/TornadoVM/bin/sdk/share/java/tornado \

-Dtornado.load.api.implementation=uk.ac.manchester.tornado.runtime.tasks.TornadoTaskGraph \

-Dtornado.load.runtime.implementation=uk.ac.manchester.tornado.runtime.TornadoCoreRuntime \

-Dtornado.load.tornado.implementation=uk.ac.manchester.tornado.runtime.common.Tornado \

-Dtornado.load.annotation.implementation=uk.ac.manchester.tornado.annotation.ASMClassVisitor \

-Dtornado.load.annotation.parallel=uk.ac.manchester.tornado.api.annotations.Parallel \

-Dtornado.tvm.maxbytecodesize=65536 \

-Duse.tornadovm=true \

-Dtornado.threadInfo=false \

-Dtornado.debug=false \

-Dtornado.fullDebug=false \

-Dtornado.printKernel=false \

-Dtornado.print.bytecodes=false \

-Dtornado.device.memory=7GB \

-Dtornado.profiler=false \

-Dtornado.log.profiler=false \

-Dtornado.profiler.dump.dir=/home/mikepapadim/repos/gpu-llama3.java/prof.json \

-Dtornado.enable.fastMathOptimizations=true \

-Dtornado.enable.mathOptimizations=false \

-Dtornado.enable.nativeFunctions=fast \

-Dtornado.loop.interchange=true \

-Dtornado.eventpool.maxwaitevents=32000 \

"-Dtornado.opencl.compiler.flags=-cl-denorms-are-zero -cl-no-signed-zeros -cl-finite-math-only" \

--upgrade-module-path /home/mikepapadim/manchester/TornadoVM/bin/sdk/share/java/graalJars \

@/home/mikepapadim/manchester/TornadoVM/bin/sdk/etc/exportLists/common-exports \

@/home/mikepapadim/manchester/TornadoVM/bin/sdk/etc/exportLists/opencl-exports \

--add-modules ALL-SYSTEM,tornado.runtime,tornado.annotation,tornado.drivers.common,tornado.drivers.opencl \

-cp /home/mikepapadim/repos/gpu-llama3.java/target/gpu-llama3-1.0-SNAPSHOT.jar \

org.beehive.gpullama3.LlamaApp \

-m beehive-llama-3.2-1b-instruct-fp16.gguf \

--temperature 0.1 \

--top-p 0.95 \

--seed 1746903566 \

--max-tokens 512 \

--stream true \

--echo false \

-p "tell me a joke" \

--instruct

The above model can be swapped with one of the other models, such as beehive-llama-3.2-3b-instruct-fp16.gguf or beehive-llama-3.2-8b-instruct-fp16.gguf, depending on your needs.

Check models below.

Download FP16 quantized Llama-3 .gguf files from:

- https://huggingface.co/beehive-lab/Llama-3.2-1B-Instruct-GGUF-FP16

- https://huggingface.co/beehive-lab/Llama-3.2-3B-Instruct-GGUF-FP16

- https://huggingface.co/beehive-lab/Llama-3.2-8B-Instruct-GGUF-FP16

Download FP16 quantized Mistral .gguf files from:

- https://huggingface.co/MaziyarPanahi/Mistral-7B-Instruct-v0.3-GGUF

Download FP16 quantized Qwen3 .gguf files from:

- https://huggingface.co/ggml-org/Qwen3-0.6B-GGUF

- https://huggingface.co/ggml-org/Qwen3-1.7B-GGUF

- https://huggingface.co/ggml-org/Qwen3-4B-GGUF

- https://huggingface.co/ggml-org/Qwen3-8B-GGUF

Download FP16 quantized Qwen2.5 .gguf files from:

- https://huggingface.co/bartowski/Qwen2.5-0.5B-Instruct-GGUF

- https://huggingface.co/Qwen/Qwen2.5-1.5B-Instruct-GGUF

Download FP16 quantized DeepSeek-R1-Distill-Qwen .gguf files from:

- https://huggingface.co/hdnh2006/DeepSeek-R1-Distill-Qwen-1.5B-GGUF

Please be gentle with huggingface.co servers:

Note: FP16 models are first-class citizens in the current version.

# Llama 3.2 (1B) - FP16

wget https://huggingface.co/beehive-lab/Llama-3.2-1B-Instruct-GGUF-FP16/resolve/main/beehive-llama-3.2-1b-instruct-fp16.gguf

# Llama 3.2 (3B) - FP16

wget https://huggingface.co/beehive-lab/Llama-3.2-3B-Instruct-GGUF-FP16/resolve/main/beehive-llama-3.2-3b-instruct-fp16.gguf

# Llama 3 (8B) - FP16

wget https://huggingface.co/beehive-lab/Llama-3.2-8B-Instruct-GGUF-FP16/resolve/main/beehive-llama-3.2-8b-instruct-fp16.gguf

# Mistral (7B) - FP16

wget https://huggingface.co/MaziyarPanahi/Mistral-7B-Instruct-v0.3-GGUF/resolve/main/Mistral-7B-Instruct-v0.3.fp16.gguf

# Qwen3 (0.6B) - FP16

wget https://huggingface.co/ggml-org/Qwen3-0.6B-GGUF/resolve/main/Qwen3-0.6B-f16.gguf

# Qwen3 (1.7B) - FP16

wget https://huggingface.co/ggml-org/Qwen3-1.7B-GGUF/resolve/main/Qwen3-1.7B-f16.gguf

# Qwen3 (4B) - FP16

wget https://huggingface.co/ggml-org/Qwen3-4B-GGUF/resolve/main/Qwen3-4B-f16.gguf

# Qwen3 (8B) - FP16

wget https://huggingface.co/ggml-org/Qwen3-8B-GGUF/resolve/main/Qwen3-8B-f16.gguf

# Phi-3-mini-4k - FP16

wget https://huggingface.co/microsoft/Phi-3-mini-4k-instruct-gguf/resolve/main/Phi-3-mini-4k-instruct-fp16.gguf

# Qwen2.5 (0.5B)

wget https://huggingface.co/bartowski/Qwen2.5-0.5B-Instruct-GGUF/resolve/main/Qwen2.5-0.5B-Instruct-f16.gguf

# Qwen2.5 (1.5B)

wget https://huggingface.co/Qwen/Qwen2.5-1.5B-Instruct-GGUF/resolve/main/qwen2.5-1.5b-instruct-fp16.gguf

# DeepSeek-R1-Distill-Qwen (1.5B)

wget https://huggingface.co/hdnh2006/DeepSeek-R1-Distill-Qwen-1.5B-GGUF/resolve/main/DeepSeek-R1-Distill-Qwen-1.5B-F16.gguf
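All of the download links above follow the same pattern: repository id, then `resolve/main`, then the file name. As a small sketch, a helper like the following (the function name is hypothetical) can build such a URL for any repository and file listed in this README; it only constructs the string and does not download anything.

```python
from urllib.parse import quote


def hf_resolve_url(repo_id: str, filename: str, revision: str = "main") -> str:
    """Build a direct-download URL in the form used by the wget commands above."""
    return (
        "https://huggingface.co/"
        f"{repo_id}/resolve/{quote(revision, safe='')}/{quote(filename)}"
    )


if __name__ == "__main__":
    print(
        hf_resolve_url(
            "beehive-lab/Llama-3.2-1B-Instruct-GGUF-FP16",
            "beehive-llama-3.2-1b-instruct-fp16.gguf",
        )
    )
```

The resulting URL can be passed to wget or curl -L -O exactly as in the commands above.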

[Experimental] You can also download the Q8_0 and Q4_0 models used in the original Llama3.java implementation; for now, they are dequantized to FP16 for TornadoVM support:

# Llama 3.2 (1B) - Q4_0

curl -L -O https://huggingface.co/mukel/Llama-3.2-1B-Instruct-GGUF/resolve/main/Llama-3.2-1B-Instruct-Q4_0.gguf

# Llama 3.2 (3B) - Q4_0

curl -L -O https://huggingface.co/mukel/Llama-3.2-3B-Instruct-GGUF/resolve/main/Llama-3.2-3B-Instruct-Q4_0.gguf

# Llama 3 (8B) - Q4_0

curl -L -O https://huggingface.co/mukel/Meta-Llama-3-8B-Instruct-GGUF/resolve/main/Meta-Llama-3-8B-Instruct-Q4_0.gguf

# Llama 3.2 (1B) - Q8_0

curl -L -O https://huggingface.co/mukel/Llama-3.2-1B-Instruct-GGUF/resolve/main/Llama-3.2-1B-Instruct-Q8_0.gguf

# Llama 3.1 (8B) - Q4_0

curl -L -O https://huggingface.co/mukel/Meta-Llama-3.1-8B-Instruct-GGUF/resolve/main/Meta-Llama-3.1-8B-Instruct-Q4_0.gguf

To execute Llama3 or Mistral models with TornadoVM on GPUs, use the llama-tornado script with the --gpu flag.

Run a model with a text prompt:

./llama-tornado --gpu --verbose-init --opencl --model beehive-llama-3.2-1b-instruct-fp16.gguf --prompt "Explain the benefits of GPU acceleration."

Enable GPU acceleration with Q8_0 quantization:

./llama-tornado --gpu --verbose-init --model beehive-llama-3.2-1b-instruct-fp16.gguf --prompt "tell me a joke"

You can run GPULlama3.java fully containerized with GPU acceleration enabled via OpenCL or PTX using pre-built Docker images.

More information as well as examples to run with the containers are available at docker-gpullama3.java.

| Backend | Docker Image | Pull Command |
|---|---|---|
| OpenCL | beehivelab/gpullama3.java-nvidia-openjdk-opencl | docker pull beehivelab/gpullama3.java-nvidia-openjdk-opencl |
| PTX (CUDA) | beehivelab/gpullama3.java-nvidia-openjdk-ptx | docker pull beehivelab/gpullama3.java-nvidia-openjdk-ptx |

docker run --rm -it --gpus all \

-v "$PWD":/data \

beehivelab/gpullama3.java-nvidia-openjdk-opencl \

/gpullama3/GPULlama3.java/llama-tornado \

--gpu --verbose-init \

--opencl \

--model /data/Llama-3.2-1B-Instruct.FP16.gguf \

--prompt "Tell me a joke"

You may encounter an out-of-memory error like:

Exception in thread "main" uk.ac.manchester.tornado.api.exceptions.TornadoOutOfMemoryException: Unable to allocate 100663320 bytes of memory.

To increase the maximum device memory, use -Dtornado.device.memory=<X>GB

This indicates that the default GPU memory allocation (7GB) is insufficient for your model.

First, check your GPU specifications. If your GPU has high memory capacity, you can increase the GPU memory allocation using the --gpu-memory flag:

# For 3B models, try increasing to 15GB

./llama-tornado --gpu --model beehive-llama-3.2-3b-instruct-fp16.gguf --prompt "Tell me a joke" --gpu-memory 15GB

# For 8B models, you may need even more (20GB or higher)

./llama-tornado --gpu --model beehive-llama-3.2-8b-instruct-fp16.gguf --prompt "Tell me a joke" --gpu-memory 20GB

| Model Size | Recommended GPU Memory |
|---|---|
| 1B models | 7GB (default) |
| 3-7B models | 15GB |
| 8B models | 20GB+ |
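The table can be turned into a small lookup, shown here as a rough sketch. The thresholds encode the rows above, with the assumption (not stated in this README) that sizes falling between rows use the next larger row. The FP16 estimate uses 2 bytes per parameter and covers weights only; the recommended values are larger, presumably to leave room for other runtime buffers. All names are hypothetical.

```python
BYTES_PER_FP16_PARAM = 2  # an FP16 value occupies 2 bytes


def fp16_weight_gib(n_params_billion: float) -> float:
    """Approximate size of the FP16 weights alone, in GiB."""
    return n_params_billion * 1e9 * BYTES_PER_FP16_PARAM / 2**30


def recommended_gpu_memory(n_params_billion: float) -> str:
    """Map a nominal model size to the --gpu-memory value from the table.

    Assumption: sizes between table rows fall into the next larger row.
    """
    if n_params_billion <= 1:
        return "7GB"   # default
    if n_params_billion <= 7:
        return "15GB"
    return "20GB"      # the table says 20GB or more


def gpu_memory_args(n_params_billion: float):
    """Arguments to append to a llama-tornado invocation."""
    return ["--gpu-memory", recommended_gpu_memory(n_params_billion)]


if __name__ == "__main__":
    for size in (1, 3, 8):
        print(
            f"{size}B: ~{fp16_weight_gib(size):.1f} GiB of FP16 weights, "
            f"suggested {' '.join(gpu_memory_args(size))}"
        )
```

Treat the result as a starting point and raise the value if TornadoOutOfMemoryException still occurs and your GPU has memory to spare.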

Note: If you still encounter memory issues, try:

- Using Q4_0 instead of Q8_0 quantization (requires less memory).

- Closing other GPU-intensive applications in your system.

Supported command-line options include:

cmd ➜ llama-tornado --help

usage: llama-tornado [-h] --model MODEL_PATH [--prompt PROMPT] [-sp SYSTEM_PROMPT] [--temperature TEMPERATURE] [--top-p TOP_P] [--seed SEED] [-n MAX_TOKENS]

[--stream STREAM] [--echo ECHO] [-i] [--instruct] [--gpu] [--opencl] [--ptx] [--gpu-memory GPU_MEMORY] [--heap-min HEAP_MIN] [--heap-max HEAP_MAX]

[--debug] [--profiler] [--profiler-dump-dir PROFILER_DUMP_DIR] [--print-bytecodes] [--print-threads] [--print-kernel] [--full-dump]

[--show-command] [--execute-after-show] [--opencl-flags OPENCL_FLAGS] [--max-wait-events MAX_WAIT_EVENTS] [--verbose]

GPU-accelerated LLaMA.java model runner using TornadoVM

options:

-h, --help show this help message and exit

--model MODEL_PATH Path to the LLaMA model file (e.g., beehive-llama-3.2-8b-instruct-fp16.gguf) (default: None)

LLaMA Configuration:

--prompt PROMPT Input prompt for the model (default: None)

-sp SYSTEM_PROMPT, --system-prompt SYSTEM_PROMPT

System prompt for the model (default: None)

--temperature TEMPERATURE

Sampling temperature (0.0 to 2.0) (default: 0.1)

--top-p TOP_P Top-p sampling parameter (default: 0.95)

--seed SEED Random seed (default: current timestamp) (default: None)

-n MAX_TOKENS, --max-tokens MAX_TOKENS

Maximum number of tokens to generate (default: 512)

--stream STREAM Enable streaming output (default: True)

--echo ECHO Echo the input prompt (default: False)

--suffix SUFFIX Suffix for fill-in-the-middle request (Codestral) (default: None)

Mode Selection:

-i, --interactive Run in interactive/chat mode (default: False)

--instruct Run in instruction mode (default) (default: True)

Hardware Configuration:

--gpu Enable GPU acceleration (default: False)

--opencl Use OpenCL backend (default) (default: None)

--ptx Use PTX/CUDA backend (default: None)

--gpu-memory GPU_MEMORY

GPU memory allocation (default: 7GB)

--heap-min HEAP_MIN Minimum JVM heap size (default: 20g)

--heap-max HEAP_MAX Maximum JVM heap size (default: 20g)

Debug and Profiling:

--debug Enable debug output (default: False)

--profiler Enable TornadoVM profiler (default: False)

--profiler-dump-dir PROFILER_DUMP_DIR

Directory for profiler output (default: /home/mikepapadim/repos/gpu-llama3.java/prof.json)

TornadoVM Execution Verbose:

--print-bytecodes Print bytecodes (tornado.print.bytecodes=true) (default: False)

--print-threads Print thread information (tornado.threadInfo=true) (default: False)

--print-kernel Print kernel information (tornado.printKernel=true) (default: False)

--full-dump Enable full debug dump (tornado.fullDebug=true) (default: False)

--verbose-init Enable timers for TornadoVM initialization (llama.EnableTimingForTornadoVMInit=true) (default: False)

Command Display Options:

--show-command Display the full Java command that will be executed (default: False)

--execute-after-show Execute the command after showing it (use with --show-command) (default: False)

Advanced Options:

--opencl-flags OPENCL_FLAGS

OpenCL compiler flags (default: -cl-denorms-are-zero -cl-no-signed-zeros -cl-finite-math-only)

--max-wait-events MAX_WAIT_EVENTS

Maximum wait events for TornadoVM event pool (default: 32000)

--verbose, -v Verbose output (default: False)
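The help text above names the JVM system property behind several verbose flags. The sketch below collects those pairs into a lookup so the same behavior can be reproduced when launching Java directly. The mapping is read off the help text only; the launcher may set additional properties, and the function name is hypothetical.

```python
# Flag-to-property pairs as stated in the help output above.
FLAG_TO_PROPERTY = {
    "--print-bytecodes": "tornado.print.bytecodes",
    "--print-threads": "tornado.threadInfo",
    "--print-kernel": "tornado.printKernel",
    "--full-dump": "tornado.fullDebug",
    "--verbose-init": "llama.EnableTimingForTornadoVMInit",
}


def jvm_properties(cli_flags):
    """Translate known llama-tornado flags into -D<property>=true arguments.

    Flags without a documented property (for example --gpu) are skipped.
    """
    properties = []
    for flag in cli_flags:
        prop = FLAG_TO_PROPERTY.get(flag)
        if prop is not None:
            properties.append(f"-D{prop}=true")
    return properties


if __name__ == "__main__":
    print(jvm_properties(["--gpu", "--print-threads", "--print-kernel"]))
```

For a complete and authoritative command line, prefer the output of --show-command over this partial mapping.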

View TornadoVM's internal behavior:

# Print thread information during execution

./llama-tornado --gpu --model model.gguf --prompt "..." --print-threads

# Show bytecode compilation details

./llama-tornado --gpu --model model.gguf --prompt "..." --print-bytecodes

# Display generated GPU kernel code

./llama-tornado --gpu --model model.gguf --prompt "..." --print-kernel

# Enable full debug output with all details

./llama-tornado --gpu --model model.gguf --prompt "..." --debug --full-dump

# Combine debug options

./llama-tornado --gpu --model model.gguf --prompt "..." --print-threads --print-bytecodes --print-kernel

- Support for GGUF format models with full FP16 and partial support for Q8_0 and Q4_0 quantization.

- Instruction-following and chat modes for various use cases.

- Interactive CLI with --interactive and --instruct modes.

- Flexible backend switching: choose OpenCL or PTX at runtime (requires building TornadoVM with both enabled).

- Cross-platform compatibility:

  - ✅ NVIDIA GPUs (OpenCL & PTX)

  - ✅ Intel GPUs (OpenCL)

  - ✅ Apple GPUs (OpenCL)

Click here to view a more detailed list of the transformer optimizations implemented in TornadoVM.

Click here to see the roadmap of the project.

This work is partially funded by the following EU & UKRI grants (most recent first):

- EU Horizon Europe & UKRI AERO 101092850.

- EU Horizon Europe & UKRI P2CODE 101093069.

- EU Horizon Europe & UKRI ENCRYPT 101070670.

- EU Horizon Europe & UKRI TANGO 101070052.

License: MIT


Alternative AI tools for GPULlama3.java

Similar Open Source Tools

GPULlama3.java

GPULlama3.java powered by TornadoVM is a Java-native implementation of Llama3 that automatically compiles and executes Java code on GPUs via TornadoVM. It supports Llama3, Mistral, Qwen2.5, Qwen3, and Phi3 models in the GGUF format. The repository aims to provide GPU acceleration for Java code, enabling faster execution and high-performance access to off-heap memory. It offers features like interactive and instruction modes, flexible backend switching between OpenCL and PTX, and cross-platform compatibility with NVIDIA, Intel, and Apple GPUs.

cb-tumblebug

CB-Tumblebug (CB-TB) is a system for managing multi-cloud infrastructure consisting of resources from multiple cloud service providers. It provides an overview, features, and architecture. The tool supports various cloud providers and resource types, with ongoing development and localization efforts. Users can deploy a multi-cloud infra with GPUs, enjoy multiple LLMs in parallel, and utilize LLM-related scripts. The tool requires Linux, Docker, Docker Compose, and Golang for building the source. Users can run CB-TB with Docker Compose or from the Makefile, set up prerequisites, contribute to the project, and view a list of contributors. The tool is licensed under an open-source license.

rwkv-qualcomm

This repository provides support for inference RWKV models on Qualcomm HTP (Hexagon Tensor Processor) using QNN SDK. It supports RWKV v5, v6, and experimentally v7 models, inference using Qualcomm CPU, GPU, or HTP as the backend, whole-model float16 inference, activation INT16 and weights INT8 quantized inference, and activation INT16 and weights INT4/INT8 mixed quantized inference. Users can convert model weights to QNN model library files, generate HTP context cache, and run inference on Qualcomm Snapdragon SM8650 with HTP v75. The project requires QNN SDK, AIMET toolkit, and specific hardware for verification.

pytorch-lightning

PyTorch Lightning is a framework for training and deploying AI models. It provides a high-level API that abstracts away the low-level details of PyTorch, making it easier to write and maintain complex models. Lightning also includes a number of features that make it easy to train and deploy models on multiple GPUs or TPUs, and to track and visualize training progress. PyTorch Lightning is used by a wide range of organizations, including Google, Facebook, and Microsoft. It is also used by researchers at top universities around the world. Here are some of the benefits of using PyTorch Lightning: * **Increased productivity:** Lightning's high-level API makes it easy to write and maintain complex models. This can save you time and effort, and allow you to focus on the research or business problem you're trying to solve. * **Improved performance:** Lightning's optimized training loops and data loading pipelines can help you train models faster and with better performance. * **Easier deployment:** Lightning makes it easy to deploy models to a variety of platforms, including the cloud, on-premises servers, and mobile devices. * **Better reproducibility:** Lightning's logging and visualization tools make it easy to track and reproduce training results.

MAVIS

MAVIS (Math Visual Intelligent System) is an AI-driven application that allows users to analyze visual data such as images and generate interactive answers based on them. It can perform complex mathematical calculations, solve programming tasks, and create professional graphics. MAVIS supports Python for coding and frameworks like Matplotlib, Plotly, Seaborn, Altair, NumPy, Math, SymPy, and Pandas. It is designed to make projects more efficient and professional.

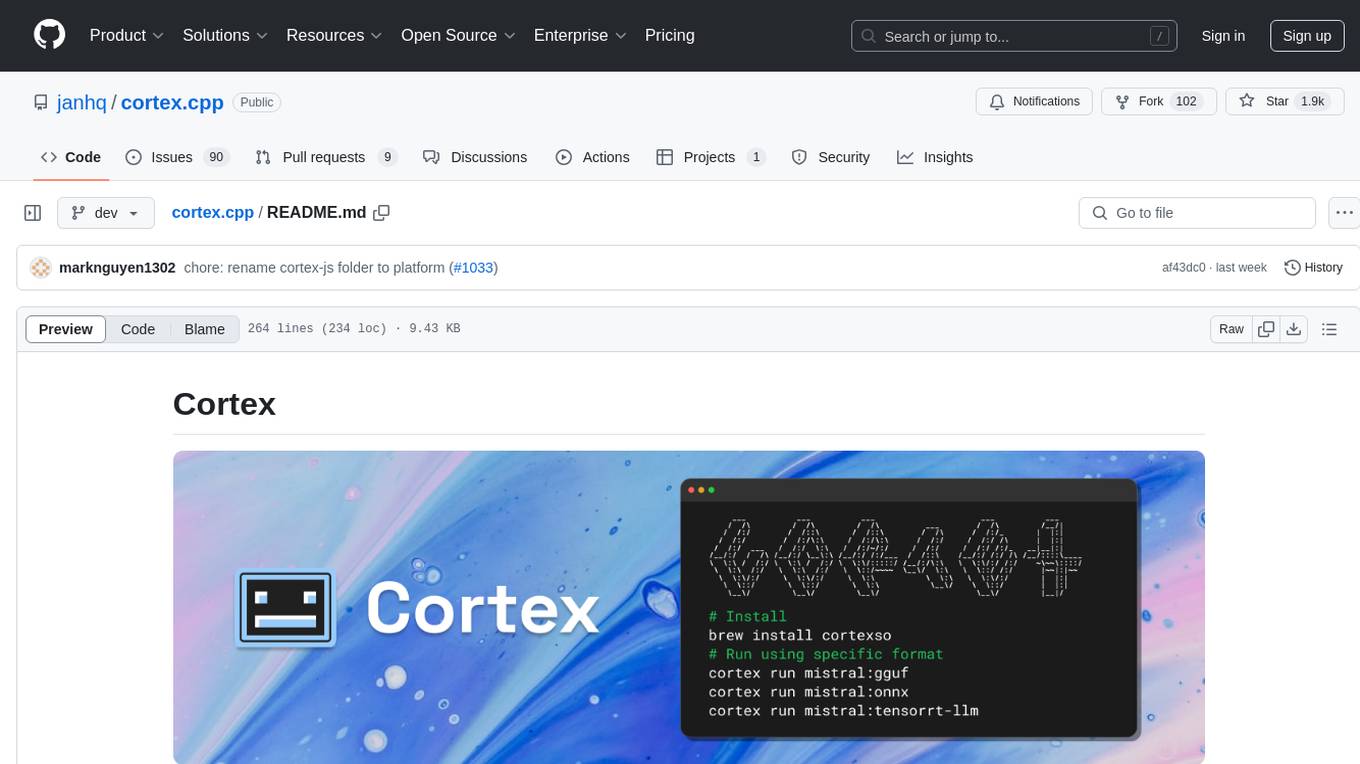

cortex.cpp

Cortex is a C++ AI engine with a Docker-like command-line interface and client libraries. It supports running AI models using ONNX, TensorRT-LLM, and llama.cpp engines. Cortex can function as a standalone server or be integrated as a library. The tool provides support for various engines and models, allowing users to easily deploy and interact with AI models. It offers a range of CLI commands for managing models, embeddings, and engines, as well as a REST API for interacting with models. Cortex is designed to simplify the deployment and usage of AI models in C++ applications.

MaskLLM

MaskLLM is a learnable pruning method that establishes Semi-structured Sparsity in Large Language Models (LLMs) to reduce computational overhead during inference. It is scalable and benefits from larger training datasets. The tool provides examples for running MaskLLM with Megatron-LM, preparing LLaMA checkpoints, pre-tokenizing C4 data for Megatron, generating prior masks, training MaskLLM, and evaluating the model. It also includes instructions for exporting sparse models to Huggingface.

daytona

Daytona is a secure and elastic infrastructure tool designed for running AI-generated code. It offers lightning-fast infrastructure with sub-90ms sandbox creation, separated and isolated runtime for executing AI code with zero risk, massive parallelization for concurrent AI workflows, programmatic control through various APIs, unlimited sandbox persistence, and OCI/Docker compatibility. Users can create sandboxes using Python or TypeScript SDKs, run code securely inside the sandbox, and clean up the sandbox after execution. Daytona is open source under the GNU Affero General Public License and welcomes contributions from developers.

TempCompass

TempCompass is a benchmark designed to evaluate the temporal perception ability of Video LLMs. It encompasses a diverse set of temporal aspects and task formats to comprehensively assess the capability of Video LLMs in understanding videos. The benchmark includes conflicting videos to prevent models from relying on single-frame bias and language priors. Users can clone the repository, install required packages, prepare data, run inference using examples like Video-LLaVA and Gemini, and evaluate the performance of their models across different tasks such as Multi-Choice QA, Yes/No QA, Caption Matching, and Caption Generation.

DeepClaude

DeepClaude is an open-source project inspired by the DeepSeek R1 model, aiming to provide the best results in various tasks by combining different models. It supports OpenAI-compatible input and output formats, integrates with DeepSeek and Claude APIs, and offers special support for other OpenAI-compatible models. Users can run the project locally or deploy it on a server to access a powerful language model service. The project also provides guidance on obtaining necessary APIs and running the project, including using Docker for deployment.

ScaleLLM

ScaleLLM is a cutting-edge inference system engineered for large language models (LLMs), meticulously designed to meet the demands of production environments. It extends its support to a wide range of popular open-source models, including Llama3, Gemma, Bloom, GPT-NeoX, and more. ScaleLLM is currently undergoing active development. We are fully committed to consistently enhancing its efficiency while also incorporating additional features. Feel free to explore our **_Roadmap_** for more details. ## Key Features * High Efficiency: Excels in high-performance LLM inference, leveraging state-of-the-art techniques and technologies like Flash Attention, Paged Attention, Continuous batching, and more. * Tensor Parallelism: Utilizes tensor parallelism for efficient model execution. * OpenAI-compatible API: An efficient golang rest api server that compatible with OpenAI. * Huggingface models: Seamless integration with most popular HF models, supporting safetensors. * Customizable: Offers flexibility for customization to meet your specific needs, and provides an easy way to add new models. * Production Ready: Engineered with production environments in mind, ScaleLLM is equipped with robust system monitoring and management features to ensure a seamless deployment experience.

WilliamButcherBot

WilliamButcherBot is a Telegram Group Manager Bot and Userbot written in Python using Pyrogram. It provides features for managing Telegram groups and users, with ready-to-use methods available. The bot requires Python 3.9, Telegram API Key, Telegram Bot Token, and MongoDB URI. Users can install it locally or on a VPS, run it directly, generate Pyrogram session for Heroku, or use Docker for deployment. Additionally, users can write new modules to extend the bot's functionality by adding them to the wbb/modules/ directory.

Open-Sora-Plan

Open-Sora-Plan is a project that aims to create a simple and scalable repo to reproduce Sora (OpenAI, but we prefer to call it "ClosedAI"). The project is still in its early stages, but the team is working hard to improve it and make it more accessible to the open-source community. The project is currently focused on training an unconditional model on a landscape dataset, but the team plans to expand the scope of the project in the future to include text2video experiments, training on video2text datasets, and controlling the model with more conditions.

KB-Builder

KB Builder is an open-source knowledge base generation system based on the LLM large language model. It utilizes the RAG (Retrieval-Augmented Generation) data generation enhancement method to provide users with the ability to enhance knowledge generation and quickly build knowledge bases based on RAG. It aims to be the central hub for knowledge construction in enterprises, offering platform-based intelligent dialogue services and document knowledge base management functionality. Users can upload docx, pdf, txt, and md format documents and generate high-quality knowledge base question-answer pairs by invoking large models through the 'Parse Document' feature.

llama-assistant

Llama Assistant is a local AI assistant that respects your privacy. It is an AI-powered assistant that can recognize your voice, process natural language, and perform various actions based on your commands. It can help with tasks like summarizing text, rephrasing sentences, answering questions, writing emails, and more. The assistant runs offline on your local machine, ensuring privacy by not sending data to external servers. It supports voice recognition, natural language processing, and customizable UI with adjustable transparency. The project is a work in progress with new features being added regularly.

For similar tasks

GPULlama3.java

GPULlama3.java powered by TornadoVM is a Java-native implementation of Llama3 that automatically compiles and executes Java code on GPUs via TornadoVM. It supports Llama3, Mistral, Qwen2.5, Qwen3, and Phi3 models in the GGUF format. The repository aims to provide GPU acceleration for Java code, enabling faster execution and high-performance access to off-heap memory. It offers features like interactive and instruction modes, flexible backend switching between OpenCL and PTX, and cross-platform compatibility with NVIDIA, Intel, and Apple GPUs.

tt-metal

TT-NN is a python & C++ Neural Network OP library. It provides a low-level programming model, TT-Metalium, enabling kernel development for Tenstorrent hardware.

mscclpp

MSCCL++ is a GPU-driven communication stack for scalable AI applications. It redefines inter-GPU communication interfaces, delivering a highly efficient and customizable communication stack for distributed GPU applications. Its design is tailored to the diverse performance optimization scenarios often encountered in state-of-the-art AI applications. MSCCL++ provides communication abstractions both at the lowest level, close to the hardware, and at the highest level, close to the application API. The lowest level of abstraction is ultra-lightweight, which lets a user implement the data-movement logic of a collective operation such as AllReduce inside a GPU kernel efficiently, without worrying about the memory ordering of different operations. The modularity of MSCCL++ lets a user construct its building blocks through a high-level abstraction in Python and feed them to a CUDA kernel, which improves productivity. MSCCL++ provides fine-grained synchronous and asynchronous 0-copy, 1-sided abstractions for communication primitives such as `put()`, `get()`, `signal()`, `flush()`, and `wait()`. The 1-sided abstractions allow a user to asynchronously `put()` data on the remote GPU as soon as it is ready, without requiring the remote side to issue any receive instruction. This makes it easy to implement flexible communication logic, such as overlapping communication with computation or building customized collective communication algorithms, without worrying about potential deadlocks. Additionally, the 0-copy capability lets MSCCL++ transfer data directly between the user's buffers without intermediate internal buffers, which saves GPU bandwidth and memory capacity.
MSCCL++ provides consistent abstractions regardless of the location of the remote GPU (on the local node or on a remote node) and of the underlying link (NVLink/xGMI or InfiniBand). This simplifies inter-GPU communication code, which is often complex and therefore error-prone because of the memory ordering of GPU/CPU reads and writes.

mlir-air

This repository contains tools and libraries for building AIR platforms, runtimes and compilers.

free-for-life

A massive list of products and services that are completely free. Categories include APIs, Data & ML; Artificial Intelligence; BaaS; Code Editors; Code Generation; DNS; Databases; Design & UI; Domains; Email; Font; For Students; Forms; Linux Distributions; Messaging & Streaming; PaaS; Payments & Billing; and SSL.

AIMr

AIMr is an AI aimbot tool written in Python that leverages modern technologies to achieve an undetected system with a pleasing appearance. It works on any game that uses human-shaped models. To optimize its performance, users should build OpenCV with CUDA. For Valorant, additional perks in the Discord and an Arduino Leonardo R3 are required.

aika

AIKA (Artificial Intelligence for Knowledge Acquisition) is a new type of artificial neural network designed to mimic the behavior of a biological brain more closely and bridge the gap to classical AI. The network conceptually separates activations from neurons, creating two separate graphs to represent acquired knowledge and inferred information. It uses different types of neurons and synapses to propagate activation values, binding signals, causal relations, and training gradients. The network structure allows for flexible topology and supports the gradual population of neurons and synapses during training.

nextpy

Nextpy is a cutting-edge software development framework optimized for AI-based code generation. It provides guardrails for defining AI system boundaries, structured outputs for prompt engineering, a powerful prompt engine for efficient processing, better AI generations with precise output control, modularity for multiplatform and extensible usage, developer-first approach for transferable knowledge, and containerized & scalable deployment options. It offers 4-10x faster performance compared to Streamlit apps, with a focus on cooperation within the open-source community and integration of key components from various projects.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLMs) and benchmarks them via the `OSS-Fuzz` platform. It successfully leverages LLMs to generate valid fuzz targets (targets that produce a non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase over the existing human-written targets is 29%.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.