monoscope

Monoscope lets you ingest and explore your logs, traces and metrics. We store these in S3 compatible buckets. Query in natural language via LLMs.

Stars: 452

Monoscope is an open-source monitoring and observability platform that uses artificial intelligence to understand and monitor systems automatically. It allows users to ingest and explore logs, traces, and metrics in S3 buckets, query in natural language via LLMs, and create AI agents to detect anomalies. Key capabilities include universal data ingestion, AI-powered understanding, natural language interface, cost-effective storage, and zero configuration. Monoscope is designed to reduce alert fatigue, catch issues before they impact users, and provide visibility across complex systems.

README:

Ingest and explore logs, traces, and metrics stored in your S3 buckets. Query with natural language. Create AI agents that detect anomalies and send daily/weekly reports to your inbox.

Website • Playground • Discord • Twitter • Documentation

Monoscope is an open-source observability platform that stores your telemetry data in S3-compatible storage. Self-host it or use our cloud offering.

Core capabilities:

- 💰 S3 storage — Store years of logs, metrics, and traces affordably in your own S3 buckets

- 💬 Natural language queries — Search your data using plain English via LLMs

- 🤖 AI agents — Create agents that run on a schedule to detect anomalies and surface insights

- 📧 Email reports — Receive daily/weekly summaries of important events and anomalies

- 🔭 OpenTelemetry native — 750+ integrations out of the box

- ⚡ Live tail — Stream logs and traces in real-time

- 🕵️ Unified view — Correlate logs, metrics, traces, and session replays in one place

In both options, you bring your own S3 buckets—your data stays yours.

| Cloud | Self-hosted | |

|---|---|---|

| Storage | Your S3 buckets | Your S3 buckets |

| Compute | Managed by us | You manage |

| Auth & SSO | Built-in | DIY |

| Alert channels | Slack, PagerDuty, etc. | Basic email |

| Pricing | Usage-based | Free (AGPL-3.0) |

→ Start free on Cloud or continue below to self-host.

git clone https://github.com/monoscope-tech/monoscope.git

cd monoscope

docker-compose upVisit http://localhost:8080 (default: admin/changeme)

Populate your dashboard with test telemetry:

# Install telemetrygen

go install github.com/open-telemetry/opentelemetry-collector-contrib/cmd/telemetrygen@latest

# Send test traces (replace YOUR_API_KEY from the UI)

telemetrygen traces --otlp-endpoint localhost:4317 --otlp-insecure \

--otlp-header 'Authorization="Bearer YOUR_API_KEY"' --traces 10Python

pip install opentelemetry-distro opentelemetry-exporter-otlp

opentelemetry-bootstrap -a install

OTEL_SERVICE_NAME="my-app" \

OTEL_EXPORTER_OTLP_ENDPOINT="http://localhost:4317" \

opentelemetry-instrument python myapp.pyNode.js

npm install --save @opentelemetry/auto-instrumentations-node

OTEL_SERVICE_NAME="my-app" \

OTEL_EXPORTER_OTLP_ENDPOINT="http://localhost:4317" \

node --require @opentelemetry/auto-instrumentations-node/register app.jsJava

curl -L https://github.com/open-telemetry/opentelemetry-java-instrumentation/releases/latest/download/opentelemetry-javaagent.jar -o otel-agent.jar

OTEL_SERVICE_NAME="my-app" \

OTEL_EXPORTER_OTLP_ENDPOINT="http://localhost:4317" \

java -javaagent:otel-agent.jar -jar myapp.jarKubernetes

# Install OpenTelemetry Operator

kubectl apply -f https://github.com/open-telemetry/opentelemetry-operator/releases/latest/download/opentelemetry-operator.yaml

# Configure auto-instrumentation

kubectl apply -f - <<EOF

apiVersion: opentelemetry.io/v1alpha1

kind: Instrumentation

metadata:

name: my-instrumentation

spec:

exporter:

endpoint: http://monoscope:4317

propagators:

- tracecontext

- baggage

EOF

# Annotate your deployments for auto-instrumentation

kubectl patch deployment my-app -p \

'{"spec":{"template":{"metadata":{"annotations":{"instrumentation.opentelemetry.io/inject-java":"my-instrumentation"}}}}}'Query your telemetry data in plain English:

- "Show me all errors in the payment service in the last hour"

- "What caused the spike in response time yesterday?"

- "Which endpoints have the highest p99 latency?"

Create AI agents that monitor your systems on a schedule:

- Scheduled analysis — Agents run at intervals you define (hourly, daily, weekly)

- Anomaly detection — Automatically surface unusual patterns in logs, metrics, and traces

- Email reports — Receive summaries of important events and insights directly in your inbox

- Customizable focus — Configure agents to watch specific services, error types, or metrics

graph LR

A[Your Apps] -->|Logs/Metrics/Traces| B[Ingestion API]

B --> C[TimeFusion Engine]

C --> D[(S3 Storage)]

D --> E[Query Engine]

E --> F[Dashboards]

D --> G[AI Agent Scheduler]

G -->|LLM Analysis| H[Anomaly Detection]

H --> I[Email Reports]

H --> J[Alert Channels]Monoscope is built on TimeFusion, our open-source time-series database for observability workloads.

| 🗄️ S3-native | Data lives in your S3 buckets—no vendor lock-in |

| 🐘 PostgreSQL compatible | Use any Postgres client or driver |

| ⚡ 500K+ events/sec | Columnar storage with Apache Arrow |

| 💵 Pay only for S3 | No expensive proprietary storage fees |

| Feature | Monoscope | Datadog | Elastic | Prometheus |

|---|---|---|---|---|

| S3/Object Storage | ✅ Native | ❌ | ✅ | ✅ |

| Natural Language Query | ✅ | ❌ | ❌ | ❌ |

| AI Agents & Reports | ✅ Built-in | ❌ Add-on | ❌ | ❌ |

| Open Source | ✅ AGPL-3.0 | ❌ | ✅ | ✅ |

| Self-hostable | ✅ | ❌ | ✅ | ✅ |

Logs and trace spans displayed together in context for complete observability.

See detailed trace information alongside logs for debugging complex distributed systems.

Real-time metrics and performance monitoring with AI-powered insights.

"Monoscope notifies us about any slight change on the system. Features that would cost us a lot more elsewhere." — Samuel Joseph, Woodcore

- [x] Custom dashboards builder

- [ ] More out-of-the-box dashboards

- [ ] AIOps workflow builder

- [ ] Full migration to TimeFusion storage engine

- [ ] Metrics aggregation rules

- [ ] Multi-tenant workspace support

- [ ] More alert channel integrations

See our public roadmap for details and to vote on features.

💬 Discord • 🐛 Issues • 🐦 Twitter

AGPL-3.0. See LICENSE for details.

For commercial licensing options, contact us at [email protected].

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for monoscope

Similar Open Source Tools

monoscope

Monoscope is an open-source monitoring and observability platform that uses artificial intelligence to understand and monitor systems automatically. It allows users to ingest and explore logs, traces, and metrics in S3 buckets, query in natural language via LLMs, and create AI agents to detect anomalies. Key capabilities include universal data ingestion, AI-powered understanding, natural language interface, cost-effective storage, and zero configuration. Monoscope is designed to reduce alert fatigue, catch issues before they impact users, and provide visibility across complex systems.

axonhub

AxonHub is an all-in-one AI development platform that serves as an AI gateway allowing users to switch between model providers without changing any code. It provides features like vendor lock-in prevention, integration simplification, observability enhancement, and cost control. Users can access any model using any SDK with zero code changes. The platform offers full request tracing, enterprise RBAC, smart load balancing, and real-time cost tracking. AxonHub supports multiple databases, provides a unified API gateway, and offers flexible model management and API key creation for authentication. It also integrates with various AI coding tools and SDKs for seamless usage.

eko

Eko is a lightweight and flexible command-line tool for managing environment variables in your projects. It allows you to easily set, get, and delete environment variables for different environments, making it simple to manage configurations across development, staging, and production environments. With Eko, you can streamline your workflow and ensure consistency in your application settings without the need for complex setup or configuration files.

mindnlp

MindNLP is an open-source NLP library based on MindSpore. It provides a platform for solving natural language processing tasks, containing many common approaches in NLP. It can help researchers and developers to construct and train models more conveniently and rapidly. Key features of MindNLP include: * Comprehensive data processing: Several classical NLP datasets are packaged into a friendly module for easy use, such as Multi30k, SQuAD, CoNLL, etc. * Friendly NLP model toolset: MindNLP provides various configurable components. It is friendly to customize models using MindNLP. * Easy-to-use engine: MindNLP simplified complicated training process in MindSpore. It supports Trainer and Evaluator interfaces to train and evaluate models easily. MindNLP supports a wide range of NLP tasks, including: * Language modeling * Machine translation * Question answering * Sentiment analysis * Sequence labeling * Summarization MindNLP also supports industry-leading Large Language Models (LLMs), including Llama, GLM, RWKV, etc. For support related to large language models, including pre-training, fine-tuning, and inference demo examples, you can find them in the "llm" directory. To install MindNLP, you can either install it from Pypi, download the daily build wheel, or install it from source. The installation instructions are provided in the documentation. MindNLP is released under the Apache 2.0 license. If you find this project useful in your research, please consider citing the following paper: @misc{mindnlp2022, title={{MindNLP}: a MindSpore NLP library}, author={MindNLP Contributors}, howpublished = {\url{https://github.com/mindlab-ai/mindnlp}}, year={2022} }

agentscope

AgentScope is a multi-agent platform designed to empower developers to build multi-agent applications with large-scale models. It features three high-level capabilities: Easy-to-Use, High Robustness, and Actor-Based Distribution. AgentScope provides a list of `ModelWrapper` to support both local model services and third-party model APIs, including OpenAI API, DashScope API, Gemini API, and ollama. It also enables developers to rapidly deploy local model services using libraries such as ollama (CPU inference), Flask + Transformers, Flask + ModelScope, FastChat, and vllm. AgentScope supports various services, including Web Search, Data Query, Retrieval, Code Execution, File Operation, and Text Processing. Example applications include Conversation, Game, and Distribution. AgentScope is released under Apache License 2.0 and welcomes contributions.

openakita

OpenAkita is a self-evolving AI Agent framework that autonomously learns new skills, performs daily self-checks and repairs, accumulates experience from task execution, and persists until the task is done. It auto-generates skills, installs dependencies, learns from mistakes, and remembers preferences. The framework is standards-based, multi-platform, and provides a Setup Center GUI for intuitive installation and configuration. It features self-learning and evolution mechanisms, a Ralph Wiggum Mode for persistent execution, multi-LLM endpoints, multi-platform IM support, desktop automation, multi-agent architecture, scheduled tasks, identity and memory management, a tool system, and a guided wizard for setup.

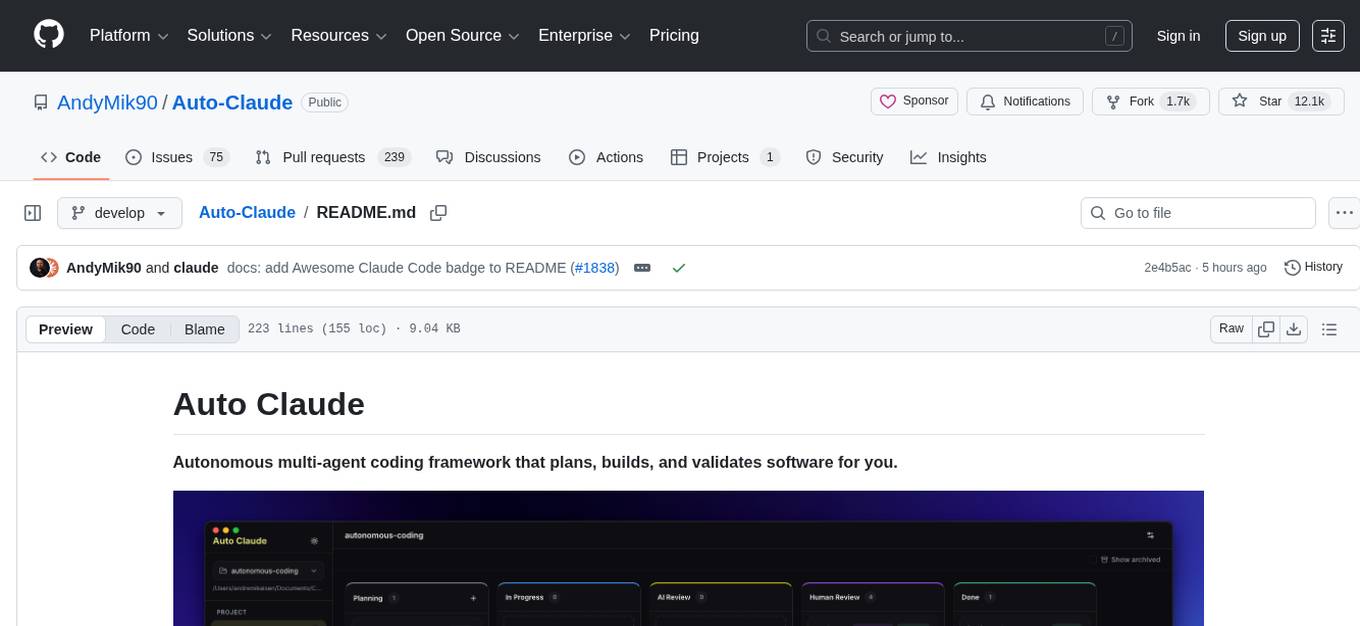

Auto-Claude

Auto Claude is an autonomous multi-agent coding framework that plans, builds, and validates software for users. It provides features such as autonomous tasks handling planning, implementation, and validation, parallel execution with multiple agent terminals, isolated workspaces for safe changes, self-validating quality assurance, AI-powered merge for conflict resolution, memory layer for smarter builds, GitHub/GitLab integration, cross-platform native desktop apps, auto-updates, and more. The tool offers a visual Kanban board for task management, AI-powered terminals for parallel work, AI-assisted feature planning, insights chat interface, ideation for code improvements, performance issues, and vulnerabilities discovery, and changelog generation from completed tasks. It follows a three-layer security model with OS sandbox, filesystem restrictions, and dynamic command allowlist, ensuring security through VirusTotal scans, SHA256 checksums, and code-signing for macOS releases.

claude-code-ultimate-guide

The Claude Code Ultimate Guide is an exhaustive documentation resource that takes users from beginner to power user in using Claude Code. It includes production-ready templates, workflow guides, a quiz, and a cheatsheet for daily use. The guide covers educational depth, methodologies, and practical examples to help users understand concepts and workflows. It also provides interactive onboarding, a repository structure overview, and learning paths for different user levels. The guide is regularly updated and offers a unique 257-question quiz for comprehensive assessment. Users can also find information on agent teams coverage, methodologies, annotated templates, resource evaluations, and learning paths for different roles like junior developer, senior developer, power user, and product manager/devops/designer.

motia

Motia is an AI agent framework designed for software engineers to create, test, and deploy production-ready AI agents quickly. It provides a code-first approach, allowing developers to write agent logic in familiar languages and visualize execution in real-time. With Motia, developers can focus on business logic rather than infrastructure, offering zero infrastructure headaches, multi-language support, composable steps, built-in observability, instant APIs, and full control over AI logic. Ideal for building sophisticated agents and intelligent automations, Motia's event-driven architecture and modular steps enable the creation of GenAI-powered workflows, decision-making systems, and data processing pipelines.

EverMemOS

EverMemOS is an AI memory system that enables AI to not only remember past events but also understand the meaning behind memories and use them to guide decisions. It achieves 93% reasoning accuracy on the LoCoMo benchmark by providing long-term memory capabilities for conversational AI agents through structured extraction, intelligent retrieval, and progressive profile building. The tool is production-ready with support for Milvus vector DB, Elasticsearch, MongoDB, and Redis, and offers easy integration via a simple REST API. Users can store and retrieve memories using Python code and benefit from features like multi-modal memory storage, smart retrieval mechanisms, and advanced techniques for memory management.

langtrace

Langtrace is an open source observability software that lets you capture, debug, and analyze traces and metrics from all your applications that leverage LLM APIs, Vector Databases, and LLM-based Frameworks. It supports Open Telemetry Standards (OTEL), and the traces generated adhere to these standards. Langtrace offers both a managed SaaS version (Langtrace Cloud) and a self-hosted option. The SDKs for both Typescript/Javascript and Python are available, making it easy to integrate Langtrace into your applications. Langtrace automatically captures traces from various vendors, including OpenAI, Anthropic, Azure OpenAI, Langchain, LlamaIndex, Pinecone, and ChromaDB.

ai-dev-kit

The AI Dev Kit is a comprehensive toolkit designed to enhance AI-driven development on Databricks. It provides trusted sources for AI coding assistants like Claude Code and Cursor to build faster and smarter on Databricks. The kit includes features such as Spark Declarative Pipelines, Databricks Jobs, AI/BI Dashboards, Unity Catalog, Genie Spaces, Knowledge Assistants, MLflow Experiments, Model Serving, Databricks Apps, and more. Users can choose from different adventures like installing the kit, using the visual builder app, teaching AI assistants Databricks patterns, executing Databricks actions, or building custom integrations with the core library. The kit also includes components like databricks-tools-core, databricks-mcp-server, databricks-skills, databricks-builder-app, and ai-dev-project.

GraphGen

GraphGen is a framework for synthetic data generation guided by knowledge graphs. It enhances supervised fine-tuning for large language models (LLMs) by generating synthetic data based on a fine-grained knowledge graph. The tool identifies knowledge gaps in LLMs, prioritizes generating QA pairs targeting high-value knowledge, incorporates multi-hop neighborhood sampling, and employs style-controlled generation to diversify QA data. Users can use LLaMA-Factory and xtuner for fine-tuning LLMs after data generation.

new-api

New API is a next-generation large model gateway and AI asset management system that provides a wide range of features, including a new UI interface, multi-language support, online recharge function, key query for usage quota, compatibility with the original One API database, model charging by usage count, channel weighted randomization, data dashboard, token grouping and model restrictions, support for various authorization login methods, support for Rerank models, OpenAI Realtime API, Claude Messages format, reasoning effort setting, content reasoning, user-specific model rate limiting, request format conversion, cache billing support, and various model support such as gpts, Midjourney-Proxy, Suno API, custom channels, Rerank models, Claude Messages format, Dify, and more.

prism-insight

PRISM-INSIGHT is a comprehensive stock analysis and trading simulation system based on AI agents. It automatically captures daily surging stocks via Telegram channel, generates expert-level analyst reports, and performs trading simulations. The system utilizes OpenAI GPT-4.1 for in-depth stock analysis and GPT-5 for investment strategy simulation. It also interacts with users via Anthropic Claude for Telegram conversations. The system architecture includes AI analysis agents, stock tracking, PDF conversion, and Telegram bot functionalities. Users can customize criteria for identifying surging stocks, modify AI prompts, and adjust chart styles. The project is open-source under the MIT license, and all investment decisions based on the analysis are the responsibility of the user.

gateway

Gateway is a tool that streamlines requests to 100+ open & closed source models with a unified API. It is production-ready with support for caching, fallbacks, retries, timeouts, load balancing, and can be edge-deployed for minimum latency. It is blazing fast with a tiny footprint, supports load balancing across multiple models, providers, and keys, ensures app resilience with fallbacks, offers automatic retries with exponential fallbacks, allows configurable request timeouts, supports multimodal routing, and can be extended with plug-in middleware. It is battle-tested over 300B tokens and enterprise-ready for enhanced security, scale, and custom deployments.

For similar tasks

qdrant

Qdrant is a vector similarity search engine and vector database. It is written in Rust, which makes it fast and reliable even under high load. Qdrant can be used for a variety of applications, including: * Semantic search * Image search * Product recommendations * Chatbots * Anomaly detection Qdrant offers a variety of features, including: * Payload storage and filtering * Hybrid search with sparse vectors * Vector quantization and on-disk storage * Distributed deployment * Highlighted features such as query planning, payload indexes, SIMD hardware acceleration, async I/O, and write-ahead logging Qdrant is available as a fully managed cloud service or as an open-source software that can be deployed on-premises.

SynapseML

SynapseML (previously known as MMLSpark) is an open-source library that simplifies the creation of massively scalable machine learning (ML) pipelines. It provides simple, composable, and distributed APIs for various machine learning tasks such as text analytics, vision, anomaly detection, and more. Built on Apache Spark, SynapseML allows seamless integration of models into existing workflows. It supports training and evaluation on single-node, multi-node, and resizable clusters, enabling scalability without resource wastage. Compatible with Python, R, Scala, Java, and .NET, SynapseML abstracts over different data sources for easy experimentation. Requires Scala 2.12, Spark 3.4+, and Python 3.8+.

mlx-vlm

MLX-VLM is a package designed for running Vision LLMs on Mac systems using MLX. It provides a convenient way to install and utilize the package for processing large language models related to vision tasks. The tool simplifies the process of running LLMs on Mac computers, offering a seamless experience for users interested in leveraging MLX for vision-related projects.

Java-AI-Book-Code

The Java-AI-Book-Code repository contains code examples for the 2020 edition of 'Practical Artificial Intelligence With Java'. It is a comprehensive update of the previous 2013 edition, featuring new content on deep learning, knowledge graphs, anomaly detection, linked data, genetic algorithms, search algorithms, and more. The repository serves as a valuable resource for Java developers interested in AI applications and provides practical implementations of various AI techniques and algorithms.

Awesome-AI-Data-Guided-Projects

A curated list of data science & AI guided projects to start building your portfolio. The repository contains guided projects covering various topics such as large language models, time series analysis, computer vision, natural language processing (NLP), and data science. Each project provides detailed instructions on how to implement specific tasks using different tools and technologies.

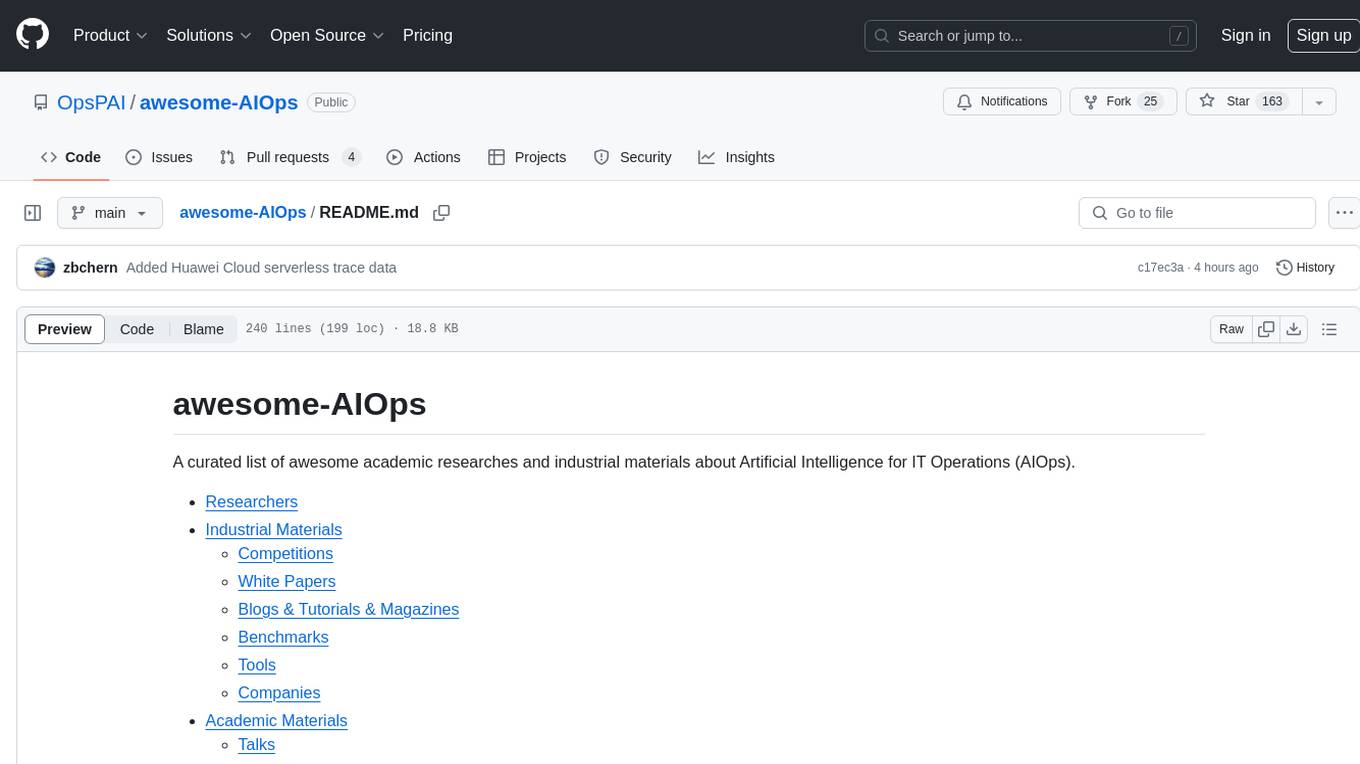

awesome-AIOps

awesome-AIOps is a curated list of academic researches and industrial materials related to Artificial Intelligence for IT Operations (AIOps). It includes resources such as competitions, white papers, blogs, tutorials, benchmarks, tools, companies, academic materials, talks, workshops, papers, and courses covering various aspects of AIOps like anomaly detection, root cause analysis, incident management, microservices, dependency tracing, and more.

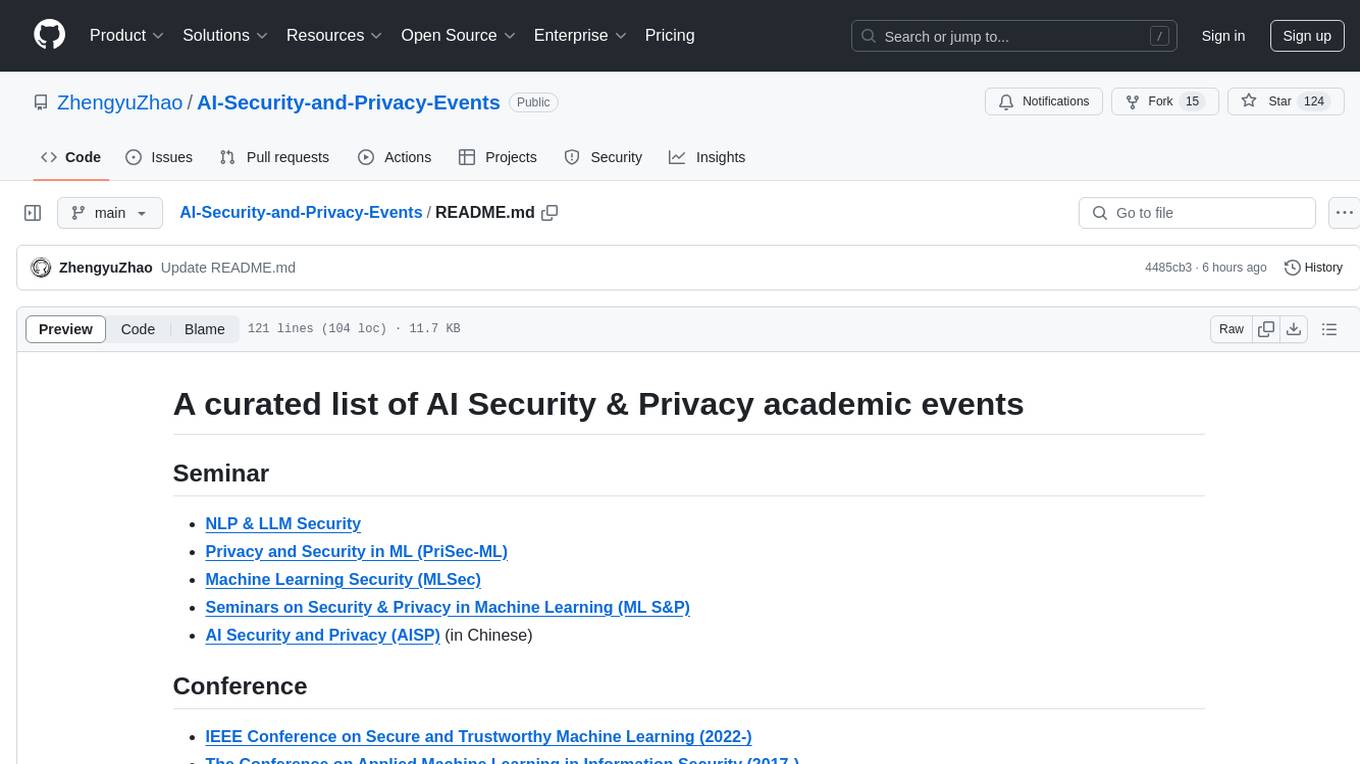

AI-Security-and-Privacy-Events

AI-Security-and-Privacy-Events is a curated list of academic events focusing on AI security and privacy. It includes seminars, conferences, workshops, tutorials, special sessions, and covers various topics such as NLP & LLM Security, Privacy and Security in ML, Machine Learning Security, AI System with Confidential Computing, Adversarial Machine Learning, and more.

AI_Spectrum

AI_Spectrum is a versatile machine learning library that provides a wide range of tools and algorithms for building and deploying AI models. It offers a user-friendly interface for data preprocessing, model training, and evaluation. With AI_Spectrum, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is designed to be flexible and scalable, making it suitable for both beginners and experienced data scientists.

For similar jobs

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

airbyte

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's no-code Connector Builder or low-code CDK. Airbyte is used by data engineers and analysts at companies of all sizes to build and manage their data pipelines.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.