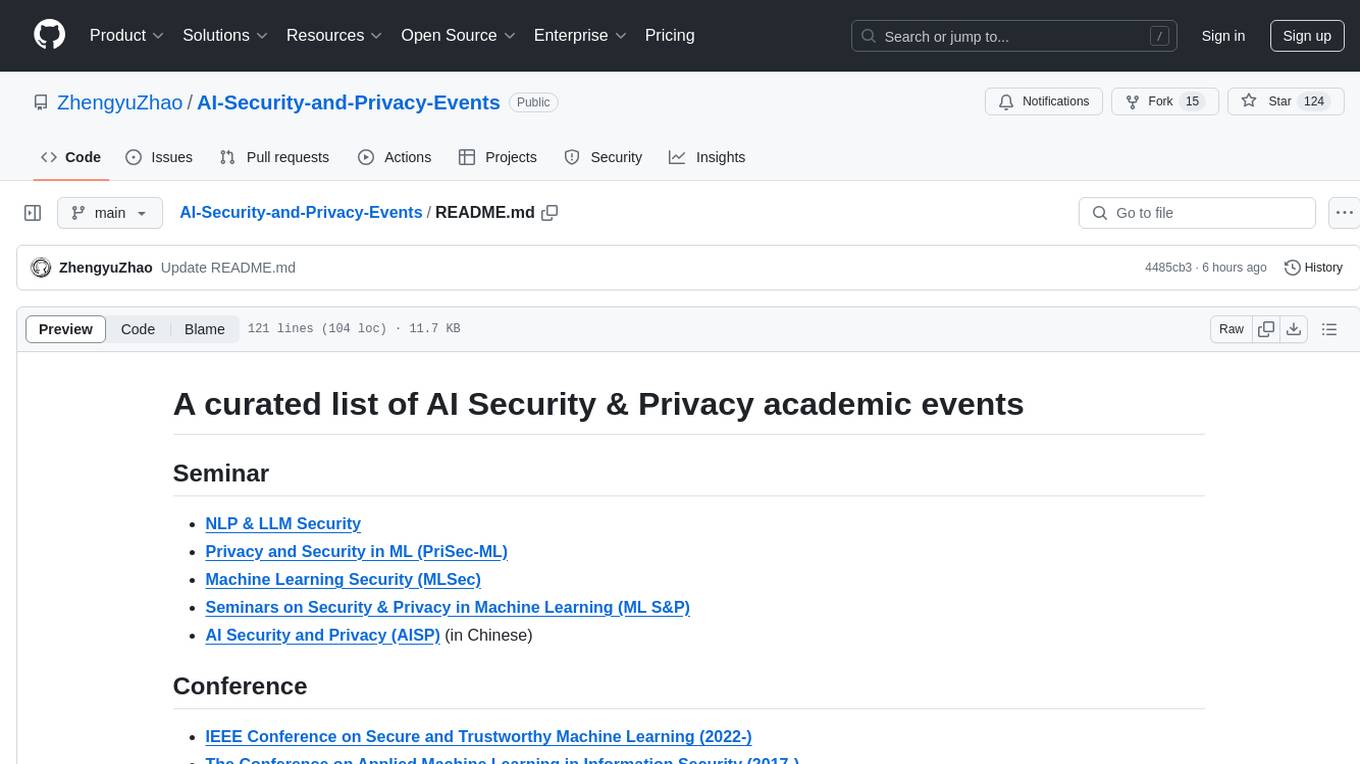

AI-Security-and-Privacy-Events

A curated list of academic events on AI Security & Privacy

Stars: 124

AI-Security-and-Privacy-Events is a curated list of academic events focusing on AI security and privacy. It includes seminars, conferences, workshops, tutorials, and special sessions, and covers topics such as NLP & LLM Security, Privacy and Security in ML, Machine Learning Security, AI System with Confidential Computing, Adversarial Machine Learning, and more.

README:

Seminars:

- NLP & LLM Security

- Privacy and Security in ML (PriSec-ML)

- Machine Learning Security (MLSec)

- Seminars on Security & Privacy in Machine Learning (ML S&P)

- AI Security and Privacy (AISP) (in Chinese)

Conferences:

- IEEE Conference on Secure and Trustworthy Machine Learning (2022-)

- The Conference on Applied Machine Learning in Information Security (2017-)

Workshops (general ML/AI):

- Red Teaming GenAI: What Can We Learn from Adversaries? (NeurIPS 2024)

- Safe Generative AI (NeurIPS 2024)

- Towards Safe & Trustworthy Agents (NeurIPS 2024)

- Socially Responsible Language Modelling Research (NeurIPS 2024)

- Next Generation of AI Safety (ICML 2024)

- Trustworthy Multi-modal Foundation Models and AI Agents (ICML 2024)

- Secure and Trustworthy Large Language Models (ICLR 2024)

- Reliable and Responsible Foundation Models (ICLR 2024)

- Privacy Regulation and Protection in Machine Learning (ICLR 2024)

- Responsible Language Models (AAAI 2024)

- Privacy-Preserving Artificial Intelligence (AAAI 2020-2024)

- Practical Deep Learning in the Wild (CAI 2024, AAAI 2022-2023)

- Backdoors in Deep Learning: The Good, the Bad, and the Ugly (NeurIPS 2023)

- Trustworthy and Reliable Large-Scale Machine Learning Models (ICLR 2023)

- Backdoor Attacks and Defenses in Machine Learning (ICLR 2023)

- Privacy, Accountability, Interpretability, Robustness, Reasoning on Structured Data (ICLR 2022)

- Security and Safety in Machine Learning Systems (ICLR 2021)

- Robust and Reliable Machine Learning in the Real World (ICLR 2021)

- Towards Trustworthy ML: Rethinking Security and Privacy for ML (ICLR 2020)

- Safe Machine Learning: Specification, Robustness and Assurance (ICLR 2019)

- New Frontiers in Adversarial Machine Learning (ICML 2022-2023)

- Theory and Practice of Differential Privacy (ICML 2021-2022)

- Uncertainty & Robustness in Deep Learning (ICML 2020-2021)

- A Blessing in Disguise: The Prospects and Perils of Adversarial Machine Learning (ICML 2021)

- Security and Privacy of Machine Learning (ICML 2019)

- Socially Responsible Machine Learning (NeurIPS 2022, ICLR 2022, ICML 2021)

- ML Safety (NeurIPS 2022)

- Privacy in Machine Learning (NeurIPS 2021)

- Dataset Curation and Security (NeurIPS 2020)

- Security in Machine Learning (NeurIPS 2018)

- Machine Learning and Computer Security (NeurIPS 2017)

- Adversarial Training (NeurIPS 2016)

- Reliable Machine Learning in the Wild (NeurIPS 2016)

- Adversarial Learning Methods for Machine Learning and Data Mining (KDD 2019-2022)

- Privacy Preserving Machine Learning (FOCS 2022, CCS 2021, NeurIPS 2020, CCS 2019, NeurIPS 2018)

- SafeAI (AAAI 2019-2022)

- Adversarial Machine Learning and Beyond (AAAI 2022)

- Towards Robust, Secure and Efficient Machine Learning (AAAI 2021)

- AISafety (IJCAI 2019-2022)

Workshops (computer vision):

- The Dark Side of Generative AIs and Beyond (ECCV 2024)

- Trust What You learN (ECCV 2024)

- Privacy for Vision & Imaging (ECCV 2024)

- Adversarial Machine Learning on Computer Vision (CVPR 2024, CVPR 2023, CVPR 2022, CVPR 2020)

- Secure and Safe Autonomous Driving (CVPR 2023)

- Adversarial Robustness in the Real World (ICCV 2023, ECCV 2022, ICCV 2021, CVPR 2021, ECCV 2020, CVPR 2020, CVPR 2019)

- The Bright and Dark Sides of Computer Vision: Challenges and Opportunities for Privacy and Security (CVPR 2021, ECCV 2020, CVPR 2019, CVPR 2018, CVPR 2017)

- Responsible Computer Vision (ECCV 2022)

- Safe Artificial Intelligence for Automated Driving (ECCV 2022)

- Adversarial Learning for Multimedia (ACMMM 2021)

- Adversarial Machine Learning towards Advanced Vision Systems (ACCV 2022)

Workshops (NLP):

- Trustworthy Natural Language Processing (2021-2024)

- Privacy in Natural Language Processing (ACL 2024, NAACL 2022, NAACL 2021, EMNLP 2020, WSDM 2020)

- BlackboxNLP (2018-2024)

Workshops (recommender systems):

- Online Misinformation- and Harm-Aware Recommender Systems (RecSys 2021, RecSys 2020)

- Adversarial Machine Learning for Recommendation and Search (CIKM 2021)

Tutorials (general ML/AI):

- Quantitative Reasoning About Data Privacy in Machine Learning (ICML 2022)

- Foundational Robustness of Foundation Models (NeurIPS 2022)

- Adversarial Robustness - Theory and Practice (NeurIPS 2018)

- Towards Adversarial Learning: from Evasion Attacks to Poisoning Attacks (KDD 2022)

- Adversarial Robustness in Deep Learning: From Practices to Theories (KDD 2021)

- Adversarial Attacks and Defenses: Frontiers, Advances and Practice (KDD 2020)

- Adversarial Robustness of Deep Learning: Theory, Algorithms, and Applications (ICDM 2020)

- Adversarial Machine Learning for Good (AAAI 2022)

- Adversarial Machine Learning (AAAI 2018)

Tutorials (computer vision):

- Adversarial Machine Learning in Computer Vision (CVPR 2021)

- Practical Adversarial Robustness in Deep Learning: Problems and Solutions (CVPR 2021)

- Adversarial Robustness of Deep Learning Models (ECCV 2020)

- Deep Learning for Privacy in Multimedia (ACMMM 2020)

Tutorials (NLP):

- Vulnerabilities of Large Language Models to Adversarial Attacks (ACL 2024)

- Robustness and Adversarial Examples in Natural Language Processing (EMNLP 2021)

- Deep Adversarial Learning for NLP (NAACL 2019)

Tutorials (recommender systems):

- Adversarial Machine Learning in Recommender Systems (ECIR 2021, RecSys 2020, WSDM 2020)

Special sessions and tracks:

- Special Track on Safe and Robust AI (AAAI 2023)

- Special Session on Adversarial Learning for Multimedia Understanding and Retrieval (ICMR 2022)

- Special Session on Adversarial Attack and Defense (APSIPA 2022)

- Special Session on Information Security meets Adversarial Examples (WIFS 2019)


Alternative AI tools for AI-Security-and-Privacy-Events

Similar Open Source Tools

AI-Security-and-Privacy-Events

AI-Security-and-Privacy-Events is a curated list of academic events focusing on AI security and privacy. It includes seminars, conferences, workshops, tutorials, special sessions, and covers various topics such as NLP & LLM Security, Privacy and Security in ML, Machine Learning Security, AI System with Confidential Computing, Adversarial Machine Learning, and more.

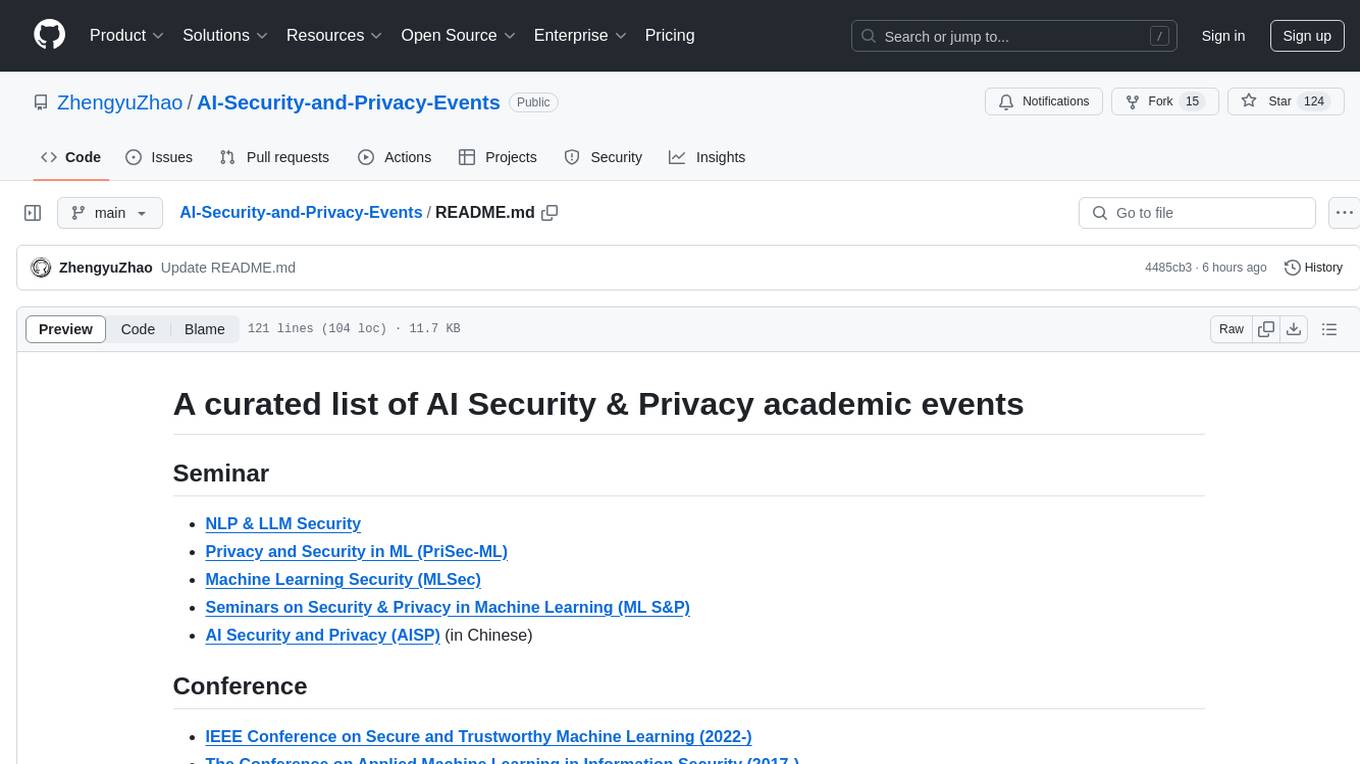

Fueling-Ambitions-Via-Book-Discoveries

Fueling-Ambitions-Via-Book-Discoveries is an Advanced Machine Learning & AI Course designed for students, professionals, and AI researchers. The course pairs rigorous theoretical foundations with practical coding exercises so that learners develop a deep understanding of AI algorithms and their applications in finance, healthcare, robotics, NLP, cybersecurity, and more. Inspired by the AI programs at MIT, Stanford, and Harvard, it combines academic research rigor with industry-standard practices used by AI engineers at companies such as Google, OpenAI, Facebook AI, DeepMind, and Tesla. Learners study 50+ AI techniques drawn from top Machine Learning & Deep Learning books, code them from scratch on real-world datasets, projects, and case studies, and focus on ML Engineering & AI Deployment with Django & Streamlit. The course also offers industry-relevant projects for building a strong AI portfolio.

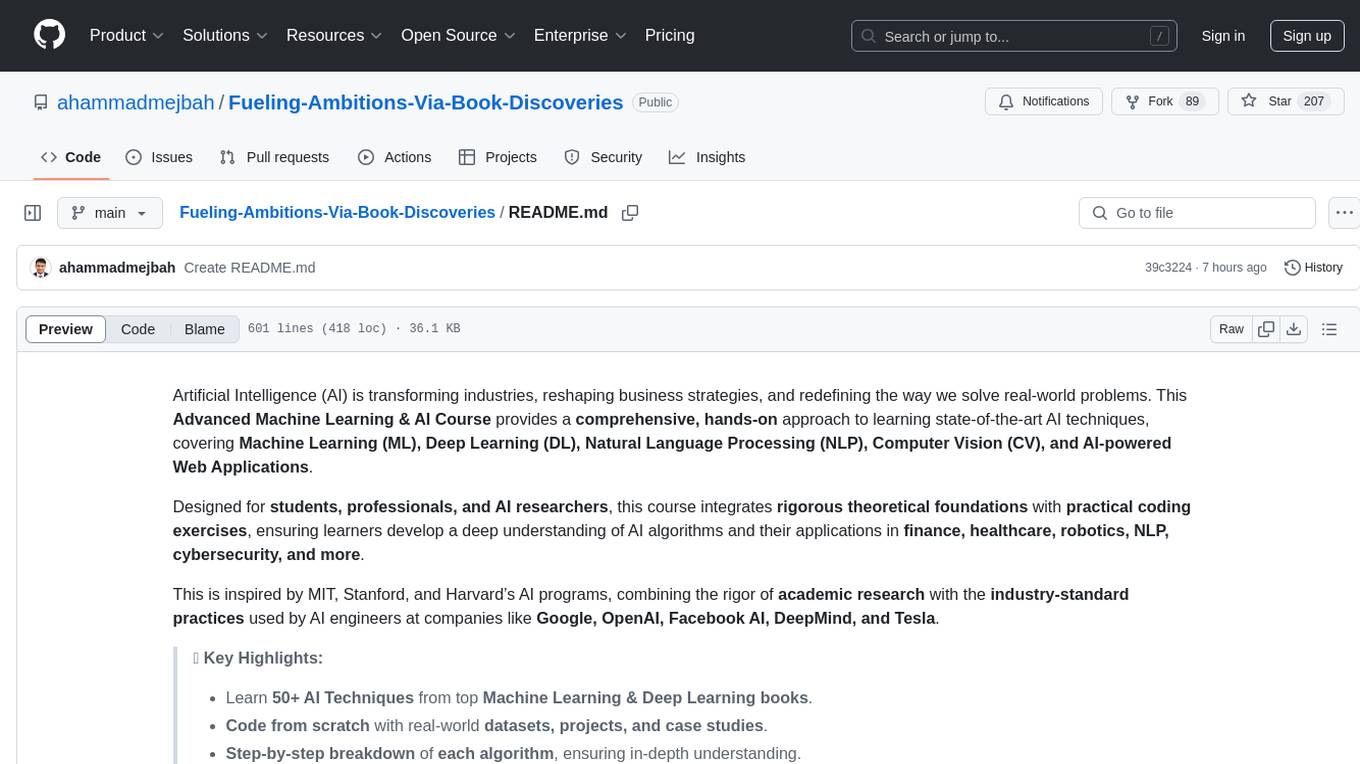

CyberSentinel-AI

CyberSentinel AI is an automated security monitoring and AI analysis system that helps security researchers and enthusiasts track the latest security vulnerabilities (CVEs) and security-related GitHub repositories in real time. It uses AI for in-depth analysis and automatically publishes valuable security intelligence to a blogging platform. Key features include monitoring of multiple data sources; AI analysis using the OpenAI and Gemini engines; a fully automated workflow with 24/7 monitoring, daily briefings, and dynamic blacklists; flexible configuration and management with support for multiple tokens, configurable parameters, and detailed logging; and automatic publishing to an integrated blogging platform with Markdown reports.

AiLearning-Theory-Applying

This repository provides a comprehensive guide to understanding and applying artificial intelligence (AI) theory, including basic knowledge, machine learning, deep learning, and natural language processing (BERT). It features detailed explanations, annotated code, and datasets to help users grasp the concepts and implement them in practice. The repository is continuously updated to ensure the latest information and best practices are covered.

LLM-Navigation

LLM-Navigation is a repository dedicated to documenting learning records related to large models, including basic knowledge, prompt engineering, building effective agents, model expansion capabilities, security measures against prompt injection, and applications in various fields such as AI agent control, browser automation, financial analysis, 3D modeling, and tool navigation using MCP servers. The repository aims to organize and collect information for personal learning and self-improvement through AI exploration.

AcademicForge

Academic Forge is a collection of skills integrated for academic writing workflows. It provides a curated set of skills related to academic writing and research, allowing for precise skill calls, avoiding confusion between similar skills, maintaining focus on research workflows, and receiving timely updates from original authors. The forge integrates carefully selected skills covering various areas such as bioinformatics, clinical research, data analysis, scientific writing, laboratory automation, machine learning, databases, AI research, model architectures, fine-tuning, post-training, distributed training, optimization, inference, evaluation, agents, multimodal tasks, and machine learning paper writing. It is designed to streamline the academic writing and AI research processes by providing a cohesive and community-driven collection of skills.

MedLLMsPracticalGuide

This repository serves as a practical guide for Medical Large Language Models (Medical LLMs) and provides resources, surveys, and tools for building, fine-tuning, and utilizing LLMs in the medical domain. It covers a wide range of topics including pre-training, fine-tuning, downstream biomedical tasks, clinical applications, challenges, future directions, and more. The repository aims to provide insights into the opportunities and challenges of LLMs in medicine and serve as a practical resource for constructing effective medical LLMs.

kcores-llm-arena

KCORES LLM Arena is a large model evaluation tool that focuses on real-world scenarios, using human scoring and benchmark testing to assess performance. It aims to provide an unbiased evaluation of large models in real-world applications. The tool includes programming ability tests and specific benchmarks like Mandelbrot Set, Mars Mission, Solar System, and Ball Bouncing Inside Spinning Heptagon. It supports various programming languages and emphasizes performance optimization, rendering, animations, physics simulations, and creative implementations.

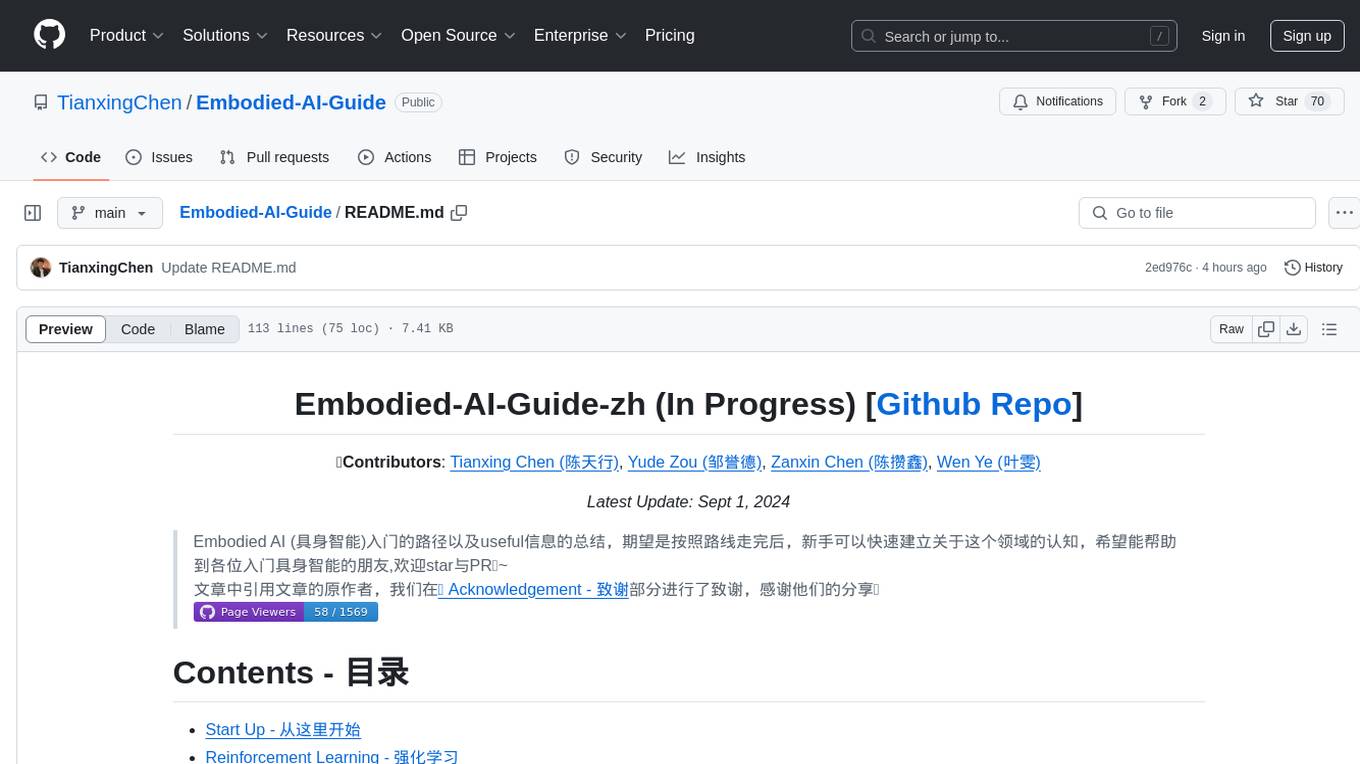

Embodied-AI-Guide

Embodied-AI-Guide is a comprehensive guide for beginners to understand Embodied AI, focusing on the path of entry and useful information in the field. It covers topics such as Reinforcement Learning, Imitation Learning, Large Language Model for Robotics, 3D Vision, Control, Benchmarks, and provides resources for building cognitive understanding. The repository aims to help newcomers quickly establish knowledge in the field of Embodied AI.

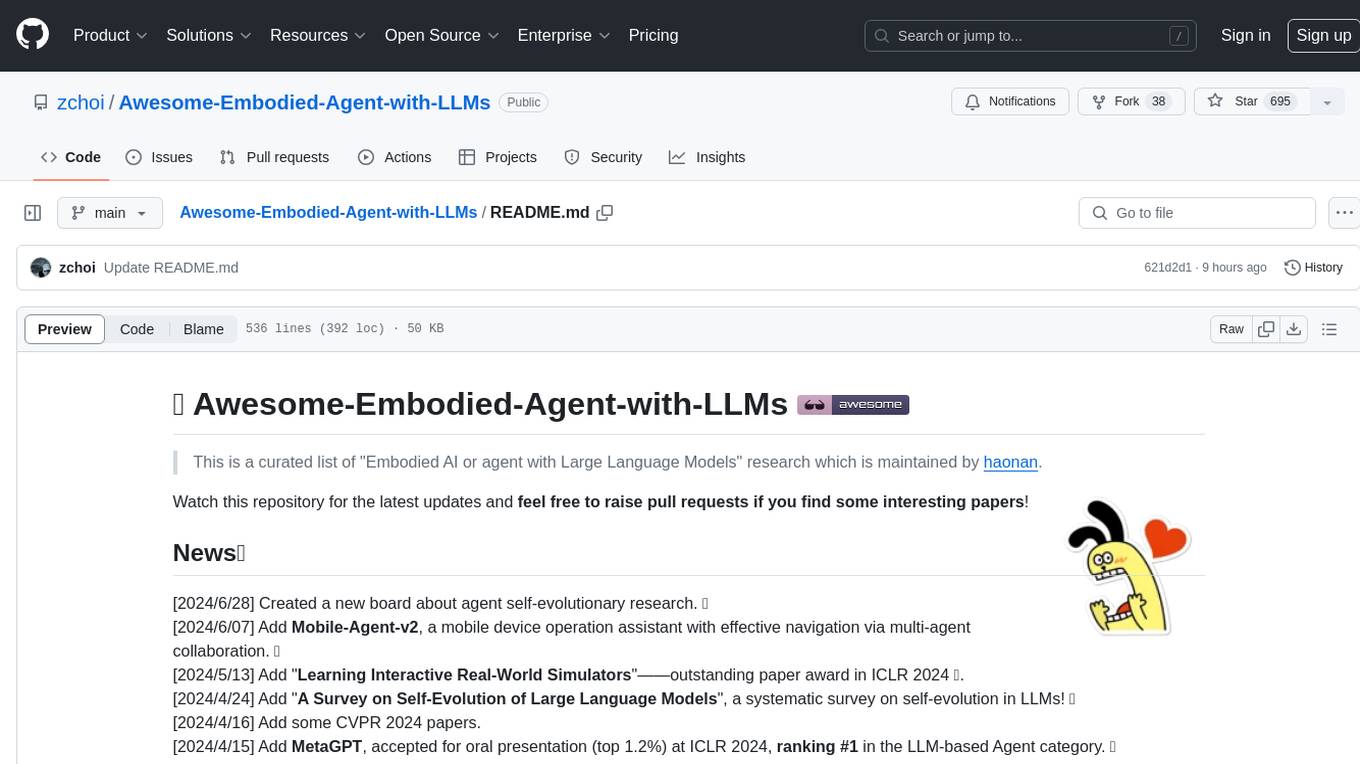

Awesome-Embodied-Agent-with-LLMs

This repository, named Awesome-Embodied-Agent-with-LLMs, is a curated list of research related to Embodied AI or agents with Large Language Models. It includes various papers, surveys, and projects focusing on topics such as self-evolving agents, advanced agent applications, LLMs with RL or world models, planning and manipulation, multi-agent learning and coordination, vision and language navigation, detection, 3D grounding, interactive embodied learning, rearrangement, benchmarks, simulators, and more. The repository provides a comprehensive collection of resources for individuals interested in exploring the intersection of embodied agents and large language models.

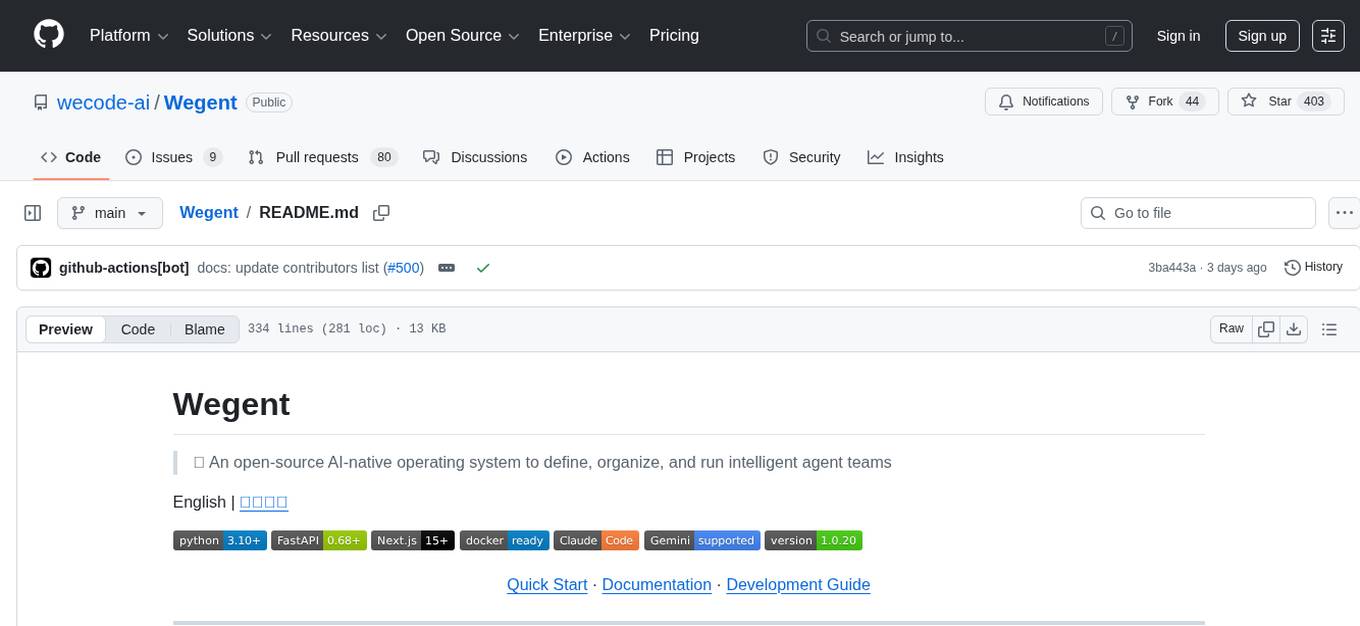

Wegent

Wegent is an open-source AI-native operating system for defining, organizing, and running intelligent agent teams. Its core features include a chat agent with multi-model support, conversation history, group chat, attachment parsing, follow-up mode, error correction mode, long-term memory, sandbox execution, and extensions. Wegent also includes a code agent for cloud-based code execution, an AI feed for task triggers, AI knowledge for document management, and an AI device for running tasks locally. The platform is highly extensible, with support for custom agents, an agent creation wizard, organization management, collaboration modes, skills, MCP tools, execution engines, YAML configuration, and an API for integration with other systems.

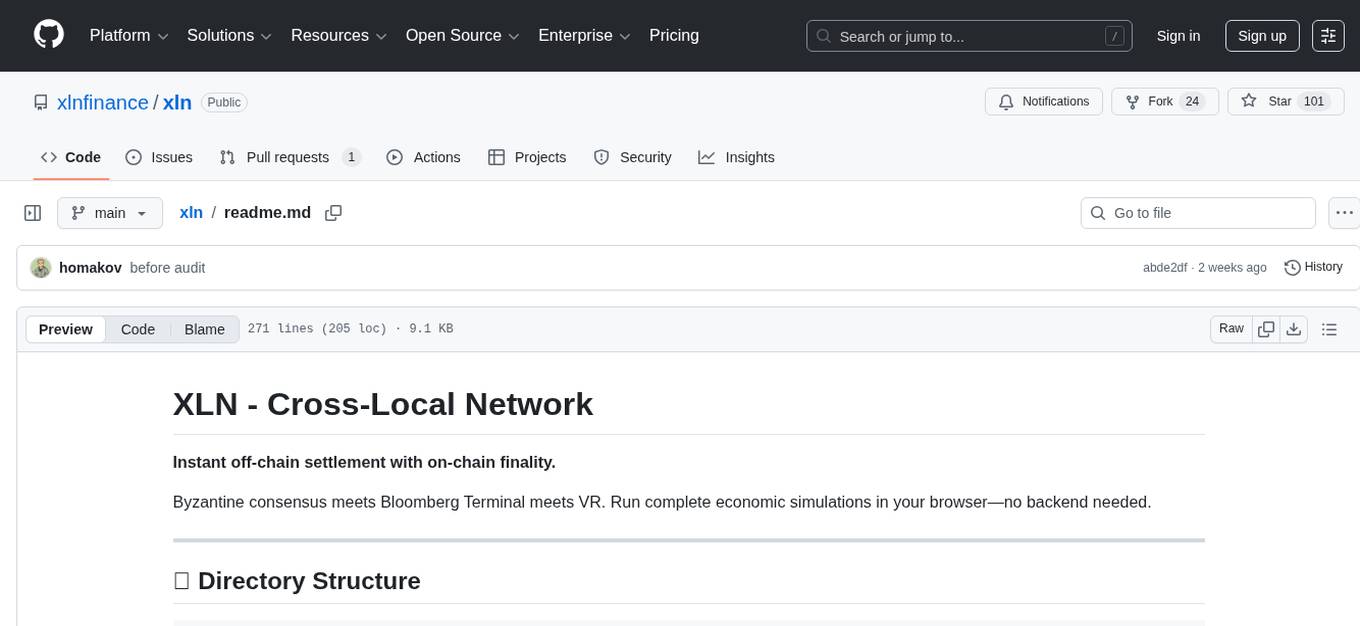

xln

XLN (Cross-Local Network) is a platform that enables instant off-chain settlement with on-chain finality. It combines Byzantine consensus, Bloomberg Terminal functionalities, and VR capabilities to run economic simulations in the browser without the need for a backend. The architecture includes layers for jurisdictions, entities, and accounts, with features like Solidity contracts, BFT consensus, and bilateral channels. The tool offers a panel system similar to Bloomberg Terminal for workspace organization and visualization, along with support for offline blockchain simulations in the browser and VR/Quest compatibility.

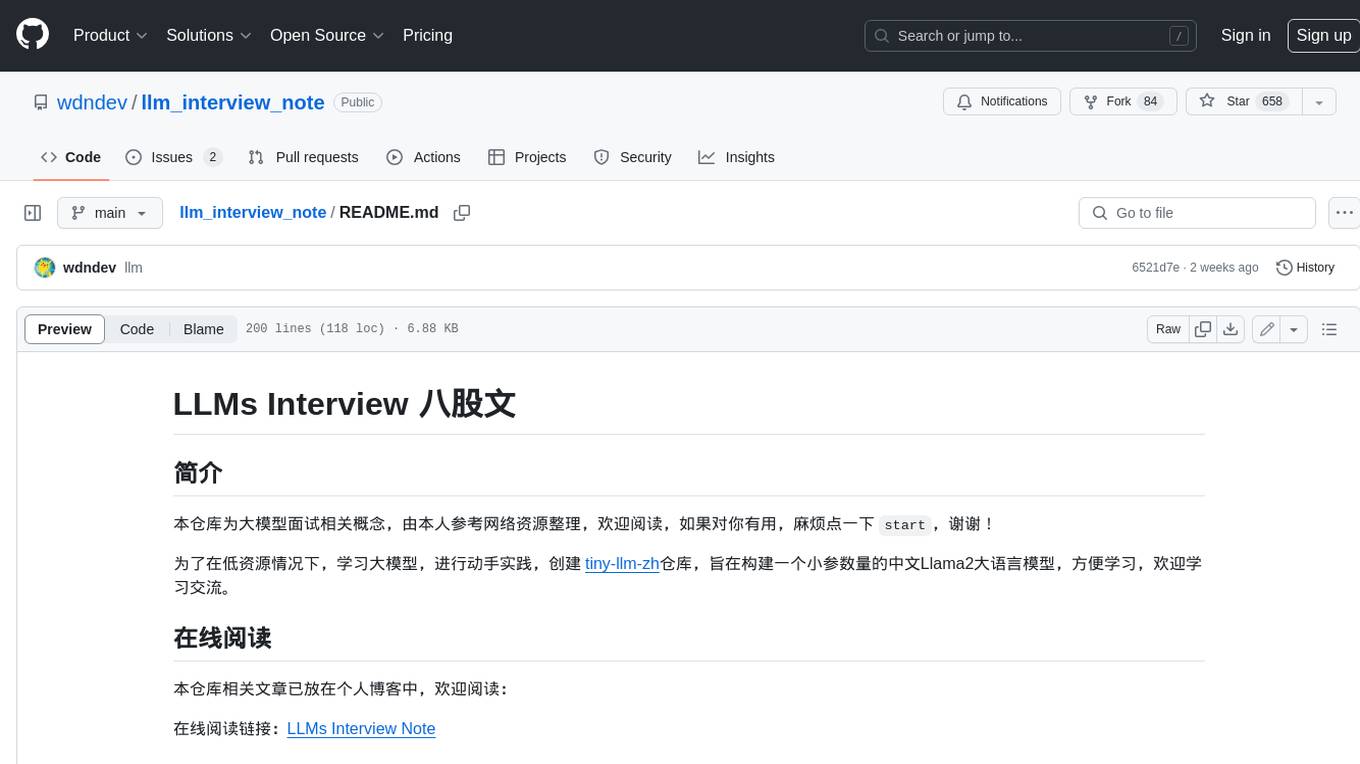

llm_interview_note

This repository provides a comprehensive overview of large language models (LLMs), covering various aspects such as their history, types, underlying architecture, training techniques, and applications. It includes detailed explanations of key concepts like Transformer models, distributed training, fine-tuning, and reinforcement learning. The repository also discusses the evaluation and limitations of LLMs, including the phenomenon of hallucinations. Additionally, it provides a list of related courses and references for further exploration.

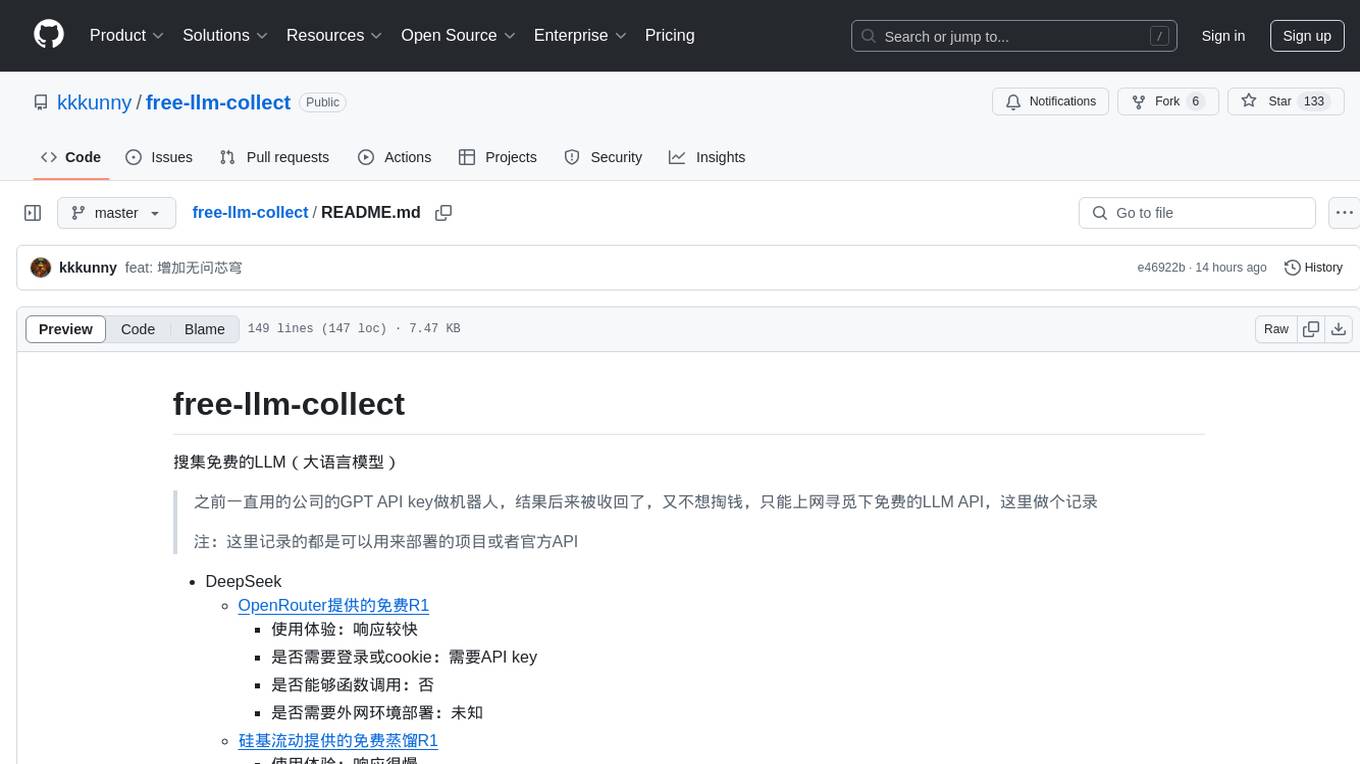

free-llm-collect

This repository is a collection of free large language models (LLMs) that can be used for various natural language processing tasks. It includes information on different free LLM APIs and projects that can be deployed without cost. Users can find details on the performance, login requirements, function calling capabilities, and deployment environments of each listed LLM source.

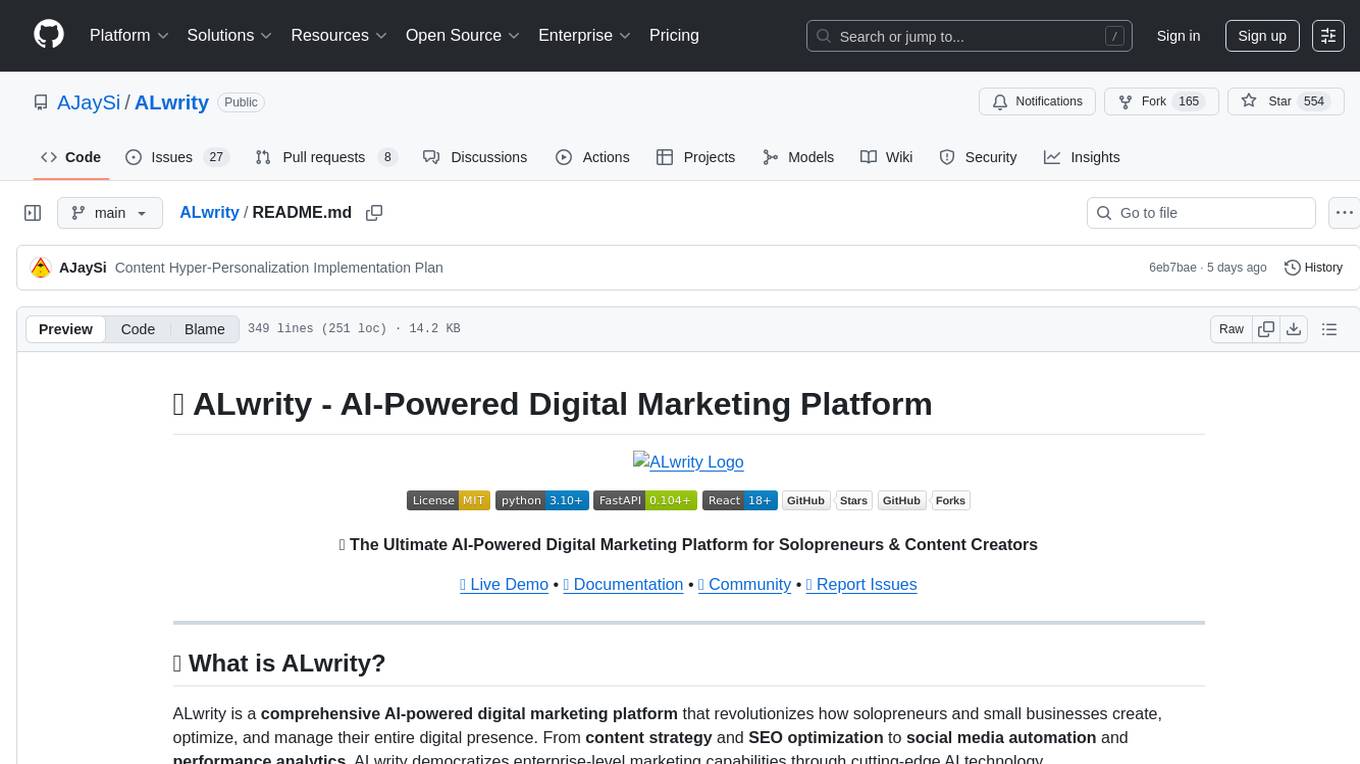

ALwrity

ALwrity is a lightweight and user-friendly text analysis tool designed for developers and data scientists. It provides various functionalities for analyzing and processing text data, including sentiment analysis, keyword extraction, and text summarization. With ALwrity, users can easily gain insights from their text data and make informed decisions based on the analysis results. The tool is highly customizable and can be integrated into existing workflows seamlessly, making it a valuable asset for anyone working with text data in their projects.

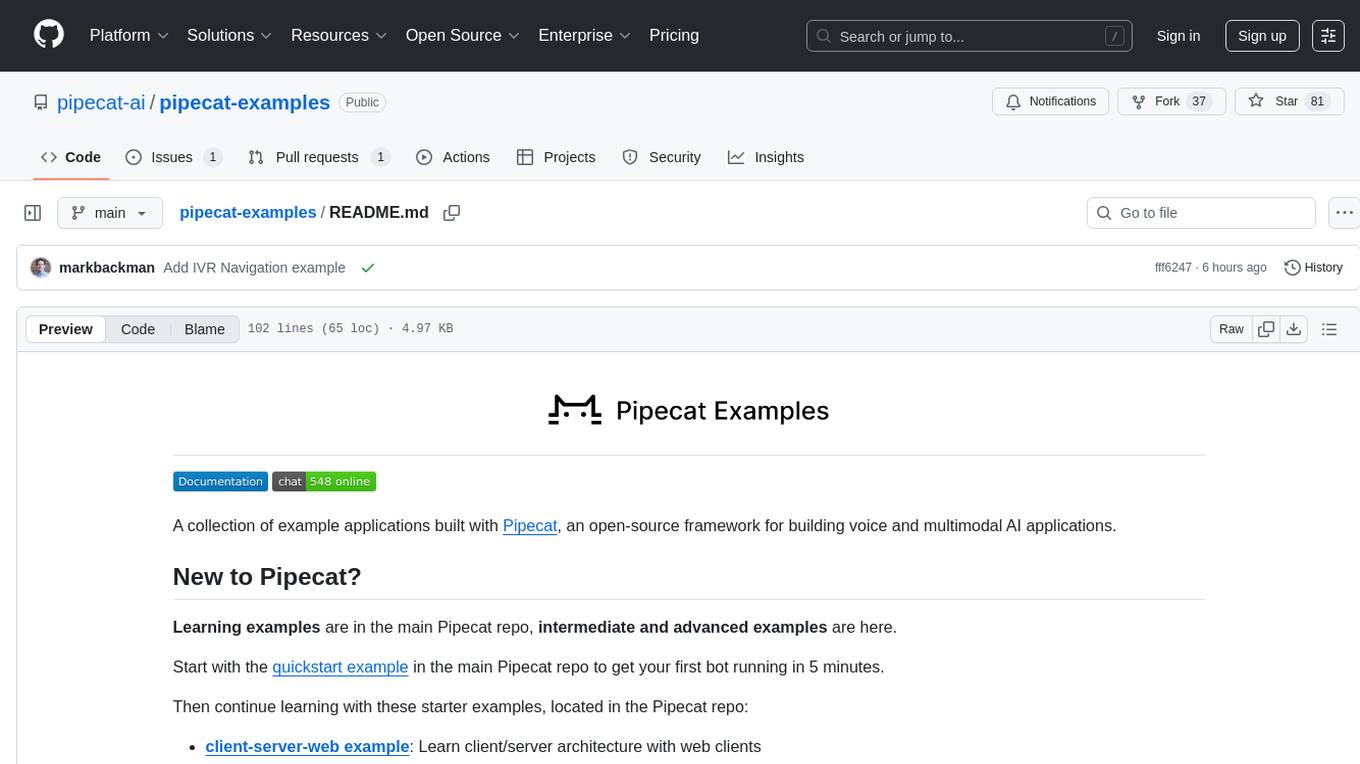

pipecat-examples

Pipecat-examples is a collection of example applications built with Pipecat, an open-source framework for building voice and multimodal AI applications. It includes various examples demonstrating telephony & voice calls, web & client applications, realtime APIs, multimodal & creative solutions, translation & localization tasks, support, educational & specialized use cases, advanced features, deployment & infrastructure setups, monitoring & analytics tools, and testing & development scenarios.

For similar tasks


open-computer-use

Open Computer Use is a secure cloud Linux computer powered by E2B Desktop Sandbox and controlled by open-source LLMs. It allows users to operate the computer via keyboard, mouse, and shell commands, live stream the display of the sandbox on the client computer, and pause or prompt the agent at any time. The tool is designed to work with any operating system and supports integration with various LLMs and providers following the OpenAI API specification.

codegate

CodeGate is a local gateway that enhances the safety of AI coding assistants by ensuring AI-generated recommendations adhere to best practices, safeguarding code integrity, and protecting individual privacy. Developed by Stacklok, CodeGate allows users to confidently leverage AI in their development workflow without compromising security or productivity. It works seamlessly with coding assistants, providing real-time security analysis of AI suggestions. CodeGate is designed with privacy at its core, keeping all data on the user's machine and offering complete control over data.

qdrant

Qdrant is a vector similarity search engine and vector database. It is written in Rust, which makes it fast and reliable even under high load. Qdrant can be used for a variety of applications, including:

- Semantic search

- Image search

- Product recommendations

- Chatbots

- Anomaly detection

Qdrant offers a variety of features, including:

- Payload storage and filtering

- Hybrid search with sparse vectors

- Vector quantization and on-disk storage

- Distributed deployment

- Highlighted features such as query planning, payload indexes, SIMD hardware acceleration, async I/O, and write-ahead logging

Qdrant is available as a fully managed cloud service or as open-source software that can be deployed on-premises.

SynapseML

SynapseML (previously known as MMLSpark) is an open-source library that simplifies the creation of massively scalable machine learning (ML) pipelines. It provides simple, composable, and distributed APIs for various machine learning tasks such as text analytics, vision, anomaly detection, and more. Built on Apache Spark, SynapseML allows seamless integration of models into existing workflows. It supports training and evaluation on single-node, multi-node, and resizable clusters, enabling scalability without resource wastage. Compatible with Python, R, Scala, Java, and .NET, SynapseML abstracts over different data sources for easy experimentation. Requires Scala 2.12, Spark 3.4+, and Python 3.8+.

mlx-vlm

MLX-VLM is a package for running vision language models (VLMs) on Mac using MLX. It offers a convenient way to install the package and run vision-capable models, simplifying local inference on Mac computers for users who want to use MLX in vision-related projects.

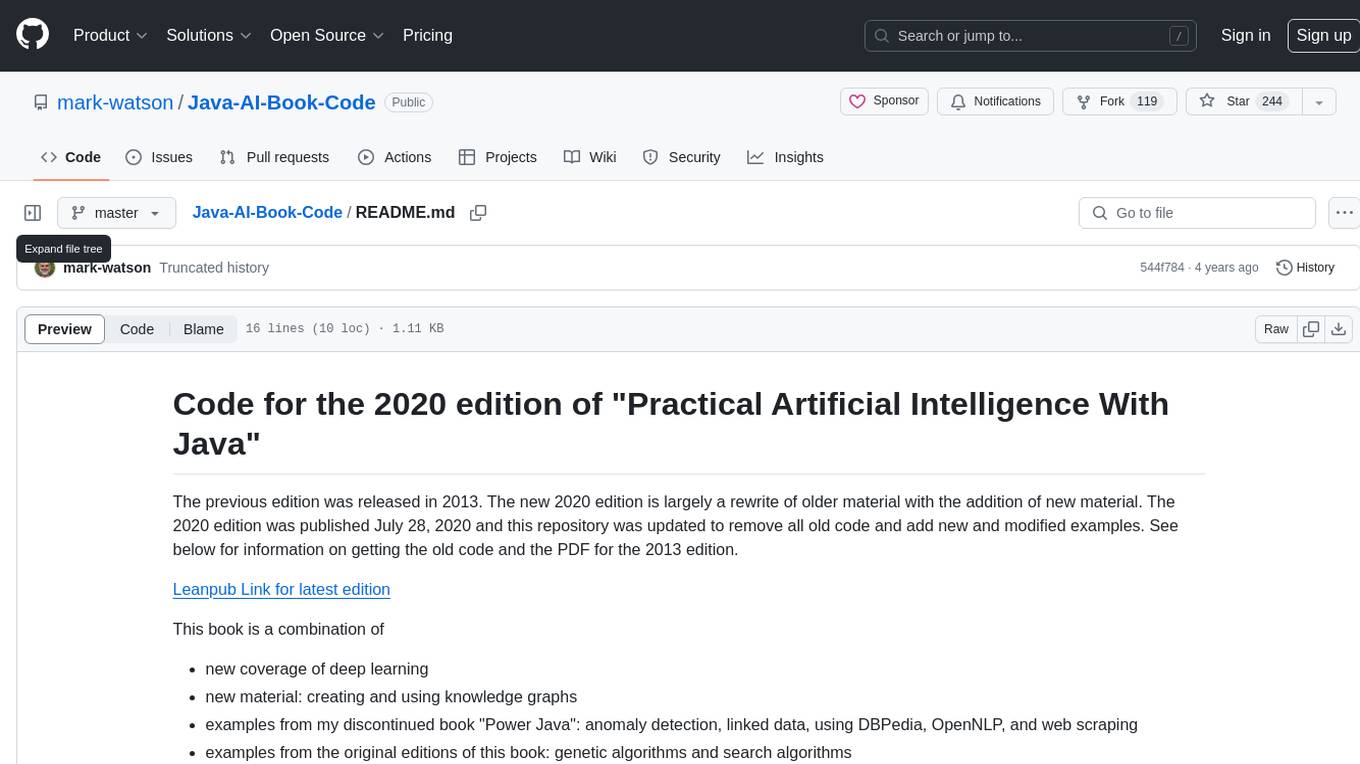

Java-AI-Book-Code

The Java-AI-Book-Code repository contains code examples for the 2020 edition of 'Practical Artificial Intelligence With Java'. It is a comprehensive update of the previous 2013 edition, featuring new content on deep learning, knowledge graphs, anomaly detection, linked data, genetic algorithms, search algorithms, and more. The repository serves as a valuable resource for Java developers interested in AI applications and provides practical implementations of various AI techniques and algorithms.

Awesome-AI-Data-Guided-Projects

A curated list of data science & AI guided projects to start building your portfolio. The repository contains guided projects covering various topics such as large language models, time series analysis, computer vision, natural language processing (NLP), and data science. Each project provides detailed instructions on how to implement specific tasks using different tools and technologies.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API that provides access to over 100 different AI models, covering modalities from images to sound.

kaito

Kaito is an operator that automates AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, provides preset configurations so that deployment parameters need not be tuned to fit GPU hardware, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) when the license allows. With Kaito, the workflow of onboarding large AI inference models in Kubernetes is greatly simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.