contextgem

ContextGem: Effortless LLM extraction from documents

Stars: 1492

ContextGem is a free, open-source Python framework for extracting structured data and insights from documents with LLMs. It replaces most of the boilerplate that extraction usually needs with built-in abstractions: aspects (document sections and topics), concepts (specific data points), source references, justifications, DOCX conversion, and fully serializable results.

README:


ContextGem is a free, open-source LLM framework that makes it radically easier to extract structured data and insights from documents — with minimal code.

Most popular LLM frameworks for extracting structured data from documents require extensive boilerplate code to extract even basic information. This significantly increases development time and complexity.

ContextGem addresses this challenge by providing a flexible, intuitive framework that extracts structured data and insights from documents with minimal effort. The complex, most time-consuming parts are handled with powerful abstractions, eliminating boilerplate code and reducing development overhead.

📖 Read more on the project motivation in the documentation.

| Built-in abstractions | ContextGem | Other LLM frameworks* |
|---|---|---|
| Automated dynamic prompts | 🟢 | ◯ |
| Automated data modelling and validators | 🟢 | ◯ |
| Precise granular reference mapping (paragraphs & sentences) | 🟢 | ◯ |
| Justifications (reasoning backing the extraction) | 🟢 | ◯ |
| Neural segmentation (using wtpsplit's SaT models) | 🟢 | ◯ |
| Multilingual support (I/O without prompting) | 🟢 | ◯ |
| Single, unified extraction pipeline (declarative, reusable, fully serializable) | 🟢 | 🟡 |
| Grouped LLMs with role-specific tasks | 🟢 | 🟡 |
| Nested context extraction | 🟢 | 🟡 |
| Unified, fully serializable results storage model (document) | 🟢 | 🟡 |
| Extraction task calibration with examples | 🟢 | 🟡 |
| Built-in concurrent I/O processing | 🟢 | 🟡 |
| Automated usage & costs tracking | 🟢 | 🟡 |
| Fallback and retry logic | 🟢 | 🟢 |
| Multiple LLM providers | 🟢 | 🟢 |

🟢 - fully supported - no additional setup required

🟡 - partially supported - requires additional setup

◯ - not supported - requires custom logic

* See descriptions of ContextGem abstractions and comparisons of specific implementation examples using ContextGem and other popular open-source LLM frameworks.
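Fallback and retry logic is listed above as built in. To show the general pattern, here is a minimal sketch that assumes each model is a plain Python callable. `call_with_fallback`, `AllModelsFailed`, `flaky_primary` and `fallback_model` are hypothetical names for illustration only, not ContextGem's API, and ContextGem's own retry behavior may differ.

```python
import time
from typing import Callable, Sequence, Tuple


class AllModelsFailed(Exception):
    """Raised when the primary model and every fallback have exhausted their retries."""


def call_with_fallback(
    models: Sequence[Tuple[str, Callable[[str], str]]],
    prompt: str,
    max_retries: int = 2,
    backoff_seconds: float = 0.0,
) -> Tuple[str, str]:
    """Try each (name, call) pair in order, retrying each up to max_retries times.

    Returns (model_name, response) from the first call that succeeds.
    """
    errors = []
    for name, call in models:
        for attempt in range(1, max_retries + 1):
            try:
                return name, call(prompt)
            except Exception as exc:  # a real client would catch only retryable errors
                errors.append(f"{name} attempt {attempt}: {exc}")
                if attempt < max_retries:
                    time.sleep(backoff_seconds * attempt)  # linear backoff before retrying this model
    raise AllModelsFailed("; ".join(errors))


# Hypothetical stand-ins for LLM clients
def flaky_primary(prompt: str) -> str:
    raise TimeoutError("rate limited")


def fallback_model(prompt: str) -> str:
    return f"extracted: {prompt}"


print(call_with_fallback([("primary", flaky_primary), ("fallback", fallback_model)], "payment terms"))
# ('fallback', 'extracted: payment terms')
```

Retries absorb transient failures on one model. The fallback list handles a model that keeps failing.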

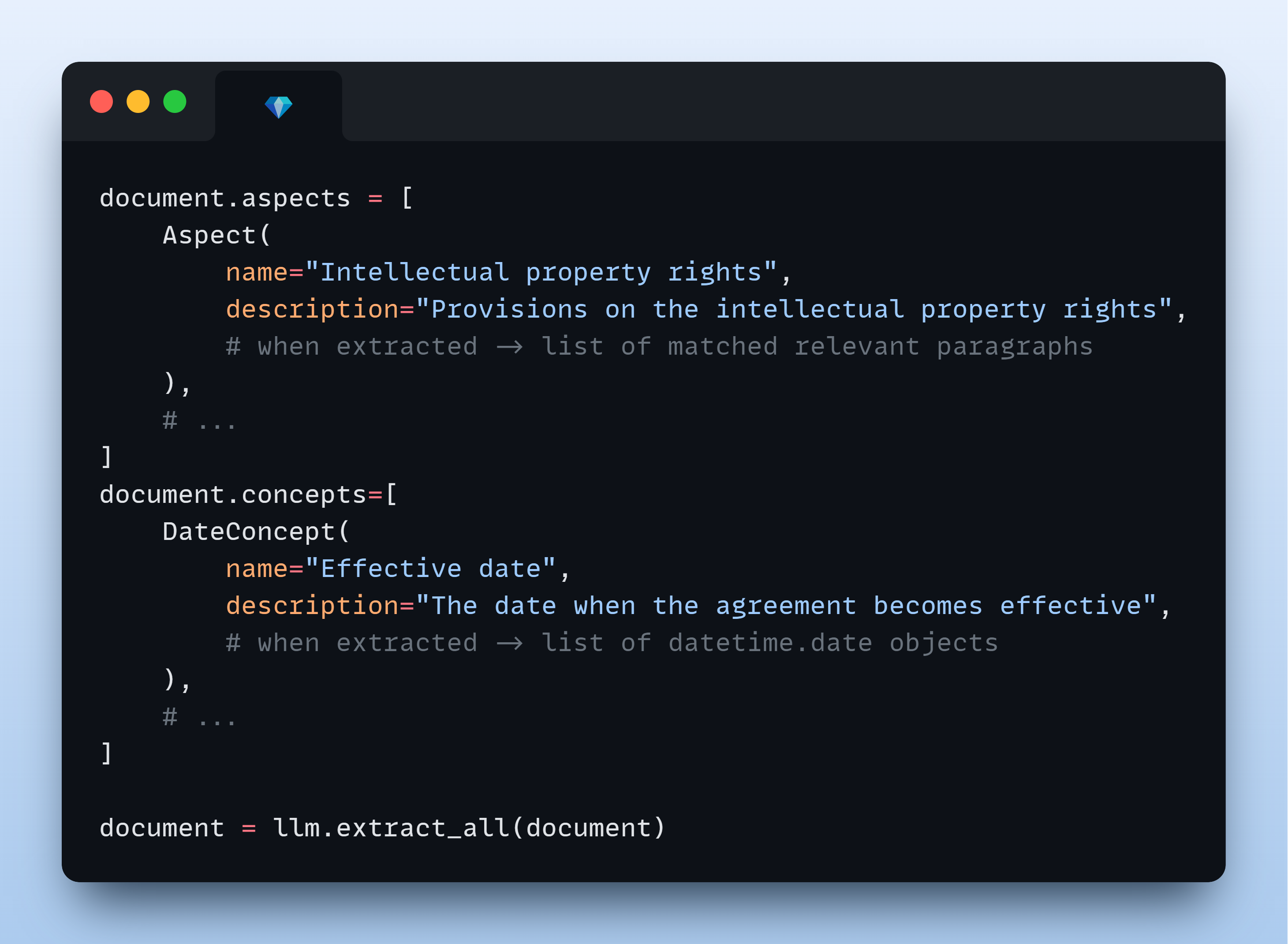

With minimal code, you can:

- Extract structured data from documents (text, images)

- Identify and analyze key aspects (topics, themes, categories) within documents (learn more)

- Extract specific concepts (entities, facts, conclusions, assessments) from documents (learn more)

- Build complex extraction workflows through a simple, intuitive API

- Create multi-level extraction pipelines (aspects containing concepts, hierarchical aspects)
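A multi-level pipeline is essentially a tree: aspects can contain sub-aspects, and any level can carry concepts. The sketch below illustrates that shape with hypothetical plain dataclasses (`AspectSpec`, `ConceptSpec`, `walk`). These are not ContextGem's `Aspect` or concept classes, and the sub-aspect and concept names are made up for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ConceptSpec:
    name: str
    description: str


@dataclass
class AspectSpec:
    name: str
    description: str
    concepts: list[ConceptSpec] = field(default_factory=list)
    sub_aspects: list["AspectSpec"] = field(default_factory=list)


def walk(aspect: AspectSpec, depth: int = 0):
    """Yield (depth, aspect) pairs depth-first, parents before children."""
    yield depth, aspect
    for child in aspect.sub_aspects:
        yield from walk(child, depth + 1)


termination = AspectSpec(
    name="Term and termination",
    description="Clauses on contract term and termination",
    concepts=[ConceptSpec("Notice period", "Notice period required for termination")],
    sub_aspects=[
        AspectSpec(
            name="Termination for cause",
            description="Clauses allowing termination after a breach",
            concepts=[ConceptSpec("Cure period", "Days allowed to remedy a breach")],
        )
    ],
)

for depth, aspect in walk(termination):
    names = ", ".join(c.name for c in aspect.concepts)
    print("  " * depth + f"{aspect.name}: [{names}]")
# Term and termination: [Notice period]
#   Termination for cause: [Cure period]
```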

```bash
pip install -U contextgem
```

The following example demonstrates how to use ContextGem to extract anomalies from a legal document - a complex concept that requires contextual understanding. Unlike traditional RAG approaches that might miss subtle inconsistencies, ContextGem analyzes the entire document context to identify content that doesn't belong, complete with source references and justifications.

```python
# Quick Start Example - Extracting anomalies from a document, with source references and justifications

import os

from contextgem import Document, DocumentLLM, StringConcept

# Sample document text (shortened for brevity)
doc = Document(
    raw_text=(
        "Consultancy Agreement\n"
        "This agreement between Company A (Supplier) and Company B (Customer)...\n"
        "The term of the agreement is 1 year from the Effective Date...\n"
        "The Supplier shall provide consultancy services as described in Annex 2...\n"
        "The Customer shall pay the Supplier within 30 calendar days of receiving an invoice...\n"
        "The purple elephant danced gracefully on the moon while eating ice cream.\n"  # 💎 anomaly
        "Time-traveling dinosaurs will review all deliverables before acceptance.\n"  # 💎 another anomaly
        "This agreement is governed by the laws of Norway...\n"
    ),
)

# Attach a document-level concept
doc.concepts = [
    StringConcept(
        name="Anomalies",  # in longer contexts, this concept is hard to capture with RAG
        description="Anomalies in the document",
        add_references=True,
        reference_depth="sentences",
        add_justifications=True,
        justification_depth="brief",
        # see the docs for more configuration options
    )
    # add more concepts to the document, if needed
    # see the docs for available concepts: StringConcept, JsonObjectConcept, etc.
]
# Or use `doc.add_concepts([...])`

# Define an LLM for extracting information from the document
llm = DocumentLLM(
    model="openai/gpt-4o-mini",  # or another provider/LLM
    api_key=os.environ.get(
        "CONTEXTGEM_OPENAI_API_KEY"
    ),  # your API key for the LLM provider
    # see the docs for more configuration options
)

# Extract information from the document
doc = llm.extract_all(doc)  # or use async version `await llm.extract_all_async(doc)`

# Access extracted information in the document object
anomalies_concept = doc.concepts[0]
# or `doc.get_concept_by_name("Anomalies")`
for item in anomalies_concept.extracted_items:
    print("Anomaly:")
    print(f" {item.value}")
    print("Justification:")
    print(f" {item.justification}")
    print("Reference paragraphs:")
    for p in item.reference_paragraphs:
        print(f" - {p.raw_text}")
    print("Reference sentences:")
    for s in item.reference_sentences:
        print(f" - {s.raw_text}")
    print()
```

| 📄 Document |
|---|
| Create a Document that contains text and/or visual content representing your document (contract, invoice, report, CV, etc.), from which an LLM extracts information (aspects and/or concepts). Learn more |

```python
from contextgem import Document

document = Document(raw_text="Non-Disclosure Agreement...")
```

| 🔍 Aspects | 💡 Concepts |
|---|---|
| Define Aspects to extract text segments from the document (sections, topics, themes). You can organize content hierarchically and combine with concepts for comprehensive analysis. Learn more | Define Concepts to extract specific data points with intelligent inference: entities, insights, structured objects, classifications, numerical calculations, dates, ratings, and assessments. Learn more |

```python
from contextgem import Aspect, BooleanConcept

# Extract document sections
aspect = Aspect(
    name="Term and termination",
    description="Clauses on contract term and termination",
)

# Extract specific data points
concept = BooleanConcept(
    name="NDA check",
    description="Is the contract an NDA?",
)

# Add these to the document instance for further extraction
document.add_aspects([aspect])
document.add_concepts([concept])
```

| 🔄 Alternative: Configure Extraction Pipeline |
|---|
| Create a reusable collection of predefined aspects and concepts that enables consistent extraction across multiple documents. Learn more |
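A reusable pipeline is a set of definitions applied to many documents. One design consideration is that each document should receive its own copy of those definitions, so results extracted from one document never leak into another. The minimal sketch below uses hypothetical `Target`, `Doc` and `Pipeline` classes and an `assign_to` method. These are assumptions for illustration, not ContextGem's pipeline API.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class Target:
    """Hypothetical extraction target (aspect or concept) that will hold results."""
    name: str
    description: str
    extracted_items: list[str] = field(default_factory=list)


@dataclass
class Doc:
    """Hypothetical stand-in for a document with attached extraction targets."""
    raw_text: str
    targets: list[Target] = field(default_factory=list)


@dataclass
class Pipeline:
    """Hypothetical reusable collection of predefined extraction targets."""
    targets: list[Target]

    def assign_to(self, doc: Doc) -> Doc:
        # Deep-copy so every document gets its own result containers.
        doc.targets.extend(copy.deepcopy(self.targets))
        return doc


pipeline = Pipeline(targets=[
    Target("Term and termination", "Clauses on contract term and termination"),
    Target("NDA check", "Is the contract an NDA?"),
])

docs = [pipeline.assign_to(Doc(text)) for text in ("Non-Disclosure Agreement...", "Consultancy Agreement...")]
docs[0].targets[1].extracted_items.append("True")  # pretend an LLM filled this in

print([len(d.targets[1].extracted_items) for d in docs])  # [1, 0]
```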

| 🤖 LLM | 🤖🤖 Alternative: LLM Group (advanced) |
|---|---|
| Configure a cloud or local LLM that will extract aspects and/or concepts from the document. DocumentLLM supports fallback models and role-based task routing for optimal performance. Learn more | Configure a group of LLMs with unique roles for complex extraction workflows. You can route different aspects and/or concepts to specialized LLMs (e.g., simple extraction vs. reasoning tasks). Learn more |

```python
from contextgem import DocumentLLM

llm = DocumentLLM(
    model="openai/gpt-4.1-mini",  # or another provider/LLM
    api_key="...",
)

document = llm.extract_all(document)

# print(document.aspects[0].extracted_items)
# print(document.concepts[0].extracted_items)
```

📖 Learn more about ContextGem's core components and their practical examples in the documentation.
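An LLM group routes each aspect or concept to the LLM assigned to its role. The sketch below shows that routing step under stated assumptions: `Task`, `route`, and the role labels `"extractor"` and `"reasoner"` are hypothetical. ContextGem's own role names and LLM group API are documented separately and may differ. The model strings reuse the ones that appear elsewhere in this README.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    role: str  # hypothetical role label, e.g. "extractor" or "reasoner"


def route(tasks: list[Task], llms_by_role: dict[str, str]) -> dict[str, list[str]]:
    """Group task names under the LLM assigned to each task's role."""
    plan: dict[str, list[str]] = defaultdict(list)
    for task in tasks:
        if task.role not in llms_by_role:
            raise ValueError(f"No LLM configured for role {task.role!r} (task {task.name!r})")
        plan[llms_by_role[task.role]].append(task.name)
    return dict(plan)


llms_by_role = {
    "extractor": "openai/gpt-4.1-mini",  # cheaper model for simple extraction
    "reasoner": "openai/gpt-5",  # reasoning model for harder concepts
}
tasks = [
    Task("Term and termination", "extractor"),
    Task("NDA check", "extractor"),
    Task("Anomalies", "reasoner"),
]
print(route(tasks, llms_by_role))
# {'openai/gpt-4.1-mini': ['Term and termination', 'NDA check'], 'openai/gpt-5': ['Anomalies']}
```

Failing loudly on an unmapped role avoids silently sending a hard reasoning task to the wrong model.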

🌟 Basic usage:

- Aspect Extraction from Document

- Extracting Aspect with Sub-Aspects

- Concept Extraction from Aspect

- Concept Extraction from Document (text)

- Concept Extraction from Document (vision)

- LLM chat interface

🚀 Advanced usage:

- Extracting Aspects Containing Concepts

- Extracting Aspects and Concepts from a Document

- Using a Multi-LLM Pipeline to Extract Data from Several Documents

To create a ContextGem document for LLM analysis, you can either pass raw text directly, or use built-in converters that handle various file formats.

ContextGem provides a built-in converter to easily transform DOCX files into LLM-ready data.

- Comprehensive extraction of document elements: paragraphs, headings, lists, tables, comments, footnotes, textboxes, headers/footers, links, embedded images, and inline formatting

- Document structure preservation with rich metadata for improved LLM analysis

- Built-in converter that directly processes Word XML

🚀 Performance improvement in v0.17.1: DOCX converter now converts files ~2X faster.

```python
# Using ContextGem's DocxConverter
from contextgem import DocxConverter

converter = DocxConverter()

# Convert a DOCX file to an LLM-ready ContextGem Document
# from path
document = converter.convert("path/to/document.docx")

# or from file object
with open("path/to/document.docx", "rb") as docx_file_object:
    document = converter.convert(docx_file_object)

# Perform data extraction on the resulting Document object
# document.add_aspects(...)
# document.add_concepts(...)
# llm.extract_all(document)

# You can also use a DocxConverter instance as a standalone text extractor
docx_text = converter.convert_to_text_format(
    "path/to/document.docx",
    output_format="markdown",  # or "raw"
)
```

📖 Learn more about DOCX converter features in the documentation.
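The converter above works directly on Word XML. To illustrate what "processing Word XML" means at the most basic level, here is a minimal standard-library sketch that pulls paragraph text out of `word/document.xml` inside a `.docx` archive. It is not DocxConverter's implementation. The helpers `docx_paragraphs` and `make_minimal_docx` are hypothetical, and the sketch ignores everything DocxConverter adds (tables as structure, comments, footnotes, headers/footers, images, formatting).

```python
import io
import zipfile
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape

# WordprocessingML namespace used in word/document.xml inside a .docx archive
W_NS = "http://schemas.openxmlformats.org/wordprocessingml/2006/main"


def docx_paragraphs(source) -> list[str]:
    """Return the text of each non-empty <w:p> element in word/document.xml.

    `source` may be a path or a binary file object; zipfile.ZipFile accepts both.
    Headers, footers, comments and footnotes live in other archive parts and are ignored.
    """
    with zipfile.ZipFile(source) as archive:
        root = ET.fromstring(archive.read("word/document.xml"))
    paragraphs = []
    for p in root.iter(f"{{{W_NS}}}p"):
        # Word splits paragraph text across runs (<w:r>); join every <w:t> inside it.
        text = "".join(t.text or "" for t in p.iter(f"{{{W_NS}}}t"))
        if text:
            paragraphs.append(text)
    return paragraphs


def make_minimal_docx(texts: list[str]) -> io.BytesIO:
    """Build an in-memory archive with only word/document.xml (enough for this sketch, not for Word)."""
    body = "".join(f"<w:p><w:r><w:t>{escape(text)}</w:t></w:r></w:p>" for text in texts)
    xml = f'<w:document xmlns:w="{W_NS}"><w:body>{body}</w:body></w:document>'
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("word/document.xml", xml)
    buffer.seek(0)
    return buffer


print(docx_paragraphs(make_minimal_docx(["Consultancy Agreement", "Company A & Company B"])))
# ['Consultancy Agreement', 'Company A & Company B']
```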

ContextGem leverages LLMs' long context windows to deliver superior extraction accuracy from individual documents. Unlike RAG approaches that often struggle with complex concepts and nuanced insights, ContextGem capitalizes on continuously expanding context capacity, evolving LLM capabilities, and decreasing costs. This focused approach enables direct information extraction from complete documents, eliminating retrieval inconsistencies while optimizing for in-depth single-document analysis. While this delivers higher accuracy for individual documents, ContextGem does not currently support cross-document querying or corpus-wide retrieval - for these use cases, modern RAG frameworks (e.g., LlamaIndex, Haystack) remain more appropriate.
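Whole-document extraction only works when the document, the instructions, and the model's output all fit in the context window. The sketch below shows a rough pre-check. It relies on the common rule of thumb of about 4 characters per token for English text. The helper names, the 8,000-token reserve, and the window sizes are hypothetical, and a model's own tokenizer gives real counts. The documentation's "Dealing with Long Documents" guide covers what to do when a document does not fit.

```python
def estimated_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Very rough token count using a characters-per-token heuristic (never below 1)."""
    return max(1, round(len(text) / chars_per_token))


def fits_in_context(text: str, context_window: int, reserved_tokens: int = 8_000) -> bool:
    """True if the whole document plus a reserve for instructions and model output fits the window."""
    return estimated_tokens(text) + reserved_tokens <= context_window


contract = "x" * 400_000  # stand-in for a ~400k-character document
print(estimated_tokens(contract))  # 100000
print(fits_in_context(contract, context_window=128_000))  # True
print(fits_in_context(contract, context_window=100_000))  # False
```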

📖 Read more on how ContextGem works in the documentation.

ContextGem supports both cloud-based and local LLMs through LiteLLM integration:

- Cloud LLMs: OpenAI, Anthropic, Google, Azure OpenAI, xAI, and more

- Local LLMs: Run models locally using providers like Ollama, LM Studio, etc.

- Model Architectures: Works with both reasoning/CoT-capable (e.g. gpt-5) and non-reasoning models (e.g. gpt-4.1)

- Simple API: Unified interface for all LLMs with easy provider switching
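The model strings in the examples above (e.g. `"openai/gpt-4o-mini"`) follow the `provider/model` convention that LiteLLM uses to pick a provider, so switching providers is a string change. ContextGem and LiteLLM handle this internally. The sketch below, with the hypothetical helper `split_model_string`, only shows why the split should happen on the first slash: the model part itself may contain slashes. The second example string is made up.

```python
def split_model_string(model: str) -> tuple[str, str]:
    """Split "provider/model" on the first slash only, keeping slashes inside the model name."""
    provider, sep, name = model.partition("/")
    if not (sep and provider and name):
        raise ValueError(f"expected 'provider/model', got {model!r}")
    return provider, name


print(split_model_string("openai/gpt-4o-mini"))  # ('openai', 'gpt-4o-mini')
print(split_model_string("provider/org/model-name"))  # ('provider', 'org/model-name')
```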

💡 Model Selection Note: For reliable structured extraction, we recommend using models with performance equivalent to or exceeding gpt-4o-mini. Smaller models (such as 8B parameter models) may struggle with ContextGem's detailed extraction instructions. If you encounter issues with smaller models, see our troubleshooting guide for potential solutions.

📖 Learn more about supported LLM providers and models, how to configure LLMs, and LLM extraction methods in the documentation.

ContextGem documentation offers guidance on optimization strategies to maximize performance, minimize costs, and enhance extraction accuracy:

- Optimizing for Accuracy

- Optimizing for Speed

- Optimizing for Cost

- Dealing with Long Documents

- Choosing the Right LLM(s)

- Troubleshooting Issues with Small Models

ContextGem allows you to save and load Document objects, pipelines, and LLM configurations with built-in serialization methods:

- Save processed documents to avoid repeating expensive LLM calls

- Transfer extraction results between systems

- Persist pipeline and LLM configurations for later reuse
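ContextGem ships its own serialization methods for documents, pipelines, and LLM configurations (see the documentation). The sketch below only illustrates the underlying idea of saving results once and reloading them instead of repeating an expensive LLM call. It uses plain JSON and hypothetical names (`ExtractedItem`, `extract_with_cache`, `fake_llm_extraction`). It does not use or reproduce ContextGem's serialization format.

```python
import json
import tempfile
from dataclasses import asdict, dataclass, field
from pathlib import Path
from typing import Callable


@dataclass
class ExtractedItem:
    value: str
    justification: str = ""
    reference_sentences: list[str] = field(default_factory=list)


def save_results(items: list[ExtractedItem], path: Path) -> None:
    path.write_text(json.dumps([asdict(i) for i in items], ensure_ascii=False, indent=2), encoding="utf-8")


def load_results(path: Path) -> list[ExtractedItem]:
    return [ExtractedItem(**raw) for raw in json.loads(path.read_text(encoding="utf-8"))]


def extract_with_cache(path: Path, run_extraction: Callable[[], list[ExtractedItem]]) -> list[ExtractedItem]:
    """Reuse saved results when present; otherwise run the extraction once and save it."""
    if path.exists():
        return load_results(path)
    items = run_extraction()
    save_results(items, path)
    return items


calls = []


def fake_llm_extraction() -> list[ExtractedItem]:
    calls.append(1)  # count how often the "LLM" actually runs
    sentence = "The purple elephant danced gracefully on the moon while eating ice cream."
    return [ExtractedItem(sentence, "Unrelated to the contract", [sentence])]


with tempfile.TemporaryDirectory() as tmp:
    cache = Path(tmp) / "anomalies.json"
    first = extract_with_cache(cache, fake_llm_extraction)
    second = extract_with_cache(cache, fake_llm_extraction)  # loaded from disk, no second call

print(first == second, len(calls))  # True 1
```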

📖 Learn more about serialization options in the documentation.

📖 Full documentation: contextgem.dev

📄 Raw documentation for LLMs: Available at docs/docs-raw-for-llm.txt - automatically generated, optimized for LLM ingestion.

🤖 AI-powered code exploration: DeepWiki provides visual architecture maps and natural language Q&A for the codebase.

📈 Change history: See the CHANGELOG for version history, improvements, and bug fixes.

🐛 Found a bug or have a feature request? Open an issue on GitHub.

💭 Need help or want to discuss? Start a thread in GitHub Discussions.

We welcome contributions from the community - whether it's fixing a typo or developing a completely new feature!

📋 Get started: Check out our Contributor Guidelines.

This project is automatically scanned for security vulnerabilities using multiple security tools:

- CodeQL - GitHub's semantic code analysis engine for vulnerability detection

- Bandit - Python security linter for common security issues

- Snyk - Dependency vulnerability monitoring (used as needed)

🛡️ Security policy: See SECURITY file for details.

ContextGem relies on these excellent open-source packages:

- aiolimiter: Powerful rate limiting for async operations

- genai-prices: LLM pricing data and utilities (by Pydantic) to automatically estimate costs

- Jinja2: Fast, expressive, extensible templating engine used for prompt rendering

- litellm: Unified interface to multiple LLM providers with seamless provider switching

- loguru: Simple yet powerful logging that enhances debugging and observability

- lxml: High-performance XML processing library for parsing DOCX document structure

- pillow: Image processing library for local model image handling

- pydantic: The gold standard for data validation

- python-ulid: Efficient ULID generation for unique object identification

- typing-extensions: Backports of the latest typing features for enhanced type annotations

- wtpsplit-lite: Lightweight version of wtpsplit for state-of-the-art paragraph/sentence segmentation using wtpsplit's SaT models
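ContextGem estimates costs automatically using genai-prices (listed above). The sketch below shows the arithmetic behind such an estimate: token counts divided by one million, multiplied by a per-million-token price for input and output, and summed over calls. The `Price` and `estimate_cost` names are hypothetical. The prices are illustrative placeholders, not real prices for any model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Price:
    """Hypothetical per-million-token prices in USD (check your provider's current pricing)."""
    input_per_mtok: float
    output_per_mtok: float


def estimate_cost(input_tokens: int, output_tokens: int, price: Price) -> float:
    """cost = input_tokens / 1e6 * input price + output_tokens / 1e6 * output price"""
    return (input_tokens / 1_000_000) * price.input_per_mtok + (output_tokens / 1_000_000) * price.output_per_mtok


example_price = Price(input_per_mtok=0.40, output_per_mtok=1.60)  # illustrative numbers only

# (input_tokens, output_tokens) per LLM call, e.g. one call per extraction step
usage = [(120_000, 4_000), (30_000, 1_000)]
total = sum(estimate_cost(i, o, example_price) for i, o in usage)
print(f"${total:.4f}")  # $0.0680
```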

ContextGem is just getting started, and your support means the world to us!

⭐ Star the project if you find ContextGem useful

📢 Share it with others who might benefit

🔧 Contribute with feedback, issues, or code improvements

Your engagement is what makes this project grow!

License: Apache 2.0 License - see the LICENSE and NOTICE files for details.

Copyright: © 2025 Shcherbak AI AS, an AI engineering company building tools for AI/ML/NLP developers.

Connect: LinkedIn or X for questions or collaboration ideas.

Built with ❤️ in Oslo, Norway.
