chat.md

An md file as a chat interface and editable history in one.

Stars: 57

This repository contains a chatbot tool that utilizes natural language processing to interact with users. The tool is designed to understand and respond to user input in a conversational manner, providing information and assistance. It can be integrated into various applications to enhance user experience and automate customer support. The chatbot tool is user-friendly and customizable, making it suitable for businesses looking to improve customer engagement and streamline communication.

README:

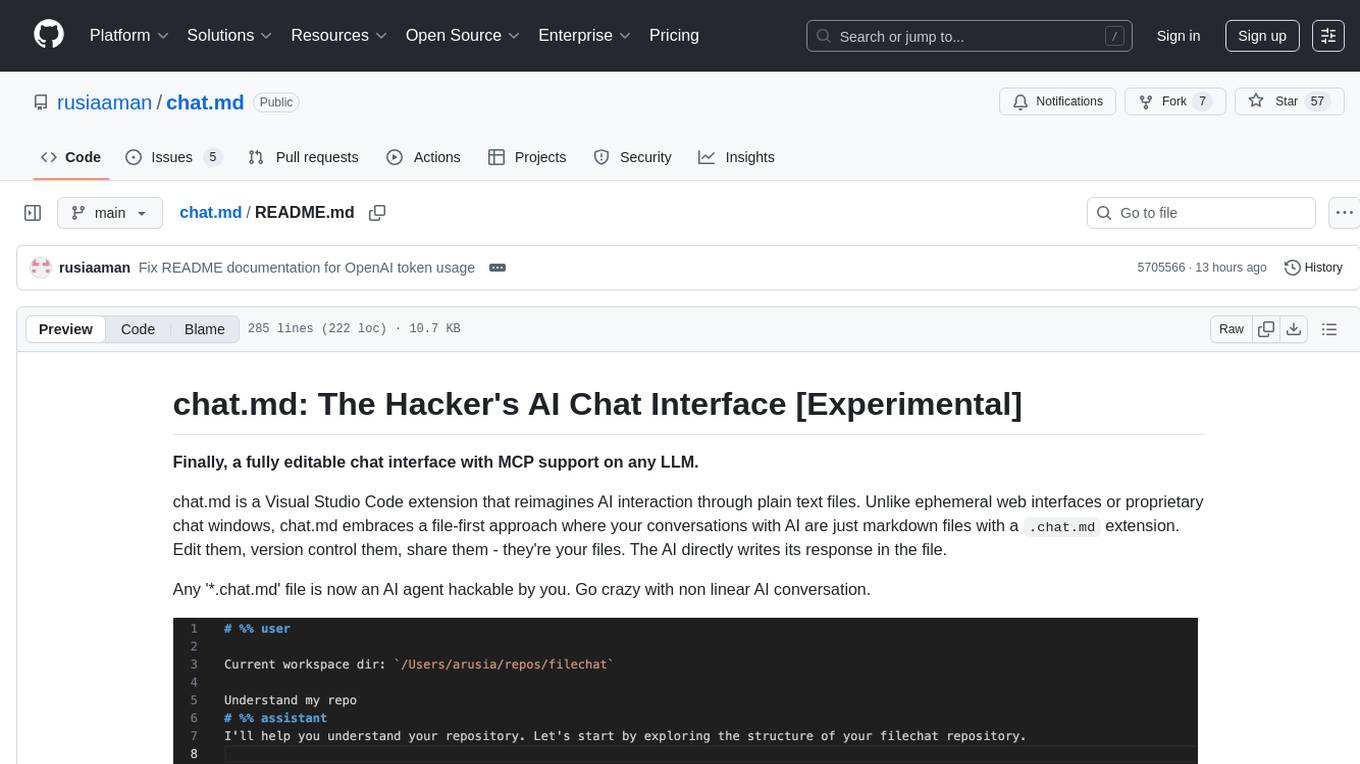

Finally, a fully editable chat interface with MCP support on any LLM.

chat.md is a Visual Studio Code extension that reimagines AI interaction through plain text files. Unlike ephemeral web interfaces or proprietary chat windows, chat.md embraces a file-first approach where your conversations with AI are just markdown files with a .chat.md extension. Edit them, version control them, share them - they're your files. The AI directly writes its response in the file.

Any '*.chat.md' file is now an AI agent hackable by you. Go crazy with non-linear AI conversations.

Here's the chat I used to publish this vscode extension using gemini-2.5-pro and wcgw mcp


| Other AI Tools | chat.md |
|---|---|
| ❌ Linear conversations or limited editing | ✅ Non-linear editing - rewrite history, branch conversations |
| ❌ Tool execution tied to proprietary implementations | ✅ Any LLM model can do tool calling |
| ❌ Can't manually edit AI responses | ✅ Put words in LLM's mouth - edit and have it continue from there |
| ❌ MCP not supported in many LLMs | ✅ Any LLM model can use MCP servers |
| ❌ Max token limit for assistant response can't be resumed | ✅ Resume incomplete AI responses at any point |
| ❌ Conversations live in the cloud or inaccessible | ✅ Files stored locally alongside your code in human readable format |
| ❌ Separate context from your workspace | ✅ Attach files directly from your project |

Unlike Copilot's inline suggestions, ChatGPT's web interface, or Cursor's side panel, chat.md treats conversations as first-class files in your workspace:

    # %% user

    How can I optimize this function?

    [#file](src/utils.js)

    # %% assistant

    Looking at your utils.js file, I see several opportunities for optimization:

    1. The loop on line 24 could be replaced with a more efficient map/reduce pattern
    2. The repetitive string concatenation can be improved with template literals

    ...

- Anthropic Claude: All models (Opus, Sonnet, Haiku)

- OpenAI: GPT-4, GPT-3.5, and future models

- Custom APIs: Any OpenAI-compatible endpoint (Azure, Google Gemini, etc.)

- Quick Switching: Switch between models in the middle of a conversation.

chat.md is a client for the Model Context Protocol (MCP), an open standard for tool execution that works with any LLM.

Unlike many chat applications, chat.md doesn't restrict any LLM from tool calling.

- Truly Universal: Any AI model (Claude, GPT, open-source models) can use any MCP tool

- Model Agnostic: Tools work identically regardless of which AI powers your conversation

- No Vendor Lock-in: Switch models without losing tool functionality

    <tool_call>
    <tool_name>filesystem.searchFiles</tool_name>
    <param name="pattern">*.js</param>
    <param name="directory">src</param>
    </tool_call>

- Attach text files and images directly in your conversations (paste any copied image)

- Link files using familiar markdown syntax: `[file](path/to/file)`

- Files are resolved relative to the chat document, which is perfect for project context (or use absolute paths)
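As a rough illustration of the resolution rule above, the sketch below collects markdown links from a chat document and resolves relative targets against the document's folder. The regex and the `resolve_links` helper are assumptions made for this example, not the extension's actual implementation, and the sketch does not distinguish web URLs from file paths.

```python
import re
from pathlib import PurePosixPath

# Hypothetical pattern: matches [label](target) and also the link part of ![alt](image).
LINK_RE = re.compile(r"\[([^\]]*)\]\(([^)\s]+)\)")


def resolve_links(chat_file: PurePosixPath, text: str) -> list[PurePosixPath]:
    """Resolve every link target relative to the folder containing the chat file."""
    base = chat_file.parent
    resolved = []
    for _label, target in LINK_RE.findall(text):
        path = PurePosixPath(target)
        # Absolute paths are kept as-is; relative ones are joined to the chat's folder.
        resolved.append(path if path.is_absolute() else base / path)
    return resolved


links = resolve_links(
    PurePosixPath("/work/project/notes.chat.md"),
    "See [#file](src/utils.js) and [file](/etc/hosts)",
)
for link in links:
    print(link)
```

`PurePosixPath` is used so the example behaves the same on every platform; a real tool would likely use the host's native path type.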

Since chat.md files are just text, you have complete control over your AI interactions:

- Non-linear Editing: Rewrite history by editing earlier parts of the conversation

- Conversation Hacking: Put words in the AI's mouth by editing its responses

- Continuation Control: Have the AI continue from any edited point

- Resume Truncated Outputs: If an AI response gets cut off, just add a new assistant block and continue

- Git-Friendly: Track conversation changes, collaborate on prompts, and branch conversations

- Conversation Templates: Create reusable conversation starters for common tasks
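Because a chat is plain text, ordinary scripts can process it too. The following is a minimal sketch, assuming that every block starts with a `# %% <role>` header line as in the example conversation; the `split_blocks` helper is hypothetical, and the extension's own parser may handle more cases (for instance, headers that appear inside code).

```python
import re

# A block header is a line of the form "# %% user", "# %% assistant", "# %% system", ...
HEADER_RE = re.compile(r"^# %% (\w+)[ \t]*$", re.MULTILINE)


def split_blocks(text: str) -> list[tuple[str, str]]:
    """Split chat text into (role, content) pairs, in document order."""
    matches = list(HEADER_RE.finditer(text))
    blocks = []
    for i, match in enumerate(matches):
        # A block's content runs until the next header or the end of the file.
        end = matches[i + 1].start() if i + 1 < len(matches) else len(text)
        blocks.append((match.group(1), text[match.end():end].strip()))
    return blocks


CHAT = """# %% user
How can I optimize this function?

[#file](src/utils.js)

# %% assistant
Looking at your utils.js file, I see several opportunities for optimization:
"""

blocks = split_blocks(CHAT)
for role, content in blocks:
    print(role, "->", len(content), "characters")
```

A script like this could, for example, extract every user prompt from a folder of chats or check that a template ends with an open user block.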

- Install 'chat.md' from the VS Code marketplace

- Configure your API key(s):

  - Command Palette → "Add or Edit API Configuration"

- Create a new chat:

  - `Opt+Cmd+'` (Mac) / `Ctrl+k Ctrl+c` (Windows/Linux) to create a new '.chat.md' file with workspace information populated in a user block.

  - Or create any file with the `.chat.md` extension anywhere and open it in VS Code.

- In a '# %% user' block, write your query and press 'Shift + Enter' (or just create a new '# %% assistant' block and press Enter)

- Watch the assistant stream its response and make any tool calls.

Optionally, open a markdown preview side by side for a live, more readable view of the chat.

- You can insert a `# %% system` block to append any new instructions to the system prompt.

- You can manually add API configuration and MCP configuration in VS Code settings. See example settings

- Click on the live status bar "Chat.md streaming" icon at the bottom or run the "chat.md: Cancel streaming" command to interrupt

- The shortcut that creates a new chat, "Opt+Cmd+'", also cancels streaming.

- You can run the "Refresh MCP Tools" command to reload all MCP servers. Then run "MCP Diagnostics" to see available MCP servers.

- You can use the "Select api configuration" command to switch between API providers

Access these settings through VS Code's settings UI or settings.json:

- `chatmd.apiConfigs`: Named API configurations (provider, API key, model, base URL)

- `chatmd.selectedConfig`: Active API configuration

- `chatmd.mcpServers`: Configure MCP tool servers

- `chatmd.reasoningEffort`: Control reasoning depth (minimal, low, medium, high)

When an AI response includes a tool call, the extension will automatically:

- Add a tool_execute block after the assistant's response

- Execute the tool with the specified parameters

- Insert the tool's result back into the document

- Add a new assistant block for the AI to continue

You can also trigger tool execution manually by:

- Pressing Shift+Enter while positioned at the end of an assistant response containing a tool call

- This will insert a tool_execute block and execute the tool
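To make the "execute the tool with the specified parameters" step more concrete, here is a minimal sketch of extracting the tool name and parameters from a tool call written in the XML-like format shown earlier. It assumes the call is well-formed XML; `parse_tool_call` is a hypothetical helper, and the extension's real parser is not shown in this README.

```python
import xml.etree.ElementTree as ET


def parse_tool_call(xml_text: str) -> tuple[str, dict[str, str]]:
    """Return (tool_name, params) from a single <tool_call> element."""
    root = ET.fromstring(xml_text)
    if root.tag != "tool_call":
        raise ValueError(f"expected <tool_call>, got <{root.tag}>")
    name = (root.findtext("tool_name") or "").strip()
    # Each <param name="..."> becomes one dictionary entry.
    params = {p.get("name", ""): (p.text or "") for p in root.findall("param")}
    return name, params


EXAMPLE = """<tool_call>
<tool_name>filesystem.searchFiles</tool_name>
<param name="pattern">*.js</param>
<param name="directory">src</param>
</tool_call>"""

name, params = parse_tool_call(EXAMPLE)
print(name, params)
```

A strict XML parser rejects parameter values containing raw `<` or `&`, so a production parser would probably need to be more forgiving than this sketch.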

- `Shift+Enter`: Insert the next block (alternates between user/assistant), or insert a tool_execute block if the cursor is at the end of an assistant block containing a tool call

- `Opt+Cmd+'` (Mac) / `Ctrl+k Ctrl+c` (Windows/Linux): Create a new context chat or cancel existing streaming
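The Shift+Enter behaviour described above amounts to a small decision rule. The sketch below is one way to express it, assuming a tool call can be detected by the presence of a `<tool_call>` tag; the `next_block` function is hypothetical, and its handling of roles other than user, assistant, and tool_execute is a guess.

```python
def next_block(role: str, content: str) -> str:
    """Pick the role of the block to insert after the current one."""
    if role == "assistant":
        # An assistant reply containing a tool call is followed by tool execution;
        # otherwise the turn goes back to the user.
        return "tool_execute" if "<tool_call>" in content else "user"
    # User and tool_execute blocks are followed by an assistant block.
    # Treating any other role (e.g. system) the same way is an assumption.
    return "assistant"


print(next_block("user", "How can I optimize this function?"))
print(next_block("assistant", "<tool_call>...</tool_call>"))
print(next_block("tool_execute", "search results"))
```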

Connect any Model Context Protocol server to extend AI capabilities:

For local MCP servers running in the same environment as VS Code:

    "chatmd.mcpServers": {
      "wcgw": {
        "command": "uvx",
        "args": [
          "--python",
          "3.12",
          "--from",
          "wcgw@latest",
          "wcgw_mcp"
        ]
      }
    }

For remote MCP servers accessible via HTTP/Server-Sent Events:

    "chatmd.mcpServers": {
      "remote-mcp": {
        "url": "http://localhost:3000/sse"
      }
    }

You can also add environment variables if needed:

    "chatmd.mcpServers": {
      "remote-mcp": {
        "url": "http://localhost:3000/sse",
        "env": {
          "API_KEY": "your-api-key-here"
        }
      }
    }

The AI will automatically discover available tools from both local and remote servers and know how to use them! Tool lists are refreshed automatically every 5 seconds to keep them up-to-date.
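The two kinds of server entry can be told apart by their keys: local servers carry a `command`, remote ones a `url`. The sketch below shows that distinction on the example entries; `transport_of` and `classify` are hypothetical helpers for illustration, not part of the extension.

```python
def transport_of(server: dict) -> str:
    """Classify one chatmd.mcpServers entry as 'local' or 'remote'."""
    if "command" in server:
        return "local"   # started as a process next to VS Code
    if "url" in server:
        return "remote"  # reached over HTTP/Server-Sent Events
    raise ValueError("server entry needs either 'command' or 'url'")


def classify(servers: dict) -> dict:
    """Map each server name to its kind."""
    return {name: transport_of(cfg) for name, cfg in servers.items()}


SERVERS = {
    "wcgw": {
        "command": "uvx",
        "args": ["--python", "3.12", "--from", "wcgw@latest", "wcgw_mcp"],
    },
    "remote-mcp": {
        "url": "http://localhost:3000/sse",
        "env": {"API_KEY": "your-api-key-here"},
    },
}

kinds = classify(SERVERS)
print(kinds)
```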

chat.md breaks away from the artificial "chat" paradigm and acknowledges that AI interaction is fundamentally about text processing. By treating conversations as files:

- Persistence becomes trivial - no special cloud sync or proprietary formats

- Collaboration is built-in - share, diff, and merge like any other code

- Version control is natural - track changes over time

- Customization is unlimited - edit the file however you want

- MCP: only tools are supported; prompts and resources will be supported in the future.

- Caching is not yet supported for the Anthropic API.

- Gemini, Ollama, LM Studio, and other models can only be accessed through an OpenAI-compatible API.

vscode json settings

    "chatmd.apiConfigs": {
      "gemini-2.5pro": {
        "type": "openai",
        "apiKey": "",
        "base_url": "https://generativelanguage.googleapis.com/v1beta/openai/",
        "model_name": "gemini-2.5-pro-exp-03-25"
      },
      "anthropic-sonnet-3-7": {
        "type": "anthropic",
        "apiKey": "sk-ant-",
        "base_url": "",
        "model_name": "claude-3-7-sonnet-latest"
      },
      "openrouter-qasar": {
        "type": "openai",
        "apiKey": "sk-or-",
        "base_url": "https://openrouter.ai/api/v1",
        "model_name": "openrouter/quasar-alpha"
      },
      "groq-llam4": {
        "type": "openai",
        "apiKey": "",
        "base_url": "https://api.groq.com/openai/v1",
        "model_name": "meta-llama/llama-4-scout-17b-16e-instruct"
      },
      "together-llama4": {
        "type": "openai",
        "base_url": "https://api.together.xyz/v1",
        "apiKey": "",
        "model_name": "meta-llama/Llama-4-Maverick-17B-128E-Instruct-FP8"
      }
    },
    "chatmd.mcpServers": {
      "wcgw": {
        "command": "/opt/homebrew/bin/uv",
        "args": [
          "tool",
          "run",
          "--python",
          "3.12",
          "--from",
          "wcgw@latest",
          "wcgw_mcp"
        ]
      },
      "brave-search": {
        "command": "npx",
        "args": ["-y", "@modelcontextprotocol/server-brave-search"],
        "env": {
          "BRAVE_API_KEY": ""
        }
      },
      "fetch": {
        "command": "/opt/homebrew/bin/uvx",
        "args": ["mcp-server-fetch"]
      }
    },
    "chatmd.selectedConfig": "gemini-2.5pro",
    "chatmd.maxTokens": 8000,
    "chatmd.maxThinkingTokens": 16000,
    "chatmd.reasoningEffort": "medium"

Note: maxTokens (default: 8000) controls the maximum number of tokens for model responses.

For OpenAI reasoning models (GPT-5, o3, o1 series), maxTokens is used as the total max_completion_tokens budget (includes both thinking and response tokens).

For Anthropic models, maxThinkingTokens controls the thinking token budget separately, or can be calculated automatically from reasoningEffort.
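In the example settings, `chatmd.selectedConfig` names one entry of `chatmd.apiConfigs`. A minimal sketch of that lookup, assuming the settings have already been loaded into a dictionary, might look like this; `active_config` is a hypothetical helper, and how the extension itself reads settings is not described here.

```python
def active_config(settings: dict) -> dict:
    """Return the API configuration named by chatmd.selectedConfig."""
    name = settings["chatmd.selectedConfig"]
    configs = settings.get("chatmd.apiConfigs", {})
    if name not in configs:
        raise KeyError(f"selected config {name!r} is not defined in chatmd.apiConfigs")
    # Include the configuration's name alongside its fields for convenience.
    return {"name": name, **configs[name]}


# Trimmed-down copy of the example settings.
SETTINGS = {
    "chatmd.apiConfigs": {
        "gemini-2.5pro": {
            "type": "openai",
            "apiKey": "",
            "base_url": "https://generativelanguage.googleapis.com/v1beta/openai/",
            "model_name": "gemini-2.5-pro-exp-03-25",
        },
        "anthropic-sonnet-3-7": {
            "type": "anthropic",
            "apiKey": "sk-ant-",
            "base_url": "",
            "model_name": "claude-3-7-sonnet-latest",
        },
    },
    "chatmd.selectedConfig": "gemini-2.5pro",
}

config = active_config(SETTINGS)
print(config["name"], config["type"], config["model_name"])
```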

MIT License - see the LICENSE file for details.

- File issues on the GitHub repository

- Contributions welcome via pull requests

- Claude with wcgw mcp

- Gemini 2.5 pro with chat.md


Alternative AI tools for chat.md

Similar Open Source Tools


Companion

Companion is a software tool designed to provide support and enhance development. It offers various features and functionalities to assist users in their projects and tasks. The tool aims to be user-friendly and efficient, helping individuals and teams to streamline their workflow and improve productivity.

duckduckgo-ai-chat

This repository contains a chatbot tool powered by AI technology. The chatbot is designed to interact with users in a conversational manner, providing information and assistance on various topics. Users can engage with the chatbot to ask questions, seek recommendations, or simply have a casual conversation. The AI technology behind the chatbot enables it to understand natural language inputs and provide relevant responses, making the interaction more intuitive and engaging. The tool is versatile and can be customized for different use cases, such as customer support, information retrieval, or entertainment purposes. Overall, the chatbot offers a user-friendly and interactive experience, leveraging AI to enhance communication and engagement.

chatmcp

Chatmcp is a chatbot framework for building conversational AI applications. It provides a flexible and extensible platform for creating chatbots that can interact with users in a natural language. With Chatmcp, developers can easily integrate chatbot functionality into their applications, enabling users to communicate with the system through text-based conversations. The framework supports various natural language processing techniques and allows for the customization of chatbot behavior and responses. Chatmcp simplifies the development of chatbots by providing a set of pre-built components and tools that streamline the creation process. Whether you are building a customer support chatbot, a virtual assistant, or a chat-based game, Chatmcp offers the necessary features and capabilities to bring your conversational AI ideas to life.

tiledesk-dashboard

Tiledesk is an open-source live chat platform with integrated chatbots written in Node.js and Express. It is designed to be a multi-channel platform for web, Android, and iOS, and it can be used to increase sales or provide post-sales customer service. Tiledesk's chatbot technology allows for automation of conversations, and it also provides APIs and webhooks for connecting external applications. Additionally, it offers a marketplace for apps and features such as CRM, ticketing, and data export.

WorkflowAI

WorkflowAI is a powerful tool designed to streamline and automate various tasks within the workflow process. It provides a user-friendly interface for creating custom workflows, automating repetitive tasks, and optimizing efficiency. With WorkflowAI, users can easily design, execute, and monitor workflows, allowing for seamless integration of different tools and systems. The tool offers advanced features such as conditional logic, task dependencies, and error handling to ensure smooth workflow execution. Whether you are managing project tasks, processing data, or coordinating team activities, WorkflowAI simplifies the workflow management process and enhances productivity.

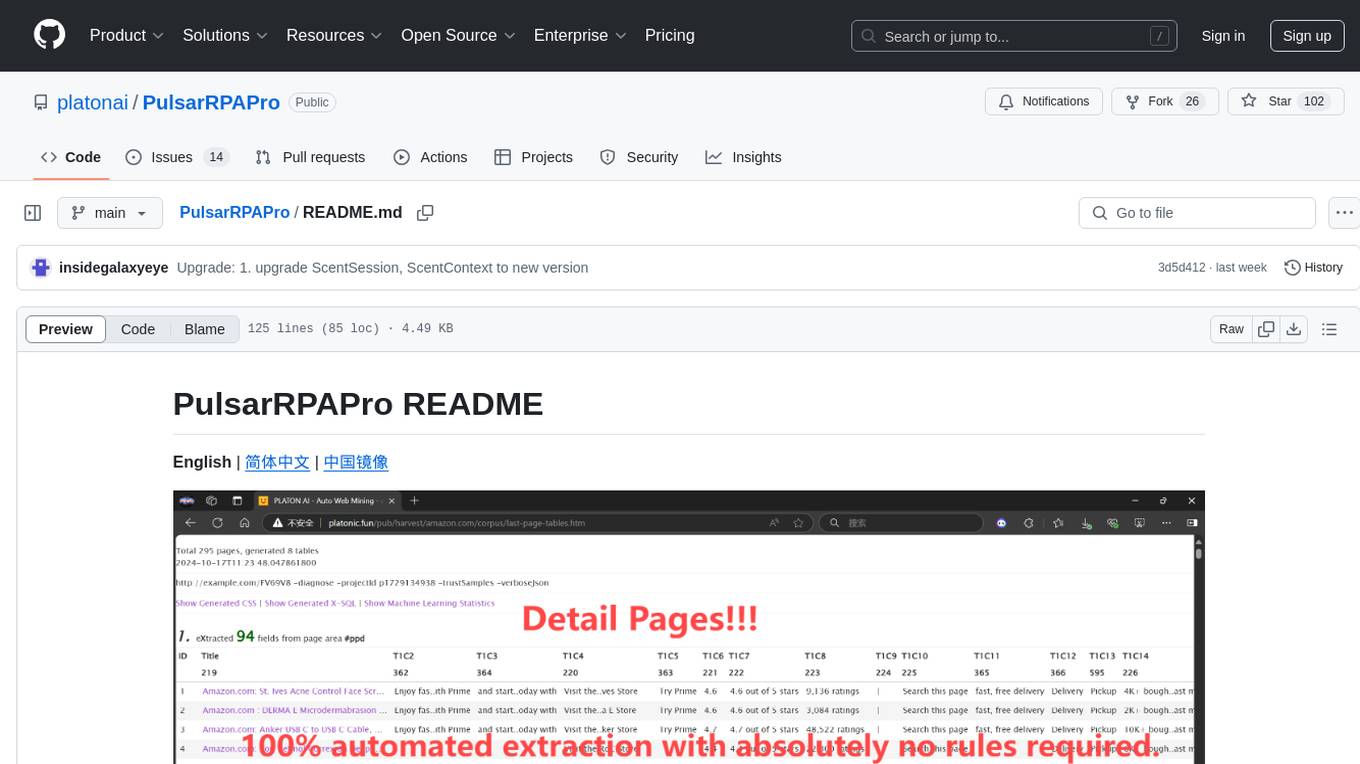

PulsarRPAPro

PulsarRPAPro is a powerful robotic process automation (RPA) tool designed to automate repetitive tasks and streamline business processes. It offers a user-friendly interface for creating and managing automation workflows, allowing users to easily automate tasks without the need for extensive programming knowledge. With features such as task scheduling, data extraction, and integration with various applications, PulsarRPAPro helps organizations improve efficiency and productivity by reducing manual work and human errors. Whether you are a small business looking to automate simple tasks or a large enterprise seeking to optimize complex processes, PulsarRPAPro provides the flexibility and scalability to meet your automation needs.

evalica

Evalica is a powerful tool for evaluating code quality and performance in software projects. It provides detailed insights and metrics to help developers identify areas for improvement and optimize their code. With support for multiple programming languages and frameworks, Evalica offers a comprehensive solution for code analysis and optimization. Whether you are a beginner looking to learn best practices or an experienced developer aiming to enhance your code quality, Evalica is the perfect tool for you.

J.A.R.V.I.S.

J.A.R.V.I.S.1.0 is an advanced virtual assistant tool designed to assist users in various tasks. It provides a wide range of functionalities including voice commands, task automation, information retrieval, and communication management. With its intuitive interface and powerful capabilities, J.A.R.V.I.S.1.0 aims to enhance productivity and streamline daily activities for users.

eververse

Eververse is an open source product management platform that provides a simple alternative to tools like Productboard and Cycle. It allows product teams to collaborate on exploring problems, ideating solutions, prioritizing features, and planning roadmaps with the assistance of AI.

trubrics-sdk

Trubrics-sdk is a software development kit designed to facilitate the integration of analytics features into applications. It provides a set of tools and functionalities that enable developers to easily incorporate analytics capabilities, such as data collection, analysis, and reporting, into their software products. The SDK streamlines the process of implementing analytics solutions, allowing developers to focus on building and enhancing their applications' functionality and user experience. By leveraging trubrics-sdk, developers can quickly and efficiently integrate robust analytics features, gaining valuable insights into user behavior and application performance.

Eridanus

Eridanus is a powerful data visualization tool designed to help users create interactive and insightful visualizations from their datasets. With a user-friendly interface and a wide range of customization options, Eridanus makes it easy for users to explore and analyze their data in a meaningful way. Whether you are a data scientist, business analyst, or student, Eridanus provides the tools you need to communicate your findings effectively and make data-driven decisions.

copilot

OpenCopilot is a tool that allows users to create their own AI copilot for their products. It integrates with APIs to execute calls as needed, using LLMs to determine the appropriate endpoint and payload. Users can define API actions, validate schemas, and integrate a user-friendly chat bubble into their SaaS app. The tool is capable of calling APIs, transforming responses, and populating request fields based on context. It is not suitable for handling large APIs without JSON transformers. Users can teach the copilot via flows and embed it in their app with minimal code.

aiounifi

Aiounifi is a Python library that provides a simple interface for interacting with the Unifi Controller API. It allows users to easily manage their Unifi network devices, such as access points, switches, and gateways, through automated scripts or applications. With Aiounifi, users can retrieve device information, perform configuration changes, monitor network performance, and more, all through a convenient and efficient API wrapper. This library simplifies the process of integrating Unifi network management into custom solutions, making it ideal for network administrators, developers, and enthusiasts looking to automate and streamline their network operations.

verl-tool

The verl-tool is a versatile command-line utility designed to streamline various tasks related to version control and code management. It provides a simple yet powerful interface for managing branches, merging changes, resolving conflicts, and more. With verl-tool, users can easily track changes, collaborate with team members, and ensure code quality throughout the development process. Whether you are a beginner or an experienced developer, verl-tool offers a seamless experience for version control operations.

LightLLM

LightLLM is a lightweight library for linear and logistic regression models. It provides a simple and efficient way to train and deploy machine learning models for regression tasks. The library is designed to be easy to use and integrate into existing projects, making it suitable for both beginners and experienced data scientists. With LightLLM, users can quickly build and evaluate regression models using a variety of algorithms and hyperparameters. The library also supports feature engineering and model interpretation, allowing users to gain insights from their data and make informed decisions based on the model predictions.

For similar tasks

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers:

- An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VSCode, etc.).

- A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant.

- Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI.

- Local model support through GPT4All, enabling use of generative AI models on consumer grade machines with ease and privacy.

khoj

Khoj is an open-source, personal AI assistant that extends your capabilities by creating always-available AI agents. You can share your notes and documents to extend your digital brain, and your AI agents have access to the internet, allowing you to incorporate real-time information. Khoj is accessible on Desktop, Emacs, Obsidian, Web, and Whatsapp, and you can share PDF, markdown, org-mode, notion files, and GitHub repositories. You'll get fast, accurate semantic search on top of your docs, and your agents can create deeply personal images and understand your speech. Khoj is self-hostable and always will be.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules:

- **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers).

- **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG).

- **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task.

The different components can be composed together using the LangChain Expression Language (LCEL).

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

infinity

Infinity is an AI-native database designed for LLM applications, providing incredibly fast full-text and vector search capabilities. It supports a wide range of data types, including vectors, full-text, and structured data, and offers a fused search feature that combines multiple embeddings and full text. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. It achieves high performance, with 0.1 milliseconds query latency on million-scale vector datasets and up to 15K QPS.

For similar jobs

ChatFAQ

ChatFAQ is an open-source comprehensive platform for creating a wide variety of chatbots: generic ones, business-trained, or even capable of redirecting requests to human operators. It includes a specialized NLP/NLG engine based on a RAG architecture and customized chat widgets, ensuring a tailored experience for users and avoiding vendor lock-in.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

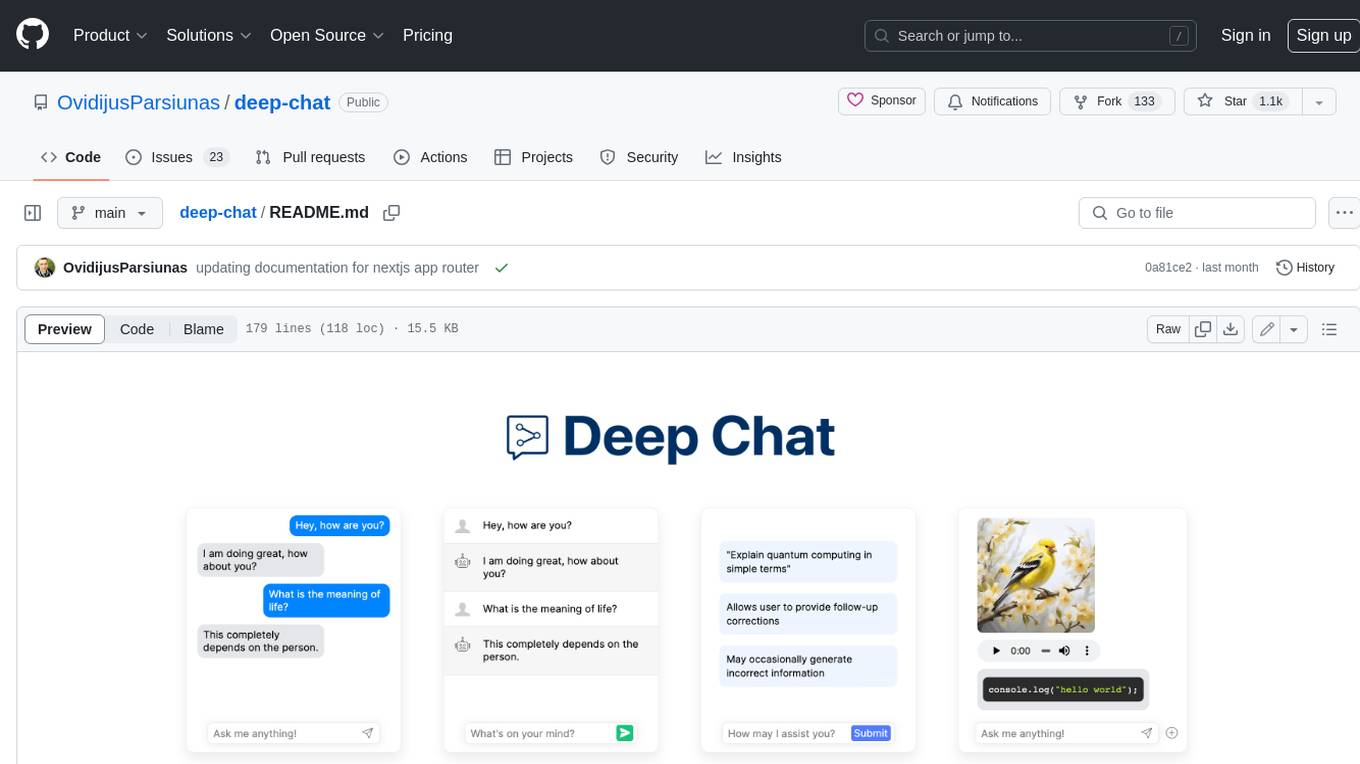

deep-chat

Deep Chat is a fully customizable AI chat component that can be injected into your website with minimal to no effort. Whether you want to create a chatbot that leverages popular APIs such as ChatGPT or connect to your own custom service, this component can do it all! Explore deepchat.dev to view all of the available features, how to use them, examples and more!

Avalonia-Assistant

Avalonia-Assistant is an open-source desktop intelligent assistant that aims to provide a user-friendly interactive experience based on the Avalonia UI framework and the integration of Semantic Kernel with OpenAI or other large LLM models. By utilizing Avalonia-Assistant, you can perform various desktop operations through text or voice commands, enhancing your productivity and daily office experience.

chatgpt-web

ChatGPT Web is a web application that provides access to the ChatGPT API. It offers two non-official methods to interact with ChatGPT: through the ChatGPTAPI (using the `gpt-3.5-turbo-0301` model) or through the ChatGPTUnofficialProxyAPI (using a web access token). The ChatGPTAPI method is more reliable but requires an OpenAI API key, while the ChatGPTUnofficialProxyAPI method is free but less reliable. The application includes features such as user registration and login, synchronization of conversation history, customization of API keys and sensitive words, and management of users and keys. It also provides a user interface for interacting with ChatGPT and supports multiple languages and themes.

tiledesk-dashboard

Tiledesk is an open-source live chat platform with integrated chatbots written in Node.js and Express. It is designed to be a multi-channel platform for web, Android, and iOS, and it can be used to increase sales or provide post-sales customer service. Tiledesk's chatbot technology allows for automation of conversations, and it also provides APIs and webhooks for connecting external applications. Additionally, it offers a marketplace for apps and features such as CRM, ticketing, and data export.

UFO

UFO is a UI-focused dual-agent framework to fulfill user requests on Windows OS by seamlessly navigating and operating within individual or spanning multiple applications.