WorkflowAI

WorkflowAI is an open-source platform where product and engineering teams collaborate to build and iterate on AI features.

Stars: 436

WorkflowAI is a powerful tool designed to streamline and automate various tasks within the workflow process. It provides a user-friendly interface for creating custom workflows, automating repetitive tasks, and optimizing efficiency. With WorkflowAI, users can easily design, execute, and monitor workflows, allowing for seamless integration of different tools and systems. The tool offers advanced features such as conditional logic, task dependencies, and error handling to ensure smooth workflow execution. Whether you are managing project tasks, processing data, or coordinating team activities, WorkflowAI simplifies the workflow management process and enhances productivity.

README:

- Faster Time to Market: Build production-ready AI features in minutes through a web-app – no coding required.

- Interactive Playground: Test and compare 80+ leading AI models side-by-side in our visual playground. See the difference in responses, costs, and latency. Try it now.

https://github.com/user-attachments/assets/febf1047-ed85-4af0-b796-5242aef051b4

- Model-agnostic: Works with all major AI models, including OpenAI, Anthropic (Claude), Google (Gemini), Mistral, DeepSeek, and Grok, through a unified interface that makes switching between providers seamless. View all 80+ supported models.

- Open-source and flexible deployment: WorkflowAI is fully open-source with flexible deployment options. Run it self-hosted on your own infrastructure for maximum data control, or use the managed WorkflowAI Cloud service for hassle-free updates and automatic scaling.

- Observability integrated: Built-in monitoring and logging capabilities that provide insights into your AI workflows, making debugging and optimization straightforward. Learn more about observability features.

https://github.com/user-attachments/assets/ae260da3-06ed-4ba0-824b-a9cab4fadb6f

- Cost tracking: Automatically calculates and tracks the cost of each AI model run, providing transparency and helping you manage your AI budget effectively. Learn more about cost tracking.

- Structured output: WorkflowAI ensures your AI responses always match your defined structure, simplifying integrations, reducing parsing errors, and making your data reliable and ready for use. Learn more about structured input and output.

- Easy integration: SDKs for Python and TypeScript, plus a REST API. View code examples here.

https://github.com/user-attachments/assets/261c3a5a-16ac-4c29-bc30-5ec725a0619d

- Instant Prompt Updates: Tired of creating tickets just to tweak a prompt? Update prompts and models with a single click - no code changes or engineering work required. Go from feedback to fix in seconds.

https://github.com/user-attachments/assets/0c81d596-ec70-43bc-80a8-ceddcd26b9d9

- Automatic Provider Failover: OpenAI experiences 40+ minutes of downtime per month. With WorkflowAI, traffic automatically reroutes to backup providers (like Azure OpenAI for OpenAI, or Amazon Bedrock for Anthropic) during outages - no configuration needed and at no extra cost. Your users won't even notice the switch.

- Streaming supported: Enables real-time streaming of AI responses for low latency applications, with immediate validation of partial outputs. Learn more about streaming capabilities.

https://github.com/user-attachments/assets/4cf6e65a-a7b4-4b93-a30c-7d28b22e1553

- Hosted tools: Comes with powerful hosted tools like web search and web browsing capabilities, allowing your agents to access real-time information from the internet. These tools enable your AI applications to retrieve up-to-date data, research topics, and interact with web content without requiring complex integrations. Learn more about hosted tools.

https://github.com/user-attachments/assets/9329af26-1222-4d5d-a68d-2bb4675261e2

- Multimodality support: Build agents that can handle multiple modalities, such as images, PDFs, documents, and audio. Try it here.

https://github.com/user-attachments/assets/0cd54e38-6e6d-42f2-aa7d-365970151375

- Developer-Friendly: Need more control? Seamlessly extend functionality with our Python SDK when you need custom logic.

    import workflowai
    from pydantic import BaseModel
    from workflowai import Model


    # Input schema: the raw transcript to analyze
    class MeetingInput(BaseModel):
        meeting_transcript: str


    # Output schema: the structure the agent's response must match
    class MeetingOutput(BaseModel):
        summary: str
        key_items: list[str]
        action_items: list[str]


    # The agent is declared by decorating a typed async function; the body is left empty
    @workflowai.agent()
    async def extract_meeting_info(meeting_input: MeetingInput) -> MeetingOutput:
        ...

WorkflowAI Cloud is a fully managed solution with zero infrastructure setup required. Pay exactly what you'd pay the model providers: billed per token, with no minimums and no per-seat fees. No markups. We make our margin from provider discounts, not by charging you extra. Enterprise-ready with SOC2 compliance and high-availability infrastructure. We maintain strict data privacy: your data is never used for training.
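Per-token billing means the cost of a run is a linear function of its prompt and completion token counts. A minimal sketch of that arithmetic follows. The `TokenPrice` type, the `run_cost` helper, and the prices are hypothetical placeholders for illustration; they are not WorkflowAI's API or any provider's actual rates.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class TokenPrice:
    """Hypothetical per-million-token prices in USD (placeholder values)."""

    prompt_per_million: Decimal
    completion_per_million: Decimal


def run_cost(price: TokenPrice, prompt_tokens: int, completion_tokens: int) -> Decimal:
    """Return the cost in USD of one run, billed per token with no minimum."""
    million = Decimal(1_000_000)
    total = (
        price.prompt_per_million * prompt_tokens
        + price.completion_per_million * completion_tokens
    )
    return total / million


# Placeholder prices, not real provider rates.
example_price = TokenPrice(Decimal("2.00"), Decimal("8.00"))
print(run_cost(example_price, prompt_tokens=1_500, completion_tokens=500))
```

`Decimal` is used instead of `float` so that summing many small per-run costs does not accumulate binary rounding error.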

The Docker Compose file is provided as a quick way to spin up a local instance of WorkflowAI. It is configured to be self-contained and viable from the start.

    # Create a base environment file that will be used by Docker Compose
    # You should likely update the .env file to include some provider keys, see Configuring Provider keys below
    cp .env.sample .env

    # Build the client and api Docker images
    # By default, Docker Compose builds development images, see the `target` keys
    docker-compose build

    # [Optional] Start the dependencies in the background, this way we can shut down the app while
    # keeping the dependencies running
    docker-compose up -d clickhouse minio redis mongo

    # Start the containers
    docker-compose up

    # The WorkflowAI api is also a WorkflowAI user,
    # since all the agents the api uses are hosted in WorkflowAI,
    # so you'll need to create a WorkflowAI api key.
    # Open http://localhost:3000/organization/settings/api-keys and create an api key,
    # then update the WORKFLOWAI_API_KEY in your .env file
    open http://localhost:3000/organization/settings/api-keys

    # Then kill the containers (ctrl c) and restart them
    docker-compose up

Although the Docker Compose setup is configured for local development via hot reloads and volumes, Docker introduces significant latency during development. Detailed setup instructions for both the client and the api are provided in their respective READMEs.
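It is easy to restart the containers while `WORKFLOWAI_API_KEY` is still empty. As a sketch, a few lines of Python can flag missing or empty keys in the contents of a `.env` file. The `parse_env` and `missing_keys` helpers are hypothetical, and the parser handles only simple `KEY=value` lines, not the full dotenv syntax.

```python
def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=value lines, skipping blank lines and # comments."""
    values: dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding quotes so KEY="value" and KEY=value are treated alike.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(env: dict[str, str], required: list[str]) -> list[str]:
    """Return the required keys that are absent or set to an empty value."""
    return [key for key in required if not env.get(key)]


# Sample file contents for illustration; in practice, read the real .env file.
sample = "# local settings\nWORKFLOWAI_API_KEY=\n"
print(missing_keys(parse_env(sample), ["WORKFLOWAI_API_KEY"]))
```

To check a real file, pass `pathlib.Path(".env").read_text()` to `parse_env` instead of the sample string.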

WorkflowAI connects to a variety of providers (see the Provider enum). There are two ways to configure providers:

- Globally, using environment variables. The provider environment sample provides information on requirements for each provider.

- Per tenant, through the UI, by navigating to ../organization/settings/providers

Several features of the website rely on providers being configured either globally or for the tenant that is used internally. For example, at the time of writing, the agent that allows building agents with natural language uses Claude 3.7, so either Anthropic or Bedrock should be configured. All the agents that WorkflowAI uses are located in the agents directory.

For now, we rely on public read access to the storage in the frontend. The URLs are not discoverable, though, so this should be acceptable until we implement temporary leases for files. On Minio, public read access can be enabled with the following commands:

    # Run sh inside the running minio container
    docker-compose exec minio sh

    # Create an alias for the Minio server (must exist before it is used below)
    mc alias set myminio http://minio:9000 minio miniosecret

    # Set download permissions on the bucket
    mc anonymous set download myminio/workflowai-task-runs

The api is the Python backend for WorkflowAI. It is structured as a FastAPI server and a TaskIQ-based worker.

The client is a Next.js app that serves as the frontend.

- MongoDB: We use MongoDB to store all the internal data.

- ClickHouse: ClickHouse is used to store the run data. We first stored the run data in MongoDB, but storage costs and query durations quickly got out of hand.

- Redis: We use Redis as a message broker for TaskIQ. TaskIQ supports a number of different message brokers. Redis 6.0 and above are supported.

- Minio: Minio is used to store files, but any S3-compatible storage will do. We also have a plugin for Azure Blob Storage. The selected storage depends on the `WORKFLOWAI_STORAGE_CONNECTION_STRING` env variable. A value starting with `s3://` will result in the S3 storage being used.
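Selecting the storage from the connection string amounts to dispatching on its prefix. The sketch below illustrates the idea only: the `storage_backend` helper, the `"other"` label, and the example connection string are assumptions, and only the `s3://` rule comes from the text above. How the Azure Blob Storage plugin is selected is not covered here.

```python
import os


def storage_backend(connection_string: str) -> str:
    """Return a backend label chosen from the connection string's prefix."""
    if connection_string.startswith("s3://"):
        return "s3"
    # Assumption: any other value is left to other storage plugins.
    return "other"


# Hypothetical default; the credentials, host, and bucket are placeholders.
connection_string = os.environ.get(
    "WORKFLOWAI_STORAGE_CONNECTION_STRING",
    "s3://minio:miniosecret@minio:9000/workflowai-task-runs",
)
print(storage_backend(connection_string))
```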

WorkflowAI supports a variety of LLM providers (OpenAI, Anthropic, Amazon Bedrock, Azure OpenAI, Grok, Gemini, FireworksAI, ...). View all supported providers here.

Each provider has a different set of credentials and configuration. Providers that have the required environment variables are loaded by default (see the sample env for the available variables). Providers can also be configured per tenant through the UI.
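Loading a provider only when its environment variables are present can be sketched as a simple filter. The variable names and the `REQUIRED_VARS` table below are hypothetical placeholders; the real names are the ones listed in the project's sample env file.

```python
import os
from collections.abc import Mapping

# Hypothetical mapping from provider name to the variables it requires.
REQUIRED_VARS: dict[str, tuple[str, ...]] = {
    "openai": ("OPENAI_API_KEY",),
    "anthropic": ("ANTHROPIC_API_KEY",),
    "azure_openai": ("AZURE_OPENAI_ENDPOINT", "AZURE_OPENAI_API_KEY"),
}


def enabled_providers(env: Mapping[str, str]) -> list[str]:
    """Return the providers whose required variables are all set and non-empty."""
    return [
        name
        for name, required in REQUIRED_VARS.items()
        if all(env.get(var) for var in required)
    ]


print(enabled_providers(os.environ))
```

A provider with several required variables is skipped unless all of them are set, which avoids loading a half-configured provider.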

To find answers to your questions, please refer to the Documentation, ask a question in the Q&A section of our GitHub Discussions or join our Discord.

WorkflowAI is licensed under the Apache 2.0 License.


Alternative AI tools for WorkflowAI

Similar Open Source Tools

orchestkit

OrchestKit is a powerful and flexible orchestration tool designed to streamline and automate complex workflows. It provides a user-friendly interface for defining and managing orchestration tasks, allowing users to easily create, schedule, and monitor workflows. With support for various integrations and plugins, OrchestKit enables seamless automation of tasks across different systems and applications. Whether you are a developer looking to automate deployment processes or a system administrator managing complex IT operations, OrchestKit offers a comprehensive solution to simplify and optimize your workflow management.

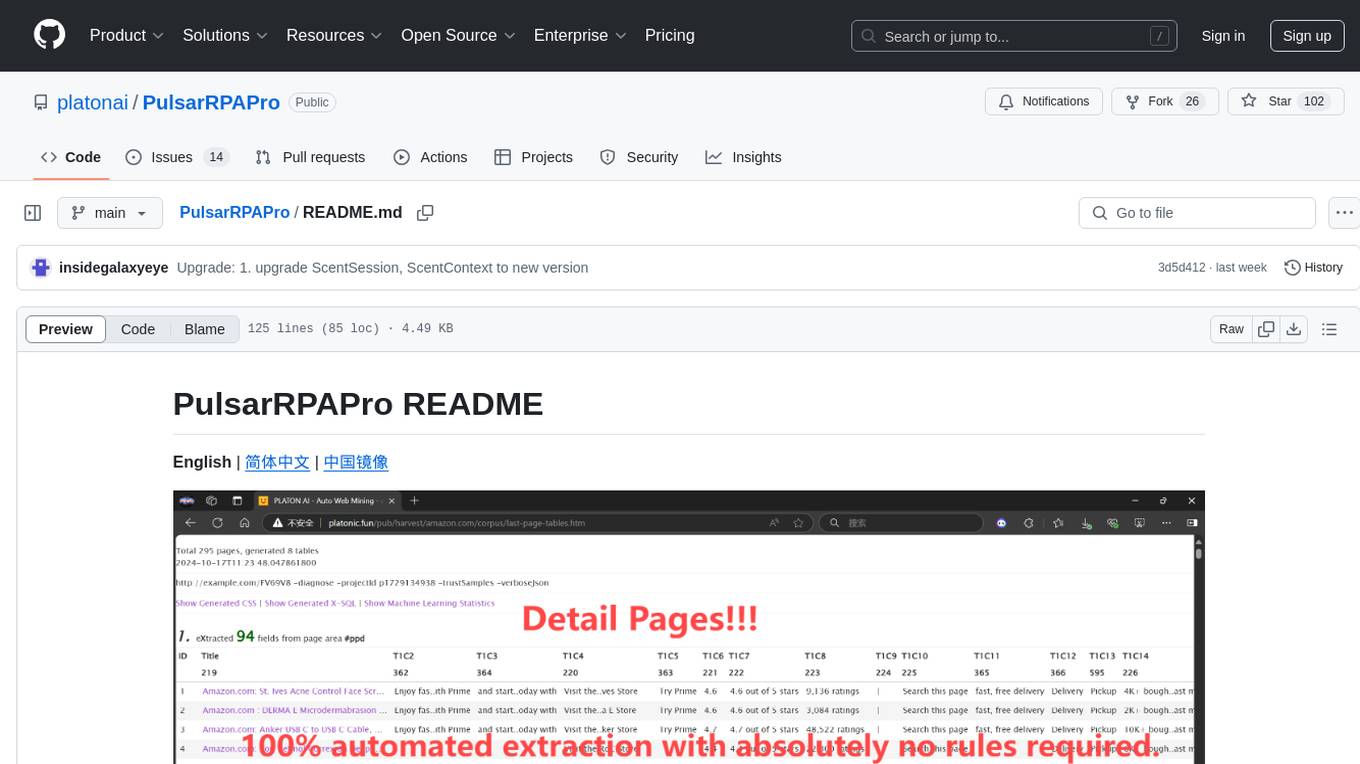

PulsarRPAPro

PulsarRPAPro is a powerful robotic process automation (RPA) tool designed to automate repetitive tasks and streamline business processes. It offers a user-friendly interface for creating and managing automation workflows, allowing users to easily automate tasks without the need for extensive programming knowledge. With features such as task scheduling, data extraction, and integration with various applications, PulsarRPAPro helps organizations improve efficiency and productivity by reducing manual work and human errors. Whether you are a small business looking to automate simple tasks or a large enterprise seeking to optimize complex processes, PulsarRPAPro provides the flexibility and scalability to meet your automation needs.

ome

Ome is a versatile tool designed for managing and organizing tasks and projects efficiently. It provides a user-friendly interface for creating, tracking, and prioritizing tasks, as well as collaborating with team members. With Ome, users can easily set deadlines, assign tasks, and monitor progress to ensure timely completion of projects. The tool offers customizable features such as tags, labels, and filters to streamline task management and improve productivity. Ome is suitable for individuals, teams, and organizations looking to enhance their task management process and achieve better results.

gaia

Gaia is a powerful open-source tool for managing infrastructure as code. It allows users to define and provision cloud resources using simple configuration files. With Gaia, you can automate the deployment and scaling of your applications, ensuring consistency and reliability across your infrastructure. The tool supports multiple cloud providers and offers a user-friendly interface for managing your resources efficiently. Gaia simplifies the process of infrastructure management, making it easier for teams to collaborate and deploy applications seamlessly.

Sage

Sage is a production-ready, modular, and intelligent multi-agent orchestration framework for complex problem solving. It intelligently breaks down complex tasks into manageable subtasks through seamless agent collaboration. Sage provides Deep Research Mode for comprehensive analysis and Rapid Execution Mode for quick task completion. It offers features like intelligent task decomposition, agent orchestration, extensible tool system, dual execution modes, interactive web interface, advanced token tracking, rich configuration, developer-friendly APIs, and robust error recovery mechanisms. Sage supports custom workflows, multi-agent collaboration, custom agent development, agent flow orchestration, rule preferences system, message manager for smart token optimization, task manager for comprehensive state management, advanced file system operations, advanced tool system with plugin architecture, token usage & cost monitoring, and rich configuration system. It also includes real-time streaming & monitoring, advanced tool development, error handling & reliability, performance monitoring, MCP server integration, and security features.

tools

Strands Agents Tools is a community-driven project that provides a powerful set of tools for your agents to use. It bridges the gap between large language models and practical applications by offering ready-to-use tools for file operations, system execution, API interactions, mathematical operations, and more. The tools cover a wide range of functionalities including file operations, shell integration, memory storage, web infrastructure, HTTP client, Slack client, Python execution, mathematical tools, AWS integration, image and video processing, audio output, environment management, task scheduling, advanced reasoning, swarm intelligence, dynamic MCP client, parallel tool execution, browser automation, diagram creation, RSS feed management, and computer automation.

promptl

Promptl is a versatile command-line tool designed to streamline the process of creating and managing prompts for user input in various programming projects. It offers a simple and efficient way to prompt users for information, validate their input, and handle different scenarios based on their responses. With Promptl, developers can easily integrate interactive prompts into their scripts, applications, and automation workflows, enhancing user experience and improving overall usability. The tool provides a range of customization options and features, making it suitable for a wide range of use cases across different programming languages and environments.

J.A.R.V.I.S.

J.A.R.V.I.S.1.0 is an advanced virtual assistant tool designed to assist users in various tasks. It provides a wide range of functionalities including voice commands, task automation, information retrieval, and communication management. With its intuitive interface and powerful capabilities, J.A.R.V.I.S.1.0 aims to enhance productivity and streamline daily activities for users.

pullfrog

Pullfrog is a versatile tool for managing and automating GitHub pull requests. It provides a simple and intuitive interface for developers to streamline their workflow and collaborate more efficiently. With Pullfrog, users can easily create, review, merge, and manage pull requests, all within a single platform. The tool offers features such as automated testing, code review, and notifications to help teams stay organized and productive. Whether you are a solo developer or part of a large team, Pullfrog can help you simplify the pull request process and improve code quality.

spec-workflow-mcp

Spec Workflow MCP is a Model Context Protocol (MCP) server that offers structured spec-driven development workflow tools for AI-assisted software development. It includes a real-time web dashboard and a VSCode extension for monitoring and managing project progress directly in the development environment. The tool supports sequential spec creation, real-time monitoring of specs and tasks, document management, archive system, task progress tracking, approval workflow, bug reporting, template system, and works on Windows, macOS, and Linux.

verl-tool

The verl-tool is a versatile command-line utility designed to streamline various tasks related to version control and code management. It provides a simple yet powerful interface for managing branches, merging changes, resolving conflicts, and more. With verl-tool, users can easily track changes, collaborate with team members, and ensure code quality throughout the development process. Whether you are a beginner or an experienced developer, verl-tool offers a seamless experience for version control operations.

context-portal

Context-portal is a versatile tool for managing and visualizing data in a collaborative environment. It provides a user-friendly interface for organizing and sharing information, making it easy for teams to work together on projects. With features such as customizable dashboards, real-time updates, and seamless integration with popular data sources, Context-portal streamlines the data management process and enhances productivity. Whether you are a data analyst, project manager, or team leader, Context-portal offers a comprehensive solution for optimizing workflows and driving better decision-making.

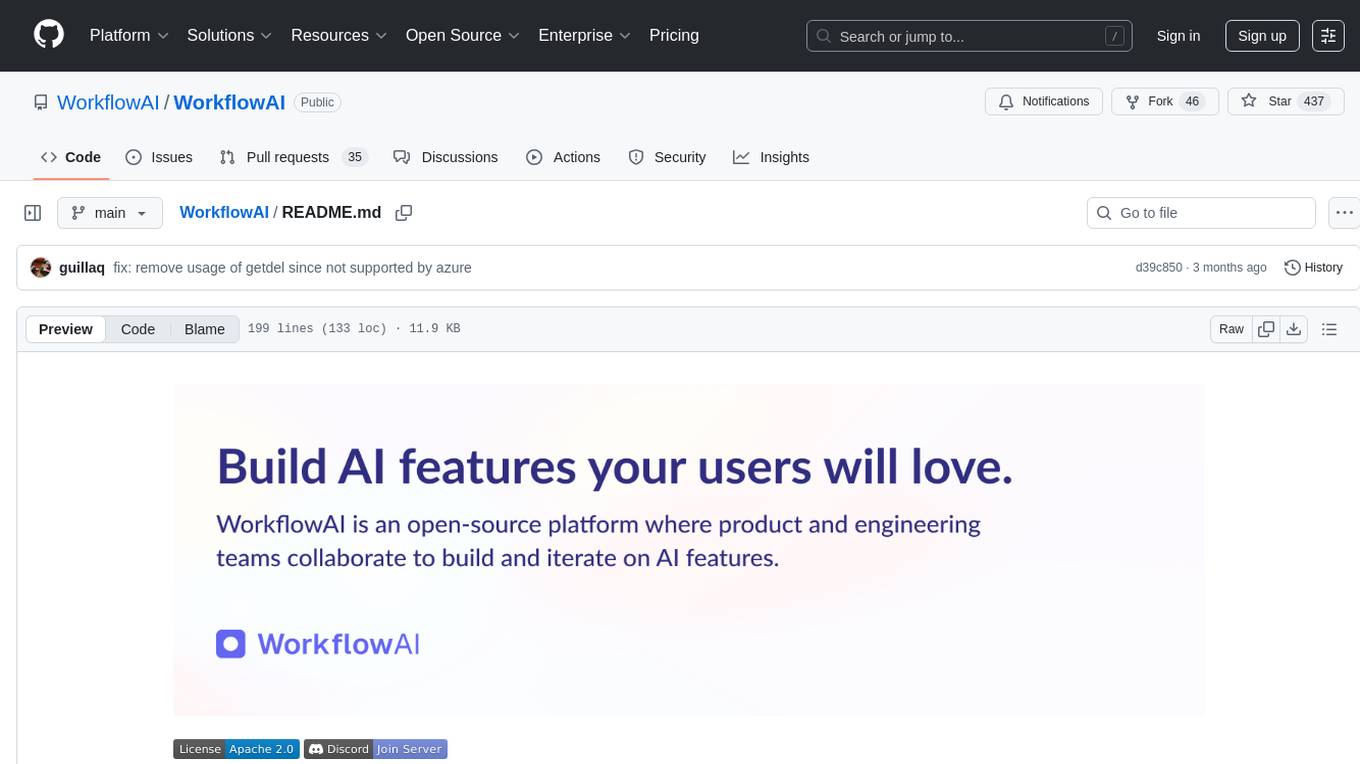

omnichain

OmniChain is a tool for building efficient self-updating visual workflows using AI language models, enabling users to automate tasks, create chatbots, agents, and integrate with existing frameworks. It allows users to create custom workflows guided by logic processes, store and recall information, and make decisions based on that information. The tool enables users to create tireless robot employees that operate 24/7, access the underlying operating system, generate and run NodeJS code snippets, and create custom agents and logic chains. OmniChain is self-hosted, open-source, and available for commercial use under the MIT license, with no coding skills required.

trubrics-sdk

Trubrics-sdk is a software development kit designed to facilitate the integration of analytics features into applications. It provides a set of tools and functionalities that enable developers to easily incorporate analytics capabilities, such as data collection, analysis, and reporting, into their software products. The SDK streamlines the process of implementing analytics solutions, allowing developers to focus on building and enhancing their applications' functionality and user experience. By leveraging trubrics-sdk, developers can quickly and efficiently integrate robust analytics features, gaining valuable insights into user behavior and application performance.

Awesome-Repo-Level-Code-Generation

This repository contains a collection of tools and scripts for generating code at the repository level. It provides a set of utilities to automate the process of creating and managing code across multiple files and directories. The tools included in this repository aim to improve code generation efficiency and maintainability by streamlining the development workflow. With a focus on enhancing productivity and reducing manual effort, this collection offers a variety of code generation options and customization features to suit different project requirements.

For similar tasks

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

semantic-kernel

Semantic Kernel is an SDK that integrates Large Language Models (LLMs) like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. Semantic Kernel achieves this by allowing you to define plugins that can be chained together in just a few lines of code. What makes Semantic Kernel _special_ , however, is its ability to _automatically_ orchestrate plugins with AI. With Semantic Kernel planners, you can ask an LLM to generate a plan that achieves a user's unique goal. Afterwards, Semantic Kernel will execute the plan for the user.

floneum

Floneum is a graph editor that makes it easy to develop your own AI workflows. It uses large language models (LLMs) to run AI models locally, without any external dependencies or even a GPU. This makes it easy to use LLMs with your own data, without worrying about privacy. Floneum also has a plugin system that allows you to improve the performance of LLMs and make them work better for your specific use case. Plugins can be used in any language that supports web assembly, and they can control the output of LLMs with a process similar to JSONformer or guidance.

mindsdb

MindsDB is a platform for customizing AI from enterprise data. You can create, serve, and fine-tune models in real-time from your database, vector store, and application data. MindsDB "enhances" SQL syntax with AI capabilities to make it accessible for developers worldwide. With MindsDB’s nearly 200 integrations, any developer can create AI customized for their purpose, faster and more securely. Their AI systems will constantly improve themselves — using companies’ own data, in real-time.

aiscript

AiScript is a lightweight scripting language that runs on JavaScript. It supports arrays, objects, and functions as first-class citizens, and is easy to write without the need for semicolons or commas. AiScript runs in a secure sandbox environment, preventing infinite loops from freezing the host. It also allows for easy provision of variables and functions from the host.

activepieces

Activepieces is an open-source replacement for Zapier, designed to be extensible through a type-safe pieces framework written in TypeScript. It features a user-friendly Workflow Builder with support for Branches, Loops, and Drag and Drop. Activepieces integrates with Google Sheets, OpenAI, Discord, and RSS, along with 80+ other integrations. The list of supported integrations continues to grow rapidly, thanks to valuable contributions from the community. Activepieces is an open ecosystem; all piece source code is available in the repository, and pieces are versioned and published directly to npmjs.com upon contributions. If you cannot find a specific piece on the pieces roadmap, please submit a request by visiting the following link: Request Piece. Alternatively, if you are a developer, you can quickly build your own piece using our TypeScript framework. For guidance, please refer to the following guide: Contributor's Guide.

superagent-js

Superagent is an open source framework that enables any developer to integrate production ready AI Assistants into any application in a matter of minutes.

For similar jobs

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

airbyte

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's no-code Connector Builder or low-code CDK. Airbyte is used by data engineers and analysts at companies of all sizes to build and manage their data pipelines.