ai-commit

Automagically generate conventional git commit messages with AI. - 使用 AI 自动生成约定式 git 提交信息。

Stars: 210

ai-commit is a tool that automagically generates conventional git commit messages using AI. It supports various generators like Bito Cli, ERNIE-Bot-turbo, ERNIE-Bot, Moonshot, and OpenAI Chat. The tool requires PHP version 7.3 or higher for installation. Users can configure generators, set API keys, and easily generate and commit messages with customizable options. Additionally, ai-commit provides commands for managing configurations, self-updating, and shell completion scripts.

README:

Automagically generate conventional git commit message with AI. - 使用 AI 自动生成约定式 git 提交信息。

- [x] Bito Cli

- [x] ERNIE-Bot-turbo

- [x] ERNIE-Bot

- [x] Moonshot

- [x] OpenAI Chat

- [x] OpenAI

- [ ] ...

- PHP >= 7.3

Download the ai-commit file

curl 'https://raw.githubusercontent.com/guanguans/ai-commit/main/builds/ai-commit' -o ai-commit -#

chmod +x ai-commitcomposer global require guanguans/ai-commit --dev -v # global

composer require guanguans/ai-commit --dev -v # local./ai-commit config set generators.bito_cli.path bito-cli-path... --global # Config Bito Cli path(Optional)

./ai-commit config set generators.openai.api_key sk-... --global # Config OpenAI API key

./ai-commit config set generators.openai_chat.api_key sk-... --global # Config OpenAI API key

./ai-commit config set generator openai_chat --global # Config default generator(Optional)

./ai-commit commit # Generate and commit message╰─ ./ai-commit commit --generator=openai_chat --no-edit --no-verify --ansi ─╯

1. Generating commit message: generating...

{

"subject": "chore(ai-commit): update settings and commands",

"body": "- Set Width to 1200\n- Set Height to 742\n- Set TypingSpeed to 10ms\n- Set PlaybackSpeed to 0.2\n- Update git commands and sleep times"

}

1. Generating commit message: ✔

2. Confirming commit message: confirming...

+------------------------------------------------+---------------------------------------+

| subject | body |

+------------------------------------------------+---------------------------------------+

| chore(ai-commit): update settings and commands | - Set Width to 1200 |

| | - Set Height to 742 |

| | - Set TypingSpeed to 10ms |

| | - Set PlaybackSpeed to 0.2 |

| | - Update git commands and sleep times |

+------------------------------------------------+---------------------------------------+

Do you want to commit this message? (yes/no) [yes]:

>

2. Confirming commit message: ✔

3. Committing message: committing...

3. Committing message: ✔

[OK] Successfully generated and committed message.

╰─ ./ai-commit list ─╯

_____ _____ _ _

/\ |_ _| / ____| (_) |

/ \ | | | | ___ _ __ ___ _ __ ___ _| |_

/ /\ \ | | | | / _ \| '_ ` _ \| '_ ` _ \| | __|

/ ____ \ _| |_ | |___| (_) | | | | | | | | | | | | |_

/_/ \_\_____| \_____\___/|_| |_| |_|_| |_| |_|_|\__|

1.2.5

USAGE: ai-commit <command> [options] [arguments]

commit Automagically generate conventional commit message with AI.

completion Dump the shell completion script

config Manage config options.

self-update Allows to self-update a build application

thanks Thanks for using this tool../ai-commit config [set, get, unset, reset, list, edit] key value --global

./ai-commit config set key value

./ai-commit config get key

./ai-commit config unset key

./ai-commit config reset key

./ai-commit config list

./ai-commit config edit╰─ ./ai-commit self-update ─╯

Checking for a new version...

=============================

[OK] Updated from version 1.2.4 to v1.2.5.

╰─ ./ai-commit commit --help ─╯

Description:

Automagically generate conventional commit message with AI.

Usage:

commit [options] [--] [<path>]

Arguments:

path The working directory [default: "/Users/yaozm/Documents/develop/ai-commit"]

Options:

--commit-options[=COMMIT-OPTIONS] Append options for the `git commit` command [default: ["--edit"]] (multiple values allowed)

--diff-options[=DIFF-OPTIONS] Append options for the `git diff` command [default: [":!*.lock",":!*.sum"]] (multiple values allowed)

-g, --generator=GENERATOR Specify generator name [default: "openai_chat"]

-p, --prompt=PROMPT Specify prompt name of message generated [default: "conventional"]

--no-edit Enable or disable git commit `--no-edit` option

--no-verify Enable or disable git commit `--no-verify` option

-c, --config[=CONFIG] Specify config file

--retry-times=RETRY-TIMES Specify times of retry [default: 3]

--retry-sleep=RETRY-SLEEP Specify sleep milliseconds of retry [default: 200]

-h, --help Display help for the given command. When no command is given display help for the list command

-q, --quiet Do not output any message

-V, --version Display this application version

--ansi|--no-ansi Force (or disable --no-ansi) ANSI output

-n, --no-interaction Do not ask any interactive question

--env[=ENV] The environment the command should run under

-v|vv|vvv, --verbose Increase the verbosity of messages: 1 for normal output, 2 for more verbose output and 3 for debugcomposer testPlease see CHANGELOG for more information on what has changed recently.

Please see CONTRIBUTING for details.

Please review our security policy on how to report security vulnerabilities.

The MIT License (MIT). Please see License File for more information.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ai-commit

Similar Open Source Tools

ai-commit

ai-commit is a tool that automagically generates conventional git commit messages using AI. It supports various generators like Bito Cli, ERNIE-Bot-turbo, ERNIE-Bot, Moonshot, and OpenAI Chat. The tool requires PHP version 7.3 or higher for installation. Users can configure generators, set API keys, and easily generate and commit messages with customizable options. Additionally, ai-commit provides commands for managing configurations, self-updating, and shell completion scripts.

PraisonAI

Praison AI is a low-code, centralised framework that simplifies the creation and orchestration of multi-agent systems for various LLM applications. It emphasizes ease of use, customization, and human-agent interaction. The tool leverages AutoGen and CrewAI frameworks to facilitate the development of AI-generated scripts and movie concepts. Users can easily create, run, test, and deploy agents for scriptwriting and movie concept development. Praison AI also provides options for full automatic mode and integration with OpenAI models for enhanced AI capabilities.

agentscope

AgentScope is a multi-agent platform designed to empower developers to build multi-agent applications with large-scale models. It features three high-level capabilities: Easy-to-Use, High Robustness, and Actor-Based Distribution. AgentScope provides a list of `ModelWrapper` to support both local model services and third-party model APIs, including OpenAI API, DashScope API, Gemini API, and ollama. It also enables developers to rapidly deploy local model services using libraries such as ollama (CPU inference), Flask + Transformers, Flask + ModelScope, FastChat, and vllm. AgentScope supports various services, including Web Search, Data Query, Retrieval, Code Execution, File Operation, and Text Processing. Example applications include Conversation, Game, and Distribution. AgentScope is released under Apache License 2.0 and welcomes contributions.

libllm

libLLM is an open-source project designed for efficient inference of large language models (LLM) on personal computers and mobile devices. It is optimized to run smoothly on common devices, written in C++14 without external dependencies, and supports CUDA for accelerated inference. Users can build the tool for CPU only or with CUDA support, and run libLLM from the command line. Additionally, there are API examples available for Python and the tool can export Huggingface models.

openlrc

Open-Lyrics is a Python library that transcribes voice files using faster-whisper and translates/polishes the resulting text into `.lrc` files in the desired language using LLM, e.g. OpenAI-GPT, Anthropic-Claude. It offers well preprocessed audio to reduce hallucination and context-aware translation to improve translation quality. Users can install the library from PyPI or GitHub and follow the installation steps to set up the environment. The tool supports GUI usage and provides Python code examples for transcription and translation tasks. It also includes features like utilizing context and glossary for translation enhancement, pricing information for different models, and a list of todo tasks for future improvements.

vnc-lm

vnc-lm is a Discord bot designed for messaging with language models. Users can configure model parameters, branch conversations, and edit prompts to enhance responses. The bot supports various providers like OpenAI, Huggingface, and Cloudflare Workers AI. It integrates with ollama and LiteLLM, allowing users to access a wide range of language model APIs through a single interface. Users can manage models, switch between models, split long messages, and create conversation branches. LiteLLM integration enables support for OpenAI-compatible APIs and local LLM services. The bot requires Docker for installation and can be configured through environment variables. Troubleshooting tips are provided for common issues like context window problems, Discord API errors, and LiteLLM issues.

MooER

MooER (摩耳) is an LLM-based speech recognition and translation model developed by Moore Threads. It allows users to transcribe speech into text (ASR) and translate speech into other languages (AST) in an end-to-end manner. The model was trained using 5K hours of data and is now also available with an 80K hours version. MooER is the first LLM-based speech model trained and inferred using domestic GPUs. The repository includes pretrained models, inference code, and a Gradio demo for a better user experience.

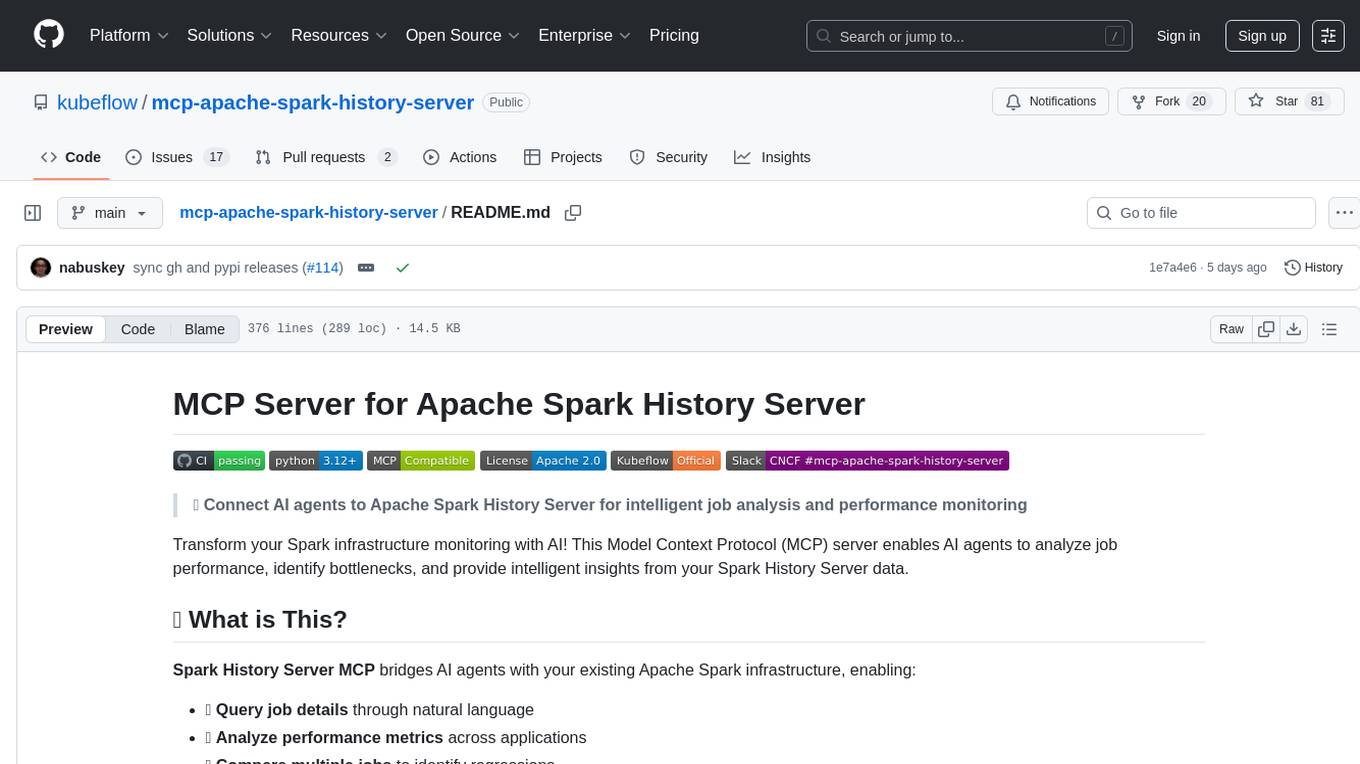

mcp-apache-spark-history-server

The MCP Server for Apache Spark History Server is a tool that connects AI agents to Apache Spark History Server for intelligent job analysis and performance monitoring. It enables AI agents to analyze job performance, identify bottlenecks, and provide insights from Spark History Server data. The server bridges AI agents with existing Apache Spark infrastructure, allowing users to query job details, analyze performance metrics, compare multiple jobs, investigate failures, and generate insights from historical execution data.

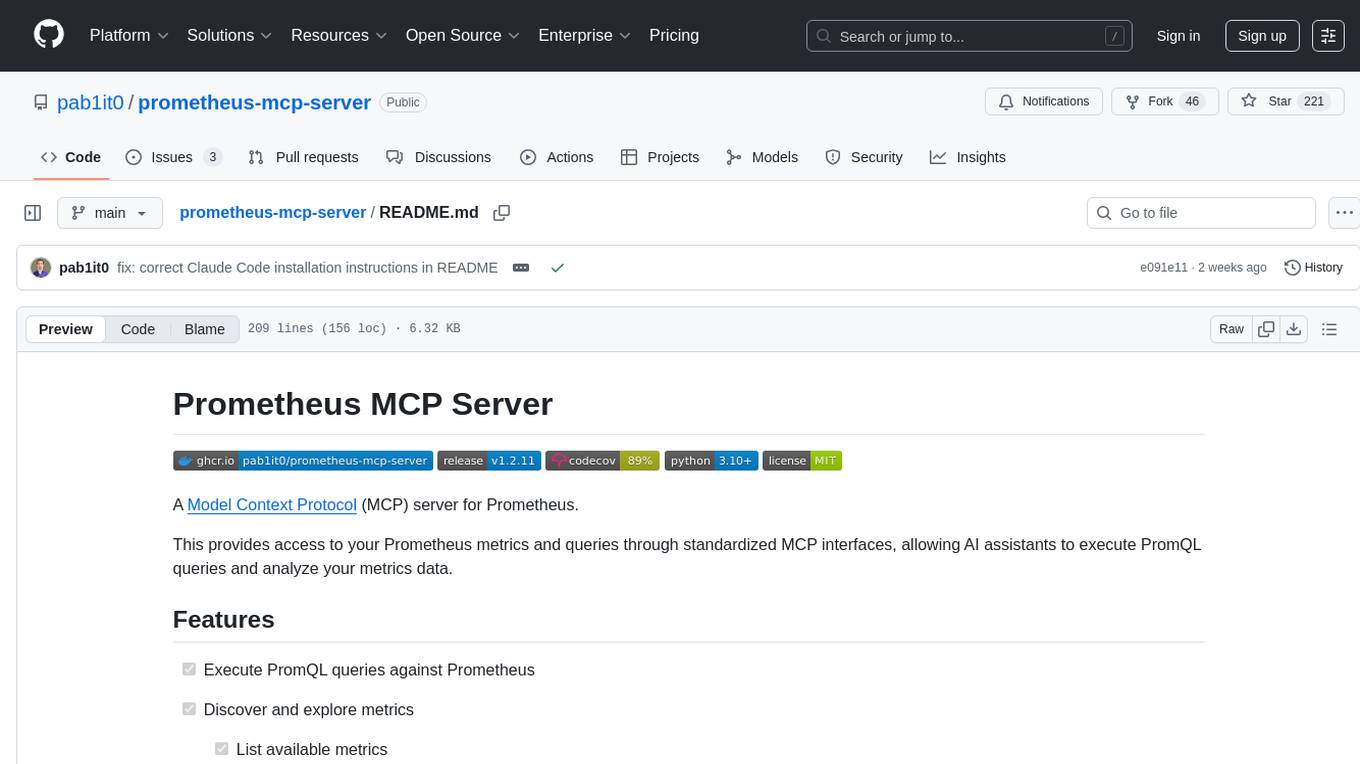

prometheus-mcp-server

Prometheus MCP Server is a Model Context Protocol (MCP) server that provides access to Prometheus metrics and queries through standardized interfaces. It allows AI assistants to execute PromQL queries and analyze metrics data. The server supports executing queries, exploring metrics, listing available metrics, viewing query results, and authentication. It offers interactive tools for AI assistants and can be configured to choose specific tools. Installation methods include using Docker Desktop, MCP-compatible clients like Claude Desktop, VS Code, Cursor, and Windsurf, and manual Docker setup. Configuration options include setting Prometheus server URL, authentication credentials, organization ID, transport mode, and bind host/port. Contributions are welcome, and the project uses `uv` for managing dependencies and includes a comprehensive test suite for functionality testing.

evalplus

EvalPlus is a rigorous evaluation framework for LLM4Code, providing HumanEval+ and MBPP+ tests to evaluate large language models on code generation tasks. It offers precise evaluation and ranking, coding rigorousness analysis, and pre-generated code samples. Users can use EvalPlus to generate code solutions, post-process code, and evaluate code quality. The tool includes tools for code generation and test input generation using various backends.

OSA

OSA (Open-Source-Advisor) is a tool designed to improve the quality of scientific open source projects by automating the generation of README files, documentation, CI/CD scripts, and providing advice and recommendations for repositories. It supports various LLMs accessible via API, local servers, or osa_bot hosted on ITMO servers. OSA is currently under development with features like README file generation, documentation generation, automatic implementation of changes, LLM integration, and GitHub Action Workflow generation. It requires Python 3.10 or higher and tokens for GitHub/GitLab/Gitverse and LLM API key. Users can install OSA using PyPi or build from source, and run it using CLI commands or Docker containers.

onnxruntime-server

ONNX Runtime Server is a server that provides TCP and HTTP/HTTPS REST APIs for ONNX inference. It aims to offer simple, high-performance ML inference and a good developer experience. Users can provide inference APIs for ONNX models without writing additional code by placing the models in the directory structure. Each session can choose between CPU or CUDA, analyze input/output, and provide Swagger API documentation for easy testing. Ready-to-run Docker images are available, making it convenient to deploy the server.

PDFMathTranslate

PDFMathTranslate is a tool designed for translating scientific papers and conducting bilingual comparisons. It preserves formulas, charts, table of contents, and annotations. The tool supports multiple languages and diverse translation services. It provides a command-line tool, interactive user interface, and Docker deployment. Users can try the application through online demos. The tool offers various installation methods including command-line, portable, graphic user interface, and Docker. Advanced options allow users to customize translation settings. Additionally, the tool supports secondary development through APIs for Python and HTTP. Future plans include parsing layout with DocLayNet based models, fixing page rotation and format issues, supporting non-PDF/A files, and integrating plugins for Zotero and Obsidian.

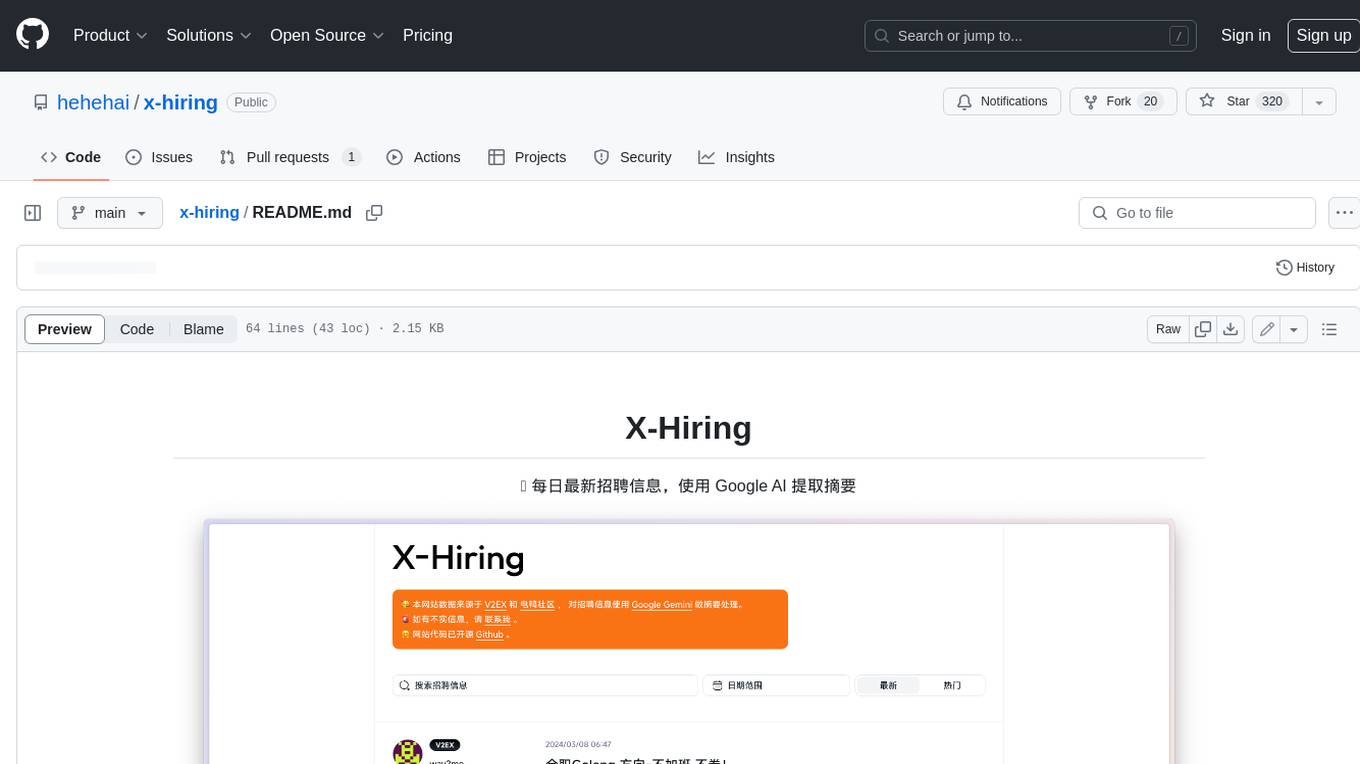

x-hiring

X-Hiring is a job search tool that uses Google AI to extract summaries of the latest job postings. It is easy to install and run, and can be used to find jobs in a variety of fields. X-Hiring is also open source, so you can contribute to its development or create your own custom version.

packages

This repository is a monorepo for NPM packages published under the `@elevenlabs` scope. It contains multiple packages in the `packages` folder. The setup allows for easy development, linking packages, creating new packages, and publishing them with GitHub actions.

agentops

AgentOps is a toolkit for evaluating and developing robust and reliable AI agents. It provides benchmarks, observability, and replay analytics to help developers build better agents. AgentOps is open beta and can be signed up for here. Key features of AgentOps include: - Session replays in 3 lines of code: Initialize the AgentOps client and automatically get analytics on every LLM call. - Time travel debugging: (coming soon!) - Agent Arena: (coming soon!) - Callback handlers: AgentOps works seamlessly with applications built using Langchain and LlamaIndex.

For similar tasks

airflow-code-editor

The Airflow Code Editor Plugin is a tool designed for Apache Airflow users to edit Directed Acyclic Graphs (DAGs) directly within their browser. It offers a user-friendly file management interface for effortless editing, uploading, and downloading of files. With Git support enabled, users can store DAGs in a Git repository, explore Git history, review local modifications, and commit changes. The plugin enhances workflow efficiency by providing seamless DAG management capabilities.

ai-commit

ai-commit is a tool that automagically generates conventional git commit messages using AI. It supports various generators like Bito Cli, ERNIE-Bot-turbo, ERNIE-Bot, Moonshot, and OpenAI Chat. The tool requires PHP version 7.3 or higher for installation. Users can configure generators, set API keys, and easily generate and commit messages with customizable options. Additionally, ai-commit provides commands for managing configurations, self-updating, and shell completion scripts.

twinny

Twinny is a free and open-source AI code completion plugin for Visual Studio Code and compatible editors. It integrates with various tools and frameworks, including Ollama, llama.cpp, oobabooga/text-generation-webui, LM Studio, LiteLLM, and Open WebUI. Twinny offers features such as fill-in-the-middle code completion, chat with AI about your code, customizable API endpoints, and support for single or multiline fill-in-middle completions. It is easy to install via the Visual Studio Code extensions marketplace and provides a range of customization options. Twinny supports both online and offline operation and conforms to the OpenAI API standard.

CodeGPT

CodeGPT is an extension for JetBrains IDEs that provides access to state-of-the-art large language models (LLMs) for coding assistance. It offers a range of features to enhance the coding experience, including code completions, a ChatGPT-like interface for instant coding advice, commit message generation, reference file support, name suggestions, and offline development support. CodeGPT is designed to keep privacy in mind, ensuring that user data remains secure and private.

vscode-i-dont-care-about-commit-message

This AI-powered git commit plugin for VSCode streamlines your commit and push processes, eliminating the need for manual confirmation. With a focus on minimizing keystrokes, the plugin leverages LLM to generate commit messages and automate the entire process. Key features include AI-assisted git commit and push, eliminating the need for the 'git add .' command, and customizable OpenAI model selection. The plugin supports multiple languages, making it accessible to developers worldwide. Additionally, it offers advanced settings for specifying the OpenAI API key, base URL, and conventional commit format. Developers can contribute to the project by following the provided development instructions.

ai-commits-intellij-plugin

AI Commits is a plugin for IntelliJ-based IDEs and Android Studio that generates commit messages using git diff and OpenAI. It offers features such as generating commit messages from diff using OpenAI API, computing diff only from selected files and lines in the commit dialog, creating custom prompts for commit message generation, using predefined variables and hints to customize prompts, choosing any of the models available in OpenAI API, setting OpenAI network proxy, and setting custom OpenAI compatible API endpoint.

aicommit2

AICommit2 is a Reactive CLI tool that streamlines interactions with various AI providers such as OpenAI, Anthropic Claude, Gemini, Mistral AI, Cohere, and unofficial providers like Huggingface and Clova X. Users can request multiple AI simultaneously to generate git commit messages without waiting for all AI responses. The tool runs 'git diff' to grab code changes, sends them to configured AI, and returns the AI-generated commit message. Users can set API keys or Cookies for different providers and configure options like locale, generate number of messages, commit type, proxy, timeout, max-length, and more. AICommit2 can be used both locally with Ollama and remotely with supported providers, offering flexibility and efficiency in generating commit messages.

lobe-cli-toolbox

Lobe CLI Toolbox is an AI CLI Toolbox designed to enhance git commit and i18n workflow efficiency. It includes tools like Lobe Commit for generating Gitmoji-based commit messages and Lobe i18n for automating the i18n translation process. The toolbox also features Lobe label for automatically copying issues labels from a template repo. It supports features such as automatic splitting of large files, incremental updates, and customization options for the OpenAI model, API proxy, and temperature.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.