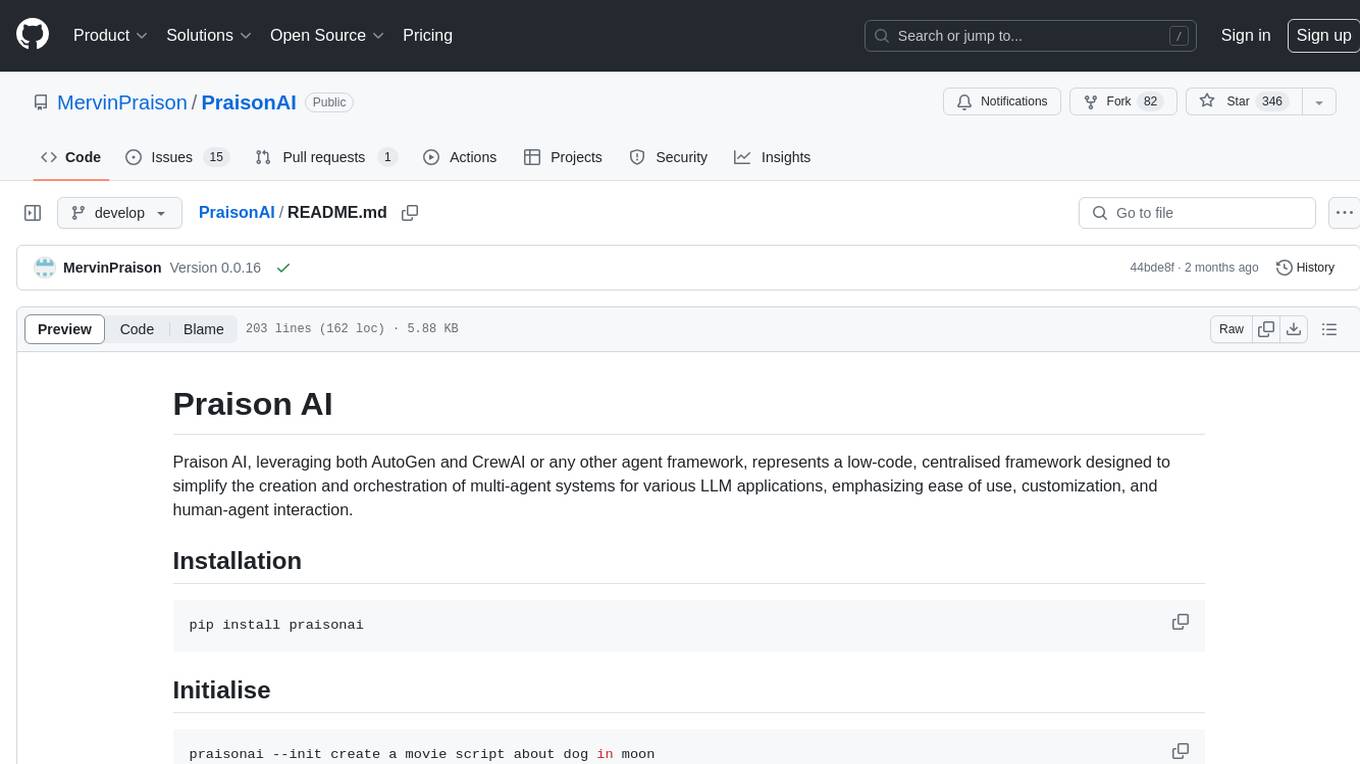

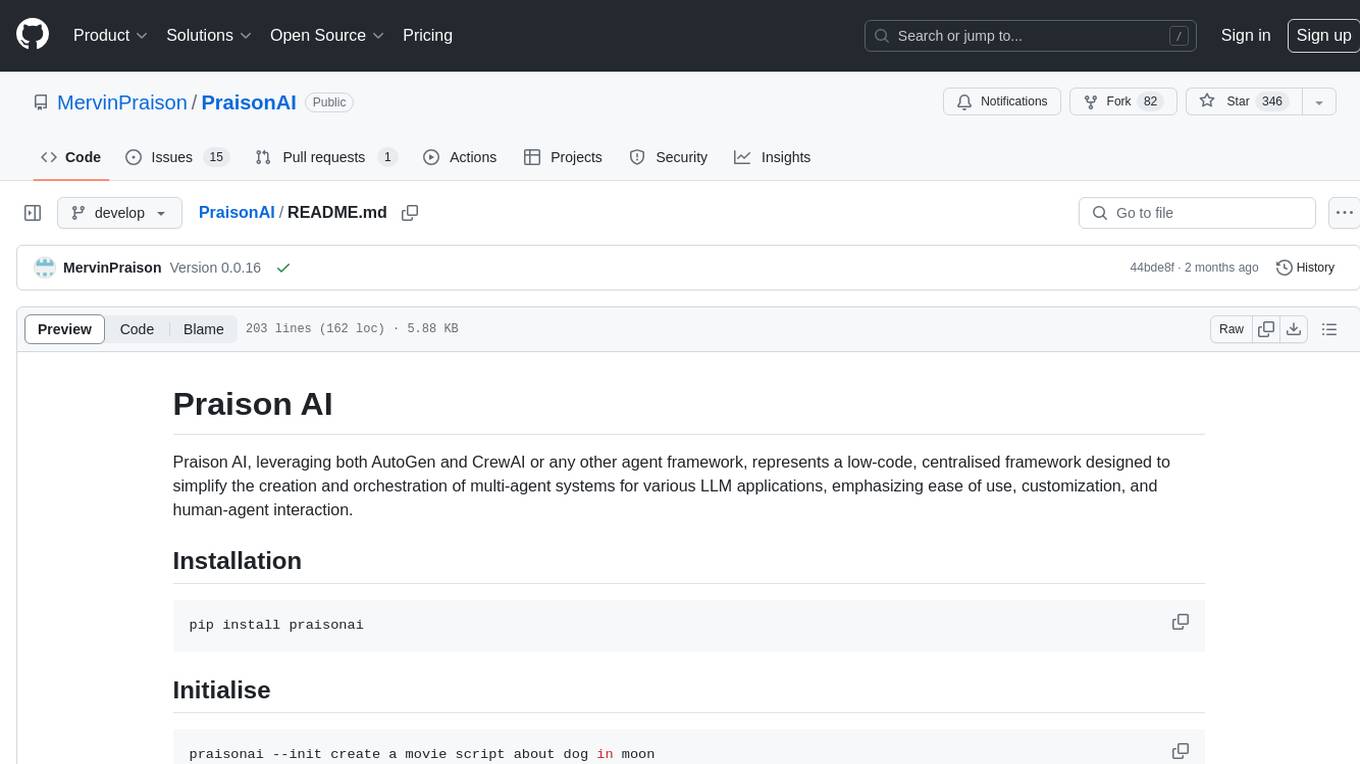

PraisonAI

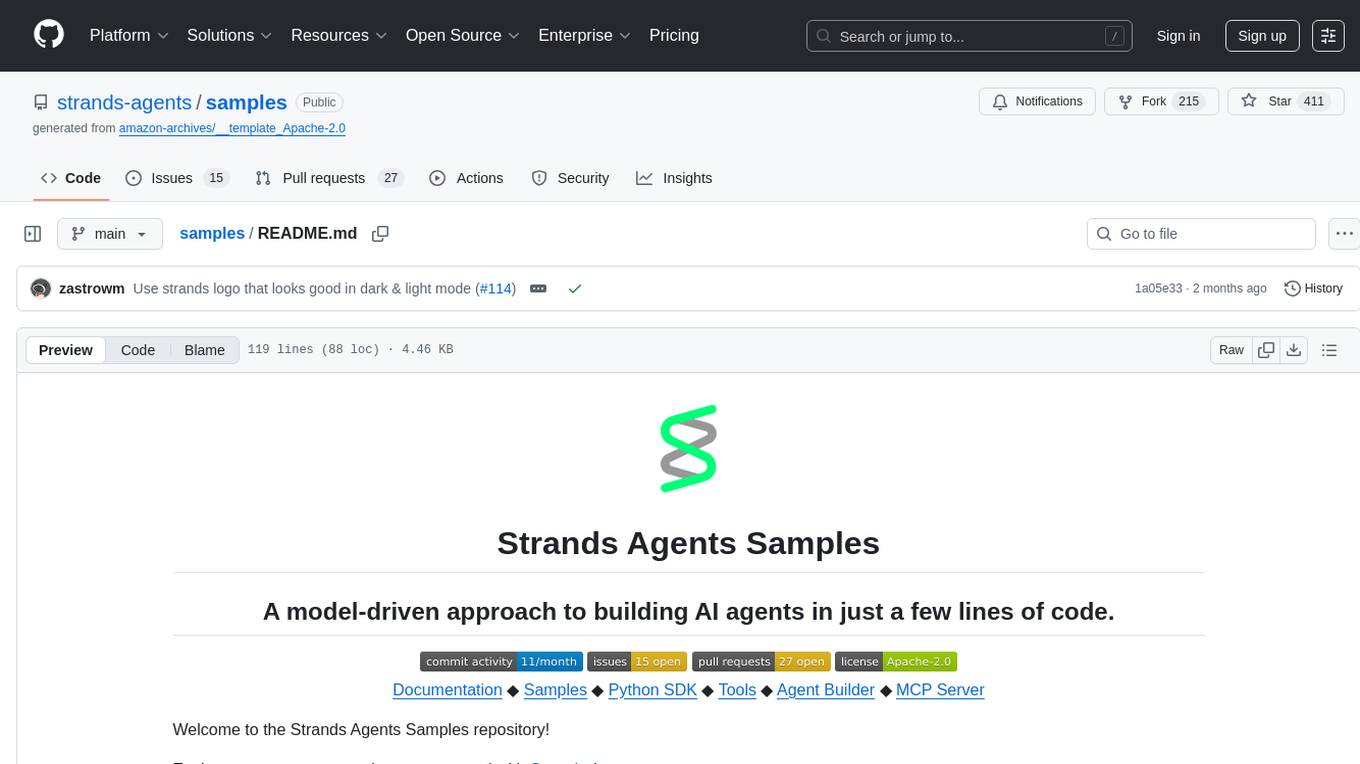

PraisonAI is a production-ready Multi AI Agents framework, designed to create AI Agents to automate and solve problems ranging from simple tasks to complex challenges. It provides a low-code solution to streamline the building and management of multi-agent LLM systems, emphasising simplicity, customisation, and effective human-agent collaboration.

Stars: 5593

Praison AI is a low-code, centralised framework that simplifies the creation and orchestration of multi-agent systems for various LLM applications. It emphasizes ease of use, customization, and human-agent interaction. The tool leverages AutoGen and CrewAI frameworks to facilitate the development of AI-generated scripts and movie concepts. Users can easily create, run, test, and deploy agents for scriptwriting and movie concept development. Praison AI also provides options for full automatic mode and integration with OpenAI models for enhanced AI capabilities.

README:

PraisonAI is a production-ready Multi-AI Agents framework with self-reflection, designed to create AI Agents to automate and solve problems ranging from simple tasks to complex challenges. It provides a low-code solution to streamline the building and management of multi-agent LLM systems, emphasising simplicity, customisation, and effective human-agent collaboration.

Quick Paths:

- 🆕 New here? → Quick Start (1 minute to first agent)

- 📦 Installing? → Installation

- 🐍 Python SDK? → Python Examples

- 🎯 CLI user? → CLI Quick Reference

- 🔧 Need config? → Configuration

- 🤝 Contributing? → Development

Getting Started

Python SDK

- 📘 Python Examples

- 1. Single Agent | 2. Multi Agents | 3. Planning Mode

- 4. Deep Research | 5. Query Rewriter | 6. Agent Memory

- 7. Rules & Instructions | 8. Auto-Generated Memories | 9. Agentic Workflows

- 10. Hooks | 11. Shadow Git Checkpoints | 12. Background Tasks

- 13. Policy Engine | 14. Thinking Budgets | 15. Output Styles

- 16. Context Compaction | 17. Field Names Reference | 18. Extended agents.yaml

- 19. MCP Protocol | 20. A2A Protocol

- 🛠️ Custom Tools

JavaScript SDK

CLI Reference

Configuration & Features

Architecture & Patterns

Data & Persistence

Learning & Community

PraisonAI Agents is the fastest AI agent framework for agent instantiation.

| Framework | Avg Time (μs) | Relative |

|---|---|---|

| PraisonAI | 3.77 | 1.00x (fastest) |

| OpenAI Agents SDK | 5.26 | 1.39x |

| Agno | 5.64 | 1.49x |

| PraisonAI (LiteLLM) | 7.56 | 2.00x |

| PydanticAI | 226.94 | 60.16x |

| LangGraph | 4,558.71 | 1,209x |
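To make the metric concrete, here is a minimal sketch of how an average instantiation time and the "Relative" column could be computed. It is not the project's `benchmarks/simple_benchmark.py`; `DummyAgent`, `avg_instantiation_us`, and `relative` are hypothetical names used only for illustration.

```python
import time


class DummyAgent:
    """Hypothetical stand-in for an agent class; not a PraisonAI type."""

    def __init__(self, instructions: str):
        self.instructions = instructions


def avg_instantiation_us(factory, runs: int = 10_000) -> float:
    """Return the mean wall-clock time per factory() call, in microseconds."""
    start = time.perf_counter()
    for _ in range(runs):
        factory()
    elapsed = time.perf_counter() - start
    return elapsed / runs * 1_000_000


def relative(times: dict) -> dict:
    """Express each average time as a multiple of the fastest entry."""
    fastest = min(times.values())
    return {name: t / fastest for name, t in times.items()}


if __name__ == "__main__":
    avg = avg_instantiation_us(lambda: DummyAgent("You are helpful"))
    print(f"DummyAgent: {avg:.2f} us per instantiation")
```

Averaging over many runs smooths out timer noise, which matters when a single instantiation takes only a few microseconds.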

Run benchmarks yourself

```bash
cd praisonai-agents
python benchmarks/simple_benchmark.py
```

Get started with PraisonAI in under 1 minute:

```bash
# Install
pip install praisonaiagents

# Set API key
export OPENAI_API_KEY=your_key_here

# Create a simple agent
python -c "from praisonaiagents import Agent; Agent(instructions='You are a helpful AI assistant').start('Write a haiku about AI')"
```

Next Steps: Single Agent Example | Multi Agents | CLI Auto Mode

Lightweight package dedicated to coding:

```bash
pip install praisonaiagents
```

For the full framework with CLI support:

```bash
pip install praisonai
```

```bash
npm install praisonai
```

| Variable | Required | Description |
|---|---|---|
| `OPENAI_API_KEY` | Yes* | OpenAI API key |
| `ANTHROPIC_API_KEY` | No | Anthropic Claude API key |
| `GOOGLE_API_KEY` | No | Google Gemini API key |
| `GROQ_API_KEY` | No | Groq API key |
| `OPENAI_BASE_URL` | No | Custom API endpoint (for Ollama, Groq, etc.) |

*At least one LLM provider API key is required.
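The "at least one provider key" requirement can be checked before any agent is started. The sketch below is a standalone pre-flight check, not PraisonAI's own logic; `configured_providers` and `require_provider` are hypothetical helpers, and only the variable names come from the table above.

```python
import os

# Variable names taken from the table above
PROVIDER_KEYS = [
    "OPENAI_API_KEY",
    "ANTHROPIC_API_KEY",
    "GOOGLE_API_KEY",
    "GROQ_API_KEY",
]


def configured_providers(env=None) -> list:
    """Return the provider key names that are set to a non-empty value."""
    env = os.environ if env is None else env
    return [key for key in PROVIDER_KEYS if env.get(key)]


def require_provider(env=None) -> list:
    """Raise early if no provider key is configured (hypothetical helper)."""
    found = configured_providers(env)
    if not found:
        raise RuntimeError(
            "No LLM provider key set; export one of: " + ", ".join(PROVIDER_KEYS)
        )
    return found


if __name__ == "__main__":
    print(configured_providers())
```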

```bash
# Set your API key
export OPENAI_API_KEY=your_key_here

# For Ollama (local models)
export OPENAI_BASE_URL=http://localhost:11434/v1

# For Groq
export OPENAI_API_KEY=your_groq_key
export OPENAI_BASE_URL=https://api.groq.com/openai/v1
```

🤖 Core Agents

| Feature | Code | Docs |

|---|---|---|

| Single Agent | Example | 📖 |

| Multi Agents | Example | 📖 |

| Auto Agents | Example | 📖 |

| Self Reflection AI Agents | Example | 📖 |

| Reasoning AI Agents | Example | 📖 |

| Multi Modal AI Agents | Example | 📖 |

🔄 Workflows

| Feature | Code | Docs |

|---|---|---|

| Simple Workflow | Example | 📖 |

| Workflow with Agents | Example | 📖 |

| Agentic Routing (route()) | Example | 📖 |

| Parallel Execution (parallel()) | Example | 📖 |

| Loop over List/CSV (loop()) | Example | 📖 |

| Evaluator-Optimizer (repeat()) | Example | 📖 |

| Conditional Steps | Example | 📖 |

| Workflow Branching | Example | 📖 |

| Workflow Early Stop | Example | 📖 |

| Workflow Checkpoints | Example | 📖 |

💻 Code & Development

| Feature | Code | Docs |

|---|---|---|

| Code Interpreter Agents | Example | 📖 |

| AI Code Editing Tools | Example | 📖 |

| External Agents (All) | Example | 📖 |

| Claude Code CLI | Example | 📖 |

| Gemini CLI | Example | 📖 |

| Codex CLI | Example | 📖 |

| Cursor CLI | Example | 📖 |

🧠 Memory & Knowledge

| Feature | Code | Docs |

|---|---|---|

| Memory (Short & Long Term) | Example | 📖 |

| File-Based Memory | Example | 📖 |

| Claude Memory Tool | Example | 📖 |

| Add Custom Knowledge | Example | 📖 |

| RAG Agents | Example | 📖 |

| Chat with PDF Agents | Example | 📖 |

| Data Readers (PDF, DOCX, etc.) | CLI | 📖 |

| Vector Store Selection | CLI | 📖 |

| Retrieval Strategies | CLI | 📖 |

| Rerankers | CLI | 📖 |

| Index Types (Vector/Keyword/Hybrid) | CLI | 📖 |

| Query Engines (Sub-Question, etc.) | CLI | 📖 |

🔬 Research & Intelligence

| Feature | Code | Docs |

|---|---|---|

| Deep Research Agents | Example | 📖 |

| Query Rewriter Agent | Example | 📖 |

| Native Web Search | Example | 📖 |

| Built-in Search Tools | Example | 📖 |

| Unified Web Search | Example | 📖 |

| Web Fetch (Anthropic) | Example | 📖 |

📋 Planning & Execution

| Feature | Code | Docs |

|---|---|---|

| Planning Mode | Example | 📖 |

| Planning Tools | Example | 📖 |

| Planning Reasoning | Example | 📖 |

| Prompt Chaining | Example | 📖 |

| Evaluator Optimiser | Example | 📖 |

| Orchestrator Workers | Example | 📖 |

👥 Specialized Agents

| Feature | Code | Docs |

|---|---|---|

| Data Analyst Agent | Example | 📖 |

| Finance Agent | Example | 📖 |

| Shopping Agent | Example | 📖 |

| Recommendation Agent | Example | 📖 |

| Wikipedia Agent | Example | 📖 |

| Programming Agent | Example | 📖 |

| Math Agents | Example | 📖 |

| Markdown Agent | Example | 📖 |

| Prompt Expander Agent | Example | 📖 |

🎨 Media & Multimodal

| Feature | Code | Docs |

|---|---|---|

| Image Generation Agent | Example | 📖 |

| Image to Text Agent | Example | 📖 |

| Video Agent | Example | 📖 |

| Camera Integration | Example | 📖 |

🔌 Protocols & Integration

| Feature | Code | Docs |

|---|---|---|

| MCP Transports | Example | 📖 |

| WebSocket MCP | Example | 📖 |

| MCP Security | Example | 📖 |

| MCP Resumability | Example | 📖 |

| MCP Config Management | Example | 📖 |

| LangChain Integrated Agents | Example | 📖 |

🛡️ Safety & Control

| Feature | Code | Docs |

|---|---|---|

| Guardrails | Example | 📖 |

| Human Approval | Example | 📖 |

| Rules & Instructions | Example | 📖 |

⚙️ Advanced Features

| Feature | Code | Docs |

|---|---|---|

| Async & Parallel Processing | Example | 📖 |

| Parallelisation | Example | 📖 |

| Repetitive Agents | Example | 📖 |

| Agent Handoffs | Example | 📖 |

| Stateful Agents | Example | 📖 |

| Autonomous Workflow | Example | 📖 |

| Structured Output Agents | Example | 📖 |

| Model Router | Example | 📖 |

| Prompt Caching | Example | 📖 |

| Fast Context | Example | 📖 |

🛠️ Tools & Configuration

| Feature | Code | Docs |

|---|---|---|

| 100+ Custom Tools | Example | 📖 |

| YAML Configuration | Example | 📖 |

| 100+ LLM Support | Example | 📖 |

| Callback Agents | Example | 📖 |

| Hooks | Example | 📖 |

| Middleware System | Example | 📖 |

| Configurable Model | Example | 📖 |

| Rate Limiter | Example | 📖 |

| Injected Tool State | Example | 📖 |

| Shadow Git Checkpoints | Example | 📖 |

| Background Tasks | Example | 📖 |

| Policy Engine | Example | 📖 |

| Thinking Budgets | Example | 📖 |

| Output Styles | Example | 📖 |

| Context Compaction | Example | 📖 |

📊 Monitoring & Management

| Feature | Code | Docs |

|---|---|---|

| Sessions Management | Example | 📖 |

| Auto-Save Sessions | Example | 📖 |

| History in Context | Example | 📖 |

| Telemetry | Example | 📖 |

| Project Docs (.praison/docs/) | Example | 📖 |

| AI Commit Messages | Example | 📖 |

| @Mentions in Prompts | Example | 📖 |

🖥️ CLI Features

| Feature | Code | Docs |

|---|---|---|

| Slash Commands | Example | 📖 |

| Autonomy Modes | Example | 📖 |

| Cost Tracking | Example | 📖 |

| Repository Map | Example | 📖 |

| Interactive TUI | Example | 📖 |

| Git Integration | Example | 📖 |

| Sandbox Execution | Example | 📖 |

| CLI Compare | Example | 📖 |

| Profile/Benchmark | Example | 📖 |

| Auto Mode | Example | 📖 |

| Init | Example | 📖 |

| File Input | Example | 📖 |

| Final Agent | Example | 📖 |

| Max Tokens | Example | 📖 |

🧪 Evaluation

| Feature | Code | Docs |

|---|---|---|

| Accuracy Evaluation | Example | 📖 |

| Performance Evaluation | Example | 📖 |

| Reliability Evaluation | Example | 📖 |

| Criteria Evaluation | Example | 📖 |

PraisonAI supports 100+ LLM providers through seamless integration:

View all 24 providers

| Provider | Example |

|---|---|

| OpenAI | Example |

| Anthropic | Example |

| Google Gemini | Example |

| Ollama | Example |

| Groq | Example |

| DeepSeek | Example |

| xAI Grok | Example |

| Mistral | Example |

| Cohere | Example |

| Perplexity | Example |

| Fireworks | Example |

| Together AI | Example |

| OpenRouter | Example |

| HuggingFace | Example |

| Azure OpenAI | Example |

| AWS Bedrock | Example |

| Google Vertex | Example |

| Databricks | Example |

| Cloudflare | Example |

| AI21 | Example |

| Replicate | Example |

| SageMaker | Example |

| Moonshot | Example |

| vLLM | Example |

Create an app.py file and add the code below:

```python
from praisonaiagents import Agent

agent = Agent(instructions="You are a helpful AI assistant")
agent.start("Write a movie script about a robot on Mars")
```

Run:

```bash
python app.py
```

Create an app.py file and add the code below:

```python
from praisonaiagents import Agent, Agents

research_agent = Agent(instructions="Research about AI")
summarise_agent = Agent(instructions="Summarise research agent's findings")

agents = Agents(agents=[research_agent, summarise_agent])
agents.start()
```

Run:

```bash
python app.py
```

Enable planning for any agent. The agent creates a plan, then executes it step by step:

```python
from praisonaiagents import Agent

def search_web(query: str) -> str:
    return f"Search results for: {query}"

agent = Agent(
    name="AI Assistant",
    instructions="Research and write about topics",
    planning=True,                # Enable planning mode
    planning_tools=[search_web],  # Tools for planning research
    planning_reasoning=True       # Chain-of-thought reasoning
)

result = agent.start("Research AI trends in 2025 and write a summary")
```

What happens:

- 📋 Agent creates a multi-step plan

- 🚀 Executes each step sequentially

- 📊 Shows progress with context passing

- ✅ Returns final result
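The plan-then-execute flow listed above can be sketched in plain Python. This illustrates the control flow only and assumes a fixed three-step plan; `make_plan` and `run_plan` are hypothetical names, and a real agent would obtain both the plan and each step's result from an LLM.

```python
from typing import Callable, List


def make_plan(goal: str) -> List[str]:
    """Hypothetical planner; a real agent would ask an LLM for these steps."""
    return [f"Research: {goal}", f"Outline: {goal}", f"Write: {goal}"]


def run_plan(goal: str, execute: Callable[[str, str], str]) -> str:
    """Run each step in order, passing the previous result along as context."""
    context = ""
    steps = make_plan(goal)
    for index, step in enumerate(steps, start=1):
        print(f"[{index}/{len(steps)}] {step}")  # progress reporting
        context = execute(step, context)
    return context  # the last step's output is the final result


if __name__ == "__main__":
    # Toy executor: appends the step text to the running context
    print(run_plan("AI trends", lambda step, ctx: (ctx + " | " + step).strip(" |")))
```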

Automated research with real-time streaming, web search, and citations using OpenAI or Gemini Deep Research APIs.

```python
from praisonaiagents import DeepResearchAgent

# OpenAI Deep Research
agent = DeepResearchAgent(
    model="o4-mini-deep-research",  # or "o3-deep-research"
    verbose=True
)

result = agent.research("What are the latest AI trends in 2025?")
print(result.report)
print(f"Citations: {len(result.citations)}")
```

```python
# Gemini Deep Research
from praisonaiagents import DeepResearchAgent

agent = DeepResearchAgent(
    model="deep-research-pro",  # Auto-detected as Gemini
    verbose=True
)

result = agent.research("Research quantum computing advances")
print(result.report)
```

Features:

- 🔍 Multi-provider support (OpenAI, Gemini, LiteLLM)

- 📡 Real-time streaming with reasoning summaries

- 📚 Structured citations with URLs

- 🛠️ Built-in tools: web search, code interpreter, MCP, file search

- 🔄 Automatic provider detection from model name

Transform user queries to improve RAG retrieval quality using multiple strategies.

```python
from praisonaiagents import QueryRewriterAgent, RewriteStrategy

agent = QueryRewriterAgent(model="gpt-4o-mini")

# Basic - expands abbreviations, adds context
result = agent.rewrite("AI trends")
print(result.primary_query)  # "What are the current trends in Artificial Intelligence?"

# HyDE - generates hypothetical document for semantic matching
result = agent.rewrite("What is quantum computing?", strategy=RewriteStrategy.HYDE)

# Step-back - generates broader context question
result = agent.rewrite("GPT-4 vs Claude 3?", strategy=RewriteStrategy.STEP_BACK)

# Sub-queries - decomposes complex questions
result = agent.rewrite("RAG setup and best embedding models?", strategy=RewriteStrategy.SUB_QUERIES)

# Contextual - resolves references using chat history
result = agent.rewrite("What about cost?", chat_history=[...])
```

Strategies:

- BASIC: Expand abbreviations, fix typos, add context

- HYDE: Generate hypothetical document for semantic matching

- STEP_BACK: Generate higher-level concept questions

- SUB_QUERIES: Decompose multi-part questions

- MULTI_QUERY: Generate multiple paraphrased versions

- CONTEXTUAL: Resolve references using conversation history

- AUTO: Automatically detect best strategy

Enable persistent memory for agents - works out of the box without any extra packages.

```python
from praisonaiagents import Agent
from praisonaiagents.memory import FileMemory

# Enable memory with a single parameter
agent = Agent(
    name="Personal Assistant",
    instructions="You are a helpful assistant that remembers user preferences.",
    memory=True,       # Enables file-based memory (no extra deps!)
    user_id="user123"  # Isolate memory per user
)

# Memory is automatically injected into conversations
result = agent.start("My name is John and I prefer Python")
# Agent will remember this for future conversations
```

Memory Types:

- Short-term: Rolling buffer of recent context (auto-expires)

- Long-term: Persistent important facts (sorted by importance)

- Entity: People, places, organizations with attributes

- Episodic: Date-based interaction history
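As a rough illustration of the first two memory types, the sketch below pairs a rolling short-term buffer with a long-term store sorted by importance. `ToyMemory` is a hypothetical class written for this example; it does not reflect how `FileMemory` is implemented or stored.

```python
from collections import deque


class ToyMemory:
    """Hypothetical illustration of short-term and long-term memory."""

    def __init__(self, short_term_size: int = 3):
        # Rolling buffer: the oldest entry is dropped once the buffer is full
        self.short_term = deque(maxlen=short_term_size)
        # Long-term facts stored as (importance, fact), most important first
        self.long_term = []

    def add_short(self, text: str) -> None:
        self.short_term.append(text)

    def add_long(self, fact: str, importance: float) -> None:
        self.long_term.append((importance, fact))
        self.long_term.sort(key=lambda item: item[0], reverse=True)

    def context(self, top_k: int = 2) -> str:
        """Build a prompt prefix from the top facts plus recent messages."""
        facts = [fact for _, fact in self.long_term[:top_k]]
        return "\n".join(facts + list(self.short_term))


if __name__ == "__main__":
    memory = ToyMemory(short_term_size=2)
    memory.add_long("User prefers Python", importance=0.9)
    for message in ["hello", "my name is John", "what can you do?"]:
        memory.add_short(message)
    print(memory.context())
```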

Advanced Features:

```python
from praisonaiagents.memory import FileMemory

memory = FileMemory(user_id="user123")

# Session Save/Resume
memory.save_session("project_session", conversation_history=[...])
memory.resume_session("project_session")

# Context Compression (agent is the Agent created in the previous example)
memory.compress(llm_func=lambda p: agent.chat(p), max_items=10)

# Checkpointing
memory.create_checkpoint("before_refactor", include_files=["main.py"])
memory.restore_checkpoint("before_refactor", restore_files=True)

# Slash Commands
memory.handle_command("/memory show")
memory.handle_command("/memory save my_session")
```

Storage Options:

| Option | Dependencies | Description |
|---|---|---|
| `memory=True` | None | File-based JSON storage (default) |
| `memory="file"` | None | Explicit file-based storage |
| `memory="sqlite"` | Built-in | SQLite with indexing |
| `memory="chromadb"` | chromadb | Vector/semantic search |

PraisonAI auto-discovers instruction files from your project root and git root:

| File | Description | Priority |
|---|---|---|
| `PRAISON.md` | PraisonAI native instructions | High |
| `PRAISON.local.md` | Local overrides (gitignored) | Higher |
| `CLAUDE.md` | Claude Code memory file | High |
| `CLAUDE.local.md` | Local overrides (gitignored) | Higher |
| `AGENTS.md` | OpenAI Codex CLI instructions | High |
| `GEMINI.md` | Gemini CLI memory file | High |
| `.cursorrules` | Cursor IDE rules | High |
| `.windsurfrules` | Windsurf IDE rules | High |
| `.claude/rules/*.md` | Claude Code modular rules | Medium |
| `.windsurf/rules/*.md` | Windsurf modular rules | Medium |
| `.cursor/rules/*.mdc` | Cursor modular rules | Medium |
| `.praison/rules/*.md` | Workspace rules | Medium |
| `~/.praison/rules/*.md` | Global rules | Low |

```python
from praisonaiagents import Agent

# Agent auto-discovers CLAUDE.md, AGENTS.md, GEMINI.md, etc.
agent = Agent(name="Assistant", instructions="You are helpful.")
# Rules are injected into system prompt automatically
```

@Import Syntax:

```markdown
# CLAUDE.md
See @README for project overview
See @docs/architecture.md for system design
@~/.praison/my-preferences.md
```

Rule File Format (with YAML frontmatter):

```markdown
---
description: Python coding guidelines
globs: ["**/*.py"]
activation: always  # always, glob, manual, ai_decision
---

# Guidelines
- Use type hints
- Follow PEP 8
```

```python
from praisonaiagents.memory import FileMemory, AutoMemory

memory = FileMemory(user_id="user123")
auto = AutoMemory(memory, enabled=True)

# Automatically extracts and stores memories from conversations
memories = auto.process_interaction(
    "My name is John and I prefer Python for backend work"
)
# Extracts: name="John", preference="Python for backend"
```

Create powerful multi-agent workflows with the Workflow class:

```python
from praisonaiagents import Agent, Workflow

# Create agents
researcher = Agent(
    name="Researcher",
    role="Research Analyst",
    goal="Research topics thoroughly",
    instructions="Provide concise, factual information."
)

writer = Agent(
    name="Writer",
    role="Content Writer",
    goal="Write engaging content",
    instructions="Write clear, engaging content based on research."
)

# Create workflow with agents as steps
workflow = Workflow(steps=[researcher, writer])

# Run workflow - agents process sequentially
result = workflow.start("What are the benefits of AI agents?")
print(result["output"])
```

Key Features:

- Agent-first - Pass `Agent` objects directly as workflow steps

- Pattern helpers - Use `route()`, `parallel()`, `loop()`, `repeat()`

- Planning mode - Enable with `planning=True`

- Callbacks - Monitor with `on_step_complete`, `on_workflow_complete`

- Async execution - Use `workflow.astart()` for async
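The evaluator-optimizer loop behind a helper such as `repeat()` can be sketched without the framework. The function below is a hypothetical, plain-Python rendering of "run a step until a condition holds or an iteration cap is reached"; it is not the library's implementation, and `repeat_until` is a name invented for this example.

```python
from typing import Callable, Tuple


def repeat_until(
    step: Callable[[str], str],
    until: Callable[[str], bool],
    initial: str,
    max_iterations: int = 3,
) -> Tuple[str, int]:
    """Apply step repeatedly; stop when until(result) is true or the cap is hit.

    Returns the last result and the number of iterations that were run.
    """
    result = initial
    for iteration in range(1, max_iterations + 1):
        result = step(result)
        if until(result):
            return result, iteration
    return result, max_iterations


if __name__ == "__main__":
    # Toy "optimizer": each pass appends a revision marker
    final, runs = repeat_until(
        step=lambda draft: draft + "!",
        until=lambda draft: draft.endswith("!!"),
        initial="draft",
    )
    print(final, runs)
```

The iteration cap matters because an evaluator that never approves would otherwise loop forever.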

```python
import asyncio

from praisonaiagents import Agent, Workflow
from praisonaiagents.workflows import route, parallel, loop, repeat

# 1. ROUTING - Classifier agent routes to specialized agents
classifier = Agent(name="Classifier", instructions="Respond with 'technical' or 'creative'")
tech_agent = Agent(name="TechExpert", role="Technical Expert")
creative_agent = Agent(name="Creative", role="Creative Writer")

workflow = Workflow(steps=[
    classifier,
    route({
        "technical": [tech_agent],
        "creative": [creative_agent]
    })
])

# 2. PARALLEL - Multiple agents work concurrently
market_agent = Agent(name="Market", role="Market Researcher")
competitor_agent = Agent(name="Competitor", role="Competitor Analyst")
aggregator = Agent(name="Aggregator", role="Synthesizer")

workflow = Workflow(steps=[
    parallel([market_agent, competitor_agent]),
    aggregator
])

# 3. LOOP - Agent processes each item
processor = Agent(name="Processor", role="Item Processor")
summarizer = Agent(name="Summarizer", role="Summarizer")

workflow = Workflow(
    steps=[loop(processor, over="items"), summarizer],
    variables={"items": ["AI", "ML", "NLP"]}
)

# 4. REPEAT - Evaluator-Optimizer pattern
generator = Agent(name="Generator", role="Content Generator")
evaluator = Agent(name="Evaluator", instructions="Say 'APPROVED' if good")

workflow = Workflow(steps=[
    generator,
    repeat(evaluator, until=lambda ctx: "approved" in ctx.previous_result.lower(), max_iterations=3)
])

# 5. CALLBACKS (researcher and writer are the agents from the previous example)
workflow = Workflow(
    steps=[researcher, writer],
    on_step_complete=lambda name, r: print(f"✅ {name} done")
)

# 6. WITH PLANNING & REASONING
workflow = Workflow(
    steps=[researcher, writer],
    planning=True,
    reasoning=True
)

# 7. ASYNC EXECUTION
result = asyncio.run(workflow.astart("input"))

# 8. STATUS TRACKING
workflow.status         # "not_started" | "running" | "completed"
workflow.step_statuses  # {"step1": "completed", "step2": "skipped"}
```

`.praison/workflows/research.yaml`:

```yaml
name: Research Workflow
description: Research and write content with all patterns

agents:
  researcher:
    role: Research Expert
    goal: Find accurate information
    tools: [tavily_search, web_scraper]
  writer:
    role: Content Writer
    goal: Write engaging content
  editor:
    role: Editor
    goal: Polish content

steps:
  # Sequential
  - agent: researcher
    action: Research {{topic}}
    output_variable: research_data

  # Routing
  - name: classifier
    action: Classify content type
    route:
      technical: [tech_handler]
      creative: [creative_handler]
      default: [general_handler]

  # Parallel
  - name: parallel_research
    parallel:
      - agent: researcher
        action: Research market
      - agent: researcher
        action: Research competitors

  # Loop
  - agent: writer
    action: Write about {{item}}
    loop_over: topics
    loop_var: item

  # Repeat (evaluator-optimizer)
  - agent: editor
    action: Review and improve
    repeat:
      until: "quality > 8"
      max_iterations: 3

  # Output to file
  - agent: writer
    action: Write final report
    output_file: output/{{topic}}_report.md

variables:
  topic: AI trends
  topics: [ML, NLP, Vision]

workflow:
  planning: true
  planning_llm: gpt-4o
  memory_config:
    provider: chroma
    persist: true
```

```python
from praisonaiagents.workflows import YAMLWorkflowParser, WorkflowManager

# Option 1: Parse YAML string (yaml_content holds the YAML text shown above)
parser = YAMLWorkflowParser()
workflow = parser.parse_string(yaml_content)
result = workflow.start("Research AI trends")

# Option 2: Load from file with WorkflowManager
manager = WorkflowManager()
workflow = manager.load_yaml("research_workflow.yaml")
result = workflow.start("Research AI trends")

# Option 3: Execute YAML directly
result = manager.execute_yaml(
    "research_workflow.yaml",
    input_data="Research AI trends",
    variables={"topic": "Machine Learning"}
)
```

`workflow.yaml` (full feature reference):

```yaml
name: Complete Workflow
description: Demonstrates all workflow.yaml features
framework: praisonai  # praisonai, crewai, autogen
process: workflow     # sequential, hierarchical, workflow

workflow:
  planning: true
  planning_llm: gpt-4o
  reasoning: true
  verbose: true
  memory_config:
    provider: chroma
    persist: true

variables:
  topic: AI trends
  items: [ML, NLP, Vision]

agents:
  researcher:
    name: Researcher
    role: Research Analyst
    goal: Research topics thoroughly
    instructions: "Provide detailed research findings"
    backstory: "Expert researcher with 10 years experience"  # alias for instructions
    llm: gpt-4o-mini
    function_calling_llm: gpt-4o  # For tool calls
    max_rpm: 10                   # Rate limiting
    max_execution_time: 300       # Timeout in seconds
    reflect_llm: gpt-4o           # For self-reflection
    min_reflect: 1
    max_reflect: 3
    system_template: "You are a helpful assistant"
    tools:
      - tavily_search

  writer:
    name: Writer
    role: Content Writer
    goal: Write clear content
    instructions: "Write engaging content"

steps:
  - name: research_step
    agent: researcher
    action: "Research {{topic}}"
    expected_output: "Comprehensive research report"
    output_file: "output/research.md"
    create_directory: true

  - name: writing_step
    agent: writer
    action: "Write article based on research"
    context:  # Task dependencies
      - research_step
    output_json:  # Structured output
      type: object
      properties:
        title: { type: string }
        content: { type: string }

callbacks:
  on_workflow_start: log_start
  on_step_complete: log_step
  on_workflow_complete: log_complete
```

Intercept and modify agent behavior at various lifecycle points:

```python
from praisonaiagents.hooks import (
    HookRegistry, HookRunner, HookEvent, HookResult,
    BeforeToolInput
)

# Create a hook registry
registry = HookRegistry()

# Log all tool calls
@registry.on(HookEvent.BEFORE_TOOL)
def log_tools(event_data: BeforeToolInput) -> HookResult:
    print(f"Tool: {event_data.tool_name}")
    return HookResult.allow()

# Block dangerous operations
@registry.on(HookEvent.BEFORE_TOOL)
def security_check(event_data: BeforeToolInput) -> HookResult:
    if "delete" in event_data.tool_name.lower():
        return HookResult.deny("Delete operations blocked")
    return HookResult.allow()

# Execute hooks
runner = HookRunner(registry)
```

CLI Commands:

```bash
praisonai hooks list                 # List registered hooks
praisonai hooks test before_tool     # Test hooks for an event
praisonai hooks run "echo test"      # Run a command hook
praisonai hooks validate hooks.json  # Validate configuration
```

File-level undo/restore using shadow git:

```python
import asyncio

from praisonaiagents.checkpoints import CheckpointService

async def main():
    # The service methods are awaited, so they run inside a coroutine
    service = CheckpointService(workspace_dir="./my_project")
    await service.initialize()

    # Save checkpoint before changes
    result = await service.save("Before refactoring")

    # Make changes...

    # Restore if needed
    await service.restore(result.checkpoint.id)

    # View diff
    diff = await service.diff()

asyncio.run(main())
```

CLI Commands:

```bash
praisonai checkpoint save "Before changes"  # Save checkpoint
praisonai checkpoint list                   # List checkpoints
praisonai checkpoint diff                   # Show changes
praisonai checkpoint restore abc123         # Restore to checkpoint
```

Run agent tasks asynchronously without blocking:

```python
import asyncio
from praisonaiagents.background import BackgroundRunner, BackgroundConfig

async def main():
    config = BackgroundConfig(max_concurrent_tasks=3)
    runner = BackgroundRunner(config=config)

    async def my_task(name: str) -> str:
        await asyncio.sleep(2)
        return f"Task {name} completed"

    task = await runner.submit(my_task, args=("example",), name="my_task")
    await task.wait(timeout=10.0)
    print(task.result)

asyncio.run(main())
```

CLI Commands:

```bash
praisonai background list         # List running tasks
praisonai background status <id>  # Check task status
praisonai background cancel <id>  # Cancel a task
praisonai background clear        # Clear completed tasks
```
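A cap such as `max_concurrent_tasks=3` is commonly enforced with a semaphore. The sketch below shows that idea with the standard library only; it is an assumption about the general technique, not a description of how `BackgroundRunner` works internally, and `run_limited` and `work` are hypothetical names.

```python
import asyncio


async def run_limited(coros, max_concurrent: int = 3) -> list:
    """Await all coroutines, letting at most max_concurrent run at once."""
    semaphore = asyncio.Semaphore(max_concurrent)

    async def guarded(coro):
        async with semaphore:  # blocks while max_concurrent tasks are active
            return await coro

    # gather() returns results in the same order as the inputs
    return await asyncio.gather(*(guarded(c) for c in coros))


async def work(name: str, delay: float = 0.01) -> str:
    """Toy task standing in for an agent call."""
    await asyncio.sleep(delay)
    return f"Task {name} completed"


if __name__ == "__main__":
    results = asyncio.run(run_limited([work(str(i)) for i in range(5)]))
    print(results)
```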

Control what agents can and cannot do with policy-based execution:

```python
from praisonaiagents.policy import (
    PolicyEngine, Policy, PolicyRule, PolicyAction
)

engine = PolicyEngine()

policy = Policy(
    name="no_delete",
    rules=[
        PolicyRule(
            action=PolicyAction.DENY,
            resource="tool:delete_*",
            reason="Delete operations blocked"
        )
    ]
)
engine.add_policy(policy)

result = engine.check("tool:delete_file", {})
print(f"Allowed: {result.allowed}")
```

CLI Commands:

```bash
praisonai policy list               # List policies
praisonai policy check "tool:name"  # Check if allowed
praisonai policy init               # Create template
```
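Wildcard resources like `tool:delete_*` can be matched with shell-style patterns. The following sketch uses the standard library's `fnmatch` and a first-match-wins rule order; both choices are assumptions made for illustration, and `Rule` and `check` are hypothetical names rather than the PolicyEngine API.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import List, Tuple


@dataclass
class Rule:
    """Hypothetical rule: a glob pattern plus an allow/deny decision."""
    pattern: str
    allow: bool
    reason: str = ""


def check(resource: str, rules: List[Rule], default_allow: bool = True) -> Tuple[bool, str]:
    """Return (allowed, reason) from the first rule whose pattern matches."""
    for rule in rules:
        if fnmatchcase(resource, rule.pattern):
            return rule.allow, rule.reason
    return default_allow, ""


if __name__ == "__main__":
    rules = [Rule("tool:delete_*", allow=False, reason="Delete operations blocked")]
    print(check("tool:delete_file", rules))
    print(check("tool:read_file", rules))
```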

Configure token budgets for extended thinking:

```python
from praisonaiagents.thinking import ThinkingBudget, ThinkingTracker

# Use predefined levels
budget = ThinkingBudget.high()  # 16,000 tokens

# Track usage
tracker = ThinkingTracker()
session = tracker.start_session(budget_tokens=16000)
tracker.end_session(session, tokens_used=12000)

summary = tracker.get_summary()
print(f"Utilization: {summary['average_utilization']:.1%}")
```

CLI Commands:

```bash
praisonai thinking status    # Show current budget
praisonai thinking set high  # Set budget level
praisonai thinking stats     # Show usage statistics
```
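The utilization figure is simply tokens used divided by the budget. A worked version of that arithmetic, using the 12,000 of 16,000 tokens from the example above, might look like this; `utilization` and `average_utilization` are hypothetical helpers, not part of `ThinkingTracker`.

```python
from typing import List, Tuple


def utilization(tokens_used: int, budget_tokens: int) -> float:
    """Fraction of the thinking budget that was consumed."""
    if budget_tokens <= 0:
        raise ValueError("budget_tokens must be positive")
    return tokens_used / budget_tokens


def average_utilization(sessions: List[Tuple[int, int]]) -> float:
    """Mean utilization over (tokens_used, budget_tokens) pairs."""
    if not sessions:
        return 0.0
    return sum(utilization(used, budget) for used, budget in sessions) / len(sessions)


if __name__ == "__main__":
    print(f"Utilization: {utilization(12000, 16000):.1%}")  # prints "Utilization: 75.0%"
```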

Configure how agents format their responses:

```python
from praisonaiagents.output import OutputStyle, OutputFormatter

# Use preset styles
style = OutputStyle.concise()
formatter = OutputFormatter(style)

# Format output
text = "# Hello\n\nThis is **bold** text."
plain = formatter.format(text)
print(plain)
```

CLI Commands:

```bash
praisonai output status       # Show current style
praisonai output set concise  # Set output style
```

Automatically manage context window size:

```python
from praisonaiagents.compaction import (
    ContextCompactor, CompactionStrategy
)

compactor = ContextCompactor(
    max_tokens=4000,
    strategy=CompactionStrategy.SLIDING,
    preserve_recent=3
)

messages = [...]  # Your conversation history
compacted, result = compactor.compact(messages)
print(f"Compression: {result.compression_ratio:.1%}")
```

CLI Commands:

```bash
praisonai compaction status       # Show settings
praisonai compaction set sliding  # Set strategy
praisonai compaction stats        # Show statistics
```
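A sliding-window strategy can be approximated as "drop the oldest messages until the history fits, but always keep the most recent few". The sketch below assumes a crude word-count token estimate and is not the `ContextCompactor` implementation; `count_tokens` and `compact_sliding` are hypothetical names.

```python
from typing import Dict, List


def count_tokens(message: Dict[str, str]) -> int:
    """Crude estimate (assumption): one token per whitespace-separated word."""
    return len(message["content"].split())


def compact_sliding(
    messages: List[Dict[str, str]], max_tokens: int, preserve_recent: int = 3
) -> List[Dict[str, str]]:
    """Drop the oldest messages while over budget, keeping the newest ones."""
    kept = list(messages)
    while len(kept) > preserve_recent and sum(count_tokens(m) for m in kept) > max_tokens:
        kept.pop(0)  # discard the oldest message first
    return kept


if __name__ == "__main__":
    history = [
        {"role": "user", "content": "first question about setup"},
        {"role": "assistant", "content": "a long answer " * 5},
        {"role": "user", "content": "latest question"},
    ]
    print(compact_sliding(history, max_tokens=10, preserve_recent=1))
```

Note that `preserve_recent` takes precedence: the result can still exceed `max_tokens` if the protected messages alone are too long.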

PraisonAI accepts both old (agents.yaml) and new (workflow.yaml) field names. Use the canonical names for new projects:

| Canonical (Recommended) | Alias (Also Works) | Purpose |
|---|---|---|
| `agents` | `roles` | Define agent personas |
| `instructions` | `backstory` | Agent behavior/persona |
| `action` | `description` | What the step does |
| `steps` | `tasks` (nested) | Define work items |
| `name` | `topic` | Workflow identifier |
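One way to picture the alias handling is a level-aware key rename on an already-parsed config. The sketch below covers only the flat renames from the table (it does not convert nested `tasks` into `steps`), lets a canonical key win when both forms are present, and is not the project's parser; `rename_keys` and `to_canonical` are hypothetical names.

```python
# Alias -> canonical name, grouped by where the key appears (from the table above)
TOP_LEVEL = {"roles": "agents", "topic": "name"}
AGENT_LEVEL = {"backstory": "instructions"}
STEP_LEVEL = {"description": "action"}


def rename_keys(mapping: dict, aliases: dict) -> dict:
    """Rename alias keys; a canonical key overrides its alias if both exist."""
    renamed = {aliases[k]: v for k, v in mapping.items() if k in aliases}
    renamed.update({k: v for k, v in mapping.items() if k not in aliases})
    return renamed


def to_canonical(config: dict) -> dict:
    """Return a copy of config using canonical field names at each level."""
    config = rename_keys(config, TOP_LEVEL)
    config["agents"] = {
        name: rename_keys(agent, AGENT_LEVEL)
        for name, agent in config.get("agents", {}).items()
    }
    config["steps"] = [rename_keys(step, STEP_LEVEL) for step in config.get("steps", [])]
    return config


if __name__ == "__main__":
    legacy = {
        "topic": "My Workflow",
        "roles": {"my_agent": {"role": "Job Title", "backstory": "How to act"}},
        "steps": [{"agent": "my_agent", "description": "What to do"}],
    }
    print(to_canonical(legacy))
```

Renaming per level avoids touching unrelated keys, such as a `topic` entry inside `variables`.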

A-I-G-S Mnemonic - Easy to remember:

- Agents - Who does the work

- Instructions - How they behave

- Goal - What they achieve

- Steps - What they do

```yaml
# Quick Reference - Canonical Format
name: My Workflow              # Workflow name (not 'topic')
agents:                        # Define agents (not 'roles')
  my_agent:
    role: Job Title            # Agent's role
    goal: What to achieve      # Agent's goal
    instructions: How to act   # Agent's behavior (not 'backstory')

steps:                         # Define steps (not 'tasks')
  - agent: my_agent
    action: What to do         # Step action (not 'description')
```

Note: The parser accepts both old and new names. Run `praisonai workflow validate <file.yaml>` to see suggestions for canonical names.

Feature Parity: Both agents.yaml and workflow.yaml now support the same features:

- All workflow patterns (route, parallel, loop, repeat)

- All agent fields (function_calling_llm, max_rpm, max_execution_time, reflect_llm, templates)

- All step fields (expected_output, context, output_json, create_directory, callback)

- Framework support (praisonai, crewai, autogen)

- Process types (sequential, hierarchical, workflow)

You can use advanced workflow patterns directly in agents.yaml by setting process: workflow:

```yaml
# agents.yaml with workflow patterns
framework: praisonai
process: workflow  # Enables workflow mode
topic: "Research AI trends"

workflow:
  planning: true
  reasoning: true
  verbose: true

variables:
  topic: AI trends

agents:  # Canonical: use 'agents' instead of 'roles'
  classifier:
    role: Request Classifier
    instructions: "Classify requests into categories"  # Canonical: use 'instructions' instead of 'backstory'
    goal: Classify requests

  researcher:
    role: Research Analyst
    instructions: "Expert researcher"  # Canonical: use 'instructions' instead of 'backstory'
    goal: Research topics
    tools:
      - tavily_search

steps:
  # Sequential step
  - agent: classifier
    action: "Classify: {{topic}}"

  # Route pattern - decision-based branching
  - name: routing
    route:
      technical: [tech_expert]
      default: [researcher]

  # Parallel pattern - concurrent execution
  - name: parallel_research
    parallel:
      - agent: researcher
        action: "Research market trends"
      - agent: researcher
        action: "Research competitors"

  # Loop pattern - iterate over items
  - agent: researcher
    action: "Analyze {{item}}"
    loop:
      over: topics

  # Repeat pattern - evaluator-optimizer
  - agent: aggregator
    action: "Synthesize findings"
    repeat:
      until: "comprehensive"
      max_iterations: 3
```

Run with the same simple command:

```bash
praisonai agents.yaml
```

PraisonAI supports MCP Protocol Revision 2025-11-25 with multiple transports.

```python
from praisonaiagents import Agent, MCP

# stdio - Local NPX/Python servers
agent = Agent(tools=MCP("npx @modelcontextprotocol/server-memory"))

# Streamable HTTP - Production servers
agent = Agent(tools=MCP("https://api.example.com/mcp"))

# WebSocket - Real-time bidirectional
agent = Agent(tools=MCP("wss://api.example.com/mcp", auth_token="token"))

# SSE (Legacy) - Backward compatibility
agent = Agent(tools=MCP("http://localhost:8080/sse"))

# With environment variables
agent = Agent(
    tools=MCP(
        command="npx",
        args=["-y", "@modelcontextprotocol/server-brave-search"],
        env={"BRAVE_API_KEY": "your-key"}
    )
)

# Multiple MCP servers + regular functions
def my_custom_tool(query: str) -> str:
    """Custom tool function."""
    return f"Result: {query}"

agent = Agent(
    name="MultiToolAgent",
    instructions="Agent with multiple MCP servers",
    tools=[
        MCP("uvx mcp-server-time"),                      # Time tools
        MCP("npx @modelcontextprotocol/server-memory"),  # Memory tools
        my_custom_tool                                   # Regular function
    ]
)
```

Expose your Python functions as MCP tools for Claude Desktop, Cursor, and other MCP clients:

from praisonaiagents.mcp import ToolsMCPServer

def search_web(query: str, max_results: int = 5) -> dict:
    """Search the web for information."""
    return {"results": [f"Result for {query}"]}

def calculate(expression: str) -> dict:
    """Evaluate a mathematical expression."""
    return {"result": eval(expression)}

# Create and run MCP server
server = ToolsMCPServer(name="my-tools")
server.register_tools([search_web, calculate])
server.run()  # stdio for Claude Desktop
# server.run_sse(host="0.0.0.0", port=8080)  # SSE for web clients

| Feature | Description |
|---|---|
| Session Management | Automatic Mcp-Session-Id handling |
| Protocol Versioning | Mcp-Protocol-Version header |
| Resumability | SSE stream recovery via Last-Event-ID |
| Security | Origin validation, DNS rebinding prevention |
| WebSocket | Auto-reconnect with exponential backoff |
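The `calculate` tool in the server example passes its argument straight to `eval`, which will execute any Python an MCP client sends. A minimal sketch of a safer variant follows, assuming only plain arithmetic needs to be supported. `safe_calculate` and the operator whitelist are hypothetical names for illustration, not part of PraisonAI.

```python
import ast
import operator

# Whitelisted operators (an illustrative choice); any other syntax is rejected.
_BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}


def safe_calculate(expression: str) -> dict:
    """Evaluate a plain arithmetic expression without calling eval()."""

    def _eval(node: ast.AST):
        # Numeric literals only (bool is excluded even though it subclasses int).
        if isinstance(node, ast.Constant):
            if isinstance(node.value, (int, float)) and not isinstance(node.value, bool):
                return node.value
        elif isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
            return _BINARY_OPS[type(node.op)](_eval(node.left), _eval(node.right))
        elif isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"Unsupported expression: {expression!r}")

    tree = ast.parse(expression, mode="eval")
    return {"result": _eval(tree.body)}


print(safe_calculate("15*4"))
```

Because function calls, attribute access and names are not in the whitelist, input such as `__import__('os')` raises `ValueError` instead of running.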

PraisonAI supports the A2A Protocol for agent-to-agent communication, enabling your agents to be discovered and collaborate with other AI agents.

from praisonaiagents import Agent, A2A

from fastapi import FastAPI

# Create an agent with tools
def search_web(query: str) -> str:
    """Search the web for information."""
    return f"Results for: {query}"

agent = Agent(
    name="Research Assistant",
    role="Research Analyst",
    goal="Help users research topics",
    tools=[search_web]
)

# Expose as A2A Server
a2a = A2A(agent=agent, url="http://localhost:8000/a2a")

app = FastAPI()
app.include_router(a2a.get_router())

# Run: uvicorn app:app --reload
# Agent Card: GET /.well-known/agent.json
# Status: GET /status

| Feature | Description |
|---|---|
| Agent Card | JSON metadata for agent discovery |
| Skills Extraction | Auto-generate skills from tools |
| Task Management | Stateful task lifecycle |
| Streaming | SSE streaming for real-time updates |

Documentation: docs.praison.ai/a2a | Examples: examples/python/a2a
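To make "Skills Extraction" concrete, here is a rough sketch of how skill entries could be derived from plain tool functions and placed in an agent card. This is not PraisonAI's implementation: `build_agent_card` and the exact field names are assumptions for illustration, and the real card served at `/.well-known/agent.json` may contain different or additional fields.

```python
import inspect
import json


def search_web(query: str) -> str:
    """Search the web for information."""
    return f"Results for: {query}"


def build_agent_card(name: str, description: str, url: str, tools: list) -> dict:
    """Build an illustrative agent-card dict; one skill per tool function."""
    skills = []
    for fn in tools:
        doc = inspect.getdoc(fn) or ""
        skills.append({
            "id": fn.__name__,
            "name": fn.__name__.replace("_", " ").title(),
            # Use the first docstring line as the skill description.
            "description": doc.splitlines()[0] if doc else "",
        })
    return {"name": name, "description": description, "url": url, "skills": skills}


card = build_agent_card(
    name="Research Assistant",
    description="Help users research topics",
    url="http://localhost:8000/a2a",
    tools=[search_web],
)
print(json.dumps(card, indent=2))
```

Deriving skills from function names and docstrings is one reason to keep tool docstrings short and descriptive.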

PraisonAI provides a powerful CLI for no-code automation and quick prototyping.

| Category | Commands |
|---|---|
| Execution | praisonai, --auto, --interactive, --chat |
| Research | research, --query-rewrite, --deep-research |
| Planning | --planning, --planning-tools, --planning-reasoning |
| Workflows | workflow run, workflow list, workflow auto |
| Memory | memory show, memory add, memory search, memory clear |
| Knowledge | knowledge add, knowledge query, knowledge list |
| Sessions | session list, session resume, session delete |
| Tools | tools list, tools info, tools search |
| MCP | mcp list, mcp create, mcp enable |
| Development | commit, docs, checkpoint, hooks |
| Scheduling | schedule start, schedule list, schedule stop |

pip install praisonai

export OPENAI_API_KEY=xxxxxxxxxxxxxxxxxxxxxx

praisonai --auto create a movie script about Robots in Mars

# Start interactive terminal mode (inspired by Gemini CLI, Codex CLI, Claude Code)

praisonai --interactive

praisonai -i

# Features:

# - Streaming responses (no boxes)

# - Built-in tools: read_file, write_file, list_files, execute_command, internet_search

# - Slash commands: /help, /exit, /tools, /clear

# Chat mode - single prompt with interactive style (for testing/scripting)

# Use --chat (or --chat-mode for backward compatibility)

praisonai "list files in current folder" --chat

praisonai "search the web for AI news" --chat

praisonai "read README.md" --chat

# Start web-based Chainlit chat interface (requires praisonai[chat])

pip install "praisonai[chat]"

praisonai chat

# Opens browser at http://localhost:8084

# Rewrite query for better results (uses QueryRewriterAgent)

praisonai "AI trends" --query-rewrite

# Rewrite with search tools (agent decides when to search)

praisonai "latest developments" --query-rewrite --rewrite-tools "internet_search"

# Works with any prompt

praisonai "explain quantum computing" --query-rewrite -v

# Default: OpenAI (o4-mini-deep-research)

praisonai research "What are the latest AI trends in 2025?"

# Use Gemini

praisonai research --model deep-research-pro "Your research query"

# Rewrite query before research

praisonai research --query-rewrite "AI trends"

# Rewrite with search tools

praisonai research --query-rewrite --rewrite-tools "internet_search" "AI trends"

# Use custom tools from file (gathers context before deep research)

praisonai research --tools tools.py "Your research query"

praisonai research -t my_tools.py "Your research query"

# Use built-in tools by name (comma-separated)

praisonai research --tools "internet_search,wiki_search" "Your query"

praisonai research -t "yfinance,calculator_tools" "Stock analysis query"

# Save output to file (output/research/{query}.md)

praisonai research --save "Your research query"

praisonai research -s "Your research query"

# Combine options

praisonai research --query-rewrite --tools tools.py --save "Your research query"

# Verbose mode (show debug logs)

praisonai research -v "Your research query"

# Enable planning mode - agent creates a plan before execution

praisonai "Research AI trends and write a summary" --planning

# Planning with tools for research

praisonai "Analyze market trends" --planning --planning-tools tools.py

# Planning with chain-of-thought reasoning

praisonai "Complex analysis task" --planning --planning-reasoning

# Auto-approve plans without confirmation

praisonai "Task" --planning --auto-approve-plan

# Auto-approve ALL tool executions (use with caution!)

praisonai "run ls command" --trust

# Auto-approve tools up to a risk level (prompt for higher)

# Levels: low, medium, high, critical

praisonai "write to file" --approve-level high # Prompts for critical tools only

praisonai "task" --approve-level medium # Prompts for high and critical

# Default behavior (no flags): prompts for all dangerous tools

praisonai "run shell command" # Will prompt for approval

# Enable memory for agent (persists across sessions)

praisonai "My name is John" --memory

# Memory with user isolation

praisonai "Remember my preferences" --memory --user-id user123

# Memory management commands

praisonai memory show # Show memory statistics

praisonai memory add "User prefers Python" # Add to long-term memory

praisonai memory search "Python" # Search memories

praisonai memory clear # Clear short-term memory

praisonai memory clear all # Clear all memory

praisonai memory save my_session # Save session

praisonai memory resume my_session # Resume session

praisonai memory sessions # List saved sessions

praisonai memory checkpoint # Create checkpoint

praisonai memory restore <checkpoint_id> # Restore checkpoint

praisonai memory checkpoints # List checkpoints

praisonai memory help # Show all commands

# List all loaded rules (from PRAISON.md, CLAUDE.md, etc.)

praisonai rules list

# Show specific rule details

praisonai rules show <rule_name>

# Create a new rule

praisonai rules create my_rule "Always use type hints"

# Delete a rule

praisonai rules delete my_rule

# Show rules statistics

praisonai rules stats

# Include manual rules with prompts

praisonai "Task" --include-rules security,testing

# List available workflows

praisonai workflow list

# Execute a workflow with tools and save output

praisonai workflow run "Research Blog" --tools tavily --save

# Execute with variables

praisonai workflow run deploy --workflow-var environment=staging --workflow-var branch=main

# Execute with planning mode (AI creates sub-steps for each workflow step)

praisonai workflow run "Research Blog" --planning --verbose

# Execute with reasoning mode (chain-of-thought)

praisonai workflow run "Analysis" --reasoning --verbose

# Execute with memory enabled

praisonai workflow run "Research" --memory

# Show workflow details

praisonai workflow show deploy

# Create a new workflow template

praisonai workflow create my_workflow

# Inline workflow (no template file needed)

praisonai "What is AI?" --workflow "Research,Summarize" --save

# Inline workflow with step actions

praisonai "GPT-5" --workflow "Research:Search for info,Write:Write blog" --tools tavily

# Workflow CLI help

praisonai workflow help

# Run a YAML workflow file

praisonai workflow run research.yaml

# Run with variables

praisonai workflow run research.yaml --var topic="AI trends"

# Validate a YAML workflow

praisonai workflow validate research.yaml

# Create from template (simple, routing, parallel, loop, evaluator-optimizer)

praisonai workflow template routing --output my_workflow.yaml

# Auto-generate a sequential workflow from topic

praisonai workflow auto "Research AI trends"

# Generate parallel workflow (multiple agents work concurrently)

praisonai workflow auto "Research AI trends" --pattern parallel

# Generate routing workflow (classifier routes to specialists)

praisonai workflow auto "Build a chatbot" --pattern routing

# Generate orchestrator-workers workflow (central orchestrator delegates)

praisonai workflow auto "Comprehensive market analysis" --pattern orchestrator-workers

# Generate evaluator-optimizer workflow (iterative refinement)

praisonai workflow auto "Write and refine article" --pattern evaluator-optimizer

# Specify output file

praisonai workflow auto "Research AI" --pattern sequential --output my_workflow.yaml

Workflow CLI Options:

| Flag | Description |
|---|---|
| --workflow-var key=value | Set workflow variable (can be repeated) |
| --var key=value | Set variable for YAML workflows |
| --pattern <pattern> | Pattern for auto-generation (sequential, parallel, routing, loop, orchestrator-workers, evaluator-optimizer) |
| --output <file> | Output file for auto-generation |
| --llm <model> | LLM model (e.g., openai/gpt-4o-mini) |
| --tools <tools> | Tools (comma-separated, e.g., tavily) |
| --planning | Enable planning mode |
| --reasoning | Enable reasoning mode |
| --memory | Enable memory |
| --verbose | Enable verbose output |
| --save | Save output to file |
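The table notes that `--workflow-var key=value` can be repeated. As a rough illustration of how a repeatable key=value flag is typically collected into a dictionary, here is a standalone `argparse` sketch. It does not reproduce PraisonAI's own parser; `parse_key_value` and the `workflow-demo` program name are hypothetical.

```python
import argparse


def parse_key_value(pair: str) -> tuple[str, str]:
    """Split 'key=value' on the first '=', so values may contain '=' themselves."""
    key, sep, value = pair.partition("=")
    if not sep or not key:
        raise argparse.ArgumentTypeError(f"expected key=value, got {pair!r}")
    return key, value


parser = argparse.ArgumentParser(prog="workflow-demo")
parser.add_argument(
    "--workflow-var",
    action="append",       # each occurrence adds one (key, value) tuple
    type=parse_key_value,
    default=[],
    metavar="key=value",
)

args = parser.parse_args(
    ["--workflow-var", "environment=staging", "--workflow-var", "branch=main"]
)
variables = dict(args.workflow_var)
print(variables)  # {'environment': 'staging', 'branch': 'main'}
```

If the same key is passed twice, `dict()` keeps the last value, which matches the usual "later flags override earlier ones" convention.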

# List configured hooks

praisonai hooks list

# Show hooks statistics

praisonai hooks stats

# Create hooks.json template

praisonai hooks init

# Enable Claude Memory Tool (Anthropic models only)

praisonai "Research and remember findings" --claude-memory --llm anthropic/claude-sonnet-4-20250514

# Validate output with LLM guardrail

praisonai "Write code" --guardrail "Ensure code is secure and follows best practices"

# Combine with other flags

praisonai "Generate SQL query" --guardrail "No DROP or DELETE statements" --save

# Display token usage and cost metrics

praisonai "Analyze this data" --metrics

# Combine with other features

praisonai "Complex task" --metrics --planning

praisonai schedule start <name> "task" --interval hourly

praisonai schedule list

praisonai schedule logs <name> [--follow]

praisonai schedule stop <name>

praisonai schedule restart <name>

praisonai schedule delete <name>

praisonai schedule describe <name>

praisonai schedule save <name> [file.yaml]

praisonai schedule "task" --interval hourly # foreground mode

praisonai schedule agents.yaml # foreground mode

# Process images with vision-based tasks

praisonai "Describe this image" --image path/to/image.png

# Analyze image content

praisonai "What objects are in this photo?" --image photo.jpg --llm openai/gpt-4o

# Enable usage monitoring and analytics

praisonai "Task" --telemetry

# Combine with metrics for full observability

praisonai "Complex analysis" --telemetry --metrics

# Use MCP server tools

praisonai "Search files" --mcp "npx -y @modelcontextprotocol/server-filesystem ."

# MCP with environment variables

praisonai "Search web" --mcp "npx -y @modelcontextprotocol/server-brave-search" --mcp-env "BRAVE_API_KEY=your_key"

# Multiple MCP options

praisonai "Task" --mcp "npx server" --mcp-env "KEY1=value1,KEY2=value2"

# Search codebase for relevant context

praisonai "Find authentication code" --fast-context ./src

# Add code context to any task

praisonai "Explain this function" --fast-context /path/to/project

# Add documents to knowledge base

praisonai knowledge add document.pdf

praisonai knowledge add ./docs/

# Search knowledge base

praisonai knowledge search "API authentication"

# List indexed documents

praisonai knowledge list

# Clear knowledge base

praisonai knowledge clear

# Show knowledge base info

praisonai knowledge info

# Show all commands

praisonai knowledge help

# List all saved sessions

praisonai session list

# Show session details

praisonai session show my-project

# Resume a session (load into memory)

praisonai session resume my-project

# Delete a session

praisonai session delete my-project

# Auto-save session after each run

praisonai "Analyze this code" --auto-save my-project

# Load history from last N sessions into context

praisonai "Continue our discussion" --history 5

from praisonaiagents import Agent

# Auto-save session after each run
agent = Agent(
    name="Assistant",
    memory=True,
    auto_save="my-project"
)

# Load history from past sessions via context management
agent = Agent(
    name="Assistant",
    memory=True,
    context=True,  # Enable context management for history
)

from praisonaiagents.memory.workflows import WorkflowManager

manager = WorkflowManager()

# Save checkpoint after each step

result = manager.execute("deploy", checkpoint="deploy-v1")

# Resume from checkpoint

result = manager.execute("deploy", resume="deploy-v1")

# List/delete checkpoints

manager.list_checkpoints()

manager.delete_checkpoint("deploy-v1")

praisonai tools list

praisonai tools info internet_search

praisonai tools search "web"

praisonai tools doctor

praisonai tools resolve shell_tool

praisonai tools discover

praisonai tools show-sources

praisonai tools show-sources --template ai-video-editor

| Command | Example | Docs |
|---|---|---|
| tools list | example | docs |
| tools resolve | example | docs |
| tools discover | example | docs |
| tools show-sources | example | docs |

# Enable agent-to-agent task delegation

praisonai "Research and write article" --handoff "researcher,writer,editor"

# Complex multi-agent workflow

praisonai "Analyze data and create report" --handoff "analyst,visualizer,writer"

# Enable automatic memory extraction

praisonai "Learn about user preferences" --auto-memory

# Combine with user isolation

praisonai "Remember my settings" --auto-memory --user-id user123

# Generate todo list from task

praisonai "Plan the project" --todo

# Add a todo item

praisonai todo add "Implement feature X"

# List all todos

praisonai todo list

# Complete a todo

praisonai todo complete 1

# Delete a todo

praisonai todo delete 1

# Clear all todos

praisonai todo clear

# Show all commands

praisonai todo help

# Auto-select best model based on task complexity

praisonai "Simple question" --router

# Specify preferred provider

praisonai "Complex analysis" --router --router-provider anthropic

# Router automatically selects:

# - Simple tasks → gpt-4o-mini, claude-3-haiku

# - Complex tasks → gpt-4-turbo, claude-3-opus

# Create workflow with model routing template

praisonai workflow create --template model-routing --output my_workflow.yaml

Custom models can be configured in agents.yaml. See Model Router Docs for details.

# Enable visual workflow tracking

praisonai agents.yaml --flow-display

# Combine with other features

praisonai "Multi-step task" --planning --flow-display

# List all project docs

praisonai docs list

# Create a new doc

praisonai docs create project-overview "This project is a Python web app..."

# Show a specific doc

praisonai docs show project-overview

# Delete a doc

praisonai docs delete old-doc

# Show all commands

praisonai docs help

# List all MCP configurations

praisonai mcp list

# Create a new MCP config

praisonai mcp create filesystem npx -y @modelcontextprotocol/server-filesystem .

# Show a specific config

praisonai mcp show filesystem

# Enable/disable a config

praisonai mcp enable filesystem

praisonai mcp disable filesystem

# Delete a config

praisonai mcp delete filesystem

# Show all commands

praisonai mcp help

# Full auto mode: stage all, security check, commit, and push

praisonai commit -a

# Interactive mode (requires git add first)

praisonai commit

# Interactive with auto-push

praisonai commit --push

# Skip security check (not recommended)

praisonai commit -a --no-verify

Features:

- 🤖 AI-generated conventional commit messages

- 🔒 Built-in security scanning (API keys, passwords, secrets, sensitive files)

- 📦 Auto-staging with -a flag

- 🚀 Auto-push in full auto mode

- ✏️ Edit message before commit in interactive mode

Security Detection:

- API keys, secrets, tokens (AWS, GitHub, GitLab, Slack)

- Passwords and private keys

- Sensitive files (.env, id_rsa, .pem, .key, etc.)
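To illustrate the kind of check a pre-commit security scan performs, the sketch below matches staged text against a few regular expressions and flags sensitive file names. It is a simplified illustration, not PraisonAI's scanner: the patterns, `scan_text` and `is_sensitive_file` are assumptions chosen to mirror the categories listed above.

```python
import re
from pathlib import PurePath

# Illustrative patterns only; a production scanner would cover far more cases.
SECRET_PATTERNS = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "GitHub personal access token": re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),
    "private key header": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "hard-coded password": re.compile(r"(?i)\bpassword\s*[:=]\s*['\"][^'\"]+['\"]"),
}
SENSITIVE_NAMES = {".env", "id_rsa"}
SENSITIVE_SUFFIXES = {".pem", ".key"}


def scan_text(text: str) -> list[str]:
    """Return the labels of every pattern that matches the given text."""
    return [label for label, pattern in SECRET_PATTERNS.items() if pattern.search(text)]


def is_sensitive_file(path: str) -> bool:
    """Flag files whose name or extension suggests they hold secrets."""
    p = PurePath(path)
    return p.name in SENSITIVE_NAMES or p.suffix in SENSITIVE_SUFFIXES


# AKIAIOSFODNN7EXAMPLE is the placeholder key used in AWS documentation.
print(scan_text('aws_key = "AKIAIOSFODNN7EXAMPLE"'))
print(is_sensitive_file("certs/server.pem"))
```

A scan like this is a heuristic: it can miss secrets in unfamiliar formats and can flag harmless strings, which is why the interactive mode lets you review before committing.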

# Start API server for agents defined in YAML

praisonai serve agents.yaml

# With custom port and host

praisonai serve agents.yaml --port 8005 --host 0.0.0.0

# Alternative flag style

praisonai agents.yaml --serve

# The server provides:

# POST /agents - Run all agents sequentially

# POST /agents/{name} - Run specific agent (e.g., /agents/researcher)

# GET /agents/list - List available agents

# Export workflow to n8n and open in browser

praisonai agents.yaml --n8n

# With custom n8n URL

praisonai agents.yaml --n8n --n8n-url http://localhost:5678

# Set N8N_API_KEY for auto-import

export N8N_API_KEY="your-api-key"

praisonai agents.yaml --n8n

Use external AI coding CLI tools (Claude Code, Gemini CLI, Codex CLI, Cursor CLI) as agent tools:

# Use Claude Code for coding tasks

praisonai "Refactor the auth module" --external-agent claude

# Use Gemini CLI for code analysis

praisonai "Analyze codebase architecture" --external-agent gemini

# Use OpenAI Codex CLI

praisonai "Fix all bugs in src/" --external-agent codex

# Use Cursor CLI

praisonai "Add comprehensive tests" --external-agent cursor

Python API:

from praisonai.integrations import (
    ClaudeCodeIntegration,
    GeminiCLIIntegration,
    CodexCLIIntegration,
    CursorCLIIntegration
)

# Create integration
claude = ClaudeCodeIntegration(workspace="/project")

# Execute a coding task (await must run inside an async function)
result = await claude.execute("Refactor the auth module")

# Use as agent tool
from praisonai import Agent

tool = claude.as_tool()
agent = Agent(tools=[tool])

Environment Variables:

export ANTHROPIC_API_KEY=your-key # Claude Code

export GEMINI_API_KEY=your-key # Gemini CLI

export OPENAI_API_KEY=your-key # Codex CLI

export CURSOR_API_KEY=your-key # Cursor CLI

See External Agents Documentation for more details.

# Include file content in prompt

praisonai "@file:src/main.py explain this code"

# Include project doc

praisonai "@doc:project-overview help me add a feature"

# Search the web

praisonai "@web:python best practices give me tips"

# Fetch URL content

praisonai "@url:https://docs.python.org summarize this"

# Combine multiple mentions

praisonai "@file:main.py @doc:coding-standards review this code"

Expand short prompts into detailed, actionable prompts:

# Expand a short prompt into detailed prompt

praisonai "write a movie script in 3 lines" --expand-prompt

# With verbose output

praisonai "blog about AI" --expand-prompt -v

# With tools for context gathering

praisonai "latest AI trends" --expand-prompt --expand-tools tools.py

# Combine with query rewrite

praisonai "AI news" --query-rewrite --expand-prompt

from praisonaiagents import PromptExpanderAgent, ExpandStrategy

# Basic usage

agent = PromptExpanderAgent()

result = agent.expand("write a movie script in 3 lines")

print(result.expanded_prompt)

# With specific strategy

result = agent.expand("blog about AI", strategy=ExpandStrategy.DETAILED)

# Available strategies: BASIC, DETAILED, STRUCTURED, CREATIVE, AUTO

Key Difference:

- --query-rewrite: Optimizes queries for search/retrieval (RAG)

- --expand-prompt: Expands prompts for detailed task execution

# Web Search - Get real-time information

praisonai "What are the latest AI news today?" --web-search --llm openai/gpt-4o-search-preview

# Web Fetch - Retrieve and analyze URL content (Anthropic only)

praisonai "Summarize https://docs.praison.ai" --web-fetch --llm anthropic/claude-sonnet-4-20250514

# Prompt Caching - Reduce costs for repeated prompts

praisonai "Analyze this document..." --prompt-caching --llm anthropic/claude-sonnet-4-20250514

from praisonaiagents import Agent

# Web Search
agent = Agent(
    instructions="You are a research assistant",
    llm="openai/gpt-4o-search-preview",
    web_search=True
)

# Web Fetch (Anthropic only)
agent = Agent(
    instructions="You are a content analyzer",
    llm="anthropic/claude-sonnet-4-20250514",
    web_fetch=True
)

# Prompt Caching
agent = Agent(
    instructions="You are an AI assistant..." * 50,  # Long system prompt
    llm="anthropic/claude-sonnet-4-20250514",
    prompt_caching=True
)

Supported Providers:

| Feature | Providers |

|---|---|

| Web Search | OpenAI, Gemini, Anthropic, xAI, Perplexity |

| Web Fetch | Anthropic |

| Prompt Caching | OpenAI (auto), Anthropic, Bedrock, Deepseek |

| Feature | Docs |

|---|---|

| 🔄 Query Rewrite - RAG optimization | 📖 |

| 🔬 Deep Research - Automated research | 📖 |

| 📋 Planning - Step-by-step execution | 📖 |

| 💾 Memory - Persistent agent memory | 📖 |

| 📜 Rules - Auto-discovered instructions | 📖 |

| 🔄 Workflow - Multi-step workflows | 📖 |

| 🪝 Hooks - Event-driven actions | 📖 |

| 🧠 Claude Memory - Anthropic memory tool | 📖 |

| 🛡️ Guardrail - Output validation | 📖 |

| 📊 Metrics - Token usage tracking | 📖 |

| 🖼️ Image - Vision processing | 📖 |

| 📡 Telemetry - Usage monitoring | 📖 |

| 🔌 MCP - Model Context Protocol | 📖 |

| ⚡ Fast Context - Codebase search | 📖 |

| 📚 Knowledge - RAG management | 📖 |

| 💬 Session - Conversation management | 📖 |

| 🔧 Tools - Tool discovery | 📖 |

| 🤝 Handoff - Agent delegation | 📖 |

| 🧠 Auto Memory - Memory extraction | 📖 |

| 📋 Todo - Task management | 📖 |

| 🎯 Router - Smart model selection | 📖 |

| 📈 Flow Display - Visual workflow | 📖 |

| ✨ Prompt Expansion - Detailed prompts | 📖 |

| 🌐 Web Search - Real-time search | 📖 |

| 📥 Web Fetch - URL content retrieval | 📖 |

| 💾 Prompt Caching - Cost reduction | 📖 |

| 📦 Template Catalog - Browse & discover templates | 📖 |

| Command | Description |
|---|---|
| praisonai templates browse | Open template catalog in browser |
| praisonai templates browse --print | Print catalog URL only |
| praisonai templates validate | Validate template YAML files |
| praisonai templates validate --source <dir> | Validate specific directory |
| praisonai templates validate --strict | Strict validation mode |
| praisonai templates validate --json | JSON output format |
| praisonai templates catalog build | Build catalog locally |
| praisonai templates catalog build --out <dir> | Build to specific directory |
| praisonai templates catalog sync | Sync template sources |
| praisonai templates catalog sync --source <name> | Sync specific source |

Examples: examples/catalog/ | Docs: Code | CLI

npm install praisonai

export OPENAI_API_KEY=xxxxxxxxxxxxxxxxxxxxxx

const { Agent } = require('praisonai');

const agent = new Agent({ instructions: 'You are a helpful AI assistant' });

agent.start('Write a movie script about a robot in Mars');

View architecture diagrams and workflow patterns

graph LR

%% Define the main flow

Start([▶ Start]) --> Agent1

Agent1 --> Process[⚙ Process]

Process --> Agent2

Agent2 --> Output([✓ Output])

Process -.-> Agent1

%% Define subgraphs for agents and their tasks

subgraph Agent1[ ]

Task1[📋 Task]

AgentIcon1[🤖 AI Agent]

Tools1[🔧 Tools]

Task1 --- AgentIcon1

AgentIcon1 --- Tools1

end

subgraph Agent2[ ]

Task2[📋 Task]

AgentIcon2[🤖 AI Agent]

Tools2[🔧 Tools]

Task2 --- AgentIcon2

AgentIcon2 --- Tools2

end

classDef input fill:#8B0000,stroke:#7C90A0,color:#fff

classDef process fill:#189AB4,stroke:#7C90A0,color:#fff

classDef tools fill:#2E8B57,stroke:#7C90A0,color:#fff

classDef transparent fill:none,stroke:none

class Start,Output,Task1,Task2 input

class Process,AgentIcon1,AgentIcon2 process

class Tools1,Tools2 tools

class Agent1,Agent2 transparent

Create AI agents that can use tools to interact with external systems and perform actions.

flowchart TB

subgraph Tools

direction TB

T3[Internet Search]

T1[Code Execution]

T2[Formatting]

end

Input[Input] ---> Agents

subgraph Agents

direction LR

A1[Agent 1]

A2[Agent 2]

A3[Agent 3]

end

Agents ---> Output[Output]

T3 --> A1

T1 --> A2

T2 --> A3

style Tools fill:#189AB4,color:#fff

style Agents fill:#8B0000,color:#fff

style Input fill:#8B0000,color:#fff

style Output fill:#8B0000,color:#fff

Create AI agents with memory capabilities for maintaining context and information across tasks.

flowchart TB

subgraph Memory

direction TB

STM[Short Term]

LTM[Long Term]

end

subgraph Store

direction TB

DB[(Vector DB)]

end

Input[Input] ---> Agents

subgraph Agents

direction LR

A1[Agent 1]

A2[Agent 2]

A3[Agent 3]

end

Agents ---> Output[Output]

Memory <--> Store

Store <--> A1

Store <--> A2

Store <--> A3

style Memory fill:#189AB4,color:#fff

style Store fill:#2E8B57,color:#fff

style Agents fill:#8B0000,color:#fff

style Input fill:#8B0000,color:#fff

style Output fill:#8B0000,color:#fff

The simplest form of task execution where tasks are performed one after another.

graph LR

Input[Input] --> A1

subgraph Agents

direction LR

A1[Agent 1] --> A2[Agent 2] --> A3[Agent 3]

end

A3 --> Output[Output]

classDef input fill:#8B0000,stroke:#7C90A0,color:#fff

classDef process fill:#189AB4,stroke:#7C90A0,color:#fff

classDef transparent fill:none,stroke:none

class Input,Output input

class A1,A2,A3 process

class Agents transparent

Uses a manager agent to coordinate task execution and agent assignments.

graph TB

Input[Input] --> Manager

subgraph Agents

Manager[Manager Agent]

subgraph Workers

direction LR

W1[Worker 1]

W2[Worker 2]

W3[Worker 3]

end

Manager --> W1

Manager --> W2

Manager --> W3

end

W1 --> Manager

W2 --> Manager

W3 --> Manager

Manager --> Output[Output]

classDef input fill:#8B0000,stroke:#7C90A0,color:#fff

classDef process fill:#189AB4,stroke:#7C90A0,color:#fff

classDef transparent fill:none,stroke:none

class Input,Output input

class Manager,W1,W2,W3 process

class Agents,Workers transparent

Advanced process type supporting complex task relationships and conditional execution.

graph LR

Input[Input] --> Start

subgraph Workflow

direction LR

Start[Start] --> C1{Condition}

C1 --> |Yes| A1[Agent 1]

C1 --> |No| A2[Agent 2]

A1 --> Join

A2 --> Join

Join --> A3[Agent 3]

end

A3 --> Output[Output]

classDef input fill:#8B0000,stroke:#7C90A0,color:#fff

classDef process fill:#189AB4,stroke:#7C90A0,color:#fff

classDef decision fill:#2E8B57,stroke:#7C90A0,color:#fff

classDef transparent fill:none,stroke:none

class Input,Output input

class Start,A1,A2,A3,Join process

class C1 decision

class Workflow transparent

Create AI agents that can dynamically route tasks to specialized LLM instances.

flowchart LR

In[In] --> Router[LLM Call Router]

Router --> LLM1[LLM Call 1]

Router --> LLM2[LLM Call 2]

Router --> LLM3[LLM Call 3]

LLM1 --> Out[Out]

LLM2 --> Out

LLM3 --> Out

style In fill:#8B0000,color:#fff

style Router fill:#2E8B57,color:#fff

style LLM1 fill:#2E8B57,color:#fff

style LLM2 fill:#2E8B57,color:#fff

style LLM3 fill:#2E8B57,color:#fff

style Out fill:#8B0000,color:#fff

Create AI agents that orchestrate and distribute tasks among specialized workers.

flowchart LR

In[In] --> Router[LLM Call Router]

Router --> LLM1[LLM Call 1]

Router --> LLM2[LLM Call 2]

Router --> LLM3[LLM Call 3]

LLM1 --> Synthesizer[Synthesizer]

LLM2 --> Synthesizer

LLM3 --> Synthesizer

Synthesizer --> Out[Out]

style In fill:#8B0000,color:#fff

style Router fill:#2E8B57,color:#fff

style LLM1 fill:#2E8B57,color:#fff

style LLM2 fill:#2E8B57,color:#fff

style LLM3 fill:#2E8B57,color:#fff

style Synthesizer fill:#2E8B57,color:#fff

style Out fill:#8B0000,color:#fff

Create AI agents that can autonomously monitor, act, and adapt based on environment feedback.

flowchart LR

Human[Human] <--> LLM[LLM Call]

LLM -->|ACTION| Environment[Environment]

Environment -->|FEEDBACK| LLM

LLM --> Stop[Stop]

style Human fill:#8B0000,color:#fff

style LLM fill:#2E8B57,color:#fff

style Environment fill:#8B0000,color:#fff

style Stop fill:#333,color:#fff

Create AI agents that can execute tasks in parallel for improved performance.

flowchart LR

In[In] --> LLM2[LLM Call 2]

In --> LLM1[LLM Call 1]

In --> LLM3[LLM Call 3]

LLM1 --> Aggregator[Aggregator]

LLM2 --> Aggregator

LLM3 --> Aggregator

Aggregator --> Out[Out]

style In fill:#8B0000,color:#fff

style LLM1 fill:#2E8B57,color:#fff

style LLM2 fill:#2E8B57,color:#fff

style LLM3 fill:#2E8B57,color:#fff

style Aggregator fill:#fff,color:#000

style Out fill:#8B0000,color:#fff

Create AI agents with sequential prompt chaining for complex workflows.

flowchart LR

In[In] --> LLM1[LLM Call 1] --> Gate{Gate}

Gate -->|Pass| LLM2[LLM Call 2] -->|Output 2| LLM3[LLM Call 3] --> Out[Out]

Gate -->|Fail| Exit[Exit]

style In fill:#8B0000,color:#fff

style LLM1 fill:#2E8B57,color:#fff

style LLM2 fill:#2E8B57,color:#fff

style LLM3 fill:#2E8B57,color:#fff

style Out fill:#8B0000,color:#fff

style Exit fill:#8B0000,color:#fff

Create AI agents that can generate and optimize solutions through iterative feedback.

flowchart LR

In[In] --> Generator[LLM Call Generator]

Generator -->|SOLUTION| Evaluator[LLM Call Evaluator] -->|ACCEPTED| Out[Out]

Evaluator -->|REJECTED + FEEDBACK| Generator

style In fill:#8B0000,color:#fff

style Generator fill:#2E8B57,color:#fff

style Evaluator fill:#2E8B57,color:#fff

style Out fill:#8B0000,color:#fff

Create AI agents that can efficiently handle repetitive tasks through automated loops.

flowchart LR

In[Input] --> LoopAgent[("Looping Agent")]

LoopAgent --> Task[Task]

Task --> |Next iteration| LoopAgent

Task --> |Done| Out[Output]

style In fill:#8B0000,color:#fff

style LoopAgent fill:#2E8B57,color:#fff,shape:circle

style Task fill:#2E8B57,color:#fff

style Out fill:#8B0000,color:#fff

export OPENAI_BASE_URL=http://localhost:11434/v1

Replace xxxx with your Groq API key:

export OPENAI_API_KEY=xxxxxxxxxxx

export OPENAI_BASE_URL=https://api.groq.com/openai/v1

PraisonAI supports 100+ LLM models from various providers. Visit our models documentation for the complete list.

Create an agents.yaml file and add the code below:

framework: praisonai
topic: Artificial Intelligence
agents:  # Canonical: use 'agents' instead of 'roles'
  screenwriter:
    instructions: "Skilled in crafting scripts with engaging dialogue about {topic}."  # Canonical: use 'instructions' instead of 'backstory'
    goal: Create scripts from concepts.
    role: Screenwriter
    tasks:
      scriptwriting_task:
        description: "Develop scripts with compelling characters and dialogue about {topic}."
        expected_output: "Complete script ready for production."

To run the playbook:

praisonai agents.yaml

PraisonAI supports multiple ways to create and integrate custom tools (plugins) into your agents.

from praisonaiagents import Agent, tool

@tool
def search(query: str) -> str:
    """Search the web for information."""
    return f"Results for: {query}"

@tool
def calculate(expression: str) -> float:
    """Evaluate a math expression."""
    return eval(expression)

agent = Agent(
    instructions="You are a helpful assistant",
    tools=[search, calculate]
)

agent.start("Search for AI news and calculate 15*4")

from praisonaiagents import Agent, BaseTool

class WeatherTool(BaseTool):
    name = "weather"
    description = "Get current weather for a location"

    def run(self, location: str) -> str:
        return f"Weather in {location}: 72°F, Sunny"

agent = Agent(
    instructions="You are a weather assistant",
    tools=[WeatherTool()]
)

agent.start("What's the weather in Paris?")

# pyproject.toml
[project]
name = "my-praisonai-tools"
version = "1.0.0"
dependencies = ["praisonaiagents"]

[project.entry-points."praisonaiagents.tools"]
my_tool = "my_package:MyTool"

# my_package/__init__.py
from praisonaiagents import BaseTool

class MyTool(BaseTool):
    name = "my_tool"
    description = "My custom tool"

    def run(self, param: str) -> str:
        return f"Result: {param}"

After pip install, tools are auto-discovered:

agent = Agent(tools=["my_tool"])  # Works automatically!

PraisonAI provides zero-dependency persistent memory for agents. For detailed examples, see section 6. Agent Memory in the Python Code Examples.

PraisonAI provides a complete knowledge stack for building RAG applications with multiple vector stores, retrieval strategies, rerankers, and query modes.

```python
from praisonaiagents import Agent
from praisonaiagents.rag.models import RetrievalStrategy

# Agent with RAG - simplest approach
agent = Agent(
    name="Research Assistant",
    knowledge=["docs/manual.pdf", "data/faq.txt"],
    knowledge_config={"vector_store": {"provider": "chroma"}},
    rag_config={
        "include_citations": True,
        "retrieval_strategy": RetrievalStrategy.HYBRID, # Dense + BM25
        "rerank": True,
    }
)

# Query with citations
result = agent.rag_query("How do I authenticate?")
print(result.answer)
for citation in result.citations:
    print(f" [{citation.id}] {citation.source}")
```

| Command | Description |
|---|---|
| `praisonai rag query "<question>"` | One-shot question answering with citations |
| `praisonai rag chat` | Interactive RAG chat session |
| `praisonai rag serve` | Start RAG as a microservice API |
| `praisonai rag eval <test_file>` | Evaluate RAG retrieval quality |

```bash
# Query with hybrid retrieval (dense + BM25 keyword search)
praisonai rag query "What are the key findings?" --hybrid

# Query with hybrid + reranking for best quality
praisonai rag query "Summarize conclusions" --hybrid --rerank

# Interactive chat with hybrid retrieval
praisonai rag chat --collection research --hybrid --rerank

# Start API server with OpenAI-compatible endpoint
praisonai rag serve --hybrid --rerank --openai-compat --port 8080

# Query with profiling
praisonai rag query "Summary?" --profile --profile-out ./profile.json
```

| Command | Description |
|---|---|
| `praisonai knowledge index <sources>` | Index documents into knowledge base |
| `praisonai knowledge search <query>` | Search knowledge base (no LLM generation) |
| `praisonai knowledge list` | List indexed documents |

```bash
# Index documents
praisonai knowledge index ./docs/ --collection myproject

# Search with hybrid retrieval
praisonai knowledge search "authentication" --hybrid --collection myproject

# Index with profiling
praisonai knowledge index ./data --profile --profile-out ./profile.json
```

- Knowledge is the indexing and retrieval substrate - use for indexing and raw search

- RAG orchestrates on top - use for question answering with LLM-generated responses and citations

- AutoRagAgent wraps an Agent with automatic retrieval decision - use when you want the agent to decide when to retrieve

- All share the same underlying index
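The layering in the list above can be pictured with a toy example. Everything in it (the `INDEX` dictionary, `search`, `answer`) is hypothetical and uses naive keyword overlap in place of a real vector store and LLM; it only illustrates that raw search and question answering can sit on one shared index.

```python
# Toy shared index: source name -> document text (hypothetical data).
INDEX = {
    "manual.pdf": "authenticate with an api key in the request header",
    "faq.txt": "reset your password from the account settings page",
}


def search(query, top_k=1):
    """Knowledge layer: rank documents by shared words, no generation."""
    words = set(query.lower().split())
    scored = [
        (len(words & set(text.lower().split())), source)
        for source, text in INDEX.items()
    ]
    scored.sort(reverse=True)
    return [source for score, source in scored[:top_k] if score > 0]


def answer(question):
    """RAG layer: retrieve first, then compose a reply that cites its sources."""
    sources = search(question)
    context = " ".join(INDEX[s] for s in sources)
    return {"answer": f"Based on the documents: {context}", "citations": sources}


print(search("how do I authenticate"))
print(answer("how do I reset my password")["citations"])
```

Both functions read the same `INDEX`, which mirrors the point that Knowledge, RAG, and AutoRagAgent operate over one underlying index rather than separate copies.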

AutoRagAgent automatically decides when to retrieve context from knowledge bases vs direct chat, based on query heuristics.
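The exact heuristics are not spelled out in this section. As a purely illustrative sketch, a policy of this kind could be approximated as follows; the function `should_retrieve`, the greeting list, and the word-count threshold are assumptions made for the example, not AutoRagAgent's real logic.

```python
# Hypothetical small-talk phrases that should not trigger retrieval.
GREETINGS = {"hi", "hello", "hey", "thanks", "thank you", "bye"}


def should_retrieve(query, policy="auto"):
    """Decide whether to fetch context before answering (hypothetical heuristic)."""
    if policy == "always":
        return True
    if policy == "never":
        return False
    text = query.strip().lower().rstrip("!.?")
    if text in GREETINGS:
        return False  # small talk needs no documents
    # Questions and longer requests are likely to benefit from context.
    return query.strip().endswith("?") or len(text.split()) >= 3


print(should_retrieve("What are the key findings?"))  # True
print(should_retrieve("Hello!"))                      # False
print(should_retrieve("Hello!", policy="always"))     # True
```

The three policy values mirror the `auto`, `always`, and `never` options of `retrieval_policy`; only the `auto` branch involves any guesswork.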

```python
from praisonaiagents import Agent, AutoRagAgent

# Create agent with knowledge
agent = Agent(
    name="Research Assistant",
    knowledge=["docs/manual.pdf"],
    user_id="user123", # Required for RAG retrieval
)

# Wrap with AutoRagAgent
auto_rag = AutoRagAgent(
    agent=agent,
    retrieval_policy="auto", # auto, always, never
    top_k=5,
    hybrid=True,
    rerank=True,
)

# Auto-decides: retrieves for questions, skips for greetings
result = auto_rag.chat("What are the key findings?") # Retrieves
result = auto_rag.chat("Hello!") # Skips retrieval

# Force retrieval or skip per-call
result = auto_rag.chat("Hi", force_retrieval=True)
result = auto_rag.chat("Summary?", skip_retrieval=True)
```

CLI Usage:

```bash
# Enable auto-rag with default policy (auto)
praisonai --auto-rag "What are the key findings?"

# Always retrieve
praisonai --auto-rag --rag-policy always "Tell me about X"

# With hybrid retrieval and reranking
praisonai --auto-rag --rag-hybrid --rag-rerank "Summarize the document"
```

Settings are applied in this order (highest priority first):

- CLI flags - `--hybrid`, `--rerank`, `--top-k`
- Environment variables - `PRAISONAI_HYBRID=true`
- Config file - YAML configuration (`--config`)
- Defaults
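The precedence order above can be sketched as a small resolver. This is an illustration under stated assumptions, not PraisonAI's code: `resolve_settings`, `_parse`, and the values in `DEFAULTS` are hypothetical, while the `PRAISONAI_*` variable names come from this README.

```python
import os

# Assumed defaults for illustration only.
DEFAULTS = {"hybrid": False, "rerank": False, "top_k": 5}


def _parse(raw, default):
    """Convert an environment string to the type of the default value."""
    if isinstance(default, bool):
        return raw.strip().lower() in {"1", "true", "yes"}
    return type(default)(raw)


def resolve_settings(cli=None, config=None, env=None):
    """Merge settings: CLI flags > environment variables > config file > defaults."""
    cli, config = cli or {}, config or {}
    env = os.environ if env is None else env
    resolved = {}
    for key, default in DEFAULTS.items():
        env_name = f"PRAISONAI_{key.upper()}"
        if cli.get(key) is not None:
            resolved[key] = cli[key]
        elif env_name in env:
            resolved[key] = _parse(env[env_name], default)
        elif key in config:
            resolved[key] = config[key]
        else:
            resolved[key] = default
    return resolved


settings = resolve_settings(
    cli={"rerank": True},
    config={"top_k": 8, "hybrid": False},
    env={"PRAISONAI_HYBRID": "true", "PRAISONAI_TOP_K": "10"},
)
print(settings)  # {'hybrid': True, 'rerank': True, 'top_k': 10}
```

Here the environment overrides the config file for `hybrid` and `top_k`, and the CLI value wins for `rerank`, matching the listed order.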

```bash
# Environment variables
export PRAISONAI_HYBRID=true
export PRAISONAI_RERANK=true
export PRAISONAI_TOP_K=10
```

```bash
# Base install (minimal, fast imports)
pip install praisonaiagents

# With RAG API server support
pip install "praisonai[rag-api]"
```

Run integration tests with real API keys:

```bash
# Enable live tests
export PRAISONAI_LIVE_TESTS=1
export OPENAI_API_KEY="your-key"

# Run live tests
pytest -m live tests/integration/
```

| Feature | Description | SDK Docs | CLI Docs |
|---|---|---|---|
| Hybrid Retrieval | Dense vectors + BM25 keyword search with RRF fusion | SDK | CLI |
| Reranking | LLM, Cross-Encoder, Cohere rerankers | SDK | CLI |
| RAG Serve | Microservice API with OpenAI-compatible mode | SDK | CLI |
| Vector Stores | ChromaDB, Pinecone, Qdrant, Weaviate, In-Memory | SDK | CLI |
| Data Readers | Load PDF, Markdown, Text, HTML, URLs | SDK | CLI |
| Profiling | Performance profiling with `--profile` flag | SDK | CLI |
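The "RRF fusion" mentioned for hybrid retrieval refers to reciprocal rank fusion, which merges several ranked lists by summing `1 / (k + rank)` for each document. The sketch below shows the general technique only; the function name, the sample document IDs, and the constant `k=60` (a commonly used value) are assumptions and may differ from what PraisonAI does internally.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse ranked lists of document IDs; a higher fused score ranks first."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Sort by descending score, breaking ties alphabetically for stable output.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


dense = ["doc_a", "doc_b", "doc_c"]  # hypothetical vector-search order
bm25 = ["doc_b", "doc_d", "doc_a"]   # hypothetical keyword-search order
print(reciprocal_rank_fusion([dense, bm25]))
# ['doc_b', 'doc_a', 'doc_d', 'doc_c']
```

Documents that appear near the top of both lists (`doc_b`, `doc_a`) outrank those found by only one retriever, which is the main reason to combine dense and keyword search.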

- 🔬 Deep Research Agents - OpenAI & Gemini support for automated research

- 🔄 Query Rewriter Agent - HyDE, Step-back, Multi-query strategies for RAG optimization

- 🌐 Native Web Search - Real-time search via OpenAI, Gemini, Anthropic, xAI, Perplexity

- 📥 Web Fetch - Retrieve full content from URLs (Anthropic)

- 📝 Prompt Expander Agent - Expand short prompts into detailed instructions

- 💾 Prompt Caching - Reduce costs & latency (OpenAI, Anthropic, Bedrock, Deepseek)

- 🧠 Claude Memory Tool - Persistent cross-conversation memory (Anthropic Beta)

- 💾 File-Based Memory - Zero-dependency persistent memory for all agents

- 🔍 Built-in Search Tools - Tavily, You.com, Exa for web search, news, content extraction

- 📋 Planning Mode - Plan before execution for agents & multi-agent systems

- 🔧 Planning Tools - Research with tools during planning phase

- 🧠 Planning Reasoning - Chain-of-thought planning for complex tasks

- ⛓️ Prompt Chaining - Sequential prompt workflows with conditional gates

- 🔍 Evaluator Optimiser - Generate and optimize through iterative feedback

- 👷 Orchestrator Workers - Distribute tasks among specialised workers

- ⚡ Parallelisation - Execute tasks in parallel for improved performance

- 🔁 Repetitive Agents - Handle repetitive tasks through automated loops

- 🤖 Autonomous Workflow - Monitor, act, adapt based on environment feedback

- 🖼️ Image Generation Agent - Create images from text descriptions