Upscaler

A consolidation of various compiled open-source AI image/video upscaling products into a working, CLI-friendly image and video upscaling program.

Stars: 262

Holloway's Upscaler is a consolidation of various compiled open-source AI image/video upscaling products into a CLI-friendly image and video upscaling program. It provides low-cost AI upscaling that runs locally on a laptop, is programmable for albums and videos, handles large video files reliably, and works without GUI overhead. The repository supports hardware testing on various systems and provides important notes on GPU compatibility, video types, and image decoding bugs. Dependencies include ffmpeg and ffprobe for video processing. The user manual covers installation, setting up the path, getting help, upscaling images and videos, and contributing back to the project. Benchmarks are provided for evaluating performance on different hardware setups.

README:

This project is a consolidation of various compiled open-source AI image/video upscaling products into a working, CLI-friendly image and video upscaling program.

For these reasons:

- Low-cost image/video AI upscaling software - run an AI solution locally on your laptop.

- Programmable - when you upscale an album or a video, the AI program has to be programmable and not restricted by any GUI's design.

- Reliable for big workloads - video files are usually large and require a streaming approach to prevent uncontrolled resource consumption (e.g. disk space, RAM, and VRAM) on an ordinary OS.

- I urgently need video upscaling technology that works locally - for both images and videos, without any GUI overhead.

This repository was made possible by the following contributors:

- Joly0 - Windows support via PowerShell.

- Cory Galyna - Repository & CI management.

- Jean Shuralyov - Documentation.

- (Holloway) Chew, Kean Ho - FFMPEG & UNIX support via POSIX Shell and Polygot Script.

Here are the tested hardware and operating systems:

| System | Results | Usable Processing Units |
|---|---|---|
| debian-amd64 (linux) | PASS | NVIDIA GeForce MX150, Intel(R) UHD Graphics 620 (KBL GT2) |
| darwin-amd64 (macOS) | FAILED | Binary failed to use Intel Iris Graphics iGPU and CPU. |
| windows-amd64 (windows) | PASS | Nvidia Quadro T600, Intel Iris Xe Graphics |

IMPORTANT NOTES

(1)

You seriously need a compatible GPU to drastically speed up upscaling, from hours to seconds for an image. I tested the NVIDIA GeForce MX150 against the built-in Intel(R) UHD Graphics 620 (KBL GT2) graphics hardware in my laptop, and it made a huge difference.

(2)

At the moment, the algorithm only works on constant bit rate (CBR) video. Variable bit rate (VBR) video has been considered and may be supported in the future with a more suitable programming language. Most videos (as in 99% of them) are constant bit rate, so VBR support is the least concern.

(3)

Not all images can be upscaled (e.g. some AI-generated images from Stable Diffusion) due to a bug in the binary's internal image decoder. Tracking issue: https://github.com/xinntao/Real-ESRGAN/issues/595

(4)

Re-packaging efforts are unlikely because they are currently a bad investment. Either I spend the time studying NCNN and rewrite XinTao's entire RealESRGAN-Vulkan program from scratch as my own code, or I glue some existing programs together. At the moment, I do not have the resources to study NCNN or do the rewrite (yet).

NOTE TO macOS USERS

The binary bin/mac-amd64 is currently unsigned. Hence, you need to explicitly grant it permission to run in your Settings > Security & Privacy section. Please be informed that my test result agrees with the Upscayl team's: many CPUs and iGPUs are not working or supported yet.

If you're working on video, you need ffmpeg and ffprobe for dissecting and reassembling a video file.

You can install both in one go from: https://ffmpeg.org/
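Before starting a video job, you may want to confirm that both tools are reachable from your shell. A minimal POSIX shell sketch; the `require_tools` helper is hypothetical and not part of Upscaler:

```shell
#!/bin/sh
# Hypothetical helper (not part of Upscaler): report any command that is
# not found on $PATH. Returns 0 only when every named tool is available.
require_tools() {
    missing=0
    for tool in "$@"; do
        # `command -v` is the POSIX way to look up a command.
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "missing: $tool" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Usage before a video job:
#   require_tools ffmpeg ffprobe || exit 1
```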

On Windows, you need to install the Microsoft Visual C++ Redistributable package from Microsoft's official website if you haven't done so. Other software often installs a version of it already, so please check your "Add/Remove Programs" control panel to verify.

Here is the basic user manual:

There are different ways to install this program, depending on the version you are interested in:

You can download the latest version of the upscaler-[VERSION].zip package from https://github.com/hollowaykeanho/Upscaler/releases and then unzip it to an appropriate location. Note that the models are already included in this package.

For those who just want to update the models, the upscaler-models-[VERSION].zip package is made available for you. Simply overwrite the contents of the models/ directory in the software.

For those who want to run the tests and benchmarks for the repository, the upscaler-tests-[VERSION].zip package is made available for you. Simply integrate the tests/ directory into your existing software program (the unpacked upscaler-[VERSION].zip).

Alternatively, you can git clone the repository into an appropriate location:

$ git clone https://github.com/hollowaykeanho/Upscaler.git

We advise you to symlink start.cmd into a $PATH or %PATH% directory, depending on your operating system. For example, on Debian Linux:

$ ln -s /path/to/Upscaler/start.cmd /path/to/bin/upscaler

Alternatively, on UNIX (Linux & Mac) systems, you can create a shell script that passes all arguments to start.cmd. Example:

#!/bin/sh
/path/to/Upscaler/start.cmd "$@"

TIP: if you decided to use the shell script approach, you can also make the command use your default model and scale for programming efficiency. We recommend using the $HOME/bin directory if it is included in your $PATH.
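One way to do that, sketched under assumptions: the function below fills in a default model and scale while forwarding every other argument. `upscaler`, `UPSCALER_CMD`, `UPSCALER_MODEL`, and `UPSCALER_SCALE` are names invented for this example; Upscaler itself does not read those variables.

```shell
#!/bin/sh
# Hypothetical wrapper: supplies a default model and scale so routine calls
# only need --input (and --format, --video, ...). The environment variables
# are invented for this sketch and only override the defaults below.
upscaler() {
    "${UPSCALER_CMD:-/path/to/Upscaler/start.cmd}" \
        --model "${UPSCALER_MODEL:-ultrasharp}" \
        --scale "${UPSCALER_SCALE:-4}" \
        "$@"
}
```

To turn it into the $HOME/bin script, finish the file with a final `upscaler "$@"` line; the function on its own can also be sourced from a shell profile.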

This repository was unified using Holloway's Polygot Script to keep user instructions extremely simple. Hence, on any operating system (UNIX or Windows), simply interacting with the repository's start.cmd script will do.

Example for requesting help:

$ ./Upscaler/start.cmd --help

In the help display, you generally want to take notice of the AVAILABLE MODELS list. The upscaling algorithms are solely based on the available models in the models directory.

The scripts dynamically index each of them and present them in the help display without needing to be rewritten.

To upscale an image, simply run start.cmd against the image:

$ ./start.cmd \
--model ultrasharp \
--scale 4 \
--format webp \
--input my-image.jpg

To determine the available models, their respective scale limits, and their output formats, simply execute --help and look for AVAILABLE MODELS and AVAILABLE FORMATS respectively.

If done correctly, an image named after the original filename with an -upscaled suffix is created. Following the example above, it should be my-image-upscaled.webp.
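Because the tool is meant to be scripted, an album can be processed with a plain loop. Below is a minimal POSIX shell sketch built on the naming rule just described. The function names and the `UPSCALER_CMD` variable are hypothetical, and it assumes (the README does not say so explicitly) that, without --output, the upscaled file is written next to the input.

```shell
#!/bin/sh
# Hypothetical album helpers (not part of Upscaler).

# Predict the output name: original filename plus "-upscaled", with the
# extension of the chosen --format.
upscaled_name() {
    input="$1"
    format="$2"
    base="${input##*/}"                   # filename without directories
    case "$base" in
        *.*) stem="${input%.*}" ;;        # drop the last extension
        *)   stem="$input" ;;             # no extension to drop
    esac
    printf '%s\n' "${stem}-upscaled.${format}"
}

# Upscale every .jpg in a directory to webp, skipping images whose output
# already exists, so an interrupted batch can simply be re-run.
# ASSUMPTION: output lands next to the input when --output is omitted.
upscale_album() {
    for img in "$1"/*.jpg; do
        [ -e "$img" ] || continue         # glob matched nothing
        out=$(upscaled_name "$img" webp)
        [ -s "$out" ] && continue         # non-empty output: already done
        "${UPSCALER_CMD:-/path/to/Upscaler/start.cmd}" \
            --model ultrasharp --scale 4 --format webp \
            --input "$img" || return 1
    done
}
```

Skipping by output file keeps the loop restartable without any extra bookkeeping, at the cost of trusting that a non-empty output file is complete.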

Unless you're working on an 8-second, 8MB video, you will want to follow the instructions below to make sure your project is always intact and resumable.

Please keep in mind that you need storage of at least 3x the video size for the job, depending on the video frame rate, frame size, color scheme, etc. The 3x minimum is due to:

- 1 set is your original video.

- 1 set is for all the upscaled images (this can be a lot bigger, since the frames are stored one by one and lose the video compression effect).

- 1 set is your output video (bigger than original of course).

Hence, please plan out your storage budget before starting a video upscaling project.
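A quick way to apply that budget before starting: the floor is 3x the video size in total, and the original video already accounts for 1x of it, so at least 2x the video size must still be free. The hypothetical `check_storage` helper below compares that floor against the free space reported by `df`; treat a pass as necessary, not sufficient, since the upscaled frames can be much larger.

```shell
#!/bin/sh
# Hypothetical pre-flight check (not part of Upscaler).

# Minimum extra free space in KiB: frames (>= 1x) plus output video (>= 1x).
min_free_kb() {
    echo $(( $1 * 2 ))
}

check_storage() {
    video="$1"
    project_dir="$2"
    # Size of the input video in KiB.
    video_kb=$(du -k "$video" | awk '{print $1}')
    # -P forces single-line POSIX output; column 4 is available 1K blocks.
    free_kb=$(df -Pk "$project_dir" | awk 'NR == 2 {print $4}')
    need_kb=$(min_free_kb "$video_kb")
    if [ "$free_kb" -lt "$need_kb" ]; then
        echo "need >= ${need_kb} KiB free, have ${free_kb} KiB" >&2
        return 1
    fi
    echo "ok: ${free_kb} KiB free, floor is ${need_kb} KiB"
}
```

For example, `check_storage ./sample-vid.mp4 .` run inside the project directory.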

IMPORTANT

Know your hardware limitations before deciding on the scaling factor. A scale of 4x on a 1080p video with a 12GB memory laptop can crash the entire OS (I'm referring to the very stable Debian OS) during the video re-assembly phase with FFMPEG due to memory starvation.
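To see why memory runs out, it helps to do the arithmetic for a single uncompressed frame. The sketch below assumes 8-bit RGB (3 bytes per pixel); real FFmpeg memory use depends on pixel format, buffering, and encoder settings, and is not modeled here. `raw_frame_bytes` is a hypothetical helper.

```shell
#!/bin/sh
# Rough arithmetic, not a measurement: bytes for ONE decoded frame after
# upscaling, assuming 8-bit RGB (3 bytes per pixel) and no compression.
raw_frame_bytes() {
    width="$1"
    height="$2"
    scale="$3"
    echo $(( width * scale * height * scale * 3 ))
}
```

`raw_frame_bytes 1920 1080 4` gives 99532800 bytes (about 95 MiB) for one 7680x4320 frame, versus 24883200 bytes (about 24 MiB) at 2x; any stage that keeps several such frames in memory at once multiplies that figure.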

You're advised to create a project directory for each video upscaling project due to its large data size.

Instead of executing start.cmd straight away, please put the command in a script and place it in the project directory.

A simple UNIX example would be a shell script (e.g. run.sh) as follows:

#!/bin/sh
/path/to/Upscaler/start.cmd --model ultrasharp \
--scale 4 \
--input ./sample-vid.mp4 \
--video

A simple WINDOWS example would be a batch script (e.g. run.bat) as follows:

@echo off
C:\path\to\Upscaler\start.cmd --model ultrasharp ^
--scale 4 ^
--input .\sample-vid.mp4 ^
--video

This lets you resume the upscaling project after a crash or across long working hours. A good directory looks something like this:

/path/to/my-upscaled-project/
├── run.sh
└── sample-vid.mp4

To start or resume the project, simply run your project script:

$ cd /path/to/my-upscaled-project
$ ./run.sh

The Upscaler will create a workspace to house its output frames and control values. You can inspect the frame images while Upscaler is at work as long as you're viewing the frame that it is working on.

I recommend you inspect the first frames to determine whether the AI model is suitable. If it is not, please stop the process and execute the next step.

If you bump into something odd that requires restarting the project from scratch, you can delete the [FILENAME]-workspace directory inside the project directory. This forces the program to restart everything all over again.

The program intentionally leaves the workspace as it is in case you need the frames for other purposes (e.g. thumbnails).

Star, Watch, or the best: sponsor the contributors.

In case you need to contribute back:

- Raise an issue ticket.

- Fork the repository and work against the main branch.

- Develop your contributions and ensure your commits are GPG-signed.

- Once done, DO NOT raise a pull request unless instructed. Simply notify me via your ticket and I will clone your forked repo locally and cherry-pick your commits (I need those GPG signatures preserved, which a GitHub pull request cannot do). An exception is if you want to earn the GitHub Pair Extraordinaire badge: in that case, please add me to your forked repo and I will make it happen for you.

- Delete your forked repo once I notify you that the merge is complete.

- Thank you.
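With git, a commit can be signed by passing `-S` to `git commit`, or by default after `git config commit.gpgsign true`. Before notifying the maintainer, you might check that nothing on your branch went out unsigned. A minimal sketch; `unsigned_commits` is a hypothetical helper, and it only detects missing signatures (git's `%G?` placeholder prints `N`), not invalid or untrusted ones.

```shell
#!/bin/sh
# Hypothetical helper (not part of this repository's tooling): print every
# commit in a revision range that has no signature and fail if any exist.
# git's %G? placeholder prints "N" for a commit without a signature.
unsigned_commits() {
    range="$1"                      # e.g. main..HEAD
    git log --format='%G? %h %s' "$range" |
        awk '$1 == "N" { print; found = 1 } END { exit found }'
}
```

For example, `unsigned_commits main..HEAD` lists offending commits and returns non-zero if there are any.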

Submit the results of running tests/benchmark.cmd at the root of the repository. This data serves a few purposes:

- To verify that the repository's programs run properly for both video and image upscaling.

- To identify which platforms, operating systems, and hardware can run this project (good for determining usability before procurement).

- To gather statistics on its performance.

IMPORTANT NOTE

The benchmark.cmd captures wall-clock timing. Hence, please dedicate the system to running only the benchmark, and nothing else, to keep results consistent. We capture wall-clock timing because it reflects real-time use (i.e. subject to interference from the OS and background processes). The benchmark takes some time (~400 frames), so we recommend leaving it to run overnight before you sleep.
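If you want a comparable wall-clock number for your own runs outside benchmark.cmd (for example, timing a project's run.sh), a minimal POSIX shell sketch might look like this. The `wall_clock` function is hypothetical, not part of the repository, and it measures whole seconds only.

```shell
#!/bin/sh
# Hypothetical helper: run a command and report elapsed wall-clock time in
# whole seconds on stderr, preserving the command's exit status.
# `date +%s` is not in POSIX but works with GNU, BSD/macOS and BusyBox date.
wall_clock() {
    start=$(date +%s)
    "$@"
    status=$?
    end=$(date +%s)
    echo "wall-clock: $((end - start)) seconds" >&2
    return "$status"
}
```

Usage: `wall_clock ./run.sh`. Like the benchmark, the figure includes any OS or background interference during the run.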

| Version | Sample 1 (Video) |
|---|---|
| v0.7.0 | 9587 seconds |
| v0.6.0 | 9260 seconds |
| v0.5.0 | 9192 seconds |
| v0.4.0 | 10316 seconds |

| Version | Sample 1 (Video) |
|---|---|
| v0.6.0 | 3649 seconds |

| Version | Sample 1 (Video) |
|---|---|
| v0.7.0 | 11633 seconds |

In case you can't access the help details from the --help command, here's a copy from the UNIX side:

u0:Upscaler$ ./start.cmd --help
HOLLOWAY'S UPSCALER
-------------------

COMMAND:
$ ./start.cmd \
--model MODEL_NAME \
--scale SCALE_FACTOR \
--format FORMAT \
--parallel TOTAL_WORKING_THREADS # only for video upscaling (coming soon) \
--video # only for video upscaling \
--input PATH_TO_FILE \
--output PATH_TO_FILE_OR_DIR # optional

EXAMPLES
$ ./start.cmd \
--model ultrasharp \
--scale 4 \
--format webp \
--input my-image.jpg

$ ./start.cmd \
--model ultrasharp \
--scale 4 \
--format webp \
--input my-image.jpg \
--output my-image-upscaled.webp

$ ./start.cmd \
--model ultrasharp \
--scale 4 \
--format png \
--parallel 1 \
--video \
--input my-video.mp4 \
--output my-video-upscaled.mp4

$ ./start.cmd \
--model ultrasharp \
--scale 4 \
--format png \
--parallel 1 \
--input video/frames/input \
--output video/frames/output

AVAILABLE FORMATS:
(1) PNG
(2) JPG
(3) ...

AVAILABLE MODELS:
...

This repository assembles binaries. You may find the source code from the original contributors here:

The test sample video in the tests/ directory was supplied by Igrid North from Pexels. The original 4K video is also available at the origin for upscaling comparison.

This project is aligned with its upstream sources and is licensed under the BSD-3-Clause "New" or "Revised" License.


Alternative AI tools for Upscaler

Similar Open Source Tools


vim-ollama

The 'vim-ollama' plugin for Vim adds Copilot-like code completion support using Ollama as a backend, enabling intelligent AI-based code completion and integrated chat support for code reviews. It does not rely on cloud services, preserving user privacy. The plugin communicates with Ollama via Python scripts for code completion and interactive chat, supporting Vim only. Users can configure LLM models for code completion tasks and interactive conversations, with detailed installation and usage instructions provided in the README.

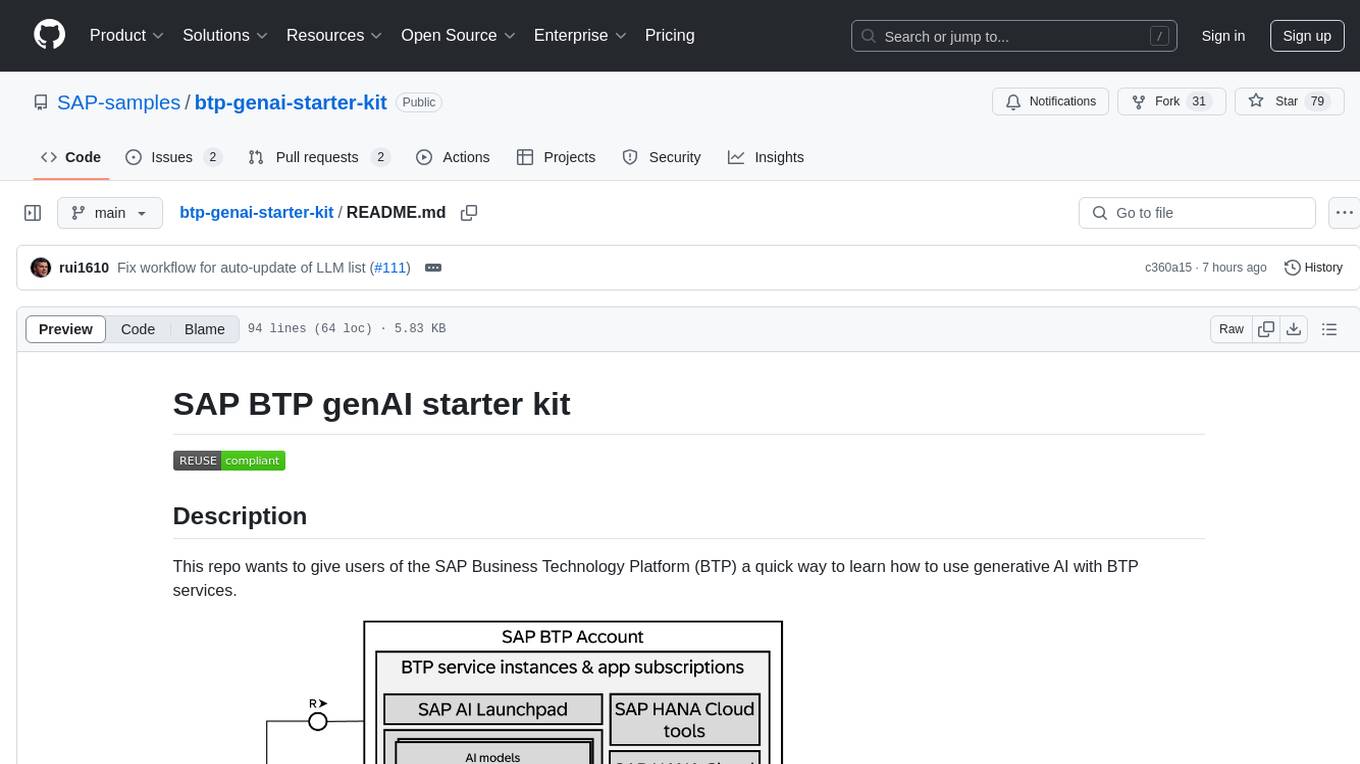

btp-genai-starter-kit

This repository provides a quick way for users of the SAP Business Technology Platform (BTP) to learn how to use generative AI with BTP services. It guides users through setting up the necessary infrastructure, deploying AI models, and running genAI experiments on SAP BTP. The repository includes scripts, examples, and instructions to help users get started with generative AI on the SAP BTP platform.

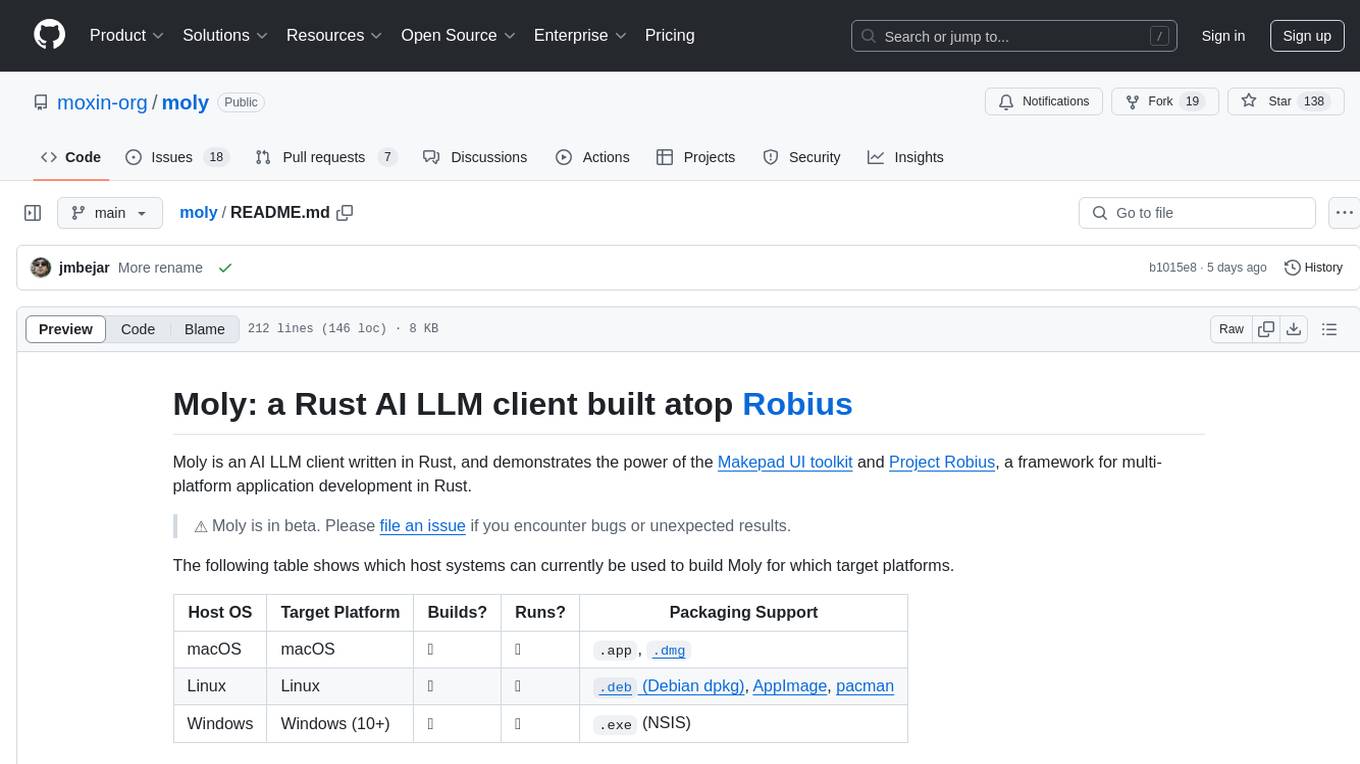

moly

Moly is an AI LLM client written in Rust, showcasing the capabilities of the Makepad UI toolkit and Project Robius, a framework for multi-platform application development in Rust. It is currently in beta, allowing users to build and run Moly on macOS, Linux, and Windows. The tool provides packaging support for different platforms, such as `.app`, `.dmg`, `.deb`, AppImage, pacman, and `.exe` (NSIS). Users can easily set up WasmEdge using `moly-runner` and leverage `cargo` commands to build and run Moly. Additionally, Moly offers pre-built releases for download and supports packaging for distribution on Linux, Windows, and macOS.

WindowsAgentArena

Windows Agent Arena (WAA) is a scalable Windows AI agent platform designed for testing and benchmarking multi-modal, desktop AI agents. It provides researchers and developers with a reproducible and realistic Windows OS environment for AI research, enabling testing of agentic AI workflows across various tasks. WAA supports deploying agents at scale using Azure ML cloud infrastructure, allowing parallel running of multiple agents and delivering quick benchmark results for hundreds of tasks in minutes.

blinkid-ios

BlinkID iOS is a mobile SDK that enables developers to easily integrate ID scanning and data extraction capabilities into their iOS applications. The SDK supports scanning and processing various types of identity documents, such as passports, driver's licenses, and ID cards. It provides accurate and fast data extraction, including personal information and document details. With BlinkID iOS, developers can enhance their apps with secure and reliable ID verification functionality, improving user experience and streamlining identity verification processes.

svelte-bench

SvelteBench is an LLM benchmark tool for evaluating Svelte components generated by large language models. It supports multiple LLM providers such as OpenAI, Anthropic, Google, and OpenRouter. Users can run predefined test suites to verify the functionality of the generated components. The tool allows configuration of API keys for different providers and offers debug mode for faster development. Users can provide a context file to improve component generation. Benchmark results are saved in JSON format for analysis and visualization.

Agentless

Agentless is an open-source tool designed for automatically solving software development problems. It follows a two-phase process of localization and repair to identify faults in specific files, classes, and functions, and generate candidate patches for fixing issues. The tool is aimed at simplifying the software development process by automating issue resolution and patch generation.

fabric

Fabric is an open-source framework for augmenting humans using AI. It provides a structured approach to breaking down problems into individual components and applying AI to them one at a time. Fabric includes a collection of pre-defined Patterns (prompts) that can be used for a variety of tasks, such as extracting the most interesting parts of YouTube videos and podcasts, writing essays, summarizing academic papers, creating AI art prompts, and more. Users can also create their own custom Patterns. Fabric is designed to be easy to use, with a command-line interface and a variety of helper apps. It is also extensible, allowing users to integrate it with their own AI applications and infrastructure.

lantern

Lantern is an open-source PostgreSQL database extension designed to store vector data, generate embeddings, and handle vector search operations efficiently. It introduces a new index type called 'lantern_hnsw' for vector columns, which speeds up 'ORDER BY ... LIMIT' queries. Lantern utilizes the state-of-the-art HNSW implementation called usearch. Users can easily install Lantern using Docker, Homebrew, or precompiled binaries. The tool supports various distance functions, index construction parameters, and operator classes for efficient querying. Lantern offers features like embedding generation, interoperability with pgvector, parallel index creation, and external index graph generation. It aims to provide superior performance metrics compared to other similar tools and has a roadmap for future enhancements such as cloud-hosted version, hardware-accelerated distance metrics, industry-specific application templates, and support for version control and A/B testing of embeddings.

HuggingFaceGuidedTourForMac

HuggingFaceGuidedTourForMac is a guided tour on how to install optimized pytorch and optionally Apple's new MLX, JAX, and TensorFlow on Apple Silicon Macs. The repository provides steps to install homebrew, pytorch with MPS support, MLX, JAX, TensorFlow, and Jupyter lab. It also includes instructions on running large language models using HuggingFace transformers. The repository aims to help users set up their Macs for deep learning experiments with optimized performance.

mark

Mark is a CLI tool that allows users to interact with large language models (LLMs) using Markdown format. It enables users to seamlessly integrate GPT responses into Markdown files, supports image recognition, scraping of local and remote links, and image generation. Mark focuses on using Markdown as both a prompt and response medium for LLMs, offering a unique and flexible way to interact with language models for various use cases in development and documentation processes.

ai-starter-kit

SambaNova AI Starter Kits is a collection of open-source examples and guides designed to facilitate the deployment of AI-driven use cases for developers and enterprises. The kits cover various categories such as Data Ingestion & Preparation, Model Development & Optimization, Intelligent Information Retrieval, and Advanced AI Capabilities. Users can obtain a free API key using SambaNova Cloud or deploy models using SambaStudio. Most examples are written in Python but can be applied to any programming language. The kits provide resources for tasks like text extraction, fine-tuning embeddings, prompt engineering, question-answering, image search, post-call analysis, and more.

blinkid-android

The BlinkID Android SDK is a comprehensive solution for implementing secure document scanning and extraction. It offers powerful capabilities for extracting data from a wide range of identification documents. The SDK provides features for integrating document scanning into Android apps, including camera requirements, SDK resource pre-bundling, customizing the UX, changing default strings and localization, troubleshooting integration difficulties, and using the SDK through various methods. It also offers options for completely custom UX with low-level API integration. The SDK size is optimized for different processor architectures, and API documentation is available for reference. For any questions or support, users can contact the Microblink team at help.microblink.com.

generative-models

Generative Models by Stability AI is a repository that provides various generative models for research purposes. It includes models like Stable Video 4D (SV4D) for video synthesis, Stable Video 3D (SV3D) for multi-view synthesis, SDXL-Turbo for text-to-image generation, and more. The repository focuses on modularity and implements a config-driven approach for building and combining submodules. It supports training with PyTorch Lightning and offers inference demos for different models. Users can access pre-trained models like SDXL-base-1.0 and SDXL-refiner-1.0 under a CreativeML Open RAIL++-M license. The codebase also includes tools for invisible watermark detection in generated images.

NeoGPT

NeoGPT is an AI assistant that transforms your local workspace into a powerhouse of productivity from your CLI. With features like code interpretation, multi-RAG support, vision models, and LLM integration, NeoGPT redefines how you work and create. It supports executing code seamlessly, multiple RAG techniques, vision models, and interacting with various language models. Users can run the CLI to start using NeoGPT and access features like Code Interpreter, building vector database, running Streamlit UI, and changing LLM models. The tool also offers magic commands for chat sessions, such as resetting chat history, saving conversations, exporting settings, and more. Join the NeoGPT community to experience a new era of efficiency and contribute to its evolution.

For similar tasks

StableSwarmUI

StableSwarmUI is a modular Stable Diffusion web user interface that emphasizes making power tools easily accessible, high performance, and extensible. It is designed to be a one-stop-shop for all things Stable Diffusion, providing a wide range of features and capabilities to enhance the user experience.

upscayl

Upscayl is a free and open-source AI image upscaler that uses advanced AI algorithms to enlarge and enhance low-resolution images without losing quality. It is a cross-platform application built with the Linux-first philosophy, available on all major desktop operating systems. Upscayl utilizes Real-ESRGAN and Vulkan architecture for image enhancement, and its backend is fully open-source under the AGPLv3 license. It is important to note that a Vulkan compatible GPU is required for Upscayl to function effectively.

ailia-models

A collection of pre-trained, state-of-the-art AI models. ailia SDK is a self-contained, cross-platform, high-speed inference SDK for AI. The ailia SDK provides a consistent C++ API across Windows, Mac, Linux, iOS, Android, Jetson, and Raspberry Pi platforms. It also supports Unity (C#), Python, Rust, Flutter (Dart) and JNI for efficient AI implementation. The ailia SDK makes extensive use of the GPU through Vulkan and Metal to enable accelerated computing. Supported models: 323 models as of April 8th, 2024.

models

This repository contains self-trained single image super resolution (SISR) models. The models are trained on various datasets and use different network architectures. They can be used to upscale images by 2x, 4x, or 8x, and can handle various types of degradation, such as JPEG compression, noise, and blur. The models are provided as safetensors files, which can be loaded into a variety of deep learning frameworks, such as PyTorch and TensorFlow. The repository also includes a number of resources, such as examples, results, and a website where you can compare the outputs of different models.

adobe-photoshopCRCK

Adobe PhotoshopCRCK is a tool designed to provide users with the latest version of Adobe Photoshop for free on Windows. It allows users to access advanced photo editing features and functionalities without the need for a paid subscription. The tool is intended for individuals looking to explore professional photo editing capabilities without incurring additional costs. With Adobe PhotoshopCRCK, users can enhance their images, create stunning graphics, and unleash their creativity through a wide range of editing tools and options.

lassxToolkit

lassxToolkit is a versatile tool designed for file processing tasks. It allows users to manipulate files and folders based on specified configurations in a strict .json format. The tool supports various AI models for tasks such as image upscaling and denoising. Users can customize settings like input/output paths, error handling, file selection, and plugin integration. lassxToolkit provides detailed instructions on configuration options, default values, and model selection. It also offers features like tree restoration, recursive processing, and regex-based file filtering. The tool is suitable for users looking to automate file processing tasks with AI capabilities.

clarity-upscaler

Clarity AI is a free and open-source AI image upscaler and enhancer, providing an alternative to Magnific. It offers various features such as multi-step upscaling, resemblance fixing, speed improvements, support for custom safetensors checkpoints, anime upscaling, LoRA support, pre-downscaling, and fractality. Users can access the tool through the ClarityAI.co app, ComfyUI manager, API, or by deploying and running locally or in the cloud with cog or A1111 webUI. The tool aims to enhance image quality and resolution using advanced AI algorithms and models.

AI-Lossless-Zoomer

AI-Lossless-Zoomer is a tool that utilizes the Real-ESRGAN model provided by Tencent ARC Lab to enhance images, particularly portraits and anime pictures, with fast processing. It supports multi-thread processing, batch image processing, customizable options, output formats, output paths, AI engine selection, and batch cleaning tasks. The tool is designed for Windows 7 or later with .NET Framework 4.6+. Users can choose between the installable version (.exe) and the portable version (.zip) that includes the latest AI engine. The tool is efficient for enlarging images while maintaining quality.

For similar jobs

Qwen-TensorRT-LLM

Qwen-TensorRT-LLM is a project developed for the NVIDIA TensorRT Hackathon 2023, focusing on accelerating inference for the Qwen-7B-Chat model using TRT-LLM. The project offers various functionalities such as FP16/BF16 support, INT8 and INT4 quantization options, Tensor Parallel for multi-GPU parallelism, web demo setup with gradio, Triton API deployment for maximum throughput/concurrency, fastapi integration for openai requests, CLI interaction, and langchain support. It supports models like qwen2, qwen, and qwen-vl for both base and chat models. The project also provides tutorials on Bilibili and blogs for adapting Qwen models in NVIDIA TensorRT-LLM, along with hardware requirements and quick start guides for different model types and quantization methods.

dl_model_infer

This project is a C++ AI inference library that supports inference with TensorRT models. It provides accelerated deployment cases of popular deep learning CV models and supports dynamic-batch image processing, inference, decoding, and NMS. The project has been updated with various models and provides tutorials for model exports. It also includes a producer-consumer inference model for specific tasks. The project directory includes implementations for model inference applications, backend inference classes, post-processing, pre-processing, and object detection and tracking. Speed tests have been conducted on various models, and ONNX downloads are available for different models.

joliGEN

JoliGEN is an integrated framework for training custom generative AI image-to-image models. It implements GAN, Diffusion, and Consistency models for various image translation tasks, including domain and style adaptation with conservation of semantics. The tool is designed for real-world applications such as Controlled Image Generation, Augmented Reality, Dataset Smart Augmentation, and Synthetic to Real transforms. JoliGEN allows for fast and stable training with a REST API server for simplified deployment. It offers a wide range of options and parameters with detailed documentation available for models, dataset formats, and data augmentation.

ai-edge-torch

AI Edge Torch is a Python library that supports converting PyTorch models into a .tflite format for on-device applications on Android, iOS, and IoT devices. It offers broad CPU coverage with initial GPU and NPU support, closely integrating with PyTorch and providing good coverage of Core ATen operators. The library includes a PyTorch converter for model conversion and a Generative API for authoring mobile-optimized PyTorch Transformer models, enabling easy deployment of Large Language Models (LLMs) on mobile devices.

awesome-RK3588

RK3588 is a flagship 8K SoC chip by Rockchip, integrating Cortex-A76 and Cortex-A55 cores with NEON coprocessor for 8K video codec. This repository curates resources for developing with RK3588, including official resources, RKNN models, projects, development boards, documentation, tools, and sample code.

cl-waffe2

cl-waffe2 is an experimental deep learning framework in Common Lisp, providing fast, systematic, and customizable matrix operations, reverse mode tape-based Automatic Differentiation, and neural network model building and training features accelerated by a JIT Compiler. It offers abstraction layers, extensibility, inlining, graph-level optimization, visualization, debugging, systematic nodes, and symbolic differentiation. Users can easily write extensions and optimize their networks without overheads. The framework is designed to eliminate barriers between users and developers, allowing for easy customization and extension.

TensorRT-Model-Optimizer

The NVIDIA TensorRT Model Optimizer is a library designed to quantize and compress deep learning models for optimized inference on GPUs. It offers state-of-the-art model optimization techniques including quantization and sparsity to reduce inference costs for generative AI models. Users can easily stack different optimization techniques to produce quantized checkpoints from torch or ONNX models. The quantized checkpoints are ready for deployment in inference frameworks like TensorRT-LLM or TensorRT, with planned integrations for NVIDIA NeMo and Megatron-LM. The tool also supports 8-bit quantization with Stable Diffusion for enterprise users on NVIDIA NIM. Model Optimizer is available for free on NVIDIA PyPI, and this repository serves as a platform for sharing examples, GPU-optimized recipes, and collecting community feedback.

depthai

This repository contains a demo application for DepthAI, a tool that can load different networks, create pipelines, record video, and more. It provides documentation for installation and usage, including running programs through Docker. Users can explore DepthAI features via command line arguments or a clickable QT interface. Supported models include various AI models for tasks like face detection, human pose estimation, and object detection. The tool collects anonymous usage statistics by default, which can be disabled. Users can report issues to the development team for support and troubleshooting.