lassxToolkit

An AI image upscaling tool that integrates multiple models and supports automatic folder traversal and batch processing.

Stars: 63

lassxToolkit is a versatile tool designed for file processing tasks. It allows users to manipulate files and folders based on specified configurations in a strict .json format. The tool supports various AI models for tasks such as image upscaling and denoising. Users can customize settings like input/output paths, error handling, file selection, and plugin integration. lassxToolkit provides detailed instructions on configuration options, default values, and model selection. It also offers features like tree restoration, recursive processing, and regex-based file filtering. The tool is suitable for users looking to automate file processing tasks with AI capabilities.

README:

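2024.2.19 update (bold marks changes introduced by the version upgrade):

- Changed how the input path is classified (file vs. folder).
- Fixed the /-i switch of xcopy being unusable on older versions of Windows.
- Fixed abnormal behavior when the pos_slash variable is infinity (that is, when the input path contains no "\").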

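Version 4.1.a Beta is an internal test build with no UI (the configuration file has to be edited by hand). Please report any usage problems or bugs on Bilibili, GitHub, the QQ group (935718273), or Luogu.

Starting with this version, drag the configuration file onto the main program instead of opening the main program directly!

In other words, the program is no longer limited to a single configuration file.

The configuration file is a strict .json file. Any format error makes the program print "ERROR" to the console and skip that configuration.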
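The "print ERROR and skip" behavior described above can be pictured with a minimal Python sketch. It is not the program's actual code: the function name `load_configs` is hypothetical, and it assumes that every path dragged onto the executable arrives as one command-line argument and that a valid configuration is a JSON object.

```python
import json


def load_configs(paths):
    """Parse each configuration file; report and skip the ones that fail."""
    loaded = []
    for path in paths:
        try:
            with open(path, encoding="utf-8") as handle:
                config = json.load(handle)
        except (OSError, ValueError):
            # Missing file or malformed JSON: print "ERROR" and move on.
            print("ERROR")
            continue
        if not isinstance(config, dict):
            # Assumption: a configuration must be a JSON object.
            print("ERROR")
            continue
        loaded.append((path, config))
    return loaded
```

A caller would typically pass `sys.argv[1:]`, so one bad file does not prevent the remaining configurations from running.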

The following sections describe the contents of the configuration file, its default values, and the background you need to use it.

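- The parentheses before each setting give its attributes.
- Items marked with * are explained in detail later.
- Bold strings are the key names of the corresponding features.

If anything is unclear, see the test configuration file at the end.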

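- (Required, String) input_path: path of the input file / folder
- (Required, String) output_path: path of the output file / folder
- *(Optional, String) error_path: path of the error folder
- *(Optional, String) selector: the selector, used to match files
  - The selector is used when traversing folders.
- *(Required, String) model: the AI model to use
- (Required, String) scale: the model's upscaling factor
- (Optional, String) denoise: the model's denoise level
  - The realesr series models do not support a denoise level, in which case this setting has no effect.
- (Optional, String) syncgap: the image tiling level
  - I do not know what effect it has either (perhaps it speeds up processing?), but some models need this parameter.
  - Only the realcugan model supports the tiling level; in all other cases this setting has no effect.
- *(Required, Boolean) tree_restore: whether to restore the directory structure
- (Required, Boolean) subdir_find: whether to process subdirectories recursively
- (Optional, Boolean) emptydir_find: whether to process directories that contain no target files
  - When this is true, the program builds an output folder tree identical to the structure of the input folder (some output folders may end up empty). In a sense the program is processing empty folders (as opposed to those that contain files to process), hence the name emptydir_find.
  - This restores the whole directory structure for you even when not every file is processed.
- *(Optional, Boolean) file_error: whether to handle file errors
- *(Optional, Boolean) dir_error: whether to handle folder errors
  - Generally speaking, dir_error fully covers file_error.
- *(Optional, Array) match: a blacklist written as regular expressions
  - It can filter on the file name ("name") and the directory ("path").
  - Two modes exist: full match ("match") and partial match ("search").
- *(Optional, Object) addons: the plugin module
  - Plugins you write yourself go in the addons folder under the project root; then just fill in the file name.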

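Default values of the optional settings:

    "error_path": "" // empty string
    "selector": "*" // selects all files
    "denoise": "0"
    "syncgap": "0"
    "emptydir_find": false // rarely needed
    "file_error": true
    "dir_error": false
    "match": null
    "addons": null

The model list and some notes follow (bold marks each model's default value, for reference only):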

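- DF2K / DF2K-JPEG (first-generation realesr models)
  - Scale: 2 / 4
- realesrgan / realesrnet (second-generation realesr models)
  - Scale: 2 / 4
- realesrgan-anime (third-generation realesr model, uses system memory)
  - Scale: 2 / 3 / 4
- realcugan
  - Scale: 1 / 2 / 3 / 4
  - Denoise level: -1 / 0 / 1 / 2 / 3
  - Tiling level: 0 / 1 / 2 / 3
- waifu2x-anime / waifu2x-photo (waifu2x models)
  - Scale: 1 / 2 / 4 / 8 / 16 / 32
  - Denoise level: -1 / 0 / 1 / 2 / 3
- The realesr series models (realesrgan / realesrnet / realesrgan-anime / DF2K / DF2K-JPEG) do not support denoise (the denoise level), in which case that setting has no effect.
- Only the realcugan model supports syncgap (the image tiling level); in all other cases that setting has no effect.

Although the models have default values, you still have to set them by hand in the configuration file.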
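The model list above can be transcribed into a lookup table to sanity-check a configuration before running it. The sketch below is only an illustration: `MODEL_OPTIONS` and `check_model_settings` are hypothetical names, and whether the program itself rejects out-of-range values is not stated in this README.

```python
# Allowed values transcribed from the model list (all values are strings).
MODEL_OPTIONS = {
    "DF2K": {"scale": {"2", "4"}},
    "DF2K-JPEG": {"scale": {"2", "4"}},
    "realesrgan": {"scale": {"2", "4"}},
    "realesrnet": {"scale": {"2", "4"}},
    "realesrgan-anime": {"scale": {"2", "3", "4"}},
    "realcugan": {
        "scale": {"1", "2", "3", "4"},
        "denoise": {"-1", "0", "1", "2", "3"},
        "syncgap": {"0", "1", "2", "3"},
    },
    "waifu2x-anime": {
        "scale": {"1", "2", "4", "8", "16", "32"},
        "denoise": {"-1", "0", "1", "2", "3"},
    },
    "waifu2x-photo": {
        "scale": {"1", "2", "4", "8", "16", "32"},
        "denoise": {"-1", "0", "1", "2", "3"},
    },
}

# Documented defaults of the optional model settings; "scale" is required.
OPTIONAL_DEFAULTS = {"denoise": "0", "syncgap": "0"}


def check_model_settings(config):
    """Return a list of problems found in the model-related keys."""
    model = config.get("model")
    allowed_by_key = MODEL_OPTIONS.get(model)
    if allowed_by_key is None:
        return [f"unknown model: {model!r}"]
    problems = []
    # Keys a model does not support are ignored, as the list above describes.
    for key, allowed in allowed_by_key.items():
        value = config.get(key, OPTIONAL_DEFAULTS.get(key))
        if value not in allowed:
            problems.append(f"{key}={value!r} is not valid for {model}")
    return problems
```

For example, `check_model_settings({"model": "realesrgan", "scale": "3"})` reports one problem, because the second-generation realesr models only list scales 2 and 4.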

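Notes:

- The AI models upscale images on the GPU; processing speed depends on GPU compute power and image resolution.
- The models differ somewhat from one another; see the testimagine.7z archive for details.
- The realesrgan-anime model is suited to upscaling anime images.
- The realesrnet and waifu2x-photo models are suited to upscaling real photos.
- If you have no discrete GPU, or only a weak one, the realesrgan-anime model is recommended. It is the fastest model.
- The realesrgan-anime model is the RAM version (it uses system memory), so it can upscale large images even without a discrete GPU. Discrete GPU performance is not affected.
- Every model other than realesrgan-anime is a non-RAM version; having less than 8 GB of VRAM and RAM may cause crashes. Crashes typically occur with images above roughly 30 MB or 10^8 (one hundred million) pixels.
- GPU test 1: RX588, ARCAEA-8K-HKT.png, 16 MB, 7680*4320
  - realesrgan model: 30 min
  - realesrgan-anime model: 14 min

  This data comes from Bilibili user ZXOJ-LJX-安然x. (Actually, a classmate ran the test for me.)
- A note on VRAM usage:
  - With a discrete GPU, the GPU load does not show up in Task Manager; the NA software shows it as fully loaded.
  - With integrated graphics, the load is visible directly in Task Manager.
- Do not use the anime models to upscale real photos! You will get an unexpected surprise.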

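The selector determines what the program finds when it traverses folders and searches for input files. It is a string made of Windows wildcards and ordinary characters, and it is used the same way as the search box in Windows Explorer (explorer.exe).

Put simply, "?" (question mark) stands for any single character in a file path, and "*" (asterisk) stands for any run of characters in the path, including none. For example:

- "*": all files / folders
- "*.jpg": all files in .jpg format
- "a*.jpg": all .jpg files whose names start with 'a'

For more details, see Microsoft's official documentation on wildcards (PowerShell | Microsoft Learn).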
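To get a feel for how such patterns behave, the examples above can be tried with Python's standard `fnmatch` module. This is only an approximation of Windows wildcard matching, not the program's own matcher: `fnmatch` additionally treats `[...]` as a character class, and `selector_matches` is a hypothetical helper name.

```python
from fnmatch import fnmatchcase


def selector_matches(selector, file_name):
    """Approximate a Windows wildcard match, ignoring letter case."""
    # Windows file names are case-insensitive, so compare lowercase forms.
    return fnmatchcase(file_name.lower(), selector.lower())


names = ["a1.jpg", "b2.jpg", "a3.png", "A4.JPG"]
for selector in ("*", "*.jpg", "a*.jpg", "a?.png"):
    matched = [name for name in names if selector_matches(selector, name)]
    print(selector, matched)
```

Running it shows that "a*.jpg" keeps both `a1.jpg` and `A4.JPG`, while "a?.png" keeps only names with exactly one character between the 'a' and the extension.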

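The three parameters tree_restore, subdir_find, and emptydir_find work in similar ways, so only tree_restore is described in detail here.

tree_restore is simple: when it is true, the program automatically creates subfolders inside the output folder and puts each file back into its original subfolder. For example:

- Suppose the input folder has the following structure:

      Input/
      ├── dir1/
      │   ├── A.jpg
      │   └── B.png
      ├── dir2/
      │   ├── dir3/
      │   │   └── C.jpeg
      │   └── D.txt
      └── E.webp

- Then, with tree_restore = true and only images being processed, the output folder looks like this:

      Input/
      ├── dir1/
      │   ├── A.jpg
      │   └── B.png
      ├── dir2/
      │   └── dir3/
      │       └── C.jpeg
      └── E.webp

- Conversely, with tree_restore = false and everything else unchanged, the output folder looks like this:

      Input/
      ├── A.jpg
      ├── B.png
      ├── C.jpeg
      └── E.webp

As you can see, this parameter directly shapes the structure of the output folder. The other two parameters mean what their names say and are not explained further here.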
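The difference between the two layouts comes down to whether a file's path relative to the input folder is kept. A minimal sketch of that mapping follows; `output_location` is a hypothetical helper and the drive paths are made up for illustration, so this is not the program's actual code.

```python
from pathlib import PureWindowsPath


def output_location(input_root, output_root, source_file, tree_restore):
    """Compute where a processed file would land in the output folder."""
    source = PureWindowsPath(source_file)
    if tree_restore:
        # Keep the path relative to the input folder (dir2\dir3\C.jpeg).
        relative = source.relative_to(PureWindowsPath(input_root))
        return PureWindowsPath(output_root) / relative
    # Flatten: only the file name survives.
    return PureWindowsPath(output_root) / source.name


source = r"D:\Input\dir2\dir3\C.jpeg"
print(output_location(r"D:\Input", r"D:\Output", source, True))
print(output_location(r"D:\Input", r"D:\Output", source, False))
```

One consequence of flattening worth keeping in mind: two input files with the same name in different subfolders would map to the same output path.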

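Broadly, the program's errors fall into four kinds:

- A 'standard error' occurs when the output folder and the input folder contain a file with exactly the same name.
- A 'configuration file error' occurs when the configuration file does not exist, its JSON is malformed, or a required setting is missing.
- A 'runtime error' occurs when a model crashes, the program hangs, or files / folders are written incorrectly.
- An 'other feature error' occurs when a plugin cannot be called, a plugin fails, or a file path is represented incorrectly.

The program has a built-in error handling module to avoid errors as far as possible. Because the second through fourth kinds are either extremely rare or caused by user mistakes or incomplete code, they cannot be predicted, so the program only provides a solution and configuration options for standard errors.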

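error_path is a folder path. The folder stores the error files found in the output folder, so it works like a backup folder.

It usually sits under the input or output folder, and its name is up to you. For example:

    "input_path": "D:\\input"
    "output_path": "D:\\output"
    "error_path": "D:\\output\\ERROR" // under the output folder

Of course, you can also set the error folder to the output folder itself. In that case the error files in the output folder are overwritten directly.

When either file_error or dir_error is true, error_path must not be empty! Otherwise, proceed at your own risk!

(This is untested, so I do not know what would happen. Possibly file leakage?)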
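Since the behavior with an empty error_path is untested, it may be worth checking a configuration before running it. The sketch below is one hedged way to do that; `check_error_settings` and `ERROR_DEFAULTS` are hypothetical names, and the defaults are the ones listed earlier for the optional settings. Note that those defaults (file_error true, error_path empty) would themselves fail this check, so in practice error_path has to be set explicitly unless both flags are turned off.

```python
# Documented defaults of the three error-related optional settings.
ERROR_DEFAULTS = {"error_path": "", "file_error": True, "dir_error": False}


def check_error_settings(config):
    """Fill in defaults and enforce the non-empty error_path rule."""
    settings = dict(ERROR_DEFAULTS)
    for key in ERROR_DEFAULTS:
        if key in config:
            settings[key] = config[key]
    backup_enabled = settings["file_error"] or settings["dir_error"]
    if backup_enabled and not settings["error_path"]:
        raise ValueError(
            "error_path must not be empty when file_error or dir_error is true"
        )
    return settings
```

For instance, `check_error_settings({"error_path": "D:\\output\\ERROR"})` passes and returns the effective settings, while `check_error_settings({})` raises `ValueError`.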

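file_error: whether to back up error files. Setting it to true is recommended.

dir_error: whether to back up error folders. The default is false.

In its final effect, dir_error completely overrides file_error. In other words,

    "file_error": false,
    "dir_error": true

is exactly equivalent to

    "file_error": true,
    "dir_error": true

lassxToolkit added regular expression support in Version 4.0.0.b Beta. It behaves like ordinary regular expressions and acts as a blacklist, so you can use it to filter out files that should not be processed.

For a detailed introduction to regular expressions, see the Runoob tutorial: 正则表达式教程 | 菜鸟教程

To check the syntax of a regular expression, you can use this site (in English): Regex101

The format required inside the match key is as follows: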

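    "match": [ // "match" is the key name of the regex matching module
        {
            "name": "RegexRule1", // the "name" key filters file names
            "path": "RegexRule2", // the "path" key filters paths (excluding the file name)
            "name_mode": "MatchMode1",
            "path_mode": "MatchMode2"
        }, // RegexRule1: the first regex configuration
        {...}, // RegexRule2: mind the comma outside each Object!
        .
        .
        .
    ]

As shown above, the match key holds any number of Object-type children, and each child accepts four key-value pairs: "name", "path", "name_mode", and "path_mode".

MatchMode (the matching mode) currently has two values, "search" and "match" (full match).

- "match" mode requires the whole string (file name / path) to match the regular expression.
- "search" mode counts as a match as soon as some substring of the string matches the regular expression.

Inside each child the conditions are combined with AND logic; across children they are combined with OR logic.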
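The AND-within, OR-across logic can be sketched in a few lines of Python. This is an illustration under assumptions, not the program's implementation: it uses Python's `re` module (whose regex dialect may differ from the one the program uses), it assumes a key that is absent from a rule is simply not checked, it assumes each "name" / "path" key comes with its mode key, and `is_blacklisted` is a hypothetical name.

```python
import re


def _condition_holds(pattern, mode, text):
    """Apply one regex in either full-match or substring-search mode."""
    if mode == "match":
        return re.fullmatch(pattern, text) is not None
    if mode == "search":
        return re.search(pattern, text) is not None
    raise ValueError(f"unknown match mode: {mode!r}")


def is_blacklisted(rules, file_name, folder_path):
    """Return True when any rule (OR) has all its conditions met (AND)."""
    for rule in rules or []:
        checks = []
        if "name" in rule:
            checks.append(
                _condition_holds(rule["name"], rule["name_mode"], file_name)
            )
        if "path" in rule:
            checks.append(
                _condition_holds(rule["path"], rule["path_mode"], folder_path)
            )
        # Assumption: a rule with no conditions blacklists nothing.
        if checks and all(checks):
            return True
    return False
```

With the rule `{"name": "D", "name_mode": "search"}`, a file named `D.txt` is blacklisted and `A.jpg` is not; switching the mode to "match" would only blacklist a file whose entire name is `D`.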

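lassxToolkit also introduced plugin support in Version 4.0.0.b Beta.

All plugins you write yourself must be placed in the addons folder under the project root!

Any runnable program can be called by the main program as a plugin.

The "addons" (plugin) module is divided into three key names:

- "first": this group of plugins is called when the program starts, before any image has been processed.
  - The plugin must accept one argument passed on the command line.
  - The argument is a JSON-format string containing everything in the configuration file.
- "second": this group of plugins is called once for every file / folder processed.
  - The plugin must accept two arguments passed on the command line.
  - The first argument has the same form and meaning as for "first" plugins.
  - The second argument is the path of the file / folder that has just been processed.
- "third": this group of plugins is called after all images have been processed.
  - The plugin must accept two arguments passed on the command line.
  - The first argument has the same form and meaning as for "first" plugins.
  - The second argument is a JSON-format string containing the paths of all files / folders handled in this run.

Each of the three key names takes an Array as its value.

The plugin module is formatted as follows:

    "addons": { // "addons" is the key name of the plugin module
        "first": [
            "a.exe", "b.exe" ... // plugin list of the first group; just fill in the file names
        ],
        "second": [ ... ], // plugin list of the second group
        "third": [ ... ] // plugin list of the third group
    }

The software ships with a "print.exe" plugin, which prints the strings passed to it on the command line. You can use it to test and understand how plugins are called.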
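For anyone writing their own plugin, the argument handling described above might look like the following Python sketch. It is an assumption-laden illustration: `parse_plugin_arguments` and `main` are hypothetical names, the exact quoting of the JSON string on the command line is not documented here, and a Python script would still have to be packaged or wrapped as a runnable program before it could go in the addons folder.

```python
import json


def parse_plugin_arguments(argv):
    """Split a plugin's command line into (config, second_argument)."""
    if len(argv) < 2:
        raise ValueError("expected the configuration JSON as the first argument")
    # First argument: the whole configuration file as a JSON string.
    config = json.loads(argv[1])
    # Second argument (second/third groups only): a path or a JSON string.
    extra = argv[2] if len(argv) > 2 else None
    return config, extra


def main(argv):
    """Print what the plugin received, similar in spirit to print.exe."""
    config, extra = parse_plugin_arguments(argv)
    print("model:", config.get("model"))
    if extra is not None:
        print("second argument:", extra)
```

A real plugin script would end by calling `main(sys.argv)` after `import sys`; a "third" plugin could additionally pass the second argument through `json.loads`, since it is described as a JSON-format string.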

Test configuration file:

    {
        "input_path": "D:\\input",
        "output_path": "D:\\output",
        "error_path": "D:\\error", // the error folder can also live elsewhere
        "selector": "*", // process all files
        "model": "realesrgan-anime", // anime model, for anime images
        "scale": "4", // upscaling factor
        "denoise": "0", // denoise level
        "syncgap": "0", // tiling level
        "tree_restore": true, // restore the directory structure
        "subdir_find": true, // process subfolders
        "emptydir_find": false, // do not process empty folders
        "file_error": true, // handle standard errors for files
        "dir_error": false, // do not handle standard errors for folders
        "match": [
            // no file whose name contains the letter D will be processed
            { "name": "D", "name_mode": "search" }
        ],
        "addons": {
            "first": [],
            "second": [],
            "third": [
                "print.exe" // call print.exe as a third-group plugin to inspect the arguments passed
            ]
        }
    }

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for lassxToolkit

Similar Open Source Tools

lassxToolkit

lassxToolkit is a versatile tool designed for file processing tasks. It allows users to manipulate files and folders based on specified configurations in a strict .json format. The tool supports various AI models for tasks such as image upscaling and denoising. Users can customize settings like input/output paths, error handling, file selection, and plugin integration. lassxToolkit provides detailed instructions on configuration options, default values, and model selection. It also offers features like tree restoration, recursive processing, and regex-based file filtering. The tool is suitable for users looking to automate file processing tasks with AI capabilities.

openai-forward

OpenAI-Forward is an efficient forwarding service implemented for large language models. Its core features include user request rate control, token rate limiting, intelligent prediction caching, log management, and API key management, aiming to provide efficient and convenient model forwarding services. Whether proxying local language models or cloud-based language models like LocalAI or OpenAI, OpenAI-Forward makes it easy. Thanks to support from libraries like uvicorn, aiohttp, and asyncio, OpenAI-Forward achieves excellent asynchronous performance.

MahoShojo-Generator

MahoShojo-Generator is a web-based AI structured generation tool that allows players to create personalized and evolving magical girls (or quirky characters) and related roles. It offers exciting cyber battles, storytelling activities, and even a ranking feature. The project also includes AI multi-channel polling, user system, public data card sharing, and sensitive word detection. It supports various functionalities such as character generation, arena system, growth and social interaction, cloud and sharing, and other features like scenario generation, tavern ecosystem linkage, and content safety measures.

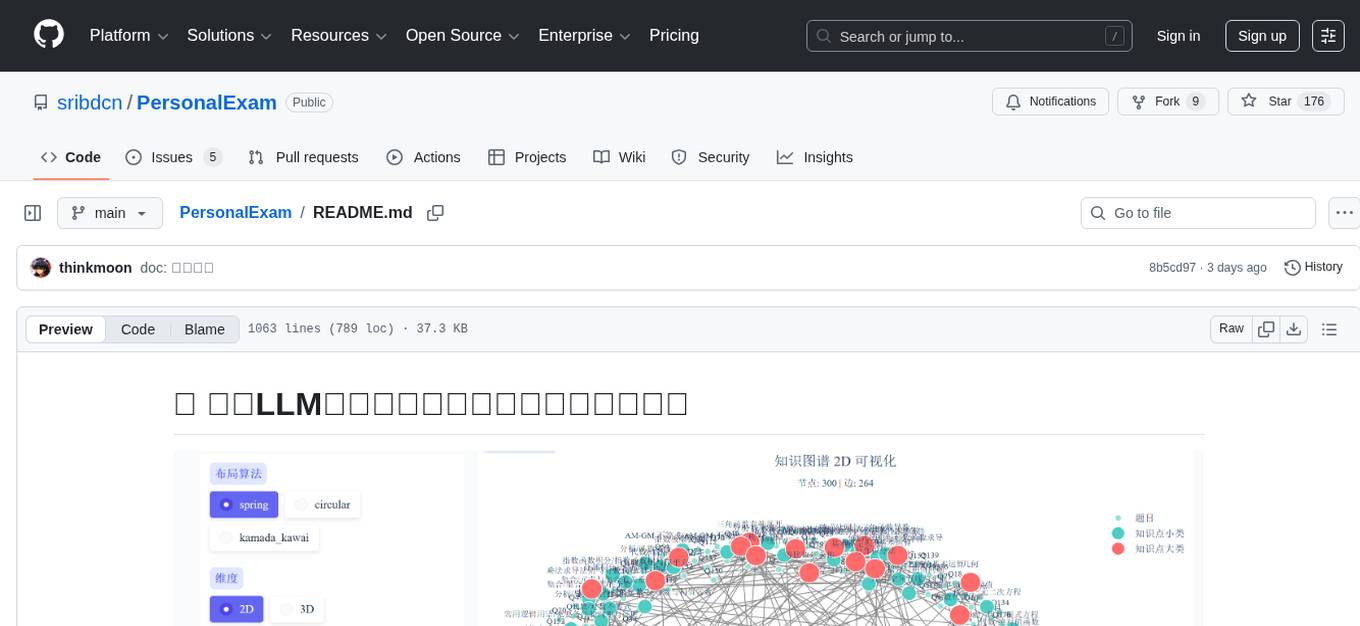

PersonalExam

PersonalExam is a personalized question generation system based on LLM and knowledge graph collaboration. It utilizes the BKT algorithm, RAG engine, and OpenPangu model to achieve personalized intelligent question generation and recommendation. The system features adaptive question recommendation, fine-grained knowledge tracking, AI answer evaluation, student profiling, visual reports, interactive knowledge graph, user management, and system monitoring.

NovelForge

NovelForge is an AI-assisted writing tool with the potential for creating long-form content of millions of words. It offers a solution that combines world-building, structured content generation, and consistency maintenance. The tool is built around four core concepts: modular 'cards', customizable 'dynamic output models', flexible 'context injection', and consistency assurance through a 'knowledge graph'. It provides a highly structured and configurable writing environment, inspired by the Snowflake Method, allowing users to create and organize their content in a tree-like structure. NovelForge is highly customizable and extensible, allowing users to tailor their writing workflow to their specific needs.

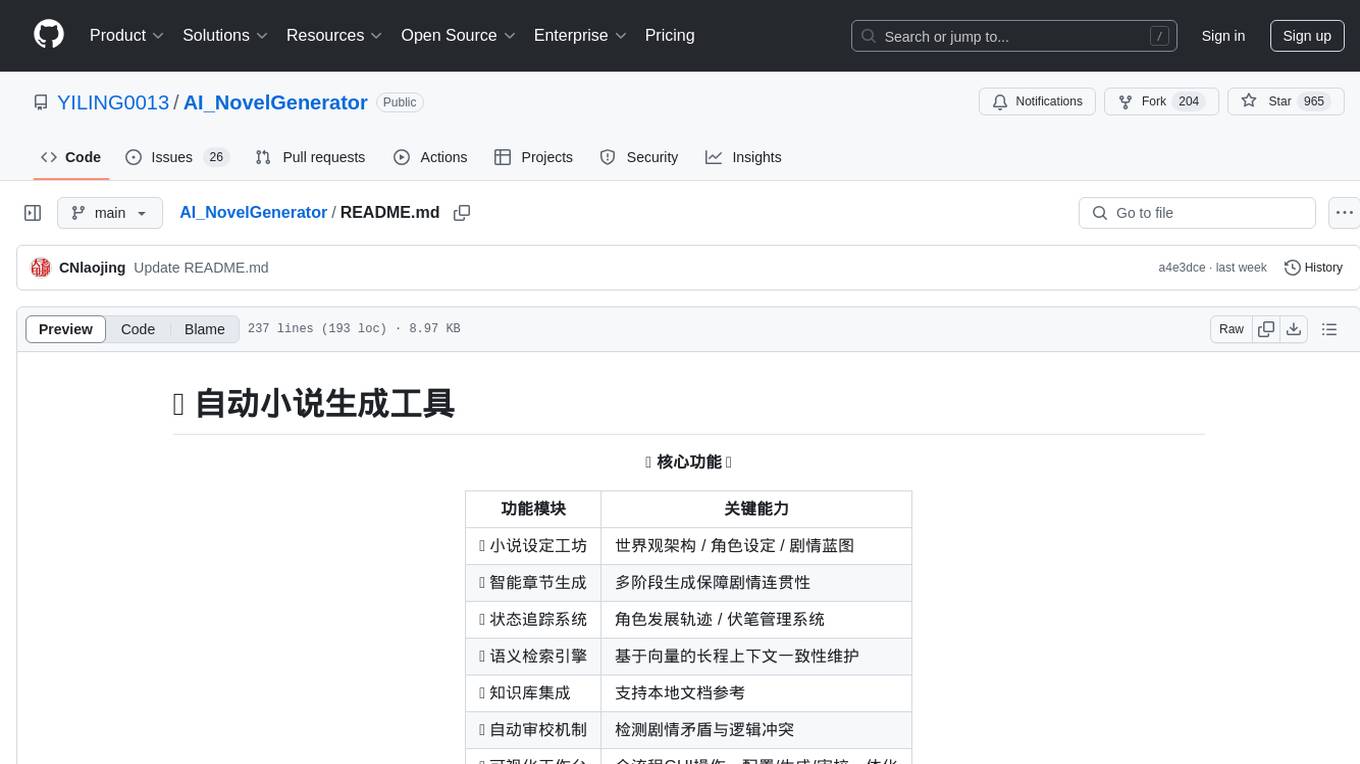

AI_NovelGenerator

AI_NovelGenerator is a versatile novel generation tool based on large language models. It features a novel setting workshop for world-building, character development, and plot blueprinting, intelligent chapter generation for coherent storytelling, a status tracking system for character arcs and foreshadowing management, a semantic retrieval engine for maintaining long-range context consistency, integration with knowledge bases for local document references, an automatic proofreading mechanism for detecting plot contradictions and logic conflicts, and a visual workspace for GUI operations encompassing configuration, generation, and proofreading. The tool aims to assist users in efficiently creating logically rigorous and thematically consistent long-form stories.

GalTransl

GalTransl is an automated translation tool for Galgames that combines minor innovations in several basic functions with deep utilization of GPT prompt engineering. It is used to create embedded translation patches. The core of GalTransl is a set of automated translation scripts that solve most known issues when using ChatGPT for Galgame translation and improve overall translation quality. It also integrates with other projects to streamline the patch creation process, reducing the learning curve to some extent. Interested users can more easily build machine-translated patches of a certain quality through this project and may try to efficiently build higher-quality localization patches based on this framework.

InterPilot

InterPilot is an AI-based assistant tool that captures audio from Windows input/output devices, transcribes it into text, and then calls the Large Language Model (LLM) API to provide answers. The project includes recording, transcription, and AI response modules, aiming to provide support for personal legitimate learning, work, and research. It may assist in scenarios like interviews, meetings, and learning, but it is strictly for learning and communication purposes only. The tool can hide its interface using third-party tools to prevent screen recording or screen sharing, but it does not have this feature built-in. Users bear the risk of using third-party tools independently.

AivisSpeech-Engine

AivisSpeech-Engine is a powerful open-source tool for speech recognition and synthesis. It provides state-of-the-art algorithms for converting speech to text and text to speech. The tool is designed to be user-friendly and customizable, allowing developers to easily integrate speech capabilities into their applications. With AivisSpeech-Engine, users can transcribe audio recordings, create voice-controlled interfaces, and generate natural-sounding speech output. Whether you are building a virtual assistant, developing a speech-to-text application, or experimenting with voice technology, AivisSpeech-Engine offers a comprehensive solution for all your speech processing needs.

hugging-llm

HuggingLLM is a project that aims to introduce ChatGPT to a wider audience, particularly those interested in using the technology to create new products or applications. The project focuses on providing practical guidance on how to use ChatGPT-related APIs to create new features and applications. It also includes detailed background information and system design introductions for relevant tasks, as well as example code and implementation processes. The project is designed for individuals with some programming experience who are interested in using ChatGPT for practical applications, and it encourages users to experiment and create their own applications and demos.

uDesktopMascot

uDesktopMascot is an open-source project for a desktop mascot application with a theme of 'freedom of creation'. It allows users to load and display VRM or GLB/FBX model files on the desktop, customize GUI colors and background images, and access various features through a menu screen. The application supports Windows 10/11 and macOS platforms.

Nano

Nano is a Transformer-based autoregressive language model for personal enjoyment, research, modification, and alchemy. It aims to implement a specific and lightweight Transformer language model based on PyTorch, without relying on Hugging Face. Nano provides pre-training and supervised fine-tuning processes for models with 56M and 168M parameters, along with LoRA plugins. It supports inference on various computing devices and explores the potential of Transformer models in various non-NLP tasks. The repository also includes instructions for experiencing inference effects, installing dependencies, downloading and preprocessing data, pre-training, supervised fine-tuning, model conversion, and various other experiments.

prompt-optimizer

Prompt Optimizer is a powerful AI prompt optimization tool that helps you write better AI prompts, improving AI output quality. It supports both web application and Chrome extension usage. The tool features intelligent optimization for prompt words, real-time testing to compare before and after optimization, integration with multiple mainstream AI models, client-side processing for security, encrypted local storage for data privacy, responsive design for user experience, and more.

siteproxy

Siteproxy 2.0 is a web proxy tool that utilizes service worker for enhanced stability and increased website coverage. It replaces express with hono for a 4x speed boost and supports deployment on Cloudflare worker. It enables reverse proxying, allowing access to YouTube/Google without VPN, and supports login for GitHub and Telegram web. The tool also features DuckDuckGo AI Chat with free access to GPT3.5 and Claude3. It offers a pure web-based online proxy with no client configuration required, facilitating reverse proxying to the internet.

rime_wanxiang

Rime Wanxiang is a pinyin input method based on deep optimized lexicon and language model. It features a lexicon with tones, AI and large corpus filtering, and frequency addition to provide more accurate sentence output. The tool supports various input methods and customization options, aiming to enhance user experience through lexicon and transcription. Users can also refresh the lexicon with different types of auxiliary codes using the LMDG toolkit package. Wanxiang offers core features like tone-marked pinyin annotations, phrase composition, and word frequency, with customizable functionalities. The tool is designed to provide a seamless input experience based on lexicon and transcription.

CodeAsk

CodeAsk is a code analysis tool designed to tackle complex issues such as code that seems to self-replicate, cryptic comments left by predecessors, messy and unclear code, and long-lasting temporary solutions. It offers intelligent code organization and analysis, security vulnerability detection, code quality assessment, and other interesting prompts to help users understand and work with legacy code more efficiently. The tool aims to translate 'legacy code mountains' into understandable language, creating an illusion of comprehension and facilitating knowledge transfer to new team members.

For similar tasks

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

semantic-kernel

Semantic Kernel is an SDK that integrates Large Language Models (LLMs) like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. Semantic Kernel achieves this by allowing you to define plugins that can be chained together in just a few lines of code. What makes Semantic Kernel _special_ , however, is its ability to _automatically_ orchestrate plugins with AI. With Semantic Kernel planners, you can ask an LLM to generate a plan that achieves a user's unique goal. Afterwards, Semantic Kernel will execute the plan for the user.

floneum

Floneum is a graph editor that makes it easy to develop your own AI workflows. It uses large language models (LLMs) to run AI models locally, without any external dependencies or even a GPU. This makes it easy to use LLMs with your own data, without worrying about privacy. Floneum also has a plugin system that allows you to improve the performance of LLMs and make them work better for your specific use case. Plugins can be used in any language that supports web assembly, and they can control the output of LLMs with a process similar to JSONformer or guidance.

mindsdb

MindsDB is a platform for customizing AI from enterprise data. You can create, serve, and fine-tune models in real-time from your database, vector store, and application data. MindsDB "enhances" SQL syntax with AI capabilities to make it accessible for developers worldwide. With MindsDB’s nearly 200 integrations, any developer can create AI customized for their purpose, faster and more securely. Their AI systems will constantly improve themselves — using companies’ own data, in real-time.

aiscript

AiScript is a lightweight scripting language that runs on JavaScript. It supports arrays, objects, and functions as first-class citizens, and is easy to write without the need for semicolons or commas. AiScript runs in a secure sandbox environment, preventing infinite loops from freezing the host. It also allows for easy provision of variables and functions from the host.

activepieces

Activepieces is an open source replacement for Zapier, designed to be extensible through a type-safe pieces framework written in Typescript. It features a user-friendly Workflow Builder with support for Branches, Loops, and Drag and Drop. Activepieces integrates with Google Sheets, OpenAI, Discord, and RSS, along with 80+ other integrations. The list of supported integrations continues to grow rapidly, thanks to valuable contributions from the community. Activepieces is an open ecosystem; all piece source code is available in the repository, and they are versioned and published directly to npmjs.com upon contributions. If you cannot find a specific piece on the pieces roadmap, please submit a request by visiting the following link: Request Piece Alternatively, if you are a developer, you can quickly build your own piece using our TypeScript framework. For guidance, please refer to the following guide: Contributor's Guide

superagent-js

Superagent is an open source framework that enables any developer to integrate production ready AI Assistants into any application in a matter of minutes.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.