midjourney-proxy

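A proxy for Midjourney's Discord channel that exposes AI drawing through an API. It supports image and video face swapping and is run as a public-benefit project with a free drawing API.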

Stars: 173

Midjourney Proxy is an open-source project that acts as a proxy for the Midjourney Discord channel, allowing API-based AI drawing calls for charitable purposes. It provides drawing API for free use, ensuring full functionality, security, and minimal memory usage. The project supports various commands and actions related to Imagine, Blend, Describe, and more. It also offers real-time progress tracking, Chinese prompt translation, sensitive word pre-detection, user-token connection via wss for error information retrieval, and various account configuration options. Additionally, it includes features like image zooming, seed value retrieval, account-specific speed mode settings, multiple account configurations, and more. The project aims to support mainstream drawing clients and API calls, with features like task hierarchy, Remix mode, image saving, and CDN acceleration, among others.

README:


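Proxies Midjourney's Discord channel so that AI drawing can be called as an API, with one-click face swapping for images and videos. This is a public-benefit project, and its drawing API is free to use.

Fully open source, with no partially open or partially closed components. PRs are welcome.

Billed as the most feature-complete, most secure, and lowest-memory (100 MB+) Midjourney Proxy API.

If you like the project, please give it a Star. Thank you very much!

Many thanks to the sponsors and community members for their help and support!

Tip: on Windows, just download and run it; see the instructions below for details.

The documentation is still incomplete, so you may run into problems with usage or deployment. You are welcome to join the discussion group to talk through and resolve them.

Midjourney public-benefit group (QQ group: 565908696)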

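- [x] Supports the Imagine command and its related actions [V1/V2.../U1/U2.../R]
- [x] Imagine accepts base64 images as reference images (image prompts)
- [x] Supports the Blend (image mixing), Describe (image-to-text), and Shorten (prompt analysis) commands
- [x] Supports real-time task progress
- [x] Supports translating Chinese prompts; requires configuring Baidu Translate or GPT translation
- [x] Pre-checks prompts for sensitive words; the word list can be overridden and adjusted
- [x] Connects to wss with a user token to obtain error messages and full functionality
- [x] Supports the Shorten (prompt analysis) command
- [x] Supports panning: Pan ⬅️➡⬆️⬇️
- [x] Supports region repainting: Vary (Region) 🖌
- [x] Supports all associated button actions
- [x] Supports image zoom and custom zoom: Zoom 🔍
- [x] Supports retrieving an image's seed value
- [x] Supports a per-account generation speed mode: RELAX | FAST | TURBO
- [x] Supports multiple accounts, each with its own task queue, and the account selection modes BestWaitIdle | Random | Weight | Polling
- [x] Persistent, dynamically maintained account pool
- [x] Supports retrieving account /info and /settings information
- [x] Account settings configuration
- [x] Supports both the niji・journey Bot and the Midjourney Bot
- [x] zlib-stream compressed transport https://discord.com/developers/docs/topics/gateway
- [x] Built-in MJ admin console pages with multi-language support https://github.com/trueai-org/midjourney-proxy-webui
- [x] Supports creating, deleting, updating, and querying MJ accounts
- [x] Supports detailed MJ account queries and account synchronization
- [x] Supports concurrency and queue settings for MJ accounts
- [x] Supports MJ account settings configuration
- [x] Supports MJ task queries
- [x] Provides a full-featured drawing test page
- [x] Compatible with the mainstream drawing clients and API calls on the market
- [x] Tasks include parent task information
- [x] 🎛️ Remix mode and automatic Remix submission
- [x] Built-in saving of images to local storage and built-in CDN acceleration
- [x] Automatically simulates reading unread messages when too many accumulate during drawing
- [x] Supports the PicReader and Picread commands for regenerating images from image-to-text results, including batch regeneration (no fast mode required)
- [x] Supports BOOKMARK and similar commands
- [x] Supports drawing on a specified instance, filtering accounts by speed mode, filtering accounts by remix mode, and so on; see the `accountFilter` field in Swagger
- [x] Reverse-generates system task information from a job id or an image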

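- [x] Supports configuring account order, concurrency, queue size, maximum queue size, task execution interval, and more
- [x] Supports selecting the mode through the client path. Default address example: https://{BASE_URL}/mj/submit/imagine; /mj-turbo/mj is turbo mode, /mj-relax/mj is relax mode, /mj-fast/mj is fast mode, and /mj leaves the mode unspecified
- [x] CloudFlare manual human verification: the account is locked automatically when verification is triggered, and can be verified directly in the GUI or through an email notification
- [x] CloudFlare automatic human verification: configure the verification server address (the automatic verifier only supports Windows deployment)
- [x] Supports configuring working hours. Drawing around the clock for 24 hours may trigger a warning, so a rest of 8~10 hours is recommended. Example: `09:10-23:55, 13:00-08:10`
- [x] Built-in IP rate limiting, IP range rate limiting, blacklist, whitelist, and automatic blacklisting
- [x] Supports a daily drawing cap; once it is exceeded no new drawing tasks are started, but variations, rerolls, and similar operations still work
- [x] Registration and guest access can be enabled
- [x] Visual configuration
- [x] Swagger documentation can be enabled independently
- [x] The bot Token is optional; the service also works without a bot
- [x] Improved command and status progress display
- [x] Idle-time configuration: accounts gain a relax ("salted fish") mode that avoids high-frequency work (no new drawings can be created in this mode, other commands still run, and multiple time ranges can be configured)
- [x] Vertical account categories: accounts support keyword configuration so that each account produces only one kind of work, for example landscapes only or portraits only
- [x] Allows drawing in shared channels or sub-channels. Even if an account is banned, earlier drawings can be continued by making the banned account's channel a sub-channel of a healthy account. Save the permanent invite link and the sub-channel link; batch editing is supported.
- [x] Multiple database support: local database, MongoDB, and others. If you have more than 100,000 task records, MongoDB is recommended for task storage (1,000,000 records are kept by default). Automatic data migration is supported.
- [x] Supports one-click migration from `mjplus` or other services to this service, including accounts, tasks, and more
- [x] Built-in banned-word management with multiple word groups
- [x] Non-official links in a prompt are converted to official links automatically; domestic (China) or custom reference links are allowed, to avoid triggering verification and similar problems.
- [x] When fast-mode time runs out, accounts can switch to relax mode automatically (optional). Fast mode is restored automatically once fast hours are purchased or the subscription renews.
- [x] Supports storing images in Aliyun OSS, with custom CDN, custom styles, and thumbnails (OSS is recommended: it is separate from the origin server and loads faster)
- [x] Supports regenerating images from Shorten prompt analysis
- [x] Supports image face swapping. Comply with the relevant laws and regulations; illegal use is prohibited.
- [x] Supports video face swapping. Comply with the relevant laws and regulations; illegal use is prohibited.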
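The working-hours example in the list above (`09:10-23:55, 13:00-08:10`) includes a window that crosses midnight. The following is a minimal sketch of how such a specification could be evaluated. It is not the project's implementation: the function names `parse_windows` and `is_working` are hypothetical, and treating both endpoints as inclusive and `end < start` as an overnight window is an assumption.

```python
from __future__ import annotations

from datetime import time


def parse_windows(spec: str) -> list[tuple[time, time]]:
    """Parse a spec such as '09:10-23:55, 13:00-08:10' into (start, end) pairs."""
    windows = []
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        start_text, end_text = part.split("-")
        windows.append(
            (time.fromisoformat(start_text.strip()), time.fromisoformat(end_text.strip()))
        )
    return windows


def is_working(now: time, windows: list[tuple[time, time]]) -> bool:
    """Return True if `now` is inside any window (assumed inclusive at both ends)."""
    for start, end in windows:
        if start <= end:
            # Same-day window, e.g. 09:10-23:55.
            if start <= now <= end:
                return True
        elif now >= start or now <= end:
            # Overnight window, e.g. 13:00-08:10 wraps past midnight.
            return True
    return False


if __name__ == "__main__":
    windows = parse_windows("09:10-23:55, 13:00-08:10")
    print(is_working(time(2, 0), windows))
```

Times must be zero-padded (`09:10`, not `9:10`) for `time.fromisoformat` to accept them on older Python versions.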

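The public-benefit API runs in relax (slow) mode and is free to call. The account pool is provided by sponsors, so please use it responsibly.

- Admin console: https://ai.trueai.org
- Username and password: none
- Public-benefit API: https://ai.trueai.org/mj
- API documentation: https://ai.trueai.org/swagger
- API key: none
- CloudFlare automatic verification server address: http://47.76.110.222:8081
- CloudFlare automatic verification server documentation: http://47.76.110.222:8081/swagger

CloudFlare automatic verification configuration example (free automatic human verification)

    "CaptchaServer": "http://47.76.110.222:8081", // Automatic verifier address
    "CaptchaNotifyHook": "https://ai.trueai.org" // Callback notified when verification completes; defaults to your own domain

- ChatGPT-Midjourney: https://github.com/Licoy/ChatGPT-Midjourney
  - Get your own ChatGPT + StabilityAI + Midjourney web service in one click -> https://chat-gpt-midjourney-96vk.vercel.app/#/mj
  - Open the site -> Settings -> Custom API -> Model (Midjourney) -> API address -> https://ai.trueai.org/mj
- ChatGPT Web Midjourney Proxy: https://github.com/Dooy/chatgpt-web-midjourney-proxy
  - Open the site https://vercel.ddaiai.com -> Settings -> MJ drawing API address -> https://ai.trueai.org
- GoAmzAI: https://github.com/Licoy/GoAmzAI
  - Open the admin console -> Drawing management -> Add -> MJ drawing API address -> https://ai.trueai.org/mj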
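The feature list describes selecting the speed mode through the client path (`/mj`, `/mj-relax/mj`, `/mj-fast/mj`, `/mj-turbo/mj`). The sketch below shows one way a client might build the Imagine submit URL for each mode. It assumes that the mode prefix simply replaces the leading `/mj` segment of `https://{BASE_URL}/mj/submit/imagine`; the helper name `imagine_url` is hypothetical, and the request body and authentication are out of scope here (see the Swagger documentation).

```python
from __future__ import annotations

# Path prefixes taken from the feature list; None means "mode not specified".
MODE_PREFIXES = {
    None: "/mj",
    "relax": "/mj-relax/mj",
    "fast": "/mj-fast/mj",
    "turbo": "/mj-turbo/mj",
}


def imagine_url(base_url: str, mode: str | None = None) -> str:
    """Build the Imagine submit URL for a given speed mode (assumed layout)."""
    if mode not in MODE_PREFIXES:
        raise ValueError(f"unknown mode: {mode!r}")
    return f"{base_url.rstrip('/')}{MODE_PREFIXES[mode]}/submit/imagine"


if __name__ == "__main__":
    for mode in (None, "relax", "fast", "turbo"):
        print(imagine_url("https://ai.trueai.org", mode))
```

Keeping the prefixes in one table makes it easy to point an existing client at a different mode by changing only its base path.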

Docker version

Note: double-check that the mapped files and paths are correct ⚠⚠

    # Automatic install and start
    # The one-click upgrade script is recommended
    # 1. First download (after downloading, you can edit the script to customize paths, port, memory, and so on; the default port is 8086)
    wget -O docker-upgrade.sh https://raw.githubusercontent.com/trueai-org/midjourney-proxy/main/scripts/docker-upgrade.sh && bash docker-upgrade.sh

    # 2. Upgrade (for later upgrades, just run this script again)
    sh docker-upgrade.sh

    # Manual install and start
    # Aliyun image (recommended inside China)
    docker pull registry.cn-guangzhou.aliyuncs.com/trueai-org/midjourney-proxy

    # Startup example for the public-benefit demo site
    # 1. Download and rename the configuration file (sample configuration)
    # Tip: version 3.x does not need a configuration file
    # wget and curl do not create the target directory, so create it first
    mkdir -p /root/mjopen
    wget -O /root/mjopen/appsettings.Production.json https://raw.githubusercontent.com/trueai-org/midjourney-proxy/main/src/Midjourney.API/appsettings.json

    # Or download and rename the configuration file with curl (sample configuration)
    # Tip: version 3.x does not need a configuration file
    curl -o /root/mjopen/appsettings.Production.json https://raw.githubusercontent.com/trueai-org/midjourney-proxy/main/src/Midjourney.API/appsettings.json

    # 2. Stop and remove the old Docker container
    docker stop mjopen && docker rm mjopen

    # 3. Start the new Docker container
    # Tip: version 3.x does not need a configuration file
    docker run -m 1g --name mjopen -d --restart=always \
      -p 8086:8080 --user root \
      -v /root/mjopen/logs:/app/logs:rw \
      -v /root/mjopen/data:/app/data:rw \
      -v /root/mjopen/attachments:/app/wwwroot/attachments:rw \
      -v /root/mjopen/ephemeral-attachments:/app/wwwroot/ephemeral-attachments:rw \
      -v /root/mjopen/appsettings.Production.json:/app/appsettings.Production.json:ro \
      -e TZ=Asia/Shanghai \
      -v /etc/localtime:/etc/localtime:ro \
      -v /etc/timezone:/etc/timezone:ro \
      registry.cn-guangzhou.aliyuncs.com/trueai-org/midjourney-proxy

    # Startup example for production
    docker run --name mjopen -d --restart=always \
      -p 8086:8080 --user root \
      -v /root/mjopen/logs:/app/logs:rw \
      -v /root/mjopen/data:/app/data:rw \
      -v /root/mjopen/attachments:/app/wwwroot/attachments:rw \
      -v /root/mjopen/ephemeral-attachments:/app/wwwroot/ephemeral-attachments:rw \
      -v /root/mjopen/appsettings.Production.json:/app/appsettings.Production.json:ro \
      -e TZ=Asia/Shanghai \
      -v /etc/localtime:/etc/localtime:ro \
      -v /etc/timezone:/etc/timezone:ro \
      registry.cn-guangzhou.aliyuncs.com/trueai-org/midjourney-proxy

    # GitHub image
    docker pull ghcr.io/trueai-org/midjourney-proxy
    docker run --name mjopen -d --restart=always \
      -p 8086:8080 --user root \
      -v /root/mjopen/logs:/app/logs:rw \
      -v /root/mjopen/data:/app/data:rw \
      -v /root/mjopen/attachments:/app/wwwroot/attachments:rw \
      -v /root/mjopen/ephemeral-attachments:/app/wwwroot/ephemeral-attachments:rw \
      -v /root/mjopen/appsettings.Production.json:/app/appsettings.Production.json:ro \
      -e TZ=Asia/Shanghai \
      -v /etc/localtime:/etc/localtime:ro \
      -v /etc/timezone:/etc/timezone:ro \
      ghcr.io/trueai-org/midjourney-proxy

    # DockerHub image
    docker pull trueaiorg/midjourney-proxy
    docker run --name mjopen -d --restart=always \
      -p 8086:8080 --user root \
      -v /root/mjopen/logs:/app/logs:rw \
      -v /root/mjopen/data:/app/data:rw \
      -v /root/mjopen/attachments:/app/wwwroot/attachments:rw \
      -v /root/mjopen/ephemeral-attachments:/app/wwwroot/ephemeral-attachments:rw \
      -v /root/mjopen/appsettings.Production.json:/app/appsettings.Production.json:ro \
      -e TZ=Asia/Shanghai \
      -v /etc/localtime:/etc/localtime:ro \
      -v /etc/timezone:/etc/timezone:ro \
      trueaiorg/midjourney-proxy

Windows version

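a. Download the latest portable Windows build from https://github.com/trueai-org/midjourney-proxy/releases, for example: midjourney-proxy-win-x64.zip

b. Unzip it and run Midjourney.API.exe

c. Open http://localhost:8080

d. Deploy to IIS (optional): add a website in IIS, deploy the folder to it, set the application pool to `No Managed Code`, and start the site.

e. Use the built-in `Task Scheduler` (optional): create a basic task, select the `.exe` program, and choose `Do not start a new instance` so that only one task runs at a time.

Linux version

a. Download the latest portable Linux build from https://github.com/trueai-org/midjourney-proxy/releases, for example: midjourney-proxy-linux-x64.zip

b. Extract it into the current directory: tar -xzf midjourney-proxy-linux-x64-<VERSION>.tar.gz

c. Run run_app.sh in either of these ways:

- Option 1: sh run_app.sh
- Option 2: chmod +x run_app.sh && ./run_app.sh

macOS version

a. Download the latest portable macOS build from https://github.com/trueai-org/midjourney-proxy/releases, for example: midjourney-proxy-osx-x64.zip

b. Extract it into the current directory: tar -xzf midjourney-proxy-osx-x64-<VERSION>.tar.gz

c. Run run_app_osx.sh in either of these ways:

- Option 1: sh run_app_osx.sh
- Option 2: chmod +x run_app_osx.sh && ./run_app_osx.sh

Linux one-click install script (❤ thanks to @dbccccccc)

    # Option 1
    wget -N --no-check-certificate https://raw.githubusercontent.com/trueai-org/midjourney-proxy/main/scripts/linux_install.sh && chmod +x linux_install.sh && bash linux_install.sh

    # Option 2
    curl -o linux_install.sh https://raw.githubusercontent.com/trueai-org/midjourney-proxy/main/scripts/linux_install.sh && chmod +x linux_install.sh && bash linux_install.sh

- `appsettings.json`: default configuration
- `appsettings.Production.json`: production configuration
- `/app/data`: data directory that stores accounts, tasks, and other data
  - `/app/data/mj.db`: database file
- `/app/logs`: log directory
- `/app/wwwroot`: static files directory
  - `/app/wwwroot/attachments`: drawing files directory
  - `/app/wwwroot/ephemeral-attachments`: directory for images generated by describe

- Regular user: can only use the drawing API and cannot log in to the admin console.
- Administrator: can log in to the admin console and view tasks, configuration, and so on.
- When the site starts, if `AdminToken` has never been set, the default administrator token is `admin`

Version 3.x does not need this configuration; change settings through the GUI instead.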

    {
      "Demo": null, // Run the site in demo mode
      "UserToken": "", // User drawing token; grants access to the drawing API; optional
      "AdminToken": "", // Admin console token; grants access to the drawing API, administrator account features, and so on
      "mj": {
        "MongoDefaultConnectionString": null, // MongoDB connection string
        "MongoDefaultDatabase": null, // MongoDB database name
        "AccountChooseRule": "BestWaitIdle", // BestWaitIdle | Random | Weight | Polling = best idle | random | weighted | round-robin
        "Discord": { // Discord settings; may be null by default
          "GuildId": "125652671***", // Server ID
          "ChannelId": "12565267***", // Channel ID
          "PrivateChannelId": "1256495659***", // MJ direct-message channel ID, used to receive seed values
          "NijiBotChannelId": "1261608644***", // NIJI direct-message channel ID, used to receive seed values
          "UserToken": "MTI1NjQ5N***", // User token
          "BotToken": "MTI1NjUyODEy***", // Bot token
          "UserAgent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/112.0.0.0 Safari/537.36",
          "Enable": true, // Whether the account is enabled by default
          "CoreSize": 3, // Concurrency
          "QueueSize": 10, // Queue size
          "MaxQueueSize": 100, // Maximum queue size
          "TimeoutMinutes": 5, // Task timeout in minutes
          "Mode": null, // RELAX | FAST | TURBO: generation speed mode; adds the --relax, --fast, or --turbo parameter at the end
          "Weight": 1 // Weight
        },
        "NgDiscord": { // NG Discord settings; may be null by default
          "Server": "",
          "Cdn": "",
          "Wss": "",
          "ResumeWss": "",
          "UploadServer": "",
          "SaveToLocal": false, // Save images locally; when enabled, the local deployment's address is used, and a CDN address can be configured as well
          "CustomCdn": "" // If empty while local saving is enabled, defaults to the root path; setting your own domain is recommended
        },
        "Proxy": { // Proxy settings; may be null by default
          "Host": "",
          "Port": 10809
        },
        "Accounts": [], // Account pool
        "Openai": {
          "GptApiUrl": "https://goapi.gptnb.ai/v1/chat/completions", // your_gpt_api_url
          "GptApiKey": "", // your_gpt_api_key
          "Timeout": "00:00:30",
          "Model": "gpt-4o-mini",
          "MaxTokens": 2048,
          "Temperature": 0
        },
        "BaiduTranslate": { // Baidu Translate settings; may be null by default
          "Appid": "", // your_appid
          "AppSecret": "" // your_app_secret
        },
        "TranslateWay": "NULL", // NULL | GPT | BAIDU: translation setting; default: NULL
        "ApiSecret": "", // your_api_secret
        "NotifyHook": "", // your_notify_hook: callback setting
        "NotifyPoolSize": 10,
        "Smtp": {
          "Host": "smtp.mxhichina.com", // SMTP server
          "Port": 465, // SMTP port, usually 587 or 465 depending on your SMTP server
          "EnableSsl": true, // Set according to your SMTP server's requirements
          "FromName": "system", // Sender display name
          "FromEmail": "system@***.org", // Sender email address
          "FromPassword": "", // Your mailbox password or app-specific password
          "To": "" // Recipient
        },
        "CaptchaServer": "", // CF verification server address
        "CaptchaNotifyHook": "" // CF verification notification address (callback after verification passes; defaults to your current domain)
      },
      // IP and IP-range rate limiting; limits how often a single IP or IP range may call the API
      // A rate-limited request receives status code 429
      // A blacklisted request receives status code 403
      // Blacklist and whitelist entries accept single IPs and CIDR ranges, for example: 192.168.1.100, 192.168.1.0/24
      "IpRateLimiting": {
        "Enable": false,
        "Whitelist": [], // Permanent whitelist, e.g. "127.0.0.1", "::1/10", "::1"
        "Blacklist": [], // Permanent blacklist
        // 0.0.0.0/32: a single IP
        "IpRules": {
          // Applies to every endpoint under mj/submit
          "*/mj/submit/*": {
            "3": 1, // At most 1 request every 3 seconds
            "60": 6, // At most 6 requests every 60 seconds
            "600": 20, // At most 20 requests every 600 seconds
            "3600": 60, // At most 60 requests every 3600 seconds
            "86400": 120 // At most 120 requests per day
          }
        },
        // 0.0.0.0/24: an IP range
        "Ip24Rules": {
          // Applies to every endpoint under mj/submit
          "*/mj/submit/*": {
            "5": 10, // At most 10 requests every 5 seconds
            "60": 30, // At most 30 requests every 60 seconds
            "600": 100, // At most 100 requests every 600 seconds
            "3600": 300, // At most 300 requests every 3600 seconds
            "86400": 360 // At most 360 requests per day
          }
        },
        // 0.0.0.0/16: an IP range
        "Ip16Rules": {}
      },
      // IP blacklist rate limiting; an IP that triggers it is blocked automatically for a configurable time
      // Once an IP has been blacklisted, its requests receive status code 403
      // Blacklist and whitelist entries accept single IPs and CIDR ranges, for example: 192.168.1.100, 192.168.1.0/24
      "IpBlackRateLimiting": {
        "Enable": false,
        "Whitelist": [], // Permanent whitelist, e.g. "127.0.0.1", "::1/10", "::1"
        "Blacklist": [], // Permanent blacklist
        "BlockTime": 1440, // Block duration in minutes
        "IpRules": {
          "*/mj/*": {
            "1": 30,
            "60": 900
          }
        },
        "Ip24Rules": {
          "*/mj/*": {
            "1": 90,
            "60": 3000
          }
        }
      },
      "Serilog": {
        "MinimumLevel": {
          "Default": "Information",
          "Override": {
            "Default": "Warning",
            "System": "Warning",
            "Microsoft": "Warning"
          }
        },
        "WriteTo": [
          {
            "Name": "File",
            "Args": {
              "path": "logs/log.txt",
              "rollingInterval": "Day",
              "fileSizeLimitBytes": null,
              "rollOnFileSizeLimit": false,
              "retainedFileCountLimit": 31
            }
          },
          {
            "Name": "Console"
          }
        ]
      },
      "Logging": {
        "LogLevel": {
          "Default": "Information",
          "Microsoft.AspNetCore": "Warning"
        }
      },
      "AllowedHosts": "*",
      "urls": "http://*:8080" // Default port
    }

Aliyun OSS storage configuration example:

    {
      "enable": true,
      "bucketName": "mjopen",
      "region": null,
      "accessKeyId": "LTAIa***",
      "accessKeySecret": "QGqO7***",
      "endpoint": "oss-cn-hongkong-internal.aliyuncs.com",
      "customCdn": "https://mjcdn.googlec.cc",
      "imageStyle": "x-oss-process=style/webp",
      "thumbnailImageStyle": "x-oss-process=style/w200"
    }

The CloudFlare verifier can only be deployed on Windows (it also requires TLS 1.3, so the system must be Windows 11 or Windows Server 2022). The verifier depends on the Chrome browser, which is why it is deployed on Windows; a Linux deployment would depend on many extra libraries, so Linux is not supported for now.

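Note: a self-hosted verifier needs a 2captcha.com API key and cannot work without one. Price: 9 yuan per 1,000 solves. Website: https://2captcha.cn/p/cloudflare-turnstile

Tip: the first launch downloads the Chrome browser, which is slow; please be patient.

`appsettings.json` configuration reference

    {
      "Demo": null, // Run the site in demo mode
      "Captcha": {
        "Headless": true, // Whether Chrome runs in the background (headless)
        "TwoCaptchaKey": "" // 2captcha.com API key
      },
      "urls": "http://*:8081" // Default port
    }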
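The `IpRules` entries in the main service configuration map a window length in seconds to a maximum number of requests, for example `"3": 1` and `"60": 6`. The sketch below illustrates how a rule set of that shape could be enforced per IP. It is an illustration only: the README does not say which windowing algorithm the project uses, so the sliding window here is an assumption, and the class name `WindowLimiter` is hypothetical.

```python
from collections import defaultdict, deque


class WindowLimiter:
    """Sliding-window limiter for rules shaped like {"3": 1, "60": 6}."""

    def __init__(self, rules):
        # Keys are window lengths in seconds, values are max requests per window.
        self.rules = {int(window): limit for window, limit in rules.items()}
        self.longest = max(self.rules) if self.rules else 0
        # Accepted request timestamps per IP, oldest first.
        self.hits = defaultdict(deque)

    def allow(self, ip, now):
        """Record and allow the request, or return False (a server would answer 429)."""
        history = self.hits[ip]
        # Forget timestamps that can no longer affect any window.
        while history and now - history[0] >= self.longest:
            history.popleft()
        for window, limit in self.rules.items():
            recent = sum(1 for stamp in history if now - stamp < window)
            if recent >= limit:
                return False
        history.append(now)
        return True


if __name__ == "__main__":
    limiter = WindowLimiter({"3": 1, "60": 6})
    # 203.0.113.7 is a documentation-only address used as sample input.
    print([limiter.allow("203.0.113.7", t) for t in (0.0, 1.0, 3.0)])
```

Rejected requests are not recorded in this sketch, so a client that keeps retrying does not extend its own lockout; a stricter policy would record them as well.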

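This project connects to wss with a Discord bot Token, which lets it obtain error messages and full functionality and helps keep message delivery highly available.

1. Create an application
   https://discord.com/developers/applications

2. Set the application's permissions (make sure it has permission to read message content; see the screenshot)
   [Bot] settings -> enable everything

3. Add the application to the channel's server (see the screenshot)
   client_id is the APPLICATION ID shown on the application details page
   https://discord.com/oauth2/authorize?client_id=xxx&permissions=8&scope=bot

4. Copy or reset the Bot Token and put it in the configuration file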
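The authorization link in step 3 only differs by the application's `client_id`. As a small sketch, the link can be assembled with the standard library so the query string is always encoded correctly; the helper name `bot_invite_url` is hypothetical, and the default `permissions=8` simply mirrors the value in the URL above.

```python
from urllib.parse import urlencode


def bot_invite_url(client_id: str, permissions: int = 8) -> str:
    """Build the Discord OAuth2 bot authorization link for an application."""
    query = urlencode(
        {"client_id": client_id, "permissions": permissions, "scope": "bot"}
    )
    return f"https://discord.com/oauth2/authorize?{query}"


if __name__ == "__main__":
    # Replace the placeholder with the APPLICATION ID from the developer portal.
    print(bot_invite_url("YOUR_APPLICATION_ID"))
```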

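If your task volume may eventually exceed 100,000, deploying MongoDB with Docker is recommended.

Note: when switching to MongoDB, historical tasks can optionally be migrated automatically.

- Start the container; `xxx` is your password
- Open system settings -> enter the MongoDB connection string `mongodb://mongoadmin:xxx@ip`
- Fill in the MongoDB database name -> `mj` -> save
- Restart the service

Command to start the container:

    # Start the container
    docker run -d \
      --name mjopen-mongo \
      -p 27017:27017 \
      -v /root/mjopen/mongo/data:/data/db \
      --restart always \
      -e MONGO_INITDB_ROOT_USERNAME=mongoadmin \
      -e MONGO_INITDB_ROOT_PASSWORD=xxx \
      mongo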
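The connection string entered in system settings has the form `mongodb://mongoadmin:xxx@ip`. If the password contains reserved characters such as `@`, `:` or `/`, they need to be percent-encoded for the URI to parse correctly. A minimal sketch of building the string follows; the helper name `mongo_uri` is hypothetical, and the optional port argument is an addition for illustration (the README's example omits the port).

```python
from __future__ import annotations

from urllib.parse import quote_plus


def mongo_uri(user: str, password: str, host: str, port: int | None = None) -> str:
    """Build a MongoDB connection string with percent-encoded credentials."""
    auth = f"{quote_plus(user)}:{quote_plus(password)}"
    location = host if port is None else f"{host}:{port}"
    return f"mongodb://{auth}@{location}"


if __name__ == "__main__":
    # The password and host below are placeholders, not real values.
    print(mongo_uri("mongoadmin", "p@ss:word", "127.0.0.1", 27017))
```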

Creating the database is optional; it can also be created through BT.

Register on the official site and copy the Token: https://replicate.com/codeplugtech/face-swap

    {
      "token": "****",
      "enableFaceSwap": true,
      "faceSwapVersion": "278a81e7ebb22db98bcba54de985d22cc1abeead2754eb1f2af717247be69b34",
      "faceSwapCoreSize": 3,
      "faceSwapQueueSize": 10,
      "faceSwapTimeoutMinutes": 10,
      "enableVideoFaceSwap": true,
      "videoFaceSwapVersion": "104b4a39315349db50880757bc8c1c996c5309e3aa11286b0a3c84dab81fd440",
      "videoFaceSwapCoreSize": 3,
      "videoFaceSwapQueueSize": 10,
      "videoFaceSwapTimeoutMinutes": 30,
      "maxFileSize": 10485760,
      "webhook": null,
      "webhookEventsFilter": []
    }

- Task interval of 36~120 seconds, with a delay of at least 3.6 seconds before execution
- At most 200 images per day
- Daily working hours: 9:10~22:50 is recommended
- If you have multiple accounts, enable the vertical-domain feature so that each account produces only one category of work
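The pacing guidelines above (a 36~120 second gap between tasks and at most 200 images per day) can be pictured as a small scheduler that hands out a randomized delay until the daily cap is reached. This is only a sketch under those stated numbers: the class name `DailyPacer` is hypothetical, the uniform random distribution is an assumption, and the project's own scheduling logic is not described in this README.

```python
import random


class DailyPacer:
    """Hand out randomized delays between jobs until a daily cap is hit."""

    def __init__(self, daily_limit=200, min_gap=36.0, max_gap=120.0, rng=None):
        self.daily_limit = daily_limit
        self.min_gap = min_gap
        self.max_gap = max_gap
        self.rng = rng or random.Random()
        self.used = 0

    def next_delay(self):
        """Return seconds to wait before the next job, or None once the cap is reached."""
        if self.used >= self.daily_limit:
            return None
        self.used += 1
        return self.rng.uniform(self.min_gap, self.max_gap)

    def reset(self):
        """Call at the start of a new day to clear the counter."""
        self.used = 0


if __name__ == "__main__":
    pacer = DailyPacer()
    delay = pacer.next_delay()
    print(f"wait {delay:.1f}s before the next job")
```

Passing a seeded `random.Random` as `rng` makes the delays reproducible, which is convenient when testing.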

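- [ ] Support Tencent Cloud storage and similar services
- [ ] Analyze prompt terms with OpenAI and route them to domain-specific accounts for smarter assignment; analyze prompt terms with shorten and assign them to a domain
- [ ] Support the official website's drawing API
- [ ] Show a Chinese translation of the final prompt
- [ ] Per-account proxy support
- [ ] Support more databases: MySQL, Sqlite, SqlServer, PostgreSQL, Redis, and others
- [ ] Payment integration with WeChat Pay and Alipay, plus drawing pricing strategies
- [ ] Add an announcements feature
- [ ] Seed value handling for image-to-text
- [ ] Automatically read direct messages
- [ ] Multi-account grouping
- [ ] After a service restart, put tasks that had not started back into the execution queue
- [ ] Sub-channel automation: enter an invite link or a shared channel address and let the system join and convert the channel automatically, or achieve the same by transferring ownership
- [ ] Face swapping through Discord www.picsi.ai

- If you find this project helpful, please give it a Star ⭐
- You can also donate a temporarily idle drawing account for public-benefit use (sponsoring 1 relax-mode queue) to support the project's development 😀

Because some open-source authors have been questioned by the authorities, this project must not be used for illegal or criminal purposes.

- Be sure to comply with national laws; users bear full responsibility for any illegal or criminal use.
- This project follows the GPL license. Personal and commercial use is allowed, but only with the author's permission and with the copyright information retained.
- Comply with the laws and regulations of your country; do not use the project for illegal purposes.
- Do not use it for unlawful purposes.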

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for midjourney-proxy

Similar Open Source Tools

midjourney-proxy

Midjourney Proxy is an open-source project that acts as a proxy for the Midjourney Discord channel, allowing API-based AI drawing calls for charitable purposes. It provides drawing API for free use, ensuring full functionality, security, and minimal memory usage. The project supports various commands and actions related to Imagine, Blend, Describe, and more. It also offers real-time progress tracking, Chinese prompt translation, sensitive word pre-detection, user-token connection via wss for error information retrieval, and various account configuration options. Additionally, it includes features like image zooming, seed value retrieval, account-specific speed mode settings, multiple account configurations, and more. The project aims to support mainstream drawing clients and API calls, with features like task hierarchy, Remix mode, image saving, and CDN acceleration, among others.

glm-free-api

GLM AI Free 服务 provides high-speed streaming output, multi-turn dialogue support, intelligent agent dialogue support, AI drawing support, online search support, long document interpretation support, image parsing support. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository also includes six other free APIs for various services like Moonshot AI, StepChat, Qwen, Metaso, Spark, and Emohaa. The tool supports tasks such as chat completions, AI drawing, document interpretation, image parsing, and refresh token survival check.

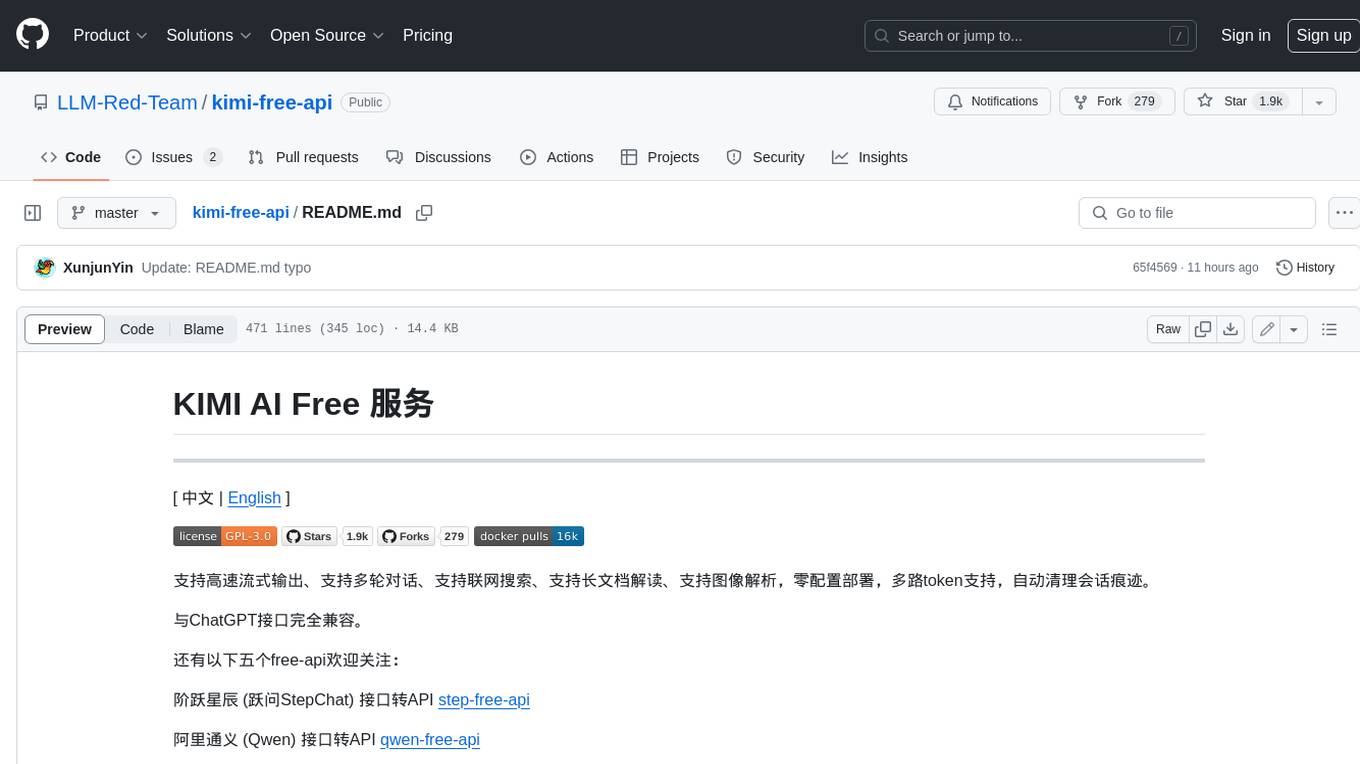

kimi-free-api

KIMI AI Free 服务 支持高速流式输出、支持多轮对话、支持联网搜索、支持长文档解读、支持图像解析,零配置部署,多路token支持,自动清理会话痕迹。 与ChatGPT接口完全兼容。 还有以下五个free-api欢迎关注: 阶跃星辰 (跃问StepChat) 接口转API step-free-api 阿里通义 (Qwen) 接口转API qwen-free-api ZhipuAI (智谱清言) 接口转API glm-free-api 秘塔AI (metaso) 接口转API metaso-free-api 聆心智能 (Emohaa) 接口转API emohaa-free-api

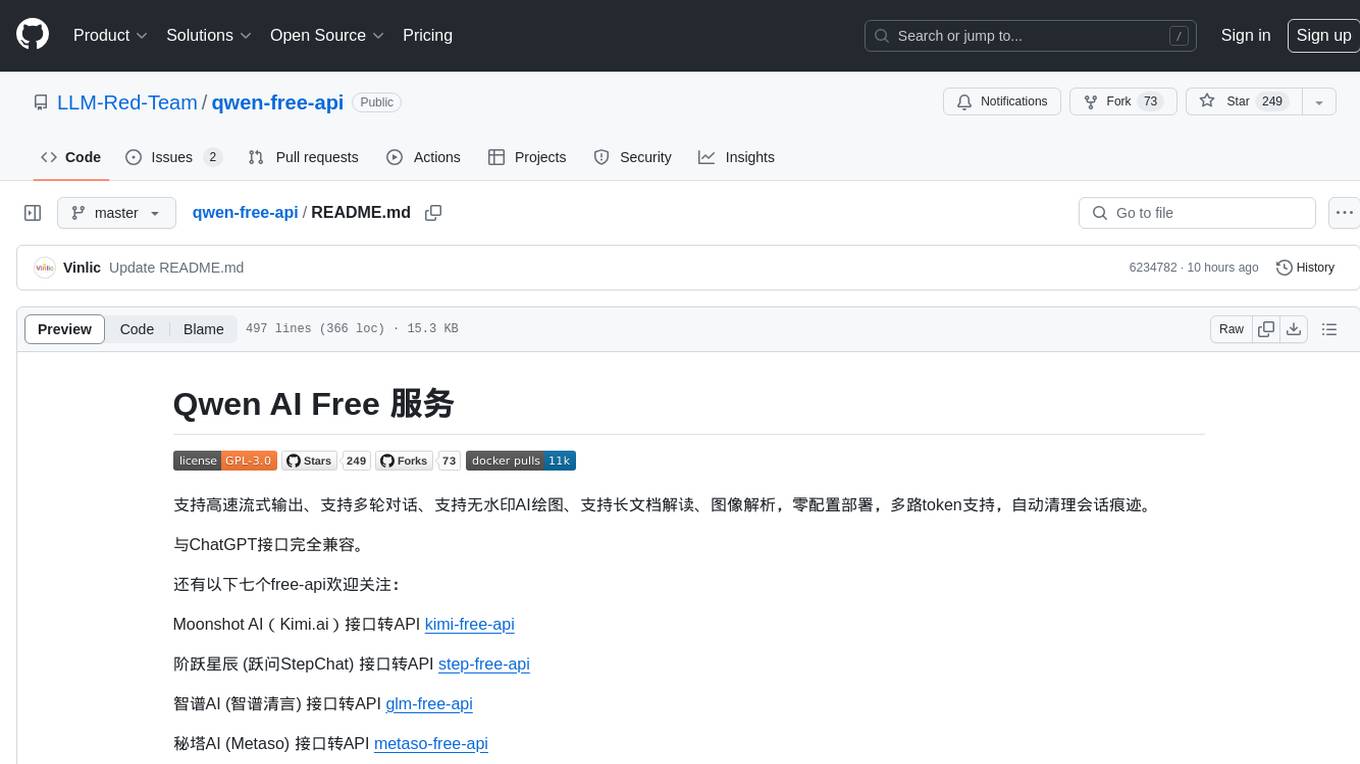

qwen-free-api

Qwen AI Free service supports high-speed streaming output, multi-turn dialogue, watermark-free AI drawing, long document interpretation, image parsing, zero-configuration deployment, multi-token support, automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository provides various free APIs for different AI services. Users can access the service through different deployment methods like Docker, Docker-compose, Render, Vercel, and native deployment. It offers interfaces for chat completions, AI drawing, document interpretation, image parsing, and token checking. Users need to provide 'login_tongyi_ticket' for authorization. The project emphasizes research, learning, and personal use only, discouraging commercial use to avoid service pressure on the official platform.

spark-free-api

Spark AI Free 服务 provides high-speed streaming output, multi-turn dialogue support, AI drawing support, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository includes multiple free-api projects for various AI services. Users can access the API for tasks such as chat completions, AI drawing, document interpretation, image analysis, and ssoSessionId live checking. The project also provides guidelines for deployment using Docker, Docker-compose, Render, Vercel, and native deployment methods. It recommends using custom clients for faster and simpler access to the free-api series projects.

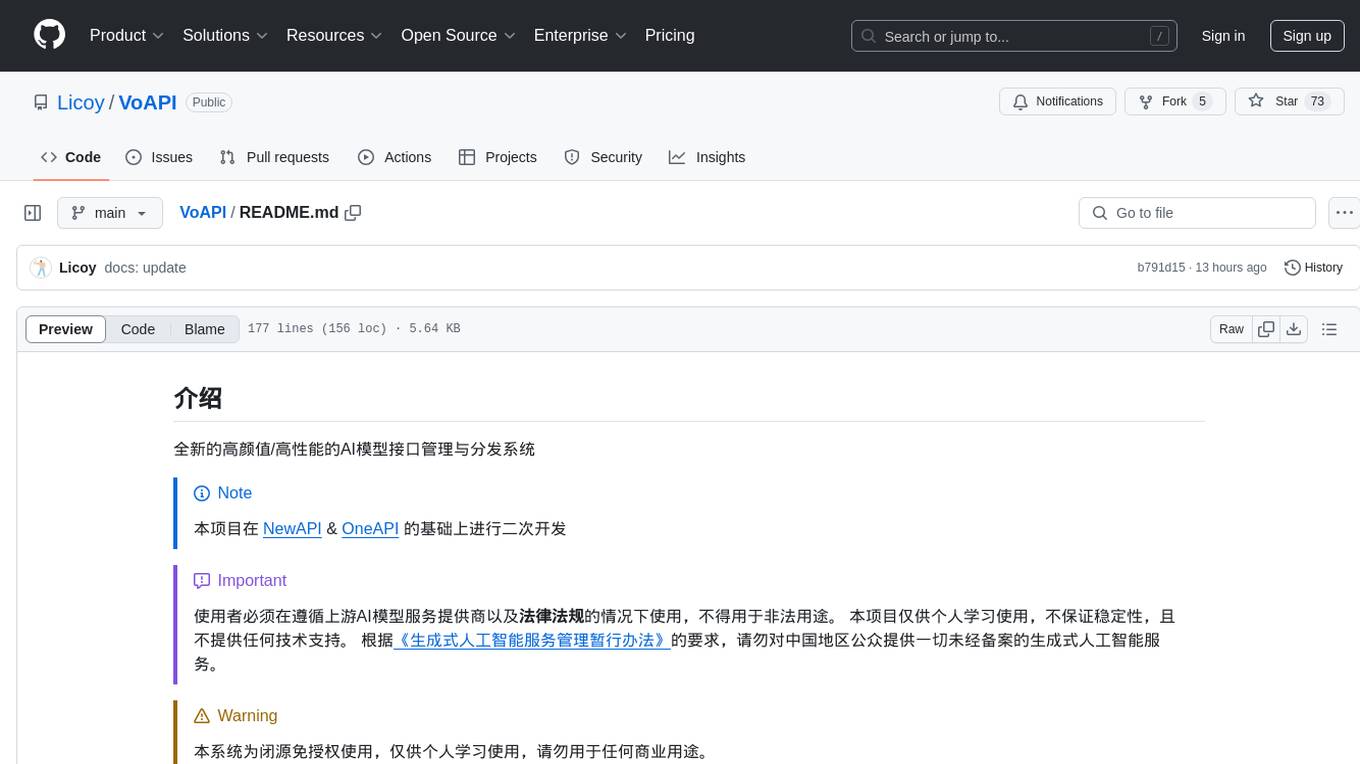

VoAPI

VoAPI is a new high-value/high-performance AI model interface management and distribution system. It is a closed-source tool for personal learning use only, not for commercial purposes. Users must comply with upstream AI model service providers and legal regulations. The system offers a visually appealing interface, independent development documentation page support, service monitoring page configuration support, and third-party login support. It also optimizes interface elements, user registration time support, data operation button positioning, and more.

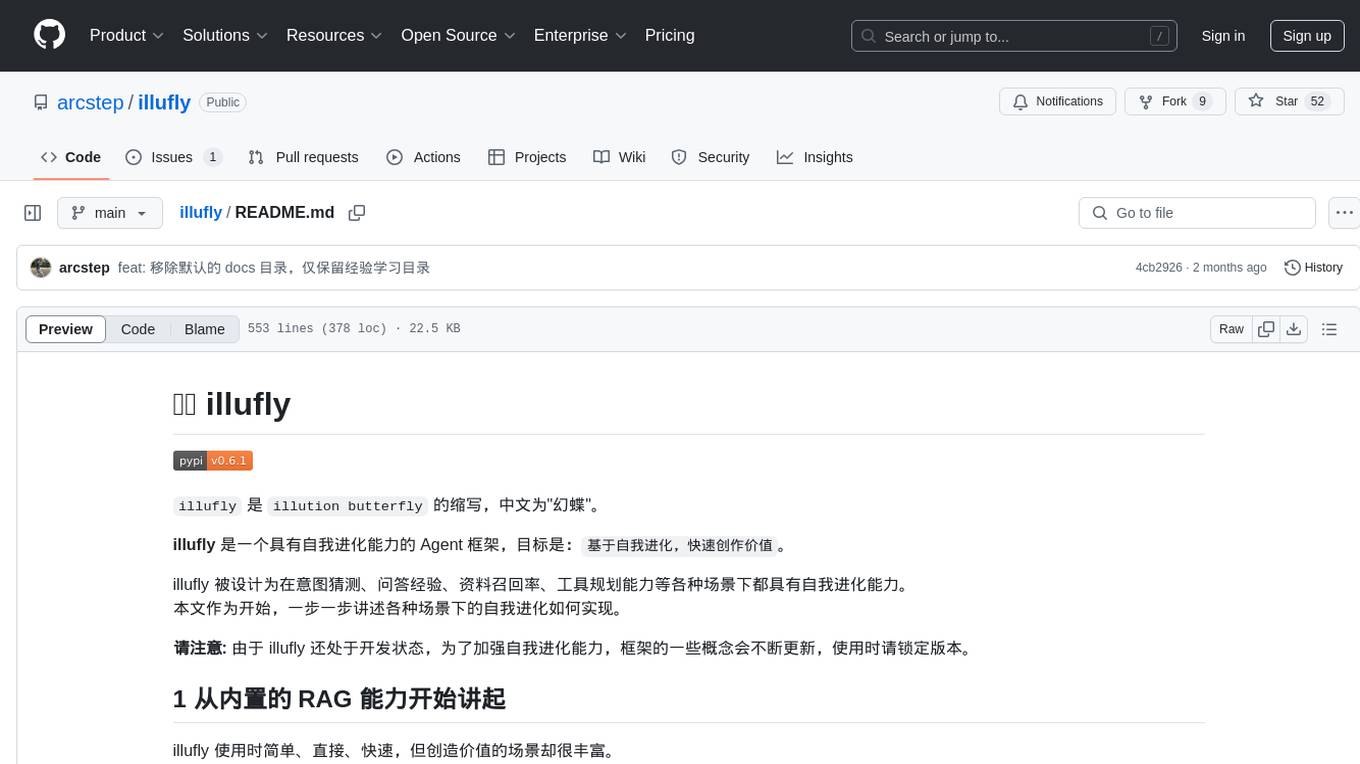

illufly

illufly is an Agent framework with self-evolution capabilities, aiming to quickly create value based on self-evolution. It is designed to have self-evolution capabilities in various scenarios such as intent guessing, Q&A experience, data recall rate, and tool planning ability. The framework supports continuous dialogue, built-in RAG support, and self-evolution during conversations. It also provides tools for managing experience data and supports multiple agents collaboration.

step-free-api

The StepChat Free service provides high-speed streaming output, multi-turn dialogue support, online search support, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. Additionally, it provides seven other free APIs for various services. The repository includes a disclaimer about using reverse APIs and encourages users to avoid commercial use to prevent service pressure on the official platform. It offers online testing links, showcases different demos, and provides deployment guides for Docker, Docker-compose, Render, Vercel, and native deployments. The repository also includes information on using multiple accounts, optimizing Nginx reverse proxy, and checking the liveliness of refresh tokens.

Senparc.AI

Senparc.AI is an AI extension package for the Senparc ecosystem, focusing on LLM (Large Language Models) interaction. It provides modules for standard interfaces and basic functionalities, as well as interfaces using SemanticKernel for plug-and-play capabilities. The package also includes a library for supporting the 'PromptRange' ecosystem, compatible with various systems and frameworks. Users can configure different AI platforms and models, define AI interface parameters, and run AI functions easily. The package offers examples and commands for dialogue, embedding, and DallE drawing operations.

Streamer-Sales

Streamer-Sales is a large model for live streamers that can explain products based on their characteristics and inspire users to make purchases. It is designed to enhance sales efficiency and user experience, whether for online live sales or offline store promotions. The model can deeply understand product features and create tailored explanations in vivid and precise language, sparking user's desire to purchase. It aims to revolutionize the shopping experience by providing detailed and unique product descriptions to engage users effectively.

VoAPI

VoAPI is a new high-value/high-performance AI model interface management and distribution system. It is a closed-source tool for personal learning use only, not for commercial purposes. Users must comply with upstream AI model service providers and legal regulations. The system offers a visually appealing interface with features such as independent development documentation page support, service monitoring page configuration support, and third-party login support. Users can manage user registration time, optimize interface elements, and support features like online recharge, model pricing display, and sensitive word filtering. VoAPI also provides support for various AI models and platforms, with the ability to configure homepage templates, model information, and manufacturer information.

YesImBot

YesImBot, also known as Athena, is a Koishi plugin designed to allow large AI models to participate in group chat discussions. It offers easy customization of the bot's name, personality, emotions, and other messages. The plugin supports load balancing multiple API interfaces for large models, provides immersive context awareness, blocks potentially harmful messages, and automatically fetches high-quality prompts. Users can adjust various settings for the bot and customize system prompt words. The ultimate goal is to seamlessly integrate the bot into group chats without detection, with ongoing improvements and features like message recognition, emoji sending, multimodal image support, and more.

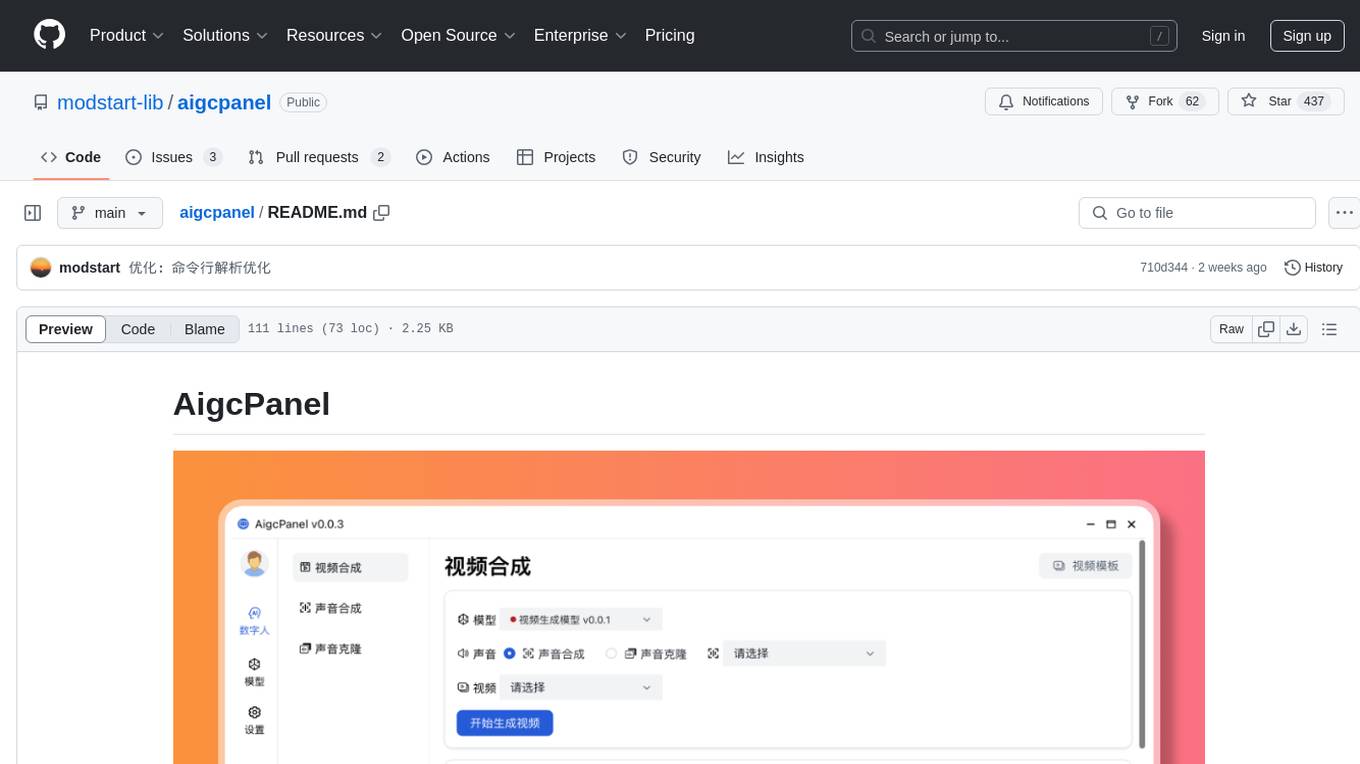

aigcpanel

AigcPanel is a simple and easy-to-use all-in-one AI digital human system that even beginners can use. It supports video synthesis, voice synthesis, voice cloning, simplifies local model management, and allows one-click import and use of AI models. It prohibits the use of this product for illegal activities and users must comply with the laws and regulations of the People's Republic of China.

Gensokyo-llm

Gensokyo-llm is a tool designed for Gensokyo and Onebotv11, providing a one-click solution for large models. It supports various Onebotv11 standard frameworks, HTTP-API, and reverse WS. The tool is lightweight, with built-in SQLite for context maintenance and proxy support. It allows easy integration with the Gensokyo framework by configuring reverse HTTP and forward HTTP addresses. Users can set system settings, role cards, and context length. Additionally, it offers an openai original flavor API with automatic context. The tool can be used as an API or integrated with QQ channel robots. It supports converting GPT's SSE type and ensures memory safety in concurrent SSE environments. The tool also supports multiple users simultaneously transmitting SSE bidirectionally.

goodsKill

The 'goodsKill' project aims to build a complete project framework integrating good technologies and development techniques, mainly focusing on backend technologies. It provides a simulated flash sale project with unified flash sale simulation request interface. The project uses SpringMVC + Mybatis for the overall technology stack, Dubbo3.x for service intercommunication, Nacos for service registration and discovery, and Spring State Machine for data state transitions. It also integrates Spring AI service for simulating flash sale actions.

For similar tasks

chatgpt-plus

ChatGPT-PLUS is an open-source AI assistant solution based on AI large language model API, with a built-in operational management backend for easy deployment. It integrates multiple large language models from platforms like OpenAI, Azure, ChatGLM, Xunfei Xinghuo, and Wenxin Yanyan. Additionally, it includes MidJourney and Stable Diffusion AI drawing features. The system offers a complete open-source solution with ready-to-use frontend and backend applications, providing a seamless typing experience via Websocket. It comes with various pre-trained role applications such as Xiaohongshu writer, English translation master, Socrates, Confucius, Steve Jobs, and weekly report assistant to meet various chat and application needs. Users can enjoy features like Suno Wensheng music, integration with MidJourney/Stable Diffusion AI drawing, personal WeChat QR code for payment, built-in Alipay and WeChat payment functions, support for various membership packages and point card purchases, and plugin API integration for developing powerful plugins using large language model functions.

ruoyi-ai

ruoyi-ai is a platform built on top of ruoyi-plus to implement AI chat and drawing functionalities on the backend. The project is completely open source and free. The backend management interface uses elementUI, while the server side is built using Java 17 and SpringBoot 3.X. It supports various AI models such as ChatGPT4, Dall-E-3, ChatGPT-4-All, voice cloning based on GPT-SoVITS, GPTS, and MidJourney. Additionally, it supports WeChat mini programs, personal QR code real-time payments, monitoring and AI auto-reply in live streaming rooms like Douyu and Bilibili, and personal WeChat integration with ChatGPT. The platform also includes features like private knowledge base management and provides various demo interfaces for different platforms such as mobile, web, and PC.

midjourney-proxy

Midjourney Proxy is an open-source project that acts as a proxy for the Midjourney Discord channel, allowing API-based AI drawing calls for charitable purposes. It provides drawing API for free use, ensuring full functionality, security, and minimal memory usage. The project supports various commands and actions related to Imagine, Blend, Describe, and more. It also offers real-time progress tracking, Chinese prompt translation, sensitive word pre-detection, user-token connection via wss for error information retrieval, and various account configuration options. Additionally, it includes features like image zooming, seed value retrieval, account-specific speed mode settings, multiple account configurations, and more. The project aims to support mainstream drawing clients and API calls, with features like task hierarchy, Remix mode, image saving, and CDN acceleration, among others.

atidraw

Atidraw is a web application that allows users to create, enhance, and share drawings using Cloudflare R2 and Cloudflare AI. It features intuitive drawing with signature_pad, AI-powered enhancements such as alt text generation and image generation with Stable Diffusion, global storage on Cloudflare R2, flexible authentication options, and high-performance server-side rendering on Cloudflare Pages. Users can deploy Atidraw with zero configuration on their Cloudflare account using NuxtHub.

galxe-aio

Galxe AIO is a versatile tool designed to automate various tasks on social media platforms like Twitter, email, and Discord. It supports tasks such as following, retweeting, liking, and quoting on Twitter, as well as solving quizzes, submitting surveys, and more. Users can link their Twitter accounts, email accounts (IMAP or mail3.me), and Discord accounts to the tool to streamline their activities. Additionally, the tool offers features like claiming rewards, quiz solving, submitting surveys, and managing referral links and account statistics. It also supports different types of rewards like points, mystery boxes, gas-less OATs, gas OATs and NFTs, and participation in raffles. The tool provides settings for managing EVM wallets, proxies, twitters, emails, and discords, along with custom configurations in the `config.toml` file. Users can run the tool using Python 3.11 and install dependencies using `pip` and `playwright`. The tool generates results and logs in specific folders and allows users to donate using TRC-20 or ERC-20 tokens.

ChopperBot

A multifunctional, intelligent, personalized, scalable, easy to build, and fully automated multi platform intelligent live video editing and publishing robot. ChopperBot is a comprehensive AI tool that automatically analyzes and slices the most interesting clips from popular live streaming platforms, generates and publishes content, and manages accounts. It supports plugin DIY development and hot swapping functionality, making it easy to customize and expand. With ChopperBot, users can quickly build their own live video editing platform without the need to install any software, thanks to its visual management interface.

Tiktok_Automation_Bot

TikTok Automation Bot is an Appium-based tool for automating TikTok account creation and video posting on real devices. It offers functionalities such as automated account creation and video posting, along with integrations like Crane tweak, SMSActivate service, and IPQualityScore service. The tool also provides device and automation management system, anti-bot system for human behavior modeling, and IP rotation system for different IP addresses. It is designed to simplify the process of managing TikTok accounts and posting videos efficiently.

chatgpt-mirai-qq-bot

Kirara AI is a chatbot that supports mainstream language models and chat platforms. It features various functionalities such as image sending, keyword-triggered replies, multi-account support, content moderation, personality settings, and support for platforms like QQ, Telegram, Discord, and WeChat. It also offers HTTP server capabilities, plugin support, conditional triggers, admin commands, drawing models, voice replies, multi-turn conversations, cross-platform message sending, and custom workflows. The tool can be accessed via HTTP API for integration with other platforms.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our overview of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped, resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base Python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobufs and Python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.
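
Because the API is described as closely matching OpenAI's, a client request would presumably look like an ordinary OpenAI-style chat completion call pointed at a different host. The sketch below only builds such a request with the Python standard library and does not send it. The base URL, model name, and API key are placeholders invented for illustration, and the `/chat/completions` path is assumed from the OpenAI convention rather than taken from LeapfrogAI's documentation.

```python
import json
from urllib import request

# Hypothetical address of a self-hosted deployment (assumption, not a real endpoint).
BASE_URL = "https://leapfrogai.example.internal/openai/v1"


def build_chat_request(prompt, model="example-model", api_key="example-key"):
    """Build (but do not send) an OpenAI-style chat completion request."""
    # Payload shape follows the OpenAI chat completions convention.
    payload = {
        "model": model,  # placeholder model name
        "messages": [{"role": "user", "content": prompt}],
    }
    return request.Request(
        url=f"{BASE_URL}/chat/completions",  # path assumed from OpenAI's API
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


req = build_chat_request("Summarize this report.")
```

Passing `req` to `urllib.request.urlopen` would send it; in practice, an existing OpenAI client library configured with a custom base URL would likely serve the same purpose.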

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, an established benchmark, evaluation and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm Python package to help you assess the trustworthiness of your LLM more quickly. For more details about TrustLLM, please refer to the project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual anchors (Vtubers), in a version for NVIDIA GPUs ("N card"). It supports knowledge-base chat through fastgpt as part of a complete LLM stack: [fastgpt] + [one-api] + [Xinference]. For live streaming, it connects to bilibili to reply to bullet comments and greet viewers entering the room. Speech synthesis is available through Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS, and expressions are controlled through Vtuber Studio. It can draw with stable-diffusion-webui and output the result to an OBS live room, and it screens drawn images for NSFW content with public-NSFW-y-distinguish. Search and image search use duckduckgo (requires a proxy or VPN), while Baidu image search works without one. Other features include an AI reply chat box [html plug-in], AI singing via Auto-Convert-Music, a playlist [html plug-in], dancing, expression video playback, head-touching and gift-smashing actions, automatic dancing when singing starts, automatic looping sway motions while chatting and singing, multi-scene switching, background music switching, and automatic day and night scene switching. It also supports open-ended singing and drawing requests, letting the AI automatically judge the content.