kimi-free-api

🚀 KIMI AI 长文本大模型逆向API白嫖测试【特长:长文本解读整理】,支持高速流式输出、智能体对话、联网搜索、长文档解读、图像OCR、多轮对话,零配置部署,多路token支持,自动清理会话痕迹。

Stars: 3373

KIMI AI Free 服务 支持高速流式输出、支持多轮对话、支持联网搜索、支持长文档解读、支持图像解析,零配置部署,多路token支持,自动清理会话痕迹。 与ChatGPT接口完全兼容。 还有以下五个free-api欢迎关注: 阶跃星辰 (跃问StepChat) 接口转API step-free-api 阿里通义 (Qwen) 接口转API qwen-free-api ZhipuAI (智谱清言) 接口转API glm-free-api 秘塔AI (metaso) 接口转API metaso-free-api 聆心智能 (Emohaa) 接口转API emohaa-free-api

README:

[ 中文 | English ]

支持高速流式输出、支持多轮对话、支持联网搜索、支持智能体对话、支持长文档解读、支持图像OCR,零配置部署,多路token支持,自动清理会话痕迹。

与ChatGPT接口完全兼容。

还有以下八个free-api欢迎关注:

阶跃星辰 (跃问StepChat) 接口转API step-free-api

阿里通义 (Qwen) 接口转API qwen-free-api

智谱AI (智谱清言) 接口转API glm-free-api

秘塔AI (Metaso) 接口转API metaso-free-api

讯飞星火(Spark)接口转API spark-free-api

MiniMax(海螺AI)接口转API hailuo-free-api

深度求索(DeepSeek)接口转API deepseek-free-api

聆心智能 (Emohaa) 接口转API emohaa-free-api

逆向API是不稳定的,建议前往MoonshotAI官方 https://platform.moonshot.cn/ 付费使用API,避免封禁的风险。

本组织和个人不接受任何资金捐助和交易,此项目是纯粹研究交流学习性质!

仅限自用,禁止对外提供服务或商用,避免对官方造成服务压力,否则风险自担!

仅限自用,禁止对外提供服务或商用,避免对官方造成服务压力,否则风险自担!

仅限自用,禁止对外提供服务或商用,避免对官方造成服务压力,否则风险自担!

此链接仅临时测试功能,不可长期使用,长期使用请自行部署。

https://udify.app/chat/Po0F6BMJ15q5vu2P

此处使用 翻译通 智能体。

从 kimi.moonshot.cn 获取refresh_token

进入kimi随便发起一个对话,然后F12打开开发者工具,从Application > Local Storage中找到refresh_token的值,这将作为Authorization的Bearer Token值:Authorization: Bearer TOKEN

如果你看到的refresh_token是一个数组,请使用.拼接起来再使用。

目前kimi限制普通账号每3小时内只能进行30轮长文本的问答(短文本不限),你可以通过提供多个账号的refresh_token并使用,拼接提供:

Authorization: Bearer TOKEN1,TOKEN2,TOKEN3

每次请求服务会从中挑选一个。

请准备一台具有公网IP的服务器并将8000端口开放。

拉取镜像并启动服务

docker run -it -d --init --name kimi-free-api -p 8000:8000 -e TZ=Asia/Shanghai vinlic/kimi-free-api:latest查看服务实时日志

docker logs -f kimi-free-api重启服务

docker restart kimi-free-api停止服务

docker stop kimi-free-apiversion: '3'

services:

kimi-free-api:

container_name: kimi-free-api

image: vinlic/kimi-free-api:latest

restart: always

ports:

- "8000:8000"

environment:

- TZ=Asia/Shanghai注意:部分部署区域可能无法连接kimi,如容器日志出现请求超时或无法连接(新加坡实测不可用)请切换其他区域部署! 注意:免费账户的容器实例将在一段时间不活动时自动停止运行,这会导致下次请求时遇到50秒或更长的延迟,建议查看Render容器保活

-

fork本项目到你的github账号下。

-

访问 Render 并登录你的github账号。

-

构建你的 Web Service(New+ -> Build and deploy from a Git repository -> Connect你fork的项目 -> 选择部署区域 -> 选择实例类型为Free -> Create Web Service)。

-

等待构建完成后,复制分配的域名并拼接URL访问即可。

注意:Vercel免费账户的请求响应超时时间为10秒,但接口响应通常较久,可能会遇到Vercel返回的504超时错误!

请先确保安装了Node.js环境。

npm i -g vercel --registry http://registry.npmmirror.com

vercel login

git clone https://github.com/LLM-Red-Team/kimi-free-api

cd kimi-free-api

vercel --prod注意:免费账户的容器实例可能无法稳定运行

请准备一台具有公网IP的服务器并将8000端口开放。

请先安装好Node.js环境并且配置好环境变量,确认node命令可用。

安装依赖

npm i安装PM2进行进程守护

npm i -g pm2编译构建,看到dist目录就是构建完成

npm run build启动服务

pm2 start dist/index.js --name "kimi-free-api"查看服务实时日志

pm2 logs kimi-free-api重启服务

pm2 reload kimi-free-api停止服务

pm2 stop kimi-free-api使用以下二次开发客户端接入free-api系列项目更快更简单,支持文档/图像上传!

由 Clivia 二次开发的LobeChat https://github.com/Yanyutin753/lobe-chat

由 时光@ 二次开发的ChatGPT Web https://github.com/SuYxh/chatgpt-web-sea

目前支持与openai兼容的 /v1/chat/completions 接口,可自行使用与openai或其他兼容的客户端接入接口,或者使用 dify 等线上服务接入使用。

对话补全接口,与openai的 chat-completions-api 兼容。

POST /v1/chat/completions

header 需要设置 Authorization 头部:

Authorization: Bearer [refresh_token]

请求数据:

{

// model随意填写,如果不希望输出检索过程模型名称请包含silent_search

// 如果使用kimi+智能体,model请填写智能体ID,就是浏览器地址栏上尾部的一串英文+数字20个字符的ID

"model": "kimi",

// 目前多轮对话基于消息合并实现,某些场景可能导致能力下降且受单轮最大Token数限制

// 如果您想获得原生的多轮对话体验,可以传入首轮消息获得的id,来接续上下文,注意如果使用这个,首轮必须传none,否则第二轮会空响应!

// "conversation_id": "cnndivilnl96vah411dg",

"messages": [

{

"role": "user",

"content": "测试"

}

],

// 是否开启联网搜索,默认false

"use_search": true,

// 如果使用SSE流请设置为true,默认false

"stream": false

}响应数据:

{

// 如果想获得原生多轮对话体验,此id,你可以传入到下一轮对话的conversation_id来接续上下文

"id": "cnndivilnl96vah411dg",

"model": "kimi",

"object": "chat.completion",

"choices": [

{

"index": 0,

"message": {

"role": "assistant",

"content": "你好!我是Kimi,由月之暗面科技有限公司开发的人工智能助手。我擅长中英文对话,可以帮助你获取信息、解答疑问,还能阅读和理解你提供的文件和网页内容。如果你有任何问题或需要帮助,随时告诉我!"

},

"finish_reason": "stop"

}

],

"usage": {

"prompt_tokens": 1,

"completion_tokens": 1,

"total_tokens": 2

},

"created": 1710152062

}提供一个可访问的文件URL或者BASE64_URL进行解析。

POST /v1/chat/completions

header 需要设置 Authorization 头部:

Authorization: Bearer [refresh_token]

请求数据:

{

// 模型名称随意填写,如果不希望输出检索过程模型名称请包含silent_search

"model": "kimi",

"messages": [

{

"role": "user",

"content": [

{

"type": "file",

"file_url": {

"url": "https://mj101-1317487292.cos.ap-shanghai.myqcloud.com/ai/test.pdf"

}

},

{

"type": "text",

"text": "文档里说了什么?"

}

]

}

],

// 建议关闭联网搜索,防止干扰解读结果

"use_search": false

}响应数据:

{

"id": "cnmuo7mcp7f9hjcmihn0",

"model": "kimi",

"object": "chat.completion",

"choices": [

{

"index": 0,

"message": {

"role": "assistant",

"content": "文档中包含了几个古代魔法咒语的例子,这些咒语来自古希腊和罗马时期的魔法文本,被称为PGM(Papyri Graecae Magicae)。以下是文档中提到的几个咒语的内容:\n\n1. 第一个咒语(PMG 4.1390 – 1495)描述了一个仪式,要求留下一些你吃剩的面包,将其分成七块小片,然后去到英雄、角斗士和那些死于非命的人被杀的地方。对面包片念咒并扔出去,然后从仪式地点捡起一些被污染的泥土扔进你心仪的女人的家中,之后去睡觉。咒语的内容是向命运女神(Moirai)、罗马的命运女神(Fates)和自然力量(Daemons)祈求,希望他们帮助实现愿望。\n\n2. 第二个咒语(PMG 4.1342 – 57)是一个召唤咒语,通过念出一系列神秘的名字和词语来召唤一个名为Daemon的存在,以使一个名为Tereous的人(由Apia所生)受到精神和情感上的折磨,直到她来到施法者Didymos(由Taipiam所生)的身边。\n\n3. 第三个咒语(PGM 4.1265 – 74)提到了一个名为NEPHERIĒRI的神秘名字,这个名字与爱神阿佛洛狄忒(Aphrodite)有关。为了赢得一个美丽女人的心,需要保持三天的纯洁,献上乳香,并在献祭时念出这个名字。然后,在接近那位女士时,心中默念这个名字七次,连续七天这样做,以期成功。\n\n4. 第四个咒语(PGM 4.1496 – 1)描述了在燃烧没药(myrrh)时念诵的咒语。这个咒语是向没药祈祷,希望它能够像“肉食者”和“心灵点燃者”一样,吸引一个名为[名字]的女人(她的母亲名为[名字]),让她无法安坐、饮食、注视或亲吻其他人,而是让她的心中只有施法者,直到她来到施法者身边。\n\n这些咒语反映了古代人们对魔法和超自然力量的信仰,以及他们试图通过这些咒语来影响他人情感和行为的方式。"

},

"finish_reason": "stop"

}

],

"usage": {

"prompt_tokens": 1,

"completion_tokens": 1,

"total_tokens": 2

},

"created": 100920

}提供一个可访问的图像URL或者BASE64_URL进行解析。

此格式兼容 gpt-4-vision-preview API格式,您也可以用这个格式传送文档进行解析。

POST /v1/chat/completions

header 需要设置 Authorization 头部:

Authorization: Bearer [refresh_token]

请求数据:

{

// 模型名称随意填写,如果不希望输出检索过程模型名称请包含silent_search

"model": "kimi",

"messages": [

{

"role": "user",

"content": [

{

"type": "image_url",

"image_url": {

"url": "https://www.moonshot.cn/assets/logo/normal-dark.png"

}

},

{

"type": "text",

"text": "图像描述了什么?"

}

]

}

],

// 建议关闭联网搜索,防止干扰解读结果

"use_search": false

}响应数据:

{

"id": "cnn6l8ilnl92l36tu8ag",

"model": "kimi",

"object": "chat.completion",

"choices": [

{

"index": 0,

"message": {

"role": "assistant",

"content": "图像中展示了“Moonshot AI”的字样,这可能是月之暗面科技有限公司(Moonshot AI)的标志或者品牌标识。通常这样的图像用于代表公司或产品,传达品牌信息。由于图像是PNG格式,它可能是一个透明背景的logo,用于网站、应用程序或其他视觉材料中。"

},

"finish_reason": "stop"

}

],

"usage": {

"prompt_tokens": 1,

"completion_tokens": 1,

"total_tokens": 2

},

"created": 1710123627

}检测refresh_token是否存活,如果存活live为true,否则为false,请不要频繁(小于10分钟)调用此接口。

POST /token/check

请求数据:

{

"token": "eyJhbGciOiJIUzUxMiIsInR5cCI6IkpXVCJ9..."

}响应数据:

{

"live": true

}如果您正在使用Nginx反向代理kimi-free-api,请添加以下配置项优化流的输出效果,优化体验感。

# 关闭代理缓冲。当设置为off时,Nginx会立即将客户端请求发送到后端服务器,并立即将从后端服务器接收到的响应发送回客户端。

proxy_buffering off;

# 启用分块传输编码。分块传输编码允许服务器为动态生成的内容分块发送数据,而不需要预先知道内容的大小。

chunked_transfer_encoding on;

# 开启TCP_NOPUSH,这告诉Nginx在数据包发送到客户端之前,尽可能地发送数据。这通常在sendfile使用时配合使用,可以提高网络效率。

tcp_nopush on;

# 开启TCP_NODELAY,这告诉Nginx不延迟发送数据,立即发送小数据包。在某些情况下,这可以减少网络的延迟。

tcp_nodelay on;

# 设置保持连接的超时时间,这里设置为120秒。如果在这段时间内,客户端和服务器之间没有进一步的通信,连接将被关闭。

keepalive_timeout 120;由于推理侧不在kimi-free-api,因此token不可统计,将以固定数字返回!!!!!

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for kimi-free-api

Similar Open Source Tools

kimi-free-api

KIMI AI Free 服务 支持高速流式输出、支持多轮对话、支持联网搜索、支持长文档解读、支持图像解析,零配置部署,多路token支持,自动清理会话痕迹。 与ChatGPT接口完全兼容。 还有以下五个free-api欢迎关注: 阶跃星辰 (跃问StepChat) 接口转API step-free-api 阿里通义 (Qwen) 接口转API qwen-free-api ZhipuAI (智谱清言) 接口转API glm-free-api 秘塔AI (metaso) 接口转API metaso-free-api 聆心智能 (Emohaa) 接口转API emohaa-free-api

glm-free-api

GLM AI Free 服务 provides high-speed streaming output, multi-turn dialogue support, intelligent agent dialogue support, AI drawing support, online search support, long document interpretation support, image parsing support. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository also includes six other free APIs for various services like Moonshot AI, StepChat, Qwen, Metaso, Spark, and Emohaa. The tool supports tasks such as chat completions, AI drawing, document interpretation, image parsing, and refresh token survival check.

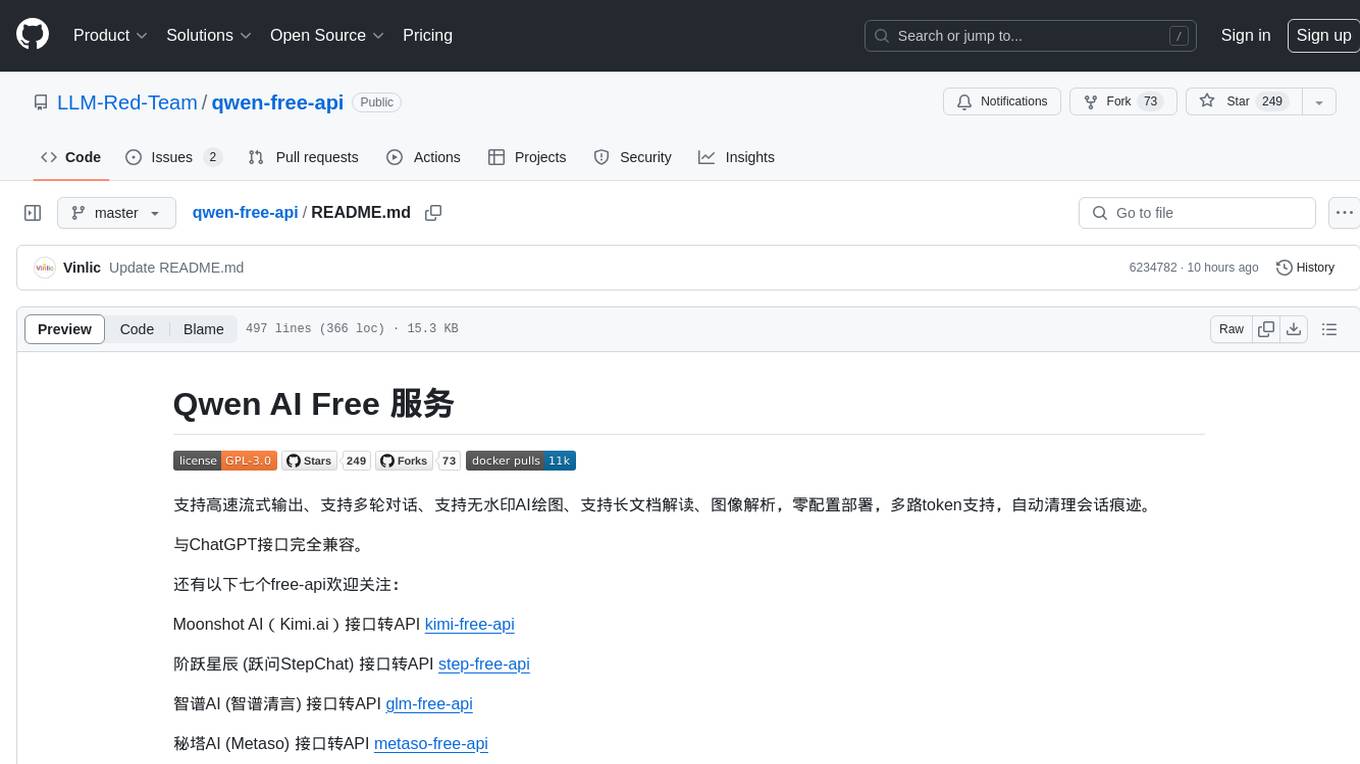

qwen-free-api

Qwen AI Free service supports high-speed streaming output, multi-turn dialogue, watermark-free AI drawing, long document interpretation, image parsing, zero-configuration deployment, multi-token support, automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository provides various free APIs for different AI services. Users can access the service through different deployment methods like Docker, Docker-compose, Render, Vercel, and native deployment. It offers interfaces for chat completions, AI drawing, document interpretation, image parsing, and token checking. Users need to provide 'login_tongyi_ticket' for authorization. The project emphasizes research, learning, and personal use only, discouraging commercial use to avoid service pressure on the official platform.

spark-free-api

Spark AI Free 服务 provides high-speed streaming output, multi-turn dialogue support, AI drawing support, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository includes multiple free-api projects for various AI services. Users can access the API for tasks such as chat completions, AI drawing, document interpretation, image analysis, and ssoSessionId live checking. The project also provides guidelines for deployment using Docker, Docker-compose, Render, Vercel, and native deployment methods. It recommends using custom clients for faster and simpler access to the free-api series projects.

step-free-api

The StepChat Free service provides high-speed streaming output, multi-turn dialogue support, online search support, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. Additionally, it provides seven other free APIs for various services. The repository includes a disclaimer about using reverse APIs and encourages users to avoid commercial use to prevent service pressure on the official platform. It offers online testing links, showcases different demos, and provides deployment guides for Docker, Docker-compose, Render, Vercel, and native deployments. The repository also includes information on using multiple accounts, optimizing Nginx reverse proxy, and checking the liveliness of refresh tokens.

newapi-ai-check-in

The newapi.ai-check-in repository is designed for automatically signing in with multiple accounts on public welfare websites. It supports various features such as single/multiple account automatic check-ins, multiple robot notifications, Linux.do and GitHub login authentication, and Cloudflare bypass. Users can fork the repository, set up GitHub environment secrets, configure multiple account formats, enable GitHub Actions, and test the check-in process manually. The script runs every 8 hours and users can trigger manual check-ins at any time. Notifications can be enabled through email, DingTalk, Feishu, WeChat Work, PushPlus, ServerChan, and Telegram bots. To prevent automatic disabling of Actions due to inactivity, users can set up a trigger PAT in the GitHub settings. The repository also provides troubleshooting tips for failed check-ins and instructions for setting up a local development environment.

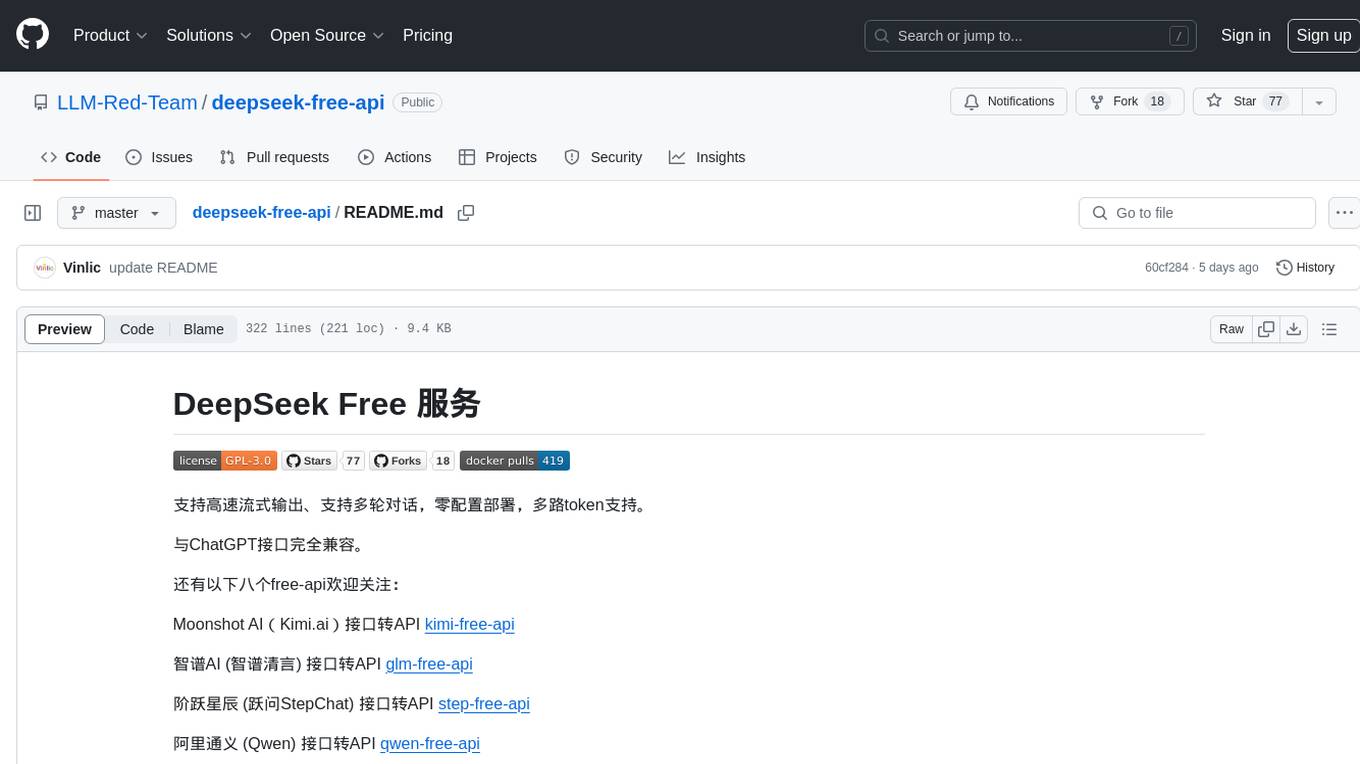

deepseek-free-api

DeepSeek Free API is a high-speed streaming output tool that supports multi-turn conversations and zero-configuration deployment. It is compatible with the ChatGPT interface and offers multiple token support. The tool provides eight free APIs for various AI interfaces. Users can access the tool online, prepare for integration, deploy using Docker, Docker-compose, Render, Vercel, or native deployment methods. It also offers client recommendations for faster integration and supports dialogue completion and userToken live checks. The tool comes with important considerations for Nginx reverse proxy optimization and token statistics.

metaso-free-api

Metaso AI Free service supports high-speed streaming output, secret tower AI super network search (full network or academic as well as concise, in-depth, research three modes), zero-configuration deployment, multi-token support. Fully compatible with ChatGPT interface. It also has seven other free APIs available for use. The tool provides various deployment options such as Docker, Docker-compose, Render, Vercel, and native deployment. Users can access the tool for chat completions and token live checks. Note: Reverse API is unstable, it is recommended to use the official Metaso AI website to avoid the risk of banning. This project is for research and learning purposes only, not for commercial use.

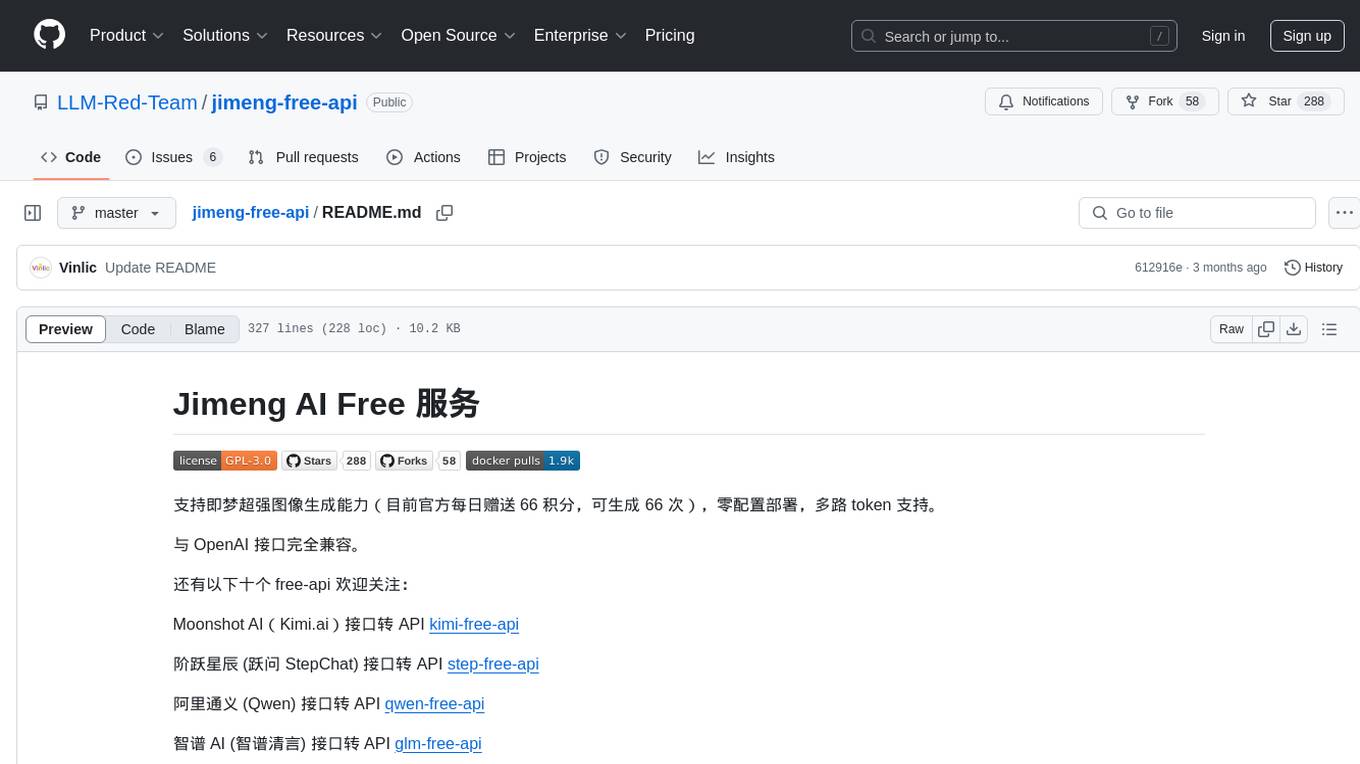

jimeng-free-api

Jimeng AI Free service provides powerful image generation capabilities with zero configuration deployment and support for multiple tokens. It is fully compatible with the OpenAI interface. The repository also includes other free APIs like Moonshot AI, StepChat, Qwen, GLM AI, Metaso AI, Doubao by ByteDance, Spark by Xunfei, Hailuo AI, DeepSeek, and Emohaa AI. Users can access the service by obtaining a sessionid from Jimeng and using it as a Bearer Token in the Authorization header for API requests. The service supports chat completions and image generations, with different models and parameters available for customization. Various deployment options are provided, including Docker, Docker-compose, Render, Vercel, and native deployment. Users are advised to use the recommended client applications for faster and simpler access to the free API services.

emohaa-free-api

Emohaa AI Free API is a free API that allows you to access the Emohaa AI chatbot. Emohaa AI is a powerful chatbot that can understand and respond to a wide range of natural language queries. It can be used for a variety of purposes, such as customer service, information retrieval, and language translation. The Emohaa AI Free API is easy to use and can be integrated into any application. It is a great way to add AI capabilities to your projects without having to build your own chatbot from scratch.

midjourney-proxy

Midjourney Proxy is an open-source project that acts as a proxy for the Midjourney Discord channel, allowing API-based AI drawing calls for charitable purposes. It provides drawing API for free use, ensuring full functionality, security, and minimal memory usage. The project supports various commands and actions related to Imagine, Blend, Describe, and more. It also offers real-time progress tracking, Chinese prompt translation, sensitive word pre-detection, user-token connection via wss for error information retrieval, and various account configuration options. Additionally, it includes features like image zooming, seed value retrieval, account-specific speed mode settings, multiple account configurations, and more. The project aims to support mainstream drawing clients and API calls, with features like task hierarchy, Remix mode, image saving, and CDN acceleration, among others.

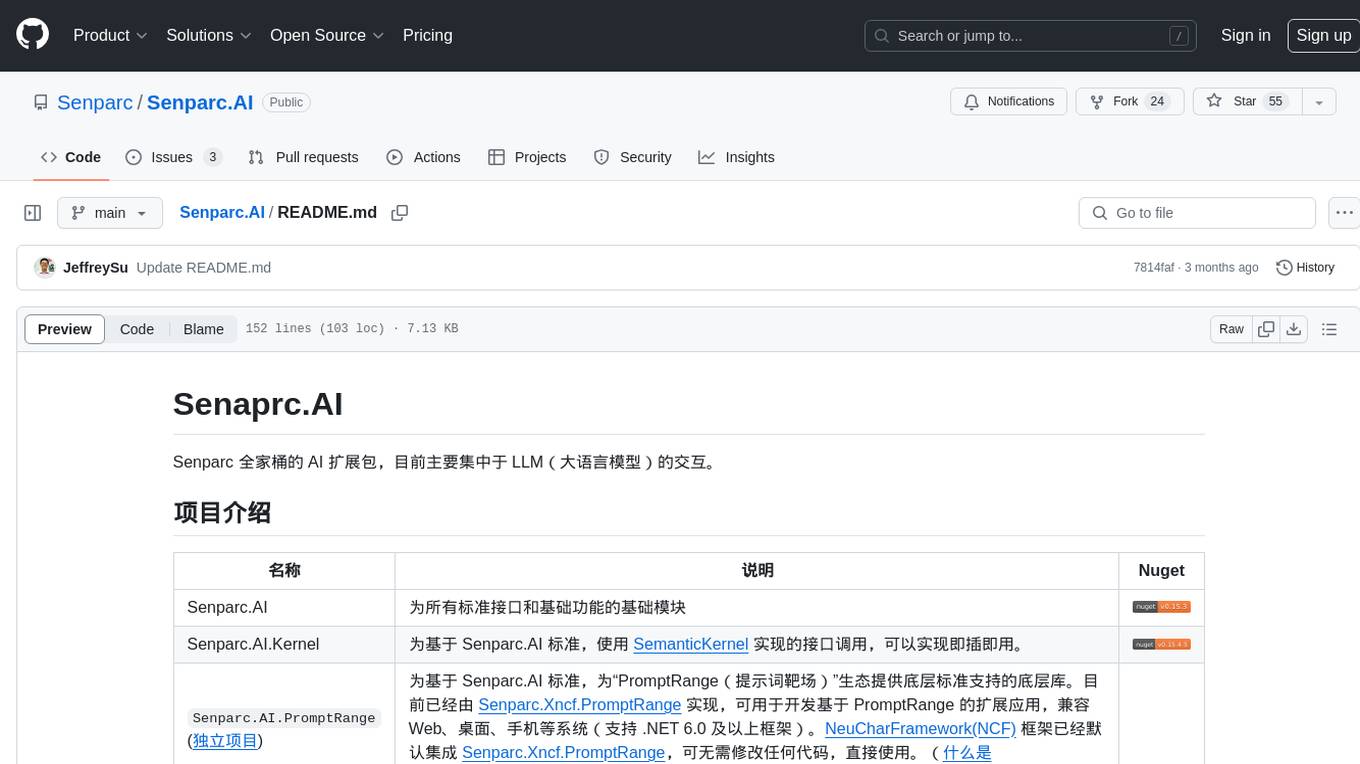

Senparc.AI

Senparc.AI is an AI extension package for the Senparc ecosystem, focusing on LLM (Large Language Models) interaction. It provides modules for standard interfaces and basic functionalities, as well as interfaces using SemanticKernel for plug-and-play capabilities. The package also includes a library for supporting the 'PromptRange' ecosystem, compatible with various systems and frameworks. Users can configure different AI platforms and models, define AI interface parameters, and run AI functions easily. The package offers examples and commands for dialogue, embedding, and DallE drawing operations.

Chat-Style-Bot

Chat-Style-Bot is an intelligent chatbot designed to mimic the chatting style of a specified individual. By analyzing and learning from WeChat chat records, Chat-Style-Bot can imitate your unique chatting style and become your personal chat assistant. Whether it's communicating with friends or handling daily conversations, Chat-Style-Bot can provide a natural, personalized interactive experience.

YesImBot

YesImBot, also known as Athena, is a Koishi plugin designed to allow large AI models to participate in group chat discussions. It offers easy customization of the bot's name, personality, emotions, and other messages. The plugin supports load balancing multiple API interfaces for large models, provides immersive context awareness, blocks potentially harmful messages, and automatically fetches high-quality prompts. Users can adjust various settings for the bot and customize system prompt words. The ultimate goal is to seamlessly integrate the bot into group chats without detection, with ongoing improvements and features like message recognition, emoji sending, multimodal image support, and more.

Gensokyo-llm

Gensokyo-llm is a tool designed for Gensokyo and Onebotv11, providing a one-click solution for large models. It supports various Onebotv11 standard frameworks, HTTP-API, and reverse WS. The tool is lightweight, with built-in SQLite for context maintenance and proxy support. It allows easy integration with the Gensokyo framework by configuring reverse HTTP and forward HTTP addresses. Users can set system settings, role cards, and context length. Additionally, it offers an openai original flavor API with automatic context. The tool can be used as an API or integrated with QQ channel robots. It supports converting GPT's SSE type and ensures memory safety in concurrent SSE environments. The tool also supports multiple users simultaneously transmitting SSE bidirectionally.

For similar tasks

open-webui

Open WebUI is an extensible, feature-rich, and user-friendly self-hosted WebUI designed to operate entirely offline. It supports various LLM runners, including Ollama and OpenAI-compatible APIs. For more information, be sure to check out our Open WebUI Documentation.

mistral.rs

Mistral.rs is a fast LLM inference platform written in Rust. We support inference on a variety of devices, quantization, and easy-to-use application with an Open-AI API compatible HTTP server and Python bindings.

llama-cpp-agent

The llama-cpp-agent framework is a tool designed for easy interaction with Large Language Models (LLMs). Allowing users to chat with LLM models, execute structured function calls and get structured output (objects). It provides a simple yet robust interface and supports llama-cpp-python and OpenAI endpoints with GBNF grammar support (like the llama-cpp-python server) and the llama.cpp backend server. It works by generating a formal GGML-BNF grammar of the user defined structures and functions, which is then used by llama.cpp to generate text valid to that grammar. In contrast to most GBNF grammar generators it also supports nested objects, dictionaries, enums and lists of them.

baml

BAML is a config file format for declaring LLM functions that you can then use in TypeScript or Python. With BAML you can Classify or Extract any structured data using Anthropic, OpenAI or local models (using Ollama) ## Resources  [Discord Community](https://discord.gg/boundaryml)  [Follow us on Twitter](https://twitter.com/boundaryml) * Discord Office Hours - Come ask us anything! We hold office hours most days (9am - 12pm PST). * Documentation - Learn BAML * Documentation - BAML Syntax Reference * Documentation - Prompt engineering tips * Boundary Studio - Observability and more #### Starter projects * BAML + NextJS 14 * BAML + FastAPI + Streaming ## Motivation Calling LLMs in your code is frustrating: * your code uses types everywhere: classes, enums, and arrays * but LLMs speak English, not types BAML makes calling LLMs easy by taking a type-first approach that lives fully in your codebase: 1. Define what your LLM output type is in a .baml file, with rich syntax to describe any field (even enum values) 2. Declare your prompt in the .baml config using those types 3. Add additional LLM config like retries or redundancy 4. Transpile the .baml files to a callable Python or TS function with a type-safe interface. (VSCode extension does this for you automatically). We were inspired by similar patterns for type safety: protobuf and OpenAPI for RPCs, Prisma and SQLAlchemy for databases. BAML guarantees type safety for LLMs and comes with tools to give you a great developer experience:  Jump to BAML code or how Flexible Parsing works without additional LLM calls. | BAML Tooling | Capabilities | | ----------------------------------------------------------------------------------------- | ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | | BAML Compiler install | Transpiles BAML code to a native Python / Typescript library (you only need it for development, never for releases) Works on Mac, Windows, Linux  | | VSCode Extension install | Syntax highlighting for BAML files Real-time prompt preview Testing UI | | Boundary Studio open (not open source) | Type-safe observability Labeling |

wenxin-starter

WenXin-Starter is a spring-boot-starter for Baidu's "Wenxin Qianfan WENXINWORKSHOP" large model, which can help you quickly access Baidu's AI capabilities. It fully integrates the official API documentation of Wenxin Qianfan. Supports text-to-image generation, built-in dialogue memory, and supports streaming return of dialogue. Supports QPS control of a single model and supports queuing mechanism. Plugins will be added soon.

intel-extension-for-transformers

Intel® Extension for Transformers is an innovative toolkit designed to accelerate GenAI/LLM everywhere with the optimal performance of Transformer-based models on various Intel platforms, including Intel Gaudi2, Intel CPU, and Intel GPU. The toolkit provides the below key features and examples: * Seamless user experience of model compressions on Transformer-based models by extending [Hugging Face transformers](https://github.com/huggingface/transformers) APIs and leveraging [Intel® Neural Compressor](https://github.com/intel/neural-compressor) * Advanced software optimizations and unique compression-aware runtime (released with NeurIPS 2022's paper [Fast Distilbert on CPUs](https://arxiv.org/abs/2211.07715) and [QuaLA-MiniLM: a Quantized Length Adaptive MiniLM](https://arxiv.org/abs/2210.17114), and NeurIPS 2021's paper [Prune Once for All: Sparse Pre-Trained Language Models](https://arxiv.org/abs/2111.05754)) * Optimized Transformer-based model packages such as [Stable Diffusion](examples/huggingface/pytorch/text-to-image/deployment/stable_diffusion), [GPT-J-6B](examples/huggingface/pytorch/text-generation/deployment), [GPT-NEOX](examples/huggingface/pytorch/language-modeling/quantization#2-validated-model-list), [BLOOM-176B](examples/huggingface/pytorch/language-modeling/inference#BLOOM-176B), [T5](examples/huggingface/pytorch/summarization/quantization#2-validated-model-list), [Flan-T5](examples/huggingface/pytorch/summarization/quantization#2-validated-model-list), and end-to-end workflows such as [SetFit-based text classification](docs/tutorials/pytorch/text-classification/SetFit_model_compression_AGNews.ipynb) and [document level sentiment analysis (DLSA)](workflows/dlsa) * [NeuralChat](intel_extension_for_transformers/neural_chat), a customizable chatbot framework to create your own chatbot within minutes by leveraging a rich set of [plugins](https://github.com/intel/intel-extension-for-transformers/blob/main/intel_extension_for_transformers/neural_chat/docs/advanced_features.md) such as [Knowledge Retrieval](./intel_extension_for_transformers/neural_chat/pipeline/plugins/retrieval/README.md), [Speech Interaction](./intel_extension_for_transformers/neural_chat/pipeline/plugins/audio/README.md), [Query Caching](./intel_extension_for_transformers/neural_chat/pipeline/plugins/caching/README.md), and [Security Guardrail](./intel_extension_for_transformers/neural_chat/pipeline/plugins/security/README.md). This framework supports Intel Gaudi2/CPU/GPU. * [Inference](https://github.com/intel/neural-speed/tree/main) of Large Language Model (LLM) in pure C/C++ with weight-only quantization kernels for Intel CPU and Intel GPU (TBD), supporting [GPT-NEOX](https://github.com/intel/neural-speed/tree/main/neural_speed/models/gptneox), [LLAMA](https://github.com/intel/neural-speed/tree/main/neural_speed/models/llama), [MPT](https://github.com/intel/neural-speed/tree/main/neural_speed/models/mpt), [FALCON](https://github.com/intel/neural-speed/tree/main/neural_speed/models/falcon), [BLOOM-7B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/bloom), [OPT](https://github.com/intel/neural-speed/tree/main/neural_speed/models/opt), [ChatGLM2-6B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/chatglm), [GPT-J-6B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/gptj), and [Dolly-v2-3B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/gptneox). Support AMX, VNNI, AVX512F and AVX2 instruction set. We've boosted the performance of Intel CPUs, with a particular focus on the 4th generation Intel Xeon Scalable processor, codenamed [Sapphire Rapids](https://www.intel.com/content/www/us/en/products/docs/processors/xeon-accelerated/4th-gen-xeon-scalable-processors.html).

bce-qianfan-sdk

The Qianfan SDK provides best practices for large model toolchains, allowing AI workflows and AI-native applications to access the Qianfan large model platform elegantly and conveniently. The core capabilities of the SDK include three parts: large model reasoning, large model training, and general and extension: * `Large model reasoning`: Implements interface encapsulation for reasoning of Yuyan (ERNIE-Bot) series, open source large models, etc., supporting dialogue, completion, Embedding, etc. * `Large model training`: Based on platform capabilities, it supports end-to-end large model training process, including training data, fine-tuning/pre-training, and model services. * `General and extension`: General capabilities include common AI development tools such as Prompt/Debug/Client. The extension capability is based on the characteristics of Qianfan to adapt to common middleware frameworks.

lmdeploy

LMDeploy is a toolkit for compressing, deploying, and serving LLM, developed by the MMRazor and MMDeploy teams. It has the following core features: * **Efficient Inference** : LMDeploy delivers up to 1.8x higher request throughput than vLLM, by introducing key features like persistent batch(a.k.a. continuous batching), blocked KV cache, dynamic split&fuse, tensor parallelism, high-performance CUDA kernels and so on. * **Effective Quantization** : LMDeploy supports weight-only and k/v quantization, and the 4-bit inference performance is 2.4x higher than FP16. The quantization quality has been confirmed via OpenCompass evaluation. * **Effortless Distribution Server** : Leveraging the request distribution service, LMDeploy facilitates an easy and efficient deployment of multi-model services across multiple machines and cards. * **Interactive Inference Mode** : By caching the k/v of attention during multi-round dialogue processes, the engine remembers dialogue history, thus avoiding repetitive processing of historical sessions.

For similar jobs

h2ogpt

h2oGPT is an Apache V2 open-source project that allows users to query and summarize documents or chat with local private GPT LLMs. It features a private offline database of any documents (PDFs, Excel, Word, Images, Video Frames, Youtube, Audio, Code, Text, MarkDown, etc.), a persistent database (Chroma, Weaviate, or in-memory FAISS) using accurate embeddings (instructor-large, all-MiniLM-L6-v2, etc.), and efficient use of context using instruct-tuned LLMs (no need for LangChain's few-shot approach). h2oGPT also offers parallel summarization and extraction, reaching an output of 80 tokens per second with the 13B LLaMa2 model, HYDE (Hypothetical Document Embeddings) for enhanced retrieval based upon LLM responses, a variety of models supported (LLaMa2, Mistral, Falcon, Vicuna, WizardLM. With AutoGPTQ, 4-bit/8-bit, LORA, etc.), GPU support from HF and LLaMa.cpp GGML models, and CPU support using HF, LLaMa.cpp, and GPT4ALL models. Additionally, h2oGPT provides Attention Sinks for arbitrarily long generation (LLaMa-2, Mistral, MPT, Pythia, Falcon, etc.), a UI or CLI with streaming of all models, the ability to upload and view documents through the UI (control multiple collaborative or personal collections), Vision Models LLaVa, Claude-3, Gemini-Pro-Vision, GPT-4-Vision, Image Generation Stable Diffusion (sdxl-turbo, sdxl) and PlaygroundAI (playv2), Voice STT using Whisper with streaming audio conversion, Voice TTS using MIT-Licensed Microsoft Speech T5 with multiple voices and Streaming audio conversion, Voice TTS using MPL2-Licensed TTS including Voice Cloning and Streaming audio conversion, AI Assistant Voice Control Mode for hands-free control of h2oGPT chat, Bake-off UI mode against many models at the same time, Easy Download of model artifacts and control over models like LLaMa.cpp through the UI, Authentication in the UI by user/password via Native or Google OAuth, State Preservation in the UI by user/password, Linux, Docker, macOS, and Windows support, Easy Windows Installer for Windows 10 64-bit (CPU/CUDA), Easy macOS Installer for macOS (CPU/M1/M2), Inference Servers support (oLLaMa, HF TGI server, vLLM, Gradio, ExLLaMa, Replicate, OpenAI, Azure OpenAI, Anthropic), OpenAI-compliant, Server Proxy API (h2oGPT acts as drop-in-replacement to OpenAI server), Python client API (to talk to Gradio server), JSON Mode with any model via code block extraction. Also supports MistralAI JSON mode, Claude-3 via function calling with strict Schema, OpenAI via JSON mode, and vLLM via guided_json with strict Schema, Web-Search integration with Chat and Document Q/A, Agents for Search, Document Q/A, Python Code, CSV frames (Experimental, best with OpenAI currently), Evaluate performance using reward models, and Quality maintained with over 1000 unit and integration tests taking over 4 GPU-hours.

mistral.rs

Mistral.rs is a fast LLM inference platform written in Rust. We support inference on a variety of devices, quantization, and easy-to-use application with an Open-AI API compatible HTTP server and Python bindings.

ollama

Ollama is a lightweight, extensible framework for building and running language models on the local machine. It provides a simple API for creating, running, and managing models, as well as a library of pre-built models that can be easily used in a variety of applications. Ollama is designed to be easy to use and accessible to developers of all levels. It is open source and available for free on GitHub.

llama-cpp-agent

The llama-cpp-agent framework is a tool designed for easy interaction with Large Language Models (LLMs). Allowing users to chat with LLM models, execute structured function calls and get structured output (objects). It provides a simple yet robust interface and supports llama-cpp-python and OpenAI endpoints with GBNF grammar support (like the llama-cpp-python server) and the llama.cpp backend server. It works by generating a formal GGML-BNF grammar of the user defined structures and functions, which is then used by llama.cpp to generate text valid to that grammar. In contrast to most GBNF grammar generators it also supports nested objects, dictionaries, enums and lists of them.

llama_ros

This repository provides a set of ROS 2 packages to integrate llama.cpp into ROS 2. By using the llama_ros packages, you can easily incorporate the powerful optimization capabilities of llama.cpp into your ROS 2 projects by running GGUF-based LLMs and VLMs.

MITSUHA

OneReality is a virtual waifu/assistant that you can speak to through your mic and it'll speak back to you! It has many features such as: * You can speak to her with a mic * It can speak back to you * Has short-term memory and long-term memory * Can open apps * Smarter than you * Fluent in English, Japanese, Korean, and Chinese * Can control your smart home like Alexa if you set up Tuya (more info in Prerequisites) It is built with Python, Llama-cpp-python, Whisper, SpeechRecognition, PocketSphinx, VITS-fast-fine-tuning, VITS-simple-api, HyperDB, Sentence Transformers, and Tuya Cloud IoT.

wenxin-starter

WenXin-Starter is a spring-boot-starter for Baidu's "Wenxin Qianfan WENXINWORKSHOP" large model, which can help you quickly access Baidu's AI capabilities. It fully integrates the official API documentation of Wenxin Qianfan. Supports text-to-image generation, built-in dialogue memory, and supports streaming return of dialogue. Supports QPS control of a single model and supports queuing mechanism. Plugins will be added soon.

FlexFlow

FlexFlow Serve is an open-source compiler and distributed system for **low latency**, **high performance** LLM serving. FlexFlow Serve outperforms existing systems by 1.3-2.0x for single-node, multi-GPU inference and by 1.4-2.4x for multi-node, multi-GPU inference.