PrivHunterAI

Stars: 238

PrivHunterAI detects broken access control (unauthorized access) vulnerabilities through passive proxying, using mainstream AI engines such as Kimi, DeepSeek, and GPT. Its core detection relies on the open APIs of these engines, and it supports traffic sent over HTTPS. Recent updates added a retry mechanism for failed scans, a response Content-Type whitelist, a cap on the size of each AI request, URL analysis, a frontend results view, extra headers for the replayed request, a keyword pre-filter that skips the AI call to save cost, and terminal logging of request packets.

README:

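A tool that detects broken access control (unauthorized access) vulnerabilities through a passive proxy, using mainstream AI models such as Kimi, DeepSeek, and GPT. Its core detection is built on the open APIs of these AI engines, and it supports traffic sent over HTTPS.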

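- 2025.02.18
  - ⭐️ Added a retry mechanism for failed scans, so that requests are not missed;
  - ⭐️ Added a response Content-Type whitelist, so that static files are not scanned;
  - ⭐️ Added a limit on the maximum number of bytes sent to the AI per scan, to avoid scan failures caused by oversized request packets.
- 2025.02.25 - 02.27
  - ⭐️ Added URL analysis (a preliminary judgment of whether the endpoint may be a public interface that needs no data-level authorization);
  - ⭐️ Added a frontend view of the results;
  - ⭐️ Added support for attaching other headers to request B (for cases where authentication is not carried in the cookie).
- 2025.03.01
  - Optimized the prompt to reduce the false-positive rate;
  - Improved the retry mechanism. A retry prints a message such as `AI分析异常,重试中,异常原因: API returned 401: {"code":"InvalidApiKey","message":"Invalid API-key provided.","request_id":"xxxxx"}` ("AI analysis error, retrying, cause: ..."). It retries every 10 seconds and gives up after 5 failed retries, to avoid retrying forever.
- 2025.03.03
  - 💰 Cost optimization: before calling the AI to judge unauthorized access, responses are now checked against authorization keywords (such as “暂无查询权限” (no query permission) and “权限不足” (insufficient permissions)). If a keyword matches, the tool outputs a "not vulnerable" result directly, which saves AI tokens.
- 2025.03.21
  - ⭐️ Added terminal output of request packet records.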
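The retry behaviour from the 2025.03.01 entry (wait 10 seconds between attempts, give up after 5 failed retries) can be sketched as follows. This is a minimal illustration in Python, not the tool's Go source: `call_with_retry`, `analyze`, and the injectable `sleep` parameter are hypothetical names, and it assumes that "5 retries" means five attempts after the initial call.

```python
import time
from typing import Callable, Optional


def call_with_retry(
    analyze: Callable[[], str],
    max_retries: int = 5,
    delay_seconds: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[str]:
    """Run analyze(); on an exception, wait and retry up to max_retries times.

    Returns the result of the first successful call, or None once the
    retry budget is used up (so a bad API key cannot loop forever).
    """
    retries_done = 0
    while True:
        try:
            return analyze()
        except Exception as exc:  # assumption: any error from the AI call is retryable
            if retries_done >= max_retries:
                print(f"AI analysis failed after {max_retries} retries: {exc}")
                return None
            retries_done += 1
            print(
                f"AI analysis error, retry {retries_done}/{max_retries} "
                f"in {delay_seconds}s, cause: {exc}"
            )
            sleep(delay_seconds)
```

Passing `sleep=lambda s: None` makes the function easy to exercise without waiting. A fixed delay is used here because that is what the changelog describes; exponential backoff would be a different design.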

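{
  "role": "You are an AI responsible for detecting potential unauthorized access by comparing two HTTP response packets, and you make the judgment yourself.",
  "inputs": {
    "reqA": "The original request A",
    "responseA": "The response to account A's request to the URL.",
    "responseB": "The response to the request replayed with account B's Cookie (or another parameter such as a token).",
    "statusB": "The HTTP status code of the request replayed by account B.",
    "dynamicFields": ["timestamp", "nonce", "session_id", "uuid", "request_id"]
  },
  "analysisRequirements": {
    "structureAndContentComparison": {
      "urlAnalysis": "Analyze the original request A together with response A to judge whether this may be a public interface that needs no data-level authorization (not a primary basis for the judgment).",
      "responseComparison": "Compare the structure and content of response A and response B, ignoring dynamic fields (such as timestamps, nonces, session IDs, X-Request-ID), and match them semantically.",
      "httpStatusCode": "Compare HTTP status codes: 403/401 directly means unauthorized access failed (false), 500 is marked unknown, and 200 needs further analysis.",
      "similarityAnalysis": "Use field-by-field comparison and text similarity (Levenshtein/Jaccard) to assess how similar the content is.",
      "errorKeywords": "Check whether responseB contains error messages such as 'Access Denied', 'Permission Denied', or '403 Forbidden'; if so, unauthorized access failed.",
      "emptyResponseHandling": "If responseB returns null, [], {}, or HTTP 204 while responseA has data, judge that access is restricted (false).",
      "sensitiveDataDetection": "If responseB contains sensitive data from responseA (such as user_id, email, balance), judge that unauthorized access succeeded (true).",
      "consistencyCheck": "If responseB and responseA have the same structure but different key data, judge that access control is probably correct (false)."
    },
    "judgmentCriteria": {
      "authorizationSuccess (true)": "If this is not a public interface, and responseB's structure and non-dynamic field content are highly similar to responseA, or responseB contains sensitive data from responseA, judge that unauthorized access succeeded.",
      "authorizationFailure (false)": "If this is a public interface, or responseB's structure is not similar to responseA, or responseB explicitly reports a permission error (403/401/Access Denied), or responseB is empty, judge that unauthorized access failed.",
      "unknown": "If responseB returns 500, or responseA and responseB differ in structure without any permission-related information, or responseB matches only some fields and the impact cannot be determined, judge unknown."
    }
  },
  "outputFormat": {
    "json": {
      "res": "\"true\", \"false\", or \"unknown\"",
      "reason": "A clear reason for the judgment, no more than 50 characters in total."
    }
  },
  "notes": [
    "Output only the JSON result, with no extra text or explanation.",
    "Make sure the JSON is well-formed so it can be processed downstream.",
    "Stay objective and analyze only the response content.",
    "Prefer HTTP status codes, error messages, and data-structure matching when judging.",
    "Support additional user-supplied dynamic fields to improve matching accuracy."
  ],
  "process": [
    "Receive and understand the original request A, responseA, and responseB.",
    "Analyze the original request A to judge whether it is a public interface that needs no authorization.",
    "Extract and ignore dynamic fields (timestamps, nonces, session IDs).",
    "Compare HTTP status codes: 403/401 means false directly, and 500 is marked unknown.",
    "Check whether responseB contains sensitive data from responseA (such as user_id, email); if so, judge true.",
    "Check whether responseB returns an error message (Access Denied / Forbidden); if so, judge false.",
    "Compute the structural similarity of responseA and responseB, and use Levenshtein edit distance for text similarity, ignoring dynamic fields (such as timestamps, nonces, session IDs, X-Request-ID).",
    "If responseB's content is empty (null, {}, []), judge that access is probably restricted, i.e. false.",
    "Return the JSON result based on the analysis."
  ]
}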
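Several of the rules in this prompt are deterministic and can be illustrated without a model. The sketch below, in Python, shows one possible reading of three of them: the status-code triage, the empty-response rule, and a Jaccard similarity computed after dropping the `dynamicFields`. It is an illustration of the rules the prompt asks the AI to apply, not code from the tool; the function names, the `path=value` flattening, and treating an empty body string as empty are all assumptions.

```python
import json
from typing import Any, Optional, Set

# Dynamic field names taken from the prompt's "dynamicFields" input.
DYNAMIC_FIELDS = {"timestamp", "nonce", "session_id", "uuid", "request_id"}
# Bodies treated as "no data"; the empty string is an added assumption.
EMPTY_BODIES = {"", "null", "[]", "{}"}


def triage(status_b: int, body_a: str, body_b: str) -> Optional[str]:
    """Return "false" or "unknown" when status or emptiness decides, else None."""
    if status_b in (401, 403):
        return "false"
    if status_b == 500:
        return "unknown"
    b_is_empty = status_b == 204 or body_b.strip() in EMPTY_BODIES
    if b_is_empty and body_a.strip() not in EMPTY_BODIES:
        return "false"
    return None  # e.g. a 200 with content needs further analysis


def strip_dynamic(value: Any) -> Any:
    """Recursively drop keys listed in DYNAMIC_FIELDS from parsed JSON."""
    if isinstance(value, dict):
        return {k: strip_dynamic(v) for k, v in value.items() if k not in DYNAMIC_FIELDS}
    if isinstance(value, list):
        return [strip_dynamic(v) for v in value]
    return value


def flatten(value: Any, prefix: str = "") -> Set[str]:
    """Turn parsed JSON into a set of "path=value" strings, one per leaf."""
    items: Set[str] = set()
    if isinstance(value, dict):
        for key, child in value.items():
            items |= flatten(child, f"{prefix}.{key}" if prefix else key)
    elif isinstance(value, list):
        for index, child in enumerate(value):
            items |= flatten(child, f"{prefix}[{index}]")
    else:
        items.add(f"{prefix}={value!r}")
    return items


def similarity(body_a: str, body_b: str) -> float:
    """Jaccard similarity of two JSON bodies, ignoring dynamic fields."""
    a = flatten(strip_dynamic(json.loads(body_a)))
    b = flatten(strip_dynamic(json.loads(body_b)))
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)
```

For two bodies that differ only in `timestamp`, `similarity` returns 1.0, which under the prompt's criteria would point toward a `true` verdict for a non-public interface. The semantic judgments (public-interface detection, sensitive-data detection) are left to the AI and are not modelled here.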

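- Download the source code or a build from Releases;
- Edit `config.json` in the root directory and configure `AI` and the corresponding `apiKeys` (only one needs to be configured). `AI` can be set to qianwen, kimi, hunyuan, gpt, glm, or deepseek;
- Configure `headers2` (the headers for request B); `suffixes` and `allowedRespHeaders` can be configured as needed (the endpoint suffix whitelist, e.g. .js);
- Run `go build` to compile the project, then run the binary (if you downloaded a Release, you can run the binary directly);
- After the first start, install the certificate so that HTTPS traffic can be parsed. The certificate is generated automatically on first start at `~/.mitmproxy/mitmproxy-ca-cert.pem` (on Windows, `%USERPROFILE%\.mitmproxy\mitmproxy-ca-cert.pem`). For installation steps, see the Python mitmproxy documentation: About Certificates.
- Set `127.0.0.1:9080` as the upstream proxy in BurpSuite (the port can be changed in `mitmproxy.go` at `Addr:":9080",`) to start scanning;
- Scan results can be viewed in both the terminal and the web interface; to view them in the frontend, visit `127.0.0.1:8222`.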

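| Field | Purpose | Example |
|---|---|---|
| AI | The AI model to use | qianwen, kimi, hunyuan, gpt, glm, or deepseek |
| apiKeys | API keys for the AI services (only one is needed, matching AI) | "kimi": "sk-xxxxxxx"; "deepseek": "sk-yyyyyyy"; "qianwen": "sk-zzzzzzz"; "hunyuan": "sk-aaaaaaa" |
| headers2 | Custom HTTP request headers for request B | "Cookie": "Cookie2"; "User-Agent": "PrivHunterAI"; "Custom-Header": "CustomValue" |
| suffixes | File suffixes to filter out | .js, .ico, .png, .jpg, .jpeg |
| allowedRespHeaders | Response Content-Type values to filter out | image/png, text/html, application/pdf, text/css, audio/mpeg, audio/wav, video/mp4, application/grpc |
| respBodyBWhiteList | Authorization keywords (such as 暂无查询权限 or 权限不足), used to pre-screen endpoints that are not vulnerable | 参数错误 (parameter error); 数据页数不正确 (incorrect page number); 文件不存在 (file does not exist); 系统繁忙,请稍后再试 (system busy, try again later); 请求参数格式不正确 (malformed request parameters); 权限不足 (insufficient permissions); Token不可为空 (token must not be empty); 内部错误 (internal error) |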
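To show how these fields might work together, here is a minimal Python sketch of the two cheap checks that can run before any AI call: skipping static resources by suffix or Content-Type, and short-circuiting on an authorization keyword in response B. The field names come from the table; the shape of `SAMPLE_CONFIG`, the helper names `should_skip` and `prefilter`, and the exact matching rules are assumptions rather than the tool's actual Go implementation.

```python
from typing import Optional
from urllib.parse import urlparse

# Hypothetical config mirroring the fields in the table above.
SAMPLE_CONFIG = {
    "AI": "kimi",
    "apiKeys": {"kimi": "sk-xxxxxxx"},
    "headers2": {"Cookie": "Cookie2", "User-Agent": "PrivHunterAI"},
    "suffixes": [".js", ".ico", ".png", ".jpg", ".jpeg"],
    "allowedRespHeaders": ["image/png", "text/html", "text/css"],
    "respBodyBWhiteList": ["权限不足", "Token不可为空", "参数错误"],
}


def should_skip(url: str, content_type: str, cfg: dict) -> bool:
    """True when the URL suffix or response Content-Type marks a static resource."""
    path = urlparse(url).path.lower()  # the query string is ignored
    if any(path.endswith(suffix) for suffix in cfg["suffixes"]):
        return True
    # Drop parameters such as "; charset=utf-8" before comparing.
    media_type = content_type.split(";", 1)[0].strip().lower()
    return media_type in cfg["allowedRespHeaders"]


def prefilter(body_b: str, cfg: dict) -> Optional[dict]:
    """Return a "false" verdict if response B contains an authorization keyword.

    Returning None means no keyword matched and the AI should be consulted.
    """
    for keyword in cfg["respBodyBWhiteList"]:
        if keyword in body_b:
            return {"res": "false", "reason": f"matched keyword: {keyword}"}
    return None
```

With this config, `should_skip("https://x.test/static/app.js?v=3", "application/javascript", SAMPLE_CONFIG)` is true because of the `.js` suffix, and `prefilter('{"msg": "权限不足"}', SAMPLE_CONFIG)` yields a `false` verdict without spending any tokens.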

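Still being optimized; the current output looks like this:

- Terminal output:

- Frontend output (visit 127.0.0.1:8222):

Disclaimer: for technical exchange only. Do not use it for illegal purposes.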

Alternative AI tools for PrivHunterAI

Similar Open Source Tools

qwen-free-api

Qwen AI Free service supports high-speed streaming output, multi-turn dialogue, watermark-free AI drawing, long document interpretation, image parsing, zero-configuration deployment, multi-token support, automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository provides various free APIs for different AI services. Users can access the service through different deployment methods like Docker, Docker-compose, Render, Vercel, and native deployment. It offers interfaces for chat completions, AI drawing, document interpretation, image parsing, and token checking. Users need to provide 'login_tongyi_ticket' for authorization. The project emphasizes research, learning, and personal use only, discouraging commercial use to avoid service pressure on the official platform.

step-free-api

The StepChat Free service provides high-speed streaming output, multi-turn dialogue support, online search support, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. Additionally, it provides seven other free APIs for various services. The repository includes a disclaimer about using reverse APIs and encourages users to avoid commercial use to prevent service pressure on the official platform. It offers online testing links, showcases different demos, and provides deployment guides for Docker, Docker-compose, Render, Vercel, and native deployments. The repository also includes information on using multiple accounts, optimizing Nginx reverse proxy, and checking the liveliness of refresh tokens.

spark-free-api

The Spark AI Free service provides high-speed streaming output, multi-turn dialogue support, AI drawing support, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository includes multiple free-api projects for various AI services. Users can access the API for tasks such as chat completions, AI drawing, document interpretation, image analysis, and ssoSessionId live checking. The project also provides guidelines for deployment using Docker, Docker-compose, Render, Vercel, and native deployment methods. It recommends using custom clients for faster and simpler access to the free-api series projects.

glm-free-api

The GLM AI Free service provides high-speed streaming output, multi-turn dialogue, intelligent agent dialogue, AI drawing, online search, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository also includes six other free APIs for various services like Moonshot AI, StepChat, Qwen, Metaso, Spark, and Emohaa. The tool supports tasks such as chat completions, AI drawing, document interpretation, image parsing, and refresh token survival check.

ai

Ai is a Japanese bot for Misskey, designed to provide various functionalities such as posting random notes, learning keywords, playing Reversi, server monitoring, and more. Users can interact with Ai by setting up a `config.json` file with specific parameters. The tool can be installed using Node.js and npm, with optional dependencies like MeCab for additional features. Ai can also be run using Docker for easier deployment. Some features may require specific fonts to be installed in the directory. Ai stores its memory using an in-memory database, ensuring persistence across sessions. The tool is licensed under MIT and has received the 'Works on my machine' award.

newapi-ai-check-in

The newapi.ai-check-in repository is designed for automatically signing in with multiple accounts on public welfare websites. It supports various features such as single/multiple account automatic check-ins, multiple robot notifications, Linux.do and GitHub login authentication, and Cloudflare bypass. Users can fork the repository, set up GitHub environment secrets, configure multiple account formats, enable GitHub Actions, and test the check-in process manually. The script runs every 8 hours and users can trigger manual check-ins at any time. Notifications can be enabled through email, DingTalk, Feishu, WeChat Work, PushPlus, ServerChan, and Telegram bots. To prevent automatic disabling of Actions due to inactivity, users can set up a trigger PAT in the GitHub settings. The repository also provides troubleshooting tips for failed check-ins and instructions for setting up a local development environment.

chatgpt-on-wechat

This project is a smart chatbot based on large models, with support for WeChat, WeChat Official Accounts, Feishu, and DingTalk. You can choose from GPT3.5/GPT4.0/Claude/Wenxin Yiyan/Xunfei Xinghuo/Tongyi Qianwen/Gemini/LinkAI/ZhipuAI. It can process text, voice, and images, and can access external resources such as the operating system and the Internet through plugins. It also supports building enterprise AI applications on proprietary knowledge bases.

kimi-free-api

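The KIMI AI Free service supports high-speed streaming output, multi-turn dialogue, online search, long document interpretation, and image parsing, with zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. Five other free-api projects are also available: step-free-api for StepChat (阶跃星辰 跃问), qwen-free-api for Alibaba Tongyi (Qwen), glm-free-api for ZhipuAI (智谱清言), metaso-free-api for Metaso AI (秘塔AI), and emohaa-free-api for Emohaa (聆心智能).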

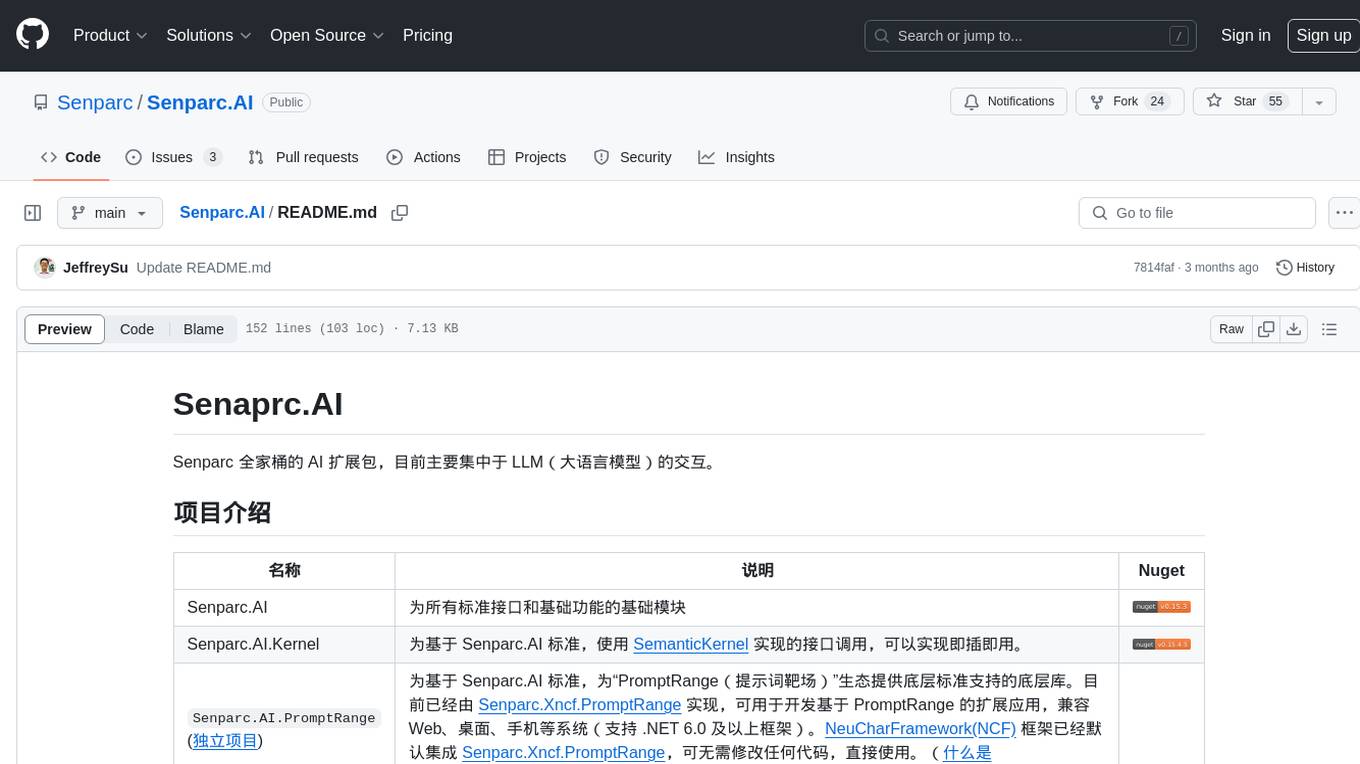

Senparc.AI

Senparc.AI is an AI extension package for the Senparc ecosystem, focusing on LLM (Large Language Models) interaction. It provides modules for standard interfaces and basic functionalities, as well as interfaces using SemanticKernel for plug-and-play capabilities. The package also includes a library for supporting the 'PromptRange' ecosystem, compatible with various systems and frameworks. Users can configure different AI platforms and models, define AI interface parameters, and run AI functions easily. The package offers examples and commands for dialogue, embedding, and DallE drawing operations.

llm_finetuning

This repository provides a comprehensive set of tools for fine-tuning large language models (LLMs) using various techniques, including full parameter training, LoRA (Low-Rank Adaptation), and P-Tuning V2. It supports a wide range of LLM models, including Qwen, Yi, Llama, and others. The repository includes scripts for data preparation, training, and inference, making it easy for users to fine-tune LLMs for specific tasks. Additionally, it offers a collection of pre-trained models and provides detailed documentation and examples to guide users through the process.

Gensokyo-llm

Gensokyo-llm is a tool designed for Gensokyo and Onebotv11, providing a one-click solution for large models. It supports various Onebotv11 standard frameworks, HTTP-API, and reverse WS. The tool is lightweight, with built-in SQLite for context maintenance and proxy support. It allows easy integration with the Gensokyo framework by configuring reverse HTTP and forward HTTP addresses. Users can set system settings, role cards, and context length. Additionally, it offers an openai original flavor API with automatic context. The tool can be used as an API or integrated with QQ channel robots. It supports converting GPT's SSE type and ensures memory safety in concurrent SSE environments. The tool also supports multiple users simultaneously transmitting SSE bidirectionally.

illufly

illufly is an Agent framework with self-evolution capabilities, aiming to quickly create value based on self-evolution. It is designed to have self-evolution capabilities in various scenarios such as intent guessing, Q&A experience, data recall rate, and tool planning ability. The framework supports continuous dialogue, built-in RAG support, and self-evolution during conversations. It also provides tools for managing experience data and supports multiple agents collaboration.

Chat-Style-Bot

Chat-Style-Bot is an intelligent chatbot designed to mimic the chatting style of a specified individual. By analyzing and learning from WeChat chat records, Chat-Style-Bot can imitate your unique chatting style and become your personal chat assistant. Whether it's communicating with friends or handling daily conversations, Chat-Style-Bot can provide a natural, personalized interactive experience.

EasyAIVtuber

EasyAIVtuber is a tool designed to animate 2D waifus by providing features like automatic idle actions, speaking animations, head nodding, singing animations, and sleeping mode. It also offers API endpoints and a web UI for interaction. The tool requires dependencies like torch and pre-trained models for optimal performance. Users can easily test the tool using OBS and UnityCapture, with options to customize character input, output size, simplification level, webcam output, model selection, port configuration, sleep interval, and movement extension. The tool also provides an API using Flask for actions like speaking based on audio, rhythmic movements, singing based on music and voice, stopping current actions, and changing images.

For similar tasks

PrivHunterAI

PrivHunterAI is a tool that detects authorization vulnerabilities using mainstream AI engines such as Kimi, DeepSeek, and GPT through passive proxying. The core detection function relies on open APIs of related AI engines and supports data transmission and interaction over HTTPS protocol. It continuously improves by adding features like scan failure retry mechanism, response Content-Type whitelist, limiting AI request size, URL analysis, frontend result display, additional headers for requests, cost optimization by filtering authorization keywords before calling AI, and terminal output of request package records.

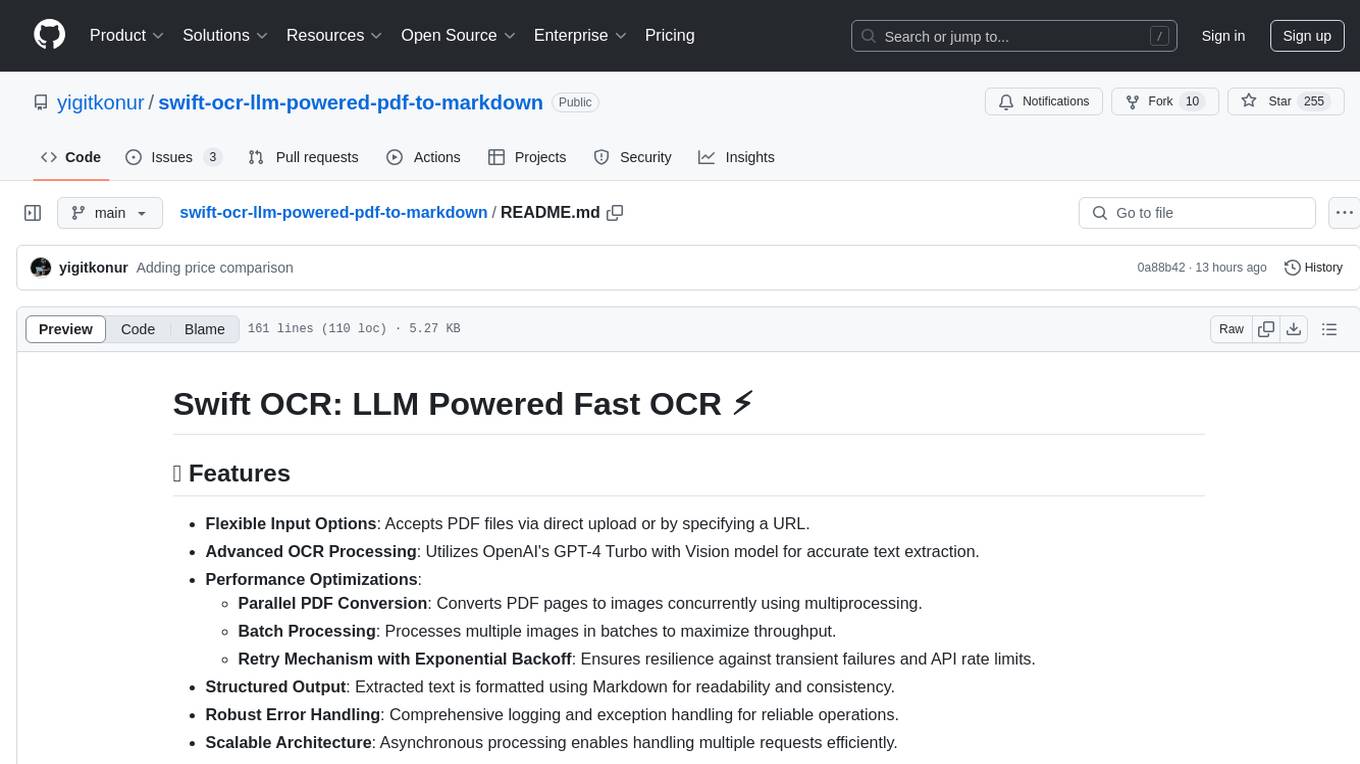

swift-ocr-llm-powered-pdf-to-markdown

Swift OCR is a powerful tool for extracting text from PDF files using OpenAI's GPT-4 Turbo with Vision model. It offers flexible input options, advanced OCR processing, performance optimizations, structured output, robust error handling, and scalable architecture. The tool ensures accurate text extraction, resilience against failures, and efficient handling of multiple requests.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.