Best AI tools for: Optimize Cost

20 - AI tool Sites

cloudNito

cloudNito is an AI-driven platform that specializes in cloud cost optimization and management for businesses using AWS services. The platform offers automated cost optimization, comprehensive insights and analytics, unified cloud management, anomaly detection, cost and usage explorer, recommendations for waste reduction, and resource optimization. By leveraging advanced AI solutions, cloudNito aims to help businesses efficiently manage their AWS cloud resources, reduce costs, and enhance performance.

PrimeOrbit

PrimeOrbit is an AI-driven cloud cost optimization platform for enterprises, aimed at streamlining operations, simplifying cost management, and improving ROI. It offers AI-driven optimization recommendations, automated cost allocation, and tailored FinOps workflows. PrimeOrbit positions itself around a user-centric approach, the quality of its AI recommendations, customization, and flexible enterprise workflows. It lists AWS, Azure, and GCP as supported cloud providers, with full GCP and Kubernetes support described as coming soon. The platform aims for complete cost allocation across cloud resources so that decision-makers can optimize cloud spending.

Keebo

Keebo is an AI tool for Snowflake optimization, offering automated query, cost, and tuning optimization. It describes itself as the only fully automated Snowflake optimizer, adjusting dynamically to save customers 25% or more. Keebo's patented technology, based on academic research, optimizes warehouse size, clustering, and memory without impacting performance. It learns and adjusts to workload changes in real time, is set up in about 30 minutes, and is stated to deliver savings within 24 hours. The tool bases its optimizations on telemetry metadata and provides full visibility and adjustability for complex scenarios and schedules.

Microsoft Azure

Microsoft Azure is a cloud computing service that offers a wide range of products and solutions for businesses and developers. It provides services such as databases, analytics, compute, containers, hybrid cloud, AI, application development, and more. Azure aims to help organizations innovate, modernize, and scale their operations by leveraging the power of the cloud. With a focus on flexibility, performance, and security, Azure is designed to support a variety of workloads and use cases across different industries.

Cerebrium

Cerebrium is a serverless AI infrastructure platform that allows teams to build, test, and deploy AI applications quickly. With a focus on speed, performance, and cost optimization, Cerebrium offers a range of features and tools to simplify the development and deployment of AI projects. The platform emphasizes reliability, security, and compliance, and provides real-time logging, cost tracking, and observability tools. Cerebrium also offers a variety of GPU types and autoscaling to meet the needs of developers and businesses.

Integrail

Integrail is an AI tool that simplifies the process of building AI applications by allowing users to design and deploy multi-agent applications without the need for coding skills. It offers a range of features such as integrating external apps, optimizing cost and accuracy, and deploying applications securely in the cloud or on-premises. Integrail Studio provides access to popular AI models and enables users to transform business workflows efficiently.

Lunary

Lunary is an AI developer platform designed to bring AI applications to production. It offers a comprehensive set of tools to manage, improve, and protect LLM apps. With features like Logs, Metrics, Prompts, Evaluations, and Threads, Lunary empowers users to monitor and optimize their AI agents effectively. The platform supports tasks such as tracing errors, labeling data for fine-tuning, optimizing costs, running benchmarks, and testing open-source models. Lunary also facilitates collaboration with non-technical teammates through features like A/B testing, versioning, and clean source-code management.

Eden AI

Eden AI is a platform that provides access to over 100 AI models through a unified API gateway. Users can easily integrate and manage multiple AI models from a single API, with control over cost, latency, and quality. The platform offers ready-to-use AI APIs, custom chatbot, image generation, speech to text, text to speech, OCR, prompt optimization, AI model comparison, cost monitoring, API monitoring, batch processing, caching, multi-API key management, and more. Additionally, professional services are available for custom AI projects tailored to specific business needs.

Microtica

Microtica is an AI-powered cloud delivery platform that offers a comprehensive suite of DevOps tools to help users build, deploy, and optimize their infrastructure efficiently. With features like AI Incident Investigator, AI Infrastructure Builder, Kubernetes deployment simplification, alert monitoring, pipeline automation, and cloud monitoring, Microtica aims to streamline the development and management processes for DevOps teams. The platform provides real-time insights, cost optimization suggestions, and guided deployments, making it a valuable tool for businesses looking to enhance their cloud infrastructure operations.

CometAPI

CometAPI is a developer-focused AI model API aggregation platform that provides unified access to over 500 AI models. It offers a wide range of AI capabilities, seamless integration, and cost efficiency. Users can access various AI models from different providers in one place, manage payments easily, and switch between providers effortlessly. CometAPI aims to simplify AI integration, optimize costs, and provide exclusive API access to advanced models like Midjourney and Suno.

PredictModel

PredictModel is an AI tool that specializes in creating custom Machine Learning models tailored to meet unique requirements. The platform offers a comprehensive three-step process, including generating synthetic data, training ML models, and deploying them to AWS. PredictModel helps businesses streamline processes, improve customer segmentation, enhance client interaction, and boost overall business performance. The tool maximizes accuracy through customized synthetic data generation and saves time and money by providing expert ML engineers. With a focus on automated lead prioritization, fraud detection, cost optimization, and planning, PredictModel aims to stay ahead of the curve in the ML industry.

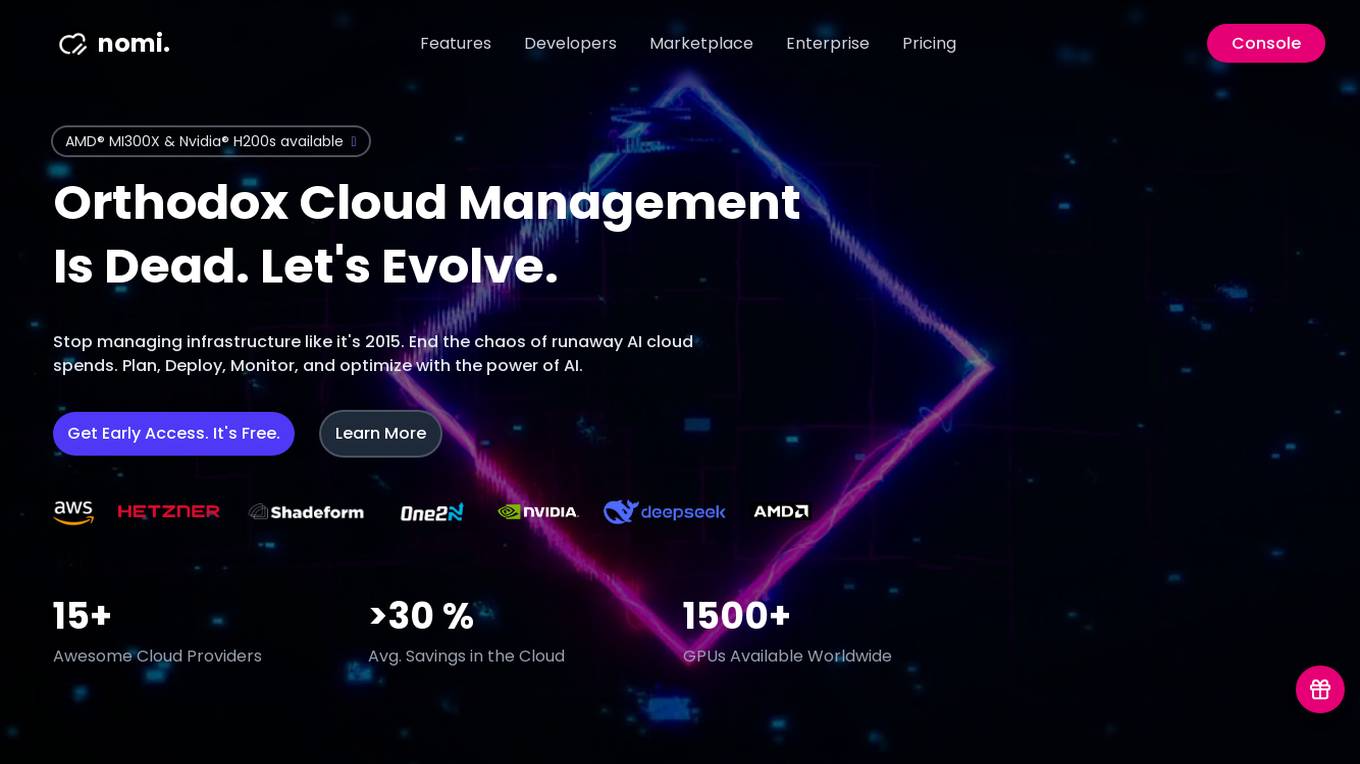

Nomi.cloud

Nomi.cloud is an AI-powered CloudOps and HPC assistant designed for next-gen businesses. It offers developer tools, a marketplace, enterprise solutions, and a pricing console. Features include a single-pane-of-glass view, instant deployment, continuous monitoring, AI-powered insights, and built-in budgets and alerts. It provides a user-friendly interface to manage infrastructure, optimize costs, and deploy resources across multiple regions. Nomi.cloud is built for scale, is used by enterprises, and offers a range of GPUs and cloud providers to suit various needs.

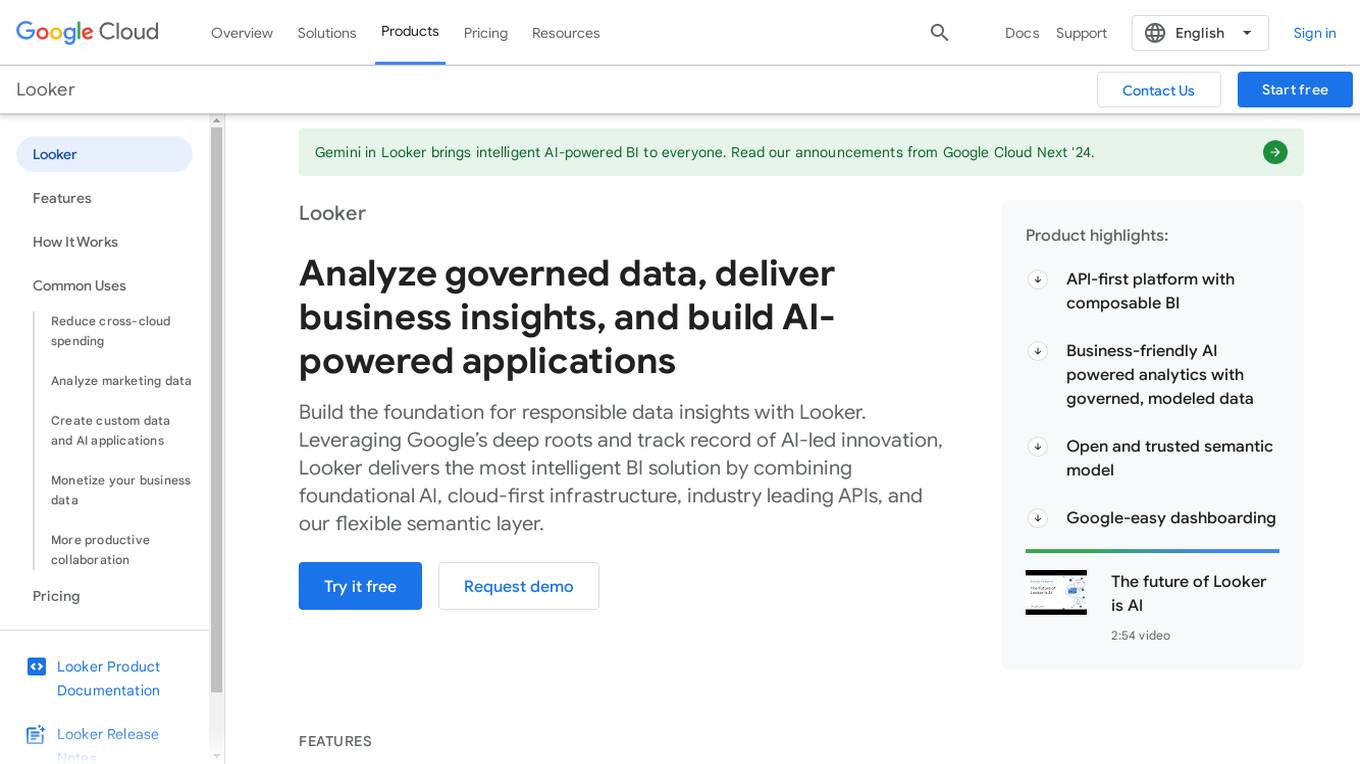

Looker

Looker is a business intelligence platform that offers embedded analytics and AI-powered BI solutions. Leveraging Google's AI-led innovation, Looker delivers intelligent BI by combining foundational AI, cloud-first infrastructure, industry-leading APIs, and a flexible semantic layer. It allows users to build custom data experiences, transform data into integrated experiences, and create deeply integrated dashboards. Looker also provides a universal semantic modeling layer for unified, trusted data sources and offers self-service analytics capabilities through Looker and Looker Studio. Additionally, Looker features Gemini, an AI-powered analytics assistant that accelerates analytical workflows and offers a collaborative and conversational user experience.

Reality AI Software

Reality AI Software is an Edge AI software development environment that combines advanced signal processing, machine learning, and anomaly detection on every MCU/MPU Renesas core. The software is underpinned by the proprietary Reality AI ML algorithm that delivers accurate and fully explainable results supporting diverse applications. It enables features like equipment monitoring, predictive maintenance, and sensing user behavior and the surrounding environment with minimal impact on the Bill of Materials (BoM). Reality AI software running on Renesas processors helps deliver endpoint intelligence in products across various markets.

datasurfr

datasurfr is an AI-driven risk monitoring and analysis platform augmented by human intelligence. It provides operational risk data and intelligence that is curated, customized, and comprehensive. The platform offers tailored alerts detected by AI, curated by surfers, and analyzed by subject matter experts for global security teams. datasurfr also offers predictive risk analytics, proactive alert risk assessment, resilience strategy, data-driven decision-making, supply chain resilience, dynamic incident response, regulatory compliance, and future-proofing strategies. The platform's algorithm works on AI detection and structuring, while human-based smart curation eliminates irrelevant data. Subject matter experts provide event summaries with insights and recommendations. datasurfr offers multi-modal communication through intuitive dashboards, mobile apps, emails, WhatsApp, and seamless APIs for versatile consumption.

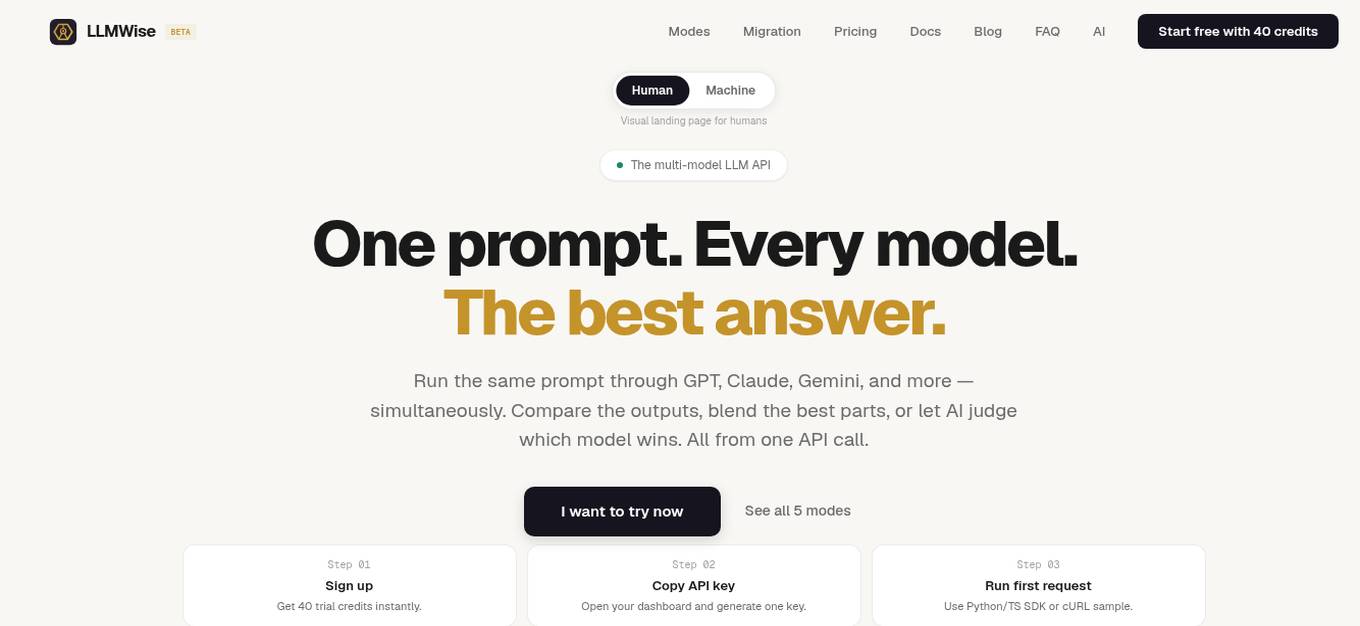

LLMWise

LLMWise is a multi-model LLM API tool that allows users to compare, blend, and route AI models simultaneously. It offers 5 modes - Chat, Compare, Blend, Judge, and Failover - to help users make informed decisions based on model outputs. With 31+ available models, LLMWise provides a user-friendly platform for orchestrating AI models, ensuring reliability, and optimizing costs. The tool is designed to streamline the integration of various AI models through one API call, offering features like real-time responses, per-model metrics, and failover routing.
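The failover routing idea mentioned above can be illustrated with a minimal Python sketch. It is not LLMWise's API: `call_with_failover`, `cheap_model`, and `fallback_model` are hypothetical names, and the two model functions are local stubs standing in for real provider calls. The sketch assumes models are tried in order (for example, cheapest first) and the first successful reply wins.

```python
from typing import Callable, Dict, Sequence, Tuple

ModelFn = Callable[[str], str]


class AllModelsFailed(Exception):
    """Raised when every model in the chain raised an error."""


def call_with_failover(prompt: str, chain: Sequence[Tuple[str, ModelFn]]) -> Tuple[str, str]:
    """Try each (name, model) pair in order and return the first successful reply."""
    errors: Dict[str, Exception] = {}
    for name, model in chain:
        try:
            return name, model(prompt)
        except Exception as exc:  # any provider error triggers the next model
            errors[name] = exc
    raise AllModelsFailed(errors)


# Hypothetical stand-ins for real provider calls.
def cheap_model(prompt: str) -> str:
    raise TimeoutError("simulated outage")


def fallback_model(prompt: str) -> str:
    return "echo: " + prompt


used, reply = call_with_failover(
    "hello", [("cheap", cheap_model), ("fallback", fallback_model)]
)
print(used, reply)
```

Ordering the chain from cheapest to most expensive is one simple way such routing can keep costs down while still returning an answer when a provider is unavailable.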

Paradiso AI

Paradiso AI is an AI application that offers a range of generative AI solutions tailored to businesses. From AI chatbots to AI employees and document generators, Paradiso AI helps businesses boost ROI, enhance customer satisfaction, optimize costs, and accelerate time-to-value. The platform provides customizable AI tools that seamlessly adapt to unique processes, accelerating tasks, ensuring precision, and driving exceptional outcomes. With a focus on data security, compliance, and cost efficiency, Paradiso AI aims to deliver high-quality outcomes at lower operating costs through sophisticated prompt optimization and ongoing refinements.

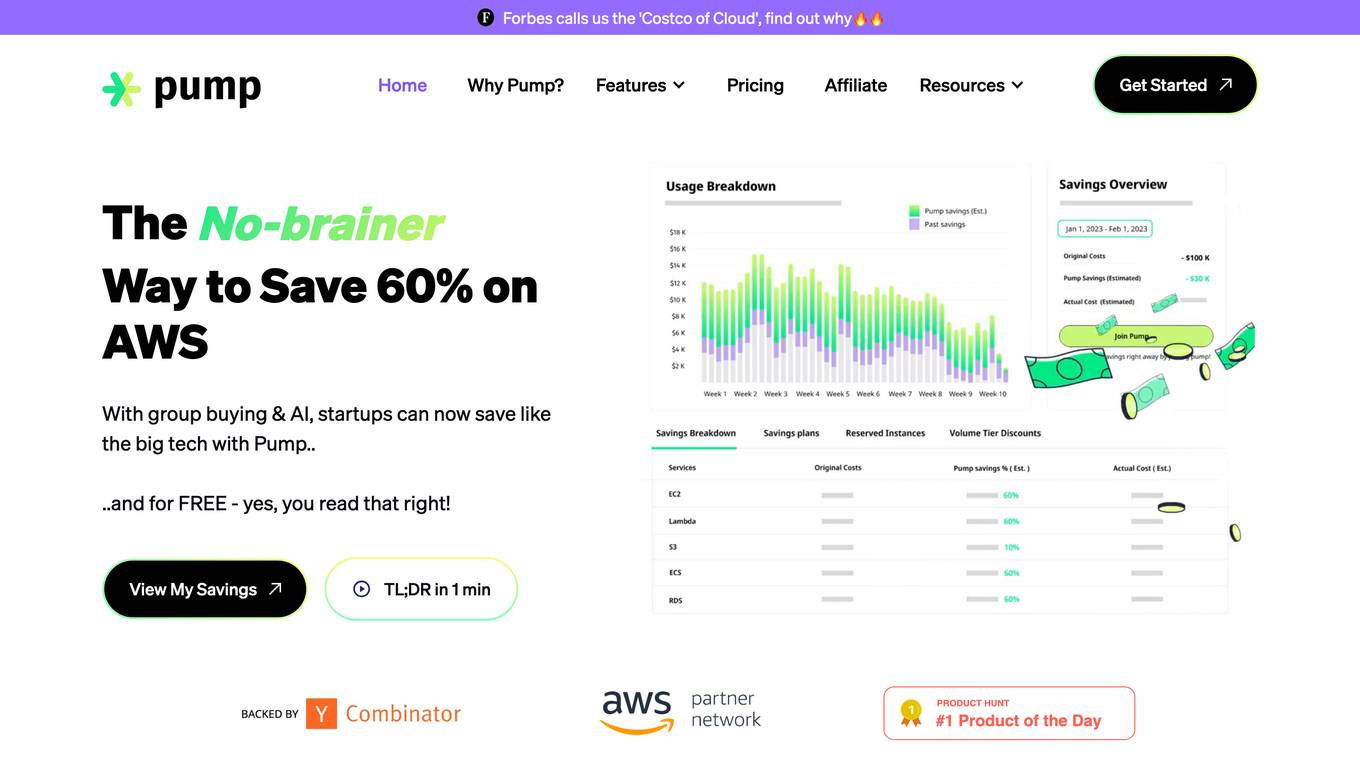

Pump

Pump is a cloud cost optimization tool that leverages group buying and AI technology to help startups save up to 60% on cloud costs. It is designed to provide discounts previously only available to large companies, with automated AI working tirelessly to find and apply the best savings for users. Pump offers a seamless and efficient experience for exploring cloud spend, ensuring secure AWS protection, and promising to slash runaway cloud computing costs. The tool is free to use and has gained popularity among startups worldwide for its innovative approach to cloud cost savings.

Creatus.AI

Creatus.AI is an AI-powered platform that provides a range of tools and services to help businesses boost productivity and transform their workplaces. With over 35 AI models and tools, and 90+ business integrations, Creatus.AI offers a comprehensive suite of solutions for businesses of all sizes. The platform's AI-native workspace and autonomous team members enable businesses to automate tasks, improve efficiency, and gain valuable insights from data. Creatus.AI also specializes in custom AI integrations and solutions, helping businesses to tailor AI solutions to their specific needs.

Kling2.5

Kling2.5 is an AI-powered video generator that offers studio-grade video creation with advanced reasoning capabilities. It delivers cost-optimized video generation, superior motion flow, and understanding of complex causal relationships and temporal sequences. Kling2.5 Turbo provides features like native HDR video output, draft mode for rapid iteration, and enhanced style consistency. The application is suitable for professional video content creation, commercial projects, and various industries.

2 - Open Source AI Tools

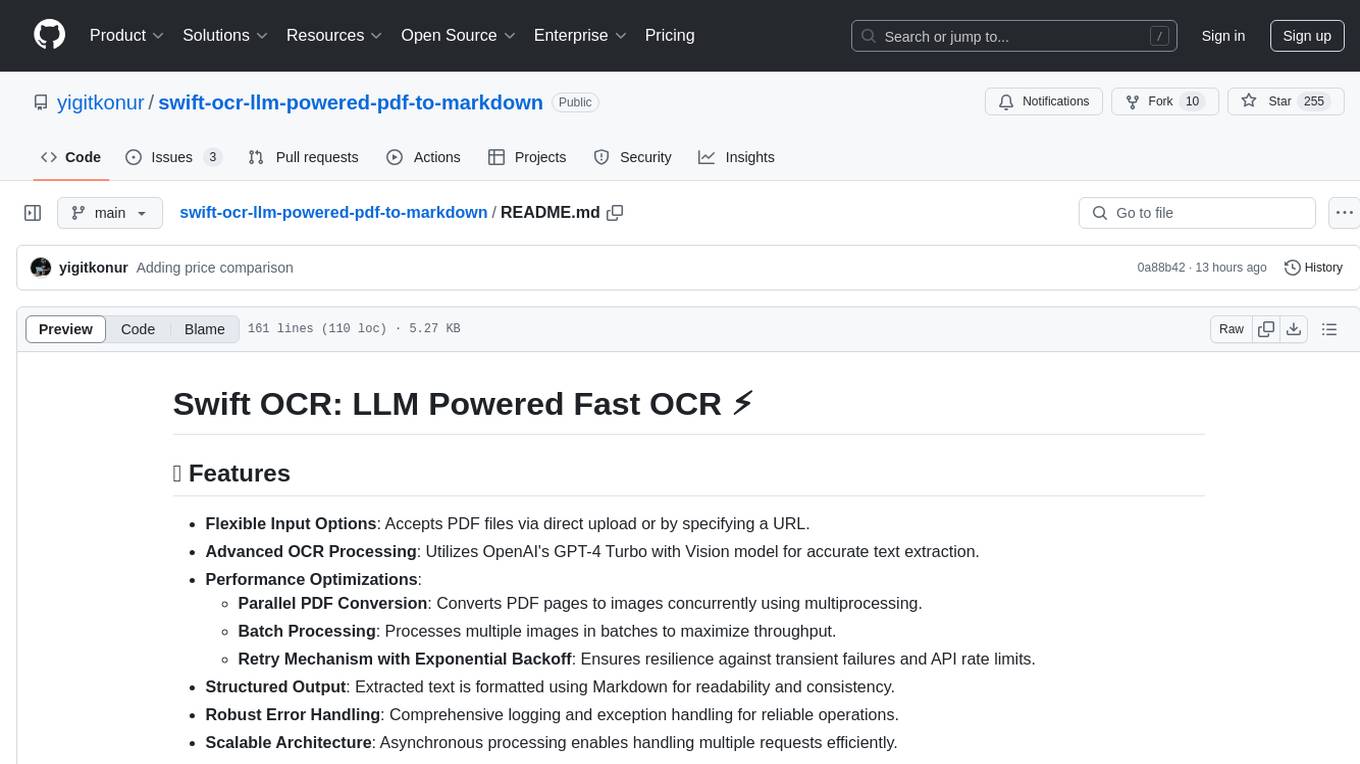

swift-ocr-llm-powered-pdf-to-markdown

Swift OCR is a powerful tool for extracting text from PDF files using OpenAI's GPT-4 Turbo with Vision model. It offers flexible input options, advanced OCR processing, performance optimizations, structured output, robust error handling, and scalable architecture. The tool ensures accurate text extraction, resilience against failures, and efficient handling of multiple requests.

PrivHunterAI

PrivHunterAI is a tool that detects authorization vulnerabilities through passive proxying, using mainstream AI engines such as Kimi, DeepSeek, and GPT. The core detection function relies on the open APIs of these AI engines and supports data transmission and interaction over HTTPS. Features added over time include a retry mechanism for failed scans, a response Content-Type whitelist, a limit on AI request size, URL analysis, a frontend display of results, additional headers for requests, cost optimization by filtering authorization keywords before calling the AI, and terminal output of request package records.
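The keyword-filtering cost optimization can be sketched in a few lines of Python. This is an illustration, not PrivHunterAI's actual code: the keyword list and the `needs_ai_review` function are hypothetical, and the sketch assumes that a response containing an obvious denial message can be classified without a paid AI call.

```python
from typing import Iterable

# Hypothetical denial keywords; a real deployment would tune this list.
DENY_KEYWORDS = ("unauthorized", "forbidden", "permission denied", "access denied")


def needs_ai_review(response_body: str, keywords: Iterable[str] = DENY_KEYWORDS) -> bool:
    """Return False when the response already contains a denial keyword,
    so the caller can skip the AI engine for that request."""
    lowered = response_body.lower()
    return not any(keyword in lowered for keyword in keywords)


responses = [
    '{"code": 403, "msg": "Permission denied"}',
    '{"user": "alice", "balance": 120}',
]
# Only responses without a denial keyword are forwarded to the AI engine.
to_review = [body for body in responses if needs_ai_review(body)]
print(len(to_review))
```

A cheap string check like this runs before any AI request, so only ambiguous responses incur API cost.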

20 - OpenAI GPTs

Cloudwise Consultant

Expert in cloud-native solutions, provides tailored tech advice and cost estimates.

Staff Scheduling Advisor

Coordinates and optimizes staff schedules for operational efficiency.

Cloud Computing

Expert in cloud computing, offering insights on services, security, and infrastructure.

Calorie Count & Cut Cost: Food Data

Apples vs. Oranges? Optimize your low-calorie diet. Compare food items. Get tailored advice on satiating, nutritious, cost-effective food choices based on 240 items.

Supplier Relationship Management Advisor

Streamlines supplier interactions to optimize organizational efficiency and cost-effectiveness.

AzurePilot | Steer & Streamline Your Cloud Costs🌐

Specialized advisor on Azure costs and optimizations.

Customer Acquisition Cost (CAC) Calculator

Professional analyst for CAC insights and summaries.

Production Controlling Advisor

Guides financial planning and cost management in production.

Category Management Advisor

Advises on strategic sourcing and procurement to optimize category management.

Cloud Architecture Advisor

Guides cloud strategy and architecture to optimize business operations.

Qtech | FPS

Frost Protection System is an AI bot optimizing open field farming of fruits, vegetables, and flowers, combining real-time data and AI to boost yield, cut costs, and foster sustainable practices in a user-friendly interface.

ChefGPT

I'm a master chef with expertise in recipes, cost analysis, and kitchen optimization. First upload your inventory and cost list, so I can offer recipes from your inventory with cost analysis.