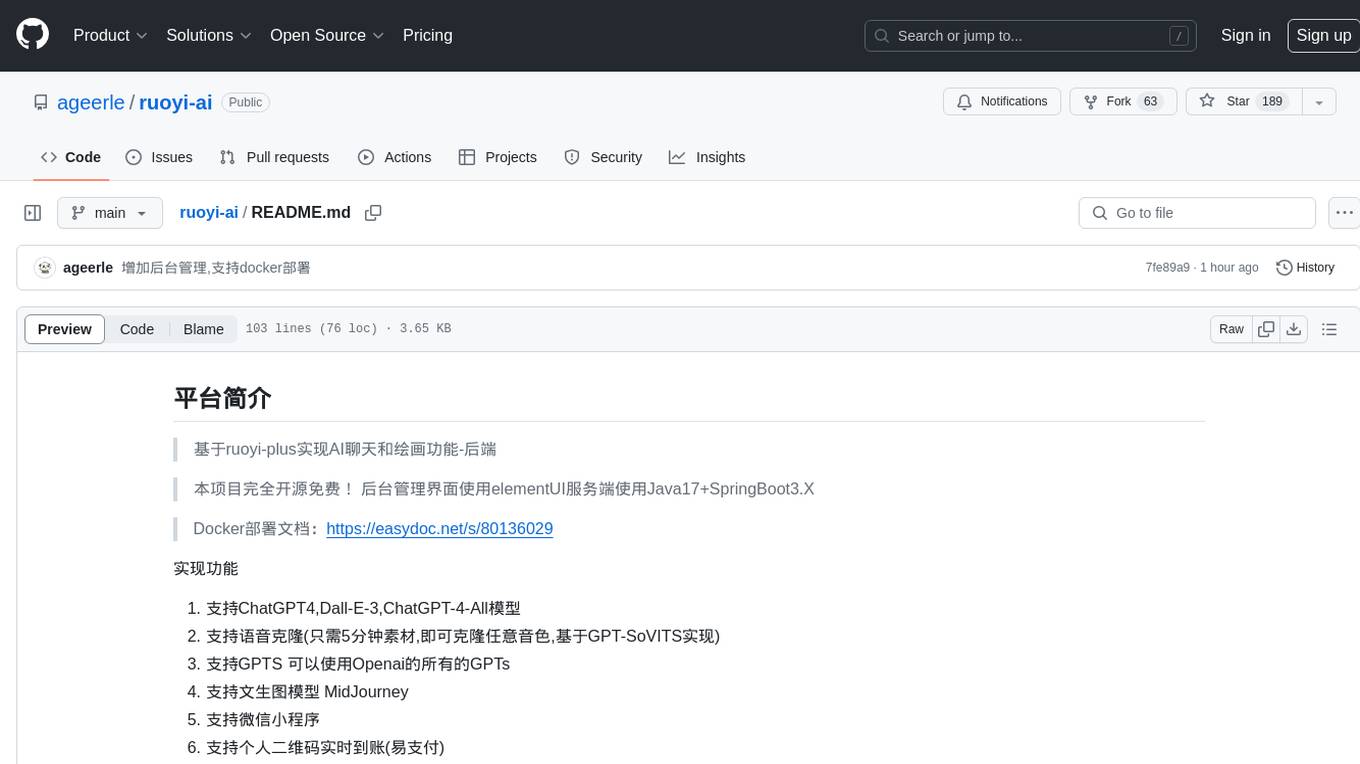

ruoyi-ai

RuoYi AI 是一个全栈式 AI 开发平台,旨在帮助开发者快速构建和部署个性化的 AI 应用。

Stars: 2068

ruoyi-ai is a platform built on top of ruoyi-plus to implement AI chat and drawing functionalities on the backend. The project is completely open source and free. The backend management interface uses elementUI, while the server side is built using Java 17 and SpringBoot 3.X. It supports various AI models such as ChatGPT4, Dall-E-3, ChatGPT-4-All, voice cloning based on GPT-SoVITS, GPTS, and MidJourney. Additionally, it supports WeChat mini programs, personal QR code real-time payments, monitoring and AI auto-reply in live streaming rooms like Douyu and Bilibili, and personal WeChat integration with ChatGPT. The platform also includes features like private knowledge base management and provides various demo interfaces for different platforms such as mobile, web, and PC.

README:

全新升级,开箱即用,简单高效

探索本项目的文档 »

项目预览

·

报告Bug

·

提出新特性

- 项目文档: https://doc.pandarobot.chat

- 前端-后台管理: https://github.com/ageerle/ruoyi-admin

- 前端-用户端: https://github.com/ageerle/ruoyi-web

- 小程序端: https://github.com/ageerle/ruoyi-uniapp

- 演示地址: https://web.pandarobot.chat

- 后台管理: https://admin.pandarobot.chat

- 用户名: admin 密码:admin123

- https://gitcode.com/ageerle/ruoyi-ai

- https://gitcode.com/ageerle/ruoyi-web

- https://gitcode.com/ageerle/ruoyi-admin

- https://gitcode.com/ageerle/ruoyi-uniapp

- 全套开源系统:提供完整的前端应用、后台管理以及小程序应用,基于MIT协议,开箱即用。

- 本地RAG方案:集成Milvus/Weaviate向量库、本地向量化模型与Ollama,实现本地化RAG

- 丰富插件功能:支持联网、SQL查询插件及Text2API插件,扩展系统能力与应用场景。

- 内置SSE、websocket等网络协议,支持对接多种大语言模型,同时还集成了MidJourney和DALLE AI绘画功能

- 强大的多媒体功能:支持AI翻译、PPT制作、语音克隆和翻唱等

- 扩展功能:支持将大模型接入个人或企业微信

- 支付功能:支持易支付、微信支付等多种支付方式

- jdk 17

- mysql 5.7、8.0

- redis 版本必须 >= 5.X

- maven 3.8+

- nodejs 20+ & pnpm

RuoYi-AI

├─ ruoyi-admin // 管理模块

│ └─ RuoYiApplication // 启动类

│ └─ RuoYiServletInitializer // 容器部署初始化类

│ └─ resources // 资源文件

│ └─ i18n/messages.properties // 国际化配置文件

│ └─ application.yml // 框架总配置文件

│ └─ application-dev.yml // 开发环境配置文件

│ └─ application-prod.yml // 生产环境配置文件

│ └─ banner.txt // 框架启动图标

│ └─ logback-plus.xml // 日志配置文件

│ └─ ip2region.xdb // IP区域地址库

├─ ruoyi-common // 通用模块

│ └─ ruoyi-common-bom // common依赖包管理

└─ ruoyi-common-chat // 聊天模块

│ └─ ruoyi-common-core // 核心模块

│ └─ ruoyi-common-doc // 系统接口模块

│ └─ ruoyi-common-encrypt // 数据加解密模块

│ └─ ruoyi-common-excel // excel模块

│ └─ ruoyi-common-idempotent // 幂等功能模块

│ └─ ruoyi-common-json // 序列化模块

│ └─ ruoyi-common-log // 日志模块

│ └─ ruoyi-common-mail // 邮件模块

│ └─ ruoyi-common-mybatis // 数据库模块

│ └─ ruoyi-common-oss // oss服务模块

│ └─ ruoyi-common-pay // 支付模块

│ └─ ruoyi-common-ratelimiter // 限流功能模块

│ └─ ruoyi-common-redis // 缓存服务模块

│ └─ ruoyi-common-satoken // satoken模块

│ └─ ruoyi-common-security // 安全模块

│ └─ ruoyi-common-sensitive // 脱敏模块

│ └─ ruoyi-common-sms // 短信模块

│ └─ ruoyi-common-tenant // 租户模块

│ └─ ruoyi-common-translation // 通用翻译模块

│ └─ ruoyi-common-web // web模块

├─ ruoyi-modules // 模块组

│ └─ ruoyi-demo // 演示模块

│ └─ ruoyi-system // 业务模块

├─ .run // 执行脚本文件

├─ .editorconfig // 编辑器编码格式配置

├─ LICENSE // 开源协议

├─ pom.xml // 公共依赖

├─ README.md // 框架说明文件

该项目使用Git进行版本管理。您可以在repository参看当前可用版本。

该项目使用了MIT授权许可,详情请参阅 LICENSE.txt

最近,我们的项目意外地受到了广泛关注,甚至被许多人误以为是一个已经成熟且能够快速落地的项目。然而,事实并非如此。这个项目是我个人在业余时间进行的研究,主要目的是学习和探索。它是一个以人工智能(AI)为核心的平台,旨在帮助企业通过配置的方式快速构建AI应用。

目前,项目还处于早期阶段,距离成熟还有很长的路要走。由于个人精力有限,项目的发展速度受到了一定的限制。为了加快项目的进度,我真诚地希望更多人能够参与到项目中来。无论是经验丰富的开发者,还是刚刚入门的小白,我都热烈欢迎你们提交Pull Request(PR)。即使代码修改得很少,或者存在一些错误,都没有关系。我会认真审核每一位贡献者的代码,并和大家一起完善项目。

- 智能体管理

通过设置提示词、插件、知识库等,用户可以快速构建一个AI应用。这将极大地简化AI应用的开发流程,降低开发门槛,使更多企业能够轻松地利用AI技术。

- 流程编排

通过流程编排功能,用户可以将不同的模型按照业务逻辑进行有序连接。这将解决单一模型能力不足的问题,充分发挥多个模型的协同作用,从而更好地满足企业的复杂业务需求。

- 感谢

最后,我要感谢RuoYi-Vue-Plus、chatgpt-java、chatgpt-web-midjourney-proxy等优秀框架。正是因为这些项目的开源和共享,我才能够在这个基础上进行开发,使我们的项目能够取得今天的成果。再次感谢这些项目及其背后的开发者们!

希望更多志同道合的朋友能够加入我们,共同推动这个项目的发展。让我们一起努力,将这个项目打造成一个真正成熟、实用的AI平台!

贡献使开源社区成为一个学习、激励和创造的绝佳场所。你所作的任何贡献都是非常感谢的。

- Fork 这个项目

- 创建你的功能分支 (

git checkout -b feature/dev) - 提交你的更改 (

git commit -m 'Add some dev') - 推送到分支 (

git push origin feature/dev) - 打开拉取请求

- pr请提交到GitHub上,会定时同步到gitee

- 项目文档基于vitepress构建

- 按照如何参与开源项目拉取 https://github.com/ageerle/ruoyi-doc

- 安装依赖:npm install

- 启动项目:npm run docs:dev

- 主页路径:docs/guide/introduction/index.md

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ruoyi-ai

Similar Open Source Tools

ruoyi-ai

ruoyi-ai is a platform built on top of ruoyi-plus to implement AI chat and drawing functionalities on the backend. The project is completely open source and free. The backend management interface uses elementUI, while the server side is built using Java 17 and SpringBoot 3.X. It supports various AI models such as ChatGPT4, Dall-E-3, ChatGPT-4-All, voice cloning based on GPT-SoVITS, GPTS, and MidJourney. Additionally, it supports WeChat mini programs, personal QR code real-time payments, monitoring and AI auto-reply in live streaming rooms like Douyu and Bilibili, and personal WeChat integration with ChatGPT. The platform also includes features like private knowledge base management and provides various demo interfaces for different platforms such as mobile, web, and PC.

resume-design

Resume-design is an open-source and free resume design and template download website, built with Vue3 + TypeScript + Vite + Element-plus + pinia. It provides two design tools for creating beautiful resumes and a complete backend management system. The project has released two frontend versions and will integrate with a backend system in the future. Users can learn frontend by downloading the released versions or learn design tools by pulling the latest frontend code.

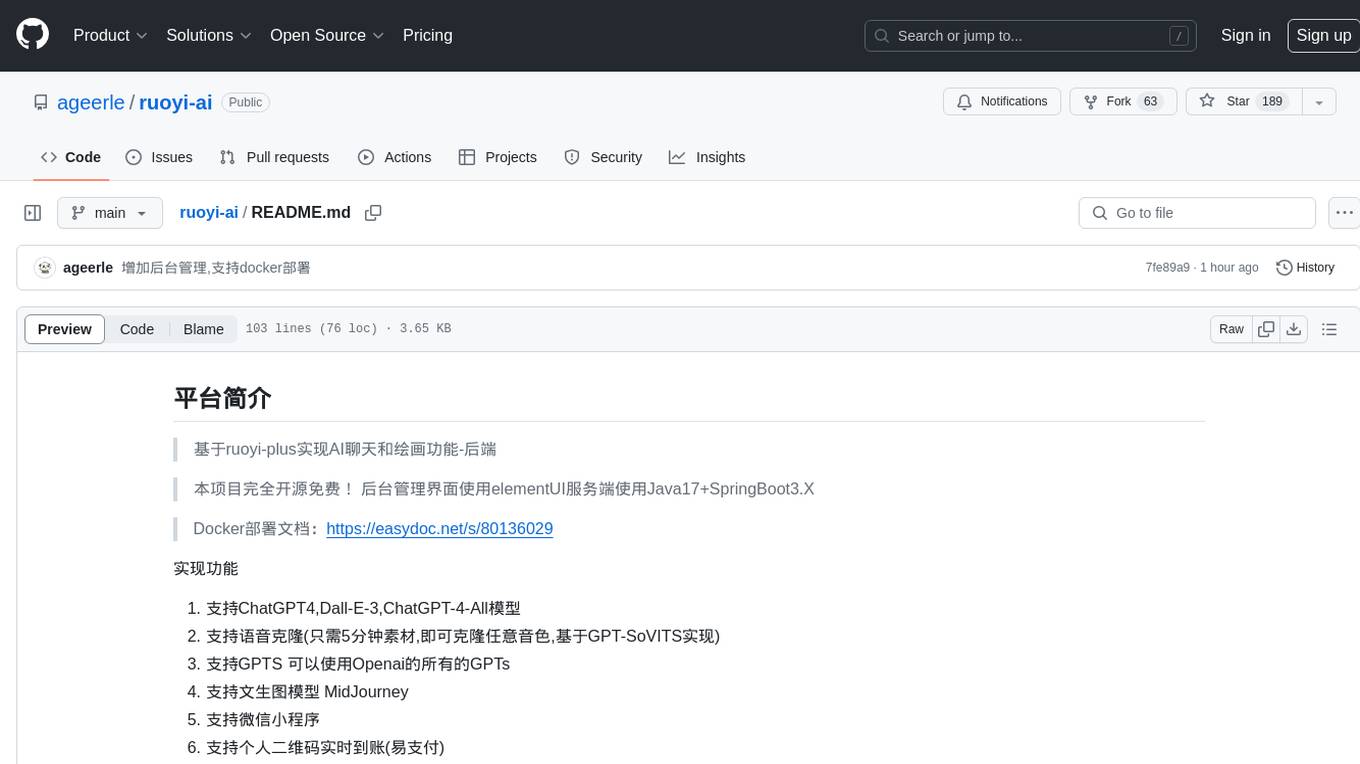

aippt_PresentationGen

A SpringBoot web application that generates PPT files using a llm. The tool preprocesses single-page templates and dynamically combines them to generate PPTX files with text replacement functionality. It utilizes technologies such as SpringBoot, MyBatis, MySQL, Redis, WebFlux, Apache POI, Aspose Slides, OSS, and Vue2. Users can deploy the tool by configuring various parameters in the application.yml file and setting up necessary resources like MySQL, OSS, and API keys. The tool also supports integration with open-source image libraries like Unsplash for adding images to the presentations.

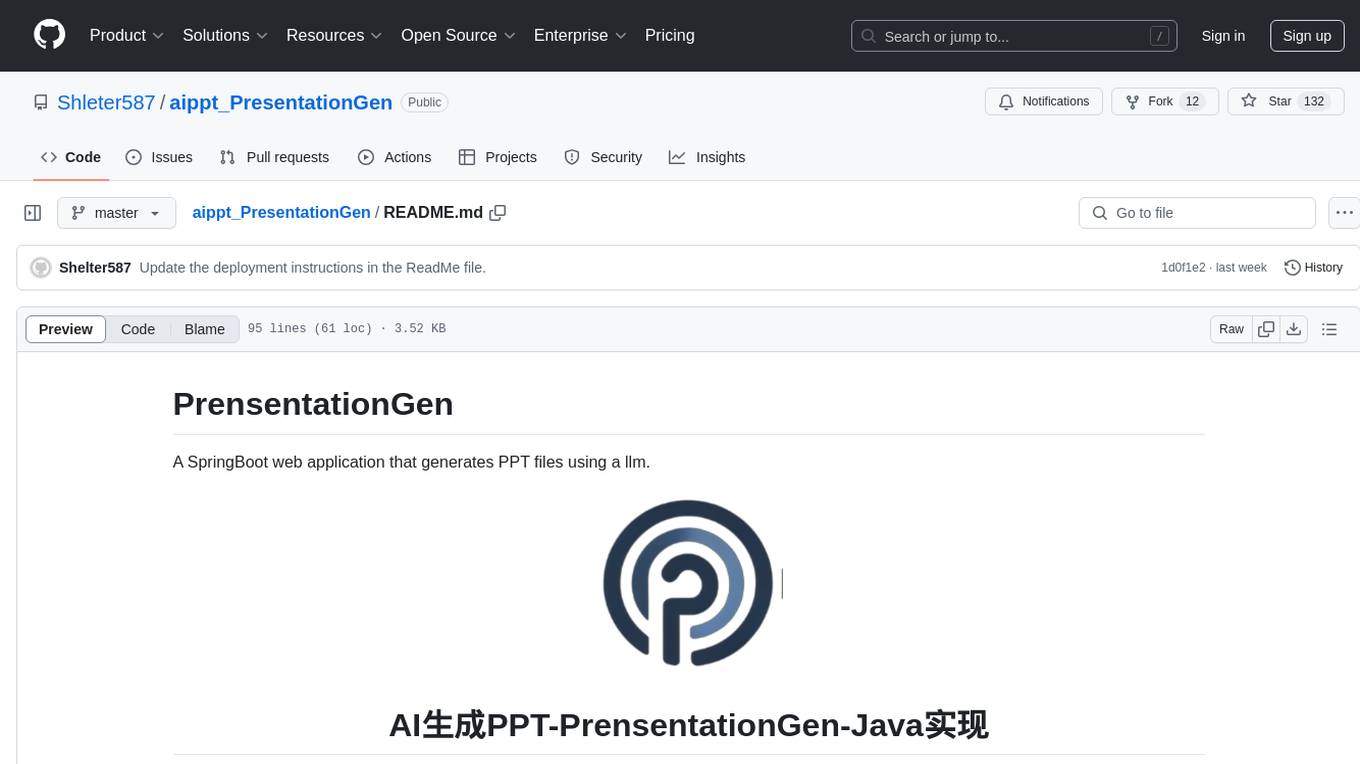

Y2A-Auto

Y2A-Auto is an automation tool that transfers YouTube videos to AcFun. It automates the entire process from downloading, translating subtitles, content moderation, intelligent tagging, to partition recommendation and upload. It also includes a web management interface and YouTube monitoring feature. The tool supports features such as downloading videos and covers using yt-dlp, AI translation and embedding of subtitles, AI generation of titles/descriptions/tags, content moderation using Aliyun Green, uploading to AcFun, task management, manual review, and forced upload. It also offers settings for automatic mode, concurrency, proxies, subtitles, login protection, brute force lock, YouTube monitoring, channel/trend capturing, scheduled tasks, history records, optional GPU/hardware acceleration, and Docker deployment or local execution.

MarkMap-OpenAi-ChatGpt

MarkMap-OpenAi-ChatGpt is a Vue.js-based mind map generation tool that allows users to generate mind maps by entering titles or content. The application integrates the markmap-lib and markmap-view libraries, supports visualizing mind maps, and provides functions for zooming and adapting the map to the screen. Users can also export the generated mind map in PNG, SVG, JPEG, and other formats. This project is suitable for quickly organizing ideas, study notes, project planning, etc. By simply entering content, users can get an intuitive mind map that can be continuously expanded, downloaded, and shared.

AiToEarn

AiToEarn is a one-click publishing tool for multiple self-media platforms such as Douyin, Xiaohongshu, Video Number, and Kuaishou. It allows users to publish videos with ease, observe popular content across the web, and view rankings of explosive articles on Xiaohongshu. The tool is also capable of providing daily and weekly rankings of popular content on Xiaohongshu, Douyin, Video Number, and Kuaishou. In progress features include expanding publishing parameters to support short video e-commerce, adding an AI tool ranking list, enabling AI automatic comments, and AI comment search.

AI-CloudOps

AI+CloudOps is a cloud-native operations management platform designed for enterprises. It aims to integrate artificial intelligence technology with cloud-native practices to significantly improve the efficiency and level of operations work. The platform offers features such as AIOps for monitoring data analysis and alerts, multi-dimensional permission management, visual CMDB for resource management, efficient ticketing system, deep integration with Prometheus for real-time monitoring, and unified Kubernetes management for cluster optimization.

LunaBox

LunaBox is a lightweight, fast, and feature-rich tool for managing and tracking visual novels, with the ability to customize game categories, automatically track playtime, generate personalized reports through AI analysis, import data from other platforms, backup data locally or on cloud services, and ensure privacy and security by storing sensitive data locally. The tool supports multi-dimensional statistics, offers a variety of customization options, and provides a user-friendly interface for easy navigation and usage.

hub

Hub is an open-source, high-performance LLM gateway written in Rust. It serves as a smart proxy for LLM applications, centralizing control and tracing of all LLM calls and traces. Built for efficiency, it provides a single API to connect to any LLM provider. The tool is designed to be fast, efficient, and completely open-source under the Apache 2.0 license.

lobe-editor

LobeHub Editor is a modern, extensible rich text editor built on Meta's Lexical framework with dual-architecture design, featuring both a powerful kernel and React integration. Optimized for AI applications and chat interfaces, it offers a dual architecture with kernel-based API and React components, rich plugin ecosystem, chat interface ready, slash commands, multiple export formats, customizable UI, file & media support, TypeScript native, and modern build system. The editor provides features like dual architecture, React-first design, rich plugin ecosystem, chat interface readiness, slash commands, multiple export formats, customizable UI, file & media support, TypeScript native, and modern build system.

NGCBot

NGCBot is a WeChat bot based on the HOOK mechanism, supporting scheduled push of security news from FreeBuf, Xianzhi, Anquanke, and Qianxin Attack and Defense Community, KFC copywriting, filing query, phone number attribution query, WHOIS information query, constellation query, weather query, fishing calendar, Weibei threat intelligence query, beautiful videos, beautiful pictures, and help menu. It supports point functions, automatic pulling of people, ad detection, automatic mass sending, Ai replies, rich customization, and easy for beginners to use. The project is open-source and periodically maintained, with additional features such as Ai (Gpt, Xinghuo, Qianfan), keyword invitation to groups, automatic mass sending, and group welcome messages.

mcp-prompts

mcp-prompts is a Python library that provides a collection of prompts for generating creative writing ideas. It includes a variety of prompts such as story starters, character development, plot twists, and more. The library is designed to inspire writers and help them overcome writer's block by offering unique and engaging prompts to spark creativity. With mcp-prompts, users can access a wide range of writing prompts to kickstart their imagination and enhance their storytelling skills.

banana-slides

Banana-slides is a native AI-powered PPT generation application based on the nano banana pro model. It supports generating complete PPT presentations from ideas, outlines, and page descriptions. The app automatically extracts attachment charts, uploads any materials, and allows verbal modifications, aiming to truly 'Vibe PPT'. It lowers the threshold for creating PPTs, enabling everyone to quickly create visually appealing and professional presentations.

WenShape

WenShape is a context engineering system for creating long novels. It addresses the challenge of narrative consistency over thousands of words by using an orchestrated writing process, dynamic fact tracking, and precise token budget management. All project data is stored in YAML/Markdown/JSONL text format, naturally supporting Git version control.

private-llm-qa-bot

This is a production-grade knowledge Q&A chatbot implementation based on AWS services and the LangChain framework, with optimizations at various stages. It supports flexible configuration and plugging of vector models and large language models. The front and back ends are separated, making it easy to integrate with IM tools (such as Feishu).

TrainPPTAgent

TrainPPTAgent is an AI-based intelligent presentation generation tool. Users can input a topic and the system will automatically generate a well-structured and content-rich PPT outline and page-by-page content. The project adopts a front-end and back-end separation architecture: the front-end is responsible for interaction, outline editing, and template selection, while the back-end leverages large language models (LLM) and reinforcement learning (GRPO) to complete content generation and optimization, making the generated PPT more tailored to user goals.

For similar tasks

h2ogpt

h2oGPT is an Apache V2 open-source project that allows users to query and summarize documents or chat with local private GPT LLMs. It features a private offline database of any documents (PDFs, Excel, Word, Images, Video Frames, Youtube, Audio, Code, Text, MarkDown, etc.), a persistent database (Chroma, Weaviate, or in-memory FAISS) using accurate embeddings (instructor-large, all-MiniLM-L6-v2, etc.), and efficient use of context using instruct-tuned LLMs (no need for LangChain's few-shot approach). h2oGPT also offers parallel summarization and extraction, reaching an output of 80 tokens per second with the 13B LLaMa2 model, HYDE (Hypothetical Document Embeddings) for enhanced retrieval based upon LLM responses, a variety of models supported (LLaMa2, Mistral, Falcon, Vicuna, WizardLM. With AutoGPTQ, 4-bit/8-bit, LORA, etc.), GPU support from HF and LLaMa.cpp GGML models, and CPU support using HF, LLaMa.cpp, and GPT4ALL models. Additionally, h2oGPT provides Attention Sinks for arbitrarily long generation (LLaMa-2, Mistral, MPT, Pythia, Falcon, etc.), a UI or CLI with streaming of all models, the ability to upload and view documents through the UI (control multiple collaborative or personal collections), Vision Models LLaVa, Claude-3, Gemini-Pro-Vision, GPT-4-Vision, Image Generation Stable Diffusion (sdxl-turbo, sdxl) and PlaygroundAI (playv2), Voice STT using Whisper with streaming audio conversion, Voice TTS using MIT-Licensed Microsoft Speech T5 with multiple voices and Streaming audio conversion, Voice TTS using MPL2-Licensed TTS including Voice Cloning and Streaming audio conversion, AI Assistant Voice Control Mode for hands-free control of h2oGPT chat, Bake-off UI mode against many models at the same time, Easy Download of model artifacts and control over models like LLaMa.cpp through the UI, Authentication in the UI by user/password via Native or Google OAuth, State Preservation in the UI by user/password, Linux, Docker, macOS, and Windows support, Easy Windows Installer for Windows 10 64-bit (CPU/CUDA), Easy macOS Installer for macOS (CPU/M1/M2), Inference Servers support (oLLaMa, HF TGI server, vLLM, Gradio, ExLLaMa, Replicate, OpenAI, Azure OpenAI, Anthropic), OpenAI-compliant, Server Proxy API (h2oGPT acts as drop-in-replacement to OpenAI server), Python client API (to talk to Gradio server), JSON Mode with any model via code block extraction. Also supports MistralAI JSON mode, Claude-3 via function calling with strict Schema, OpenAI via JSON mode, and vLLM via guided_json with strict Schema, Web-Search integration with Chat and Document Q/A, Agents for Search, Document Q/A, Python Code, CSV frames (Experimental, best with OpenAI currently), Evaluate performance using reward models, and Quality maintained with over 1000 unit and integration tests taking over 4 GPU-hours.

serverless-chat-langchainjs

This sample shows how to build a serverless chat experience with Retrieval-Augmented Generation using LangChain.js and Azure. The application is hosted on Azure Static Web Apps and Azure Functions, with Azure Cosmos DB for MongoDB vCore as the vector database. You can use it as a starting point for building more complex AI applications.

react-native-vercel-ai

Run Vercel AI package on React Native, Expo, Web and Universal apps. Currently React Native fetch API does not support streaming which is used as a default on Vercel AI. This package enables you to use AI library on React Native but the best usage is when used on Expo universal native apps. On mobile you get back responses without streaming with the same API of `useChat` and `useCompletion` and on web it will fallback to `ai/react`

LLamaSharp

LLamaSharp is a cross-platform library to run 🦙LLaMA/LLaVA model (and others) on your local device. Based on llama.cpp, inference with LLamaSharp is efficient on both CPU and GPU. With the higher-level APIs and RAG support, it's convenient to deploy LLM (Large Language Model) in your application with LLamaSharp.

gpt4all

GPT4All is an ecosystem to run powerful and customized large language models that work locally on consumer grade CPUs and any GPU. Note that your CPU needs to support AVX or AVX2 instructions. Learn more in the documentation. A GPT4All model is a 3GB - 8GB file that you can download and plug into the GPT4All open-source ecosystem software. Nomic AI supports and maintains this software ecosystem to enforce quality and security alongside spearheading the effort to allow any person or enterprise to easily train and deploy their own on-edge large language models.

ChatGPT-Telegram-Bot

ChatGPT Telegram Bot is a Telegram bot that provides a smooth AI experience. It supports both Azure OpenAI and native OpenAI, and offers real-time (streaming) response to AI, with a faster and smoother experience. The bot also has 15 preset bot identities that can be quickly switched, and supports custom bot identities to meet personalized needs. Additionally, it supports clearing the contents of the chat with a single click, and restarting the conversation at any time. The bot also supports native Telegram bot button support, making it easy and intuitive to implement required functions. User level division is also supported, with different levels enjoying different single session token numbers, context numbers, and session frequencies. The bot supports English and Chinese on UI, and is containerized for easy deployment.

twinny

Twinny is a free and open-source AI code completion plugin for Visual Studio Code and compatible editors. It integrates with various tools and frameworks, including Ollama, llama.cpp, oobabooga/text-generation-webui, LM Studio, LiteLLM, and Open WebUI. Twinny offers features such as fill-in-the-middle code completion, chat with AI about your code, customizable API endpoints, and support for single or multiline fill-in-middle completions. It is easy to install via the Visual Studio Code extensions marketplace and provides a range of customization options. Twinny supports both online and offline operation and conforms to the OpenAI API standard.

agnai

Agnaistic is an AI roleplay chat tool that allows users to interact with personalized characters using their favorite AI services. It supports multiple AI services, persona schema formats, and features such as group conversations, user authentication, and memory/lore books. Agnaistic can be self-hosted or run using Docker, and it provides a range of customization options through its settings.json file. The tool is designed to be user-friendly and accessible, making it suitable for both casual users and developers.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.