hugging-llm

HuggingLLM, Hugging Future.

Stars: 2928

HuggingLLM is a project that aims to introduce ChatGPT to a wider audience, particularly those interested in using the technology to create new products or applications. The project focuses on providing practical guidance on how to use ChatGPT-related APIs to create new features and applications. It also includes detailed background information and system design introductions for relevant tasks, as well as example code and implementation processes. The project is designed for individuals with some programming experience who are interested in using ChatGPT for practical applications, and it encourages users to experiment and create their own applications and demos.

README:

Table of Contents generated with DocToc

随着ChatGPT的爆火,其背后其实蕴含着一个基本事实:AI能力得到了极大突破——大模型的能力有目共睹,未来只会变得更强。这世界唯一不变的就是变,适应变化、拥抱变化、喜欢变化,天行健君子以自强不息。我们相信未来会有越来越多的大模型出现,AI正在逐渐平民化,将来每个人都可以利用大模型轻松地做出自己的AI产品。所以,我们把项目起名为HuggingLLM,我们相信我们正在经历一个伟大的时代,我们相信这是一个值得每个人全身心拥抱的时代,我们更加相信这个世界必将会因此而变得更加美好。

项目简介:介绍 ChatGPT 原理、使用和应用,降低使用门槛,让更多感兴趣的非NLP或算法专业人士能够无障碍使用LLM创造价值。

立项理由:ChatGPT改变了NLP行业,甚至正在改变整个产业。我们想借这个项目将ChatGPT介绍给更多的人,尤其是对此感兴趣、想利用相关技术做一些新产品或应用的学习者,尤其是非本专业人员。希望新的技术突破能够更多地改善我们所处的世界。

项目受众

- 项目适合以下人员:

- 对ChatGPT感兴趣。

- 希望在实际中运用该技术创造提供新的服务或解决已有问题。

- 有一定编程基础。

- 不适合以下需求人员:

- 研究其底层算法细节,比如PPO怎么实现的,能不能换成NLPO或ILQL,效果如何等。

- 自己从头到尾研发一个 ChatGPT。

- 对其他技术细节感兴趣。

另外,要说明的是,本项目并不是特别针对算法或NLP工程师等业内从业人员设计的,当然,你也可以通过本项目获得一定受益。

项目亮点

- 聚焦于如何使用ChatGPT相关API(可使用国内大模型API)创造新的功能和应用。

- 对相关任务有详细的背景和系统设计介绍。

- 提供示例代码和实现流程。

国内大模型API使用介绍

《GLM》

- 安装智谱GLM的SDK

pip install zhipuai- 调用GLM API的示例

# GLM

from zhipuai import ZhipuAI

client = ZhipuAI(api_key="") # 请填写您自己的APIKey

messages = [{"role": "system", "content": "你是一个乐于解答各种问题的助手,你的任务是为用户提供专业、准确、有见地的建议。"},

{"role": "user", "content": "请你介绍一下Datawhale。"},]

response = client.chat.completions.create(

model="glm-4", # 请选择参考官方文档,填写需要调用的模型名称

messages=messages, # 将结果设置为“消息”格式

stream=True, # 流式输出

)

full_content = '' # 合并输出

for chunk in response:

full_content += chunk.choices[0].delta.content

print('回答:\n' + full_content)回答:

Datawhale是一个专注于人工智能领域的开源学习社区。它汇聚了一群热爱人工智能技术的人才,旨在降低人工智能的学习门槛,推动技术的普及和应用。Datawhale通过开源项目、线上课程、实践挑战等形式,为AI爱好者和从业者提供学习资源、交流平台和成长机会。

从提供的参考信息来看,Datawhale有着丰富的内容资源和活跃的社区氛围。例如,他们推出了包括“蝴蝶书”在内的多本与人工智能相关的书籍,这些书籍内容实战性强,旨在帮助读者掌握ChatGPT等先进技术的原理和应用开发。此外,Datawhale还举办了宣传大使的招募活动,鼓励更多人参与到AI技术的推广和开源学习活动中来。

Datawhale社区倡导开源共生的理念,不仅为成员提供荣誉证书、精美文创等物质激励,还搭建了开放的交流社群,为成员之间的信息交流和技术探讨提供平台。同时,社区还助力成员的职业发展,提供简历指导和内推渠道等支持。

总体来说,Datawhale是一个集学习、交流、实战和技术推广于一体的AI社区,对于希望在人工智能领域内提升自己能力、扩展视野的人来说,是一个非常有价值的资源和平台。《Qwen》

- 安装千问Qwen的SDK

pip install dashscope- 调用Qwen API的示例

# qwen

from http import HTTPStatus

import dashscope

DASHSCOPE_API_KEY="" # 请填写您自己的APIKey

messages = [{'role': 'system', 'content': 'You are a helpful assistant.'},

{'role': 'user', 'content': '请你介绍一下Datawhale。'}]

responses = dashscope.Generation.call(

dashscope.Generation.Models.qwen_max, # 请选择参考官方文档,填写需要调用的模型名称

api_key=DASHSCOPE_API_KEY,

messages=messages,

result_format='message', # 将结果设置为“消息”格式

stream=True, #流式输出

incremental_output=True

)

full_content = '' # 合并输出

for response in responses:

if response.status_code == HTTPStatus.OK:

full_content += response.output.choices[0]['message']['content']

# print(response)

else:

print('Request id: %s, Status code: %s, error code: %s, error message: %s' % (

response.request_id, response.status_code,

response.code, response.message

))

print('回答:\n' + full_content)回答:

Datawhale是一个专注于数据科学与人工智能领域的开源组织,由一群来自国内外顶级高校和知名企业的志愿者们共同发起。该组织以“学习、分享、成长”为理念,通过组织和运营各类高质量的公益学习活动,如学习小组、实战项目、在线讲座等,致力于培养和提升广大学习者在数据科学领域的知识技能和实战经验。

Datawhale积极推广开源文化,鼓励成员参与并贡献开源项目,已成功孵化了多个优秀的开源项目,在GitHub上积累了大量的社区关注度和Star数。此外,Datawhale还与各大高校、企业以及社区开展广泛合作,为在校学生、开发者及行业人士提供丰富的学习资源和实践平台,助力他们在数据科学领域快速成长和发展。

总之,Datawhale是一个充满活力、富有社会责任感的开源学习社区,无论你是数据科学的小白还是资深从业者,都能在这里找到适合自己的学习路径和交流空间。本教程内容彼此之间相对独立,大家可以针对任一感兴趣内容阅读或上手,也可从头到尾学习。

以下内容为原始稿,书稿见:https://datawhalechina.github.io/hugging-llm/

-

ChatGPT 基础科普 @长琴

- LM

- Transformer

- GPT

- RLHF

-

ChatGPT 使用指南:相似匹配 @长琴

- Embedding 基础

- API 使用

- QA 任务

- 聚类任务

- 推荐应用

-

ChatGPT 使用指南:句词分类 @长琴

- NLU 基础

- API 使用

- 文档问答任务

- 分类与实体识别微调任务

- 智能对话应用

-

ChatGPT 使用指南:文本生成 @玉琳

- 文本摘要

- 文本纠错

- 机器翻译

-

ChatGPT 使用指南:文本推理 @华挥

- 什么是推理

- 导入ChatGPT

- 测试ChatGPT推理能力

- 调用ChatGPT推理能力

- ChatGPT以及GPT-4的推理能力

- ChatGPT 工程实践 @长琴

- 评测

- 安全

- 网络

-

ChatGPT 局限不足 @Carles

- 事实错误

- 实时更新

- 资源耗费

-

ChatGPT 商业应用 @Jason

- 背景

- 工具应用:搜索、办公、教育

- 行业应用:游戏、音乐、零售电商、广告营销、媒体新闻、金融、医疗、设计、影视、工业

📢说明:项目的 docs 目录是书稿电子版(但不是最终版,编辑可能做了一点点修改);content 是 Jupyter Notebook 的初始版本和迭代版本。实践可以选择 content,阅读可以选择 docs。

要学习本教程内容(主要是四个使用指南),需具备以下条件:

- 能够正常使用OpenAI的API,能够调用模型:gpt-3.5-turbo。或国内大模型API、开源大模型也可。

- 可以没有算法经验,但应具备一定的编程基础或实际项目经历。

- 学习期间有足够的时间保证,《使用指南》每个章节的学习时长为2-3天,除《文本推理》外,其他均需要6-8个小时。

学习完成后,需要提交一个大作业,整个学习期间就一个任务,要求如下:

- 以其中任一方向为例:描述应用和设计流程,实现应用相关功能,完成一个应用或Demo程序。

- 方向包括所有内容,比如:一个新闻推荐阅读器、一个多轮的客服机器人、Doc问答机器人、模型输出内容检测器等等,鼓励大家偏应用方向。

历次组队学习中成员完成的项目汇总(部分):

- https://datawhaler.feishu.cn/base/EdswbrhNvaIEJdsLJ0bcVYt1n2c?table=tbluxatFhjfyXShH&view=vewQJKA0Gi

请学习者务必注意以下几点:

- 学习本教程并不能让你成为算法工程师,如果能激发起你的兴趣,我们非常欢迎你参与学习DataWhale更多算法类开源教程。

- 在学习了教程中的一些知识和任务后,千万不要认为这些东西实际上就是看到那么简单。一方面实际操作起来还是会有很多问题,另一方面每个知识其实有非常多的细节,这在本教程中是无法涉及的。请持续学习、并始终对知识保持敬畏。

- 本教程主要是负责引导入门的,鼓励大家在了解了相关知识后,根据实际情况或自己意愿大胆实践。实践出真知,脑子想、嘴说和亲自干是完全不一样的。

- 由于创作团队水平和精力有限,难免会有疏漏,请不吝指正。

最后,祝愿大家都能学有所得,期望大家未来能做出举世瞩目的产品和应用。

——HuggingLLM开源项目全体成员

B站配套视频教程:https://b23.tv/hdnXn1L

智海配套课程:https://aiplusx.momodel.cn/classroom/class/658d3ecd891ad518e0274bce?activeKey=intro

核心贡献者

- 长琴-项目负责人(Datawhale成员-AI算法工程师)

- 玉琳(内容创作者-Datawhale成员)

- 华挥(内容创作者-Datawhale成员)

- Carles(内容创作者)

- Jason(内容创作者)

- 胡锐锋(Datawhale成员-华东交通大学-系统架构设计师)

- @kal1x 指出第一章错别字:https://github.com/datawhalechina/hugging-llm/issues/22

- @fancyboi999 更新OpenAI的API:https://github.com/datawhalechina/hugging-llm/pull/23

其他

Datawhale,一个专注于AI领域的学习圈子。初衷是for the learner,和学习者一起成长。目前加入学习社群的人数已经数千人,组织了机器学习,深度学习,数据分析,数据挖掘,爬虫,编程,统计学,Mysql,数据竞赛等多个领域的内容学习,微信搜索公众号Datawhale可以加入我们。

本作品采用知识共享署名-非商业性使用-相同方式共享 4.0 国际许可协议进行许可。

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for hugging-llm

Similar Open Source Tools

hugging-llm

HuggingLLM is a project that aims to introduce ChatGPT to a wider audience, particularly those interested in using the technology to create new products or applications. The project focuses on providing practical guidance on how to use ChatGPT-related APIs to create new features and applications. It also includes detailed background information and system design introductions for relevant tasks, as well as example code and implementation processes. The project is designed for individuals with some programming experience who are interested in using ChatGPT for practical applications, and it encourages users to experiment and create their own applications and demos.

langchain4j-aideepin

LangChain4j-AIDeepin is an open-source, offline deployable retrieval enhancement generation (RAG) project based on large language models such as ChatGPT and Langchain4j application framework. It offers features like registration & login, multi-session support, image generation, prompt words, quota control, knowledge base, model-based search, model switching, and search engine switching. The project integrates models like ChatGPT 3.5, Tongyi Qianwen, Wenxin Yiyuan, Ollama, and DALL-E 2. The backend uses technologies like JDK 17, Spring Boot 3.0.5, Langchain4j, and PostgreSQL with pgvector extension, while the frontend is built with Vue3, TypeScript, and PNPM.

CodeAsk

CodeAsk is a code analysis tool designed to tackle complex issues such as code that seems to self-replicate, cryptic comments left by predecessors, messy and unclear code, and long-lasting temporary solutions. It offers intelligent code organization and analysis, security vulnerability detection, code quality assessment, and other interesting prompts to help users understand and work with legacy code more efficiently. The tool aims to translate 'legacy code mountains' into understandable language, creating an illusion of comprehension and facilitating knowledge transfer to new team members.

AI-automatically-generates-novels

AI Novel Writing Assistant is an intelligent productivity tool for novel creation based on AI + prompt words. It has been used by hundreds of studios and individual authors to quickly and batch generate novels. With AI technology to enhance writing efficiency and a comprehensive prompt word management feature, it achieves 20 times efficiency improvement in intelligent book disassembly, intelligent book title and synopsis generation, text polishing, and shift+L quick term insertion, making writing easier and more professional. It has been upgraded to v5.2. The tool supports mind map construction of outlines and chapters, AI self-optimization of novels, writing knowledge base management, shift+L quick term insertion in the text input field, support for any mainstream large models integration, custom skin color, prompt word import and export, support for large text memory, right-click polishing, expansion, and de-AI flavoring of outlines, chapters, and text, multiple sets of novel prompt word library management, and book disassembly function.

Avalonia-Assistant

Avalonia-Assistant is an open-source desktop intelligent assistant that aims to provide a user-friendly interactive experience based on the Avalonia UI framework and the integration of Semantic Kernel with OpenAI or other large LLM models. By utilizing Avalonia-Assistant, you can perform various desktop operations through text or voice commands, enhancing your productivity and daily office experience.

chatgpt-webui

ChatGPT WebUI is a user-friendly web graphical interface for various LLMs like ChatGPT, providing simplified features such as core ChatGPT conversation and document retrieval dialogues. It has been optimized for better RAG retrieval accuracy and supports various search engines. Users can deploy local language models easily and interact with different LLMs like GPT-4, Azure OpenAI, and more. The tool offers powerful functionalities like GPT4 API configuration, system prompt setup for role-playing, and basic conversation features. It also provides a history of conversations, customization options, and a seamless user experience with themes, dark mode, and PWA installation support.

MoneyPrinterTurbo

MoneyPrinterTurbo is a tool that can automatically generate video content based on a provided theme or keyword. It can create video scripts, materials, subtitles, and background music, and then compile them into a high-definition short video. The tool features a web interface and an API interface, supporting AI-generated video scripts, customizable scripts, multiple HD video sizes, batch video generation, customizable video segment duration, multilingual video scripts, multiple voice synthesis options, subtitle generation with font customization, background music selection, access to high-definition and copyright-free video materials, and integration with various AI models like OpenAI, moonshot, Azure, and more. The tool aims to simplify the video creation process and offers future plans to enhance voice synthesis, add video transition effects, provide more video material sources, offer video length options, include free network proxies, enable real-time voice and music previews, support additional voice synthesis services, and facilitate automatic uploads to YouTube platform.

LabelQuick

LabelQuick_V2.0 is a fast image annotation tool designed and developed by the AI Horizon team. This version has been optimized and improved based on the previous version. It provides an intuitive interface and powerful annotation and segmentation functions to efficiently complete dataset annotation work. The tool supports video object tracking annotation, quick annotation by clicking, and various video operations. It introduces the SAM2 model for accurate and efficient object detection in video frames, reducing manual intervention and improving annotation quality. The tool is designed for Windows systems and requires a minimum of 6GB of memory.

Fay

Fay is an open-source digital human framework that offers different versions for various purposes. The '带货完整版' is suitable for online and offline salespersons. The '助理完整版' serves as a human-machine interactive digital assistant that can also control devices upon command. The 'agent版' is designed to be an autonomous agent capable of making decisions and contacting its owner. The framework provides updates and improvements across its different versions, including features like emotion analysis integration, model optimizations, and compatibility enhancements. Users can access detailed documentation for each version through the provided links.

AirPower4T

AirPower4T is a development base library based on Vue3 TypeScript Element Plus Vite, using decorators, object-oriented, Hook and other front-end development methods. It provides many common components and some feedback components commonly used in background management systems, and provides a lot of enums and decorators.

LotteryMaster

LotteryMaster is a tool designed to fetch lottery data, save it to Excel files, and provide analysis reports including number prediction, number recommendation, and number trends. It supports multiple platforms for access such as Web and mobile App. The tool integrates AI models like Qwen API and DeepSeek for generating analysis reports and trend analysis charts. Users can configure API parameters for controlling randomness, diversity, presence penalty, and maximum tokens. The tool also includes a frontend project based on uniapp + Vue3 + TypeScript for multi-platform applications. It provides a backend service running on Fastify with Node.js, Cheerio.js for web scraping, Pino for logging, xlsx for Excel file handling, and Jest for testing. The project is still in development and some features may not be fully implemented. The analysis reports are for reference only and do not constitute investment advice. Users are advised to use the tool responsibly and avoid addiction to gambling.

DeepBattler

DeepBattler is a tool designed for Hearthstone Battlegrounds players, providing real-time strategic advice and insights to improve gameplay experience. It integrates with the Hearthstone Deck Tracker plugin and offers voice-assisted guidance. The tool is powered by a large language model (LLM) and can match the strength of top players on EU servers. Users can set up the tool by adding dependencies, configuring the plugin path, and launching the LLM agent. DeepBattler is licensed for personal, educational, and non-commercial use, with guidelines on non-commercial distribution and acknowledgment of external contributions.

EduChat

EduChat is a large-scale language model-based chatbot system designed for intelligent education by the EduNLP team at East China Normal University. The project focuses on developing a dialogue-based language model for the education vertical domain, integrating diverse education vertical domain data, and providing functions such as automatic question generation, homework correction, emotional support, course guidance, and college entrance examination consultation. The tool aims to serve teachers, students, and parents to achieve personalized, fair, and warm intelligent education.

AI-Assistant-ChatGPT

AI Assistant ChatGPT is a web client tool that allows users to create or chat using ChatGPT or Claude. It enables generating long texts and conversations with efficient control over quality and content direction. The tool supports customization of reverse proxy address, conversation management, content editing, markdown document export, JSON backup, context customization, session-topic management, role customization, dynamic content navigation, and more. Users can access the tool directly at https://eaias.com or deploy it independently. It offers features for dialogue management, assistant configuration, session configuration, and more. The tool lacks data cloud storage and synchronization but provides guidelines for independent deployment. It is a frontend project that can be deployed using Cloudflare Pages and customized with backend modifications. The project is open-source under the MIT license.

DeepMCPAgent

DeepMCPAgent is a model-agnostic tool that enables the creation of LangChain/LangGraph agents powered by MCP tools over HTTP/SSE. It allows for dynamic discovery of tools, connection to remote MCP servers, and integration with any LangChain chat model instance. The tool provides a deep agent loop for enhanced functionality and supports typed tool arguments for validated calls. DeepMCPAgent emphasizes the importance of MCP-first approach, where agents dynamically discover and call tools rather than hardcoding them.

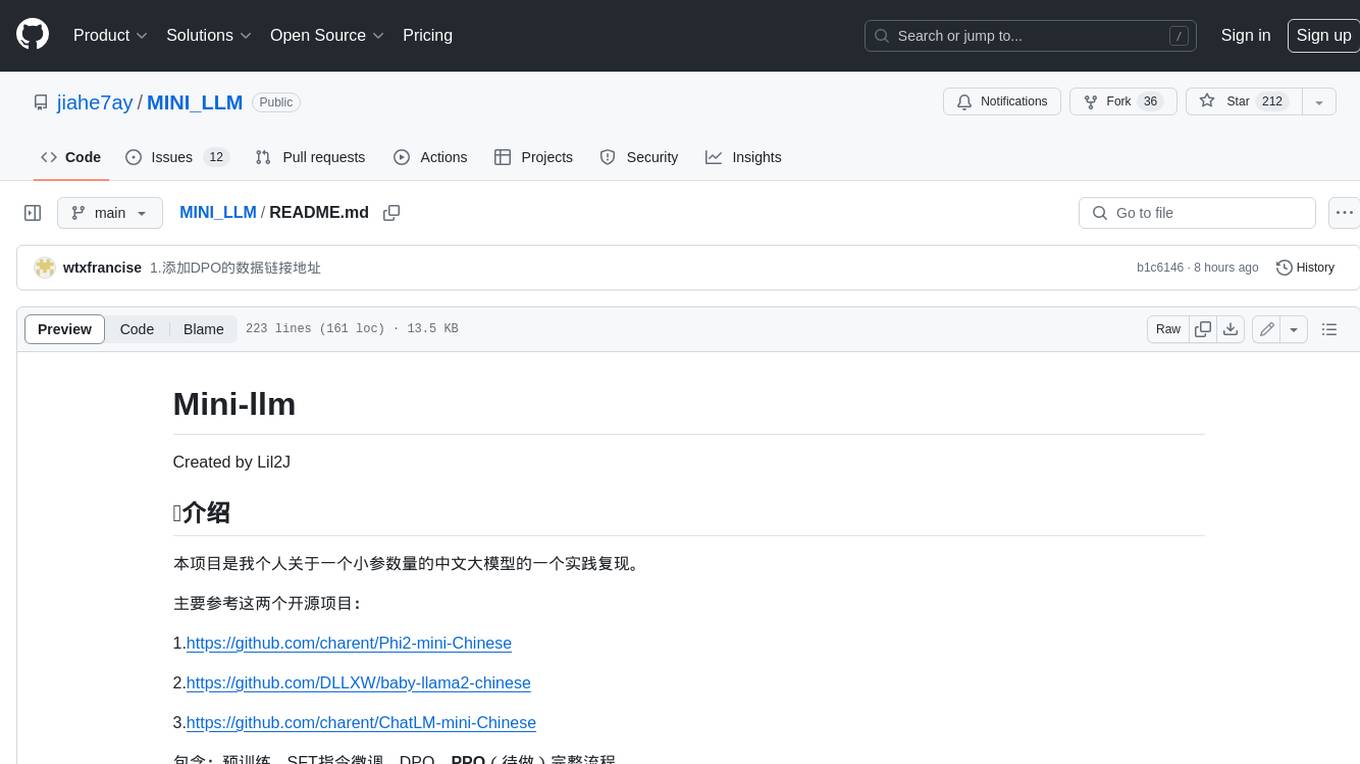

MINI_LLM

This project is a personal implementation and reproduction of a small-parameter Chinese LLM. It mainly refers to these two open source projects: https://github.com/charent/Phi2-mini-Chinese and https://github.com/DLLXW/baby-llama2-chinese. It includes the complete process of pre-training, SFT instruction fine-tuning, DPO, and PPO (to be done). I hope to share it with everyone and hope that everyone can work together to improve it!

For similar tasks

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers: * An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VSCode, etc.). * A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant. * Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI. * Local model support through GPT4All, enabling use of generative AI models on consumer grade machines with ease and privacy.

khoj

Khoj is an open-source, personal AI assistant that extends your capabilities by creating always-available AI agents. You can share your notes and documents to extend your digital brain, and your AI agents have access to the internet, allowing you to incorporate real-time information. Khoj is accessible on Desktop, Emacs, Obsidian, Web, and Whatsapp, and you can share PDF, markdown, org-mode, notion files, and GitHub repositories. You'll get fast, accurate semantic search on top of your docs, and your agents can create deeply personal images and understand your speech. Khoj is self-hostable and always will be.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules: * **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers). * **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG). * **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task. The different components can be composed together using the LangChain Expression Language (LCEL).

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

infinity

Infinity is an AI-native database designed for LLM applications, providing incredibly fast full-text and vector search capabilities. It supports a wide range of data types, including vectors, full-text, and structured data, and offers a fused search feature that combines multiple embeddings and full text. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. It achieves high performance, with 0.1 milliseconds query latency on million-scale vector datasets and up to 15K QPS.

For similar jobs

ChatFAQ

ChatFAQ is an open-source comprehensive platform for creating a wide variety of chatbots: generic ones, business-trained, or even capable of redirecting requests to human operators. It includes a specialized NLP/NLG engine based on a RAG architecture and customized chat widgets, ensuring a tailored experience for users and avoiding vendor lock-in.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.