langchain4j-aideepin

基于AI的工作效率提升工具 | Ai-based productivity tools (Chat,Draw,RAG etc)

Stars: 461

LangChain4j-AIDeepin is an open-source, offline deployable retrieval enhancement generation (RAG) project based on large language models such as ChatGPT and Langchain4j application framework. It offers features like registration & login, multi-session support, image generation, prompt words, quota control, knowledge base, model-based search, model switching, and search engine switching. The project integrates models like ChatGPT 3.5, Tongyi Qianwen, Wenxin Yiyuan, Ollama, and DALL-E 2. The backend uses technologies like JDK 17, Spring Boot 3.0.5, Langchain4j, and PostgreSQL with pgvector extension, while the frontend is built with Vue3, TypeScript, and PNPM.

README:

LangChain4j-AIDeepin(得应) 是基于AI的工作效率提升工具。

可用于辅助企业/团队进行技术研发、产品设计、人事/财务/IT信息咨询、系统/商品咨询、客服话术支撑等工作

🌟该项目如对您有帮助,欢迎点赞🌟

AIDEEPIN

|__ 服务端(langchain4j-aideepin)

|__ 用户端WEB(langchain4j-aideepin-web)

|__ 管理端WEB(langchain4j-aideepin-admin)

👉详细文档

前端项目:

- 注册&登录

- 多会话(多角色)

- 图片生成(文生图、修图、图生图)

- 提示词

- 额度控制

- 基于大模型的知识库(RAG)

- 向量搜索

- 图搜索

- 基于大模型的网络搜索(RAG)

- 多模型随意切换

- 多搜索引擎随意切换

- DeepSeek

- ChatGPT 3.5

- 通义千问

- 通义万相

- 文心一言

- ollama

- DALL-E 2

- DALL-E 3

Bing (TODO)

百度 (TODO)

该仓库为后端服务

技术栈:

- jdk17

- springboot3.0.5

- langchain4j(Java version of LangChain)

- Postgresql

- pgvector扩展:https://github.com/pgvector/pgvector

- Apage AGE扩展:https://github.com/apache/age

前端技术栈:

- vue3

- vite

- typescript

- pnpm

- pinia

- naiveui

a. 初始化数据库

-

创建数据库aideepin

-

执行docs/create.sql

-

配置模型(至少设置一个) 或者 使用管理端在界面上配置

- 配置AI平台

-- DeepSeek的secretKey update adi_sys_config set value = '{"base_url":"https://api.deepseek.com","secret_key":"my_deepseek_secret_key"}' where name = 'deepseek_setting'; -- openai的secretKey update adi_sys_config set value = '{"secret_key":"my_openai_secret_key"}' where name = 'openai_setting'; -- 灵积大模型平台的apiKey update adi_sys_config set value = '{"api_key":"my_dashcope_api_key"}' where name = 'dashscope_setting'; -- 千帆大模型平台的配置 update adi_sys_config set value = '{"api_key":"my_qianfan_api_key","secret_key":"my_qianfan_secret_key"}' where name = 'qianfan_setting'; -- ollama的配置 update adi_sys_config set value = '{"base_url":"my_ollama_base_url"}' where name = 'ollama_setting'; - 启用AI平台下的模型或新增模型

-- Enable model update adi_ai_model set is_enable = true where name = 'deepseek-chat'; update adi_ai_model set is_enable = true where name = 'gpt-3.5-turbo'; update adi_ai_model set is_enable = true where name = 'dall-e-2'; update adi_ai_model set is_enable = true where name = 'qwen-turbo'; update adi_ai_model set is_enable = true where name = 'ERNIE-Speed-128K'; update adi_ai_model set is_enable = true where name = 'tinydolphin'; -- Add new model INSERT INTO adi_ai_model (name, type, platform, is_enable) VALUES ('vicuna', 'text', 'ollama', true);

- 配置AI平台

-

填充搜索引擎的配置

- Google的配置

update adi_sys_config set value = '{"url":"https://www.googleapis.com/customsearch/v1","key":"my key from cloud.google.com","cx":"my cx from programmablesearchengine.google.com"}' where name = 'google_setting';

- Google的配置

b. 修改配置文件

- postgresql: application-[dev|prod].xml中的spring.datasource

- redis: application-[dev|prod].xml中的spring.data.redis

- mail: application.xml中的spring.mail

-

进入项目

cd langchain4j-aideepin -

打包:

mvn clean package -Dmaven.test.skip=true -

运行

- jar包启动:

cd adi-bootstrap/target nohup java -jar -Xms768m -Xmx1024m -XX:+HeapDumpOnOutOfMemoryError adi-bootstrap-0.0.1-SNAPSHOT.jar --spring.profiles.active=[dev|prod] dev/null 2>&1 &- docker启动

cd adi-bootstrap docker build . -t aideepin:0.0.1 docker run -d \ --name=aideepin \ -e APP_PROFILE=[dev|prod] \ -v="/data/aideepin/logs:/data/logs" \ aideepin:0.0.1

- 高级RAG

- 查询压缩 √

- 查询路由

- Re-rank:支持本地rerank模型

- AI聊天

- 多角色 √

- 预设通用角色(管理后台创建) √

- 图片模型:

- DALL-E 2 & DALL-E 3 √

- 通义万相 √

- 聊天视图 √

- 画廊视图 √

- 开放/收藏图片 √

- 图片评论 √

- 知识库:

- 向量 √

- 知识图谱 √

- 文档召回数量可设置

- 自动调整(根据LLM的上下文窗口大小) √

- 手动调整 √

- 文档召回最低分数可设置 √

- 切块时内容重叠数量可设置 √

- 请求模型时temperature可设置 √

- 严格模式与非严格模式 √

- 答案来源 √

- 支持拉取在线文档

- FAQ

- 评论

- 多模态支持

- 图片 √

- 音频

- 视频

- 自定义AI Agent(工作流)

- 工具

- FAQ提取

- 文档对话

- 翻译

- 图片处理

- 修图

- 抠图

- 扩图

- 合成

- 背景生成 √

- 搜索引擎

- Google √

- Bing

- 百度

- 额度统计及控制

- 免费额度统计及限制 √

- 计费额度统计及限制 √

- 总额度统计 √

- 开放接口

- 其他

- 阿里云OSS √

AI画图:

向量化:

知识图谱:

额度统计:

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for langchain4j-aideepin

Similar Open Source Tools

langchain4j-aideepin

LangChain4j-AIDeepin is an open-source, offline deployable retrieval enhancement generation (RAG) project based on large language models such as ChatGPT and Langchain4j application framework. It offers features like registration & login, multi-session support, image generation, prompt words, quota control, knowledge base, model-based search, model switching, and search engine switching. The project integrates models like ChatGPT 3.5, Tongyi Qianwen, Wenxin Yiyuan, Ollama, and DALL-E 2. The backend uses technologies like JDK 17, Spring Boot 3.0.5, Langchain4j, and PostgreSQL with pgvector extension, while the frontend is built with Vue3, TypeScript, and PNPM.

hugging-llm

HuggingLLM is a project that aims to introduce ChatGPT to a wider audience, particularly those interested in using the technology to create new products or applications. The project focuses on providing practical guidance on how to use ChatGPT-related APIs to create new features and applications. It also includes detailed background information and system design introductions for relevant tasks, as well as example code and implementation processes. The project is designed for individuals with some programming experience who are interested in using ChatGPT for practical applications, and it encourages users to experiment and create their own applications and demos.

Avalonia-Assistant

Avalonia-Assistant is an open-source desktop intelligent assistant that aims to provide a user-friendly interactive experience based on the Avalonia UI framework and the integration of Semantic Kernel with OpenAI or other large LLM models. By utilizing Avalonia-Assistant, you can perform various desktop operations through text or voice commands, enhancing your productivity and daily office experience.

AutoAgents

AutoAgents is a cutting-edge multi-agent framework built in Rust that enables the creation of intelligent, autonomous agents powered by Large Language Models (LLMs) and Ractor. Designed for performance, safety, and scalability. AutoAgents provides a robust foundation for building complex AI systems that can reason, act, and collaborate. With AutoAgents you can create Cloud Native Agents, Edge Native Agents and Hybrid Models as well. It is so extensible that other ML Models can be used to create complex pipelines using Actor Framework.

mcp-memory-service

The MCP Memory Service is a universal memory service designed for AI assistants, providing semantic memory search and persistent storage. It works with various AI applications and offers fast local search using SQLite-vec and global distribution through Cloudflare. The service supports intelligent memory management, universal compatibility with AI tools, flexible storage options, and is production-ready with cross-platform support and secure connections. Users can store and recall memories, search by tags, check system health, and configure the service for Claude Desktop integration and environment variables.

Rankify

Rankify is a Python toolkit designed for unified retrieval, re-ranking, and retrieval-augmented generation (RAG) research. It integrates 40 pre-retrieved benchmark datasets and supports 7 retrieval techniques, 24 state-of-the-art re-ranking models, and multiple RAG methods. Rankify provides a modular and extensible framework, enabling seamless experimentation and benchmarking across retrieval pipelines. It offers comprehensive documentation, open-source implementation, and pre-built evaluation tools, making it a powerful resource for researchers and practitioners in the field.

Fay

Fay is an open-source digital human framework that offers different versions for various purposes. The '带货完整版' is suitable for online and offline salespersons. The '助理完整版' serves as a human-machine interactive digital assistant that can also control devices upon command. The 'agent版' is designed to be an autonomous agent capable of making decisions and contacting its owner. The framework provides updates and improvements across its different versions, including features like emotion analysis integration, model optimizations, and compatibility enhancements. Users can access detailed documentation for each version through the provided links.

chatgpt-webui

ChatGPT WebUI is a user-friendly web graphical interface for various LLMs like ChatGPT, providing simplified features such as core ChatGPT conversation and document retrieval dialogues. It has been optimized for better RAG retrieval accuracy and supports various search engines. Users can deploy local language models easily and interact with different LLMs like GPT-4, Azure OpenAI, and more. The tool offers powerful functionalities like GPT4 API configuration, system prompt setup for role-playing, and basic conversation features. It also provides a history of conversations, customization options, and a seamless user experience with themes, dark mode, and PWA installation support.

uDesktopMascot

uDesktopMascot is an open-source project for a desktop mascot application with a theme of 'freedom of creation'. It allows users to load and display VRM or GLB/FBX model files on the desktop, customize GUI colors and background images, and access various features through a menu screen. The application supports Windows 10/11 and macOS platforms.

mcp

Model Context Protocol (MCP) is an open protocol that standardizes how applications provide context to large language models (LLMs). It allows AI applications to connect with various data sources and tools in a consistent manner, enhancing their capabilities and flexibility. This repository contains core libraries, test frameworks, engineering systems, pipelines, and tooling for Microsoft MCP Server contributors to unify engineering investments and reduce duplication and divergence. For more details, visit the official MCP website.

resume-design

Resume-design is an open-source and free resume design and template download website, built with Vue3 + TypeScript + Vite + Element-plus + pinia. It provides two design tools for creating beautiful resumes and a complete backend management system. The project has released two frontend versions and will integrate with a backend system in the future. Users can learn frontend by downloading the released versions or learn design tools by pulling the latest frontend code.

aegra

Aegra is a self-hosted AI agent backend platform that provides LangGraph power without vendor lock-in. Built with FastAPI + PostgreSQL, it offers complete control over agent orchestration for teams looking to escape vendor lock-in, meet data sovereignty requirements, enable custom deployments, and optimize costs. Aegra is Agent Protocol compliant and perfect for teams seeking a free, self-hosted alternative to LangGraph Platform with zero lock-in, full control, and compatibility with existing LangGraph Client SDK.

nodetool

NodeTool is a platform designed for AI enthusiasts, developers, and creators, providing a visual interface to access a variety of AI tools and models. It simplifies access to advanced AI technologies, offering resources for content creation, data analysis, automation, and more. With features like a visual editor, seamless integration with leading AI platforms, model manager, and API integration, NodeTool caters to both newcomers and experienced users in the AI field.

NovelForge

NovelForge is an AI-assisted writing tool with the potential for creating long-form content of millions of words. It offers a solution that combines world-building, structured content generation, and consistency maintenance. The tool is built around four core concepts: modular 'cards', customizable 'dynamic output models', flexible 'context injection', and consistency assurance through a 'knowledge graph'. It provides a highly structured and configurable writing environment, inspired by the Snowflake Method, allowing users to create and organize their content in a tree-like structure. NovelForge is highly customizable and extensible, allowing users to tailor their writing workflow to their specific needs.

timeline-studio

Timeline Studio is a next-generation professional video editor with AI integration that automates content creation for social media. It combines the power of desktop applications with the convenience of web interfaces. With 257 AI tools, GPU acceleration, plugin system, multi-language interface, and local processing, Timeline Studio offers complete video production automation. Users can create videos for various social media platforms like TikTok, YouTube, Vimeo, Telegram, and Instagram with optimized versions. The tool saves time, understands trends, provides professional quality, and allows for easy feature extension through plugins. Timeline Studio is open source, transparent, and offers significant time savings and quality improvements for video editing tasks.

LabelQuick

LabelQuick_V2.0 is a fast image annotation tool designed and developed by the AI Horizon team. This version has been optimized and improved based on the previous version. It provides an intuitive interface and powerful annotation and segmentation functions to efficiently complete dataset annotation work. The tool supports video object tracking annotation, quick annotation by clicking, and various video operations. It introduces the SAM2 model for accurate and efficient object detection in video frames, reducing manual intervention and improving annotation quality. The tool is designed for Windows systems and requires a minimum of 6GB of memory.

For similar tasks

langchain4j-aideepin

LangChain4j-AIDeepin is an open-source, offline deployable retrieval enhancement generation (RAG) project based on large language models such as ChatGPT and Langchain4j application framework. It offers features like registration & login, multi-session support, image generation, prompt words, quota control, knowledge base, model-based search, model switching, and search engine switching. The project integrates models like ChatGPT 3.5, Tongyi Qianwen, Wenxin Yiyuan, Ollama, and DALL-E 2. The backend uses technologies like JDK 17, Spring Boot 3.0.5, Langchain4j, and PostgreSQL with pgvector extension, while the frontend is built with Vue3, TypeScript, and PNPM.

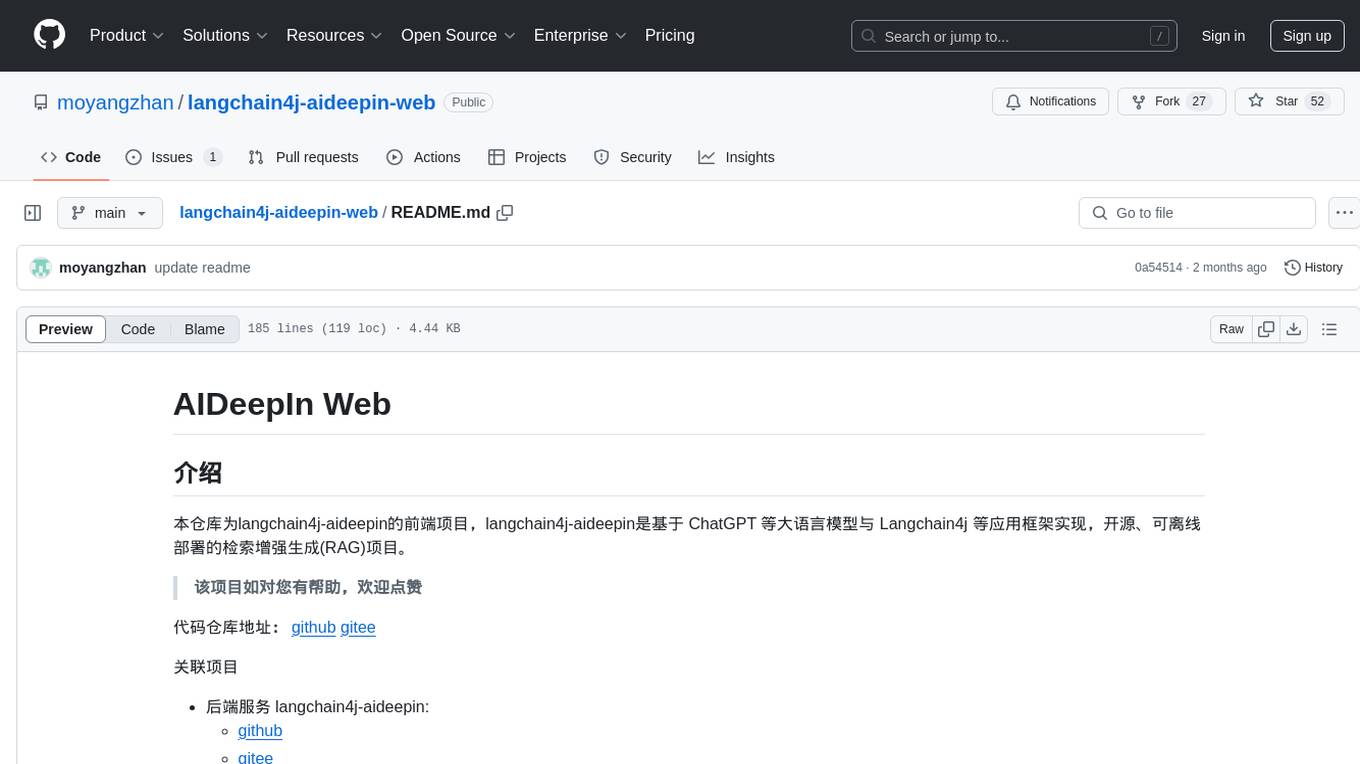

langchain4j-aideepin-web

The langchain4j-aideepin-web repository is the frontend project of langchain4j-aideepin, an open-source, offline deployable retrieval enhancement generation (RAG) project based on large language models such as ChatGPT and application frameworks such as Langchain4j. It includes features like registration & login, multi-sessions (multi-roles), image generation (text-to-image, image editing, image-to-image), suggestions, quota control, knowledge base (RAG) based on large models, model switching, and search engine switching.

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

daily-poetry-image

Daily Chinese ancient poetry and AI-generated images powered by Bing DALL-E-3. GitHub Action triggers the process automatically. Poetry is provided by Today's Poem API. The website is built with Astro.

InvokeAI

InvokeAI is a leading creative engine built to empower professionals and enthusiasts alike. Generate and create stunning visual media using the latest AI-driven technologies. InvokeAI offers an industry leading Web Interface, interactive Command Line Interface, and also serves as the foundation for multiple commercial products.

LocalAI

LocalAI is a free and open-source OpenAI alternative that acts as a drop-in replacement REST API compatible with OpenAI (Elevenlabs, Anthropic, etc.) API specifications for local AI inferencing. It allows users to run LLMs, generate images, audio, and more locally or on-premises with consumer-grade hardware, supporting multiple model families and not requiring a GPU. LocalAI offers features such as text generation with GPTs, text-to-audio, audio-to-text transcription, image generation with stable diffusion, OpenAI functions, embeddings generation for vector databases, constrained grammars, downloading models directly from Huggingface, and a Vision API. It provides a detailed step-by-step introduction in its Getting Started guide and supports community integrations such as custom containers, WebUIs, model galleries, and various bots for Discord, Slack, and Telegram. LocalAI also offers resources like an LLM fine-tuning guide, instructions for local building and Kubernetes installation, projects integrating LocalAI, and a how-tos section curated by the community. It encourages users to cite the repository when utilizing it in downstream projects and acknowledges the contributions of various software from the community.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

StableSwarmUI

StableSwarmUI is a modular Stable Diffusion web user interface that emphasizes making power tools easily accessible, high performance, and extensible. It is designed to be a one-stop-shop for all things Stable Diffusion, providing a wide range of features and capabilities to enhance the user experience.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.