nagato-ai

Simple cross-LLM AI Agent library

Stars: 76

Nagato-AI is an intuitive AI Agent library that supports multiple LLMs including OpenAI's GPT, Anthropic's Claude, Google's Gemini, and Groq LLMs. Users can create agents from these models and combine them to build an effective AI Agent system. The library is named after the powerful ninja Nagato from the anime Naruto, who can control multiple bodies with different abilities. Nagato-AI acts as a linchpin to summon and coordinate AI Agents for specific missions. It provides flexibility in programming and supports tools like Coordinator, Researcher, Critic agents, and HumanConfirmInputTool.

README:

Nagato-AI is an intuitive AI Agent library that works across multiple LLMs.

Currently it supports OpenAI's GPT, Anthropic's Claude, Google's Gemini, and Groq (e.g. Llama 3) LLMs. You can create agents from any of these model families and combine them to build the most effective AI Agent system you desire.

The name Nagato is inspired by the popular anime Naruto. In Naruto, Nagato is a very powerful ninja who possesses special eyes (Rinnegan) that give him immense powers. Nagato's powers enable him to control multiple bodies endowed with different abilities. Nagato is also able to see through the eyes of all the bodies he controls, thereby minimising blind spots that opponents may want to exploit.

Therefore, you can think of Nagato as the linchpin that summons and coordinates AI Agents which have a specific mission to complete.

Note that from now on I will use the terms Nagato and Nagato-AI interchangeably to refer to this library.

If you're working on the source repository (either via a fork or the original repository), you must have Poetry (packaging and dependency management) installed on your machine. Once Poetry is installed, run the following command in your terminal (from the root folder of the Nagato code base) to install all required dependencies:

poetry install

To install Nagato as a package in your own project instead, simply run the command:

pip install nagatoai_core

That's it! Nagato AI is now available to use in your code.

By default, Nagato will look for environment variables to create the AI Agents and tools.

First, make sure to create a .env file. Then add those variables to the .env file you just created.

You only need to add some of the below environment variables for the model and the tools you plan to use. The current list of environment variables is the following:

OPENAI_API_KEY=

ANTHROPIC_API_KEY=

GROQ_API_KEY=

GOOGLE_API_KEY=

READWISE_API_KEY=

SERPER_API_KEY=

ELEVENLABS_API_KEY=

For instance if you only plan to use GPT-based agents and Readwise tools you should only set the OPENAI_API_KEY and READWISE_API_KEY environment variables.
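As a rough illustration of the rule above, the sketch below reports which variables are still unset for a chosen combination of models and tools. The `REQUIRED_KEYS` mapping, its feature labels, and the `missing_keys` helper are hypothetical names introduced here for illustration; they are not part of Nagato's API.

```python
import os
from typing import Dict, List, Mapping, Optional

# Hypothetical mapping from a feature label to the environment variable it needs.
# The variable names come from the list above; the feature labels are made up.
REQUIRED_KEYS: Dict[str, str] = {
    "gpt": "OPENAI_API_KEY",
    "claude": "ANTHROPIC_API_KEY",
    "groq": "GROQ_API_KEY",
    "gemini": "GOOGLE_API_KEY",
    "readwise": "READWISE_API_KEY",
    "serper": "SERPER_API_KEY",
    "elevenlabs": "ELEVENLABS_API_KEY",
}


def missing_keys(features: List[str], env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return the variables needed by `features` that are unset or empty."""
    env = os.environ if env is None else env
    return [REQUIRED_KEYS[f] for f in features if not env.get(REQUIRED_KEYS[f])]


if __name__ == "__main__":
    # GPT-based agents plus Readwise tools only need two of the keys.
    print(missing_keys(["gpt", "readwise"]))
```

Running a check like this before creating any agents makes a missing key fail early rather than at the first API call.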

Assuming your program's entrypoint is defined in a file called main.py, you can run it by typing the following command:

poetry run python main.py

Nagato currently supports the following LLMs:

- Claude 3 (Anthropic)

- GPT-3 to GPT-4 (OpenAI)

- Groq (which gives you access to Llama 3.1)

- Google Gemini

Currently Nagato AI uses Langfuse for tracing LLM calls. Set the environment variables below to be able to send traces:

LANGFUSE_SECRET_KEY=

LANGFUSE_PUBLIC_KEY=

You can see how Langfuse is being used in the SingleAgentTaskRunner class.

Nagato is built with flexibility at its core, so you can program it using your paradigm of choice. However, these are some of the ways I've seen people use Nagato so far.

By default Nagato expects all LLM API keys to be set as environment variables. Nagato may load the keys from the following variables:

OPENAI_API_KEY=<api-key>

ANTHROPIC_API_KEY=<api-key>

READWISE_API_KEY=<api-key>

In this configuration we have the following:

- 🎯 Coordinator: breaks down a problem statement (from stdin) into an objective and suggests tasks

- 📚 Researcher: works on a task by performing research

- ✅ Critic: evaluates whether the task was completed

# Using Claude-3 Opus as the coordinator agent
coordinator_agent: Agent = create_agent(
    anthropic_api_key,
    "claude-3-opus-20240229",
    "Coordinator",
    COORDINATOR_SYSTEM_PROMPT,
    "Coordinator Agent",
)

# Using GPT-4 turbo as the researcher agent (an OpenAI model, so it needs the OpenAI key)
researcher_agent = create_agent(
    openai_api_key,
    "gpt-4-turbo-2024-04-09",
    "Researcher",
    RESEARCHER_SYSTEM_PROMPT,
    "Researcher Agent",
)

# Using Google Gemini 1.5 Flash as the critic agent
critic_agent = create_agent(
    google_api_key,
    "gemini-1.5-flash",
    "Critic",
    CRITIC_SYSTEM_PROMPT,
    "Critic Agent",
)

...

The full-blown example is available here

In this configuration we directly submit as input an objective and a set of tasks needed to complete the objective. Therefore we can skip the coordinator agent and have the worker agent(s) work on the tasks, while the critic agent evaluates whether the task carried out meets the requirements originally specified.
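The worker/critic flow described above can be sketched without any LLM calls. In this minimal sketch the `Task` dataclass is a stand-in for Nagato's own `Task`, each "agent" is a plain function from prompt to reply, and the convention that the critic answers with a leading "yes" is an assumption made purely for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Task:
    """Stand-in for Nagato's Task: a goal plus a longer description."""
    goal: str
    description: str


# A stand-in "agent" is just a function from prompt text to reply text.
StubAgent = Callable[[str], str]


def run_tasks(tasks: List[Task], worker: StubAgent, critic: StubAgent) -> List[bool]:
    """Run each task through the worker, then ask the critic to judge the result."""
    verdicts = []
    for task in tasks:
        # Insert the task into the worker prompt.
        worker_prompt = f"Goal: {task.goal}\nDescription: {task.description}"
        answer = worker(worker_prompt)
        # Insert the worker's answer into the critic prompt.
        critic_prompt = f"{worker_prompt}\nResult: {answer}\nDoes the result meet the goal?"
        verdict = critic(critic_prompt)
        # Assumed convention: the critic's reply starts with "yes" when satisfied.
        verdicts.append(verdict.strip().lower().startswith("yes"))
    return verdicts


if __name__ == "__main__":
    tasks = [Task("Say hello", "Return a short greeting.")]
    print(run_tasks(tasks, worker=lambda p: "hello", critic=lambda p: "Yes, done."))
```

Swapping the lambdas for real agents keeps the same shape: one worker call and one critic call per task.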

task_list: List[Task] = [
    Task(
        goal="Fetch last 100 user tweets",
        description="Fetch the tweets from the user using the Twitter API. Limit the number of tweets fetched to 100 only.",
    ),
    Task(
        goal="Perform sentiment analysis on the tweets",
        description="Feed the tweets to the AI Agent to analyze sentiment per overall sentiment across tweets. Range of values for sentiment can be: Positive, Negative, or Neutral",
    ),
]

# The worker agent that carries out each task (no coordinator is needed here)
researcher_agent: Agent = create_agent(
    anthropic_api_key,
    "claude-3-sonnet-20240229",
    "Researcher",
    RESEARCHER_SYSTEM_PROMPT,
    "Researcher Agent",
)

critic_agent = create_agent(
    anthropic_api_key,
    "claude-3-haiku-20240307",
    "Critic",
    CRITIC_SYSTEM_PROMPT,
    "Critic Agent",
)

for task in task_list:
    # Insert the task into the prompt
    worker_prompt = ...

    worker_exchange = researcher_agent.chat(worker_prompt, task, 0.7, 2000)

    # Insert the response from the agent into the prompt for the critic
    critic_prompt = ...

    critic_exchange = critic_agent.chat(critic_prompt, task, 0.7, 2000)

    # Evaluate whether the task was completed based on the answer from the critic agent

...

Check the full example here to see how tool calling works. We now support tool calling for GPT, Claude 3, and Llama 3 (via Groq) models.

Creating a tool is straightforward. You must have these two elements in place for a tool to be usable:

- A config class that contains the parameters that your tool will be called with

- A tool class that inherits from AbstractTool and contains the main logic for your tool.
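The two-part pattern can be tried out without Nagato installed. The sketch below uses only the standard library: `StandInTool` is a hypothetical stand-in for `AbstractTool`, and `WordCountConfig` and `WordCountTool` are made-up names that only mirror the config-class/tool-class split; the real base class and its exact contract live in `nagatoai_core`.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Any


class StandInTool(ABC):
    """Hypothetical stand-in for AbstractTool; not the real nagatoai_core class."""

    name: str
    description: str

    @abstractmethod
    def _run(self, config: Any) -> Any:
        """Execute the tool with an already-built config object."""


@dataclass
class WordCountConfig:
    """Config class: the parameters the tool is called with."""
    text: str


class WordCountTool(StandInTool):
    """Tool class: holds the main logic."""

    name = "word_count"
    description = "Counts the whitespace-separated words in a piece of text."

    def _run(self, config: WordCountConfig) -> int:
        return len(config.text.split())


if __name__ == "__main__":
    tool = WordCountTool()
    print(tool._run(WordCountConfig(text="summon and coordinate agents")))
```

Keeping the parameters in a separate config class means they can be described and validated independently of the tool's logic.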

For instance, the code below shows how we've created a tool that asks the user to confirm yes/no in the terminal:

from typing import Any, Type

from pydantic import BaseModel, Field
from rich.prompt import Confirm

from nagatoai_core.tool.abstract_tool import AbstractTool


class HumanConfirmInputConfig(BaseModel):
    """
    HumanConfirmInputConfig represents the configuration for the HumanConfirmInputTool.
    """

    message: str = Field(
        ...,
        description="The message to display to the user to confirm whether to proceed or not",
    )


class HumanConfirmInputTool(AbstractTool):
    """
    HumanConfirmInputTool represents a tool that prompts the user to confirm whether to proceed or not.
    """

    name: str = "human_confirm_input"
    description: str = (
        """Prompts the user to confirm whether to proceed or not. Returns a boolean value indicating the user's choice."""
    )
    args_schema: Type[BaseModel] = HumanConfirmInputConfig

    def _run(self, config: HumanConfirmInputConfig) -> Any:
        """
        Prompts the user to confirm whether to proceed or not.
        :param config: The tool configuration; config.message is the message displayed to the user.
        :return: A boolean value indicating the user's choice.
        """
        confirm = Confirm.ask("[bold yellow]" + config.message + "[/bold yellow]")

        return confirm

Nagato is still in its very early development phase. This means that I am likely to introduce breaking changes over the next iterations of the library.

Moreover, there is a lot of functionality currently missing from Nagato. I will remedy this over time. There is no official roadmap per se but I plan to add the following capabilities to Nagato:

- ✅ implement function calling (complement to adding tools)

- ✅ introduce basic tools (e.g. surfing the web)

- ✅ implement agent based on Llama 3 model (via Groq)

- ✅ implement agent based on Google Gemini models (without function calling)

- ✅ cache results from function calling

- ✅ implement v1 of self-reflection and re-planning for agents

- ✅ Implement audio/text-to-speech tools

- ✅ implement function calling for Google Gemini agent

- ✅ LLMOps instrumentation (via Langfuse)

- 🎯 implement short/long-term memory for agents (with RAG and memory synthesis)

- 🎯 implement additional modalities (e.g. image, sound, etc.)

- 🎯 Support for local LLMs (e.g. via Ollama)

I'd be grateful if you could do some of the following to support this project:

- star this repository on GitHub

- follow me on X/Twitter

- raise GitHub issues if you've come across any bug using Nagato or would like a feature to be added to Nagato

Alternative AI tools for nagato-ai

Similar Open Source Tools

pydantic-ai

PydanticAI is a Python agent framework designed to make it less painful to build production grade applications with Generative AI. It is built by the Pydantic Team and supports various AI models like OpenAI, Anthropic, Gemini, Ollama, Groq, and Mistral. PydanticAI seamlessly integrates with Pydantic Logfire for real-time debugging, performance monitoring, and behavior tracking of LLM-powered applications. It is type-safe, Python-centric, and offers structured responses, dependency injection system, and streamed responses. PydanticAI is in early beta, offering a Python-centric design to apply standard Python best practices in AI-driven projects.

ai-rag-chat-evaluator

This repository contains scripts and tools for evaluating a chat app that uses the RAG architecture. It provides parameters to assess the quality and style of answers generated by the chat app, including system prompt, search parameters, and GPT model parameters. The tools facilitate running evaluations, with examples of evaluations on a sample chat app. The repo also offers guidance on cost estimation, setting up the project, deploying a GPT-4 model, generating ground truth data, running evaluations, and measuring the app's ability to say 'I don't know'. Users can customize evaluations, view results, and compare runs using provided tools.

Tools4AI

Tools4AI is a Java-based Agentic Framework for building AI agents to integrate with enterprise Java applications. It enables the conversion of natural language prompts into actionable behaviors, streamlining user interactions with complex systems. By leveraging AI capabilities, it enhances productivity and innovation across diverse applications. The framework allows for seamless integration of AI with various systems, such as customer service applications, to interpret user requests, trigger actions, and streamline workflows. Prompt prediction anticipates user actions based on input prompts, enhancing user experience by proactively suggesting relevant actions or services based on context.

abliterator

abliterator.py is a simple Python library/structure designed to ablate features in large language models (LLMs) supported by TransformerLens. It provides capabilities to enter temporary contexts, cache activations with N samples, calculate refusal directions, and includes tokenizer utilities. The library aims to streamline the process of experimenting with ablation direction turns by encapsulating useful logic and minimizing code complexity. While currently basic and lacking comprehensive documentation, the library serves well for personal workflows and aims to expand beyond feature ablation to augmentation and additional features over time with community support.

HackBot

HackBot is an AI-powered cybersecurity chatbot designed to provide accurate answers to cybersecurity-related queries, conduct code analysis, and scan analysis. It utilizes the Meta-LLama2 AI model through the 'LlamaCpp' library to respond coherently. The chatbot offers features like local AI/Runpod deployment support, cybersecurity chat assistance, interactive interface, clear output presentation, static code analysis, and vulnerability analysis. Users can interact with HackBot through a command-line interface and utilize it for various cybersecurity tasks.

tribe

Tribe AI is a low code tool designed to rapidly build and coordinate multi-agent teams. It leverages the langgraph framework to customize and coordinate teams of agents, allowing tasks to be split among agents with different strengths for faster and better problem-solving. The tool supports persistent conversations, observability, tool calling, human-in-the-loop functionality, easy deployment with Docker, and multi-tenancy for managing multiple users and teams.

call-gpt

Call GPT is a voice application that utilizes Deepgram for Speech to Text, elevenlabs for Text to Speech, and OpenAI for GPT prompt completion. It allows users to chat with ChatGPT on the phone, providing better transcription, understanding, and speaking capabilities than traditional IVR systems. The app returns responses with low latency, allows user interruptions, maintains chat history, and enables GPT to call external tools. It coordinates data flow between Deepgram, OpenAI, ElevenLabs, and Twilio Media Streams, enhancing voice interactions.

lovelaice

Lovelaice is an AI-powered assistant for your terminal and editor. It can run bash commands, search the Internet, answer general and technical questions, complete text files, chat casually, execute code in various languages, and more. Lovelaice is configurable with API keys and LLM models, and can be used for a wide range of tasks requiring bash commands or coding assistance. It is designed to be versatile, interactive, and helpful for daily tasks and projects.

llama-on-lambda

This project provides a proof of concept for deploying a scalable, serverless LLM Generative AI inference engine on AWS Lambda. It leverages the llama.cpp project to enable the usage of more accessible CPU and RAM configurations instead of limited and expensive GPU capabilities. By deploying a container with the llama.cpp converted models onto AWS Lambda, this project offers the advantages of scale, minimizing cost, and maximizing compute availability. The project includes AWS CDK code to create and deploy a Lambda function leveraging your model of choice, with a FastAPI frontend accessible from a Lambda URL. It is important to note that you will need ggml quantized versions of your model and model sizes under 6GB, as your inference RAM requirements cannot exceed 9GB or your Lambda function will fail.

rag-experiment-accelerator

The RAG Experiment Accelerator is a versatile tool that helps you conduct experiments and evaluations using Azure AI Search and RAG pattern. It offers a rich set of features, including experiment setup, integration with Azure AI Search, Azure Machine Learning, MLFlow, and Azure OpenAI, multiple document chunking strategies, query generation, multiple search types, sub-querying, re-ranking, metrics and evaluation, report generation, and multi-lingual support. The tool is designed to make it easier and faster to run experiments and evaluations of search queries and quality of response from OpenAI, and is useful for researchers, data scientists, and developers who want to test the performance of different search and OpenAI related hyperparameters, compare the effectiveness of various search strategies, fine-tune and optimize parameters, find the best combination of hyperparameters, and generate detailed reports and visualizations from experiment results.

brokk

Brokk is a code assistant designed to understand code semantically, allowing LLMs to work effectively on large codebases. It offers features like agentic search, summarizing related classes, parsing stack traces, adding source for usages, and autonomously fixing errors. Users can interact with Brokk through different panels and commands, enabling them to manipulate context, ask questions, search codebase, run shell commands, and more. Brokk helps with tasks like debugging regressions, exploring codebase, AI-powered refactoring, and working with dependencies. It is particularly useful for making complex, multi-file edits with o1pro.

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

clippinator

Clippinator is a code assistant tool that helps users develop code autonomously by planning, writing, debugging, and testing projects. It consists of agents based on GPT-4 that work together to assist the user in coding tasks. The main agent, Taskmaster, delegates tasks to specialized subagents like Architect, Writer, Frontender, Editor, QA, and Devops. The tool provides project architecture, tools for file and terminal operations, browser automation with Selenium, linting capabilities, CI integration, and memory management. Users can interact with the tool to provide feedback and guide the coding process, making it a powerful tool when combined with human intervention.

ChatGPT-Telegram-Bot

The ChatGPT Telegram Bot is a powerful Telegram bot that utilizes various GPT models, including GPT3.5, GPT4, GPT4 Turbo, GPT4 Vision, DALL·E 3, Groq Mixtral-8x7b/LLaMA2-70b, and Claude2.1/Claude3 opus/sonnet API. It enables users to engage in efficient conversations and information searches on Telegram. The bot supports multiple AI models, online search with DuckDuckGo and Google, user-friendly interface, efficient message processing, document interaction, Markdown rendering, and convenient deployment options like Zeabur, Replit, and Docker. Users can set environment variables for configuration and deployment. The bot also provides Q&A functionality, supports model switching, and can be deployed in group chats with whitelisting. The project is open source under GPLv3 license.

wtffmpeg

wtffmpeg is a command-line tool that uses a Large Language Model (LLM) to translate plain-English descriptions of video or audio tasks into actual, executable ffmpeg commands. It aims to streamline the process of generating ffmpeg commands by allowing users to describe what they want to do in natural language, review the generated command, optionally edit it, and then decide whether to run it. The tool provides an interactive REPL interface where users can input their commands, retain conversational context, and history, and control the level of interactivity. wtffmpeg is designed to assist users in efficiently working with ffmpeg commands, reducing the need to search for solutions, read lengthy explanations, and manually adjust commands.

For similar tasks

surfkit

Surfkit is a versatile toolkit designed for building and sharing AI agents that can operate on various devices. Users can create multimodal agents, share them with the community, run them locally or in the cloud, manage agent tasks at scale, and track and observe agent actions. The toolkit provides functionalities for creating agents, devices, solving tasks, managing devices, tracking tasks, and publishing agents. It also offers integrations with libraries like MLLM, Taskara, Skillpacks, and Threadmem. Surfkit aims to simplify the development and deployment of AI agents across different environments.

labo

LABO is a time series forecasting and analysis framework that integrates pre-trained and fine-tuned LLMs with multi-domain agent-based systems. It allows users to create and tune agents easily for various scenarios, such as stock market trend prediction and web public opinion analysis. LABO requires a specific runtime environment setup, including system requirements, Python environment, dependency installations, and configurations. Users can fine-tune their own models using LABO's Low-Rank Adaptation (LoRA) for computational efficiency and continuous model updates. Additionally, LABO provides a Python library for building model training pipelines and customizing agents for specific tasks.

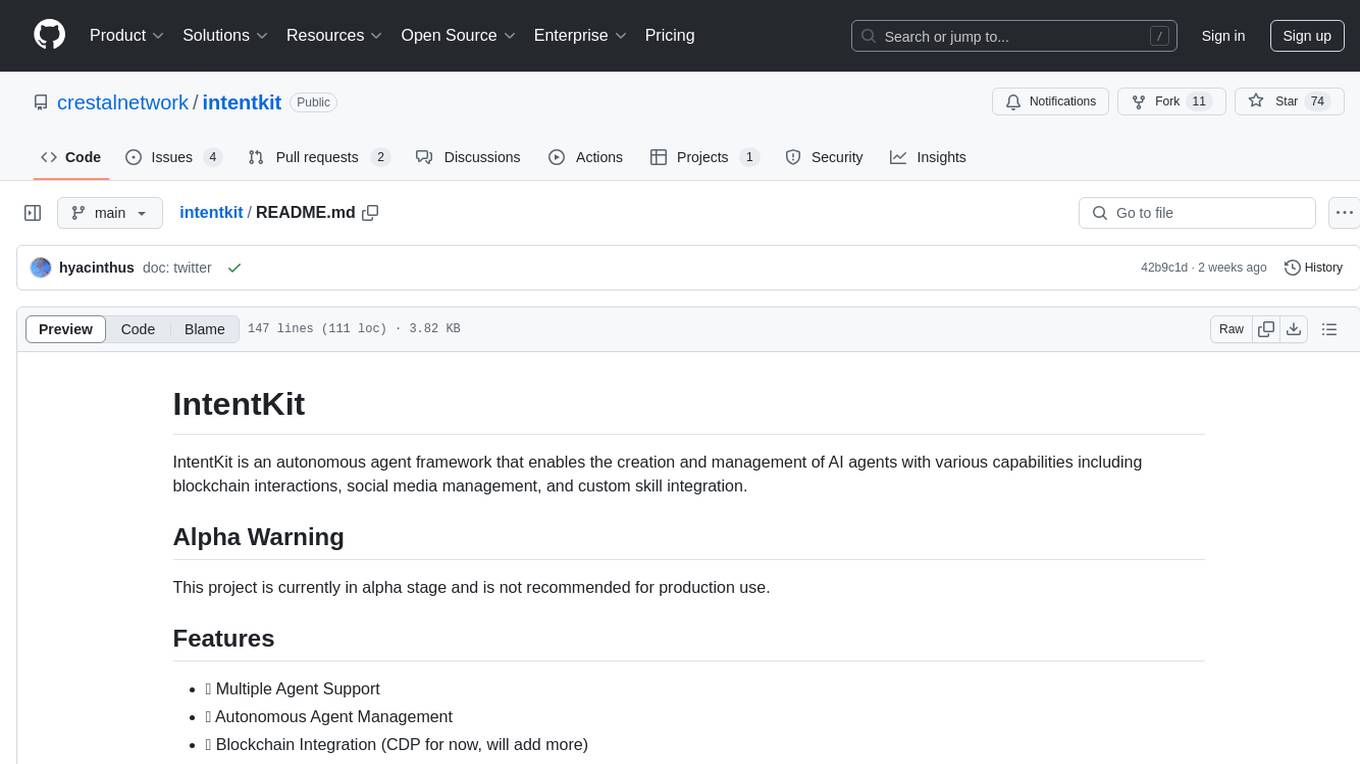

intentkit

IntentKit is an autonomous agent framework that enables the creation and management of AI agents with capabilities including blockchain interactions, social media management, and custom skill integration. It supports multiple agents, autonomous agent management, blockchain integration, social media integration, extensible skill system, and plugin system. The project is in alpha stage and not recommended for production use. It provides quick start guides for Docker and local development, integrations with Twitter and Coinbase, configuration options using environment variables or AWS Secrets Manager, project structure with core application code, entry points, configuration management, database models, skills, skill sets, and utility functions. Developers can add new skills by creating, implementing, and registering them in the skill directory.

neuron-ai

Neuron is a PHP framework for creating and orchestrating AI Agents, providing tools for the entire agentic application development lifecycle. It allows integration of AI entities in existing PHP applications with a powerful and flexible architecture. Neuron offers tutorials and educational content to help users get started using AI Agents in their projects. The framework supports various LLM providers, tools, and toolkits, enabling users to create fully functional agents for tasks like data analysis, chatbots, and structured output. Neuron also facilitates monitoring and debugging of AI applications, ensuring control over agent behavior and decision-making processes.

LayerSkip

LayerSkip is an implementation enabling early exit inference and self-speculative decoding. It provides a code base for running models trained using the LayerSkip recipe, offering speedup through self-speculative decoding. The tool integrates with Hugging Face transformers and provides checkpoints for various LLMs. Users can generate tokens, benchmark on datasets, evaluate tasks, and sweep over hyperparameters to optimize inference speed. The tool also includes correctness verification scripts and Docker setup instructions. Additionally, other implementations like gpt-fast and Native HuggingFace are available. Training implementation is a work-in-progress, and contributions are welcome under the CC BY-NC license.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.