forge

AI-enabled pair programmer for Claude, GPT, O Series, Grok, DeepSeek, Gemini and 300+ models

Stars: 4560

Forge is an open-source, AI-enabled pair programmer that runs in your terminal. It integrates AI capabilities with your development environment, helping you understand code, implement features, debug errors, review changes, and resolve Git conflicts. It works with providers such as OpenAI, Anthropic, and OpenRouter.

README:

A comprehensive coding agent that integrates AI capabilities with your development environment

npx forgecode@latest


To get started with Forge, run the command below:

npx forgecode@latest

On first run, Forge will guide you through setting up your AI provider credentials using the interactive login flow. Alternatively, you can configure providers beforehand:

# Configure your provider credentials interactively

forge provider login

# Then start Forge

forge

That's it! Forge is now ready to assist you with your development tasks.

Forge can be used in different ways depending on your needs. Here are some common usage patterns:

Code Understanding

> Can you explain how the authentication system works in this codebase?

Forge will analyze your project's structure, identify authentication-related files, and provide a detailed explanation of the authentication flow, including the relationships between different components.

Implementing New Features

> I need to add a dark mode toggle to our React application. How should I approach this?

Forge will suggest the best approach based on your current codebase, explain the steps needed, and even scaffold the necessary components and styles for you.

Debugging Assistance

> I'm getting this error: "TypeError: Cannot read property 'map' of undefined". What might be causing it?

Forge will analyze the error, suggest potential causes based on your code, and propose different solutions to fix the issue.

Code Reviews

> Please review the code in src/components/UserProfile.js and suggest improvements

Forge will analyze the code, identify potential issues, and suggest improvements for readability, performance, security, and maintainability.

Learning New Technologies

> I want to integrate GraphQL into this Express application. Can you explain how to get started?

Forge will provide a tailored tutorial on integrating GraphQL with Express, using your specific project structure as context.

Database Schema Design

> I need to design a database schema for a blog with users, posts, comments, and categories

Forge will suggest an appropriate schema design, including tables/collections, relationships, indexes, and constraints based on your project's existing database technology.

Refactoring Legacy Code

> Help me refactor this class-based component to use React Hooks

Forge can help modernize your codebase by walking you through refactoring steps and implementing them with your approval.

Git Operations

> I need to merge branch 'feature/user-profile' into main but there are conflicts

Forge can guide you through resolving git conflicts, explaining the differences and suggesting the best way to reconcile them.

Forge is designed for developers who want to enhance their workflow with AI assistance while maintaining full control over their development environment.

- Zero configuration - Just add your API key and you're ready to go

- Seamless integration - Works right in your terminal, where you already work

- Multi-provider support - Use OpenAI, Anthropic, or other LLM providers

- Secure by design - Your code stays on your machine

- Open-source - Transparent, extensible, and community-driven

Forge helps you code faster, solve complex problems, and learn new technologies without leaving your terminal.

Here's a quick reference of Forge's command-line options:

| Option | Description |
|---|---|
| `-p, --prompt <PROMPT>` | Direct prompt to process without entering interactive mode |
| `-c, --command <COMMAND>` | Path to a file containing initial commands to execute |
| `-w, --workflow <WORKFLOW>` | Path to a file containing the workflow to execute |
| `-e, --event <EVENT>` | Dispatch an event to the workflow |
| `--conversation <CONVERSATION>` | Path to a file containing the conversation to execute |
| `-r, --restricted` | Enable restricted shell mode for enhanced security |
| `--verbose` | Enable verbose output mode |
| `-h, --help` | Print help information |
| `-V, --version` | Print version |
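Here is a minimal Python sketch showing how these flags combine when Forge is called from a script. The helper `build_forge_args` is hypothetical and not part of Forge. It only assembles an argument list from a few of the options above, and actually running it requires the forge binary on your PATH.

```python
import shlex
import subprocess
from typing import Optional


def build_forge_args(
    prompt: Optional[str] = None,
    workflow: Optional[str] = None,
    restricted: bool = False,
    verbose: bool = False,
) -> list[str]:
    """Assemble a forge command line from a few of the options in the table above."""
    args = ["forge"]
    if prompt is not None:
        args += ["--prompt", prompt]  # one-shot prompt, no interactive mode
    if workflow is not None:
        args += ["--workflow", workflow]  # path to a workflow file
    if restricted:
        args.append("--restricted")  # restricted shell mode
    if verbose:
        args.append("--verbose")  # verbose output
    return args


def run_forge(args: list[str]) -> int:
    """Run the assembled command and return its exit code (needs forge on PATH)."""
    return subprocess.run(args, check=False).returncode


if __name__ == "__main__":
    cmd = build_forge_args(prompt="Explain the authentication flow", restricted=True)
    print(shlex.join(cmd))  # show the command without running it
```

Building the command as a list rather than a single shell string avoids quoting problems when a prompt contains spaces or quotes.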

Forge supports multiple AI providers. The recommended way to configure providers is using the interactive login command:

forge provider login

This will:

- Show you a list of available providers

- Guide you through entering the required credentials

# Login to a provider (add or update credentials)

forge provider login

# Remove provider credentials

forge provider logout

# List supported providers

forge provider list

⚠️ DEPRECATED: Using .env files for provider configuration is deprecated and will be removed in a future version. Please use forge provider login instead.

For backward compatibility, Forge still supports environment variables. On first run, any credentials found in environment variables will be automatically migrated to file-based storage.
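Before you switch to `forge provider login`, it can help to see which legacy variables are still set in your environment. The sketch below is a hypothetical helper, not part of Forge. It checks only the variable names used in the deprecated examples that follow, and it reports names, never values. Which variables Forge actually migrates is decided by Forge itself.

```python
import os
from typing import Mapping

# Legacy variable names, as used in the deprecated .env examples in this README.
LEGACY_PROVIDER_VARS: dict[str, tuple[str, ...]] = {
    "OpenRouter": ("OPENROUTER_API_KEY",),
    "Requesty": ("REQUESTY_API_KEY",),
    "x-ai": ("XAI_API_KEY",),
    "z.ai": ("ZAI_API_KEY", "ZAI_CODING_API_KEY"),
    "Cerebras": ("CEREBRAS_API_KEY",),
    "IO Intelligence": ("IO_INTELLIGENCE_API_KEY",),
    "OpenAI / OpenAI-compatible": ("OPENAI_API_KEY", "OPENAI_URL"),
    "Anthropic": ("ANTHROPIC_API_KEY",),
    "Google Vertex AI": ("PROJECT_ID", "LOCATION", "VERTEX_AI_AUTH_TOKEN"),
    "Forge Services": ("FORGE_API_KEY",),
}


def find_legacy_credentials(env: Mapping[str, str]) -> dict[str, list[str]]:
    """Return, per provider, which legacy variables are set to a non-empty value."""
    found: dict[str, list[str]] = {}
    for provider, names in LEGACY_PROVIDER_VARS.items():
        present = [name for name in names if env.get(name)]
        if present:
            found[provider] = present
    return found


if __name__ == "__main__":
    for provider, names in find_legacy_credentials(os.environ).items():
        # Print variable names only, never the secret values.
        print(f"{provider}: {', '.join(names)}")
```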

Legacy Environment Variable Setup (Deprecated)

OpenRouter

# .env

OPENROUTER_API_KEY=<your_openrouter_api_key>

Requesty

# .env

REQUESTY_API_KEY=<your_requesty_api_key>

x-ai

# .env

XAI_API_KEY=<your_xai_api_key>

z.ai

# .env

ZAI_API_KEY=<your_zai_api_key>

# Or for coding plan subscription

ZAI_CODING_API_KEY=<your_zai_coding_api_key>

Cerebras

# .env

CEREBRAS_API_KEY=<your_cerebras_api_key>

IO Intelligence

# .env

IO_INTELLIGENCE_API_KEY=<your_io_intelligence_api_key>

# forge.yaml

model: meta-llama/Llama-3.3-70B-Instruct

OpenAI

# .env

OPENAI_API_KEY=<your_openai_api_key>

# forge.yaml

model: o3-mini-high

Anthropic

# .env

ANTHROPIC_API_KEY=<your_anthropic_api_key>

# forge.yaml

model: claude-3.7-sonnet

Google Vertex AI

Setup Instructions:

1. Install Google Cloud CLI and authenticate:

gcloud auth login
gcloud config set project YOUR_PROJECT_ID

2. Get your authentication token:

gcloud auth print-access-token

3. Use the token when logging in via Forge:

forge provider login # Select Google Vertex AI and enter your credentials
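If you script this step, a small Python wrapper around the same `gcloud auth print-access-token` command can fetch the token for you. This sketch assumes gcloud is installed and already authenticated. `vertex_access_token` is a hypothetical helper, and the injectable `run` parameter exists only so the function can be exercised without gcloud. Access tokens expire, so you may need to fetch a fresh one later.

```python
import subprocess
from typing import Callable, Sequence

# A runner takes an argv list and returns the command's standard output.
Runner = Callable[[Sequence[str]], str]


def _run(argv: Sequence[str]) -> str:
    """Default runner: execute the command and return stdout, raising on failure."""
    result = subprocess.run(list(argv), capture_output=True, text=True, check=True)
    return result.stdout


def vertex_access_token(run: Runner = _run) -> str:
    """Return the access token printed by `gcloud auth print-access-token`."""
    token = run(["gcloud", "auth", "print-access-token"]).strip()
    if not token:
        raise RuntimeError("gcloud returned an empty token; run `gcloud auth login` first")
    return token
```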

Legacy .env setup:

# .env

PROJECT_ID=<your_project_id>

LOCATION=<your_location>

VERTEX_AI_AUTH_TOKEN=<your_auth_token>

# forge.yaml

model: google/gemini-2.5-pro

Available Models:

- Claude models: claude-sonnet-4@20250514

- Gemini models: gemini-2.5-pro, gemini-2.0-flash

Use the /model command in the Forge CLI to see all available models.

OpenAI-Compatible Providers

# .env

OPENAI_API_KEY=<your_provider_api_key>

OPENAI_URL=<your_provider_url>

# forge.yaml

model: <provider-specific-model>

Groq

# .env

OPENAI_API_KEY=<your_groq_api_key>

OPENAI_URL=https://api.groq.com/openai/v1

# forge.yaml

model: deepseek-r1-distill-llama-70b

Amazon Bedrock

To use Amazon Bedrock models with Forge, you'll need to first set up the Bedrock Access Gateway:

1. Set up Bedrock Access Gateway:

- Follow the deployment steps in the Bedrock Access Gateway repo

- Create your own API key in Secrets Manager

- Deploy the CloudFormation stack

- Note your API Base URL from the CloudFormation outputs

2. Configure in Forge:

forge provider login # Select OpenAI-compatible provider and enter your Bedrock Gateway details
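The Bedrock Access Gateway exposes an OpenAI-compatible API, so requests to it have the same shape as requests to any other OPENAI_URL provider. The sketch below builds, but does not send, such a request. It uses the conventional OpenAI Chat Completions path (`/chat/completions`) under the base URL. Whether Forge uses exactly this path and payload is an assumption, `chat_completions_request` is a hypothetical helper, and the gateway URL and key in the test are placeholders.

```python
import json
import urllib.request


def chat_completions_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style Chat Completions request."""
    url = base_url.rstrip("/") + "/chat/completions"  # base URL from the gateway outputs
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )
```

To send the request, pass it to `urllib.request.urlopen` and decode the JSON response. This is a quick way to confirm the gateway URL and key work before you enter them in Forge.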

Legacy .env setup:

# .env

OPENAI_API_KEY=<your_bedrock_gateway_api_key>

OPENAI_URL=<your_bedrock_gateway_base_url>

# forge.yaml

model: anthropic.claude-3-opus

Forge Services

# .env

FORGE_API_KEY=<your_forge_api_key>

# forge.yaml

model: claude-3.7-sonnet

Forge supports several environment variables for advanced configuration and fine-tuning. These can be set in your .env file or system environment.

Retry Configuration

Control how Forge handles retry logic for failed requests:

# .env

FORGE_RETRY_INITIAL_BACKOFF_MS=1000 # Initial backoff time in milliseconds (default: 1000)

FORGE_RETRY_BACKOFF_FACTOR=2 # Multiplier for backoff time (default: 2)

FORGE_RETRY_MAX_ATTEMPTS=3 # Maximum retry attempts (default: 3)

FORGE_SUPPRESS_RETRY_ERRORS=false # Suppress retry error messages (default: false)

FORGE_RETRY_STATUS_CODES=429,500,502 # HTTP status codes to retry (default: 429,500,502,503,504)

HTTP Configuration

Fine-tune HTTP client behavior for API requests:

# .env

FORGE_HTTP_CONNECT_TIMEOUT=30 # Connection timeout in seconds (default: 30)

FORGE_HTTP_READ_TIMEOUT=900 # Read timeout in seconds (default: 900)

FORGE_HTTP_POOL_IDLE_TIMEOUT=90 # Pool idle timeout in seconds (default: 90)

FORGE_HTTP_POOL_MAX_IDLE_PER_HOST=5 # Max idle connections per host (default: 5)

FORGE_HTTP_MAX_REDIRECTS=10 # Maximum redirects to follow (default: 10)

FORGE_HTTP_USE_HICKORY=false # Use Hickory DNS resolver (default: false)

FORGE_HTTP_TLS_BACKEND=default # TLS backend: "default" or "rustls" (default: "default")

FORGE_HTTP_MIN_TLS_VERSION=1.2 # Minimum TLS version: "1.0", "1.1", "1.2", "1.3"

FORGE_HTTP_MAX_TLS_VERSION=1.3 # Maximum TLS version: "1.0", "1.1", "1.2", "1.3"

FORGE_HTTP_ADAPTIVE_WINDOW=true # Enable HTTP/2 adaptive window (default: true)

FORGE_HTTP_KEEP_ALIVE_INTERVAL=60 # Keep-alive interval in seconds (default: 60, use "none"/"disabled" to disable)

FORGE_HTTP_KEEP_ALIVE_TIMEOUT=10 # Keep-alive timeout in seconds (default: 10)

FORGE_HTTP_KEEP_ALIVE_WHILE_IDLE=true # Keep-alive while idle (default: true)

FORGE_HTTP_ACCEPT_INVALID_CERTS=false # Accept invalid certificates (default: false) - USE WITH CAUTION

FORGE_HTTP_ROOT_CERT_PATHS=/path/to/cert1.pem,/path/to/cert2.crt # Paths to root certificate files (PEM, CRT, CER format), multiple paths separated by commas

⚠️ Security Warning: Setting FORGE_HTTP_ACCEPT_INVALID_CERTS=true disables SSL/TLS certificate verification, which can expose you to man-in-the-middle attacks. Only use this in development environments or when you fully trust the network and endpoints.
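To show what the retry settings in the Retry Configuration block mean in practice, this Python sketch reads the FORGE_RETRY_* variables with their documented defaults and computes a delay schedule. It assumes plain exponential backoff, where the delay before retry n is initial × factor^n, with no jitter and no upper cap. Forge's actual implementation may differ, and the helper names are hypothetical. With the defaults, the schedule is 1000 ms, 2000 ms and 4000 ms.

```python
import os
from typing import Mapping


def retry_settings(env: Mapping[str, str]) -> tuple[int, float, int, set[int]]:
    """Read the FORGE_RETRY_* variables, falling back to the documented defaults."""
    initial_ms = int(env.get("FORGE_RETRY_INITIAL_BACKOFF_MS", "1000"))
    factor = float(env.get("FORGE_RETRY_BACKOFF_FACTOR", "2"))
    max_attempts = int(env.get("FORGE_RETRY_MAX_ATTEMPTS", "3"))
    codes = env.get("FORGE_RETRY_STATUS_CODES", "429,500,502,503,504")
    # Comma-separated list; int() tolerates surrounding spaces such as " 503".
    status_codes = {int(c) for c in codes.split(",") if c.strip()}
    return initial_ms, factor, max_attempts, status_codes


def backoff_schedule(initial_ms: int, factor: float, max_attempts: int) -> list[float]:
    """Delay in ms before each retry, assuming plain exponential backoff."""
    return [initial_ms * factor**n for n in range(max_attempts)]


if __name__ == "__main__":
    initial_ms, factor, attempts, codes = retry_settings(os.environ)
    print("retry delays (ms):", backoff_schedule(initial_ms, factor, attempts))
    print("retried status codes:", sorted(codes))
```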

API Configuration

Override default API endpoints and provider/model settings:

# .env

FORGE_API_URL=https://api.forgecode.dev # Custom Forge API URL (default: https://api.forgecode.dev)

FORGE_OVERRIDE_MODEL=claude-3.7-sonnet # Override model for all providers (takes precedence over configured models)

FORGE_OVERRIDE_PROVIDER=anthropic # Override default provider (takes precedence over configured provider)

FORGE_WORKSPACE_SERVER_URL=http://localhost:8080 # URL for the indexing server (default: https://api.forgecode.dev/)

Tool Configuration

Configure tool call settings:

# .env

FORGE_TOOL_TIMEOUT=300 # Maximum execution time in seconds for a tool before it is terminated to prevent hanging the session. (default: 300)

FORGE_MAX_IMAGE_SIZE=262144 # Maximum image file size in bytes for read_image operations (default: 262144 - 256 KB)

FORGE_DUMP_AUTO_OPEN=false # Automatically open dump files in browser (default: false)

FORGE_DEBUG_REQUESTS=/path/to/debug/requests.json # Write debug HTTP request files to specified path (supports absolute and relative paths)

ZSH Plugin Configuration

Configure the ZSH plugin behavior:

# .env

FORGE_BIN=forge # Command to use for forge operations (default: "forge")

The FORGE_BIN environment variable allows you to customize the command used by the ZSH plugin when transforming #-prefixed commands. If not set, it defaults to "forge".
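This minimal sketch of the FORGE_BIN lookup is useful for checking which binary the plugin would invoke on your machine. The helper names are hypothetical, and treating an empty FORGE_BIN the same as an unset one is an assumption.

```python
import os
import shutil
from typing import Mapping, Optional


def resolve_forge_bin(env: Mapping[str, str]) -> str:
    """Command the plugin would use: FORGE_BIN if set and non-empty, else "forge"."""
    return env.get("FORGE_BIN") or "forge"


def forge_bin_path(env: Mapping[str, str]) -> Optional[str]:
    """Absolute path of that command on PATH, or None if it cannot be found."""
    return shutil.which(resolve_forge_bin(env))


if __name__ == "__main__":
    print(forge_bin_path(os.environ) or "forge command not found on PATH")
```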

Display Configuration

Configure display options for the Forge UI and ZSH theme:

# .env

FORGE_CURRENCY_SYMBOL="$" # Currency symbol for cost display in ZSH theme (default: "$")

FORGE_CURRENCY_CONVERSION_RATE=1.0 # Conversion rate for currency display (default: 1.0)

NERD_FONT=1 # Enable Nerd Font icons in ZSH theme (default: auto-detected, set to "1" or "true" to enable, "0" or "false" to disable)

USE_NERD_FONT=1 # Alternative variable for enabling Nerd Font icons (same behavior as NERD_FONT)

The FORGE_CURRENCY_SYMBOL and FORGE_CURRENCY_CONVERSION_RATE variables control how costs are displayed in the ZSH theme right prompt. Use these to customize the currency display for your region or preferred currency.
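As a worked example of the display settings, the sketch below assumes costs are computed in the default currency ($). It multiplies the cost by FORGE_CURRENCY_CONVERSION_RATE and prefixes FORGE_CURRENCY_SYMBOL, so a cost of 0.50 with symbol "€" and rate 0.92 renders as "€0.46". Rounding to two decimals and checking NERD_FONT before USE_NERD_FONT are assumptions. The helpers are hypothetical, not Forge's code.

```python
from typing import Mapping, Optional


def format_cost(cost: float, env: Mapping[str, str]) -> str:
    """Render a cost using FORGE_CURRENCY_SYMBOL and FORGE_CURRENCY_CONVERSION_RATE."""
    symbol = env.get("FORGE_CURRENCY_SYMBOL", "$")
    rate = float(env.get("FORGE_CURRENCY_CONVERSION_RATE", "1.0"))
    return f"{symbol}{cost * rate:.2f}"  # two decimals is an assumed display precision


def nerd_font_setting(env: Mapping[str, str]) -> Optional[bool]:
    """True/False when NERD_FONT or USE_NERD_FONT is set explicitly, None to auto-detect."""
    for name in ("NERD_FONT", "USE_NERD_FONT"):
        value = env.get(name, "").strip().lower()
        if value in ("1", "true"):
            return True
        if value in ("0", "false"):
            return False
    return None
```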

System Configuration

System-level environment variables (usually set automatically):

# .env

FORGE_MAX_SEARCH_RESULT_BYTES=10240 # Maximum bytes for search results (default: 10240 - 10 KB)

FORGE_HISTORY_FILE=/path/to/history # Custom path for Forge history file (default: uses system default location)

FORGE_BANNER="Your custom banner text" # Custom banner text to display on startup (default: Forge ASCII art)

FORGE_MAX_CONVERSATIONS=100 # Maximum number of conversations to show in list (default: 100)

FORGE_MAX_LINE_LENGTH=2000 # Maximum characters per line for file read operations (default: 2000)

FORGE_STDOUT_MAX_LINE_LENGTH=2000 # Maximum characters per line for shell output (default: 2000)

SHELL=/bin/zsh # Shell to use for command execution (Unix/Linux/macOS)

COMSPEC=cmd.exe # Command processor to use (Windows)

Semantic Search Configuration

Configure semantic search behavior for code understanding:

# .env

FORGE_SEM_SEARCH_LIMIT=200 # Maximum number of results to return from initial vector search (default: 200)

FORGE_SEM_SEARCH_TOP_K=20 # Top-k parameter for relevance filtering during semantic search (default: 20)

Logging Configuration

Configure logging verbosity and output:

# .env

FORGE_LOG=forge=info # Log filter level (default: forge=debug when tracking disabled, forge=info when tracking enabled)

The FORGE_LOG variable controls the logging level for Forge's internal operations using the standard tracing filter syntax. Common values:

- forge=error - Only errors

- forge=warn - Warnings and errors

- forge=info - Informational messages (default when tracking enabled)

- forge=debug - Debug information (default when tracking disabled)

- forge=trace - Detailed tracing
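In the tracing filter syntax, comma-separated `target=level` directives can be combined, and a bare level applies to every target. The simplified, hypothetical parser below splits those directives to show how a value such as warn,forge=debug is read. It also encodes the documented default. Real tracing filters additionally support span and field selectors, which this sketch does not handle.

```python
def default_log_filter(tracking_enabled: bool) -> str:
    """Documented default for FORGE_LOG when the variable is not set."""
    return "forge=info" if tracking_enabled else "forge=debug"


def parse_directives(spec: str) -> dict[str, str]:
    """Split a comma-separated `target=level` filter string into a dict.

    Simplified: a bare level without `target=` is stored under the key "*".
    """
    directives: dict[str, str] = {}
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        target, sep, level = part.partition("=")
        if sep:
            directives[target] = level.lower()
        else:
            directives["*"] = target.lower()  # bare level: global default
    return directives
```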

The forge.yaml file supports several advanced configuration options that let you customize Forge's behavior.

Custom Rules

Add your own guidelines that all agents should follow when generating responses.

# forge.yaml

custom_rules: |
  1. Always add comprehensive error handling to any code you write.
  2. Include unit tests for all new functions.
  3. Follow our team's naming convention: camelCase for variables, PascalCase for classes.

Commands

Define custom commands as shortcuts for repetitive prompts:

# forge.yaml

commands:
  - name: "refactor"
    description: "Refactor selected code"
    prompt: "Please refactor this code to improve readability and performance"

Model

Specify the default AI model to use for all agents in the workflow.

# forge.yaml

model: "claude-3.7-sonnet"

Max Walker Depth

Control how deeply Forge traverses your project directory structure when gathering context.

# forge.yaml

max_walker_depth: 3 # Limit directory traversal to 3 levels deep

Temperature

Adjust the creativity and randomness in AI responses. Lower values (0.0-0.3) produce more focused, deterministic outputs, while higher values (0.7-2.0) generate more diverse and creative results.

# forge.yaml

temperature: 0.7 # Balanced creativity and focus

Tool Max Failure Limit

Control how many times a tool can fail before Forge forces completion to prevent infinite retry loops. This helps avoid situations where an agent gets stuck repeatedly trying the same failing operation.

# forge.yaml

max_tool_failure_per_turn: 3 # Allow up to 3 failures per tool before forcing completion

Set to a higher value if you want more retry attempts, or lower if you want faster failure detection.
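The sketch below models the documented behavior under three stated assumptions. Failures are counted separately per tool, the counts reset at the start of each turn, and completion is forced once a tool reaches the limit. `ToolFailureGuard` is a hypothetical name, not a Forge type.

```python
from collections import Counter


class ToolFailureGuard:
    """Count failures per tool within one turn and signal when the limit is hit."""

    def __init__(self, max_tool_failure_per_turn: int = 3) -> None:
        self.limit = max_tool_failure_per_turn
        self.failures = Counter()  # tool name -> failures in the current turn

    def record_failure(self, tool: str) -> bool:
        """Record one failure; return True when the agent should be forced to complete."""
        self.failures[tool] += 1
        return self.failures[tool] >= self.limit

    def new_turn(self) -> None:
        """Limits apply per turn, so clear the counts when a new turn starts."""
        self.failures.clear()
```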

Max Requests Per Turn

Limit the maximum number of requests an agent can make in a single conversation turn. This prevents runaway conversations and helps control API usage and costs.

# forge.yaml

max_requests_per_turn: 50 # Allow up to 50 requests per turn

When this limit is reached, Forge will:

- Ask you if you wish to continue

- If you respond with 'Yes', it will continue the conversation

- If you respond with 'No', it will end the conversation
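Here is a minimal Python sketch of that flow. It assumes that answering Yes grants another full batch of max_requests_per_turn requests; the README does not say how many. The `step` and `ask_continue` callbacks are hypothetical stand-ins for one agent request and for the confirmation prompt.

```python
from typing import Callable


def run_turn(
    step: Callable[[], bool],
    ask_continue: Callable[[], bool],
    max_requests_per_turn: int = 50,
) -> int:
    """Drive one turn and return the number of requests made.

    `step` performs one request and returns True when the agent has finished.
    When the limit is reached, `ask_continue` returns True for Yes, False for No.
    """
    requests = 0
    budget = max_requests_per_turn
    while True:
        if requests == budget:
            if not ask_continue():
                return requests  # No: end the conversation
            budget += max_requests_per_turn  # Yes: allow another batch (assumed size)
        requests += 1
        if step():
            return requests
```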

Model Context Protocol (MCP)

The MCP feature allows AI agents to communicate with external tools and services. This implementation follows Anthropic's Model Context Protocol design.

Configure MCP servers using the CLI:

# List all MCP servers

forge mcp list

# Add a new server

forge mcp add

# Add a server using JSON format

forge mcp add-json

# Get server details

forge mcp get

# Remove a server

forge mcp remove

Or manually create a .mcp.json file with the following structure:

{
  "mcpServers": {
    "server_name": {
      "command": "command_to_execute",
      "args": ["arg1", "arg2"],
      "env": { "ENV_VAR": "value" }
    },
    "another_server": {
      "url": "http://localhost:3000/events"
    }
  }
}

MCP configurations are read from two locations (in order of precedence):

- Local configuration (project-specific)

- User configuration (user-specific)
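If you generate .mcp.json from a script, the sketch below builds entries in the two shapes of the .mcp.json structure (command/args/env and url). It then merges a user-level and a project-level config and writes the result. Merging by server name, with the local entry winning on a clash, is an assumption based on the precedence order; Forge may resolve conflicts differently. All helper names are hypothetical.

```python
import json
from pathlib import Path
from typing import Any, Optional


def stdio_server(command: str, args: list[str], env: Optional[dict[str, str]] = None) -> dict[str, Any]:
    """Entry for a server launched as a local process (the "command" form)."""
    entry: dict[str, Any] = {"command": command, "args": args}
    if env:
        entry["env"] = env
    return entry


def url_server(url: str) -> dict[str, Any]:
    """Entry for a server reached over HTTP (the "url" form)."""
    return {"url": url}


def merge_configs(user: dict[str, Any], local: dict[str, Any]) -> dict[str, Any]:
    """Combine user and local configs; a local entry replaces a same-named user entry."""
    servers = dict(user.get("mcpServers", {}))
    servers.update(local.get("mcpServers", {}))
    return {"mcpServers": servers}


def write_mcp_json(config: dict[str, Any], directory: Path) -> Path:
    """Write the config as .mcp.json inside `directory` and return its path."""
    path = directory / ".mcp.json"
    path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return path
```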

MCP can be used for various integrations:

- Web browser automation

- External API interactions

- Tool integration

- Custom service connections

MCP tools can be used as part of multi-agent workflows, allowing specialized agents to interact with external systems as part of a collaborative problem-solving approach.

For comprehensive documentation on all features and capabilities, please visit the documentation site.

Join our vibrant Discord community to connect with other Forge users and contributors, get help with your projects, share ideas, and provide feedback!

Your support drives Forge's continued evolution! By starring our GitHub repository, you:

- Help others discover this powerful tool 🔍

- Motivate our development team 💪

- Enable us to prioritize new features 🛠️

- Strengthen our open-source community 🌱


Alternative AI tools for forge

Similar Open Source Tools


text-extract-api

The text-extract-api is a powerful tool that allows users to convert images, PDFs, or Office documents to Markdown text or JSON structured documents with high accuracy. It is built using FastAPI and utilizes Celery for asynchronous task processing, with Redis for caching OCR results. The tool provides features such as PDF/Office to Markdown and JSON conversion, improving OCR results with LLama, removing Personally Identifiable Information from documents, distributed queue processing, caching using Redis, switchable storage strategies, and a CLI tool for task management. Users can run the tool locally or on cloud services, with support for GPU processing. The tool also offers an online demo for testing purposes.

llm-vscode

llm-vscode is an extension designed for all things LLM, utilizing llm-ls as its backend. It offers features such as code completion with 'ghost-text' suggestions, the ability to choose models for code generation via HTTP requests, ensuring prompt size fits within the context window, and code attribution checks. Users can configure the backend, suggestion behavior, keybindings, llm-ls settings, and tokenization options. Additionally, the extension supports testing models like Code Llama 13B, Phind/Phind-CodeLlama-34B-v2, and WizardLM/WizardCoder-Python-34B-V1.0. Development involves cloning llm-ls, building it, and setting up the llm-vscode extension for use.

flapi

flAPI is a powerful service that automatically generates read-only APIs for datasets by utilizing SQL templates. Built on top of DuckDB, it offers features like automatic API generation, support for Model Context Protocol (MCP), connecting to multiple data sources, caching, security implementation, and easy deployment. The tool allows users to create APIs without coding and enables the creation of AI tools alongside REST endpoints using SQL templates. It supports unified configuration for REST endpoints and MCP tools/resources, concurrent servers for REST API and MCP server, and automatic tool discovery. The tool also provides DuckLake-backed caching for modern, snapshot-based caching with features like full refresh, incremental sync, retention, compaction, and audit logs.

python-tgpt

Python-tgpt is a Python package that enables seamless interaction with over 45 free LLM providers without requiring an API key. It also provides image generation capabilities. The name _python-tgpt_ draws inspiration from its parent project tgpt, which operates on Golang. Through this Python adaptation, users can effortlessly engage with a number of free LLMs available, fostering a smoother AI interaction experience.

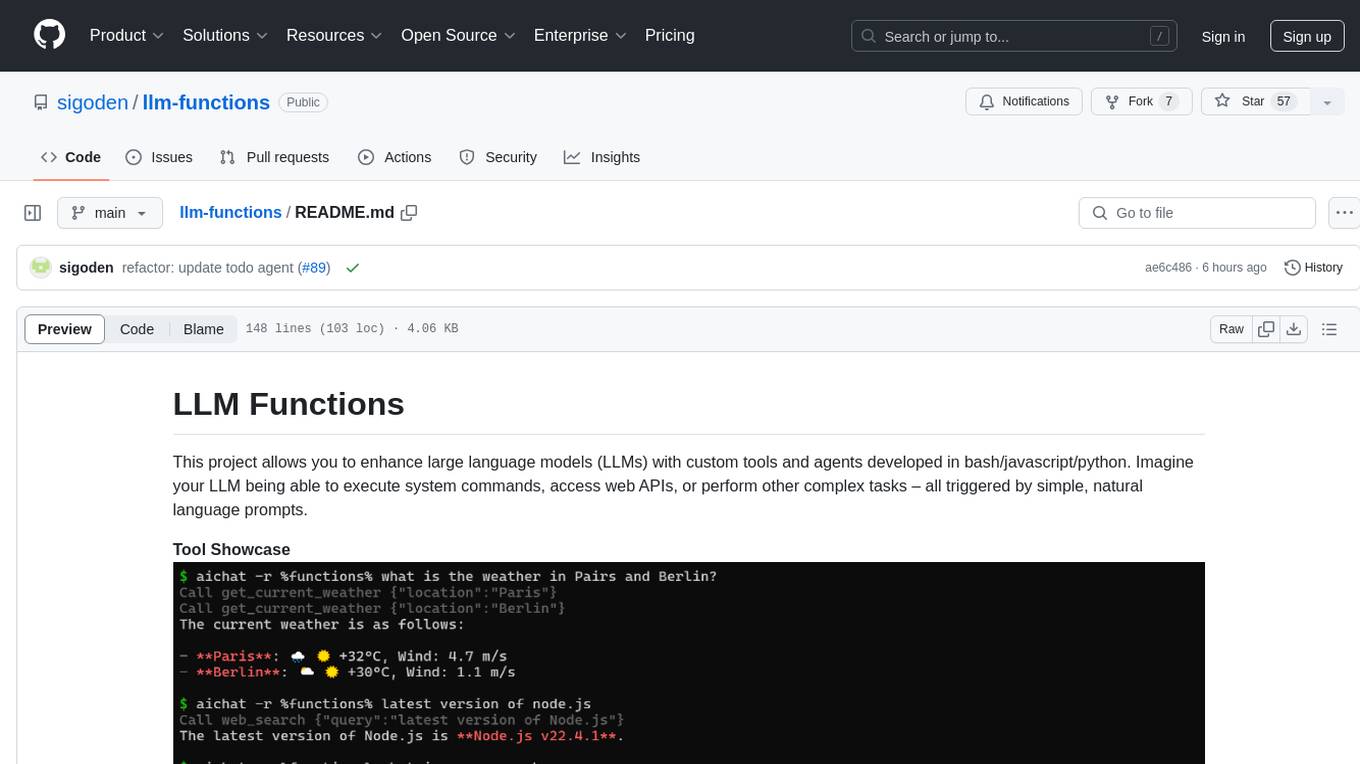

llm-functions

LLM Functions is a project that enables the enhancement of large language models (LLMs) with custom tools and agents developed in bash, javascript, and python. Users can create tools for their LLM to execute system commands, access web APIs, or perform other complex tasks triggered by natural language prompts. The project provides a framework for building tools and agents, with tools being functions written in the user's preferred language and automatically generating JSON declarations based on comments. Agents combine prompts, function callings, and knowledge (RAG) to create conversational AI agents. The project is designed to be user-friendly and allows users to easily extend the capabilities of their language models.

consult-llm-mcp

Consult LLM MCP is an MCP server that enables users to consult powerful AI models like GPT-5.2, Gemini 3.0 Pro, and DeepSeek Reasoner for complex problem-solving. It supports multi-turn conversations, direct queries with optional file context, git changes inclusion for code review, comprehensive logging with cost estimation, and various CLI modes for Gemini and Codex. The tool is designed to simplify the process of querying AI models for assistance in resolving coding issues and improving code quality.

k8sgpt

K8sGPT is a tool for scanning your Kubernetes clusters, diagnosing, and triaging issues in simple English. It has SRE experience codified into its analyzers and helps to pull out the most relevant information to enrich it with AI.

shortest

Shortest is an AI-powered natural language end-to-end testing framework built on Playwright. It provides a seamless testing experience by allowing users to write tests in natural language and execute them using Anthropic Claude API. The framework also offers GitHub integration with 2FA support, making it suitable for testing web applications with complex authentication flows. Shortest simplifies the testing process by enabling users to run tests locally or in CI/CD pipelines, ensuring the reliability and efficiency of web applications.

models.dev

Models.dev is an open-source database providing detailed specifications, pricing, and capabilities of various AI models. It serves as a centralized platform for accessing information on AI models, allowing users to contribute and utilize the data through an API. The repository contains data stored in TOML files, organized by provider and model, along with SVG logos. Users can contribute by adding new models following specific guidelines and submitting pull requests for validation. The project aims to maintain an up-to-date and comprehensive database of AI model information.

twitter-automation-ai

Advanced Twitter Automation AI is a modular Python-based framework for automating Twitter at scale. It supports multiple accounts, robust Selenium automation with optional undetected Chrome + stealth, per-account proxies and rotation, structured LLM generation/analysis, community posting, and per-account metrics/logs. The tool allows seamless management and automation of multiple Twitter accounts, content scraping, publishing, LLM integration for generating and analyzing tweet content, engagement automation, configurable automation, browser automation using Selenium, modular design for easy extension, comprehensive logging, community posting, stealth mode for reduced fingerprinting, per-account proxies, LLM structured prompts, and per-account JSON summaries and event logs for observability.

llama.vim

llama.vim is a plugin that provides local LLM-assisted text completion for Vim users. It offers features such as auto-suggest on cursor movement, manual suggestion toggling, suggestion acceptance with Tab and Shift+Tab, control over text generation time, context configuration, ring context with chunks from open and edited files, and performance stats display. The plugin requires a llama.cpp server instance to be running and supports FIM-compatible models. It aims to be simple, lightweight, and provide high-quality and performant local FIM completions even on consumer-grade hardware.

shell-pilot

Shell-pilot is a simple, lightweight shell script designed to interact with various AI models such as OpenAI, Ollama, Mistral AI, LocalAI, ZhipuAI, Anthropic, Moonshot, and Novita AI from the terminal. It enhances intelligent system management without any dependencies, offering features like setting up a local LLM repository, using official models and APIs, viewing history and session persistence, passing input prompts with pipe/redirector, listing available models, setting request parameters, generating and running commands in the terminal, easy configuration setup, system package version checking, and managing system aliases.

repomix

Repomix is a powerful tool that packs your entire repository into a single, AI-friendly file. It is designed to format your codebase for easy understanding by AI tools like Large Language Models (LLMs), Claude, ChatGPT, and Gemini. Repomix offers features such as AI optimization, token counting, simplicity in usage, customization options, Git awareness, and security-focused checks using Secretlint. It allows users to pack their entire repository or specific directories/files using glob patterns, and even supports processing remote Git repositories. The tool generates output in plain text, XML, or Markdown formats, with options for including/excluding files, removing comments, and performing security checks. Repomix also provides a global configuration option, custom instructions for AI context, and a security check feature to detect sensitive information in files.

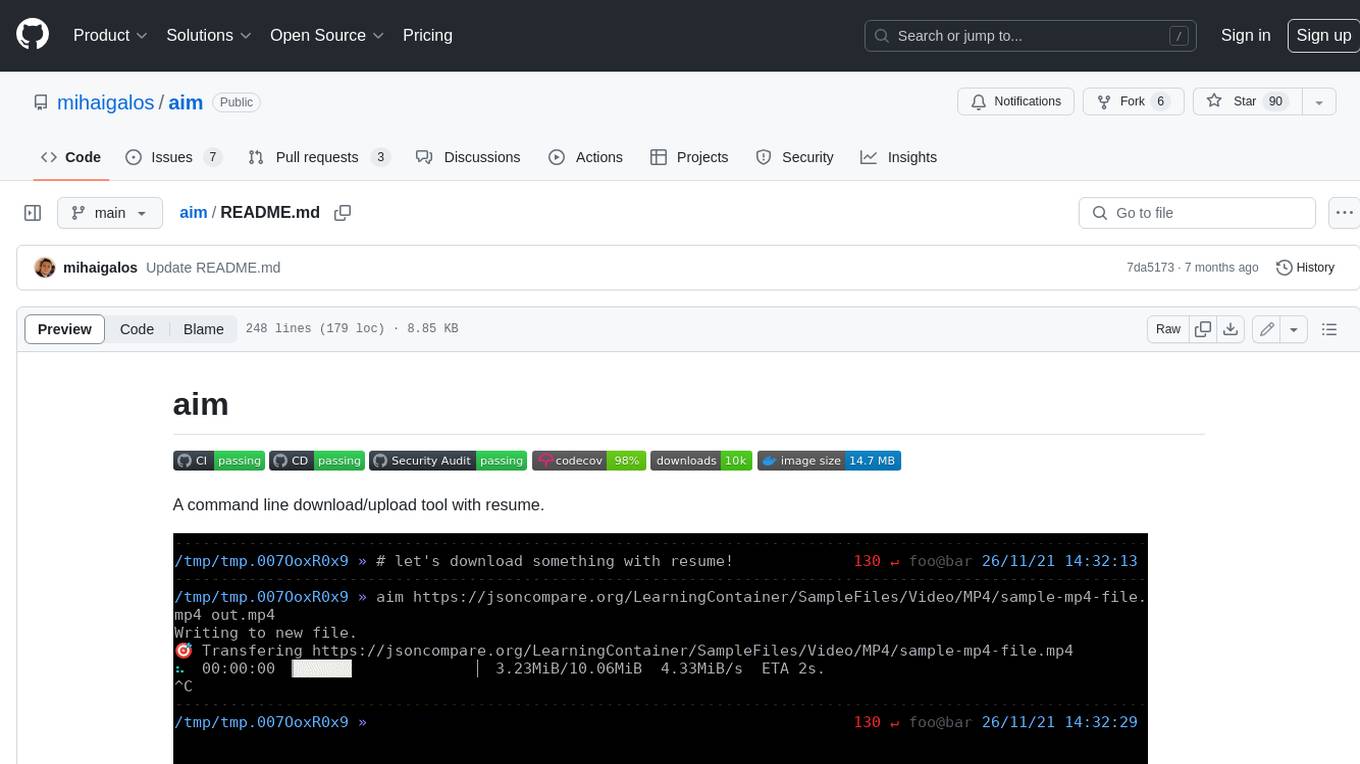

aim

Aim is a command-line tool for downloading and uploading files with resume support. It supports various protocols including HTTP, FTP, SFTP, SSH, and S3. Aim features an interactive mode for easy navigation and selection of files, as well as the ability to share folders over HTTP for easy access from other devices. Additionally, it offers customizable progress indicators and output formats, and can be integrated with other commands through piping. Aim can be installed via pre-built binaries or by compiling from source, and is also available as a Docker image for platform-independent usage.

chat-ui

A chat interface using open source models, e.g. OpenAssistant or Llama. It is a SvelteKit app and it powers the HuggingChat app on hf.co/chat.

For similar tasks


bit

Bit is a build system that organizes source code into composable components, enabling the creation of reliable, scalable, and consistent applications. It supports the creation of reusable UI components, standard building blocks, shell applications, and atomic deployments. Bit is compatible with various tools in the JavaScript ecosystem and offers official dev environments for popular frameworks. It can be used in different codebase structures like monorepos or polyrepos, and even without repositories. Users can install Bit, create shell applications, compose components, release and deploy components, and modernize existing projects using Bit Cloud or self-hosted scopes.

indie-hacker-tools-plus

Indie Hacker Tools Plus is a curated repository of essential tools and technology stacks for independent developers. The repository aims to help developers enhance efficiency, save costs, and mitigate risks by using popular and validated tools. It provides a collection of tools recognized by the industry to empower developers with the most refined technical support. Developers can contribute by submitting articles, software, or resources through issues or pull requests.

magma

Magma is a powerful and flexible framework for building scalable and efficient machine learning pipelines. It provides a simple interface for creating complex workflows, enabling users to easily experiment with different models and data processing techniques. With Magma, users can streamline the development and deployment of machine learning projects, saving time and resources.

metaflow

Metaflow is a user-friendly library designed to assist scientists and engineers in developing and managing real-world data science projects. Initially created at Netflix, Metaflow aimed to enhance the productivity of data scientists working on diverse projects ranging from traditional statistics to cutting-edge deep learning. For further information, refer to Metaflow's website and documentation.

ck

Collective Mind (CM) is a collection of portable, extensible, technology-agnostic and ready-to-use automation recipes with a human-friendly interface (aka CM scripts) to unify and automate all the manual steps required to compose, run, benchmark and optimize complex ML/AI applications on any platform with any software and hardware: see online catalog and source code. CM scripts require Python 3.7+ with minimal dependencies and are continuously extended by the community and MLCommons members to run natively on Ubuntu, MacOS, Windows, RHEL, Debian, Amazon Linux and any other operating system, in a cloud or inside automatically generated containers while keeping backward compatibility - please don't hesitate to report encountered issues here and contact us via public Discord Server to help this collaborative engineering effort! CM scripts were originally developed based on the following requirements from the MLCommons members to help them automatically compose and optimize complex MLPerf benchmarks, applications and systems across diverse and continuously changing models, data sets, software and hardware from Nvidia, Intel, AMD, Google, Qualcomm, Amazon and other vendors:

- must work out of the box with the default options and without the need to edit some paths, environment variables and configuration files;

- must be non-intrusive, easy to debug and must reuse existing user scripts and automation tools (such as cmake, make, ML workflows, python poetry and containers) rather than substituting them;

- must have a very simple and human-friendly command line with a Python API and minimal dependencies;

- must require minimal or zero learning curve by using plain Python, native scripts, environment variables and simple JSON/YAML descriptions instead of inventing new workflow languages;

- must have the same interface to run all automations natively, in a cloud or inside containers.

CM scripts were successfully validated by MLCommons to modularize MLPerf inference benchmarks and help the community automate more than 95% of all performance and power submissions in the v3.1 round across more than 120 system configurations (models, frameworks, hardware) while reducing development and maintenance costs.

client-python

The Mistral Python Client is a tool inspired by cohere-python that allows users to interact with the Mistral AI API, giving Python code access to the AI capabilities offered by Mistral. Users can install the client with pip and manage dependencies with poetry. The client includes examples demonstrating how to use the API for various tasks, such as chat interactions. To get started, users need to obtain a Mistral API key and set it as an environment variable. Overall, the Mistral Python Client simplifies the integration of Mistral AI services into Python applications.
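As a hedged sketch of the API-key setup, the snippet below reads the key from the MISTRAL_API_KEY environment variable and builds an authenticated request for Mistral's REST chat-completions endpoint using only the Python standard library. It deliberately does not use the client package, so none of the client's own class or method names are assumed; the helper build_chat_request and the default model name are illustrative choices.

```python
import json
import os
import urllib.request

# Mistral's REST chat-completions endpoint (the API that the client wraps).
MISTRAL_CHAT_URL = "https://api.mistral.ai/v1/chat/completions"


def build_chat_request(prompt, model="mistral-small-latest"):
    """Build (but do not send) an authenticated chat request.

    Hypothetical helper: reads the API key from the MISTRAL_API_KEY
    environment variable, as the setup instructions suggest.
    """
    api_key = os.environ.get("MISTRAL_API_KEY")
    if not api_key:
        raise RuntimeError("set the MISTRAL_API_KEY environment variable first")
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        MISTRAL_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
            "Accept": "application/json",
        },
        method="POST",
    )
```

Passing the request to urllib.request.urlopen sends it; the JSON reply carries the generated text in choices[0].message.content. In application code the official client remains the more convenient route.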

ComposeAI

ComposeAI is an Android & iOS application similar to ChatGPT, built using Compose Multiplatform. Its technology stack also includes Material 3, OpenAI Kotlin, Voyager, Koin, SQLDelight, Multiplatform Settings, Coil3, Napier, BuildKonfig, Firebase Analytics & Crashlytics, and AdMob. The app architecture follows Google's latest guidelines. Users need to set up their own OpenAI API key before using the app.

For similar jobs

pro-chat

ProChat is a component library focused on quickly building large language model chat interfaces. It lets developers create rich, dynamic, and intuitive chat interfaces with features like automatic chat caching, streamlined conversations, message editing tools, auto-rendered Markdown, and programmatic controls. Its roadmap also includes customized dialogue rendering, enhanced request parameters, personalized error handling, expanded documentation, and atomic component design.

bumpgen

bumpgen is a tool designed to automatically upgrade TypeScript / TSX dependencies and make the code changes needed to fix any breaking issues that arise. It uses an abstract syntax tree to analyze code relationships, type definitions for external methods, and a plan graph (a directed acyclic graph, DAG) to execute changes in the correct order. The tool is currently limited to TypeScript and TSX but plans to support other strongly typed languages in the future. It aims to simplify upgrading dependencies and handling the code changes those upgrades require.
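The plan graph amounts to ordering changes so that each one runs only after the changes it depends on. A minimal sketch of that ordering with Kahn's topological sort follows; the graph shape (each change mapped to its prerequisites), the function name execution_order and the example change labels are hypothetical and do not reflect bumpgen's internal data structures.

```python
from collections import deque


def execution_order(plan_graph):
    """Topologically sort a plan graph with Kahn's algorithm.

    Hypothetical shape: plan_graph maps each change to the changes that must
    run before it. Raises ValueError if the graph has a cycle (is not a DAG).
    """
    # Collect every node, including prerequisites without an entry of their own.
    nodes = set(plan_graph)
    for prereqs in plan_graph.values():
        nodes.update(prereqs)
    # Count unmet prerequisites and record which changes wait on each node.
    remaining = {node: 0 for node in nodes}
    dependents = {node: [] for node in nodes}
    for node, prereqs in plan_graph.items():
        for prereq in set(prereqs):
            remaining[node] += 1
            dependents[prereq].append(node)
    # Start with changes that have no prerequisites; sorting keeps output deterministic.
    ready = deque(sorted(n for n in nodes if remaining[n] == 0))
    order = []
    while ready:
        node = ready.popleft()
        order.append(node)
        for dep in sorted(dependents[node]):
            remaining[dep] -= 1
            if remaining[dep] == 0:
                ready.append(dep)
    if len(order) != len(nodes):
        raise ValueError("plan graph contains a cycle")
    return order


# Hypothetical plan: fix the changed type first, then its callers, then their tests.
plan = {
    "update call in api.ts": ["fix type in types.ts"],
    "update test in api.test.ts": ["update call in api.ts"],
    "fix import in index.ts": [],
}
print(execution_order(plan))
```

The final length check turns a cyclic plan, which has no valid execution order, into an explicit error instead of a silently partial result.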

monacopilot

Monacopilot is a powerful and customizable AI auto-completion plugin for the Monaco Editor. It supports multiple AI providers such as Anthropic, OpenAI, Groq, and Google, providing real-time code completions with an efficient caching system. The plugin offers context-aware suggestions, customizable completion behavior, and a framework-agnostic design. Users can also customize model support and trigger completions manually. Monacopilot is designed to enhance coding productivity by providing accurate and contextually appropriate completions.
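The description does not say how Monacopilot's cache works, so the following is only a generic sketch of caching completions: a small least-recently-used (LRU) cache keyed by the text around the cursor, consulted before calling a model. The class CompletionCache, the context window size, the complete helper and the fetch callback are all hypothetical.

```python
from collections import OrderedDict


class CompletionCache:
    """Hypothetical LRU cache mapping editor context to a fetched completion."""

    def __init__(self, capacity=100):
        self.capacity = capacity
        self._entries = OrderedDict()

    @staticmethod
    def _key(before, after, window=200):
        # Assume only text near the cursor matters; trim context to a window.
        return (before[-window:], after[:window])

    def get(self, before, after):
        key = self._key(before, after)
        if key not in self._entries:
            return None
        self._entries.move_to_end(key)  # mark as most recently used
        return self._entries[key]

    def put(self, before, after, completion):
        key = self._key(before, after)
        self._entries[key] = completion
        self._entries.move_to_end(key)
        if len(self._entries) > self.capacity:
            self._entries.popitem(last=False)  # evict the least recently used entry


def complete(cache, before, after, fetch):
    """Return a cached completion if present, otherwise call fetch() and cache it."""
    cached = cache.get(before, after)
    if cached is not None:
        return cached
    completion = fetch(before, after)
    cache.put(before, after, completion)
    return completion
```

Bounding the cache by capacity keeps memory predictable during long editing sessions, while repeated requests for the same context skip the round trip to the model provider.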

pear-landing-page

PearAI Landing Page is the website for PearAI, an open-source AI-powered code editor managed by Nang and Pan. It is built with Next.js, Vercel, Tailwind CSS, and TypeScript. The project requires setting up environment variables for proper configuration. Users can run the project locally by starting the development server and visiting the specified URL in the browser. Recommended extensions include Prettier, ESLint, and JavaScript and TypeScript Nightly. Contributions to the project are welcome.

magic-resume

Magic Resume is a modern online resume editor that makes creating professional resumes simple and fun. Built on Next.js 14+ and Framer Motion, it offers smooth animations, custom themes, responsive design, dark mode, PDF export, real-time preview, auto-save, and local storage. The technology stack includes Next.js 14+, TypeScript, Framer Motion, Tailwind CSS, Shadcn/ui, and Lucide Icons.

forge

Forge is an open-source, AI-enabled pair programmer supporting Claude, GPT, O Series, Grok, Deepseek, Gemini and 300+ models. It is a comprehensive coding agent that integrates AI capabilities with your development environment: it can explain how parts of a codebase work, suggest and scaffold new features, and help diagnose errors. It is started with npx forgecode@latest, and on first run it guides you through setting up your AI provider credentials.

eslint-config-airbnb-extended

A powerful ESLint configuration extending the popular Airbnb style guide, with added support for TypeScript. It provides a one-to-one replacement for the old Airbnb ESLint configs, TypeScript support, customizable settings, pre-configured rules, and a CLI utility for quick setup. The 'eslint-config-airbnb-extended' package fully supports TypeScript, enforcing consistent coding standards across JavaScript and TypeScript files, and the 'create-airbnb-x-config' tool automates setting up the package so that ESLint rules are applied correctly across JavaScript and TypeScript code.

ultracite

Ultracite is an AI-ready formatter built in Rust for fast performance, providing a robust linting and formatting experience for Next.js, React, and TypeScript projects. It enforces strict type checking, ensures code style consistency, and integrates with AI models like GitHub Copilot. With zero configuration needed, Ultracite automatically formats code, fixes lint issues, and improves accessibility on save, allowing developers to focus on coding and shipping without interruptions.