Toolify

Empower any LLM with function calling capabilities.

Stars: 67

Toolify is a middleware proxy that empowers Large Language Models (LLMs) and OpenAI API interfaces by enabling function calling capabilities. It acts as an intermediary between applications and LLM APIs, injecting prompts and parsing tool calls from the model's response. Key features include universal function calling, multiple function calls support, flexible initiation, compatibility with

README:

Empower any LLM with function calling capabilities.

Toolify is a middleware proxy designed to inject OpenAI-compatible function calling capabilities into Large Language Models that do not natively support it, or into OpenAI API interfaces that do not provide this functionality. It acts as an intermediary between your application and the upstream LLM API, injecting necessary prompts and parsing tool calls from the model's response.

- Universal Function Calling: Enables function calling for LLMs or APIs that adhere to the OpenAI format but lack native support.

- Multiple Function Calls: Supports executing multiple functions simultaneously in a single response.

- Flexible Initiation: Allows function calls to be initiated at any stage of the model's output.

-

Think Tag Compatibility: Seamlessly handles

<think>tags, ensuring they don't interfere with tool parsing. - Streaming Support: Fully supports streaming responses, detecting and parsing function calls on the fly.

- Multi-Service Routing: Routes requests to different upstream services based on the requested model name.

- Client Authentication: Secures the middleware with configurable client API keys.

- Enhanced Context Awareness: Provides LLMs with the details (name and parameters) of previous tool calls alongside the execution results, improving contextual understanding.

-

Intercept Request: Toolify intercepts the

chat/completionsrequest from the client, which includes the desired tools. - Inject Prompt: It generates a specific system prompt instructing the LLM how to output function calls using a structured XML format and a unique trigger signal.

- Proxy to Upstream: The modified request is sent to the configured upstream LLM service.

- Parse Response: Toolify analyzes the upstream response. If the trigger signal is detected, it parses the XML structure to extract the function calls.

-

Format Response: It transforms the parsed tool calls into the standard OpenAI

tool_callsformat and sends it back to the client.

You can run Toolify using Docker Compose or Python directly.

This is the recommended way for easy deployment.

- Docker and Docker Compose installed.

-

Clone the repository:

git clone https://github.com/funnycups/toolify.git cd toolify -

Configure the application:

Copy the example configuration file and edit it:

cp config.example.yaml config.yaml

Edit

config.yaml. Thedocker-compose.ymlfile is configured to mount this file into the container. -

Start the service:

docker-compose up -d --build

This will build the Docker image and start the Toolify service in detached mode, accessible at

http://localhost:8000.

- Python 3.8+

-

Clone the repository:

git clone https://github.com/funnycups/toolify.git cd toolify -

Install dependencies:

pip install -r requirements.txt

-

Configure the application:

Copy the example configuration file and edit it:

cp config.example.yaml config.yaml

Edit

config.yamlto set up your upstream services, API keys, and allowed client keys. -

Run the server:

python main.py

Refer to config.example.yaml for detailed configuration options.

-

server: Middleware host, port, and timeout settings. -

upstream_services: List of upstream LLM providers (e.g., Groq, OpenAI, Anthropic).- Define

base_url,api_key, supportedmodels, and set one service asis_default: true.

- Define

-

client_authentication: List ofallowed_keysfor clients accessing this middleware. -

features: Toggle features like logging, role conversion, and API key handling.-

key_passthrough: Set totrueto directly forward the client-provided API key to the upstream service, bypassing the configuredapi_keyinupstream_services. -

model_passthrough: Set totrueto forward all requests directly to the upstream service named 'openai', ignoring any model-based routing rules. -

prompt_template: Customize the system prompt used to instruct the model on how to use tools.

-

Once Toolify is running, configure your client application (e.g., using the OpenAI SDK) to use Toolify's address as the base_url. Use one of the configured allowed_keys for authentication.

from openai import OpenAI

client = OpenAI(

base_url="http://localhost:8000/v1", # Toolify endpoint

api_key="sk-my-secret-key-1" # Your configured client key

)

# The rest of your OpenAI API calls remain the same, including tool definitions.Toolify handles the translation between the standard OpenAI tool format and the prompt-based method required by unsupported LLMs.

This project is licensed under the GPL-3.0-or-later license.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for Toolify

Similar Open Source Tools

Toolify

Toolify is a middleware proxy that empowers Large Language Models (LLMs) and OpenAI API interfaces by enabling function calling capabilities. It acts as an intermediary between applications and LLM APIs, injecting prompts and parsing tool calls from the model's response. Key features include universal function calling, multiple function calls support, flexible initiation, compatibility with

action_mcp

Action MCP is a powerful tool for managing and automating your cloud infrastructure. It provides a user-friendly interface to easily create, update, and delete resources on popular cloud platforms. With Action MCP, you can streamline your deployment process, reduce manual errors, and improve overall efficiency. The tool supports various cloud providers and offers a wide range of features to meet your infrastructure management needs. Whether you are a developer, system administrator, or DevOps engineer, Action MCP can help you simplify and optimize your cloud operations.

RA.Aid

RA.Aid is an AI software development agent powered by `aider` and advanced reasoning models like `o1`. It combines `aider`'s code editing capabilities with LangChain's agent-based task execution framework to provide an intelligent assistant for research, planning, and implementation of multi-step development tasks. It handles complex programming tasks by breaking them down into manageable steps, running shell commands automatically, and leveraging expert reasoning models like OpenAI's o1. RA.Aid is designed for everyday software development, offering features such as multi-step task planning, automated command execution, and the ability to handle complex programming tasks beyond single-shot code edits.

model-compose

model-compose is an open-source, declarative workflow orchestrator inspired by docker-compose. It lets you define and run AI model pipelines using simple YAML files. Effortlessly connect external AI services or run local AI models within powerful, composable workflows. Features include declarative design, multi-workflow support, modular components, flexible I/O routing, streaming mode support, and more. It supports running workflows locally or serving them remotely, Docker deployment, environment variable support, and provides a CLI interface for managing AI workflows.

extension-gen-ai

The Looker GenAI Extension provides code examples and resources for building a Looker Extension that integrates with Vertex AI Large Language Models (LLMs). Users can leverage the power of LLMs to enhance data exploration and analysis within Looker. The extension offers generative explore functionality to ask natural language questions about data and generative insights on dashboards to analyze data by asking questions. It leverages components like BQML Remote Models, BQML Remote UDF with Vertex AI, and Custom Fine Tune Model for different integration options. Deployment involves setting up infrastructure with Terraform and deploying the Looker Extension by creating a Looker project, copying extension files, configuring BigQuery connection, connecting to Git, and testing the extension. Users can save example prompts and configure user settings for the extension. Development of the Looker Extension environment includes installing dependencies, starting the development server, and building for production.

Groqqle

Groqqle 2.1 is a revolutionary, free AI web search and API that instantly returns ORIGINAL content derived from source articles, websites, videos, and even foreign language sources, for ANY target market of ANY reading comprehension level! It combines the power of large language models with advanced web and news search capabilities, offering a user-friendly web interface, a robust API, and now a powerful Groqqle_web_tool for seamless integration into your projects. Developers can instantly incorporate Groqqle into their applications, providing a powerful tool for content generation, research, and analysis across various domains and languages.

company-research-agent

Agentic Company Researcher is a multi-agent tool that generates comprehensive company research reports by utilizing a pipeline of AI agents to gather, curate, and synthesize information from various sources. It features multi-source research, AI-powered content filtering, real-time progress streaming, dual model architecture, modern React frontend, and modular architecture. The tool follows an agentic framework with specialized research and processing nodes, leverages separate models for content generation, uses a content curation system for relevance scoring and document processing, and implements a real-time communication system via WebSocket connections. Users can set up the tool quickly using the provided setup script or manually, and it can also be deployed using Docker and Docker Compose. The application can be used for local development and deployed to various cloud platforms like AWS Elastic Beanstalk, Docker, Heroku, and Google Cloud Run.

py-llm-core

PyLLMCore is a light-weighted interface with Large Language Models with native support for llama.cpp, OpenAI API, and Azure deployments. It offers a Pythonic API that is simple to use, with structures provided by the standard library dataclasses module. The high-level API includes the assistants module for easy swapping between models. PyLLMCore supports various models including those compatible with llama.cpp, OpenAI, and Azure APIs. It covers use cases such as parsing, summarizing, question answering, hallucinations reduction, context size management, and tokenizing. The tool allows users to interact with language models for tasks like parsing text, summarizing content, answering questions, reducing hallucinations, managing context size, and tokenizing text.

evolving-agents

A toolkit for agent autonomy, evolution, and governance enabling agents to learn from experience, collaborate, communicate, and build new tools within governance guardrails. It focuses on autonomous evolution, agent self-discovery, governance firmware, self-building systems, and agent-centric architecture. The toolkit leverages existing frameworks to enable agent autonomy and self-governance, moving towards truly autonomous AI systems.

pentagi

PentAGI is an innovative tool for automated security testing that leverages cutting-edge artificial intelligence technologies. It is designed for information security professionals, researchers, and enthusiasts who need a powerful and flexible solution for conducting penetration tests. The tool provides secure and isolated operations in a sandboxed Docker environment, fully autonomous AI-powered agent for penetration testing steps, a suite of 20+ professional security tools, smart memory system for storing research results, web intelligence for gathering information, integration with external search systems, team delegation system, comprehensive monitoring and reporting, modern interface, API integration, persistent storage, scalable architecture, self-hosted solution, flexible authentication, and quick deployment through Docker Compose.

tinystruct

Tinystruct is a simple Java framework designed for easy development with better performance. It offers a modern approach with features like CLI and web integration, built-in lightweight HTTP server, minimal configuration philosophy, annotation-based routing, and performance-first architecture. Developers can focus on real business logic without dealing with unnecessary complexities, making it transparent, predictable, and extensible.

code2prompt

Code2Prompt is a powerful command-line tool that generates comprehensive prompts from codebases, designed to streamline interactions between developers and Large Language Models (LLMs) for code analysis, documentation, and improvement tasks. It bridges the gap between codebases and LLMs by converting projects into AI-friendly prompts, enabling users to leverage AI for various software development tasks. The tool offers features like holistic codebase representation, intelligent source tree generation, customizable prompt templates, smart token management, Gitignore integration, flexible file handling, clipboard-ready output, multiple output options, and enhanced code readability.

roast

Roast is a convention-oriented framework for creating structured AI workflows maintained by the Augmented Engineering team at Shopify. It provides a structured, declarative approach to building AI workflows with convention over configuration, built-in tools for file operations, search, and AI interactions, Ruby integration for custom steps, shared context between steps, step customization with AI models and parameters, session replay, parallel execution, function caching, and extensive instrumentation for monitoring workflow execution, AI calls, and tool usage.

Google_GenerativeAI

Google GenerativeAI (Gemini) is an unofficial C# .Net SDK based on REST APIs for accessing Google Gemini models. It offers a complete rewrite of the previous SDK with improved performance, flexibility, and ease of use. The SDK seamlessly integrates with LangChain.net, providing easy methods for JSON-based interactions and function calling with Google Gemini models. It includes features like enhanced JSON mode handling, function calling with code generator, multi-modal functionality, Vertex AI support, multimodal live API, image generation and captioning, retrieval-augmented generation with Vertex RAG Engine and Google AQA, easy JSON handling, Gemini tools and function calling, multimodal live API, and more.

RainbowGPT

RainbowGPT is a versatile tool that offers a range of functionalities, including Stock Analysis for financial decision-making, MySQL Management for database navigation, and integration of AI technologies like GPT-4 and ChatGlm3. It provides a user-friendly interface suitable for all skill levels, ensuring seamless information flow and continuous expansion of emerging technologies. The tool enhances adaptability, creativity, and insight, making it a valuable asset for various projects and tasks.

premsql

PremSQL is an open-source library designed to help developers create secure, fully local Text-to-SQL solutions using small language models. It provides essential tools for building and deploying end-to-end Text-to-SQL pipelines with customizable components, ideal for secure, autonomous AI-powered data analysis. The library offers features like Local-First approach, Customizable Datasets, Robust Executors and Evaluators, Advanced Generators, Error Handling and Self-Correction, Fine-Tuning Support, and End-to-End Pipelines. Users can fine-tune models, generate SQL queries from natural language inputs, handle errors, and evaluate model performance against predefined metrics. PremSQL is extendible for customization and private data usage.

For similar tasks

Toolify

Toolify is a middleware proxy that empowers Large Language Models (LLMs) and OpenAI API interfaces by enabling function calling capabilities. It acts as an intermediary between applications and LLM APIs, injecting prompts and parsing tool calls from the model's response. Key features include universal function calling, multiple function calls support, flexible initiation, compatibility with

semantic-router

Semantic Router is a superfast decision-making layer for your LLMs and agents. Rather than waiting for slow LLM generations to make tool-use decisions, we use the magic of semantic vector space to make those decisions — _routing_ our requests using _semantic_ meaning.

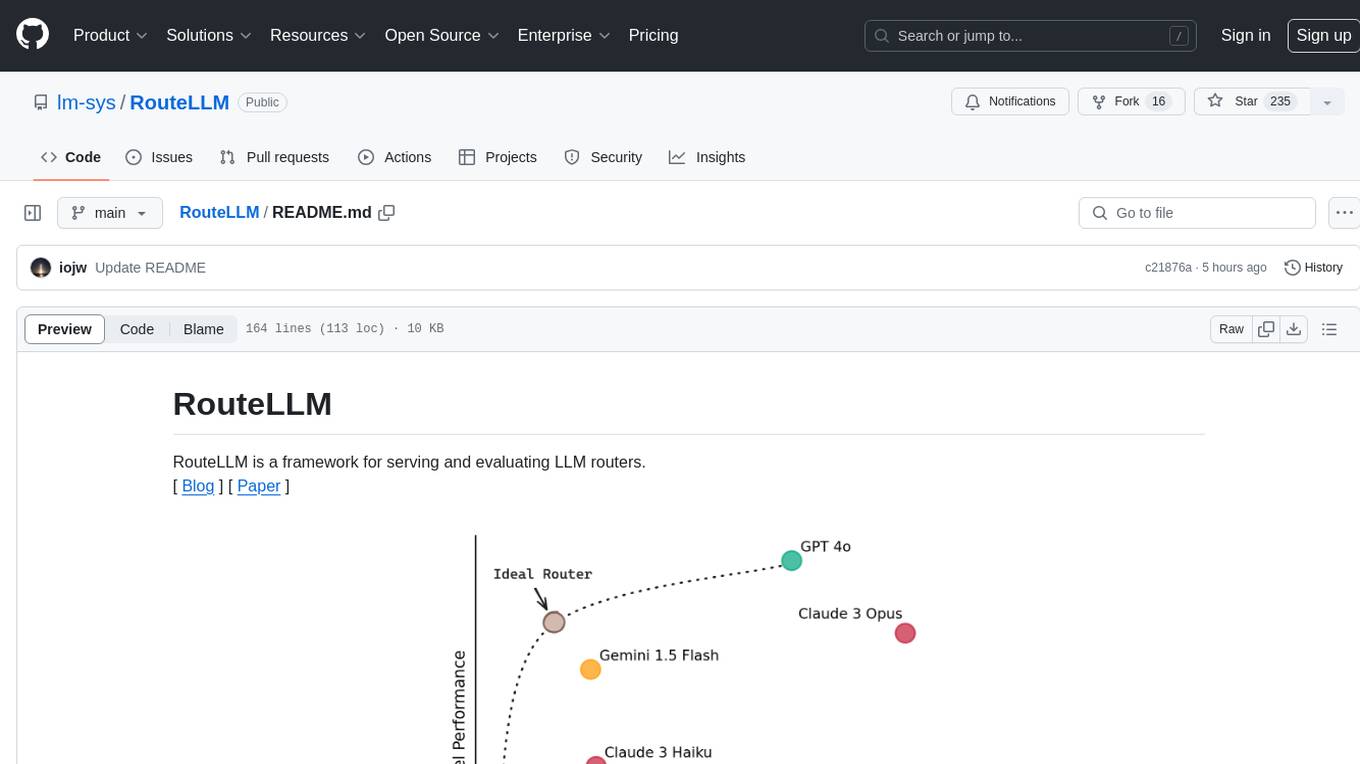

RouteLLM

RouteLLM is a framework for serving and evaluating LLM routers. It allows users to launch an OpenAI-compatible API that routes requests to the best model based on cost thresholds. Trained routers are provided to reduce costs while maintaining performance. Users can easily extend the framework, compare router performance, and calibrate cost thresholds. RouteLLM supports multiple routing strategies and benchmarks, offering a lightweight server and evaluation framework. It enables users to evaluate routers on benchmarks, calibrate thresholds, and modify model pairs. Contributions for adding new routers and benchmarks are welcome.

DeepAI

DeepAI is a proxy server that enhances the interaction experience of large language models (LLMs) by integrating the 'thinking chain' process. It acts as an intermediary layer, receiving standard OpenAI API compatible requests, using independent 'thinking services' to generate reasoning processes, and then forwarding the enhanced requests to the LLM backend of your choice. This ensures that responses are not only generated by the LLM but also based on pre-inference analysis, resulting in more insightful and coherent answers. DeepAI supports seamless integration with applications designed for the OpenAI API, providing endpoints for '/v1/chat/completions' and '/v1/models', making it easy to integrate into existing applications. It offers features such as reasoning chain enhancement, flexible backend support, API key routing, weighted random selection, proxy support, comprehensive logging, and graceful shutdown.

tingly-box

Tingly Box is a tool that helps in deciding which model to call, compressing context, and routing requests efficiently. It offers secure, reliable, and customizable functional extensions. With features like unified API, smart routing, context compression, auto API translation, blazing fast performance, flexible authentication, visual control panel, and client-side usage stats, Tingly Box provides a comprehensive solution for managing AI models and tokens. It supports integration with various IDEs, CLI tools, SDKs, and AI applications, making it versatile and easy to use. The tool also allows seamless integration with OAuth providers like Claude Code, enabling users to utilize existing quotas in OpenAI-compatible tools. Tingly Box aims to simplify AI model management and usage by providing a single endpoint for multiple providers with minimal configuration, promoting seamless integration with SDKs and CLI tools.

ClawRouter

ClawRouter is a tool designed to route every request to the cheapest model that can handle it, offering a wallet-based system with 30+ models available without the need for API keys. It provides 100% local routing with 14-dimension weighted scoring, zero external calls for routing decisions, and supports various models from providers like OpenAI, Anthropic, Google, DeepSeek, xAI, and Moonshot. Users can pay per request with USDC on Base, benefiting from an open-source, MIT-licensed, fully inspectable routing logic. The tool is optimized for agent swarm and multi-step workflows, offering cost-efficient solutions for parallel web research, multi-agent orchestration, and long-running automation tasks.

parallax

Parallax is a fully decentralized inference engine developed by Gradient. It allows users to build their own AI cluster for model inference across distributed nodes with varying configurations and physical locations. Core features include hosting local LLM on personal devices, cross-platform support, pipeline parallel model sharding, paged KV cache management, continuous batching for Mac, dynamic request scheduling, and routing for high performance. The backend architecture includes P2P communication powered by Lattica, GPU backend powered by SGLang and vLLM, and MAC backend powered by MLX LM.

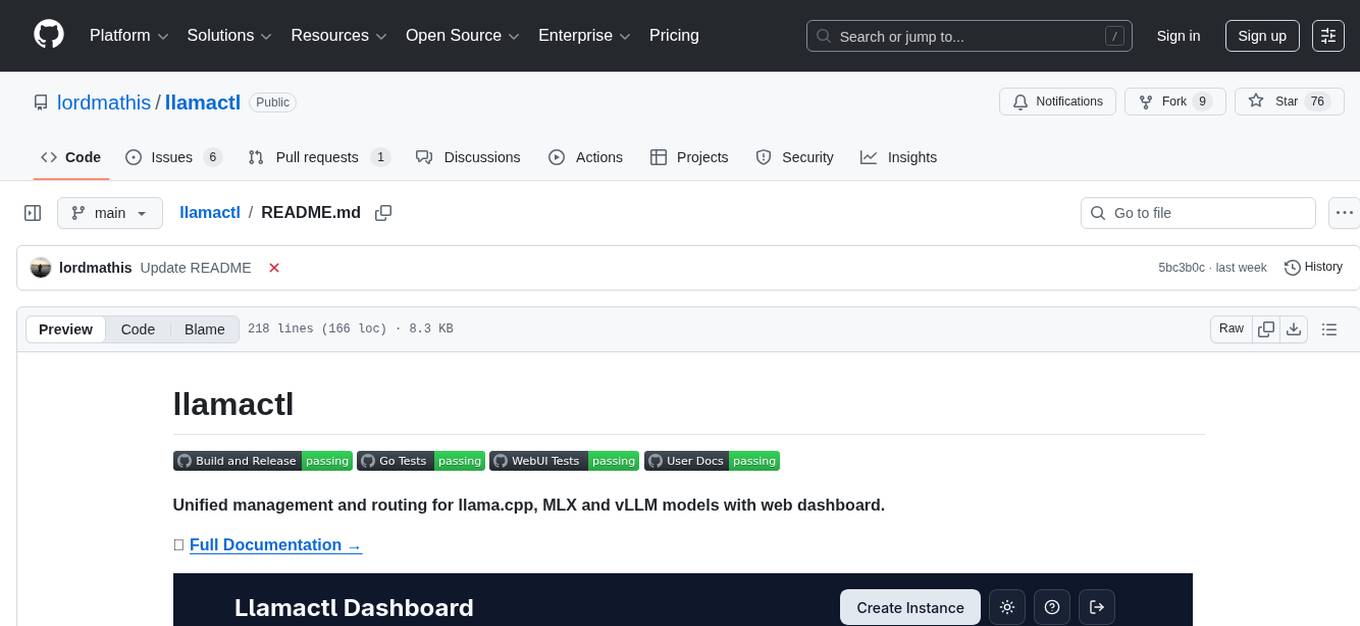

llamactl

llamactl is a tool for unified management and routing of llama.cpp, MLX, and vLLM models with a web dashboard. It offers easy model management with built-in model downloader, dynamic multi-model instances, smart resource management, and a modern React UI dashboard. It provides flexible integration with API compatibility for OpenAI chat completions and resources endpoints, multi-backend support, and Docker readiness. The tool supports distributed deployment with remote instances and central management. Users can quickly start by installing a backend, downloading llamactl, creating an instance, and starting inferencing.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.