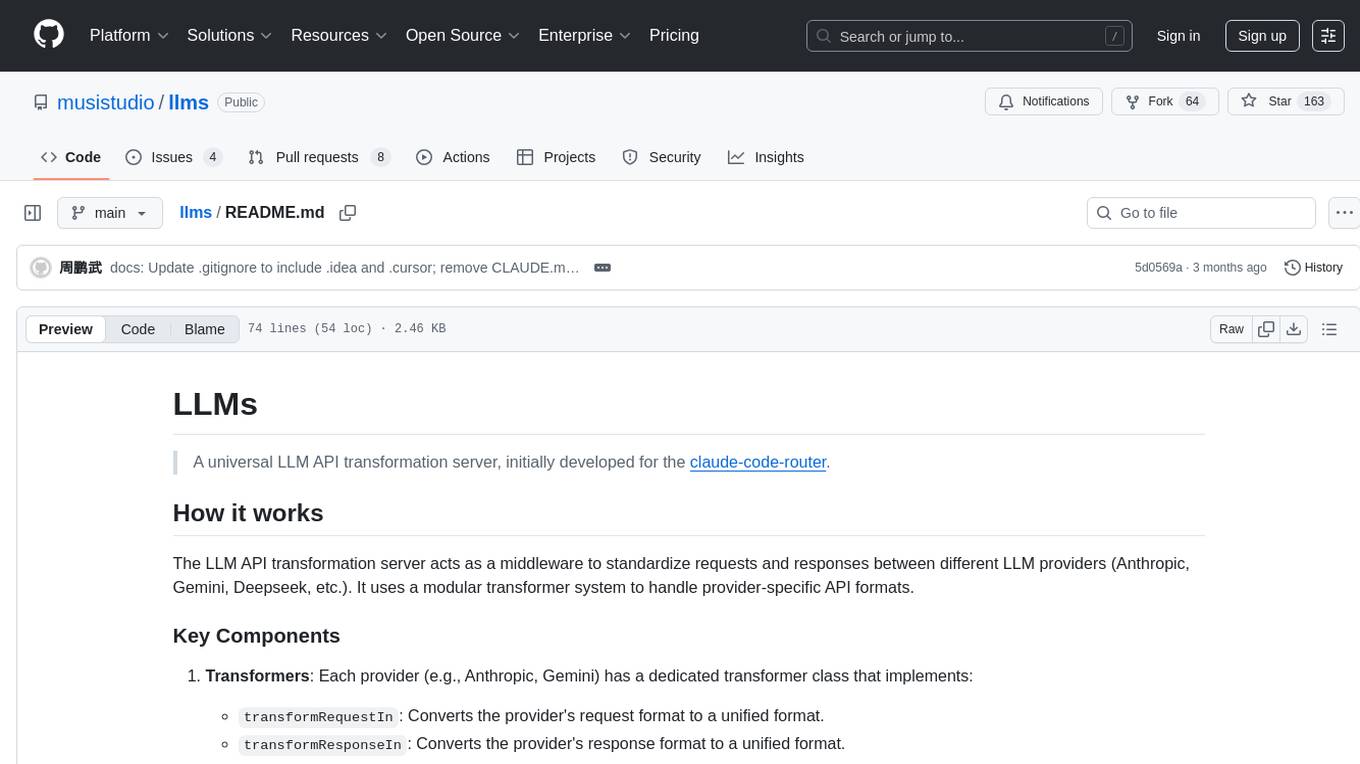

llms

A universal LLM API transformation server

Stars: 181

LLMs is a universal LLM API transformation server designed to standardize requests and responses between different LLM providers such as Anthropic, Gemini, and Deepseek. It uses a modular transformer system to handle provider-specific API formats, supporting real-time streaming responses and converting data into standardized formats. The server transforms requests and responses to and from unified formats, enabling seamless communication between various LLM providers.

README:

A universal LLM API transformation server, initially developed for the claude-code-router.

The LLM API transformation server acts as a middleware to standardize requests and responses between different LLM providers (Anthropic, Gemini, Deepseek, etc.). It uses a modular transformer system to handle provider-specific API formats.

-

Transformers: Each provider (e.g., Anthropic, Gemini) has a dedicated transformer class that implements:

-

transformRequestIn: Converts the provider's request format to a unified format. -

transformResponseIn: Converts the provider's response format to a unified format. -

transformRequestOut: Converts the unified request format to the provider's format. -

transformResponseOut: Converts the unified response format back to the provider's format. -

endPoint: Specifies the API endpoint for the provider (e.g., "/v1/messages" for Anthropic).

-

-

Unified Formats:

- Requests and responses are standardized using

UnifiedChatRequestandUnifiedChatResponsetypes.

- Requests and responses are standardized using

-

Streaming Support:

- Handles real-time streaming responses for providers like Anthropic, converting chunked data into a standardized format.

-

Request:

- Incoming provider-specific requests are transformed into the unified format.

- The unified request is processed by the server.

-

Response:

- The server's unified response is transformed back into the provider's format.

- Streaming responses are handled with chunked data conversion.

- Anthropic: Converts between OpenAI-style and Anthropic-style message formats.

- Gemini: Adjusts tool definitions and parameter formats for Gemini compatibility.

- Deepseek: Enforces token limits and handles reasoning content in streams.

-

Install dependencies:

npm install # or pnpm install -

Development:

npm run dev # Uses nodemon + tsx for hot-reloading src/server.ts -

Build:

npm run build # Outputs to dist/cjs and dist/esm -

Test:

npm test # See CLAUDE.md for details

-

Path alias:

-

@is mapped to thesrcdirectory, useimport xxx from '@/xxx'.

-

-

Environment variables:

- Supports

.envandconfig.json, seesrc/services/config.ts.

- Supports

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for llms

Similar Open Source Tools

llms

LLMs is a universal LLM API transformation server designed to standardize requests and responses between different LLM providers such as Anthropic, Gemini, and Deepseek. It uses a modular transformer system to handle provider-specific API formats, supporting real-time streaming responses and converting data into standardized formats. The server transforms requests and responses to and from unified formats, enabling seamless communication between various LLM providers.

Toolify

Toolify is a middleware proxy that empowers Large Language Models (LLMs) and OpenAI API interfaces by enabling function calling capabilities. It acts as an intermediary between applications and LLM APIs, injecting prompts and parsing tool calls from the model's response. Key features include universal function calling, multiple function calls support, flexible initiation, compatibility with

llmgateway

The llmgateway repository is a tool that provides a gateway for interacting with various LLM (Large Language Model) models. It allows users to easily access and utilize pre-trained language models for tasks such as text generation, sentiment analysis, and language translation. The tool simplifies the process of integrating LLMs into applications and workflows, enabling developers to leverage the power of state-of-the-art language models for various natural language processing tasks.

llm_aided_ocr

The LLM-Aided OCR Project is an advanced system that enhances Optical Character Recognition (OCR) output by leveraging natural language processing techniques and large language models. It offers features like PDF to image conversion, OCR using Tesseract, error correction using LLMs, smart text chunking, markdown formatting, duplicate content removal, quality assessment, support for local and cloud-based LLMs, asynchronous processing, detailed logging, and GPU acceleration. The project provides detailed technical overview, text processing pipeline, LLM integration, token management, quality assessment, logging, configuration, and customization. It requires Python 3.12+, Tesseract OCR engine, PDF2Image library, PyTesseract, and optional OpenAI or Anthropic API support for cloud-based LLMs. The installation process involves setting up the project, installing dependencies, and configuring environment variables. Users can place a PDF file in the project directory, update input file path, and run the script to generate post-processed text. The project optimizes processing with concurrent processing, context preservation, and adaptive token management. Configuration settings include choosing between local or API-based LLMs, selecting API provider, specifying models, and setting context size for local LLMs. Output files include raw OCR output and LLM-corrected text. Limitations include performance dependency on LLM quality and time-consuming processing for large documents.

open-webui-tools

Open WebUI Tools Collection is a set of tools for structured planning, arXiv paper search, Hugging Face text-to-image generation, prompt enhancement, and multi-model conversations. It enhances LLM interactions with academic research, image generation, and conversation management. Tools include arXiv Search Tool and Hugging Face Image Generator. Function Pipes like Planner Agent offer autonomous plan generation and execution. Filters like Prompt Enhancer improve prompt quality. Installation and configuration instructions are provided for each tool and pipe.

model-compose

model-compose is an open-source, declarative workflow orchestrator inspired by docker-compose. It lets you define and run AI model pipelines using simple YAML files. Effortlessly connect external AI services or run local AI models within powerful, composable workflows. Features include declarative design, multi-workflow support, modular components, flexible I/O routing, streaming mode support, and more. It supports running workflows locally or serving them remotely, Docker deployment, environment variable support, and provides a CLI interface for managing AI workflows.

company-research-agent

Agentic Company Researcher is a multi-agent tool that generates comprehensive company research reports by utilizing a pipeline of AI agents to gather, curate, and synthesize information from various sources. It features multi-source research, AI-powered content filtering, real-time progress streaming, dual model architecture, modern React frontend, and modular architecture. The tool follows an agentic framework with specialized research and processing nodes, leverages separate models for content generation, uses a content curation system for relevance scoring and document processing, and implements a real-time communication system via WebSocket connections. Users can set up the tool quickly using the provided setup script or manually, and it can also be deployed using Docker and Docker Compose. The application can be used for local development and deployed to various cloud platforms like AWS Elastic Beanstalk, Docker, Heroku, and Google Cloud Run.

OpenGradient-SDK

OpenGradient Python SDK is a tool for decentralized model management and inference services on the OpenGradient platform. It provides programmatic access to distributed AI infrastructure with cryptographic verification capabilities. The SDK supports verifiable LLM inference, multi-provider support, TEE execution, model hub integration, consensus-based verification, and command-line interface. Users can leverage this SDK to build AI applications with execution guarantees through Trusted Execution Environments and blockchain-based settlement, ensuring auditability and tamper-proof AI execution.

graphiti

Graphiti is a framework for building and querying temporally-aware knowledge graphs, tailored for AI agents in dynamic environments. It continuously integrates user interactions, structured and unstructured data, and external information into a coherent, queryable graph. The framework supports incremental data updates, efficient retrieval, and precise historical queries without complete graph recomputation, making it suitable for developing interactive, context-aware AI applications.

llama-cpp-agent

The llama-cpp-agent framework is a tool designed for easy interaction with Large Language Models (LLMs). Allowing users to chat with LLM models, execute structured function calls and get structured output (objects). It provides a simple yet robust interface and supports llama-cpp-python and OpenAI endpoints with GBNF grammar support (like the llama-cpp-python server) and the llama.cpp backend server. It works by generating a formal GGML-BNF grammar of the user defined structures and functions, which is then used by llama.cpp to generate text valid to that grammar. In contrast to most GBNF grammar generators it also supports nested objects, dictionaries, enums and lists of them.

extension-gen-ai

The Looker GenAI Extension provides code examples and resources for building a Looker Extension that integrates with Vertex AI Large Language Models (LLMs). Users can leverage the power of LLMs to enhance data exploration and analysis within Looker. The extension offers generative explore functionality to ask natural language questions about data and generative insights on dashboards to analyze data by asking questions. It leverages components like BQML Remote Models, BQML Remote UDF with Vertex AI, and Custom Fine Tune Model for different integration options. Deployment involves setting up infrastructure with Terraform and deploying the Looker Extension by creating a Looker project, copying extension files, configuring BigQuery connection, connecting to Git, and testing the extension. Users can save example prompts and configure user settings for the extension. Development of the Looker Extension environment includes installing dependencies, starting the development server, and building for production.

Vodalus-Expert-LLM-Forge

Vodalus Expert LLM Forge is a tool designed for crafting datasets and efficiently fine-tuning models using free open-source tools. It includes components for data generation, LLM interaction, RAG engine integration, model training, fine-tuning, and quantization. The tool is suitable for users at all levels and is accompanied by comprehensive documentation. Users can generate synthetic data, interact with LLMs, train models, and optimize performance for local execution. The tool provides detailed guides and instructions for setup, usage, and customization.

gitdiagram

GitDiagram is a tool that turns any GitHub repository into an interactive diagram for visualization in seconds. It offers instant visualization, interactivity, fast generation, customization, and API access. The tool utilizes a tech stack including Next.js, FastAPI, PostgreSQL, Claude 3.5 Sonnet, Vercel, EC2, GitHub Actions, PostHog, and Api-Analytics. Users can self-host the tool for local development and contribute to its development. GitDiagram is inspired by Gitingest and has future plans to use larger context models, allow user API key input, implement RAG with Mermaid.js docs, and include font-awesome icons in diagrams.

py-llm-core

PyLLMCore is a light-weighted interface with Large Language Models with native support for llama.cpp, OpenAI API, and Azure deployments. It offers a Pythonic API that is simple to use, with structures provided by the standard library dataclasses module. The high-level API includes the assistants module for easy swapping between models. PyLLMCore supports various models including those compatible with llama.cpp, OpenAI, and Azure APIs. It covers use cases such as parsing, summarizing, question answering, hallucinations reduction, context size management, and tokenizing. The tool allows users to interact with language models for tasks like parsing text, summarizing content, answering questions, reducing hallucinations, managing context size, and tokenizing text.

FileScopeMCP

FileScopeMCP is a TypeScript-based tool for ranking files in a codebase by importance, tracking dependencies, and providing summaries. It analyzes code structure, generates importance scores, maps bidirectional dependencies, visualizes file relationships, and allows adding custom summaries. The tool supports multiple languages, persistent storage, and offers tools for file tree management, file analysis, file summaries, diagram generation, and file watching. It is built with TypeScript/Node.js, implements the Model Context Protocol, uses Mermaid.js for diagram generation, and stores data in JSON format. FileScopeMCP aims to enhance code understanding and visualization for developers.

Auto-Analyst

Auto-Analyst is an AI-driven data analytics agentic system designed to simplify and enhance the data science process. By integrating various specialized AI agents, this tool aims to make complex data analysis tasks more accessible and efficient for data analysts and scientists. Auto-Analyst provides a streamlined approach to data preprocessing, statistical analysis, machine learning, and visualization, all within an interactive Streamlit interface. It offers plug and play Streamlit UI, agents with data science speciality, complete automation, LLM agnostic operation, and is built using lightweight frameworks.

For similar tasks

llms

LLMs is a universal LLM API transformation server designed to standardize requests and responses between different LLM providers such as Anthropic, Gemini, and Deepseek. It uses a modular transformer system to handle provider-specific API formats, supporting real-time streaming responses and converting data into standardized formats. The server transforms requests and responses to and from unified formats, enabling seamless communication between various LLM providers.

For similar jobs

llms

LLMs is a universal LLM API transformation server designed to standardize requests and responses between different LLM providers such as Anthropic, Gemini, and Deepseek. It uses a modular transformer system to handle provider-specific API formats, supporting real-time streaming responses and converting data into standardized formats. The server transforms requests and responses to and from unified formats, enabling seamless communication between various LLM providers.

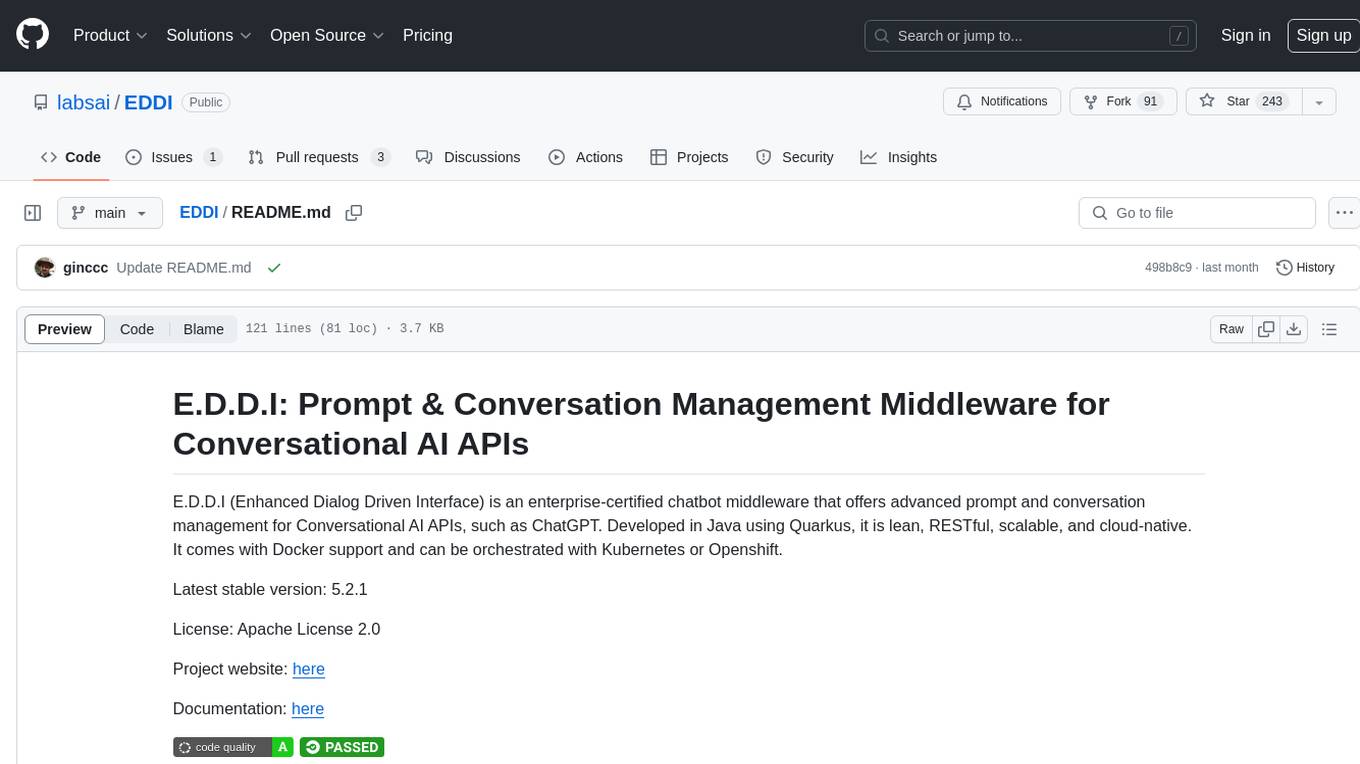

EDDI

E.D.D.I (Enhanced Dialog Driven Interface) is an enterprise-certified chatbot middleware that offers advanced prompt and conversation management for Conversational AI APIs. Developed in Java using Quarkus, it is lean, RESTful, scalable, and cloud-native. E.D.D.I is highly scalable and designed to efficiently manage conversations in AI-driven applications, with seamless API integration capabilities. Notable features include configurable NLP and Behavior rules, support for multiple chatbots running concurrently, and integration with MongoDB, OAuth 2.0, and HTML/CSS/JavaScript for UI. The project requires Java 21, Maven 3.8.4, and MongoDB >= 5.0 to run. It can be built as a Docker image and deployed using Docker or Kubernetes, with additional support for integration testing and monitoring through Prometheus and Kubernetes endpoints.

empower-functions

Empower Functions is a family of large language models (LLMs) that provide GPT-4 level capabilities for real-world 'tool using' use cases. These models offer compatibility support to be used as drop-in replacements, enabling interactions with external APIs by recognizing when a function needs to be called and generating JSON containing necessary arguments based on user inputs. This capability is crucial for building conversational agents and applications that convert natural language into API calls, facilitating tasks such as weather inquiries, data extraction, and interactions with knowledge bases. The models can handle multi-turn conversations, choose between tools or standard dialogue, ask for clarification on missing parameters, integrate responses with tool outputs in a streaming fashion, and efficiently execute multiple functions either in parallel or sequentially with dependencies.

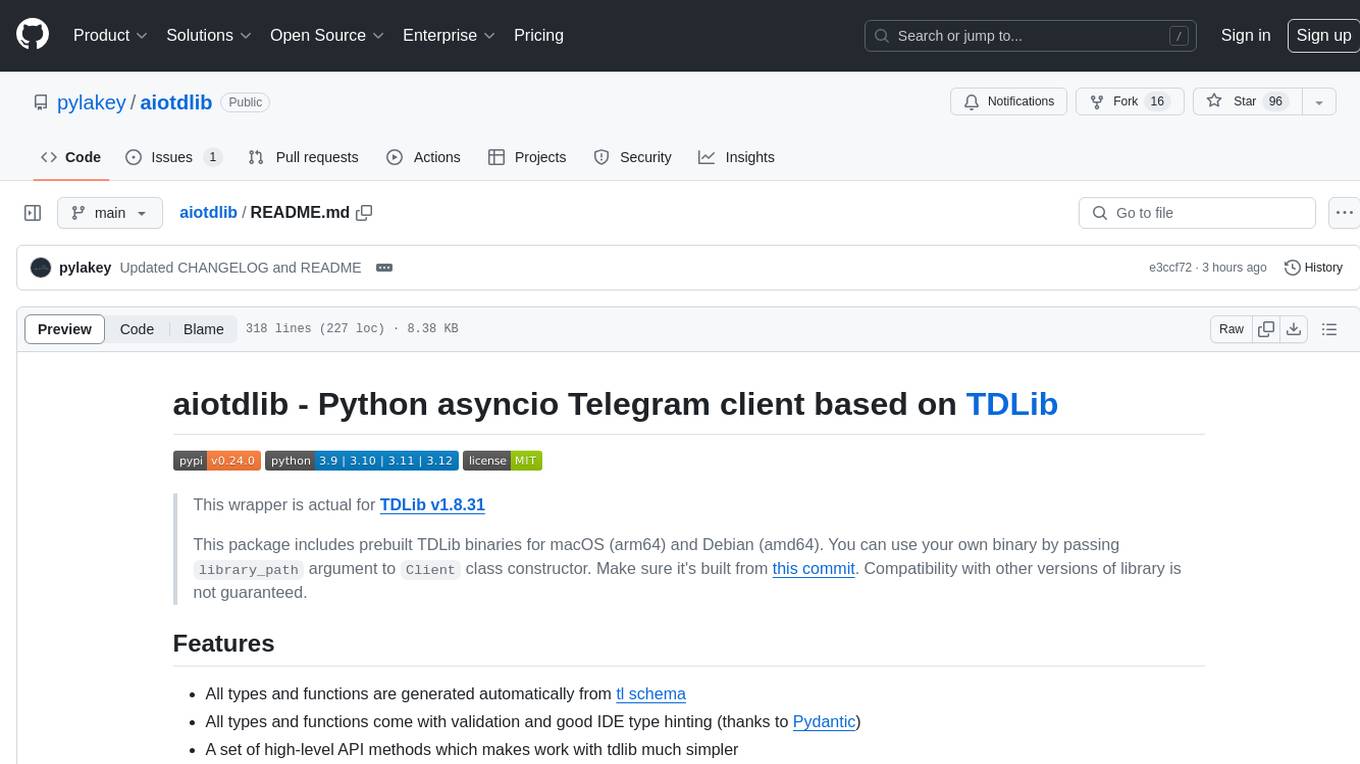

aiotdlib

aiotdlib is a Python asyncio Telegram client based on TDLib. It provides automatic generation of types and functions from tl schema, validation, good IDE type hinting, and high-level API methods for simpler work with tdlib. The package includes prebuilt TDLib binaries for macOS (arm64) and Debian Bullseye (amd64). Users can use their own binary by passing `library_path` argument to `Client` class constructor. Compatibility with other versions of the library is not guaranteed. The tool requires Python 3.9+ and users need to get their `api_id` and `api_hash` from Telegram docs for installation and usage.

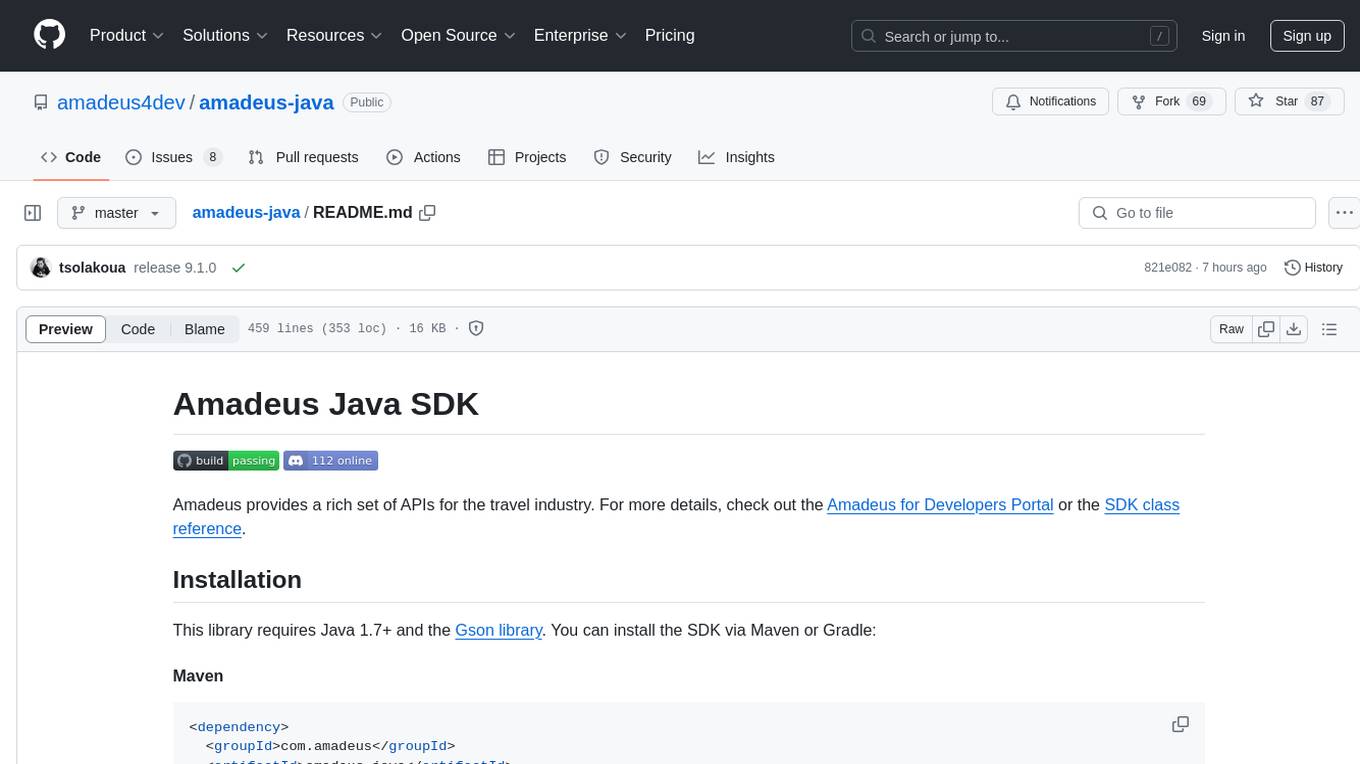

amadeus-java

Amadeus Java SDK provides a rich set of APIs for the travel industry, allowing developers to access various functionalities such as flight search, booking, airport information, and more. The SDK simplifies interaction with the Amadeus API by providing self-contained code examples and detailed documentation. Developers can easily make API calls, handle responses, and utilize features like pagination and logging. The SDK supports various endpoints for tasks like flight search, booking management, airport information retrieval, and travel analytics. It also offers functionalities for hotel search, booking, and sentiment analysis. Overall, the Amadeus Java SDK is a comprehensive tool for integrating Amadeus APIs into Java applications.

java-genai

Java idiomatic SDK for the Gemini Developer APIs and Vertex AI APIs. The SDK provides a Client class for interacting with both APIs, allowing seamless switching between the 2 backends without code rewriting. It supports features like generating content, embedding content, generating images, upscaling images, editing images, and generating videos. The SDK also includes options for setting API versions, HTTP request parameters, client behavior, and response schemas.

ProxyPilot

ProxyPilot is a powerful local API proxy tool built in Go that eliminates the need for separate API keys when using Claude Code, Codex, Gemini, Kiro, and Qwen subscriptions with any AI coding tool. It handles OAuth authentication, token management, and API translation automatically, providing a single server to route requests. The tool supports multiple authentication providers, universal API translation, tool calling repair, extended thinking models, OAuth integration, multi-account support, quota auto-switching, usage statistics tracking, context compression, agentic harness for coding agents, session memory, system tray app, auto-updates, rollback support, and over 60 management APIs. ProxyPilot also includes caching layers for response and prompt caching to reduce latency and token usage.

forksilly.doc

ForkSilly.doc is a repository mainly for storing documentation of ForkSilly, an Android project developed using React Native/Expo. It is suitable for users with experience in SillyTavern. The project is self-shared and may not accept feature requests. It is designed for pure text cards, illustration cards, and Stable Diffusion text-image. It is compatible with SillyTavern V2 character cards, world books, regex, presets, and chat records. Users can import and export at any time. The tool supports various customization options such as chat font, background image, and quick toggle of preset entries. It also allows the use of various OpenAI-compatible APIs and provides built-in storage management features. Users can utilize text-image functionality and access free text-image services like pollinations.ai. Additionally, it supports Stable Diffusion text-image features and integration with silicon-based flow and Gemini embedding models. The tool does not support TTS or connecting to NAI.