Best AI tools for Support Model Quantization

20 - AI tool Sites

Private LLM

Private LLM is a secure, local, and private AI chatbot designed for iOS and macOS devices. It operates offline, ensuring that user data remains on the device, providing a safe and private experience. The application offers a range of features for text generation and language assistance, utilizing state-of-the-art quantization techniques to deliver high-quality on-device AI experiences without compromising privacy. Users can access a variety of open-source LLM models, integrate AI into Siri and Shortcuts, and benefit from AI language services across macOS apps. Private LLM stands out for its superior model performance and commitment to user privacy, making it a smart and secure tool for creative and productive tasks.

AnythingLLM

AnythingLLM is an all-in-one AI application designed for everyone. It offers a comprehensive suite of tools for working with LLMs (Large Language Models), documents, and agents in a fully private manner. Users can download AnythingLLM for Desktop on Windows, macOS, and Linux, with one-click installation. The application supports custom model integration, including closed-source models like GPT-4 and custom fine-tuned models like Llama 2. It handles document formats beyond PDFs and runs locally by default for privacy. Additionally, users can access AnythingLLM Cloud for extended functionality.

Perchance AI

Perchance AI is a free online platform offering 18 AI image generators without the need for sign-up. Users can explore 75 text-to-image art styles and utilize various models like Flux AI, SDXL, SDXL Lightning, and SDXL Flash. The platform supports a wide range of creative applications, from generating bedroom scenes to creating unique art pieces. With a user-friendly interface and diverse generator options, Perchance AI caters to both casual users and professionals seeking AI-powered creative tools.

Fooocus

Fooocus is a cutting-edge AI-powered image generation and editing platform that empowers users to bring their creative visions to life. With advanced features like unique inpainting algorithms, image prompt enhancements, and versatile model support, Fooocus stands out as a leading platform in creative AI technology. Users can leverage Fooocus's capabilities to generate stunning images, edit and refine them with precision, and collaborate with others to explore new creative horizons.

Gorilla

Gorilla is an open-source AI tool that connects a large language model (LLM) to a massive number of APIs, enabling users to interact with a wide range of services. It offers features such as training the model to support parallel function calls, benchmarking LLMs on function-calling capabilities, and providing a runtime for executing LLM-generated actions like code and API calls. Gorilla focuses on enhancing interaction between apps and services with human-out-of-loop functionality.

AIby.email

AIby.email is an AI-powered email assistant that helps you write better emails, faster. It uses natural language processing to understand your intent and generate personalized email responses. AIby.email also offers a variety of other features, such as email scheduling, tracking, and analytics.

Bibit AI

Bibit AI is a real estate marketing AI designed to make real estate marketing and sales more efficient and effective. It helps create listings, descriptions, and other property content, alongside a host of other features. Billed as the world's first AI for real estate, it aims to transform the industry by boosting efficiency and simplifying tasks like listing creation and content generation.

Sapling

Sapling is a language model copilot and API for businesses. It provides real-time suggestions to help sales, support, and success teams more efficiently compose personalized responses. Sapling also offers a variety of features to help businesses improve their customer service, including:

* Autocomplete Everywhere: Provides deep learning-powered autocomplete suggestions across all messaging platforms, allowing agents to compose replies more quickly.
* Sapling Suggest: Retrieves relevant responses from a team response bank and allows agents to respond more quickly to customer inquiries by simply clicking on suggested responses in real time.
* Snippet macros: Allow for quick insertion of common responses.
* Grammar and language quality improvements: Sapling catches 60% more language quality issues than other spelling and grammar checkers using a machine learning system trained on millions of English sentences.
* Enterprise teams can define custom settings for compliance and content governance.
* Distribute knowledge: Ensure team knowledge is shared in a snippet library accessible on all your web applications.
* Perform blazing fast search on your knowledge library for compliance, upselling, training, and onboarding.

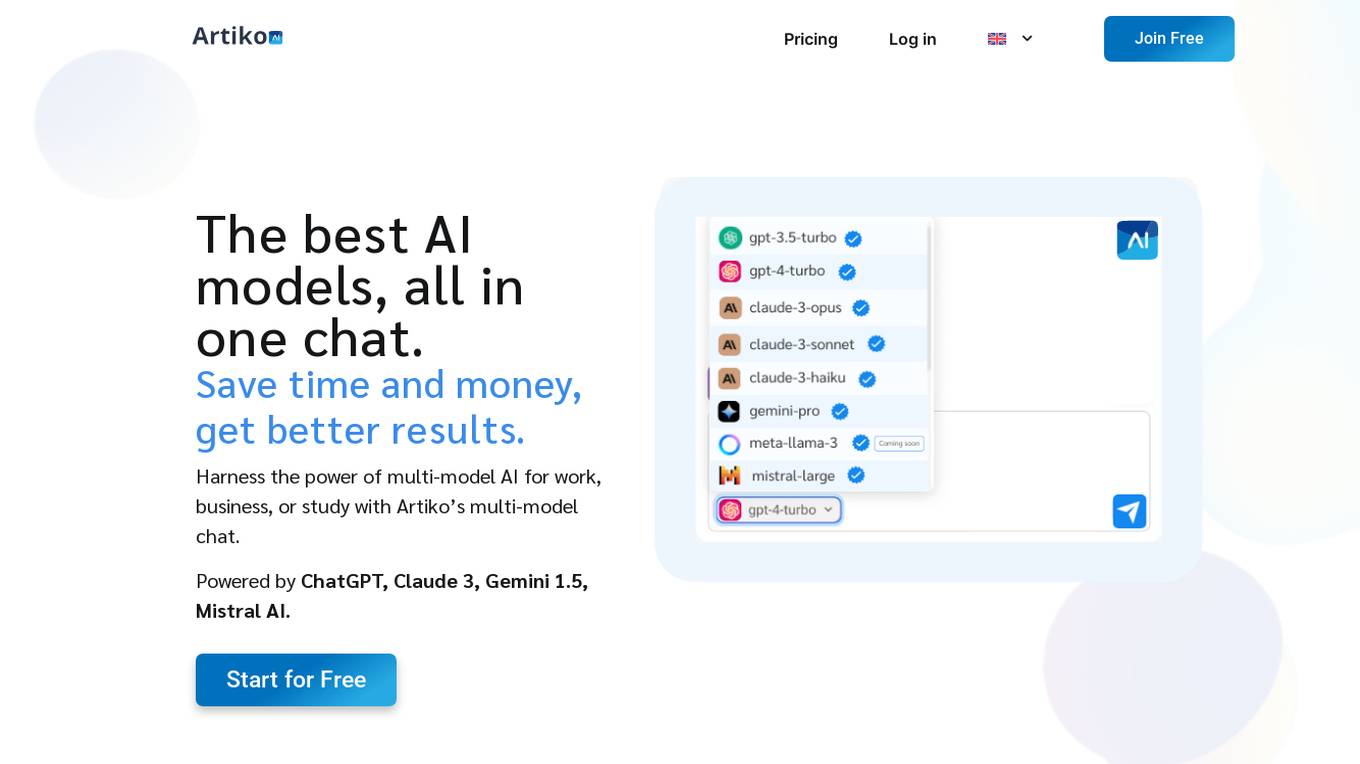

Artiko.ai

Artiko.ai is a multi-model AI chat platform that integrates advanced AI models such as ChatGPT, Claude 3, Gemini 1.5, and Mistral AI. It offers a convenient and cost-effective solution for work, business, or study by providing a single chat interface to harness the power of multi-model AI. Users can save time and money while achieving better results through features like text rewriting, data conversation, AI assistants, website chatbot, PDF and document chat, translation, brainstorming, and integration with various tools like WooCommerce, Amazon, Salesforce, and more.

Frontier Model Forum

The Frontier Model Forum (FMF) is a collaborative effort among leading AI companies to advance AI safety and responsibility. The FMF brings together technical and operational expertise to identify best practices, conduct research, and support the development of AI applications that meet society's most pressing needs. The FMF's core objectives include advancing AI safety research, identifying best practices, collaborating across sectors, and helping AI meet society's greatest challenges.

Anthropic

Anthropic is an AI research and deployment company founded in 2021 by a group of former OpenAI employees, including siblings Dario Amodei and Daniela Amodei. The company develops large language models, including Claude, a multimodal AI model that can perform a variety of language-related tasks, such as answering questions, generating text, and translating languages.

Flux Pro Image Generator

Flux Pro Image Generator is an advanced AI tool that revolutionizes text-to-image generation. It offers cutting-edge features such as lightning-fast image creation, unparalleled image quality, user-friendly interface, advanced control options, and a collection of fun tools to spark creativity. Users can easily turn their ideas into stunning visuals in seconds without requiring expertise. Flux Pro is faster, more user-friendly, and produces higher quality images compared to many competitors. It is open-source, regularly updated, and allows for commercial use of generated images. The tool is web-based with potential mobile app releases in the future.

Genailia

Genailia is an AI platform that offers a range of products and services such as translation, transcription, chatbot, LLM, GPT, TTS, ASR, and social media insights. It harnesses AI to redefine possibilities by providing generative AI, linguistic interfaces, accelerators, and more in a single platform. The platform aims to streamline various tasks through AI technology, making it a valuable tool for businesses and individuals seeking efficient solutions.

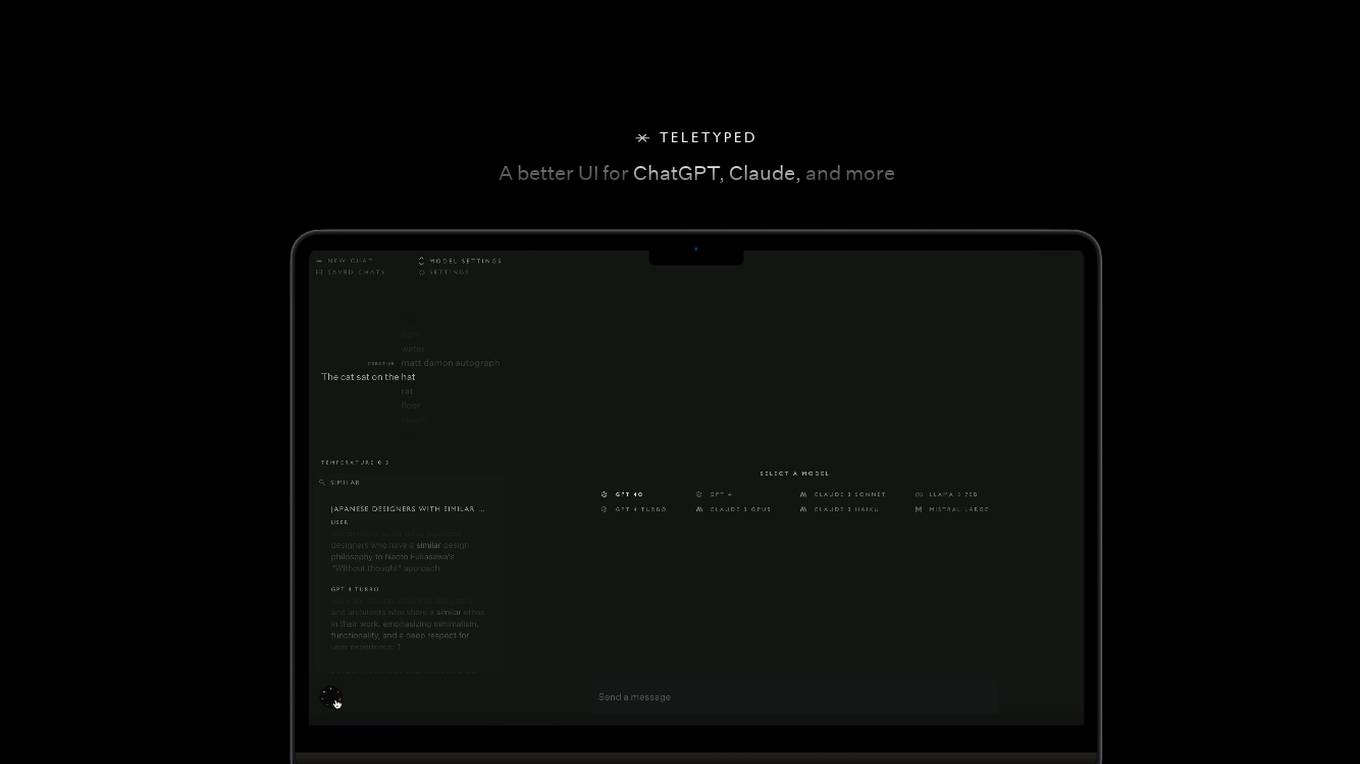

Teletyped

Teletyped is an AI tool designed to enhance the user experience of ChatGPT and other chat applications. It offers a better user interface, full-text search functionality across all chats, the ability to save chats, and automatic deletion of temporary chats. Users can customize the color themes, switch between different models mid-chat, and edit model responses. Teletyped also provides features like model regeneration, editing mode for models, and subscription-based model credits.

MeetYou

MeetYou is an AI application that allows users to create a digital entity based on their experiences, memories, and thoughts. The platform enables users to interact with their entity through chat, voice, or video, and customize its appearance and behavior. MeetYou leverages AI technology to extract and structure data, create models, and enhance the entity's capabilities with additional knowledge. With over 150 data sources available, users can shape their entity to reflect their personality and preferences. The platform also promotes privacy and offers a range of features to help users build a digital legacy.

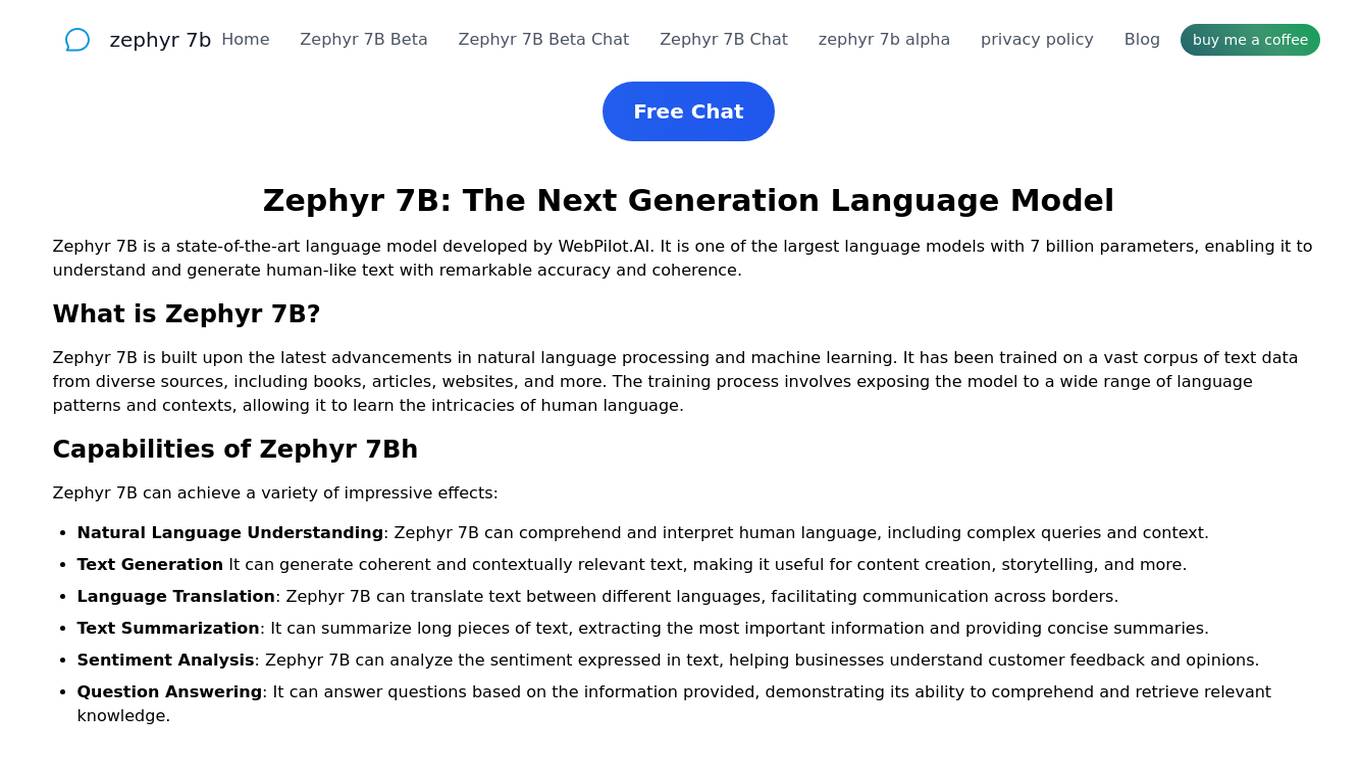

Zephyr 7B

Zephyr 7B is a language model with 7 billion parameters, listed here as offered by WebPilot.AI (the open Zephyr 7B weights were released by Hugging Face's H4 team as a fine-tune of Mistral 7B). It can understand and generate human-like text with accuracy and coherence. The model is trained on a large corpus of text data from diverse sources and offers capabilities such as natural language understanding, text generation, language translation, text summarization, sentiment analysis, and question answering, making it a useful tool for content creation, customer support, research, and more.

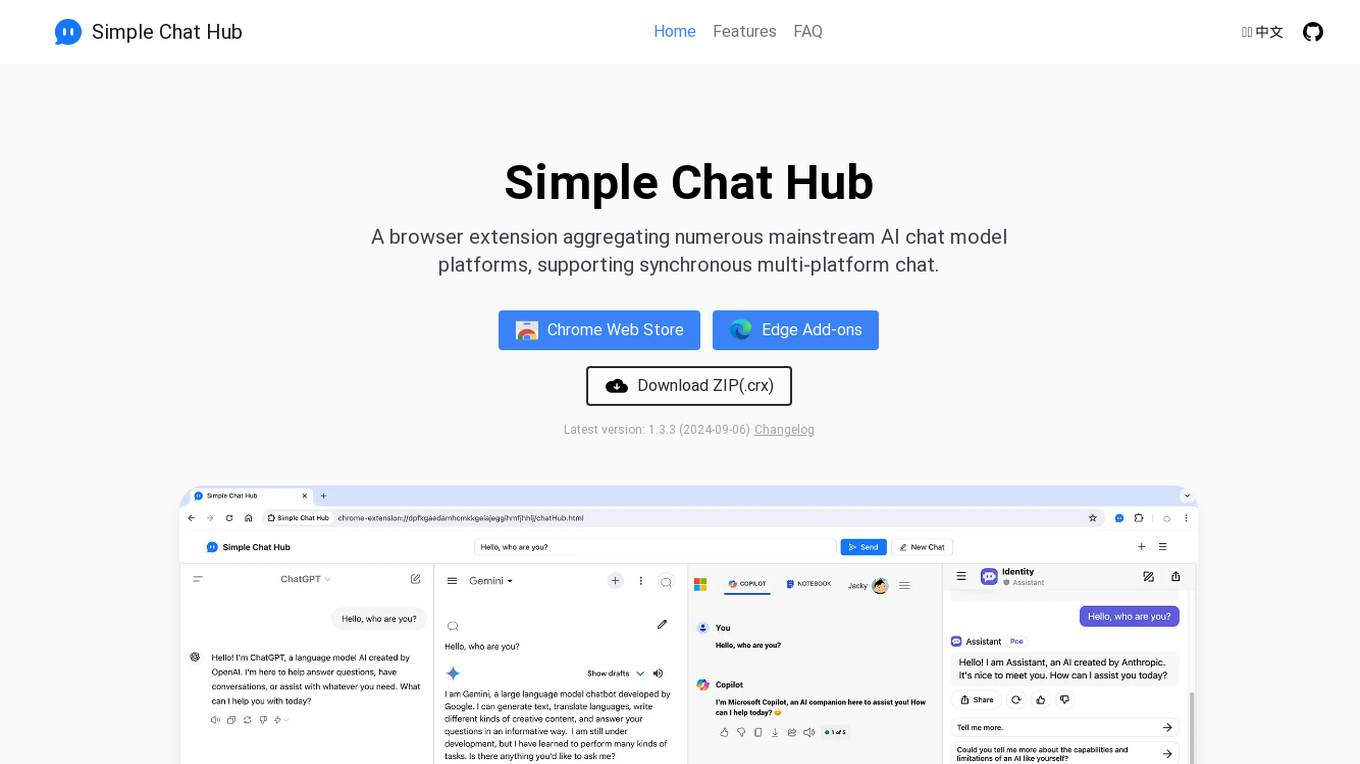

Simple Chat Hub

Simple Chat Hub is a browser extension that serves as an all-in-one AI chat solution by aggregating various mainstream AI chat model platforms. It supports synchronous multi-platform chat, allowing users to send messages to multiple platforms and receive replies simultaneously. The extension is easy to use, customizable, and supports features like screenshot sharing and international language switching. Users can operate chat sessions independently in each platform window without the need for API keys. Simple Chat Hub is free to use and constantly expanding its support for popular AI model chat platforms.

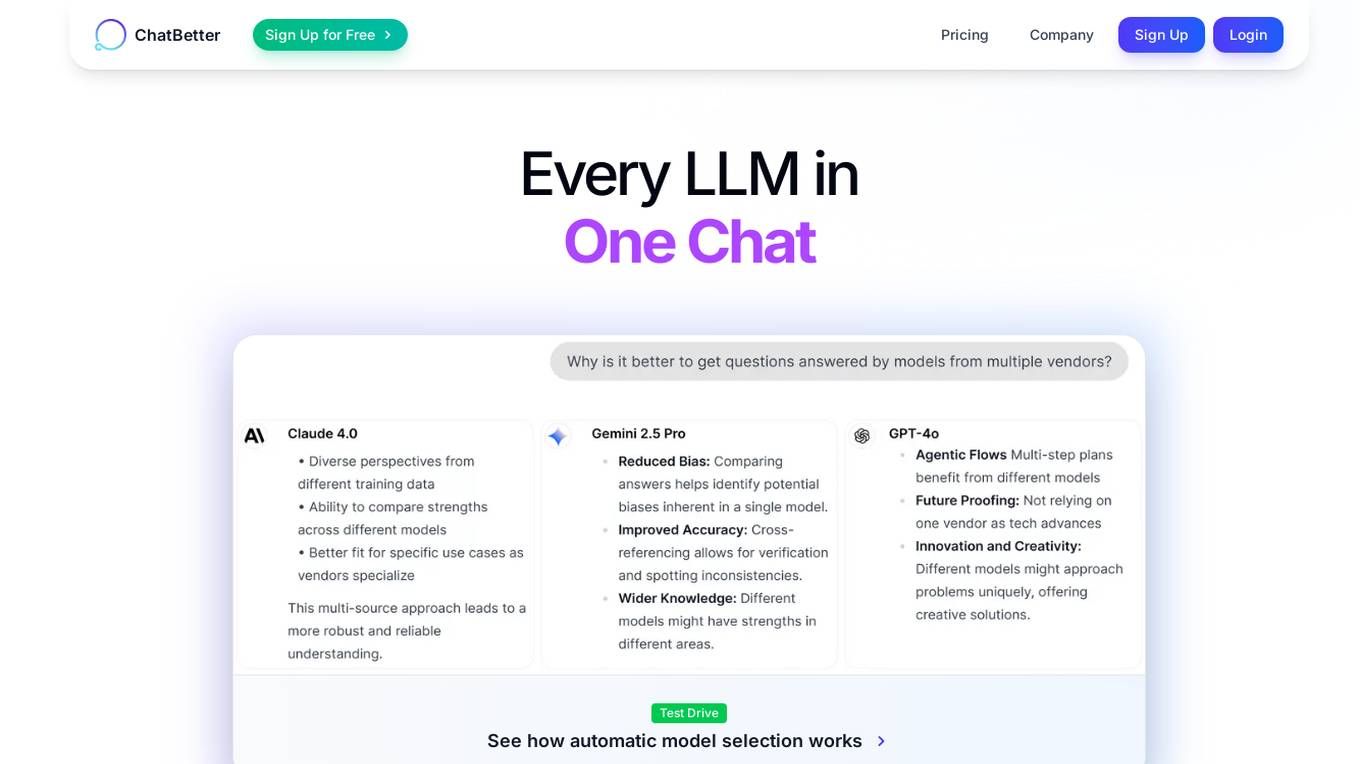

ChatBetter

ChatBetter is an AI tool that offers automatic model selection for users, allowing them to compare and merge responses from various language models. It simplifies the process by automatically routing questions to the best model, ensuring accurate answers every time. The platform provides access to major AI providers like OpenAI, Google, and more, enabling users to leverage a wide range of models in one interface. ChatBetter is designed for both users seeking quick and accurate responses and admins looking for team collaboration and data connections.

Novita AI

Novita AI is an AI cloud platform that offers Model APIs, Serverless, and GPU Instance solutions integrated into one cost-effective platform. It provides tools for building AI products, scaling with serverless architecture, and deploying with GPU instances. Novita AI caters to startups and businesses looking to leverage AI technologies without the need for extensive machine learning expertise. The platform also offers a Startup Program, 24/7 service support, and has received positive feedback for its reasonable pricing and stable API services.

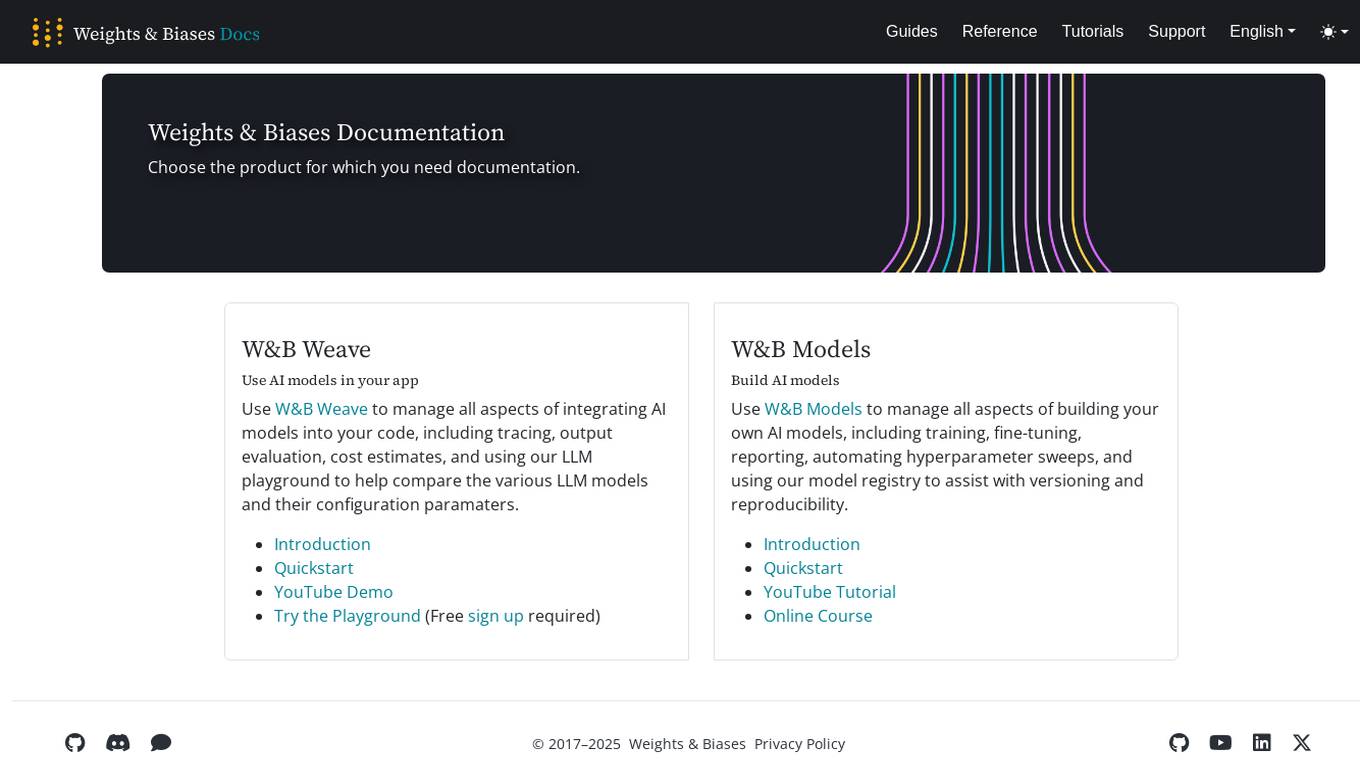

Weights & Biases

Weights & Biases is an AI tool that offers documentation, guides, tutorials, and support for using AI models in applications. The platform provides two main products: W&B Weave for integrating AI models into code and W&B Models for building custom AI models. Users can access features such as tracing, output evaluation, cost estimates, hyperparameter sweeps, model registry, and more. Weights & Biases aims to simplify the process of working with AI models and improving model reproducibility.

1 - Open Source AI Tools

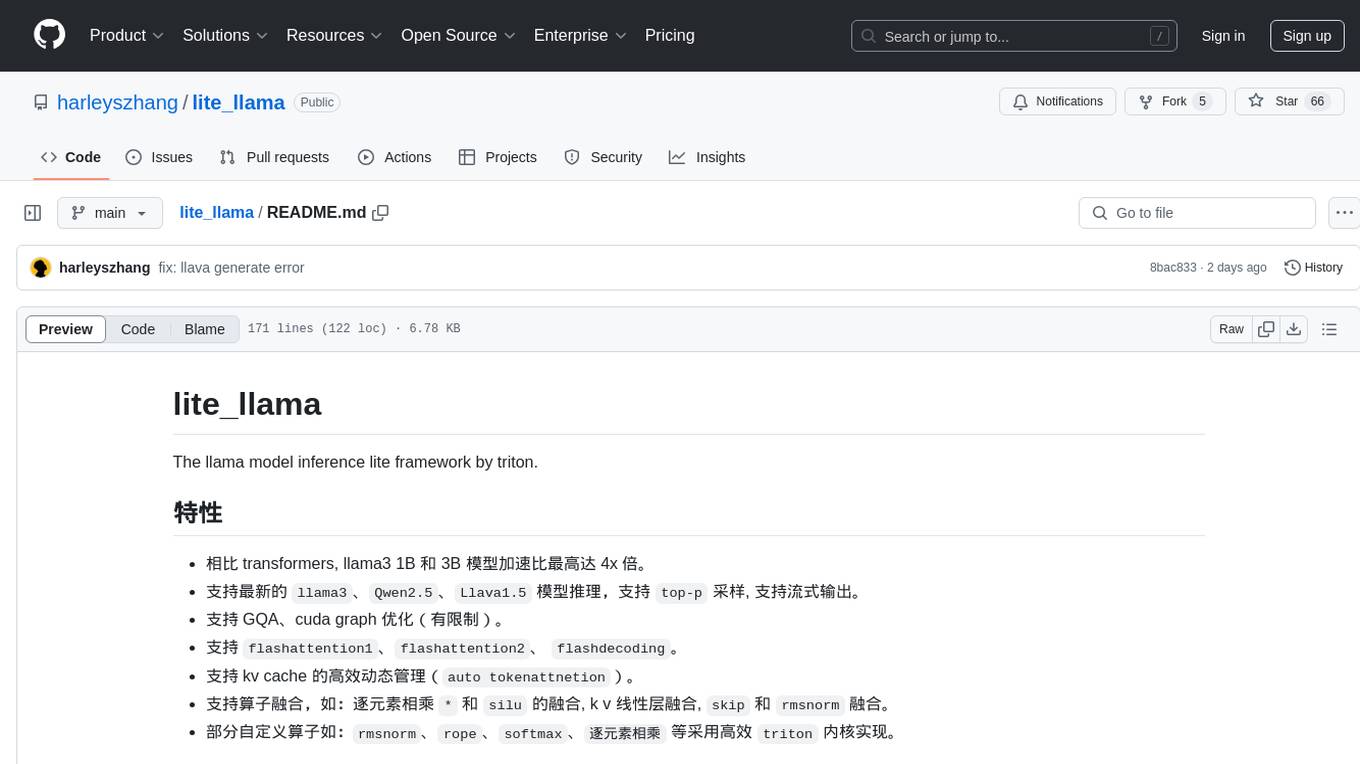

lite_llama

lite_llama is a lightweight inference framework for Llama models, built on Triton. It offers accelerated inference for Llama 3, Qwen2.5, and LLaVA 1.5 models, with up to 4x speedup compared to transformers. The framework supports top-p sampling, streaming output, GQA, and CUDA graph optimizations. It also provides efficient dynamic management of the KV cache, operator fusion, and custom operators such as RMSNorm, RoPE, softmax, and element-wise multiplication implemented as Triton kernels.
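
For readers unfamiliar with top-p (nucleus) sampling, the idea can be sketched in plain Python. This is a minimal illustration of the general technique, not lite_llama's own implementation: the function names `softmax`, `top_p_filter`, and `sample_top_p` are hypothetical, and a real framework would run this on GPU tensors rather than Python lists.

```python
import math
import random


def softmax(logits):
    """Convert raw logits to probabilities (numerically stable)."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs, top_p):
    """Keep the smallest set of highest-probability tokens whose
    cumulative probability reaches top_p, then renormalize.

    Returns a list of (token_id, renormalized_prob) pairs.
    """
    ranked = sorted(enumerate(probs), key=lambda pair: pair[1], reverse=True)
    kept, cumulative = [], 0.0
    for token_id, p in ranked:
        kept.append((token_id, p))
        cumulative += p
        if cumulative >= top_p:
            break
    return [(token_id, p / cumulative) for token_id, p in kept]


def sample_top_p(logits, top_p=0.9, rng=random):
    """Sample one token id from the nucleus of the distribution.

    rng is assumed to expose choices(), as the random module
    and random.Random instances both do.
    """
    nucleus = top_p_filter(softmax(logits), top_p)
    ids = [token_id for token_id, _ in nucleus]
    weights = [p for _, p in nucleus]
    return rng.choices(ids, weights=weights, k=1)[0]
```

Calling `sample_top_p(logits, top_p=0.9)` on a model's next-token logits returns a token id drawn only from the most likely tokens, which trims the long tail of improbable tokens while keeping some randomness.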

20 - OpenAI GPTs

BRI - Educational with Rabindranath Tagore

Analyzing historical trends and advising on BRI strategies using Ibn Khaldun's insights. Write: "We are constantly enriching the model and rely on your support: http://Donate.U-Model.org"

Agent Prompt Generator for LLM's

This GPT generates the best possible LLM-agents for your system prompts. You can also specify the model size, like 3B, 33B, 70B, etc.

PETIT BRABANÇON DOG

"Small in size, big in heart, I'm the perfect companion for you from the start!"

Frankenstein by My BookGPTs

Embark on a journey into 《Frankenstein; Or, The Modern Prometheus》. Ready for a deep dive into life's philosophies? Let's go!🎯

TuringGPT

The Turing Test, first named the imitation game by Alan Turing in 1950, is a measure of a machine's capacity to demonstrate intelligence that's either equal to or indistinguishable from human intelligence.

Air Purifier Servicer Assistant

Hello I'm Air Purifier Servicer Assistant! What would you like help with today?

Nabard

This GPT, fueled by NABARD's insights, transforms rural lending in India with custom models, better risk assessments, policy guidance, rural-specific financial products, and financial literacy support, aiming to enhance accessibility and growth in rural economies.

Viettel Internet Installation in Hải Dương

Reliable high-speed Viettel internet installation service in Hải Dương for individuals, households, and businesses, from only 165,000đ/month. Free installation, a free high-powered 4-port Wi-Fi modem, and 2-6 months of free service. For details, contact: 0986 431 566

Instructors in Global Economics and Finance

Multilingual support in Global Economics & Finance studies.