trapster-community

Modern honeypot supporting multiple services, realistic website cloning, and AI-powered features

Stars: 141

Trapster Community is a low-interaction honeypot designed for internal networks or credential capture. It monitors and detects suspicious activity, providing a deceptive security layer. Features include network service emulation, an asynchronous framework, easy configuration, expandable services, and an HTTP honeypot engine with AI capabilities that emulates websites from YAML configuration. Supported protocols include DNS, HTTP/HTTPS, FTP, LDAP, MSSQL, MySQL, PostgreSQL, RDP, SNMP, SSH, Telnet, VNC, and Rsync. Each module can generate several types of logs. Contributions are welcome under the AGPLv3+ license.

README:


Trapster Community is a low-interaction honeypot designed to be deployed on internal networks or to capture credentials. It is built to monitor and detect suspicious activities, providing a deceptive layer to network security.

Visit the Trapster website to learn more about our commercial version, which includes advanced features such as a pre-configured hardened OS, automatic deployment, webhooks, SIEM integration, and more.

- Deceptive Security: Mimics network services to lure and detect potential intruders.

- Asynchronous Framework: Uses Python's asyncio for efficient, non-blocking operations.

- Configuration Management: Easily configurable through trapster.conf.

- Expandable Services: Add and configure as many services as needed with minimal effort.

- HTTP Honeypot Engine with AI capabilities: Clone any website using YAML configuration, and use AI to generate responses to some HTTP requests.

| Protocol | Notes |
|---|---|
| FTP (21) | Captures FTP login attempts |
| SSH (22) | Captures SSH login attempts |
| Telnet (23) | Captures Telnet login attempts |
| DNS (53) | Works as a proxy to a real DNS server and logs queries |
| HTTP/HTTPS (80/443) | Copies a website; features a custom YAML configuration and templating engine |
| SNMP (161) | Logs SNMP queries |
| LDAP (389) | Captures LDAP login attempts and queries |
| Rsync (873) | Captures Rsync login attempts |
| MSSQL (1433) | Captures MSSQL login attempts |
| MySQL (3306) | Captures MySQL login attempts |
| RDP (3389) | Captures RDP login attempts |
| PostgreSQL (5432) | Captures PostgreSQL login attempts |
| VNC (5900) | Captures VNC login attempts |
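The services in the table above share a low-interaction pattern: accept a connection, present something plausible, record what the client sends, and close. The sketch below illustrates that pattern with asyncio streams. It is not Trapster's code: the `demo.*` log types, the `EVENTS` list, the fake banner, and the `run_demo` helper are all hypothetical names chosen for illustration.

```python
import asyncio
from datetime import datetime, timezone

# Hypothetical in-memory sink; a real deployment would write to a file or forward elsewhere.
EVENTS = []

def log_event(logtype, peer, data=b""):
    """Record one event; raw bytes are stored hex-encoded."""
    EVENTS.append({
        "logtype": logtype,
        "src_ip": peer[0],
        "src_port": peer[1],
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "data": data.hex(),
    })

async def handle_client(reader, writer):
    """Log the connection, send a fake banner, log the first chunk of data, then close."""
    peer = writer.get_extra_info("peername")
    log_event("demo.connection", peer)
    writer.write(b"220 FTP server ready\r\n")  # made-up banner, for illustration only
    await writer.drain()
    data = await reader.read(1024)
    if data:
        log_event("demo.data", peer, data)
    writer.close()
    await writer.wait_closed()

async def run_demo():
    """Start the listener on a free local port, connect once, and return the events."""
    EVENTS.clear()
    server = await asyncio.start_server(handle_client, "127.0.0.1", 0)
    port = server.sockets[0].getsockname()[1]
    async with server:
        reader, writer = await asyncio.open_connection("127.0.0.1", port)
        await reader.readline()          # consume the banner
        writer.write(b"USER admin\r\n")
        await writer.drain()
        await reader.read()              # returns once the server closes the connection
        writer.close()
        await writer.wait_closed()
    return list(EVENTS)
```

Calling `asyncio.run(run_demo())` returns one connection event and one data event. Because every handler is a coroutine, a single process can serve many such listeners without threads.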

Documentation: https://docs.trapster.cloud/community/

Quick start with a demo configuration file:

git clone https://github.com/0xBallpoint/trapster-community

cd trapster-community

docker compose up --build

For a quick start with AI responses for HTTP (port 8081), add a .env file and run docker compose up again:

AI_MODEL=o4-mini

AI_BASE_URL=https://api.openai.com/v1/

AI_API_KEY=<YOUR_OPENAI_API_KEY>

Each module can generate up to 4 types of logs: connection, data, login, and query.

- connection: Indicates that a connection has been made to the module.

- data: Represents raw data that has been sent, logged in HEX format. This data is unprocessed.

- login: Captures login attempts to the module. The data field is in JSON format and contains processed information.

- query: Logs data that has been processed and does not correspond to an authentication attempt. The data field is in JSON format and contains processed information.

You can then filter out the log types you don't need.
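As a rough illustration of working with these logs, the sketch below decodes a hex-encoded data field and filters events by type. The sample is an abbreviated copy of the https.login log shown in this README. The helper names `decode_data` and `keep` are hypothetical, and the filter assumes log types follow the `module.type` shape seen in that sample.

```python
import json
from urllib.parse import parse_qs

# Abbreviated copy of the https.login sample log from this README.
SAMPLE_LINE = json.dumps({
    "device": "trapster-1",
    "logtype": "https.login",
    "src_ip": "127.0.0.1",
    "data": "616a61783d3126757365726e616d653d61646d696e267365637265746b65793d61646d696e2672656469723d253246",
})

def decode_data(event):
    """Return the raw bytes behind the hex-encoded data field."""
    return bytes.fromhex(event.get("data", ""))

def keep(event, wanted=("login", "query")):
    """Keep only events whose log type ends with one of the wanted kinds."""
    kind = event["logtype"].rsplit(".", 1)[-1]
    return kind in wanted

event = json.loads(SAMPLE_LINE)
body = decode_data(event).decode("utf-8")  # the POST body sent to /logincheck
fields = parse_qs(body)                    # form fields as a dict of lists
```

Here `body` is the URL-encoded form submitted to the login page, so `fields["username"]` and `fields["secretkey"]` hold the submitted credentials.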

The HTTP module can emulate any website. It works with YAML configuration files to match requests using regular expressions, and can generate responses using either a template or an AI model.

The configurations are stored in trapster/data/http; each folder represents a website. An example of the functionality can be found at trapster/data/http/demo_api/config.yaml.

Structure:

- config.yaml: contains the configuration for the website.

- files/: contains the static files for the website.

- templates/: contains the templates for the website; Jinja2 syntax is supported.

Documentation: https://docs.trapster.cloud/community/modules/web/
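The matching idea described above (regular expressions select a rule, the rule renders a template, and unmatched requests go elsewhere) can be sketched in a few lines. This is not Trapster's engine or its config.yaml schema: the `RULES` list, `respond`, and `ai_fallback` are hypothetical, and the standard library's `string.Template` stands in for Jinja2 so the example has no dependencies.

```python
import re
from string import Template

# Hypothetical rules: (compiled regex, status code, response template).
RULES = [
    (re.compile(r"^/api/users/(?P<user_id>\d+)$"), 200,
     Template('{"id": $user_id, "name": "demo"}')),
    (re.compile(r"^/login$"), 401, Template("Invalid credentials")),
]

def ai_fallback(path):
    """Placeholder for a model call; returns a fixed body so the sketch runs offline."""
    return 404, f"Not found: {path}"

def respond(path):
    """Return (status, body) from the first rule whose regex matches, else the fallback."""
    for pattern, status, template in RULES:
        match = pattern.match(path)
        if match:
            # Named groups captured by the regex become template variables.
            return status, template.substitute(match.groupdict())
    return ai_fallback(path)
```

For instance, `respond("/api/users/42")` renders the first template with `user_id` set to `42`, while an unknown path falls through to the placeholder. Keeping known URLs on templates means only unexpected requests would incur the latency and cost of a model call.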

The default HTTPS server shows a FortiGate login page.

If someone tries to log in, you will get a log like this one:

{

"device":"trapster-1",

"logtype":"https.login",

"dst_ip":"127.0.0.1",

"dst_port":8443,

"src_ip":"127.0.0.1",

"src_port":45182,

"timestamp":"2025-02-28 18:53:18.498008",

"data":"616a61783d3126757365726e616d653d61646d696e267365637265746b65793d61646d696e2672656469723d253246",

"extra":{

"method":"POST",

"target":"/logincheck",

"headers":{

"host":"127.0.0.1:8443",

"connection":"keep-alive",

"content-length":"47",

"cache-control":"no-store, no-cache, must-revalidate",

"sec-ch-ua-platform":"\"Linux\"",

"pragma":"no-cache",

"sec-ch-ua":"\"Not(A:Brand\";v=\"99\", \"Google Chrome\";v=\"133\", \"Chromium\";v=\"133\"",

"sec-ch-ua-mobile":"?0",

"user-agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/132.0.0.0 Safari/537.3",

"if-modified-since":"Sat, 1 Jan 2000 00:00:00 GMT",

"content-type":"text/plain;charset=UTF-8",

"accept":"*/*",

"origin":"https://127.0.0.1:8443",

"sec-fetch-site":"same-origin",

"sec-fetch-mode":"cors",

"sec-fetch-dest":"empty",

"referer":"https://127.0.0.1:8443/login?redir=%2F",

"accept-encoding":"gzip, deflate, br, zstd",

"accept-language":"en-US,en;q=0.9"

},

"status_code":200,

"username":"admin",

"password":"admin"

}

}

To use AI, install the dependencies:

pip install trapster[ai]

# or locally

python3 -m pip install ".[ai]"

Then, set your environment variables. First, copy the example.env file:

cp example.env .env

Now, you can set:

AI_MODEL=

AI_BASE_URL=

AI_API_KEY=

AI_MEMORY_ENABLE=false

# AI_MEMORY_PATH=

AI_MEMORY_ENABLE and AI_MEMORY_PATH are optional. They let you persist data between sessions using a database. Sessions are keyed on the user's IP address and username.

By default, if you set AI_MEMORY_ENABLE=true, the database is stored at trapster/data/ai_memory.db.
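To make the session idea concrete, here is a minimal sketch of a store keyed on IP address and username. It assumes SQLite, which this README does not confirm, and the `sessions` table layout and the helper names are hypothetical rather than Trapster's actual schema.

```python
import json
import sqlite3

def open_memory(path=":memory:"):
    """Open (or create) the session store; the table layout is hypothetical."""
    db = sqlite3.connect(path)
    db.execute(
        "CREATE TABLE IF NOT EXISTS sessions ("
        "ip TEXT NOT NULL, username TEXT NOT NULL, history TEXT NOT NULL, "
        "PRIMARY KEY (ip, username))"
    )
    return db

def load_history(db, ip, username):
    """Return the stored message list for this (ip, username) pair, or an empty list."""
    row = db.execute(
        "SELECT history FROM sessions WHERE ip = ? AND username = ?",
        (ip, username),
    ).fetchone()
    return json.loads(row[0]) if row else []

def append_message(db, ip, username, message):
    """Append one message to the session and persist the updated history."""
    history = load_history(db, ip, username)
    history.append(message)
    db.execute(
        "INSERT OR REPLACE INTO sessions (ip, username, history) VALUES (?, ?, ?)",
        (ip, username, json.dumps(history)),
    )
    db.commit()
    return history
```

With this layout, the same attacker reconnecting from the same address with the same username would see a consistent fake environment, while a different username from that address starts a fresh session.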

You can also use OPENAI_API_KEY directly if you want to use the default o4-mini model:

export OPENAI_API_KEY=... && venv/bin/python3 main.py

Trapster can generate fake shell responses when a user connects over SSH.

To enable AI for SSH, allow users to connect with a username/password combination defined in the configuration file trapster.conf, for example:

...

"ssh": [

{

"port": 2222,

"version": "SSH-2.0-OpenSSH_8.1p1 Debian-1",

"banner": null,

"users": {

"guest":"guest",

"admin":"admin",

"ubuntu":"ubuntu",

"pi":"raspberry",

"debian":"password"

}

}

...
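One way to picture how such a users map could gate access is sketched below. How Trapster itself checks credentials is not shown in this README, so `is_allowed` is a hypothetical helper, and the `CONFIG` literal is a trimmed stand-in for the ssh entry above rather than a complete trapster.conf.

```python
import hmac
import json

# Trimmed stand-in for the ssh entry above; not a complete trapster.conf.
CONFIG = json.loads("""
{"ssh": [{"port": 2222, "users": {"guest": "guest", "pi": "raspberry"}}]}
""")

def is_allowed(service, username, password):
    """Return True only when the username exists and the password matches."""
    expected = service.get("users", {}).get(username)
    if expected is None:
        return False
    # Constant-time comparison of the configured and submitted passwords.
    return hmac.compare_digest(expected.encode(), password.encode())

ssh_service = CONFIG["ssh"][0]
```

Under this sketch, `is_allowed(ssh_service, "pi", "raspberry")` succeeds, while any other attempt would still be recorded as a login event without opening a session.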

To generate responses, you can use the ai field in the configuration. It generates a response for the corresponding URL, and you can change the prompt for each URL. This allows fast, pre-determined responses for the honeypot website, with AI responses only when the URL is unknown.

For example, the configuration can capture SQLi attempts so that only the responses to those attempts are generated by AI.

A full example is available in trapster/data/demo_ai.

Contributions are welcome! Please follow these steps:

- Fork the repository.

- Create a new branch (git checkout -b feature-branch).

- Make your changes.

- Commit your changes (git commit -m 'Add new feature').

- Push to the branch (git push origin feature-branch).

- Create a pull request.

Trapster is licensed under the GNU Affero General Public License v3 or later (AGPLv3+). See the LICENSE file for more details.


Similar Open Source Tools

trapster-community

Trapster Community is a low-interaction honeypot designed for internal networks or credential capture. It monitors and detects suspicious activities, providing deceptive security layer. Features include mimicking network services, asynchronous framework, easy configuration, expandable services, and HTTP honeypot engine with AI capabilities. Supported protocols include DNS, HTTP/HTTPS, FTP, LDAP, MSSQL, POSTGRES, RDP, SNMP, SSH, TELNET, VNC, and RSYNC. The tool generates various types of logs and offers HTTP engine with AI capabilities to emulate websites using YAML configuration. Contributions are welcome under AGPLv3+ license.

clewdr

Clewdr is a collaborative platform for data analysis and visualization. It allows users to upload datasets, perform various data analysis tasks, and create interactive visualizations. The platform supports multiple users working on the same project simultaneously, enabling real-time collaboration and sharing of insights. Clewdr is designed to streamline the data analysis process and facilitate communication among team members. With its user-friendly interface and powerful features, Clewdr is suitable for data scientists, analysts, researchers, and anyone working with data to gain valuable insights and make informed decisions.

tuui

TUUI is a desktop MCP client designed for accelerating AI adoption through the Model Context Protocol (MCP) and enabling cross-vendor LLM API orchestration. It is an LLM chat desktop application based on MCP, created using AI-generated components with strict syntax checks and naming conventions. The tool integrates AI tools via MCP, orchestrates LLM APIs, supports automated application testing, TypeScript, multilingual, layout management, global state management, and offers quick support through the GitHub community and official documentation.

notte

Notte is a web browser designed specifically for LLM agents, providing a language-first web navigation experience without the need for DOM/HTML parsing. It transforms websites into structured, navigable maps described in natural language, enabling users to interact with the web using natural language commands. By simplifying browser complexity, Notte allows LLM policies to focus on conversational reasoning and planning, reducing token usage, costs, and latency. The tool supports various language model providers and offers a reinforcement learning style action space and controls for full navigation control.

langserve

LangServe helps developers deploy `LangChain` runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. In addition, it provides a client that can be used to call into runnables deployed on a server. A JavaScript client is available in LangChain.js.

ai-gateway

LangDB AI Gateway is an open-source enterprise AI gateway built in Rust. It provides a unified interface to all LLMs using the OpenAI API format, focusing on high performance, enterprise readiness, and data control. The gateway offers features like comprehensive usage analytics, cost tracking, rate limiting, data ownership, and detailed logging. It supports various LLM providers and provides OpenAI-compatible endpoints for chat completions, model listing, embeddings generation, and image generation. Users can configure advanced settings, such as rate limiting, cost control, dynamic model routing, and observability with OpenTelemetry tracing. The gateway can be run with Docker Compose and integrated with MCP tools for server communication.

scylla

Scylla is an intelligent proxy pool tool designed for humanities, enabling users to extract content from the internet and build their own Large Language Models in the AI era. It features automatic proxy IP crawling and validation, an easy-to-use JSON API, a simple web-based user interface, HTTP forward proxy server, Scrapy and requests integration, and headless browser crawling. Users can start using Scylla with just one command, making it a versatile tool for various web scraping and content extraction tasks.

Gmail-MCP-Server

Gmail AutoAuth MCP Server is a Model Context Protocol (MCP) server designed for Gmail integration in Claude Desktop. It supports auto authentication and enables AI assistants to manage Gmail through natural language interactions. The server provides comprehensive features for sending emails, reading messages, managing labels, searching emails, and batch operations. It offers full support for international characters, email attachments, and Gmail API integration. Users can install and authenticate the server via Smithery or manually with Google Cloud Project credentials. The server supports both Desktop and Web application credentials, with global credential storage for convenience. It also includes Docker support and instructions for cloud server authentication.

snak

The starknet-agent-kit is a toolkit designed for creating AI agents that can interact with the Starknet blockchain. It provides support for multiple AI providers such as Anthropic, OpenAI, Google Gemini, and Ollama. The kit includes an NPM package and a NestJS server with a web interface. Users can run the server in different modes like Chat Mode for conversations, checking balances, executing transfers, and managing accounts, as well as Autonomous Mode for automated monitoring. Additionally, the kit offers a library mode for more advanced usage, allowing users to interact with the StarknetAgent class for executing specific actions. The kit aims to simplify the process of integrating AI capabilities with blockchain interactions.

concierge

Concierge AI is a tool that implements the Model Context Protocol (MCP) to connect AI agents to tools in a standardized way. It ensures deterministic results and reliable tool invocation by progressively disclosing only relevant tools. Users can scaffold new projects or wrap existing MCP servers easily. Concierge works at the MCP protocol level, dynamically changing which tools are returned based on the current workflow step. It allows users to group tools into steps, define transitions, share state between steps, enable semantic search, and run over HTTP. The tool offers features like progressive disclosure, enforced tool ordering, shared state, semantic search, protocol compatibility, session isolation, multiple transports, and a scaffolding CLI for quick project setup.

refact-lsp

Refact Agent is a small executable written in Rust as part of the Refact Agent project. It lives inside your IDE to keep AST and VecDB indexes up to date, supporting connection graphs between definitions and usages in popular programming languages. It functions as an LSP server, offering code completion, chat functionality, and integration with various tools like browsers, databases, and debuggers. Users can interact with it through a Text UI in the command line.

llm-web-api

LLM Web API is a tool that provides a web page to API interface for ChatGPT, allowing users to bypass Cloudflare challenges, switch models, and dynamically display supported models. It uses Playwright to control a fingerprint browser, simulating user operations to send requests to the OpenAI website and converting the responses into API interfaces. The API currently supports the OpenAI-compatible /v1/chat/completions API, accessible using OpenAI or other compatible clients.

aiohttp-cors

The aiohttp_cors library provides Cross Origin Resource Sharing (CORS) support for aiohttp, an asyncio-powered asynchronous HTTP server. CORS allows overriding the Same-origin policy for specific resources, enabling web pages to access resources from different origins. The library helps configure CORS settings for resources and routes in aiohttp applications, allowing control over origins, credentials passing, headers, and preflight requests.

context7

Context7 is a powerful tool for analyzing and visualizing data in various formats. It provides a user-friendly interface for exploring datasets, generating insights, and creating interactive visualizations. With advanced features such as data filtering, aggregation, and customization, Context7 is suitable for both beginners and experienced data analysts. The tool supports a wide range of data sources and formats, making it versatile for different use cases. Whether you are working on exploratory data analysis, data visualization, or data storytelling, Context7 can help you uncover valuable insights and communicate your findings effectively.

bot-on-anything

The 'bot-on-anything' repository allows developers to integrate various AI models into messaging applications, enabling the creation of intelligent chatbots. By configuring the connections between models and applications, developers can easily switch between multiple channels within a project. The architecture is highly scalable, allowing the reuse of algorithmic capabilities for each new application and model integration. Supported models include ChatGPT, GPT-3.0, New Bing, and Google Bard, while supported applications range from terminals and web platforms to messaging apps like WeChat, Telegram, QQ, and more. The repository provides detailed instructions for setting up the environment, configuring the models and channels, and running the chatbot for various tasks across different messaging platforms.

mcpdoc

The MCP LLMS-TXT Documentation Server is an open-source server that provides developers full control over tools used by applications like Cursor, Windsurf, and Claude Code/Desktop. It allows users to create a user-defined list of `llms.txt` files and use a `fetch_docs` tool to read URLs within these files, enabling auditing of tool calls and context returned. The server supports various applications and provides a way to connect to them, configure rules, and test tool calls for tasks related to documentation retrieval and processing.

For similar tasks

trapster-community

Trapster Community is a low-interaction honeypot designed for internal networks or credential capture. It monitors and detects suspicious activities, providing deceptive security layer. Features include mimicking network services, asynchronous framework, easy configuration, expandable services, and HTTP honeypot engine with AI capabilities. Supported protocols include DNS, HTTP/HTTPS, FTP, LDAP, MSSQL, POSTGRES, RDP, SNMP, SSH, TELNET, VNC, and RSYNC. The tool generates various types of logs and offers HTTP engine with AI capabilities to emulate websites using YAML configuration. Contributions are welcome under AGPLv3+ license.

semantic-router

Semantic Router is a superfast decision-making layer for your LLMs and agents. Rather than waiting for slow LLM generations to make tool-use decisions, we use the magic of semantic vector space to make those decisions — _routing_ our requests using _semantic_ meaning.

hass-ollama-conversation

The Ollama Conversation integration adds a conversation agent powered by Ollama in Home Assistant. This agent can be used in automations to query information provided by Home Assistant about your house, including areas, devices, and their states. Users can install the integration via HACS and configure settings such as API timeout, model selection, context size, maximum tokens, and other parameters to fine-tune the responses generated by the AI language model. Contributions to the project are welcome, and discussions can be held on the Home Assistant Community platform.

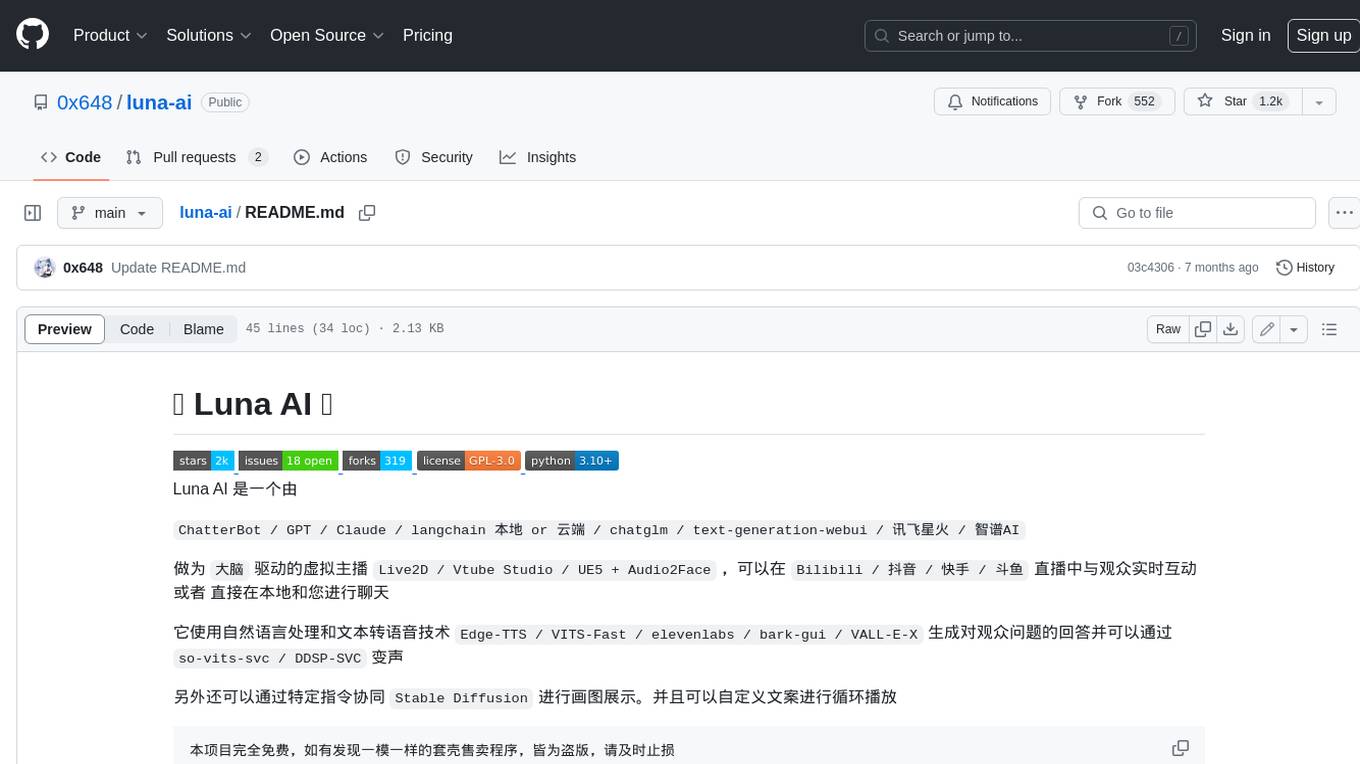

luna-ai

Luna AI is a virtual streamer driven by a 'brain' composed of ChatterBot, GPT, Claude, langchain, chatglm, text-generation-webui, 讯飞星火, 智谱AI. It can interact with viewers in real-time during live streams on platforms like Bilibili, Douyin, Kuaishou, Douyu, or chat with you locally. Luna AI uses natural language processing and text-to-speech technologies like Edge-TTS, VITS-Fast, elevenlabs, bark-gui, VALL-E-X to generate responses to viewer questions and can change voice using so-vits-svc, DDSP-SVC. It can also collaborate with Stable Diffusion for drawing displays and loop custom texts. This project is completely free, and any identical copycat selling programs are pirated, please stop them promptly.

KULLM

KULLM (구름) is a Korean Large Language Model developed by Korea University NLP & AI Lab and HIAI Research Institute. It is based on the upstage/SOLAR-10.7B-v1.0 model and has been fine-tuned for instruction. The model has been trained on 8×A100 GPUs and is capable of generating responses in Korean language. KULLM exhibits hallucination and repetition phenomena due to its decoding strategy. Users should be cautious as the model may produce inaccurate or harmful results. Performance may vary in benchmarks without a fixed system prompt.

cria

Cria is a Python library designed for running Large Language Models with minimal configuration. It provides an easy and concise way to interact with LLMs, offering advanced features such as custom models, streams, message history management, and running multiple models in parallel. Cria simplifies the process of using LLMs by providing a straightforward API that requires only a few lines of code to get started. It also handles model installation automatically, making it efficient and user-friendly for various natural language processing tasks.

beyondllm

Beyond LLM offers an all-in-one toolkit for experimentation, evaluation, and deployment of Retrieval-Augmented Generation (RAG) systems. It simplifies the process with automated integration, customizable evaluation metrics, and support for various Large Language Models (LLMs) tailored to specific needs. The aim is to reduce LLM hallucination risks and enhance reliability.

Groma

Groma is a grounded multimodal assistant that excels in region understanding and visual grounding. It can process user-defined region inputs and generate contextually grounded long-form responses. The tool presents a unique paradigm for multimodal large language models, focusing on visual tokenization for localization. Groma achieves state-of-the-art performance in referring expression comprehension benchmarks. The tool provides pretrained model weights and instructions for data preparation, training, inference, and evaluation. Users can customize training by starting from intermediate checkpoints. Groma is designed to handle tasks related to detection pretraining, alignment pretraining, instruction finetuning, instruction following, and more.

For similar jobs

ciso-assistant-community

CISO Assistant is a tool that helps organizations manage their cybersecurity posture and compliance. It provides a centralized platform for managing security controls, threats, and risks. CISO Assistant also includes a library of pre-built frameworks and tools to help organizations quickly and easily implement best practices.

PurpleLlama

Purple Llama is an umbrella project that aims to provide tools and evaluations to support responsible development and usage of generative AI models. It encompasses components for cybersecurity and input/output safeguards, with plans to expand in the future. The project emphasizes a collaborative approach, borrowing the concept of purple teaming from cybersecurity, to address potential risks and challenges posed by generative AI. Components within Purple Llama are licensed permissively to foster community collaboration and standardize the development of trust and safety tools for generative AI.

vpnfast.github.io

VPNFast is a lightweight and fast VPN service provider that offers secure and private internet access. With VPNFast, users can protect their online privacy, bypass geo-restrictions, and secure their internet connection from hackers and snoopers. The service provides high-speed servers in multiple locations worldwide, ensuring a reliable and seamless VPN experience for users. VPNFast is easy to use, with a user-friendly interface and simple setup process. Whether you're browsing the web, streaming content, or accessing sensitive information, VPNFast helps you stay safe and anonymous online.

taranis-ai

Taranis AI is an advanced Open-Source Intelligence (OSINT) tool that leverages Artificial Intelligence to revolutionize information gathering and situational analysis. It navigates through diverse data sources like websites to collect unstructured news articles, utilizing Natural Language Processing and Artificial Intelligence to enhance content quality. Analysts then refine these AI-augmented articles into structured reports that serve as the foundation for deliverables such as PDF files, which are ultimately published.

NightshadeAntidote

Nightshade Antidote is an image forensics tool used to analyze digital images for signs of manipulation or forgery. It implements several common techniques used in image forensics including metadata analysis, copy-move forgery detection, frequency domain analysis, and JPEG compression artifacts analysis. The tool takes an input image, performs analysis using the above techniques, and outputs a report summarizing the findings.

h4cker

This repository is a comprehensive collection of cybersecurity-related references, scripts, tools, code, and other resources. It is carefully curated and maintained by Omar Santos. The repository serves as a supplemental material provider to several books, video courses, and live training created by Omar Santos. It encompasses over 10,000 references that are instrumental for both offensive and defensive security professionals in honing their skills.

AIMr

AIMr is an AI aimbot tool written in Python that leverages modern technologies to achieve an undetected system with a pleasing appearance. It works on any game that uses human-shaped models. To optimize its performance, users should build OpenCV with CUDA. For Valorant, additional perks in the Discord and an Arduino Leonardo R3 are required.

admyral

Admyral is an open-source Cybersecurity Automation & Investigation Assistant that provides a unified console for investigations and incident handling, workflow automation creation, automatic alert investigation, and next step suggestions for analysts. It aims to tackle alert fatigue and automate security workflows effectively by offering features like workflow actions, AI actions, case management, alert handling, and more. Admyral combines security automation and case management to streamline incident response processes and improve overall security posture. The tool is open-source, transparent, and community-driven, allowing users to self-host, contribute, and collaborate on integrations and features.