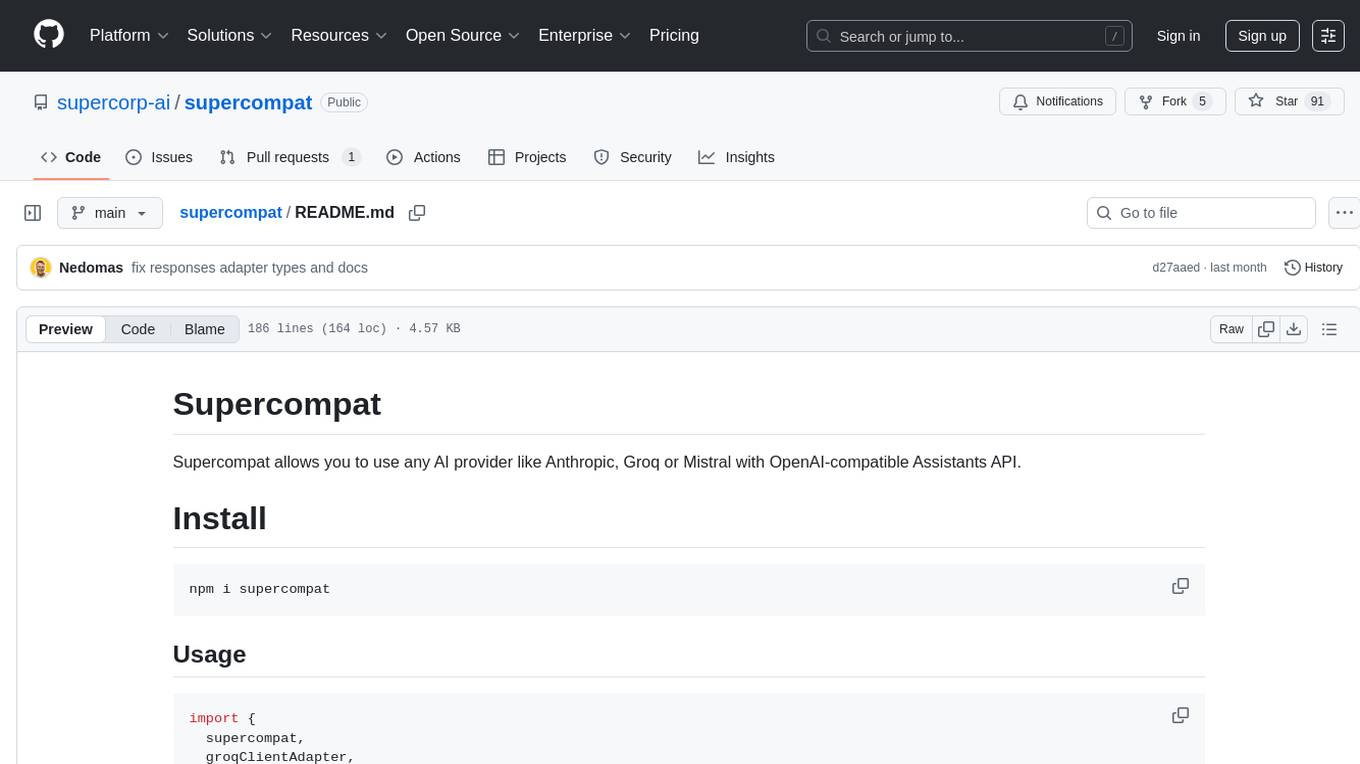

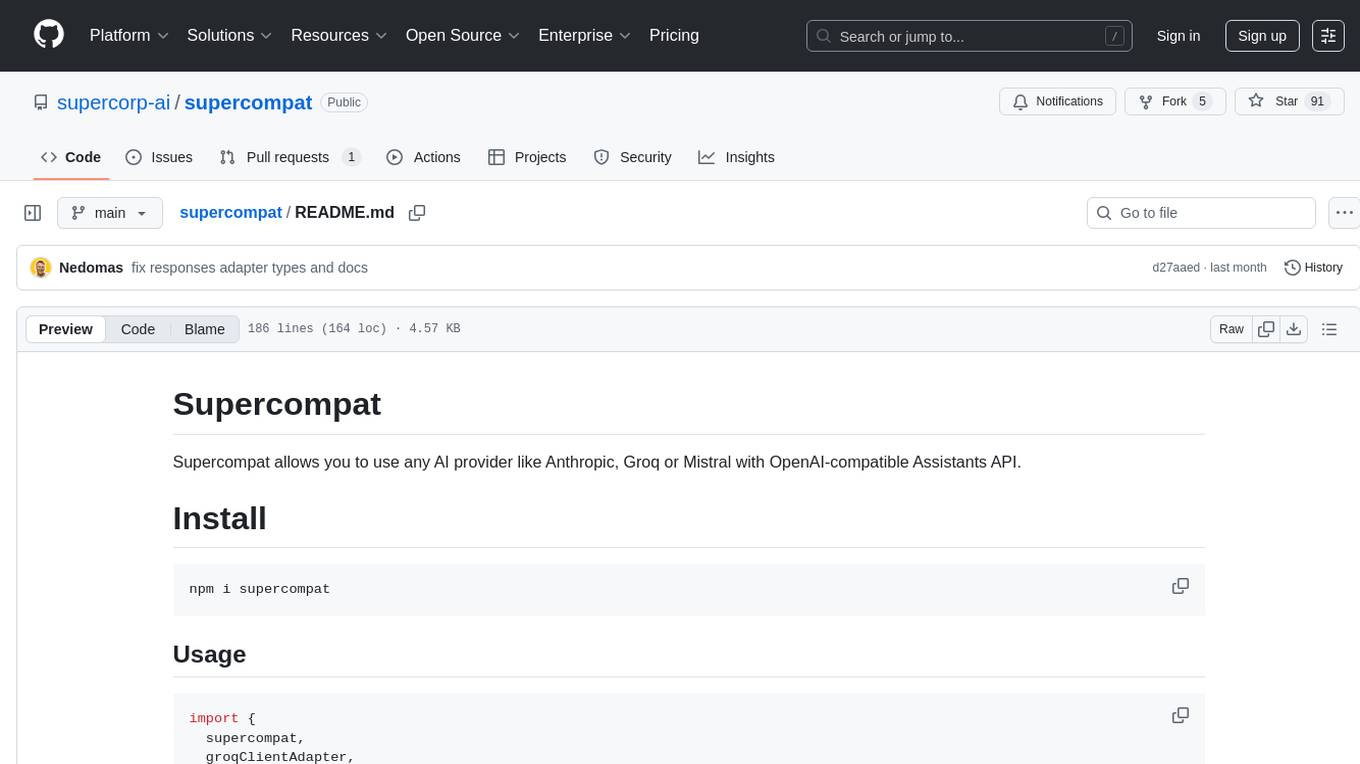

supercompat

Supercompat lets you use any AI provider, such as Anthropic, Groq, or Mistral, with the OpenAI-compatible Assistants API.

Stars: 91

Supercompat is a tool that enables users to integrate various AI providers like Anthropic, Groq, or Mistral with the OpenAI-compatible Assistants API. It provides adapters for different AI services and storage options, allowing seamless communication between the user's application and the AI providers. With Supercompat, developers can easily leverage the capabilities of multiple AI services within their projects, enhancing the functionality and intelligence of their applications.
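The split into a client adapter, a storage adapter, and a run adapter can be pictured with a small self-contained sketch. Every type and function name below (`ClientAdapter`, `StorageAdapter`, `RunAdapter`, `composeAdapters`, `memoryStorage`, `echoClient`) is hypothetical and chosen for illustration; this is not supercompat's internal design, only one way such a composition might look.

```typescript
// Illustrative only: these types are hypothetical, not supercompat's internals.
type Role = 'user' | 'assistant'
interface ChatMessage { role: Role; content: string }

// Client adapter: hides one provider's SDK behind a common "complete" call.
interface ClientAdapter {
  complete(messages: ChatMessage[]): Promise<string>
}

// Storage adapter: persists threads and messages (a database in practice).
interface StorageAdapter {
  createThread(): string
  addMessage(threadId: string, message: ChatMessage): void
  listMessages(threadId: string): ChatMessage[]
}

// Run adapter: decides how stored history is turned into a new reply.
interface RunAdapter {
  run(client: ClientAdapter, history: ChatMessage[]): Promise<ChatMessage>
}

// In-memory storage standing in for a real database.
function memoryStorage(): StorageAdapter {
  const threads = new Map<string, ChatMessage[]>()
  return {
    createThread() {
      const id = `thread_${threads.size + 1}`
      threads.set(id, [])
      return id
    },
    addMessage(threadId, message) {
      const messages = threads.get(threadId)
      if (!messages) throw new Error(`Unknown thread: ${threadId}`)
      messages.push(message)
    },
    listMessages(threadId) {
      return [...(threads.get(threadId) ?? [])]
    },
  }
}

// Fake provider that echoes the last message; a real adapter would call an SDK.
const echoClient: ClientAdapter = {
  async complete(messages) {
    const last = messages[messages.length - 1]
    return `echo: ${last?.content ?? ''}`
  },
}

// Run adapter that sends the whole history in a single completion request.
const singleCompletionRun: RunAdapter = {
  async run(client, history) {
    return { role: 'assistant', content: await client.complete(history) }
  },
}

// Compose the three adapters into one small thread-oriented facade.
function composeAdapters(parts: {
  client: ClientAdapter
  storage: StorageAdapter
  runAdapter: RunAdapter
}) {
  const { client, storage, runAdapter } = parts
  return {
    createThread: () => storage.createThread(),
    async sendMessage(threadId: string, content: string): Promise<ChatMessage> {
      storage.addMessage(threadId, { role: 'user', content })
      const reply = await runAdapter.run(client, storage.listMessages(threadId))
      storage.addMessage(threadId, reply)
      return reply
    },
    listMessages: (threadId: string) => storage.listMessages(threadId),
  }
}
```

Swapping `echoClient` for another `ClientAdapter`, or `memoryStorage()` for a database-backed `StorageAdapter`, leaves the calling code unchanged, which is the point of keeping the three roles separate.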

README:

```shell
npm i supercompat
```

```typescript
import {
  supercompat,
  groqClientAdapter,
  openaiClientAdapter,
  prismaStorageAdapter,
  openaiResponsesStorageAdapter,
  completionsRunAdapter,
  responsesRunAdapter,
} from 'supercompat'
import Groq from 'groq-sdk'
import OpenAI from 'openai'
import { PrismaClient } from '@prisma/client'

// Prisma client generated from the schema shown further down
const prisma = new PrismaClient()

// Groq with Prisma storage and the Completions adapter
const client = supercompat({
  client: groqClientAdapter({ groq: new Groq() }),
  storage: prismaStorageAdapter({ prisma }),
  runAdapter: completionsRunAdapter(),
})

// OpenAI Responses API storage and run adapter
const openai = new OpenAI({ apiKey: process.env.OPENAI_API_KEY })

const responsesClient = supercompat({
  client: openaiClientAdapter({ openai }),
  storage: openaiResponsesStorageAdapter({ openai }),
  runAdapter: responsesRunAdapter(),
})

// Assumption: the thread is created first through the same Assistants-style client
const thread = await responsesClient.beta.threads.create()

const message = await responsesClient.beta.threads.messages.create(thread.id, {
  role: 'user',
  content: 'Who won the world series in 2020?',
})
```

The Prisma schema (`schema.prisma`) for use with `prismaStorageAdapter`:

```prisma
model Thread {
  id          String    @id @default(dbgenerated("gen_random_uuid()")) @db.Uuid
  assistantId String    @db.Uuid
  assistant   Assistant @relation(fields: [assistantId], references: [id], onDelete: Cascade)
  metadata    Json?
  messages    Message[]
  runs        Run[]
  runSteps    RunStep[]
  createdAt   DateTime  @default(now()) @db.Timestamptz(6)
  updatedAt   DateTime  @updatedAt @db.Timestamptz(6)

  @@index([assistantId])
  @@index([createdAt(sort: Desc)])
}

enum MessageRole {
  USER
  ASSISTANT
}

enum MessageStatus {
  IN_PROGRESS
  INCOMPLETE
  COMPLETED
}

model Message {
  id                String        @id @default(dbgenerated("gen_random_uuid()")) @db.Uuid
  threadId          String        @db.Uuid
  thread            Thread        @relation(fields: [threadId], references: [id], onDelete: Cascade)
  role              MessageRole
  content           Json
  status            MessageStatus @default(COMPLETED)
  assistantId       String?       @db.Uuid
  assistant         Assistant?    @relation(fields: [assistantId], references: [id], onDelete: Cascade)
  runId             String?       @db.Uuid
  run               Run?          @relation(fields: [runId], references: [id], onDelete: Cascade)
  completedAt       DateTime?     @db.Timestamptz(6)
  incompleteAt      DateTime?     @db.Timestamptz(6)
  incompleteDetails Json?
  attachments       Json[]        @default([])
  metadata          Json?
  toolCalls         Json?
  createdAt         DateTime      @default(now()) @db.Timestamptz(6)
  updatedAt         DateTime      @updatedAt @db.Timestamptz(6)

  @@index([threadId])
  @@index([createdAt(sort: Desc)])
}

enum RunStatus {
  QUEUED
  IN_PROGRESS
  REQUIRES_ACTION
  CANCELLING
  CANCELLED
  FAILED
  COMPLETED
  EXPIRED
}

model Run {
  id                 String    @id @default(dbgenerated("gen_random_uuid()")) @db.Uuid
  threadId           String    @db.Uuid
  thread             Thread    @relation(fields: [threadId], references: [id], onDelete: Cascade)
  assistantId        String    @db.Uuid
  assistant          Assistant @relation(fields: [assistantId], references: [id], onDelete: Cascade)
  status             RunStatus
  requiredAction     Json?
  lastError          Json?
  expiresAt          Int
  startedAt          Int?
  cancelledAt        Int?
  failedAt           Int?
  completedAt        Int?
  model              String
  instructions       String
  tools              Json[]    @default([])
  metadata           Json?
  usage              Json?
  truncationStrategy Json      @default("{ \"type\": \"auto\" }")
  responseFormat     Json      @default("{ \"type\": \"text\" }")
  runSteps           RunStep[]
  messages           Message[]
  createdAt          DateTime  @default(now()) @db.Timestamptz(6)
  updatedAt          DateTime  @updatedAt @db.Timestamptz(6)
}

enum RunStepType {
  MESSAGE_CREATION
  TOOL_CALLS
}

enum RunStepStatus {
  IN_PROGRESS
  CANCELLED
  FAILED
  COMPLETED
  EXPIRED
}

model RunStep {
  id          String        @id @default(dbgenerated("gen_random_uuid()")) @db.Uuid
  threadId    String        @db.Uuid
  thread      Thread        @relation(fields: [threadId], references: [id], onDelete: Cascade)
  assistantId String        @db.Uuid
  assistant   Assistant     @relation(fields: [assistantId], references: [id], onDelete: Cascade)
  runId       String        @db.Uuid
  run         Run           @relation(fields: [runId], references: [id], onDelete: Cascade)
  type        RunStepType
  status      RunStepStatus
  stepDetails Json
  lastError   Json?
  expiredAt   Int?
  cancelledAt Int?
  failedAt    Int?
  completedAt Int?
  metadata    Json?
  usage       Json?
  createdAt   DateTime      @default(now()) @db.Timestamptz(6)
  updatedAt   DateTime      @updatedAt @db.Timestamptz(6)

  @@index([threadId, runId, type, status])
  @@index([createdAt(sort: Asc)])
}

model Assistant {
  id        String    @id @default(dbgenerated("gen_random_uuid()")) @db.Uuid
  threads   Thread[]
  runs      Run[]
  runSteps  RunStep[]
  messages  Message[]
  createdAt DateTime  @default(now()) @db.Timestamptz(6)
  updatedAt DateTime  @updatedAt @db.Timestamptz(6)
}
```
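The schema stores run statuses as uppercase enum values and mixes `Int` columns (Unix seconds) with `DateTime` columns, while Assistants API run objects use lowercase statuses, snake_case field names, and Unix-second timestamps. The sketch below shows one way a stored row might be mapped to that shape. `StoredRun`, `ApiRun`, and `serializeRun` are hypothetical names covering only a subset of fields; this is not supercompat's actual serializer.

```typescript
// Uppercase values mirror the RunStatus enum in the Prisma schema.
type StoredRunStatus =
  | 'QUEUED'
  | 'IN_PROGRESS'
  | 'REQUIRES_ACTION'
  | 'CANCELLING'
  | 'CANCELLED'
  | 'FAILED'
  | 'COMPLETED'
  | 'EXPIRED'

// Hypothetical subset of a Run row; field names follow the schema.
interface StoredRun {
  id: string
  threadId: string
  assistantId: string
  status: StoredRunStatus
  model: string
  instructions: string
  expiresAt: number // Int column, Unix seconds
  completedAt: number | null // Int column, Unix seconds
  createdAt: Date // DateTime column
}

// Subset of the snake_case run object shape used by the Assistants API.
interface ApiRun {
  id: string
  object: 'thread.run'
  thread_id: string
  assistant_id: string
  status: Lowercase<StoredRunStatus>
  model: string
  instructions: string
  expires_at: number
  completed_at: number | null
  created_at: number
}

// Map a stored row to the API shape: rename fields, lowercase the status,
// and convert the DateTime column to Unix seconds.
function serializeRun(run: StoredRun): ApiRun {
  return {
    id: run.id,
    object: 'thread.run',
    thread_id: run.threadId,
    assistant_id: run.assistantId,
    status: run.status.toLowerCase() as Lowercase<StoredRunStatus>,
    model: run.model,
    instructions: run.instructions,
    expires_at: run.expiresAt,
    completed_at: run.completedAt,
    created_at: Math.floor(run.createdAt.getTime() / 1000),
  }
}
```

Keeping this mapping in one function means the database can use idiomatic Prisma naming while callers still see the field names they expect from the OpenAI SDK.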

Alternative AI tools for supercompat

Similar Open Source Tools

island-ai

island-ai is a TypeScript toolkit tailored for developers engaging with structured outputs from Large Language Models. It offers streamlined processes for handling, parsing, streaming, and leveraging AI-generated data across various applications. The toolkit includes packages like zod-stream for interfacing with LLM streams, stream-hooks for integrating streaming JSON data into React applications, and schema-stream for JSON streaming parsing based on Zod schemas. Additionally, related packages like @instructor-ai/instructor-js focus on data validation and retry mechanisms, enhancing the reliability of data processing workflows.

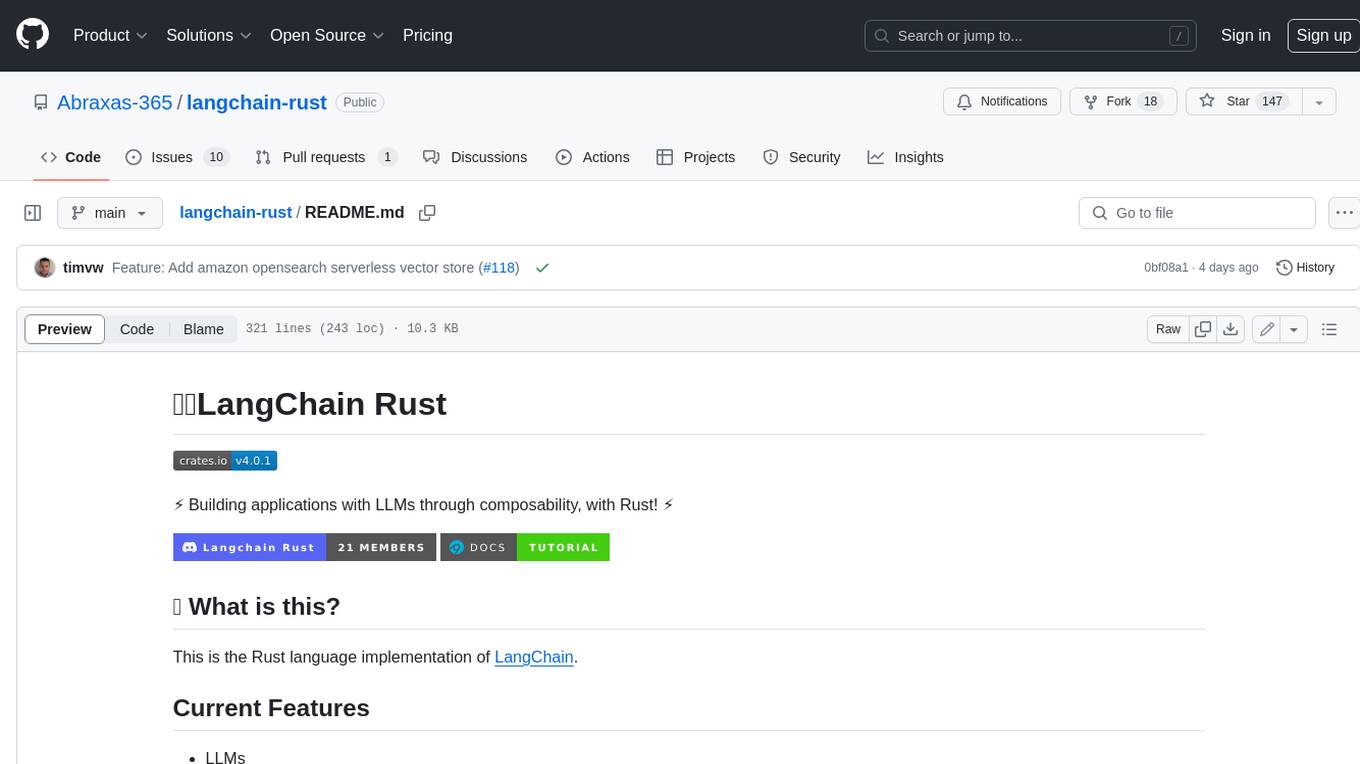

langchain-rust

LangChain Rust is a library for building applications with Large Language Models (LLMs) through composability. It provides a set of tools and components that can be used to create conversational agents, document loaders, and other applications that leverage LLMs. LangChain Rust supports a variety of LLMs, including OpenAI, Azure OpenAI, Ollama, and Anthropic Claude. It also supports a variety of embeddings, vector stores, and document loaders. LangChain Rust is designed to be easy to use and extensible, making it a great choice for developers who want to build applications with LLMs.

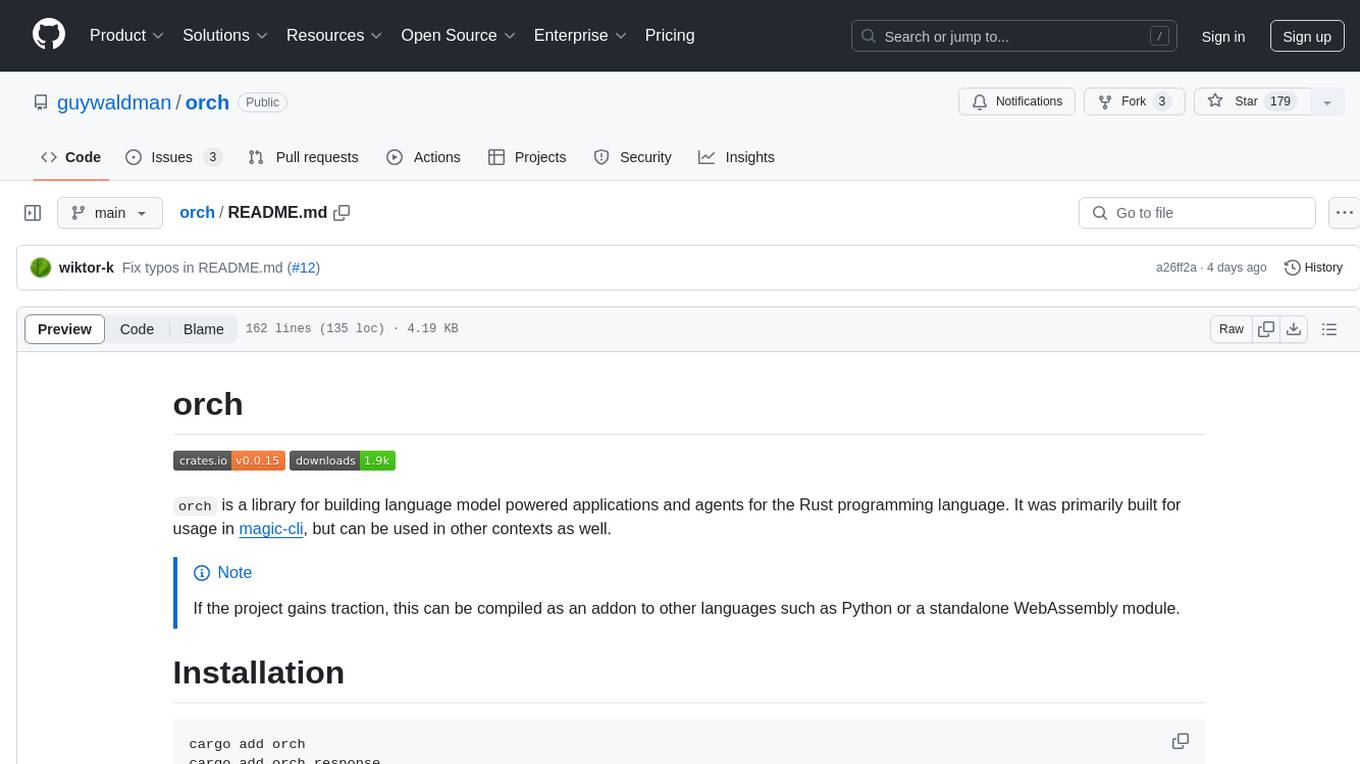

orch

orch is a library for building language model powered applications and agents for the Rust programming language. It can be used for tasks such as text generation, streaming text generation, structured data generation, and embedding generation. The library provides functionalities for executing various language model tasks and can be integrated into different applications and contexts. It offers flexibility for developers to create language model-powered features and applications in Rust.

nb_utils

nb_utils is a Flutter package that provides a collection of useful methods, extensions, widgets, and utilities to simplify Flutter app development. It includes features like shared preferences, text styles, decorations, widgets, extensions for strings, colors, build context, date time, device, numbers, lists, scroll controllers, system methods, network utils, JWT decoding, and custom dialogs. The package aims to enhance productivity and streamline common tasks in Flutter development.

tambo

tambo ai is a React library that simplifies the process of building AI assistants and agents in React by handling thread management, state persistence, streaming responses, AI orchestration, and providing a compatible React UI library. It eliminates React boilerplate for AI features, allowing developers to focus on creating exceptional user experiences with clean React hooks that seamlessly integrate with their codebase.

halbot

halbot is a Telegram bot that uses ChatGPT, Gemini, Mistral, and other AI engines to provide a variety of services, including text generation, translation, summarization, and question answering. It is easy to use and extend, and it can be integrated into your own projects. halbot is open source and free to use.

go-anthropic

Go-anthropic is an unofficial API wrapper for Anthropic Claude in Go. It supports completions, streaming completions, messages, streaming messages, vision, and tool use. Users can interact with the Anthropic Claude API to generate text completions, analyze messages, process images, and utilize specific tools for various tasks.

chrome-ai

Chrome AI is a Vercel AI provider for Chrome's built-in model (Gemini Nano). It allows users to create language models using Chrome's AI capabilities. The tool is under development and may contain errors and frequent changes. Users can install the ChromeAI provider module and use it to generate text, stream text, and generate objects. To enable AI in Chrome, users need to have Chrome version 127 or greater and turn on specific flags. The tool is designed for developers and researchers interested in experimenting with Chrome's built-in AI features.

SwiftAgent

SwiftAgent is a type-safe, declarative framework for building AI agents in Swift, built on Apple FoundationModels. It allows users to compose agents by combining Steps in a declarative syntax similar to SwiftUI. The framework provides compile-time checked input/output types, native Apple AI integration, structured output generation, and built-in security features such as permission, sandbox, and guardrail systems. SwiftAgent is extensible with MCP integration, distributed agents, and a skills system. Users can install SwiftAgent with Swift 6.2+ on iOS 26+, macOS 26+, or Xcode 26+ using Swift Package Manager.

lagent

Lagent is a lightweight open-source framework that allows users to efficiently build large language model (LLM)-based agents. It also provides some typical tools to augment LLMs.

x

Ant Design X is a tool for crafting AI-driven interfaces effortlessly. It is built on the best practices of enterprise-level AI products, offering flexible and diverse atomic components for various AI dialogue scenarios. The tool provides out-of-the-box model integration with inference services compatible with OpenAI standards. It also enables efficient management of conversation data flows, supports rich template options, complete TypeScript support, and advanced theme customization. Ant Design X is designed to enhance development efficiency and deliver exceptional AI interaction experiences.

ai

The Vercel AI SDK is a library for building AI-powered streaming text and chat UIs. It provides React, Svelte, Vue, and Solid helpers for streaming text responses and building chat and completion UIs. The SDK also includes a React Server Components API for streaming Generative UI and first-class support for various AI providers such as OpenAI, Anthropic, Mistral, Perplexity, AWS Bedrock, Azure, Google Gemini, Hugging Face, Fireworks, Cohere, LangChain, Replicate, Ollama, and more. Additionally, it offers Node.js, Serverless, and Edge Runtime support, as well as lifecycle callbacks for saving completed streaming responses to a database in the same request.

dashscope-sdk

DashScope SDK for .NET is an unofficial SDK maintained by Cnblogs, providing various APIs for text embedding, generation, multimodal generation, image synthesis, and more. Users can interact with the SDK to perform tasks such as text completion, chat generation, function calls, file operations, and more. The project is under active development, and users are advised to check the Release Notes before upgrading.

mediapipe-rs

MediaPipe-rs is a Rust library designed for MediaPipe tasks on WasmEdge WASI-NN. It offers easy-to-use low-code APIs similar to mediapipe-python, with low overhead and flexibility for custom media input. The library supports various tasks like object detection, image classification, gesture recognition, and more, including TfLite models, TF Hub models, and custom models. Users can create task instances, run sessions for pre-processing, inference, and post-processing, and speed up processing by reusing sessions. The library also provides support for audio tasks using audio data from symphonia, ffmpeg, or raw audio. Users can choose between CPU, GPU, or TPU devices for processing.

openai-scala-client

This is a no-nonsense async Scala client for OpenAI API supporting all the available endpoints and params including streaming, chat completion, vision, and voice routines. It provides a single service called OpenAIService that supports various calls such as Models, Completions, Chat Completions, Edits, Images, Embeddings, Batches, Audio, Files, Fine-tunes, Moderations, Assistants, Threads, Thread Messages, Runs, Run Steps, Vector Stores, Vector Store Files, and Vector Store File Batches. The library aims to be self-contained with minimal dependencies and supports API-compatible providers like Azure OpenAI, Azure AI, Anthropic, Google Vertex AI, Groq, Grok, Fireworks AI, OctoAI, TogetherAI, Cerebras, Mistral, Deepseek, Ollama, FastChat, and more.

For similar tasks

semantic-router

Semantic Router is a superfast decision-making layer for your LLMs and agents. Rather than waiting for slow LLM generations to make tool-use decisions, we use the magic of semantic vector space to make those decisions — _routing_ our requests using _semantic_ meaning.

hass-ollama-conversation

The Ollama Conversation integration adds a conversation agent powered by Ollama in Home Assistant. This agent can be used in automations to query information provided by Home Assistant about your house, including areas, devices, and their states. Users can install the integration via HACS and configure settings such as API timeout, model selection, context size, maximum tokens, and other parameters to fine-tune the responses generated by the AI language model. Contributions to the project are welcome, and discussions can be held on the Home Assistant Community platform.

luna-ai

Luna AI is a virtual streamer driven by a 'brain' composed of ChatterBot, GPT, Claude, langchain, chatglm, text-generation-webui, iFlytek Spark (讯飞星火), and Zhipu AI (智谱AI). It can interact with viewers in real time during live streams on platforms like Bilibili, Douyin, Kuaishou, and Douyu, or chat with you locally. Luna AI uses natural language processing and text-to-speech technologies like Edge-TTS, VITS-Fast, elevenlabs, bark-gui, and VALL-E-X to generate responses to viewer questions, and can change its voice using so-vits-svc or DDSP-SVC. It can also work with Stable Diffusion to display drawings and can loop custom texts. The project is completely free; any identical copy being sold is pirated.

KULLM

KULLM (구름) is a Korean Large Language Model developed by Korea University NLP & AI Lab and HIAI Research Institute. It is based on the upstage/SOLAR-10.7B-v1.0 model and has been instruction-tuned. The model was trained on 8×A100 GPUs and generates responses in Korean. KULLM exhibits hallucination and repetition due to its decoding strategy, so users should be cautious: the model may produce inaccurate or harmful results. Benchmark performance may vary without a fixed system prompt.

cria

Cria is a Python library designed for running Large Language Models with minimal configuration. It provides an easy and concise way to interact with LLMs, offering advanced features such as custom models, streams, message history management, and running multiple models in parallel. Cria simplifies the process of using LLMs by providing a straightforward API that requires only a few lines of code to get started. It also handles model installation automatically, making it efficient and user-friendly for various natural language processing tasks.

beyondllm

Beyond LLM offers an all-in-one toolkit for experimentation, evaluation, and deployment of Retrieval-Augmented Generation (RAG) systems. It simplifies the process with automated integration, customizable evaluation metrics, and support for various Large Language Models (LLMs) tailored to specific needs. The aim is to reduce LLM hallucination risks and enhance reliability.

Groma

Groma is a grounded multimodal assistant that excels in region understanding and visual grounding. It can process user-defined region inputs and generate contextually grounded long-form responses. The tool presents a unique paradigm for multimodal large language models, focusing on visual tokenization for localization. Groma achieves state-of-the-art performance in referring expression comprehension benchmarks. The tool provides pretrained model weights and instructions for data preparation, training, inference, and evaluation. Users can customize training by starting from intermediate checkpoints. Groma is designed to handle tasks related to detection pretraining, alignment pretraining, instruction finetuning, instruction following, and more.

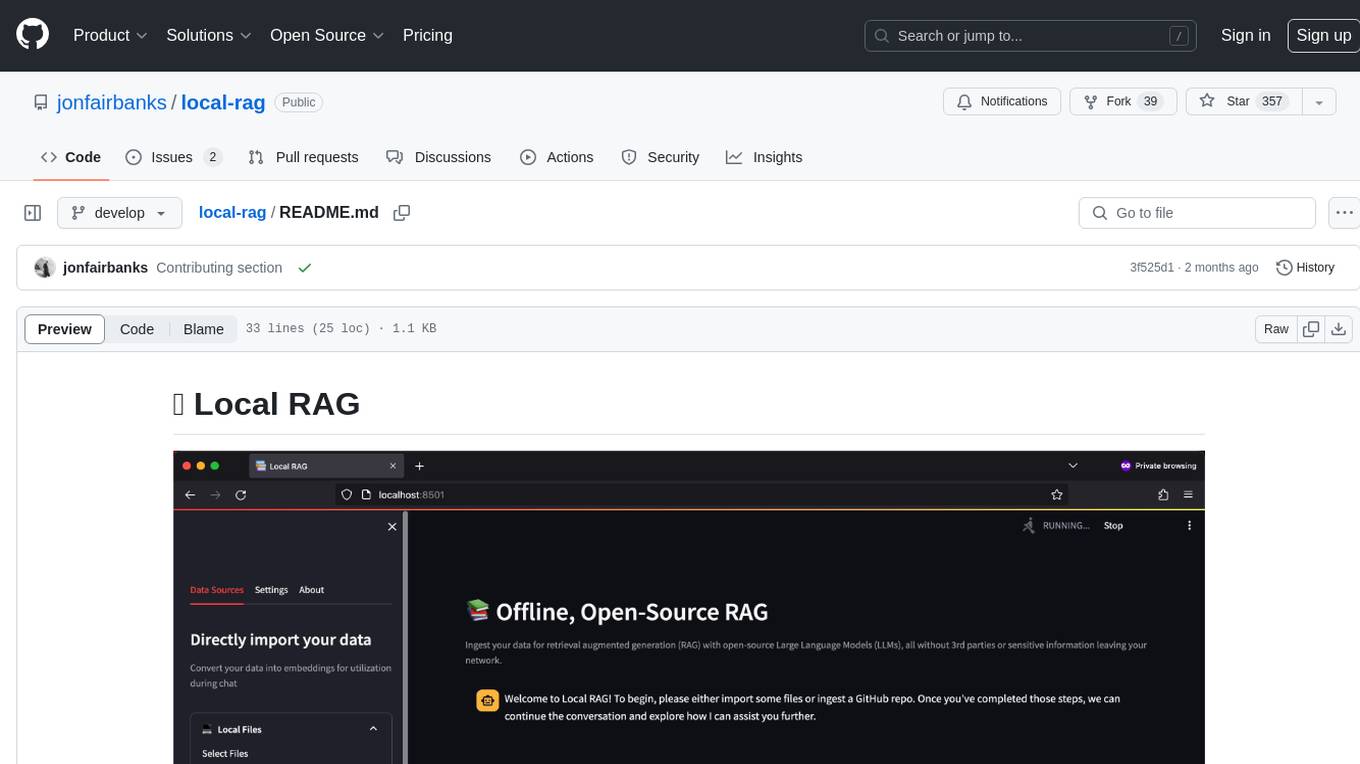

local-rag

Local RAG is an offline, open-source tool that allows users to ingest files for retrieval augmented generation (RAG) using large language models (LLMs) without relying on third parties or exposing sensitive data. It supports offline embeddings and LLMs, multiple sources including local files, GitHub repos, and websites, streaming responses, conversational memory, and chat export. Users can set up and deploy the app, learn how to use Local RAG, explore the RAG pipeline, check planned features, known bugs and issues, access additional resources, and contribute to the project.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per-user and per-organization basis
* Block or redact requests containing PII
* Improve LLM reliability with failovers, retries and caching
* Distribute API keys with rate limits and cost limits for internal development/production use cases
* Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.