gigachat

Библиотека для доступа к GigaChat

Stars: 74

GigaChat is a Python library that allows GigaChain to interact with GigaChat, a neural network model capable of engaging in dialogue, writing code, creating texts, and images on demand. Data exchange with the service is facilitated through the GigaChat API. The library supports processing token streaming, as well as working in synchronous or asynchronous mode. It enables precise token counting in text using the GigaChat API.

README:

GigaChat — это Python-библиотека для работы с REST API GigaChat. Она является частью GigaChain и входит в состав langchain-gigachat — партнерского пакета opensource-фреймворка LangChain.

Библиотека управляет авторизацией запросов и предоставляет все необходимые методы для работы с API. Кроме этого она поддерживает:

- обработку потоковой передачи токенов;

- работу с функциями;

- создание эмбеддингов;

- работу в синхронном или в асинхронном режиме.

[!TIP] Примеры работы с библиотекой gigachat — в папке examples.

Для установки библиотеки используйте менеджер пакетов pip:

pip install gigachatДля работы с библиотекой вам понадобится ключ авторизации API.

Чтобы получить ключ авторизации:

- Создайте проект GigaChat API в личном кабинете Studio.

- В интерфейсе проекта, в левой панели выберите раздел Настройки API.

- Нажмите кнопку Получить ключ.

В открывшемся окне скопируйте и сохраните значение поля Authorization Key. Ключ авторизации, отображается только один раз и не хранятся в личном кабинете. При компрометации или утере ключа авторизации вы можете сгенерировать его повторно.

Подробно о том, как создать проект GigaChat API — в официальной документации, в разделах Быстрый старт для физических лиц и Быстрый старт для ИП и юридических лиц.

Передайте полученный ключ авторизации в параметре credentials при инициализации объекта GigaChat.

Пример показывает как отправить простой запрос на генерацию с помощью библиотеки GigaChat:

from gigachat import GigaChat

# Укажите ключ авторизации, полученный в личном кабинете, в интерфейсе проекта GigaChat API

with GigaChat(credentials="ваш_ключ_авторизации", verify_ssl_certs=False) as giga:

response = giga.chat("Какие факторы влияют на стоимость страховки на дом?")

print(response.choices[0].message.content)[!NOTE] Этот и другие примеры работы с библиотекой gigachat — в папке examples.

В таблице описаны параметры, которые можно передать при инициализации объекта GigaChat:

| Параметр | Обязательный | Описание |

|---|---|---|

credentials |

да | Ключ авторизации для обмена сообщениями с GigaChat API. Ключ авторизации содержит информацию о версии API, к которой выполняются запросы. Если вы используете версию API для ИП или юрлиц, укажите это явно в параметре scope

|

verify_ssl_certs |

нет | Отключение проверки ssl-сертификатов. Для обращения к GigaChat API нужно установить корневой сертификат НУЦ Минцифры. Используйте параметр ответственно, так как отключение проверки сертификатов снижает безопасность обмена данными |

scope |

нет | Версия API, к которой будет выполнен запрос. По умолчанию запросы передаются в версию для физических лиц. Возможные значения:

|

model |

нет | необязательный параметр, в котором можно явно задать модель GigaChat. Вы можете посмотреть список доступных моделей с помощью метода get_models(), который выполняет запрос GET /models.Стоимость запросов к разным моделям отличается. Подробную информацию о тарификации запросов к той или иной модели вы ищите в официальной документации |

base_url |

нет | Адрес API. По умолчанию запросы отправляются по адресу https://gigachat.devices.sberbank.ru/api/v1/, но если вы хотите использовать модели в раннем доступе, укажите адрес https://gigachat-preview.devices.sberbank.ru/api/v1

|

![TIP] Чтобы не указывать параметры при каждой инициализации, задайте их в переменных окружения.

Для авторизации запросов, кроме ключа, полученного в личном кабинете, вы можете использовать:

- имя пользователя и пароль для доступа к сервису;

- сертификаты TLS;

- токен доступа (access token), полученный в обмен на ключ авторизации в запросе

POST /api/v2/oauth.

Для этого передайте соответствующие параметры при инициализации.

Пример авторизации с помощью логина и пароля:

giga = GigaChat(

base_url="https://gigachat.devices.sberbank.ru/api/v1",

user="имя_пользоваеля",

password="пароль",

)Авторизация с помощью сертификатов по протоколу TLS (mTLS):

giga = GigaChat(

base_url="https://gigachat.devices.sberbank.ru/api/v1",

ca_bundle_file="certs/ca.pem", # chain_pem.txt

cert_file="certs/tls.pem", # published_pem.txt

key_file="certs/tls.key",

key_file_password="123456",

ssl_context=context # optional ssl.SSLContext instance

)Авторизация с помощью токена доступа:

giga = GigaChat(

access_token="ваш_токен_доступа",

)![NOTE] Токен действителен в течение 30 минут.

По умолчанию, библиотека GigaChat получает токен доступа при первом запросе к API.

Если вам нужно получить токен и авторизоваться до выполнения запроса, инициализируйте объект GigaChat и вызовите метод get_token().

giga = GigaChat(

base_url="https://gigachat.devices.sberbank.ru/api/v1",

user="имя_пользователя",

password="пароль",

)

giga.get_token()Чтобы библиотека GigaChat могла передавать запросы в GigaChat API, вам нужно установить корневой сертификат НУЦ Минцифры.

Для этого выполните команду:

curl -k "https://gu-st.ru/content/Other/doc/russian_trusted_root_ca.cer" -w "\n" >> $(python -m certifi)Вы также можете отключить проверку сертификатов с помощью параметра verify_ssl_certs=False, который передается при инициализации.

giga = GigaChat(

credentials="ключ_авторизации",

verify_ssl_certs=False

)![WARNING] Отключение проверки сертификатов снижает безопасность обмена данными.

Чтобы задать параметры с помощью переменных окружения, в названии переменной используйте префикс GIGACHAT_.

Пример переменных окружения, которые задают ключ авторизации, версию API и отключают проверку сертификатов.

export GIGACHAT_CREDENTIALS=...

export GIGACHAT_SCOPE=...

export GIGACHAT_VERIFY_SSL_CERTS=FalseПример переменных окружения, которые задают адрес API, имя пользователя и пароль.

export GIGACHAT_BASE_URL=https://gigachat.devices.sberbank.ru/api/v1

export GIGACHAT_USER=...

export GIGACHAT_PASSWORD=...For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for gigachat

Similar Open Source Tools

gigachat

GigaChat is a Python library that allows GigaChain to interact with GigaChat, a neural network model capable of engaging in dialogue, writing code, creating texts, and images on demand. Data exchange with the service is facilitated through the GigaChat API. The library supports processing token streaming, as well as working in synchronous or asynchronous mode. It enables precise token counting in text using the GigaChat API.

tb1

A Telegram bot for accessing Google Gemini, MS Bing, etc. The bot responds to the keywords 'bot' and 'google' to provide information. It can handle voice messages, text files, images, and links. It can generate images based on descriptions, extract text from images, and summarize content. The bot can interact with various AI models and perform tasks like voice control, text-to-speech, and text recognition. It supports long texts, large responses, and file transfers. Users can interact with the bot using voice commands and text. The bot can be customized for different AI providers and has features for both users and administrators.

ddddocr

ddddocr is a Rust version of a simple OCR API server that provides easy deployment for captcha recognition without relying on the OpenCV library. It offers a user-friendly general-purpose captcha recognition Rust library. The tool supports recognizing various types of captchas, including single-line text, transparent black PNG images, target detection, and slider matching algorithms. Users can also import custom OCR training models and utilize the OCR API server for flexible OCR result control and range limitation. The tool is cross-platform and can be easily deployed.

bce-qianfan-sdk

The Qianfan SDK provides best practices for large model toolchains, allowing AI workflows and AI-native applications to access the Qianfan large model platform elegantly and conveniently. The core capabilities of the SDK include three parts: large model reasoning, large model training, and general and extension: * `Large model reasoning`: Implements interface encapsulation for reasoning of Yuyan (ERNIE-Bot) series, open source large models, etc., supporting dialogue, completion, Embedding, etc. * `Large model training`: Based on platform capabilities, it supports end-to-end large model training process, including training data, fine-tuning/pre-training, and model services. * `General and extension`: General capabilities include common AI development tools such as Prompt/Debug/Client. The extension capability is based on the characteristics of Qianfan to adapt to common middleware frameworks.

Senparc.AI

Senparc.AI is an AI extension package for the Senparc ecosystem, focusing on LLM (Large Language Models) interaction. It provides modules for standard interfaces and basic functionalities, as well as interfaces using SemanticKernel for plug-and-play capabilities. The package also includes a library for supporting the 'PromptRange' ecosystem, compatible with various systems and frameworks. Users can configure different AI platforms and models, define AI interface parameters, and run AI functions easily. The package offers examples and commands for dialogue, embedding, and DallE drawing operations.

cool-admin-midway

Cool-admin (midway version) is a cool open-source backend permission management system that supports modular, plugin-based, rapid CRUD development. It facilitates the quick construction and iteration of backend management systems, deployable in various ways such as serverless, docker, and traditional servers. It features AI coding for generating APIs and frontend pages, flow orchestration for drag-and-drop functionality, modular and plugin-based design for clear and maintainable code. The tech stack includes Node.js, Midway.js, Koa.js, TypeScript for backend, and Vue.js, Element-Plus, JSX, Pinia, Vue Router for frontend. It offers friendly technology choices for both frontend and backend developers, with TypeScript syntax similar to Java and PHP for backend developers. The tool is suitable for those looking for a modern, efficient, and fast development experience.

botgroup.chat

botgroup.chat is a multi-person AI chat application based on React and Cloudflare Pages for free one-click deployment. It supports multiple AI roles participating in conversations simultaneously, providing an interactive experience similar to group chat. The application features real-time streaming responses, customizable AI roles and personalities, group management functionality, AI role mute function, Markdown format support, mathematical formula display with KaTeX, aesthetically pleasing UI design, and responsive design for mobile devices.

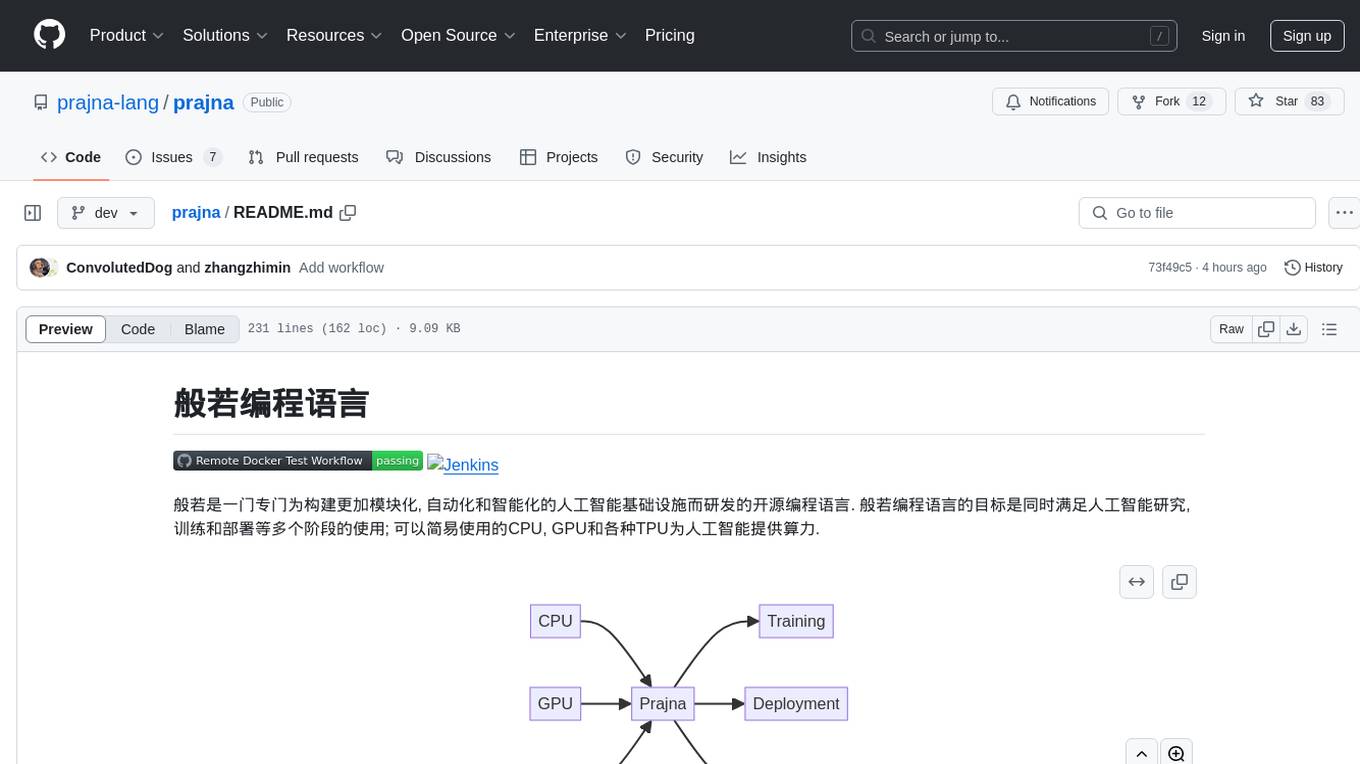

prajna

Prajna is an open-source programming language specifically developed for building more modular, automated, and intelligent artificial intelligence infrastructure. It aims to cater to various stages of AI research, training, and deployment by providing easy access to CPU, GPU, and various TPUs for AI computing. Prajna features just-in-time compilation, GPU/heterogeneous programming support, tensor computing, syntax improvements, and user-friendly interactions through main functions, Repl, and Jupyter, making it suitable for algorithm development and deployment in various scenarios.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.

solon-ai

Solon-AI is a Java AI & MCP application development framework that supports various AI development capabilities. It is designed to be versatile, efficient, and open for integration with frameworks like SpringBoot, jFinal, and Vert.x. The framework provides examples of embedding solon-ai(& mcp) and showcases interfaces for chat models, function calling, vision, RAG (EmbeddingModel, Repository, DocumentLoader, RerankingModel), Ai Flow, MCP server, MCP client, and MCP Proxy. Solon-AI is part of the Solon project ecosystem, which includes other repositories for different functionalities.

zig-aio

zig-aio is a library that provides an io_uring-like asynchronous API and coroutine-powered IO tasks for the Zig programming language. It offers support for different operating systems and backends, such as io_uring, iocp, and posix. The library aims to provide efficient IO operations by leveraging coroutines and async IO mechanisms. Users can create servers and clients with ease using the provided API functions for socket operations, sending and receiving data, and managing connections.

Streamer-Sales

Streamer-Sales is a large model for live streamers that can explain products based on their characteristics and inspire users to make purchases. It is designed to enhance sales efficiency and user experience, whether for online live sales or offline store promotions. The model can deeply understand product features and create tailored explanations in vivid and precise language, sparking user's desire to purchase. It aims to revolutionize the shopping experience by providing detailed and unique product descriptions to engage users effectively.

open-lx01

Open-LX01 is a project aimed at turning the Xiao Ai Mini smart speaker into a fully self-controlled device. The project involves steps such as gaining control, flashing custom firmware, and achieving autonomous control. It includes analysis of main services, reverse engineering methods, cross-compilation environment setup, customization of programs on the speaker, and setting up a web server. The project also covers topics like using custom ASR and TTS, developing a wake-up program, and creating a UI for various configurations. Additionally, it explores topics like gdb-server setup, open-mico-aivs-lab, and open-mipns-sai integration using Porcupine or Kaldi.

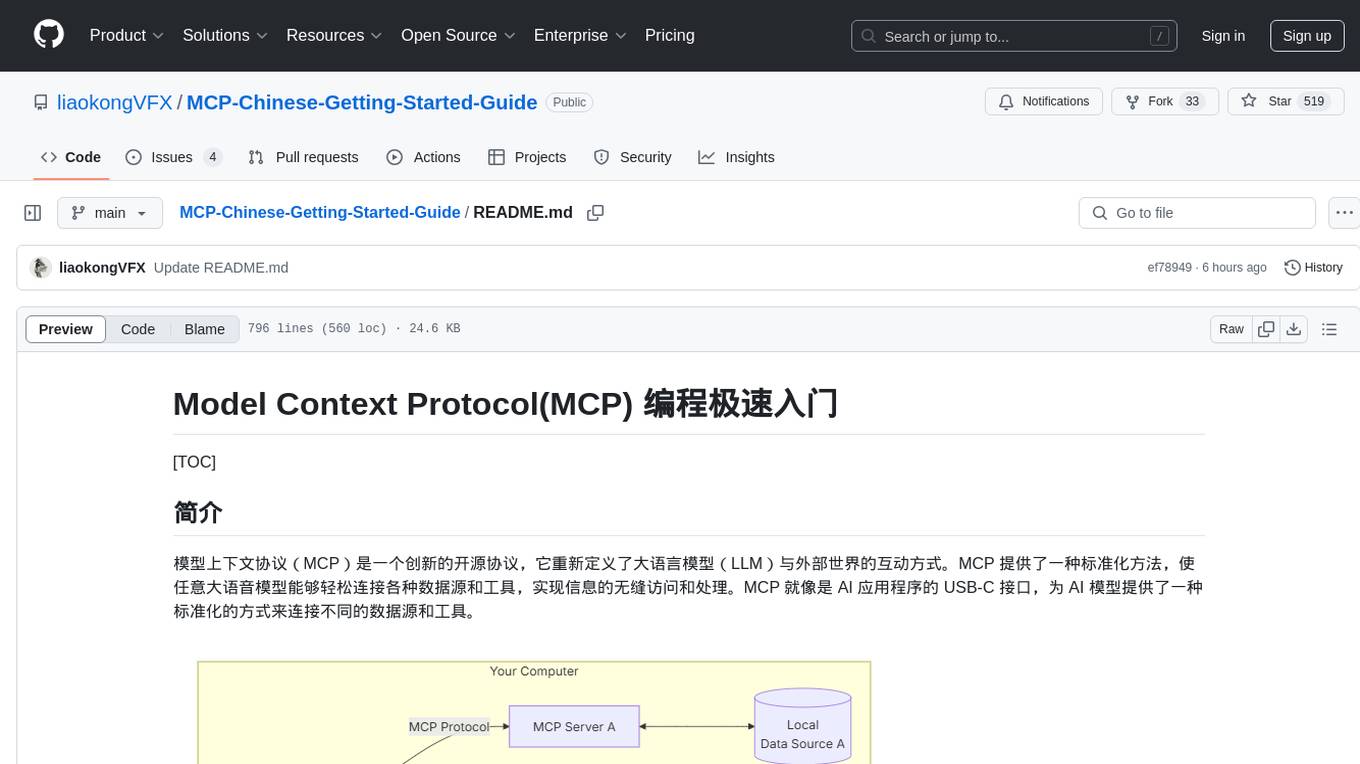

MCP-Chinese-Getting-Started-Guide

The Model Context Protocol (MCP) is an innovative open-source protocol that redefines the interaction between large language models (LLMs) and the external world. MCP provides a standardized approach for any large language model to easily connect to various data sources and tools, enabling seamless access and processing of information. MCP acts as a USB-C interface for AI applications, offering a standardized way for AI models to connect to different data sources and tools. The core functionalities of MCP include Resources, Prompts, Tools, Sampling, Roots, and Transports. This guide focuses on developing an MCP server for network search using Python and uv management. It covers initializing the project, installing dependencies, creating a server, implementing tool execution methods, and running the server. Additionally, it explains how to debug the MCP server using the Inspector tool, how to call tools from the server, and how to connect multiple MCP servers. The guide also introduces the Sampling feature, which allows pre- and post-tool execution operations, and demonstrates how to integrate MCP servers into LangChain for AI applications.

tafrigh

Tafrigh is a tool for transcribing visual and audio content into text using advanced artificial intelligence techniques provided by OpenAI and wit.ai. It allows direct downloading of content from platforms like YouTube, Facebook, Twitter, and SoundCloud, and provides various output formats such as txt, srt, vtt, csv, tsv, and json. Users can install Tafrigh via pip or by cloning the GitHub repository and using Poetry. The tool supports features like skipping transcription if output exists, specifying playlist items, setting download retries, using different Whisper models, and utilizing wit.ai for transcription. Tafrigh can be used via command line or programmatically, and Docker images are available for easy usage.

HiveChat

HiveChat is an AI chat application designed for small and medium teams. It supports various models such as DeepSeek, Open AI, Claude, and Gemini. The tool allows easy configuration by one administrator for the entire team to use different AI models. It supports features like email or Feishu login, LaTeX and Markdown rendering, DeepSeek mind map display, image understanding, AI agents, cloud data storage, and integration with multiple large model service providers. Users can engage in conversations by logging in, while administrators can configure AI service providers, manage users, and control account registration. The technology stack includes Next.js, Tailwindcss, Auth.js, PostgreSQL, Drizzle ORM, and Ant Design.

For similar tasks

tokencost

Tokencost is a clientside tool for calculating the USD cost of using major Large Language Model (LLMs) APIs by estimating the cost of prompts and completions. It helps track the latest price changes of major LLM providers, accurately count prompt tokens before sending OpenAI requests, and easily integrate to get the cost of a prompt or completion with a single function. Users can calculate prompt and completion costs using OpenAI requests, count tokens in prompts formatted as message lists or string prompts, and refer to a cost table with updated prices for various LLM models. The tool also supports callback handlers for LLM wrapper/framework libraries like LlamaIndex and Langchain.

llm

The 'llm' package for Emacs provides an interface for interacting with Large Language Models (LLMs). It abstracts functionality to a higher level, concealing API variations and ensuring compatibility with various LLMs. Users can set up providers like OpenAI, Gemini, Vertex, Claude, Ollama, GPT4All, and a fake client for testing. The package allows for chat interactions, embeddings, token counting, and function calling. It also offers advanced prompt creation and logging capabilities. Users can handle conversations, create prompts with placeholders, and contribute by creating providers.

gigachat

GigaChat is a Python library that allows GigaChain to interact with GigaChat, a neural network model capable of engaging in dialogue, writing code, creating texts, and images on demand. Data exchange with the service is facilitated through the GigaChat API. The library supports processing token streaming, as well as working in synchronous or asynchronous mode. It enables precise token counting in text using the GigaChat API.

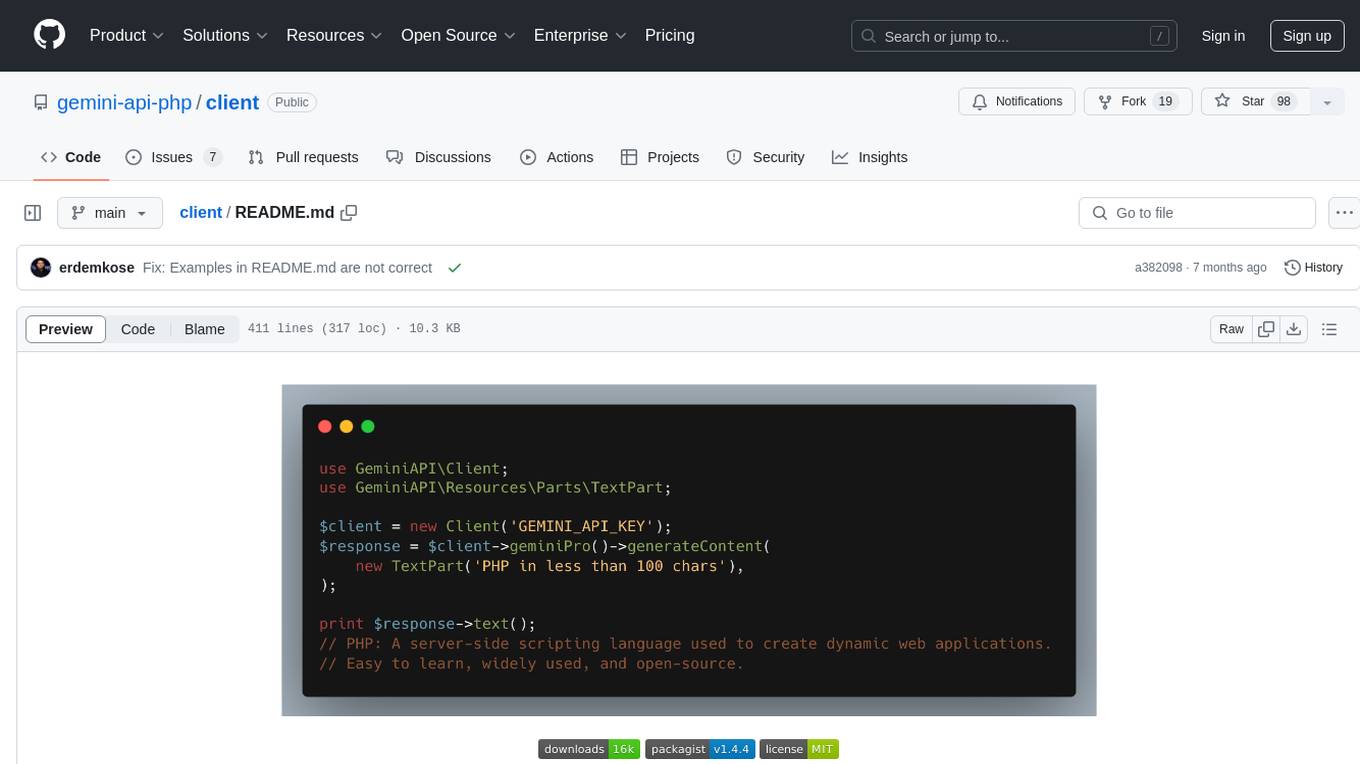

client

Gemini API PHP Client is a library that allows you to interact with Google's generative AI models, such as Gemini Pro and Gemini Pro Vision. It provides functionalities for basic text generation, multimodal input, chat sessions, streaming responses, tokens counting, listing models, and advanced usages like safety settings and custom HTTP client usage. The library requires an API key to access Google's Gemini API and can be installed using Composer. It supports various features like generating content, starting chat sessions, embedding content, counting tokens, and listing available models.

gemini-cli

gemini-cli is a versatile command-line interface for Google's Gemini LLMs, written in Go. It includes tools for chatting with models, generating/comparing embeddings, and storing data in SQLite for analysis. Users can interact with Gemini models through various subcommands like prompt, chat, counttok, embed content, embed db, and embed similar.

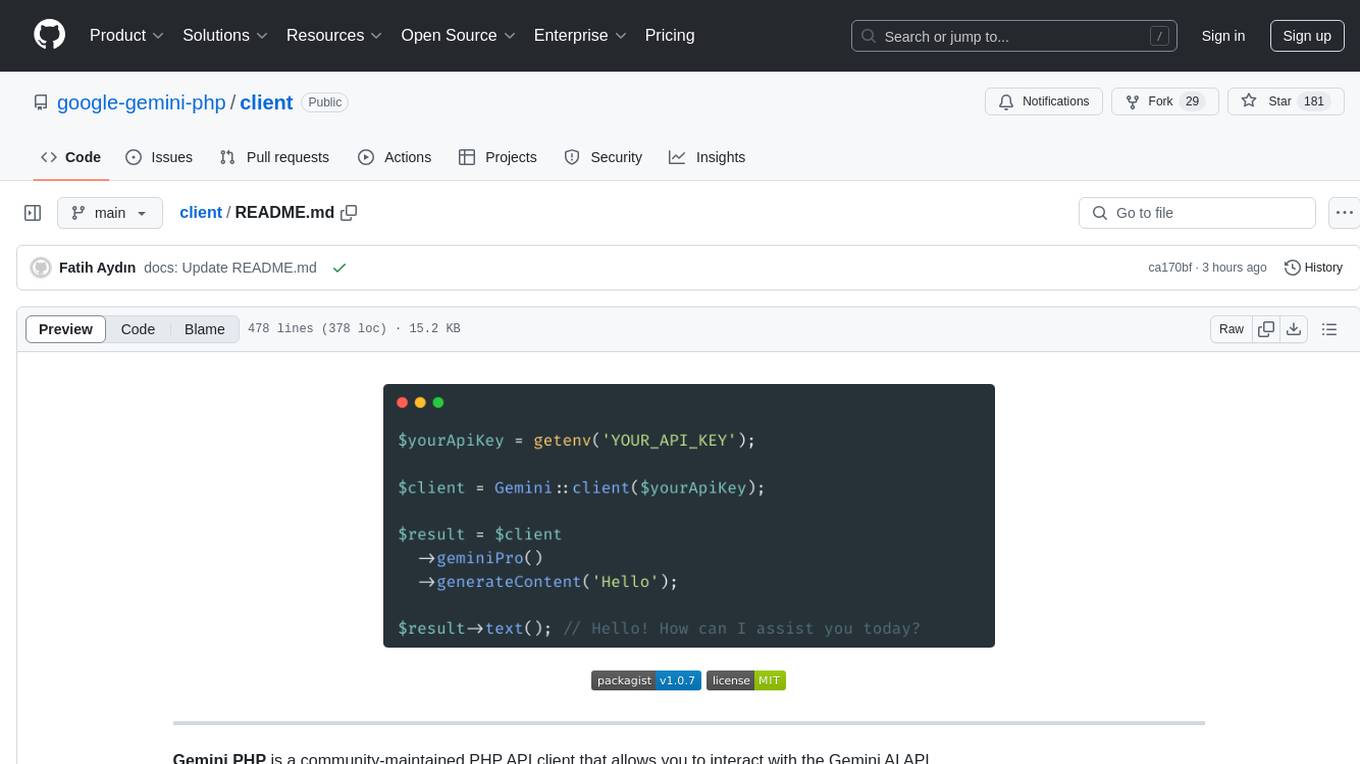

client

Gemini PHP is a PHP API client for interacting with the Gemini AI API. It allows users to generate content, chat, count tokens, configure models, embed resources, list models, get model information, troubleshoot timeouts, and test API responses. The client supports various features such as text-only input, text-and-image input, multi-turn conversations, streaming content generation, token counting, model configuration, and embedding techniques. Users can interact with Gemini's API to perform tasks related to natural language generation and text analysis.

ai21-python

The AI21 Labs Python SDK is a comprehensive tool for interacting with the AI21 API. It provides functionalities for chat completions, conversational RAG, token counting, error handling, and support for various cloud providers like AWS, Azure, and Vertex. The SDK offers both synchronous and asynchronous usage, along with detailed examples and documentation. Users can quickly get started with the SDK to leverage AI21's powerful models for various natural language processing tasks.

Tiktoken

Tiktoken is a high-performance implementation focused on token count operations. It provides various encodings like o200k_base, cl100k_base, r50k_base, p50k_base, and p50k_edit. Users can easily encode and decode text using the provided API. The repository also includes a benchmark console app for performance tracking. Contributions in the form of PRs are welcome.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.