open-lx01

Custom firmware for the Xiao Ai Speaker Mini. Let the Xiao Ai Speaker Mini free.

Stars: 224

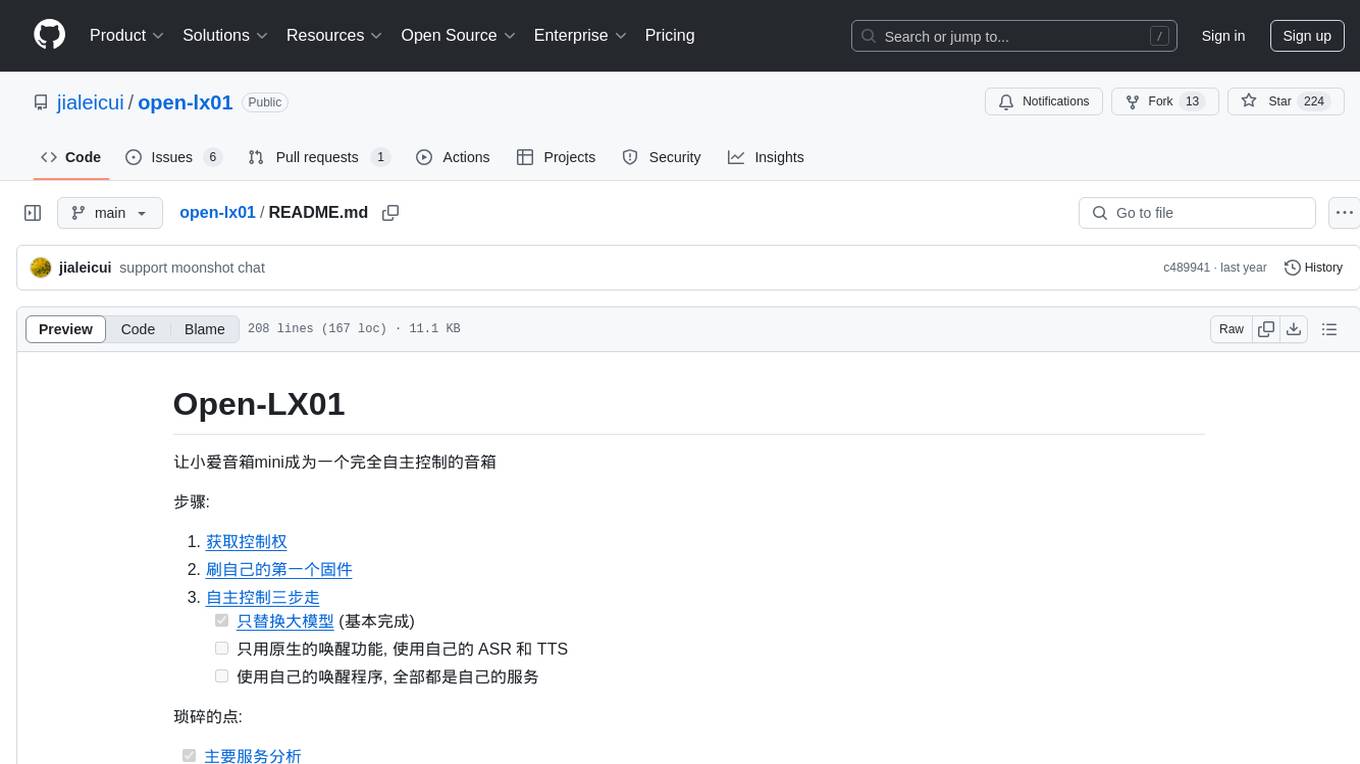

Open-LX01 is a project aimed at turning the Xiao Ai Mini smart speaker into a fully self-controlled device. The project involves steps such as gaining control, flashing custom firmware, and achieving autonomous control. It includes analysis of main services, reverse engineering methods, cross-compilation environment setup, customization of programs on the speaker, and setting up a web server. The project also covers topics like using custom ASR and TTS, developing a wake-up program, and creating a UI for various configurations. Additionally, it explores topics like gdb-server setup, open-mico-aivs-lab, and open-mipns-sai integration using Porcupine or Kaldi.

README:

Turn the Xiao Ai Speaker Mini into a fully self-controlled speaker.

Steps:

Miscellaneous items:

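- [x] Analysis of the main services

- [x] Reverse-engineering methods

- [x] Cross-compilation environment

- [x] Custom program on the speaker

  - [x] Automatically upload interaction text to the server

  - [x] Play text according to the server's reply (official TTS)

  - [x] Skip the upload on a keyword match (for example "turn on the light" or "stop")

- [x] Web Server

  - [x] Receive the speaker's conversation records

  - [x] Sound detection (currently unused)

  - [x] Large language models

    - [x] Moonshot

    - [x] Github Copilot

  - [ ] UI supporting various configuration options (in progress)

- [ ] gdb-server(host=arm) + gdb(target=arm)

- [ ] open-mico-aivs-lab

- [ ] open-mipns-sai (using porcupine or kaldi)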

https://github.com/jialeicui/open-lx01/assets/3217223/b5e0a511-1a28-42c8-9462-f9f20279fd30

For the detailed procedure, see the article by system-player on Bilibili.

The steps are summarized below:

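- Buy a USB-to-TTL flashing cable on Taobao for about 3 to 7 yuan (a used Xiao Ai Speaker Mini costs about 30 yuan on Xianyu; a soldering iron plus solder costs about 20 yuan)

- Open the speaker, find the TX/RX/GND pads on the circuit board, and solder the cable to them

  - Note that the board has more than one set of TX/RX markings; use the set at the lower right of the MI logo

  - GND -> GND

  - TX -> RX

  - RX -> TX

- Plug the USB end into your computer and install the required software

  - Windows

    - A driver is required; Taobao sellers usually provide a link to a CH340 or PL2303 driver (the chips do the same job, the only difference is whether the chip is Chinese-made or not)

    - Once the driver is installed, plug in the USB cable; a serial port should appear in Device Manager. Note the X in COMX

    - Download PuTTY, choose Serial as the connection type, enter COMX as the address (X is a number), set the baud rate to 115200, and confirm

    - After powering on the speaker you should see console output

  - macOS

    - No driver is needed

    - The screen command-line tool is enough for talking to the serial port: `brew install screen`

    - The USB serial device is usually /dev/cu.xxxx; running ls should show one

    - `screen /dev/cu.xxxx 115200`

    - Power on the speaker and check for console output

  - Linux

    - Either PuTTY or screen works (I prefer screen, because it can be embedded directly in a tmux pane in the current terminal)

    - The serial device is usually /dev/ttyUSBX, where X is a number

    - If the device does not show up, run `sudo dmesg -w`, unplug and replug the USB cable, and find the device in the printed log

    - Configure PuTTY or screen as described above

    - Power on the speaker and check for console output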

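- Get a shell (assuming the serial console output works)

  - If the speaker firmware has never been upgraded (that is, you never tapped Upgrade in the Xiao Ai Speaker app), pressing Enter a few times may drop you straight into a shell with a prompt like `root@LX01:~#`. In that case this step is done and you can skip the rest

  - More likely you will land at a login prompt that asks for a user name and password

    - There are many tutorials online on how to compute the password. The method depends on the model and the firmware version, but most of them no longer work. To confirm whether they have stopped working on your device:

    - If entering the user name root and pressing Enter prints something like `magic xxx`, or entering any password prints something like `dsa verify faile`, there is essentially no way to learn the password and the only option is to flash the firmware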
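The decision described above (shell prompt, or locked login that requires flashing) can be sketched as a small classifier over captured serial output. This is only an illustration: the function name `classify_console` is hypothetical, the markers are the strings quoted in the text, and the sample inputs are made up, so real firmware output may be worded differently.

```python
import re

# Markers copied from the text above; the exact wording on a given firmware may differ.
SHELL_PROMPT = re.compile(r"root@LX01:\S*#")
LOCKED_MARKERS = ("magic", "dsa verify fail")


def classify_console(text: str) -> str:
    """Return 'shell', 'locked', or 'unknown' for a chunk of serial console output."""
    if SHELL_PROMPT.search(text):
        return "shell"
    lowered = text.lower()
    if any(marker in lowered for marker in LOCKED_MARKERS):
        return "locked"
    return "unknown"


if __name__ == "__main__":
    # Made-up sample inputs, not captured from a real device.
    print(classify_console("root@LX01:~# "))
    print(classify_console("login: root\nmagic 1a2b3c\nPassword:"))
```

A result of `locked` corresponds to the case where the password cannot be recovered and flashing is the way forward; `unknown` means the output matched neither pattern and should be read by hand.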

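The goal of this step may be to make the speaker start SSH by default, or to get a shell because we do not know the password. Both are handled here.

(For a script that automates the next few steps, see 打包 (packaging).)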

- Download the official firmware

  Everything below assumes version 1.56.1.

- Extract the firmware

  - The lazy way: use this script and run `/path/to/mico_firmware.py -e /path/to/mico_firmware_c1cac_1.56.1.bin -d /path/to/extract`

  - The do-it-yourself way: use binwalk (binwalk must be installed first; search for instructions for your system)

        $ binwalk mico_firmware_c1cac_1.56.1.bin
        DECIMAL       HEXADECIMAL     DESCRIPTION
        --------------------------------------------------------------------------------
        23944         0x5D88          uImage header, header size: 64 bytes, header CRC: 0x32556CD8, created: 1970-01-01 00:00:00, image size: 2944424 bytes, Data Address: 0x40008000, Entry Point: 0x40008000, data CRC: 0xCBE58ED2, OS: Linux, CPU: ARM, image type: OS Kernel Image, compression type: none, image name: "ARM OpenWrt Linux-3.4.39"
        24008         0x5DC8          Linux kernel ARM boot executable zImage (little-endian)
        40299         0x9D6B          gzip compressed data, maximum compression, from Unix, last modified: 1970-01-01 00:00:00 (null date)
        4195272       0x4003C8        Squashfs filesystem, little endian, version 4.0, compression:xz, size: 27150277 bytes, 2061 inodes, blocksize: 262144 bytes, created: 2021-10-28 13:55:53

    The output has four lines but really two parts: the first three lines are the kernel, and the last line is the rootfs.

    The first line shows a uImage, the image format that U-Boot boots. A zImage is a compressed image, and a uImage is a zImage with some header information added so that U-Boot can load it more easily. The kernel normally does not need upgrading, so we only care about the rootfs.

        $ dd if=mico_firmware_c1cac_1.56.1.bin bs=1 skip=4195272 of=rootfs.img
        27263264+0 records in
        27263264+0 records out
        27263264 bytes (27 MB, 26 MiB) copied, 30.5024 s, 894 kB/s

        $ sudo unsquashfs rootfs.img
        1968 inodes (1545 blocks) to write
        created 1389 files
        created 93 directories
        created 578 symlinks
        created 1 device
        created 0 fifos
        created 0 sockets
        created 0 hardlinks
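As a rough alternative to reading the offset off the binwalk output by hand, the rootfs can be located by searching for the SquashFS magic bytes. The sketch below is not the project's `mico_firmware.py`; the names `find_squashfs` and `carve_rootfs` are hypothetical, and it assumes a little-endian SquashFS 4.x image (magic `hsqs`) with the standard superblock layout.

```python
import struct

SQUASHFS_MAGIC = b"hsqs"  # magic bytes of a little-endian SquashFS image
SUPERBLOCK_PREFIX = 48    # bytes needed to reach the end of the bytes_used field


def find_squashfs(image: bytes) -> dict:
    """Locate the first SquashFS 4.x superblock and return a few of its fields."""
    offset = image.find(SQUASHFS_MAGIC)
    while offset != -1:
        header = image[offset:offset + SUPERBLOCK_PREFIX]
        if len(header) == SUPERBLOCK_PREFIX:
            # Superblock layout: inode count at byte 4, block size at byte 12,
            # major/minor version at bytes 28/30, bytes_used at byte 40.
            inodes, block_size = struct.unpack_from("<I4xI", header, 4)
            major, minor = struct.unpack_from("<HH", header, 28)
            (bytes_used,) = struct.unpack_from("<Q", header, 40)
            if major == 4:  # skip chance occurrences of the magic bytes
                return {
                    "offset": offset,
                    "inodes": inodes,
                    "block_size": block_size,
                    "version": (major, minor),
                    "bytes_used": bytes_used,
                }
        offset = image.find(SQUASHFS_MAGIC, offset + 1)
    raise ValueError("no SquashFS 4.x superblock found")


def carve_rootfs(image: bytes) -> bytes:
    """Return everything from the SquashFS offset to the end, like dd with skip=OFFSET."""
    return image[find_squashfs(image)["offset"]:]
```

To try it on a real image, read the firmware with `open(path, "rb").read()` and write the result of `carve_rootfs` to rootfs.img. If the assumptions hold, the reported offset, inode count, and block size should agree with what binwalk prints for the same file.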

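- Modify the firmware

  Notes:

  - squashfs is read-only. Once the system is running, the rootfs partition is read-only, so every change to the rootfs has to be made in the firmware image; it cannot be changed from inside the running system

  - Inside the running system, /tmp and /data are both writable. /tmp is backed by memory, and /data is persistent. Mount points and sizes:

        $ df -h
        Filesystem                Size      Used Available Use% Mounted on
        rootfs                   26.0M     26.0M         0 100% /
        /dev/root                26.0M     26.0M         0 100% /
        tmpfs                    60.2M    692.0K     59.6M   1% /tmp
        tmpfs                   512.0K         0    512.0K   0% /dev
        /dev/by-name/UDISK       13.3M     10.5M      2.1M  83% /data

  - rootfs.img must not exceed 32 MB; if it does, flashing it will corrupt other partitions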

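  Making the changes:

  - Edit squashfs-root/etc/inittab and change `::askconsole:/bin/login` to `::askconsole:/bin/sh`

  - Generate a password hash first, choosing your own salt and password. This example uses the salt xiaoai and the password root:

    `openssl passwd -1 -salt "xiaoai" "root"` produces the string `$1$xiaoai$803hWklCcQwX7v5gYP6pB0`

    Note: `-1` selects the MD5-based algorithm; see `man openssl-passwd` for more help

    Edit the root line in squashfs-root/etc/shadow so that it reads `root:$1$xiaoai$803hWklCcQwX7v5gYP6pB0:18128:0:99999:7:::` and save the file

    This sets the root password to root. If the earlier login attempt showed DSA verification, the authentication method also has to be switched back to the default, as follows:

    Edit squashfs-root/etc/pam.d/common-auth and comment out the lines that reference libmico-pam.so (prefix them with #)

    Uncomment `auth [success=1 default=ignore] pam_unix.so nullok_secure` (delete the leading #)

    Save the file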
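The etc/shadow edit described above can also be scripted. The helper below is a minimal sketch, not part of the project: `set_root_hash` is a hypothetical name, and it only swaps the password field of the root entry, leaving the remaining fields as they are in the file (the text above replaces the whole line instead).

```python
def set_root_hash(shadow_text: str, password_hash: str) -> str:
    """Return shadow_text with the password field of the root entry replaced."""
    lines = shadow_text.splitlines()
    found = False
    for i, line in enumerate(lines):
        fields = line.split(":")
        if fields[0] == "root" and len(fields) >= 2:
            fields[1] = password_hash  # field 2 of a shadow entry is the hash
            lines[i] = ":".join(fields)
            found = True
    if not found:
        raise ValueError("no root entry in shadow file")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Made-up shadow content for illustration; the hash is the one generated above.
    sample = "root:!:18128:0:99999:7:::\ndaemon:*:0:0:99999:7:::\n"
    print(set_root_hash(sample, "$1$xiaoai$803hWklCcQwX7v5gYP6pB0"), end="")
```

In practice the function would be applied to the contents of squashfs-root/etc/shadow and the result written back before repacking.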

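  - Note: the firmware uses dropbear, a lightweight SSH server. Modify the etc/init.d/dropbear startup script (this is fairly involved; see the patch for reference), then make it start automatically:

        # start automatically at boot
        sudo ln -s ../init.d/dropbear squashfs-root/etc/rc.d/S96dropbear

  - Run `mksquashfs squashfs-root patch.img -comp xz -b 256k`. This produces a file named patch.img, whose size should be close to the original because nothing was added to it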
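Since an oversized rootfs image can corrupt other partitions, it may be worth checking the size of patch.img before flashing. The check below is a sketch under one assumption: that the "32 MB" limit mentioned earlier means 32 MiB (32 * 1024 * 1024 bytes). The name `image_fits` is hypothetical.

```python
ROOTFS_LIMIT_BYTES = 32 * 1024 * 1024  # assumption: "32 MB" is read as 32 MiB


def image_fits(size_bytes: int, limit: int = ROOTFS_LIMIT_BYTES) -> bool:
    """Return True if an image of size_bytes does not exceed the rootfs limit."""
    return size_bytes <= limit


if __name__ == "__main__":
    # 27263264 is the rootfs.img size reported by dd earlier in this document.
    print(image_fits(27263264))
```

For a real file, pass `os.path.getsize("patch.img")` as `size_bytes` and stop if the function returns False.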

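- Flash the firmware

  Software preparation:

  - Install fastboot on your computer: search for "android platform tools", go to the Android website, and follow the installation instructions for your platform (note that Windows also needs a driver)

  - Enter fastboot mode: open the serial console, reboot the speaker while holding down the s key, and you will land in the U-Boot command line with the prompt `sunxi#` (remember to delete the long run of s characters you typed)

  - Type the command `fastboot_test`; the speaker enters fastboot mode

  - On the computer, run `fastboot devices`; you should see `Android Fastboot Android Fastboot`

  - Run `fastboot flash rootfs1 patch.img`; after about 7 seconds it reports success

  - Press Ctrl-C in the serial console and run `run setargs_first boot_first`; the speaker reboots from rootfs1 (once this command has been run, the system remembers the boot partition, so after later flashes you can simply run `boot`)

  After the reboot you should be able to get a shell (whether you need to log in depends on whether you modified inittab):

        BusyBox v1.24.1 () built-in shell (ash)
        (ASCII-art logo)
        ----------------------------------------------
        ROM Type:release / Ver:1.56.1
        ----------------------------------------------
        root@LX01:/#

  Run `ifconfig` to check whether the speaker is connected to the LAN (if it was not connected before flashing, connect it once before flashing, or see the later "manually connect to home Wi-Fi" step).

  `ps | grep dropbear` should also show the process, and at this point you can `ssh root@<speaker-ip>` into the speaker.

  Possible problems:

  - no matching host key type found. Their offer: ssh-rsa

    Add an ssh option, for example `ssh -oHostKeyAlgorithms=+ssh-rsa root@<speaker-ip>`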
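The SSH invocation above, with and without the host-key workaround, can be assembled by a small helper. This is only an illustration: `build_ssh_command` is a hypothetical name and 192.168.1.50 is a placeholder address, not a value from the project.

```python
import shlex
from typing import List


def build_ssh_command(host: str, user: str = "root", allow_ssh_rsa: bool = False) -> List[str]:
    """Build the ssh argv, optionally re-enabling the ssh-rsa host key type."""
    cmd = ["ssh"]
    if allow_ssh_rsa:
        # Needed when the client reports "no matching host key type found".
        cmd.append("-oHostKeyAlgorithms=+ssh-rsa")
    cmd.append(f"{user}@{host}")
    return cmd


if __name__ == "__main__":
    # 192.168.1.50 is a placeholder; use the address shown by ifconfig on the speaker.
    print(shlex.join(build_ssh_command("192.168.1.50", allow_ssh_rsa=True)))
```

The returned list can be passed to `subprocess.run` as is; keeping the option behind a flag means the weaker host key type is only accepted for this one device.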

-

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for open-lx01

Similar Open Source Tools

open-lx01

Open-LX01 is a project aimed at turning the Xiao Ai Mini smart speaker into a fully self-controlled device. The project involves steps such as gaining control, flashing custom firmware, and achieving autonomous control. It includes analysis of main services, reverse engineering methods, cross-compilation environment setup, customization of programs on the speaker, and setting up a web server. The project also covers topics like using custom ASR and TTS, developing a wake-up program, and creating a UI for various configurations. Additionally, it explores topics like gdb-server setup, open-mico-aivs-lab, and open-mipns-sai integration using Porcupine or Kaldi.

ChatPilot

ChatPilot is a chat agent tool that enables AgentChat conversations, supports Google search, URL conversation (RAG), and code interpreter functionality, replicates Kimi Chat (file, drag and drop; URL, send out), and supports OpenAI/Azure API. It is based on LangChain and implements ReAct and OpenAI Function Call for agent Q&A dialogue. The tool supports various automatic tools such as online search using Google Search API, URL parsing tool, Python code interpreter, and enhanced RAG file Q&A with query rewriting support. It also allows front-end and back-end service separation using Svelte and FastAPI, respectively. Additionally, it supports voice input/output, image generation, user management, permission control, and chat record import/export.

cool-admin-midway

Cool-admin (midway version) is a cool open-source backend permission management system that supports modular, plugin-based, rapid CRUD development. It facilitates the quick construction and iteration of backend management systems, deployable in various ways such as serverless, docker, and traditional servers. It features AI coding for generating APIs and frontend pages, flow orchestration for drag-and-drop functionality, modular and plugin-based design for clear and maintainable code. The tech stack includes Node.js, Midway.js, Koa.js, TypeScript for backend, and Vue.js, Element-Plus, JSX, Pinia, Vue Router for frontend. It offers friendly technology choices for both frontend and backend developers, with TypeScript syntax similar to Java and PHP for backend developers. The tool is suitable for those looking for a modern, efficient, and fast development experience.

HiveChat

HiveChat is an AI chat application designed for small and medium teams. It supports various models such as DeepSeek, Open AI, Claude, and Gemini. The tool allows easy configuration by one administrator for the entire team to use different AI models. It supports features like email or Feishu login, LaTeX and Markdown rendering, DeepSeek mind map display, image understanding, AI agents, cloud data storage, and integration with multiple large model service providers. Users can engage in conversations by logging in, while administrators can configure AI service providers, manage users, and control account registration. The technology stack includes Next.js, Tailwindcss, Auth.js, PostgreSQL, Drizzle ORM, and Ant Design.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.

langchain4j-aideepin-web

The langchain4j-aideepin-web repository is the frontend project of langchain4j-aideepin, an open-source, offline deployable retrieval enhancement generation (RAG) project based on large language models such as ChatGPT and application frameworks such as Langchain4j. It includes features like registration & login, multi-sessions (multi-roles), image generation (text-to-image, image editing, image-to-image), suggestions, quota control, knowledge base (RAG) based on large models, model switching, and search engine switching.

AMchat

AMchat is a large language model that integrates advanced math concepts, exercises, and solutions. The model is based on the InternLM2-Math-7B model and is specifically designed to answer advanced math problems. It provides a comprehensive dataset that combines Math and advanced math exercises and solutions. Users can download the model from ModelScope or OpenXLab, deploy it locally or using Docker, and even retrain it using XTuner for fine-tuning. The tool also supports LMDeploy for quantization, OpenCompass for evaluation, and various other features for model deployment and evaluation. The project contributors have provided detailed documentation and guides for users to utilize the tool effectively.

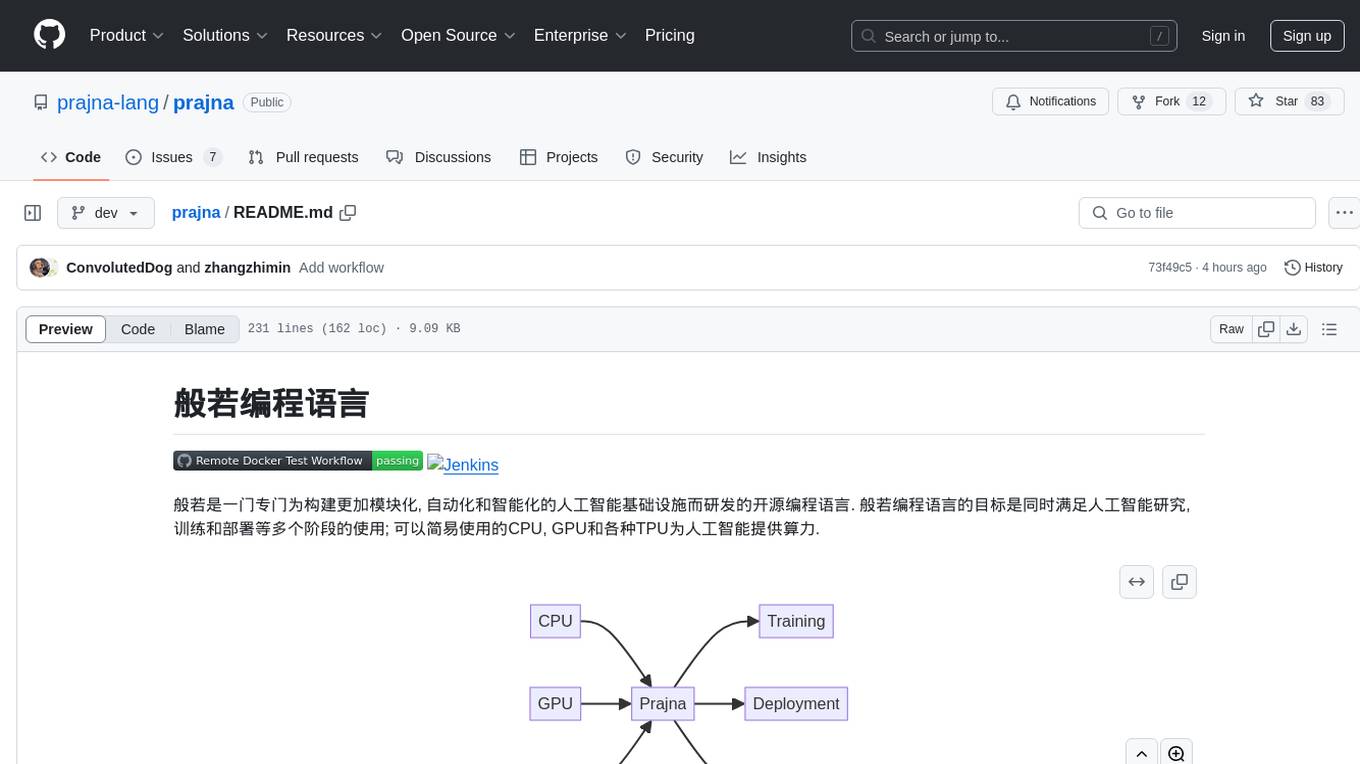

prajna

Prajna is an open-source programming language specifically developed for building more modular, automated, and intelligent artificial intelligence infrastructure. It aims to cater to various stages of AI research, training, and deployment by providing easy access to CPU, GPU, and various TPUs for AI computing. Prajna features just-in-time compilation, GPU/heterogeneous programming support, tensor computing, syntax improvements, and user-friendly interactions through main functions, Repl, and Jupyter, making it suitable for algorithm development and deployment in various scenarios.

herc.ai

Herc.ai is a powerful library for interacting with the Herc.ai API. It offers free access to users and supports all languages. Users can benefit from Herc.ai's features unlimitedly with a one-time subscription and API key. The tool provides functionalities for question answering and text-to-image generation, with support for various models and customization options. Herc.ai can be easily integrated into CLI, CommonJS, TypeScript, and supports beta models for advanced usage. Developed by FiveSoBes and Luppux Development.

bce-qianfan-sdk

The Qianfan SDK provides best practices for large model toolchains, allowing AI workflows and AI-native applications to access the Qianfan large model platform elegantly and conveniently. The core capabilities of the SDK include three parts: large model reasoning, large model training, and general and extension: * `Large model reasoning`: Implements interface encapsulation for reasoning of Yuyan (ERNIE-Bot) series, open source large models, etc., supporting dialogue, completion, Embedding, etc. * `Large model training`: Based on platform capabilities, it supports end-to-end large model training process, including training data, fine-tuning/pre-training, and model services. * `General and extension`: General capabilities include common AI development tools such as Prompt/Debug/Client. The extension capability is based on the characteristics of Qianfan to adapt to common middleware frameworks.

EduChat

EduChat is a large-scale language model-based chatbot system designed for intelligent education by the EduNLP team at East China Normal University. The project focuses on developing a dialogue-based language model for the education vertical domain, integrating diverse education vertical domain data, and providing functions such as automatic question generation, homework correction, emotional support, course guidance, and college entrance examination consultation. The tool aims to serve teachers, students, and parents to achieve personalized, fair, and warm intelligent education.

new-api

New API is an open-source project based on One API with additional features and improvements. It offers a new UI interface, supports Midjourney-Proxy(Plus) interface, online recharge functionality, model-based charging, channel weight randomization, data dashboard, token-controlled models, Telegram authorization login, Suno API support, Rerank model integration, and various third-party models. Users can customize models, retry channels, and configure caching settings. The deployment can be done using Docker with SQLite or MySQL databases. The project provides documentation for Midjourney and Suno interfaces, and it is suitable for AI enthusiasts and developers looking to enhance AI capabilities.

siliconflow-plugin

SiliconFlow-PLUGIN (SF-PLUGIN) is a versatile AI integration plugin for the Yunzai robot framework, supporting multiple AI services and models. It includes features such as AI drawing, intelligent conversations, real-time search, text-to-speech synthesis, resource management, link handling, video parsing, group functions, WebSocket support, and Jimeng-Api interface. The plugin offers functionalities for drawing, conversation, search, image link retrieval, video parsing, group interactions, and more, enhancing the capabilities of the Yunzai framework.

ddddocr

ddddocr is a Rust version of a simple OCR API server that provides easy deployment for captcha recognition without relying on the OpenCV library. It offers a user-friendly general-purpose captcha recognition Rust library. The tool supports recognizing various types of captchas, including single-line text, transparent black PNG images, target detection, and slider matching algorithms. Users can also import custom OCR training models and utilize the OCR API server for flexible OCR result control and range limitation. The tool is cross-platform and can be easily deployed.

midjourney-proxy

Midjourney-proxy is a proxy for the Discord channel of MidJourney, enabling API-based calls for AI drawing. It supports Imagine instructions, adding image base64 as a placeholder, Blend and Describe commands, real-time progress tracking, Chinese prompt translation, prompt sensitive word pre-detection, user-token connection to WSS, multi-account configuration, and more. For more advanced features, consider using midjourney-proxy-plus, which includes Shorten, focus shifting, image zooming, local redrawing, nearly all associated button actions, Remix mode, seed value retrieval, account pool persistence, dynamic maintenance, /info and /settings retrieval, account settings configuration, Niji bot robot, InsightFace face replacement robot, and an embedded management dashboard.

ChatGLM3

ChatGLM3 is a conversational pretrained model jointly released by Zhipu AI and THU's KEG Lab. ChatGLM3-6B is the open-sourced model in the ChatGLM3 series. It inherits the advantages of its predecessors, such as fluent conversation and low deployment threshold. In addition, ChatGLM3-6B introduces the following features: 1. A stronger foundation model: ChatGLM3-6B's foundation model ChatGLM3-6B-Base employs more diverse training data, more sufficient training steps, and more reasonable training strategies. Evaluation on datasets from different perspectives, such as semantics, mathematics, reasoning, code, and knowledge, shows that ChatGLM3-6B-Base has the strongest performance among foundation models below 10B parameters. 2. More complete functional support: ChatGLM3-6B adopts a newly designed prompt format, which supports not only normal multi-turn dialogue, but also complex scenarios such as tool invocation (Function Call), code execution (Code Interpreter), and Agent tasks. 3. A more comprehensive open-source sequence: In addition to the dialogue model ChatGLM3-6B, the foundation model ChatGLM3-6B-Base, the long-text dialogue model ChatGLM3-6B-32K, and ChatGLM3-6B-128K, which further enhances the long-text comprehension ability, are also open-sourced. All the above weights are completely open to academic research and are also allowed for free commercial use after filling out a questionnaire.

For similar tasks

open-lx01

Open-LX01 is a project aimed at turning the Xiao Ai Mini smart speaker into a fully self-controlled device. The project involves steps such as gaining control, flashing custom firmware, and achieving autonomous control. It includes analysis of main services, reverse engineering methods, cross-compilation environment setup, customization of programs on the speaker, and setting up a web server. The project also covers topics like using custom ASR and TTS, developing a wake-up program, and creating a UI for various configurations. Additionally, it explores topics like gdb-server setup, open-mico-aivs-lab, and open-mipns-sai integration using Porcupine or Kaldi.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.