Best AI tools for< Monitor Llm Applications >

20 - AI tool Sites

Inductor

Inductor is a developer tool for evaluating, ensuring, and improving the quality of your LLM applications – both during development and in production. It provides a fantastic workflow for continuous testing and evaluation as you develop, so that you always know your LLM app’s quality. Systematically improve quality and cost-effectiveness by actionably understanding your LLM app’s behavior and quickly testing different app variants. Rigorously assess your LLM app’s behavior before you deploy, in order to ensure quality and cost-effectiveness when you’re live. Easily monitor your live traffic: detect and resolve issues, analyze usage in order to improve, and seamlessly feed back into your development process. Inductor makes it easy for engineering and other roles to collaborate: get critical human feedback from non-engineering stakeholders (e.g., PM, UX, or subject matter experts) to ensure that your LLM app is user-ready.

Confident AI

Confident AI is an open-source evaluation infrastructure for Large Language Models (LLMs). It provides a centralized platform to judge LLM applications, ensuring substantial benefits and addressing any weaknesses in LLM implementation. With Confident AI, companies can define ground truths to ensure their LLM is behaving as expected, evaluate performance against expected outputs to pinpoint areas for iterations, and utilize advanced diff tracking to guide towards the optimal LLM stack. The platform offers comprehensive analytics to identify areas of focus and features such as A/B testing, evaluation, output classification, reporting dashboard, dataset generation, and detailed monitoring to help productionize LLMs with confidence.

Langtrace AI

Langtrace AI is an open-source observability tool powered by Scale3 Labs that helps monitor, evaluate, and improve LLM (Large Language Model) applications. It collects and analyzes traces and metrics to provide insights into the ML pipeline, ensuring security through SOC 2 Type II certification. Langtrace supports popular LLMs, frameworks, and vector databases, offering end-to-end observability and the ability to build and deploy AI applications with confidence.

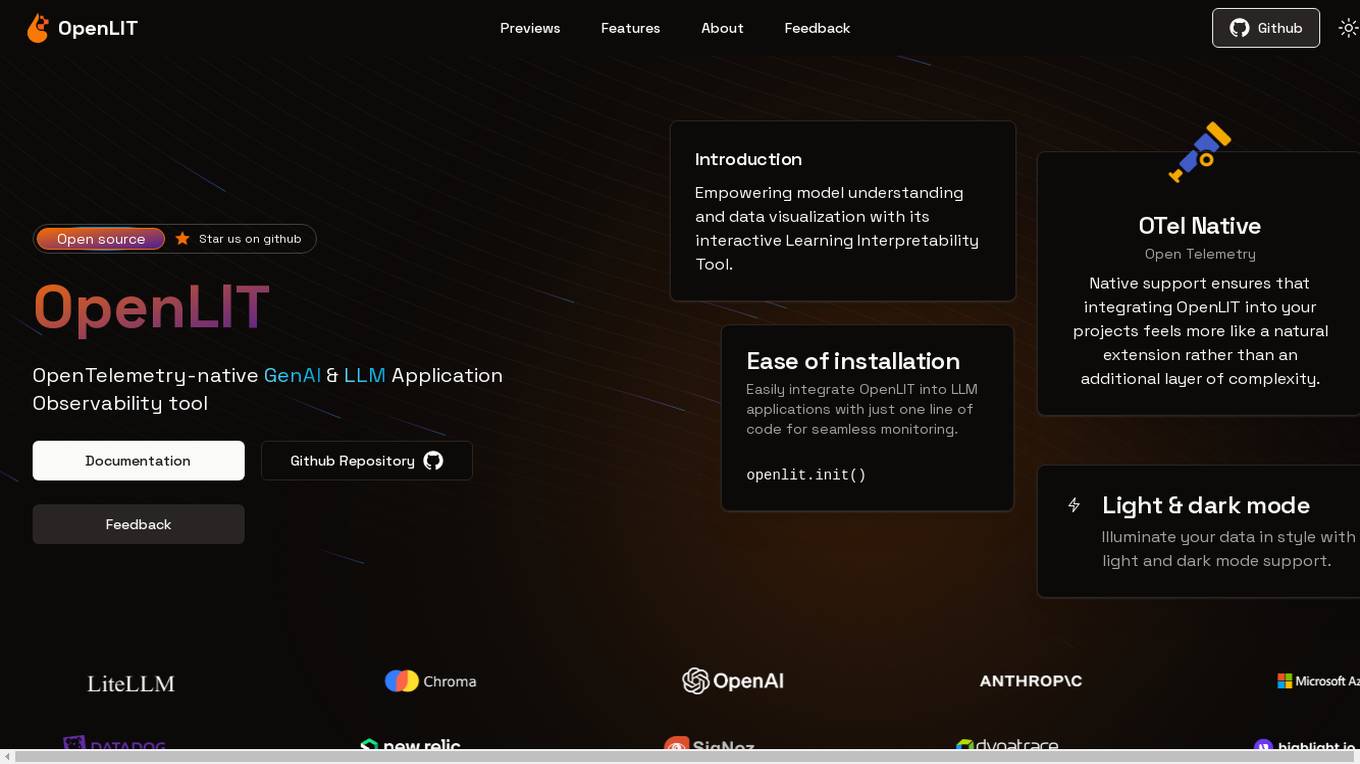

OpenLIT

OpenLIT is an AI application designed as an Observability tool for GenAI and LLM applications. It empowers model understanding and data visualization through an interactive Learning Interpretability Tool. With OpenTelemetry-native support, it seamlessly integrates into projects, offering features like fine-tuning performance, real-time data streaming, low latency processing, and visualizing data insights. The tool simplifies monitoring with easy installation and light/dark mode options, connecting to popular observability platforms for data export. Committed to OpenTelemetry community standards, OpenLIT provides valuable insights to enhance application performance and reliability.

Ottic

Ottic is an AI tool designed to empower both technical and non-technical teams to test Language Model (LLM) applications efficiently and accelerate the development cycle. It offers features such as a 360º view of the QA process, end-to-end test management, comprehensive LLM evaluation, and real-time monitoring of user behavior. Ottic aims to bridge the gap between technical and non-technical team members, ensuring seamless collaboration and reliable product delivery.

Fiddler AI

Fiddler AI is an AI Observability platform that provides tools for monitoring, explaining, and improving the performance of AI models. It offers a range of capabilities, including explainable AI, NLP and CV model monitoring, LLMOps, and security features. Fiddler AI helps businesses to build and deploy high-performing AI solutions at scale.

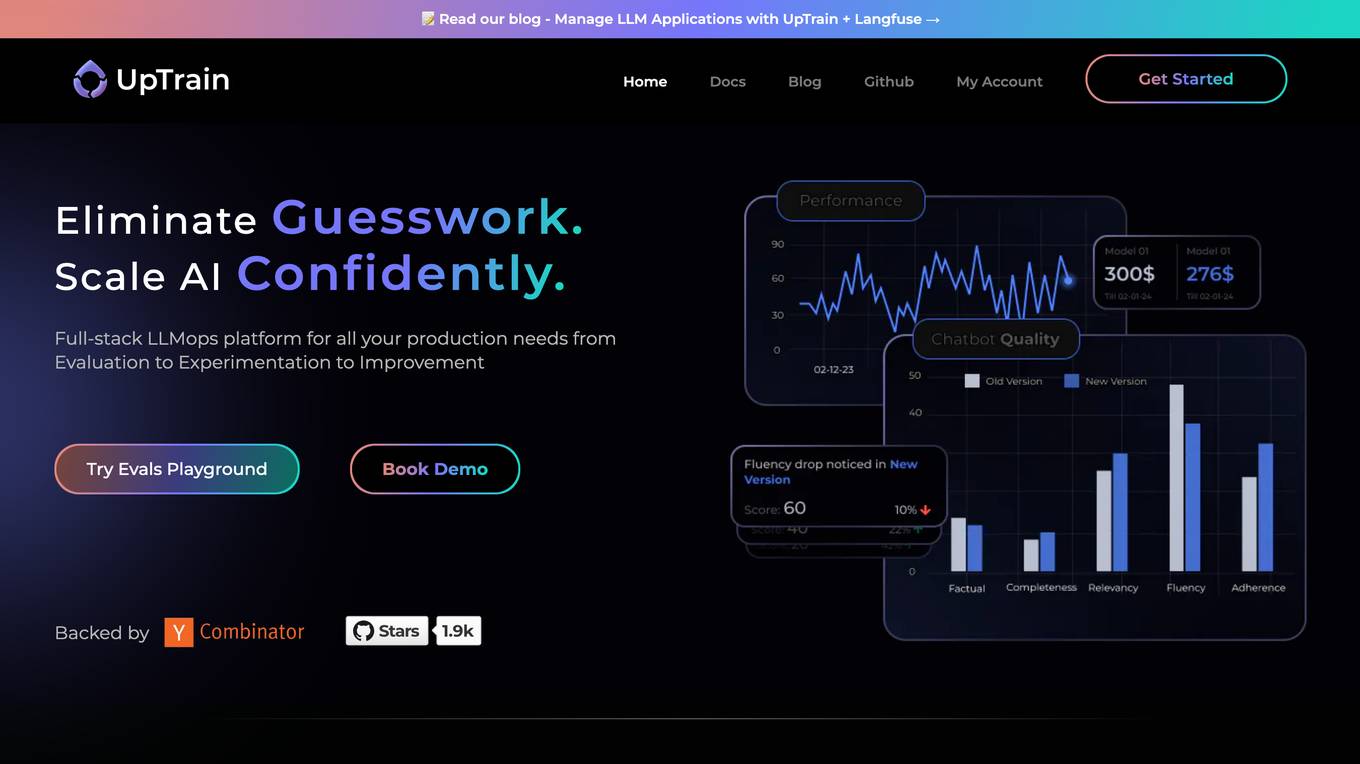

UpTrain

UpTrain is a full-stack LLMOps platform designed to help users confidently scale AI by providing a comprehensive solution for all production needs, from evaluation to experimentation to improvement. It offers diverse evaluations, automated regression testing, enriched datasets, and innovative techniques to generate high-quality scores. UpTrain is built for developers, compliant to data governance needs, cost-efficient, remarkably reliable, and open-source. It provides precision metrics, task understanding, safeguard systems, and covers a wide range of language features and quality aspects. The platform is suitable for developers, product managers, and business leaders looking to enhance their LLM applications.

StreamDeploy

StreamDeploy is an AI-powered cloud deployment platform designed to streamline and secure application deployment for agile teams. It offers a range of features to help developers maximize productivity and minimize costs, including a Dockerfile generator, automated security checks, and support for continuous integration and delivery (CI/CD) pipelines. StreamDeploy is currently in closed beta, but interested users can book a demo or follow the company on Twitter for updates.

deepset

deepset is an AI platform that offers enterprise-level products and solutions for AI teams. It provides deepset Cloud, a platform built with Haystack, enabling fast and accurate prototyping, building, and launching of advanced AI applications. The platform streamlines the AI application development lifecycle, offering processes, tools, and expertise to move from prototype to production efficiently. With deepset Cloud, users can optimize solution accuracy, performance, and cost, and deploy AI applications at any scale with one click. The platform also allows users to explore new models and configurations without limits, extending their team with access to world-class AI engineers for guidance and support.

Langtail

Langtail is a platform that helps developers build, test, and deploy AI-powered applications. It provides a suite of tools to help developers debug prompts, run tests, and monitor the performance of their AI models. Langtail also offers a community forum where developers can share tips and tricks, and get help from other users.

Haystack

Haystack is a production-ready open-source AI framework designed to facilitate building AI applications. It offers a flexible components and pipelines architecture, allowing users to customize and build applications according to their specific requirements. With partnerships with leading LLM providers and AI tools, Haystack provides freedom of choice for users. The framework is built for production, with fully serializable pipelines, logging, monitoring integrations, and deployment guides for full-scale deployments on various platforms. Users can build Haystack apps faster using deepset Studio, a platform for drag-and-drop construction of pipelines, testing, debugging, and sharing prototypes.

Lunary

Lunary is an AI developer platform designed to bring AI applications to production. It offers a comprehensive set of tools to manage, improve, and protect LLM apps. With features like Logs, Metrics, Prompts, Evaluations, and Threads, Lunary empowers users to monitor and optimize their AI agents effectively. The platform supports tasks such as tracing errors, labeling data for fine-tuning, optimizing costs, running benchmarks, and testing open-source models. Lunary also facilitates collaboration with non-technical teammates through features like A/B testing, versioning, and clean source-code management.

Cast AI

Cast AI is an intelligent Kubernetes automation platform that offers live migration for AWS EKS, enabling users to migrate stateful workloads with zero downtime. The platform provides application performance automation by automating and optimizing the entire application stack, including Kubernetes cluster optimization, security, workload optimization, LLM optimization for AIOps, cost monitoring, and database optimization. Cast AI integrates with various cloud services and tools, offering solutions for migration of stateful workloads, inference at scale, and cutting AI costs without sacrificing scale. The platform helps users improve performance, reduce costs, and boost productivity through end-to-end application performance automation.

LangChain

LangChain is an AI tool that offers a suite of products supporting developers in the LLM application lifecycle. It provides a framework to construct LLM-powered apps easily, visibility into app performance, and a turnkey solution for serving APIs. LangChain enables developers to build context-aware, reasoning applications and future-proof their applications by incorporating vendor optionality. LangSmith, a part of LangChain, helps teams improve accuracy and performance, iterate faster, and ship new AI features efficiently. The tool is designed to drive operational efficiency, increase discovery & personalization, and deliver premium products that generate revenue.

Future AGI

Future AGI is a revolutionary AI data management platform that aims to achieve 99% accuracy in AI applications across software and hardware. It provides a comprehensive evaluation and optimization platform for enterprises to enhance the performance of their AI models. Future AGI offers features such as creating trustworthy, accurate, and responsible AI, 10x faster processing, generating and managing diverse synthetic datasets, testing and analyzing agentic workflow configurations, assessing agent performance, enhancing LLM application performance, monitoring and protecting applications in production, and evaluating AI across different modalities.

Protect AI

Protect AI is a comprehensive platform designed to secure AI systems by providing visibility and manageability to detect and mitigate unique AI security threats. The platform empowers organizations to embrace a security-first approach to AI, offering solutions for AI Security Posture Management, ML model security enforcement, AI/ML supply chain vulnerability database, LLM security monitoring, and observability. Protect AI aims to safeguard AI applications and ML systems from potential vulnerabilities, enabling users to build, adopt, and deploy AI models confidently and at scale.

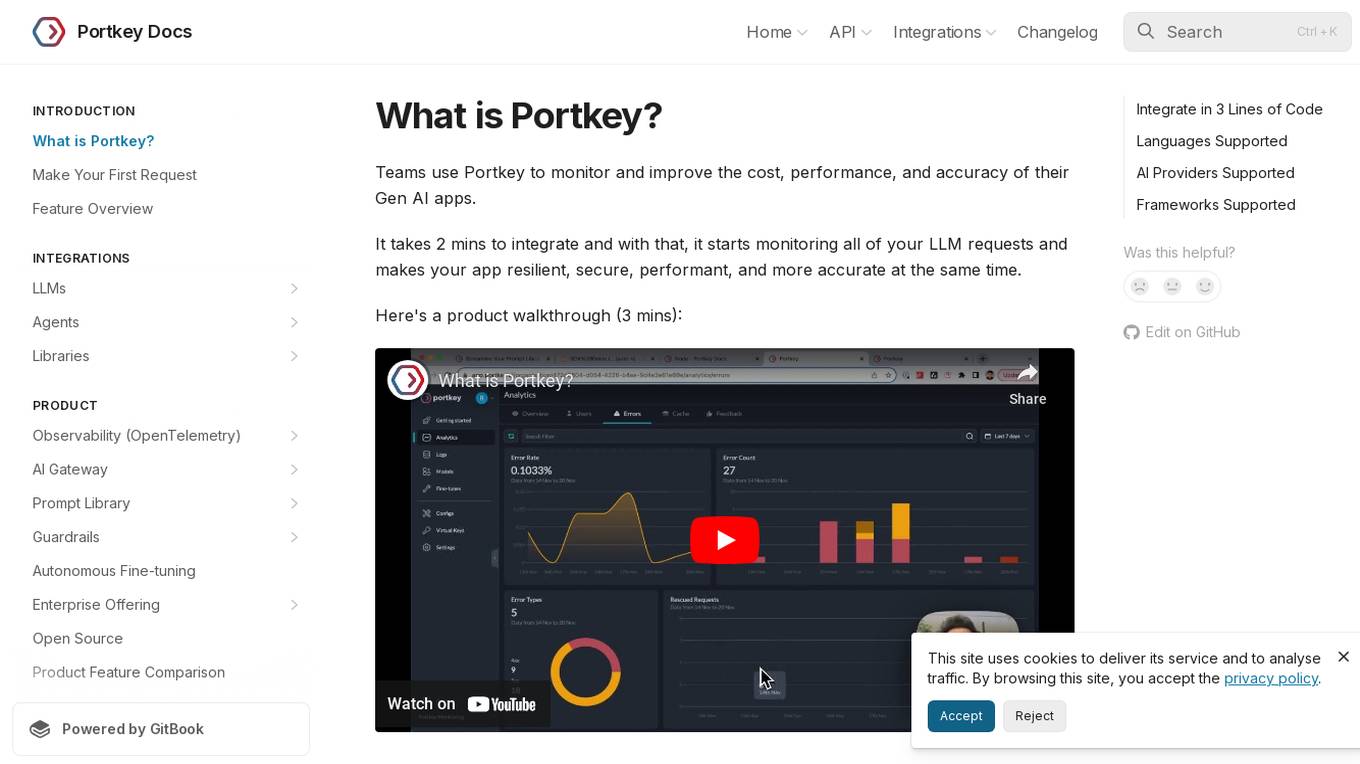

Portkey

Portkey is a monitoring and improvement tool for Gen AI apps, helping teams enhance cost, performance, and accuracy. It integrates quickly, monitors LLM requests, and boosts app resilience, security, performance, and accuracy. The tool offers a product walkthrough and easy integration with OpenAI Python and Node libraries.

FriendliAI

FriendliAI is a generative AI infrastructure company that offers efficient, fast, and reliable generative AI inference solutions for production. Their cutting-edge technologies enable groundbreaking performance improvements, cost savings, and lower latency. FriendliAI provides a platform for building and serving compound AI systems, deploying custom models effortlessly, and monitoring and debugging model performance. The application guarantees consistent results regardless of the model used and offers seamless data integration for real-time knowledge enhancement. With a focus on security, scalability, and performance optimization, FriendliAI empowers businesses to scale with ease.

Knostic AI

Knostic AI is an AI application that focuses on Copilot Readiness for Enterprise AI Security. It helps organizations locate and remediate data leaks from AI searches, ensuring data security and compliance. Knostic offers solutions to prevent data leakage, map knowledge boundaries, recommend permission adjustments, and provide independent verification of security posture readiness for AI adoption.

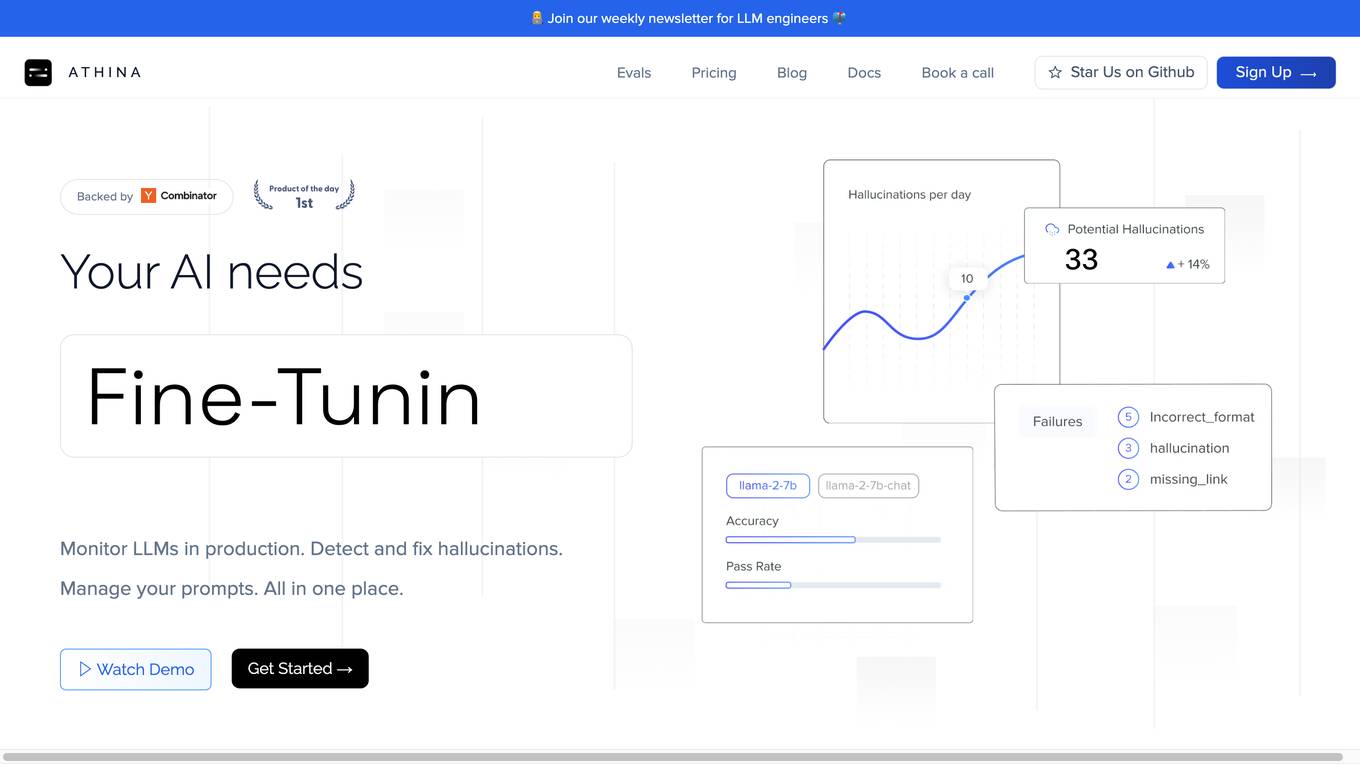

Athina AI

Athina AI is a comprehensive platform designed to monitor, debug, analyze, and improve the performance of Large Language Models (LLMs) in production environments. It provides a suite of tools and features that enable users to detect and fix hallucinations, evaluate output quality, analyze usage patterns, and optimize prompt management. Athina AI supports integration with various LLMs and offers a range of evaluation metrics, including context relevancy, harmfulness, summarization accuracy, and custom evaluations. It also provides a self-hosted solution for complete privacy and control, a GraphQL API for programmatic access to logs and evaluations, and support for multiple users and teams. Athina AI's mission is to empower organizations to harness the full potential of LLMs by ensuring their reliability, accuracy, and alignment with business objectives.

3 - Open Source AI Tools

doku

OpenLIT is an OpenTelemetry-native GenAI and LLM Application Observability tool. It's designed to make the integration process of observability into GenAI projects as easy as pie – literally, with just a single line of code. Whether you're working with popular LLM Libraries such as OpenAI and HuggingFace or leveraging vector databases like ChromaDB, OpenLIT ensures your applications are monitored seamlessly, providing critical insights to improve performance and reliability.

openllmetry

OpenLLMetry is a set of extensions built on top of OpenTelemetry that gives you complete observability over your LLM application. Because it uses OpenTelemetry under the hood, it can be connected to your existing observability solutions - Datadog, Honeycomb, and others. It's built and maintained by Traceloop under the Apache 2.0 license. The repo contains standard OpenTelemetry instrumentations for LLM providers and Vector DBs, as well as a Traceloop SDK that makes it easy to get started with OpenLLMetry, while still outputting standard OpenTelemetry data that can be connected to your observability stack. If you already have OpenTelemetry instrumented, you can just add any of our instrumentations directly.

openlit

OpenLIT is an OpenTelemetry-native GenAI and LLM Application Observability tool. It's designed to make the integration process of observability into GenAI projects as easy as pie – literally, with just **a single line of code**. Whether you're working with popular LLM Libraries such as OpenAI and HuggingFace or leveraging vector databases like ChromaDB, OpenLIT ensures your applications are monitored seamlessly, providing critical insights to improve performance and reliability.

20 - OpenAI Gpts

Quake and Volcano Watch Iceland

Seismic and volcanic monitor with in-depth data and visuals.

Qtech | FPS

Frost Protection System is an AI bot optimizing open field farming of fruits, vegetables, and flowers, combining real-time data and AI to boost yield, cut costs, and foster sustainable practices in a user-friendly interface.

DataKitchen DataOps and Data Observability GPT

A specialist in DataOps and Data Observability, aiding in data management and monitoring.

Financial Cybersecurity Analyst - Lockley Cash v1

stunspot's advisor for all things Financial Cybersec

AML/CFT Expert

Specializes in Anti-Money Laundering/Counter-Financing of Terrorism compliance and analysis.

Quality Assurance Advisor

Ensures product quality through systematic process monitoring and evaluation.

SkyNet - Global Conflict Analyst

Global Conflict Analyst that will provide a 'wartime update' on the worst global conflict atm.

Network Operations Advisor

Ensures efficient and effective network performance and security.