Confident AI

Confident AI is an open-source evaluation infrastructure for Large Language Models (LLMs). It provides a centralized platform for evaluating LLM applications and identifying weaknesses in their implementation. With Confident AI, companies can define ground truths to check that their LLM behaves as expected, evaluate performance against expected outputs to pinpoint areas for iteration, and use diff tracking to converge on the best LLM stack. The platform offers analytics to identify areas of focus, along with A/B testing, evaluation, output classification, a reporting dashboard, dataset generation, and detailed monitoring to help teams put LLMs into production with confidence.

Alternative AI tools for Confident AI

Similar sites

Infermatic.ai

Infermatic.ai is a platform that provides access to leading Large Language Models (LLMs) through a user-friendly interface. It offers complete privacy, robust security, and scalability for projects, research, and integrations. Users can test, choose, and scale LLMs according to their content needs or business strategies. The platform takes care of the usual pain points of running LLMs, including infrastructure management, latency, version control, integration, scaling, and cost management. Infermatic.ai is designed to be secure, intuitive, and efficient for users who want to apply LLMs to a range of tasks.

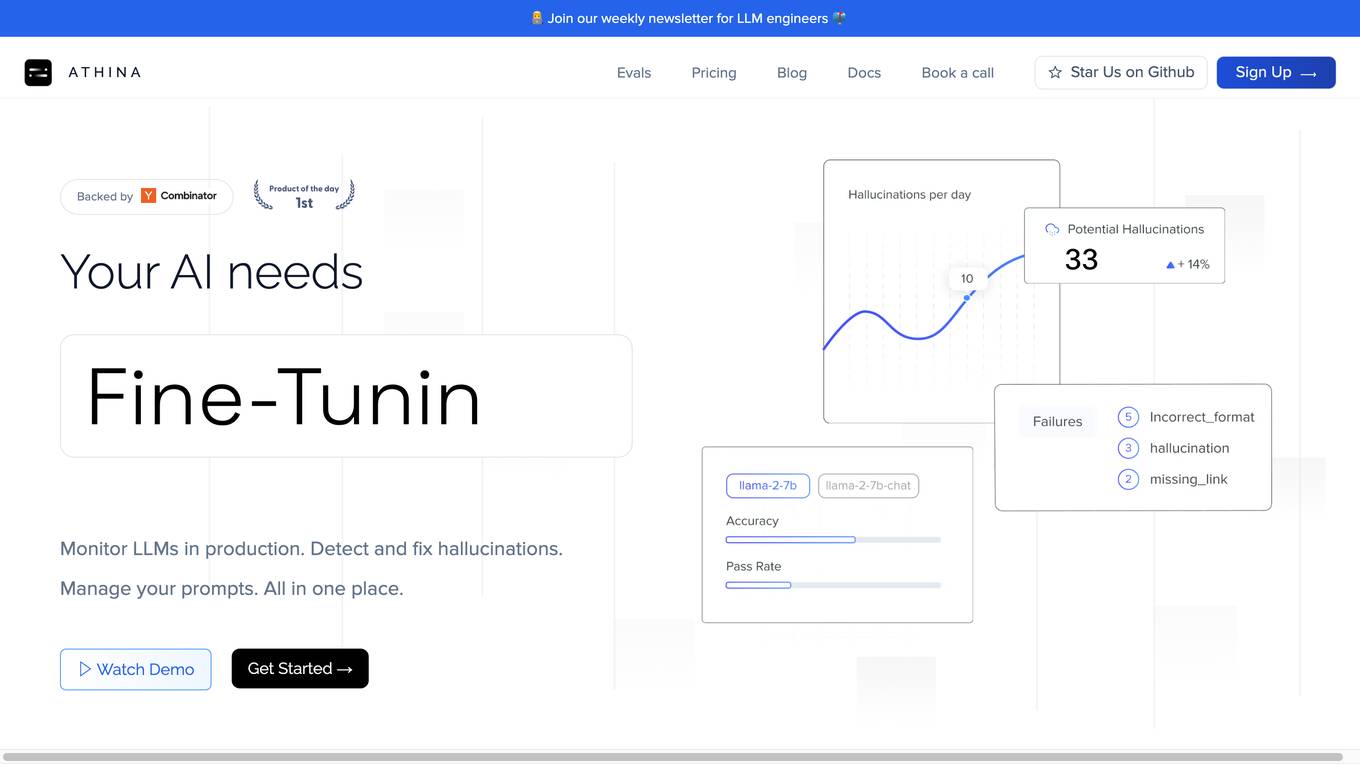

Athina AI

Athina AI is a comprehensive platform designed to monitor, debug, analyze, and improve the performance of Large Language Models (LLMs) in production environments. It provides a suite of tools and features that enable users to detect and fix hallucinations, evaluate output quality, analyze usage patterns, and optimize prompt management. Athina AI supports integration with various LLMs and offers a range of evaluation metrics, including context relevancy, harmfulness, summarization accuracy, and custom evaluations. It also provides a self-hosted solution for complete privacy and control, a GraphQL API for programmatic access to logs and evaluations, and support for multiple users and teams. Athina AI's mission is to empower organizations to harness the full potential of LLMs by ensuring their reliability, accuracy, and alignment with business objectives.

Articul8

Articul8 is a GenAI platform for building sophisticated enterprise applications that draw on an organization's own expertise. It offers autonomous decision-making, automated data intelligence, and a library of specialized models. The platform aims to deliver a faster return on investment, higher accuracy and precision, and rich semantic understanding of data. Articul8 is engineered for regulated industries and provides observability, traceability, and auditability at every step.

Feathery

Feathery is an AI-powered platform that enables users to create powerful forms and workflows without the need for coding. It offers advanced features such as AI data extraction, document intelligence, signatures, and collaboration tools. Feathery caters to various industries like insurance, healthcare, financial services, software, and education, providing solutions to streamline processes and enhance user experiences. The platform is designed to automate form workflows, extract and fill documents, and connect with different systems, making it a versatile tool for data management and workflow optimization.

AdminIQ

AdminIQ is an AI-powered site reliability platform that helps businesses improve the reliability and performance of their websites and applications. It uses machine learning to analyze data from various sources, including application logs, metrics, and user behavior, to identify and resolve issues before they impact users. AdminIQ also provides a suite of tools to help businesses automate their site reliability processes, such as incident management, change management, and performance monitoring.

Hyperscience

Hyperscience is a leading enterprise AI platform that provides hyperautomation solutions for businesses. Its platform enables organizations to automate complex business processes with high accuracy and efficiency. Hyperscience offers a range of solutions across various industries and processes, leveraging technologies such as intelligent document processing, machine learning, and natural language processing. The platform is designed to help businesses transform their operations, improve decision-making, and gain a competitive advantage.

Motific.ai

Motific.ai is a responsible GenAI tool powered by data at scale. It offers a fully managed service with natural-language compliance and security guardrails, an intelligence service, and an end-to-end retrieval-augmented generation (RAG) service powered by enterprise data. Users can rapidly deliver trustworthy GenAI assistants and API endpoints, configure assistants with their organization's data, optimize performance, and connect to leading GenAI model providers. Motific.ai lets users create custom knowledge bases, connect to various data sources, and follow responsible AI practices. It supports English only and provides insights on usage, time savings, and model optimization.

LlamaIndex

LlamaIndex is a framework for building context-augmented Large Language Model (LLM) applications. It provides tools to ingest and process data, implement complex query workflows, and build applications such as question-answering chatbots, document understanding systems, and autonomous agents. LlamaIndex enables context augmentation by combining LLMs with private or domain-specific data, offering data connectors, data indexes, engines for natural-language access, chat engines, agents, and observability/evaluation integrations. It caters to users of all levels, from beginners to advanced developers, and is available in Python and TypeScript.

RAGnexus

RAGnexus is a company that specializes in creating personalized AI assistants using Retrieval-Augmented Generation (RAG). Its assistants are designed to give responses that are personalized and contextually relevant to each client's needs. RAGnexus uses private information supplied by customers so that responses are accurate and tailored to each specific use case. RAG works in two steps: it first retrieves relevant information from a database, then uses that information to generate an accurate, context-specific answer.
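The two-step retrieve-then-generate approach described above can be sketched in a few lines of Python. This is a generic illustration, not RAGnexus's implementation: the document list, the word-overlap retriever, and the `generate` placeholder (which only assembles a grounded prompt instead of calling an LLM) are all hypothetical stand-ins.

```python
import re

# Hypothetical knowledge base standing in for a customer's private documents.
DOCUMENTS = [
    "Our support desk is open Monday to Friday, 9am to 5pm.",
    "Refunds are processed within 14 days of a return request.",
    "Premium customers get a dedicated account manager.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Step 1 (retrieval): rank documents by word overlap with the query, keep the top k."""
    query_tokens = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(query_tokens & tokenize(d)), reverse=True)
    return ranked[:k]


def generate(query: str, context: list[str]) -> str:
    """Step 2 (generation): placeholder that builds a prompt grounded in the context.

    A real RAG system would send this prompt to an LLM and return its answer.
    """
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\nQuestion: {query}"


def rag_answer(query: str, docs: list[str], k: int = 1) -> str:
    """Run retrieval, then pass the retrieved passages to the generator."""
    return generate(query, retrieve(query, docs, k))


print(rag_answer("How long do refunds take?", DOCUMENTS))
```

Word overlap keeps the example dependency-free; production systems typically replace it with embedding-based vector search, but the two-step structure stays the same.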

GizAI

GizAI is an advanced artificial intelligence tool designed to streamline and optimize various tasks across different industries. With cutting-edge machine learning algorithms, GizAI offers a wide range of features to enhance productivity and decision-making processes. From data analysis to predictive modeling, GizAI empowers users with actionable insights and automation capabilities. Whether you are a business professional, researcher, or student, GizAI provides a user-friendly interface to leverage the power of AI for your specific needs.

Predis.ai

Predis.ai is an AI-powered application that offers predictive analytics solutions for businesses. It leverages advanced machine learning algorithms to analyze data and provide valuable insights to help companies make informed decisions. With a user-friendly interface, Predis.ai simplifies the process of data analysis and forecasting, making it accessible to users with varying levels of technical expertise. The application is designed to assist organizations in optimizing their operations, improving efficiency, and identifying trends to stay ahead in a competitive market.

VisualEyes

VisualEyes is a user experience (UX) optimization tool that uses attention heatmaps and clarity scores to help businesses improve the effectiveness of their digital products. It provides insights into how users interact with websites and applications, allowing businesses to identify areas for improvement and make data-driven decisions about their designs. VisualEyes is part of Neurons, a leading neuroscience company that specializes in providing AI-powered solutions for businesses.

Taylor

Taylor is a deterministic AI tool that helps Business & Engineering teams enrich data at scale through bulk classification. It offers a Control Panel for Text Enrichment, which lets users structure freeform text, enrich metadata, and customize enrichments to their needs. The easy-to-use platform gives users full control over its classification and extraction models and is designed to deliver business impact from day one. Through integrations with various tools, Taylor simplifies the work of wrangling unstructured text data.

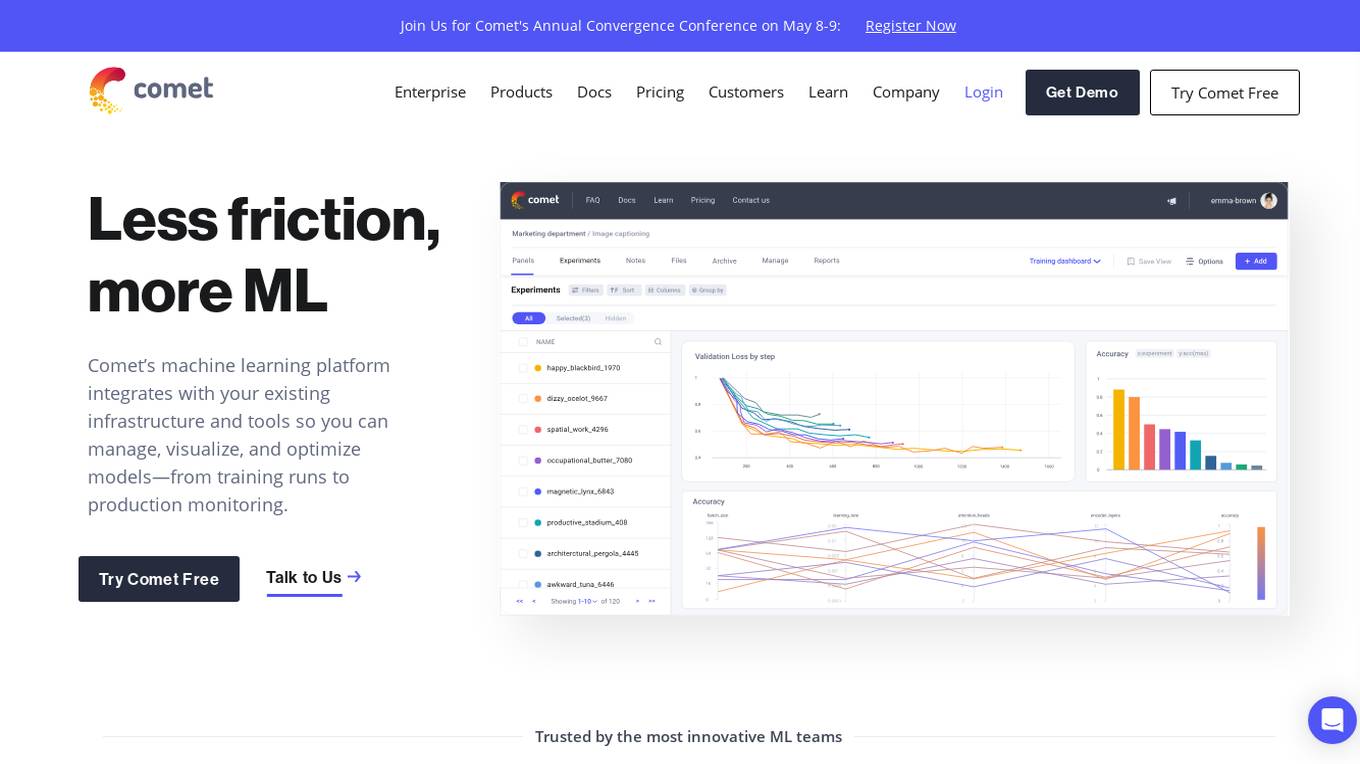

Comet ML

Comet ML is an extensible, fully customizable machine learning platform built to support productivity, reproducibility, and collaboration in ML work. It integrates with existing infrastructure and tools to manage, visualize, and optimize models from training runs through production monitoring. Users can track and compare training runs, maintain a model registry, and monitor models in production, all in one platform. Comet can run on any infrastructure, so teams can keep their existing software and data stack while reshaping their ML workflow.

Plat.AI

Plat.AI is automated predictive analytics software that offers model-building solutions for industries such as finance, insurance, and marketing. It provides a real-time decision-making engine that lets users build and maintain AI models without any coding experience. The platform offers automated model building, data preprocessing tools, codeless modeling, and a personalized approach to data analysis. Plat.AI aims to make predictive analytics easy and accessible for users of all experience levels while ensuring transparency, security, and compliance in decision-making.

For similar jobs

Rationale

Rationale is a cutting-edge decision-making tool powered by advanced AI technology, including the latest GPT model and in-context learning capabilities. It leverages artificial intelligence to provide users with valuable insights and recommendations for making informed decisions across various domains.

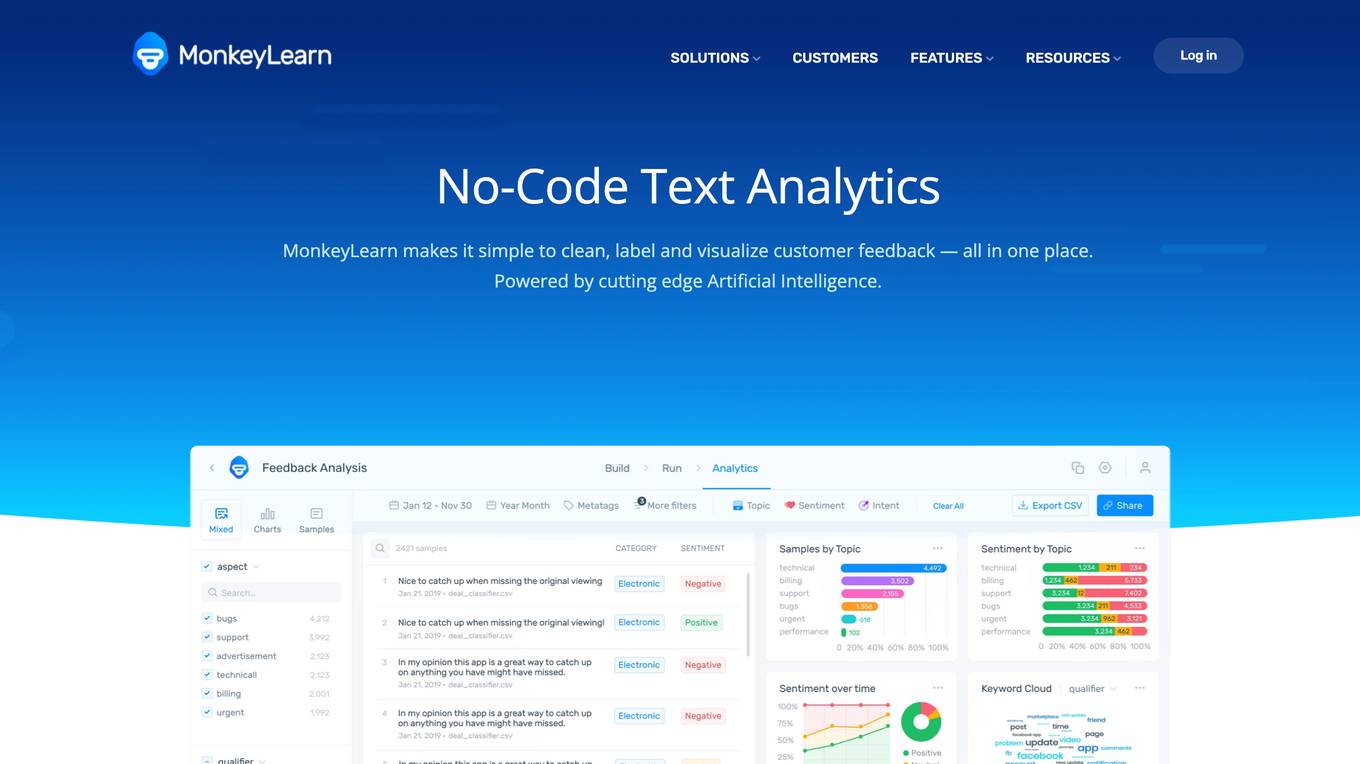

Medallia

Medallia is a real-time text analytics software that empowers organizations to derive actionable insights from customer interactions. With a focus on omnichannel analytics, Medallia's AI-powered platform enables users to identify emerging trends, prioritize key insights, and drive real-time actions. By leveraging natural language understanding and out-of-the-box topic models, Medallia offers customizable KPIs and scalable text analytics solutions for various industries. The platform aims to transform unstructured data into actionable insights to enhance customer and employee experiences.

Warmy

Warmy is an AI-driven email deliverability tool focused on automating email warm-up. It offers free tools, resources, and pricing options to help users fix deliverability issues. Using AI-driven automation, Warmy prepares domains and IPs for email outreach campaigns with the goal of maximizing deliverability rates. Its algorithms work to improve deliverability and sender reputation, with the aim of raising open and click rates across email platforms such as Gmail, Outlook, and Yahoo. Warmy is designed to help emails bypass spam filters, build trust, drive engagement, and reach recipients' primary inboxes.

ValueProp.Dev

ValueProp.Dev is an AI-powered tool designed to help businesses create a Value Proposition Canvas based on their company description. The tool assists in identifying customer jobs, pains, gains, products, services, pain relievers, and gain creators to develop a compelling value proposition that resonates with the target audience. By leveraging AI technology, ValueProp.Dev streamlines the process of value proposition creation, enabling businesses to enhance their offerings and better meet customer needs.

functime

functime is a time-series machine learning tool designed to perform forecasting at scale. It provides functions for scoring, ranking, and plotting thousands of forecasts simultaneously. With a focus on guiding users through their first end-to-end forecasting pipeline, functime serves as an AI copilot to analyze trends, seasonality, and causal factors in forecasts. The tool offers a comprehensive API reference and documentation, making it a valuable resource for both beginners and experienced analysts.

XenonStack

XenonStack is an AI application that offers a comprehensive suite of tools and services for building and managing Agentic Systems. The platform provides solutions for data management, analytics, AI transformation, and decision-making processes. With features like AI-enabled catalogs, industrial automation, and agent orchestration, XenonStack aims to empower enterprises to reimagine their business workflows and drive efficiency and agility through intelligent AI agents.

Trends Critical

Trends Critical is an AI text-generation SaaS application that combines trend data with AI to deliver faster, better outcomes. It helps users discover trend-validated opportunities, apply trending topics to business and personal growth, and create trend-inspired insights with AI. The platform connects users with trend-backing partners and real-world trends, so they can launch products, build partnerships, and turn partner trends into profitable opportunities in seconds. Trends Critical supports over 50 languages and offers 200+ trends, 60+ AI templates, and exclusive partnership opportunities for individuals, freelancers, influencers, brands, and corporations.

Slideworks

Slideworks is a platform offering strategy templates created by ex-McKinsey consultants. The website provides high-end PowerPoint and Excel templates for creating world-class strategy presentations. Users can access templates for consulting proposals, business strategies, market analysis, and more. Slideworks aims to streamline the process of creating professional presentations by offering proven frameworks, slide layouts, figures, and graphs. The platform is trusted by over 4,500 customers worldwide and is designed to meet the needs of individuals and businesses looking to enhance their strategic communication.

Isomeric

Isomeric is an AI tool that semantically understands unstructured text and extracts specific data from it. It converts messy text into machine-readable JSON, supporting use cases such as web scraping, browser extensions, and general information extraction. With Isomeric, users can scale their data-gathering pipeline in seconds, making it useful in industries such as customer support, data platforms, and legal services.

AnyAPI

AnyAPI is an AI tool that allows users to easily add AI features to their products in minutes. With the ability to craft the perfect GPT-3 prompt using A/B testing, users can quickly generate a live API endpoint to power their next AI feature. The platform offers a range of use cases, including turning emails into tasks, suggesting replies, and retrieving plain text JSON. AnyAPI is designed to streamline the integration of AI capabilities into various products and services, making it a valuable tool for developers and businesses seeking to enhance their offerings with AI technology.

PG's Principles

PG's Principles is an AI mentor that answers questions based on the principles Paul Graham advocates in his essays. It aims to help users make decisions using Graham's framework, offering a distinctive approach to mentorship built on his insights and philosophy.

AdIntelli

AdIntelli is an AI tool that helps users earn revenue from their AI Agents by displaying in-chat ads. It offers a platform for maximizing ad revenue through advanced AI-driven monetization technology, tailored for AI applications and ChatGPT Plus subscriptions. AdIntelli simplifies the process of integrating ads into AI Agents without the need for coding skills, providing a seamless user experience and personalized ad placements.

Prooftiles

Prooftiles is a platform designed to help businesses increase their conversion rate and average order value. It offers a suite of tools and features to optimize sales processes and enhance customer experience. With Prooftiles, businesses can access DocsLM to streamline document management and improve efficiency. The platform also provides pricing information, integrations with other tools, and valuable insights through its blog section.

Aicoachbud

Aicoachbud.com is a website that provides coaching services for personal development and career growth. The platform offers personalized coaching sessions with experienced professionals to help individuals achieve their goals and overcome challenges. With a focus on leveraging AI technology to enhance coaching effectiveness, aicoachbud.com aims to empower users with the tools and guidance needed to succeed in various aspects of their lives.

ChatCSV

ChatCSV is your personal data analyst that allows you to interact with your spreadsheets in a conversational manner. Simply upload a CSV file and start asking questions to get insights through visualizations. It is designed to assist users across various industries such as retail, finance, banking, marketing, and more, making data analysis more accessible and intuitive.

Rawbot

Rawbot is an AI model comparison tool that simplifies model selection by helping users identify and understand the strengths and weaknesses of various AI models. Users can compare models on performance, strengths and weaknesses, customization and tuning options, and cost and efficiency, so they can make informed decisions. Rawbot is a user-friendly platform for researchers, developers, and business leaders who need to choose the AI models best suited to their specific needs.

Business Automated

Business Automated is an independent automation consultancy that offers custom automation solutions for businesses. They provide services such as creating automated content blogs, managing projects, sales CRM, and more. The website also features tutorials and products related to automation tools like Airtable, GPT API, and ChatGPT.

AI Lean Canvas Generator

The AI Lean Canvas Generator is an AI-powered tool that helps businesses create a Lean Canvas from a description of their company. It simplifies summarizing key aspects of a business model, such as target market, value proposition, revenue streams, cost structure, and key metrics. The tool is based on the Lean Startup methodology, which emphasizes rapid experimentation and iterative development to reduce risk and uncertainty in the early stages of a business. The methodology offers a flexible, adaptable approach to building businesses and is often used alongside customer development and agile development practices.

Co-Founder AI

Co-Founder AI is an AI-powered tool that accelerates startup success by providing in-depth business reports and actionable insights. It utilizes AI to generate well-structured business plans and offers essential insights to validate IT-business ideas. The tool covers various aspects such as market trends, competitor analysis, sales techniques, and fundraising strategies, enabling users to make data-driven decisions for driving growth.

SunDevs

SunDevs is an AI application that focuses on solving business problems to provide exceptional customer experiences. The application offers various AI solutions, features, and resources to help businesses in different industries enhance their operations and customer interactions. SunDevs utilizes AI technology, such as chatbots and virtual assistants, to automate and scale business processes, leading to improved efficiency and customer satisfaction.

JobMojito

JobMojito is an AI Interview Platform that offers real-time avatar and voice interviews for job candidates. It provides interview coaching, job preparation, and support in English. The platform allows users to screen, evaluate, and select top talent using an AI Avatar that converses with candidates in real-time. JobMojito offers a comprehensive solution for managing the entire interview process, including preparation, conducting interviews with the avatar, providing instant feedback, and assessing candidates using AI technology. The platform is designed to attract new talent and streamline the recruitment process for organizations.

Lenny Rachitsky

Lenny Rachitsky is a website that offers insights and resources for product managers and entrepreneurs. It provides valuable articles, guides, and interviews to help professionals improve their product management skills and grow their businesses. The platform covers a wide range of topics such as product strategy, user research, and team management, making it a valuable resource for anyone working in the product development field.

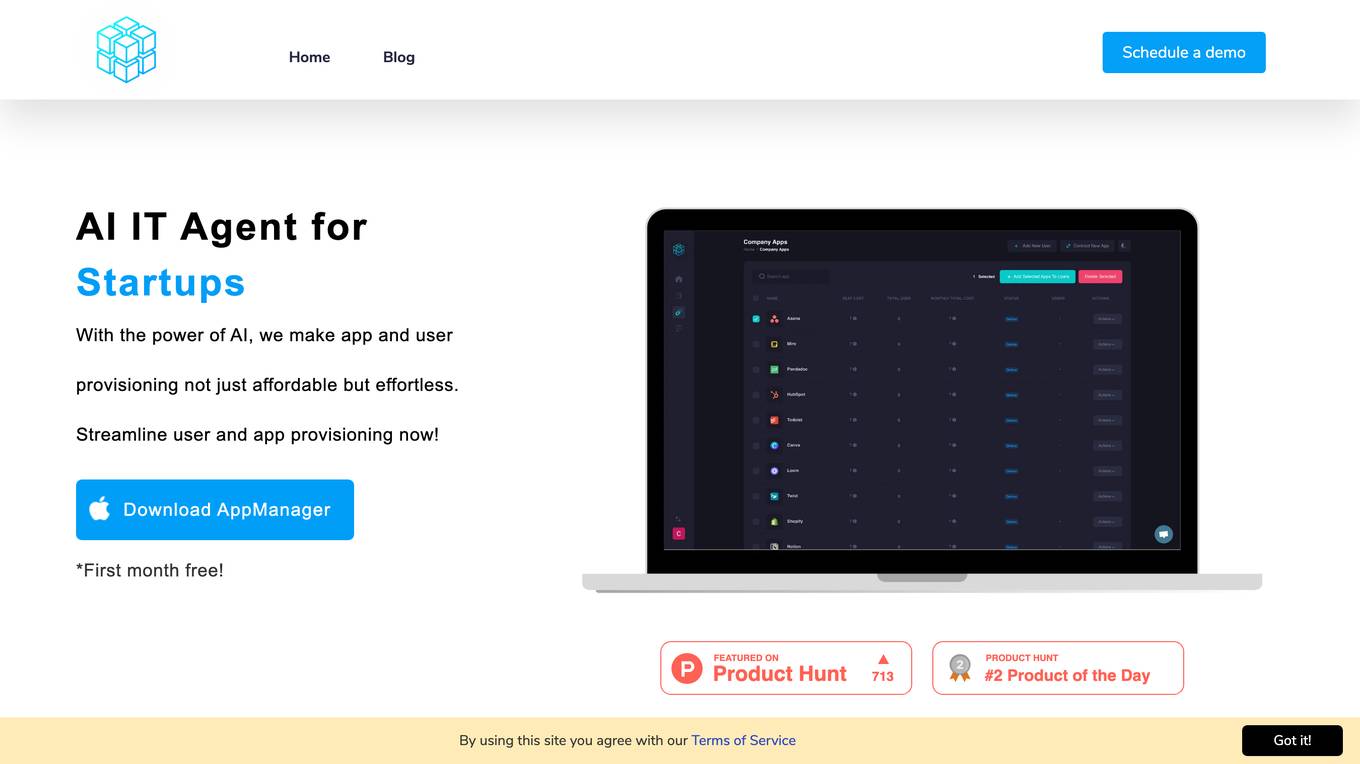

AppManager

AppManager is an AI IT agent designed specifically for startups to streamline app and user provisioning processes. With the power of AI, AppManager makes managing app subscriptions, user permissions, and payment methods effortless and cost-effective. It helps startups focus on growth by simplifying IT management tasks and providing smart spending insights.

WriteMyPrd

WriteMyPrd is an AI tool designed to make writing Product Requirements Documents (PRDs) easier and more efficient. Built on ChatGPT, it helps users quickly generate PRDs from basic information. Users can access resources, templates, and guides for writing effective PRDs. The tool aims to simplify starting and outlining product planning and delivery through a user-friendly interface.