Best AI tools for < Integrate Large Models >

20 - AI tool Sites

Ai Helper

Ai Helper is an AI application that integrates artificial intelligence into your computer to provide various functions such as interacting with websites, PDFs, and videos, composing emails, optimizing SEO articles, automating workflows, coding assistance, and more. It offers a user-friendly interface and supports multiple AI engines and large models to meet different needs. Ai Helper is designed to enhance productivity and efficiency in various tasks across different domains.

LlamaIndex

LlamaIndex is a leading data framework designed for building LLM (Large Language Model) applications. It allows enterprises to turn their data into production-ready applications by providing functionalities such as loading data from various sources, indexing data, orchestrating workflows, and evaluating application performance. The platform offers extensive documentation, community-contributed resources, and integration options to support developers in creating innovative LLM applications.

Fleak AI Workflows

Fleak AI Workflows is a low-code serverless API builder designed for data teams to integrate, consolidate, and scale their data workflows. It simplifies creating, connecting, and deploying workflows in minutes, offering intuitive tools to handle data transformations and integrate AI models. Fleak lets users publish, manage, and monitor APIs without having to manage infrastructure. It supports data types such as JSON, SQL, CSV, and plain text, and allows integration with large language models, databases, and modern storage technologies.

YourGPT

YourGPT is a suite of next-generation AI products designed to empower businesses with the potential of Large Language Models (LLMs). Its products include a no-code AI Chatbot solution for customer support and LLM Spark, a developer platform for building and deploying production-ready LLM applications. YourGPT prioritizes data security and is GDPR compliant, ensuring the privacy and protection of customer data. With over 2,000 satisfied customers, YourGPT has earned trust through its commitment to quality and customer satisfaction.

OneSky Localization Agent

OneSky Localization Agent (OLA) is an AI-powered platform designed to streamline the localization process for mobile apps, games, websites, and software products. OLA leverages multiple Large Language Models (LLMs) and a collaborative translation approach by a team of AI agents to deliver high-quality, accurate translations. It offers post-editing solutions, real-time progress tracking, and seamless integration into development workflows. With a focus on precision-engineered AI translations and human touch, OLA aims to provide a smarter way for global growth through efficient localization.

BoltAI

BoltAI is a native, high-performance AI application for Mac users, offering an intuitive chat UI and powerful AI commands for various use cases. It provides features like AI coding assistance, content generation, and instant access to large language models. BoltAI is designed to enhance productivity across professions, from developers to students. It allows users to integrate AI into their workflow seamlessly, with features like custom AI assistants, a prompt library, and secure data handling.

Unsloth

Unsloth is an AI tool designed to make fine-tuning large language models such as Llama-3, Mistral, Phi-3, and Gemma 2x faster while using 70% less memory, with no degradation in accuracy. The tool provides documentation to help users train their custom models, covering essentials such as installing and updating Unsloth, creating datasets, and running and deploying models. Users can also integrate third-party tools and use platforms like Google Colab.

Azure AI Platform

Azure AI Platform by Microsoft offers a comprehensive suite of artificial intelligence services and tools for developers and businesses. It provides a unified platform for building, training, and deploying AI models, as well as integrating AI capabilities into applications. With a focus on generative AI, multimodal models, and large language models, Azure AI empowers users to create innovative AI-driven solutions across various industries. The platform also emphasizes content safety, scalability, and agility in managing AI projects, making it a valuable resource for organizations looking to leverage AI technologies.

CaseYak

CaseYak is an AI tool that uses neural network models and Large Language Models (LLMs) to predict the value of motor vehicle accident claims. It offers AI Lead Magnets that can generate projected claim values, chat with website users, and introduce potential clients to law firms. The tool aims to transform law firm websites into 24/7 lead generation machines by providing data-driven appraisals of cases and attracting highly qualified leads.

Tipis AI

Tipis AI is an AI assistant for data processing that uses Large Language Models (LLMs) to quickly read and analyze mainstream documents with enhanced precision. It can also generate charts, integrate with a wide range of mainstream databases and data sources, and facilitate seamless collaboration with other team members. Tipis AI is easy to use and requires no configuration.

AllAIs

AllAIs is an AI ecosystem platform that brings together various AI tools, including large language models (LLMs), image generation capabilities, and development plugins, into a unified ecosystem. It aims to enhance productivity by providing a comprehensive suite of tools for both creative and technical tasks. Users can access popular LLMs, generate high-quality images, and streamline their projects using web and Visual Studio Code plugins. The platform offers integration with other tools and services, multiple pricing tiers, and regular updates to ensure high performance and compatibility with new technologies.

Puppeteer

Puppeteer is an AI application that offers Gen AI Nurses to empower patient support in healthcare. It addresses staffing shortages and enhances access to quality care through personalized and human-like patient experiences. The platform revolutionizes patient intake with features like mental health companions, virtual assistants, streamlined data collection, and clinic customization. Additionally, Puppeteer provides a comprehensive solution for building conversational bots, real-time API and database integrations, and personalized user experiences. It also offers a chatbot service for direct patient interaction and support in psychological help-seeking. The platform is designed to enhance healthcare delivery through AI integration and Large Language Models (LLMs) for modern medical solutions.

Asktro

Asktro is an AI tool that brings natural language search and an AI assistant to static documentation websites. It offers a modern search experience powered by embedded text similarity search and large language models. Asktro provides a ready-to-go search UI, a plugin for data ingestion and indexing, documentation search, and an AI assistant for answering specific questions.

Koncile

Koncile is an AI-powered OCR solution that automates data extraction from various documents. It combines advanced OCR technology with large language models to transform unstructured data into structured information. Koncile can extract data from invoices, accounting documents, identity documents, and more, offering features like categorization, enrichment, and database integration. The tool is designed to streamline document management processes and accelerate data processing. Koncile is suitable for businesses of all sizes, providing flexible subscription plans and enterprise solutions tailored to specific needs.

Base64.ai

Base64.ai is an AI-powered document intelligence platform that offers a comprehensive solution for document processing and data extraction. It leverages advanced AI technology to automate business decisions, improve efficiency and accuracy, and support digital transformation. Base64.ai provides features such as GenAI models, Semantic AI, Custom Model Builder, Question & Answer capabilities, and Large Action Models to streamline document processing. The platform supports over 50 file formats and offers integrations with scanners, RPA platforms, and third-party software.

Altilia

Altilia is a Major Player in the Intelligent Document Processing market, offering a cloud-native, no-code, SaaS platform powered by composite AI. The platform enables businesses to automate complex document processing tasks, streamline workflows, and enhance operational performance. Altilia's solution leverages GPT and Large Language Models to extract structured data from unstructured documents, providing significant efficiency gains and cost savings for organizations of all sizes and industries.

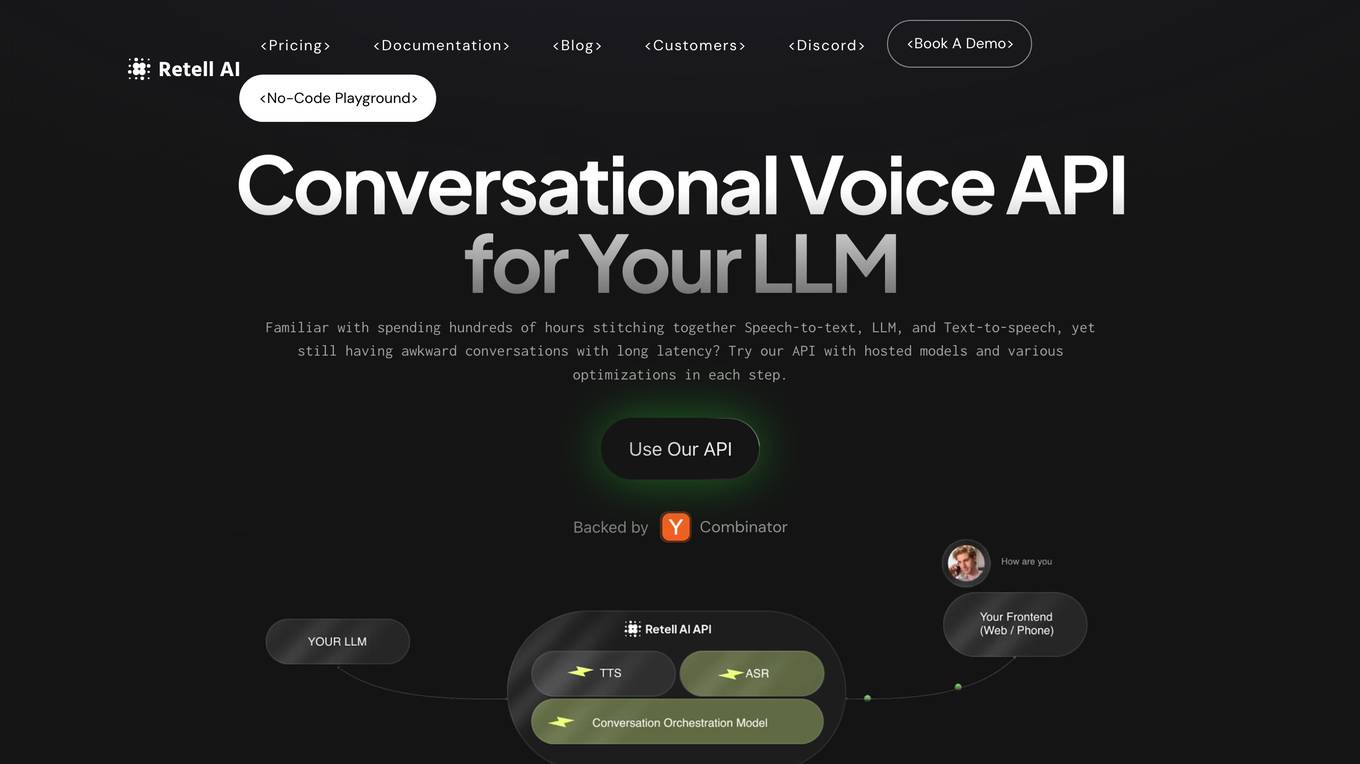

Retell AI

Retell AI provides a Conversational Voice API that enables developers to integrate human-like voice interactions into their applications. With the API, developers can connect their own Large Language Models (LLMs) to create AI-powered voice agents that hold natural, engaging conversations. The API offers a range of features, including ultra-low latency, realistic voices with emotions, interruption handling, and end-of-turn detection, for seamless and lifelike conversations. Developers can also customize aspects of the conversation experience, such as voice stability, backchanneling, and custom voice cloning, to tailor the AI agent to their specific needs. The API is designed to be easy to integrate with existing LLMs and frontend applications, making it accessible to developers of all levels.

GPT Calculator

GPT Calculator is a free tool that helps you calculate the token count and cost of your GPT prompts. You can also use the API to integrate the calculator into your own applications. GPT Calculator is a valuable tool for anyone who uses GPT-3 or other large language models.

BigCheese.ai

BigCheese.ai is an AI application that helps companies launch and market AI products. The platform offers workshops, podcasts, and news updates to assist businesses in integrating AI into their products. Additionally, BigCheese.ai provides AI training seminars, prompt engineering, and custom AI workers to enhance productivity and efficiency. With a focus on data privacy and practical AI application for businesses, BigCheese.ai aims to support small and midsize companies in their AI transformation journey.

Officely AI

Officely AI is an AI application that offers a platform for users to access and use various large language models (LLMs). Users can let these models interact with each other and integrate seamlessly. The platform provides tools, use cases, channels, and pricing options for users to explore and leverage the power of AI in their processes.

3 - Open Source AI Tools

langchat

LangChat is an enterprise AIGC project solution in the Java ecosystem. It integrates AIGC large model functionality on top of an RBAC permission system to help enterprises quickly customize AI knowledge bases and enterprise AI robots. It supports integration with various large models such as OpenAI, Gemini, Ollama, Azure, Zhipu, Alibaba Tongyi, Baidu Qianfan, etc. The project is developed solely by TyCoding and is continuously evolving. It features multi-modality, dynamic configuration, knowledge base support, advanced RAG capabilities, function call customization, multi-channel deployment, workflow visualization, an AIGC client application, and more.

fit-framework

FIT Framework is a Java enterprise AI development framework that provides a multi-language function engine (FIT), a flow orchestration engine (WaterFlow), and a LangChain alternative for the Java ecosystem (FEL). It runs in native/Spring dual mode and supports plug-and-play and intelligent deployment, seamlessly unifying large models and business systems. FIT Core offers a language-agnostic computation base with plugin hot-swapping and intelligent deployment. WaterFlow Engine breaks the dimensional barrier between BPM and reactive programming, enabling graphical orchestration and declarative API-driven logic composition. FEL revolutionizes LangChain for the Java ecosystem, encapsulating large models, knowledge bases, and toolchains to integrate AI capabilities into the Java technology stack seamlessly. The framework emphasizes engineering practices with intelligent conventions to reduce boilerplate code and offers flexibility for deep customization in complex scenarios.

Code-Review-GPT-Gitlab

A project that uses large models to help with code review on GitLab, aimed at improving development efficiency. The project is customized for GitLab and is developing a multi-agent plugin for collaborative review. It integrates various large models to check for code security issues and stays updated with the latest code review trends. The project architecture is designed to be powerful, flexible, and efficient, with easy integration of different models and high customization for developers.

20 - OpenAI GPTs

Home Automation Consultant

Helps integrate smart devices into home environments, ensuring ease of use and energy efficiency.

Missing Cluster Identification Program

I analyze and integrate missing clusters in data for coherent structuring.

Kafka Expert

I will help you to integrate the popular distributed event streaming platform Apache Kafka into your own cloud solutions.

ESG Strategy Navigator 🌱🧭

Optimize your business with sustainable practices! ESG Strategy Navigator helps integrate Environmental, Social, Governance (ESG) factors into corporate strategy, ensuring compliance, ethical impact, and value creation. 🌟

Consistent Image Generator

Generate an image ➡ Request modifications. This GPT supports generating consistent and continuous images with DALL·E. It also offers the ability to restore or integrate photos you upload. ✔️ Where to use: WordPress blog post, YouTube thumbnail, AI profile, Facebook, X, Threads feed, Instagram Reels

SEO InLink Optimizer

GPT created by Max Del Rosso for SEO optimization, specialized in identifying internal linking opportunities. Through the review of existing content, it suggests targeted changes to integrate effective anchor texts, contributing to improving SERP rankings and user experience.

Quick QR Art - QR Code AI Art Generator

Create, Customize, and Track Stunning QR Code Art with Our Free QR Code AI Art Generator. Seamlessly integrate these artistic codes into your marketing materials, packaging, and digital platforms.

Flashcard Maker, Research, Learn and Send to Anki

Creates educational flashcards and integrates with Anki.

System Sync

Expert in AiOS integration, technical troubleshooting, and IP rights management.

DevSecOps Guides

Comprehensive resource for integrating security into the software development lifecycle.

Odoo OCA Modules Advisor

Senior Odoo Engineer and OCA (Odoo Community Association) expert, advising on Odoo modules and solutions.