Best AI tools for < Bind Services >

1 - AI Tool Sites

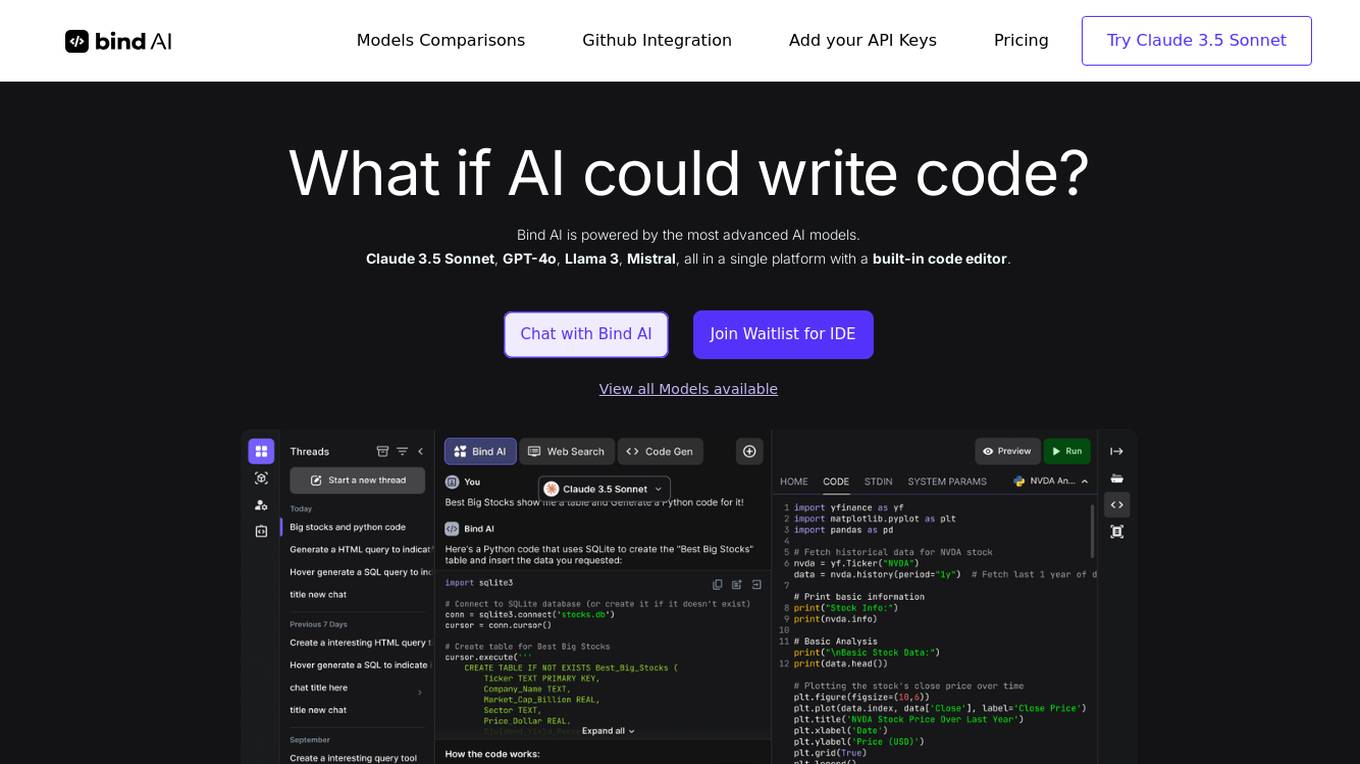

Bind AI

Bind AI is an AI coding tool that generates code, scripts, and applications using a variety of AI models. Its platform includes a built-in code editor and supports over 72 programming languages, including Python, Java, and JavaScript. With the AI Code Editor, users can create front-end web applications, parse JSON, write SQL queries, and automate tasks. Bind AI also integrates with GitHub and Google Drive, so users can sync their codebase and files for collaboration and development.

1 - Open Source AI Tools

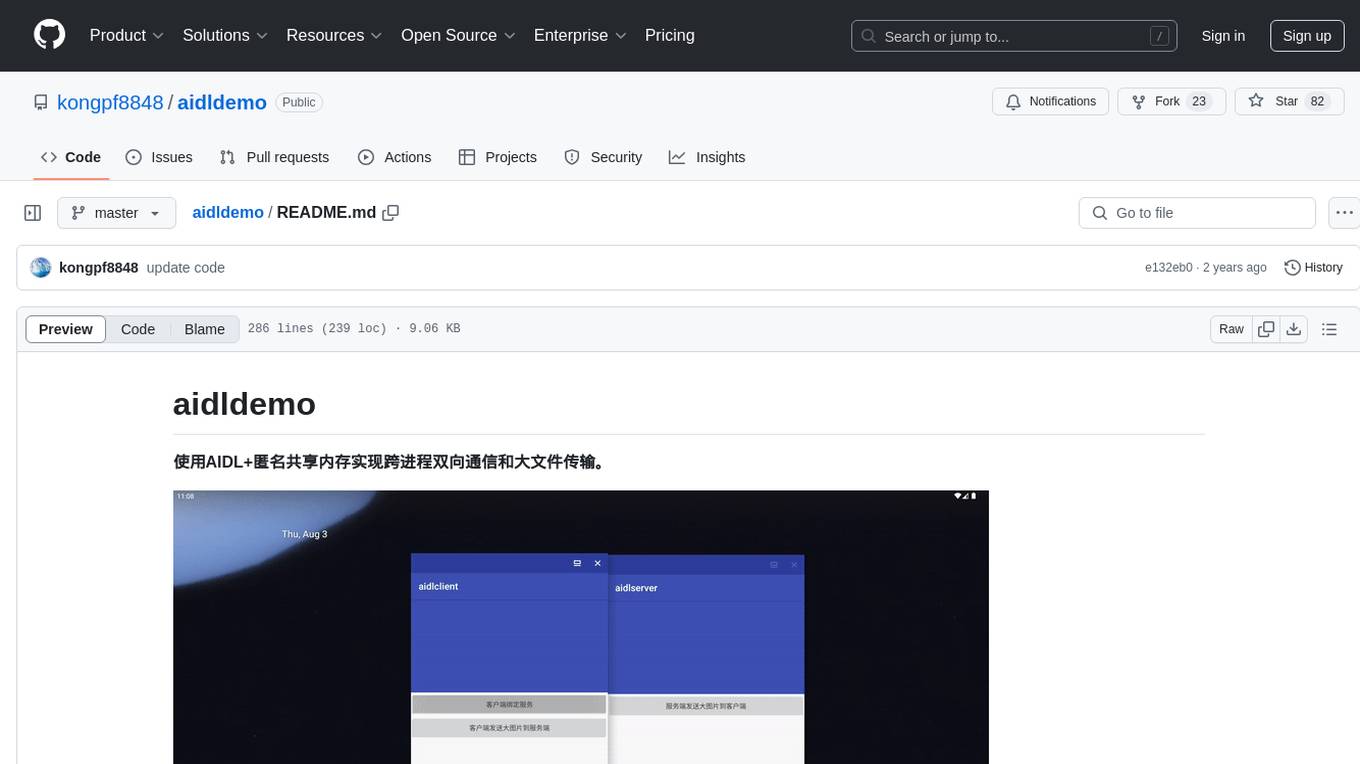

aidldemo

This repository demonstrates cross-process bidirectional communication and large file transfer on Android using AIDL and anonymous shared memory. AIDL (Android Interface Definition Language) is Android's mechanism for Inter-Process Communication (IPC), built on Binder. Because Binder limits the size of the data it can carry, the repository uses anonymous shared memory for large file transfer. With shared memory, processes map a common memory region into their own address spaces, which makes transferring large data between them efficient. Shared memory provides no synchronization of its own, however, so additional mechanisms such as semaphores are required. Android's anonymous shared memory (Ashmem) is based on Linux shared memory and hands the shared region from one process to another using Binder and a FileDescriptor. The repository provides practical examples of bidirectional communication and large file transfer between client and server using AIDL interfaces and MemoryFile.
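The mapping idea described above can be illustrated with a minimal sketch. This is not the repository's code: Android's MemoryFile and Binder are not available on a desktop JVM, so the sketch swaps in a `java.nio` memory-mapped temporary file as a stand-in for the shared region. The class name `SharedMemorySketch`, the methods `writePayload` and `readPayload`, and the length-prefix layout are all hypothetical, chosen only for illustration.

```java
import java.io.IOException;
import java.io.RandomAccessFile;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Arrays;

// Hypothetical illustration: a memory-mapped file stands in for Ashmem/MemoryFile.
class SharedMemorySketch {

    // "Sender" side: map the region read-write and copy the payload into it.
    // Assumed layout: a 4-byte length prefix followed by the payload bytes.
    static void writePayload(Path region, byte[] payload) throws IOException {
        try (RandomAccessFile file = new RandomAccessFile(region.toFile(), "rw");
             FileChannel channel = file.getChannel()) {
            MappedByteBuffer view = channel.map(
                    FileChannel.MapMode.READ_WRITE, 0, Integer.BYTES + payload.length);
            view.putInt(payload.length);
            view.put(payload);
            view.force(); // flush the mapped pages to the backing file
        }
    }

    // "Receiver" side: map the same region read-only and copy the payload out.
    static byte[] readPayload(Path region) throws IOException {
        try (RandomAccessFile file = new RandomAccessFile(region.toFile(), "r");
             FileChannel channel = file.getChannel()) {
            MappedByteBuffer view = channel.map(
                    FileChannel.MapMode.READ_ONLY, 0, channel.size());
            byte[] payload = new byte[view.getInt()];
            view.get(payload);
            return payload;
        }
    }

    public static void main(String[] args) throws IOException {
        Path region = Files.createTempFile("shared-region", ".bin");
        region.toFile().deleteOnExit();

        byte[] payload = new byte[2 * 1024 * 1024]; // arbitrary 2 MiB test payload
        Arrays.fill(payload, (byte) 0x5A);

        // The write finishes before the read starts, so no locking is needed here.
        // Two real processes would need their own synchronization, as noted above.
        writePayload(region, payload);
        byte[] received = readPayload(region);
        System.out.println("round trip ok: " + Arrays.equals(payload, received));
    }
}
```

In this sketch only the location of the region (here a file path, on Android a file descriptor) would need to cross the process boundary, which is why the approach sidesteps the size limit of the IPC channel itself.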