moxin

Moxin: an AI LLM platform in pure Rust

Stars: 136

Moxin is an AI LLM client written in Rust to demonstrate the functionality of the Robius framework for multi-platform application development. It is currently in early stages of development and not fully functional. The tool supports building and running on macOS and Linux systems, with packaging options available for distribution. Users can install the required WasmEdge WASM runtime and dependencies to build and run Moxin. Packaging for distribution includes generating `.deb` Debian packages, AppImage, and pacman installation packages for Linux, as well as `.app` bundles and `.dmg` disk images for macOS. The macOS app is not signed, leading to a warning on installation, which can be resolved by removing the quarantine attribute from the installed app.

README:

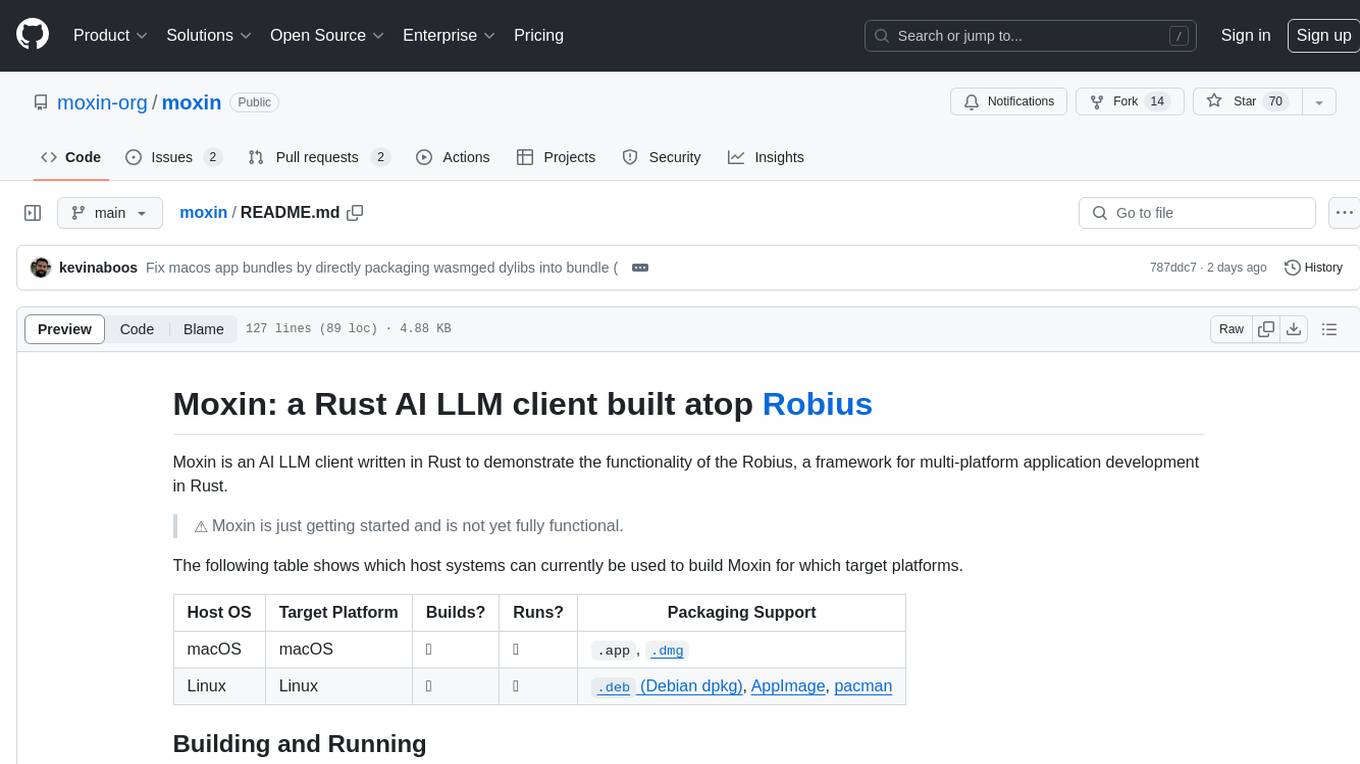

Moxin: a Rust AI LLM client built atop Robius

Moxin is an AI LLM client written in Rust, and demonstrates the power of the Makepad UI toolkit and Project Robius, a framework for multi-platform application development in Rust.

⚠️ Moxin is in beta. Please file an issue if you encounter bugs or unexpected results.

The following table shows which host systems can currently be used to build Moxin for which target platforms.

| Host OS | Target Platform | Builds? | Runs? | Packaging Support |

|---|---|---|---|---|

| macOS | macOS | ✅ | ✅ |

.app, .dmg

|

| Linux | Linux | ✅ | ✅ |

.deb (Debian dpkg), AppImage, pacman

|

| Windows | Windows (10+) | ✅ | ✅ |

.exe (NSIS) |

-

Obtain the source code for this repository:

git clone https://github.com/moxin-org/moxin.git[!TIP] On all platforms, you can use our helper program to auto-setup WasmEdge for you and run any

cargocommand:cargo run -p moxin-runner -- --install ## finds or installs WasmEdge, then stops. cargo run -p moxin-runner -- cargo build ## builds Moxin cargo run -p moxin-runner -- cargo run ## builds and runs Moxin cargo run -p moxin-runner -- cargo [your-command-here]

Install the required WasmEdge WASM runtime (or use moxin-runner):

curl -sSf https://raw.githubusercontent.com/WasmEdge/WasmEdge/master/utils/install_v2.sh | bash -s -- --version=0.14.0

source $HOME/.wasmedge/envThen use cargo to build and run Moxin:

cd moxin

cargo run --releaseInstall the required WasmEdge WASM runtime (or use moxin-runner):

curl -sSf https://raw.githubusercontent.com/WasmEdge/WasmEdge/master/utils/install_v2.sh | bash -s -- --version=0.14.0

source $HOME/.wasmedge/env[!IMPORTANT] If your CPU does not support AVX512, then you should append the

--noavxoption onto the above command. If you usemoxin-runner, it will handle this for you.

To build Moxin on Linux, you must install the following dependencies:

openssl, clang/libclang, binfmt, Xcursor/X11, asound/pulse.

On a Debian-like Linux distro (e.g., Ubuntu), run the following:

sudo apt-get update

sudo apt-get install libssl-dev pkg-config llvm clang libclang-dev binfmt-support libxcursor-dev libx11-dev libasound2-dev libpulse-devThen use cargo to build and run Moxin:

cd moxin

cargo run --release- Download and install the LLVM v17.0.6 release for Windows: Here is a direct link to LLVM-17.0.6-win64.exe, 333MB in size.

[!IMPORTANT] During the setup procedure, make sure to select

Add LLVM to the system PATH for all usersorfor the current user.

-

Restart your PC, or log out and log back in, which allows the LLVM path to be properly

- Alternatively you can add the LLVM path

C:\Program Files\LLVM\binto your system PATH.

- Alternatively you can add the LLVM path

-

Use

moxin-runnerto auto-setup WasmEdge and then build & run Moxin:

cargo run -p moxin-runner -- cargo run --release ## `--release` is optionalNote: we already have pre-built releases of Moxin available for download.

Install cargo-packager:

rustup update stable ## Rust version 1.79 or higher is required

cargo +stable install --force --locked cargo-packagerFor posterity, these instructions have been tested on cargo-packager version 0.10.1, which requires Rust v1.79.

On a Debian-based Linux distribution (e.g., Ubuntu), you can generate a .deb Debian package, an AppImage, and a pacman installation package.

[!IMPORTANT] You can only generate a

.debDebian package on a Debian-based Linux distribution, asdpkgis needed.

[!NOTE] The

pacmanpackage has not yet been tested.

Ensure you are in the root moxin directory, and then you can use cargo packager to generate all three package types at once:

cargo packager --release --verbose ## --verbose is optionalTo install the Moxin app from the .debpackage on a Debian-based Linux distribution (e.g., Ubuntu), run:

cd dist/

sudo apt install ./moxin_0.1.0_amd64.deb ## The "./" part is requiredWe recommend using apt install to install the .deb file instead of dpkg -i, because apt will auto-install all of Moxin's required dependencies, whereas dpkg will require you to install them manually.

To run the AppImage bundle, simply set the file as executable and then run it:

cd dist/

chmod +x moxin_0.1.0_x86_64.AppImage

./moxin_0.1.0_x86_64.AppImageThis can only be run on an actual Windows machine, due to platform restrictions.

First, follow the above instructions for building on Windows.

Ensure you are in the root moxin directory, and then you can use cargo packager to generate a setup.exe file using NSIS:

cargo run -p moxin-runner -- cargo packager --release --formats nsis --verbose ## --verbose is optionalAfter the command completes, you should see a Windows installer called moxin_0.1.0_x64-setup in the dist/ directory.

Double-click that file to install Moxin on your machine, and then run it as you would a regular application.

This can only be run on an actual macOS machine, due to platform restrictions.

Ensure you are in the root moxin directory, and then you can use cargo packager to generate an .app bundle and a .dmg disk image:

cargo packager --release --verbose ## --verbose is optional[!IMPORTANT] You will see a .dmg window pop up — please leave it alone, it will auto-close once the packaging procedure has completed.

[!TIP] If you receive the following error:

ERROR cargo_packager::cli: Error running create-dmg script: File exists (os error 17)then open Finder and unmount any Moxin-related disk images, then try the above

cargo packagercommand again.

[!TIP] If you receive an error like so:

Creating disk image... hdiutil: create failed - Operation not permitted could not access /Volumes/Moxin/Moxin.app - Operation not permittedthen you need to grant "App Management" permissions to the app in which you ran the

cargo packagercommand, e.g., Terminal, Visual Studio Code, etc. To do this, openSystem Preferences→Privacy & Security→App Management, and then click the toggle switch next to the relevant app to enable that permission. Then, try the abovecargo packagercommand again.

After the command completes, you should see both the Moxin.app and the .dmg in the dist/ directory.

You can immediately double-click the Moxin.app bundle to run it, or you can double-click the .dmg file to

Note that the

.dmgis what should be distributed for installation on other machines, not the.app.

If you'd like to modify the .dmg background, here is the Google Drawings file used to generate the MacOS .dmg background image.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for moxin

Similar Open Source Tools

moxin

Moxin is an AI LLM client written in Rust to demonstrate the functionality of the Robius framework for multi-platform application development. It is currently in early stages of development and not fully functional. The tool supports building and running on macOS and Linux systems, with packaging options available for distribution. Users can install the required WasmEdge WASM runtime and dependencies to build and run Moxin. Packaging for distribution includes generating `.deb` Debian packages, AppImage, and pacman installation packages for Linux, as well as `.app` bundles and `.dmg` disk images for macOS. The macOS app is not signed, leading to a warning on installation, which can be resolved by removing the quarantine attribute from the installed app.

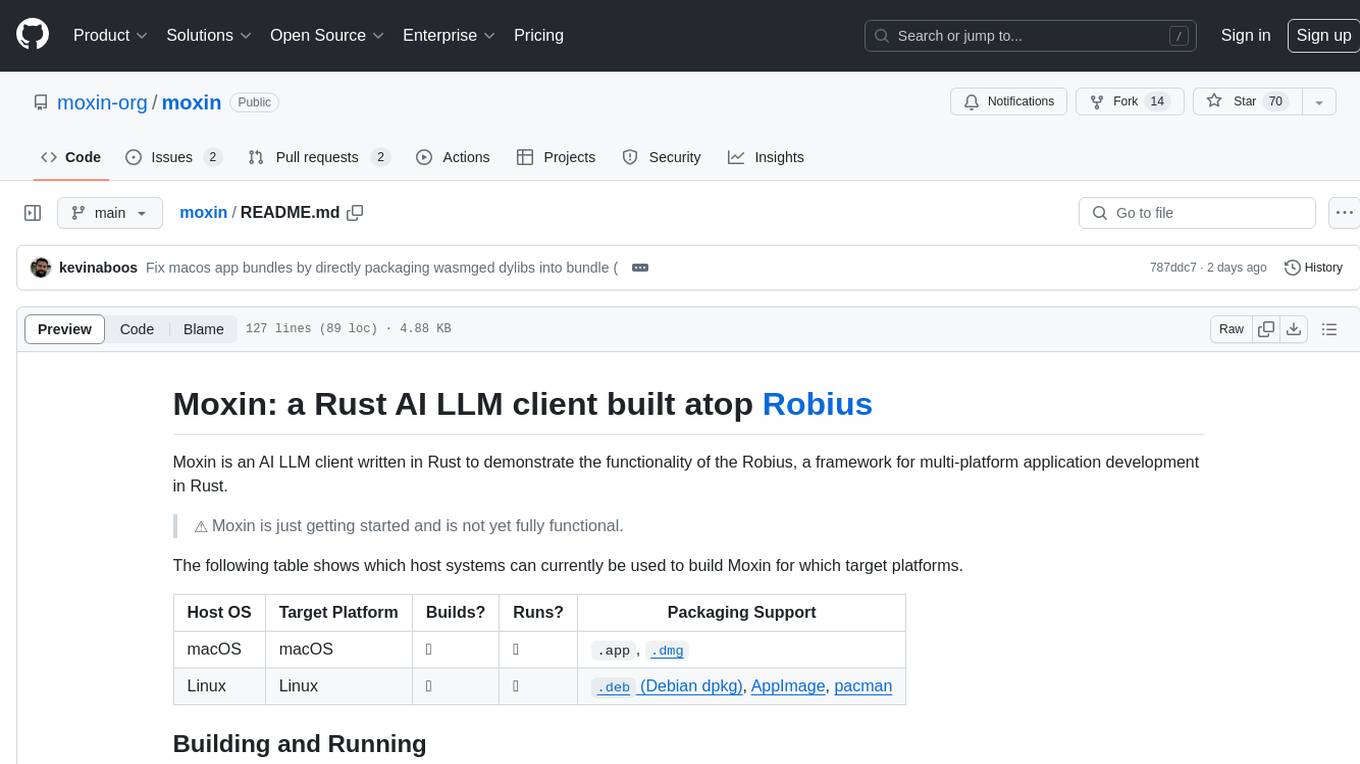

moly

Moly is an AI LLM client written in Rust, showcasing the capabilities of the Makepad UI toolkit and Project Robius, a framework for multi-platform application development in Rust. It is currently in beta, allowing users to build and run Moly on macOS, Linux, and Windows. The tool provides packaging support for different platforms, such as `.app`, `.dmg`, `.deb`, AppImage, pacman, and `.exe` (NSIS). Users can easily set up WasmEdge using `moly-runner` and leverage `cargo` commands to build and run Moly. Additionally, Moly offers pre-built releases for download and supports packaging for distribution on Linux, Windows, and macOS.

cli-agent

Pieces CLI for Developers is a comprehensive command-line interface (CLI) tool designed to interact seamlessly with Pieces OS. It provides functionalities such as asset management, application interaction, and integration with various Pieces OS features. The tool is compatible with Windows 10 or greater, Mac, and Windows operating systems. Users can install the tool by running 'pip install pieces-cli' or 'brew install pieces-cli'. After installation, users can access the tool's functionalities through the terminal by using the 'pieces' command followed by subcommands and options. The tool supports various commands, which can be found in the documentation. Developers can contribute to the project by forking and cloning the repository, setting up a virtual environment, installing dependencies with poetry, and running test cases with pytest and coverage.

anterion

Anterion is an open-source AI software engineer that extends the capabilities of `SWE-agent` to plan and execute open-ended engineering tasks, with a frontend inspired by `OpenDevin`. It is designed to help users fix bugs and prototype ideas with ease. Anterion is equipped with easy deployment and a user-friendly interface, making it accessible to users of all skill levels.

mods

AI for the command line, built for pipelines. LLM based AI is really good at interpreting the output of commands and returning the results in CLI friendly text formats like Markdown. Mods is a simple tool that makes it super easy to use AI on the command line and in your pipelines. Mods works with OpenAI, Groq, Azure OpenAI, and LocalAI To get started, install Mods and check out some of the examples below. Since Mods has built-in Markdown formatting, you may also want to grab Glow to give the output some _pizzazz_.

please-cli

Please CLI is an AI helper script designed to create CLI commands by leveraging the GPT model. Users can input a command description, and the script will generate a Linux command based on that input. The tool offers various functionalities such as invoking commands, copying commands to the clipboard, asking questions about commands, and more. It supports parameters for explanation, using different AI models, displaying additional output, storing API keys, querying ChatGPT with specific models, showing the current version, and providing help messages. Users can install Please CLI via Homebrew, apt, Nix, dpkg, AUR, or manually from source. The tool requires an OpenAI API key for operation and offers configuration options for setting API keys and OpenAI settings. Please CLI is licensed under the Apache License 2.0 by TNG Technology Consulting GmbH.

termax

Termax is an LLM agent in your terminal that converts natural language to commands. It is featured by: - Personalized Experience: Optimize the command generation with RAG. - Various LLMs Support: OpenAI GPT, Anthropic Claude, Google Gemini, Mistral AI, and more. - Shell Extensions: Plugin with popular shells like `zsh`, `bash` and `fish`. - Cross Platform: Able to run on Windows, macOS, and Linux.

tiledesk-dashboard

Tiledesk is an open-source live chat platform with integrated chatbots written in Node.js and Express. It is designed to be a multi-channel platform for web, Android, and iOS, and it can be used to increase sales or provide post-sales customer service. Tiledesk's chatbot technology allows for automation of conversations, and it also provides APIs and webhooks for connecting external applications. Additionally, it offers a marketplace for apps and features such as CRM, ticketing, and data export.

garak

Garak is a free tool that checks if a Large Language Model (LLM) can be made to fail in a way that is undesirable. It probes for hallucination, data leakage, prompt injection, misinformation, toxicity generation, jailbreaks, and many other weaknesses. Garak's a free tool. We love developing it and are always interested in adding functionality to support applications.

chat-ui

A chat interface using open source models, eg OpenAssistant or Llama. It is a SvelteKit app and it powers the HuggingChat app on hf.co/chat.

ML-Bench

ML-Bench is a tool designed to evaluate large language models and agents for machine learning tasks on repository-level code. It provides functionalities for data preparation, environment setup, usage, API calling, open source model fine-tuning, and inference. Users can clone the repository, load datasets, run ML-LLM-Bench, prepare data, fine-tune models, and perform inference tasks. The tool aims to facilitate the evaluation of language models and agents in the context of machine learning tasks on code repositories.

hash

HASH is a self-building, open-source database which grows, structures and checks itself. With it, we're creating a platform for decision-making, which helps you integrate, understand and use data in a variety of different ways.

ai-starter-kit

SambaNova AI Starter Kits is a collection of open-source examples and guides designed to facilitate the deployment of AI-driven use cases for developers and enterprises. The kits cover various categories such as Data Ingestion & Preparation, Model Development & Optimization, Intelligent Information Retrieval, and Advanced AI Capabilities. Users can obtain a free API key using SambaNova Cloud or deploy models using SambaStudio. Most examples are written in Python but can be applied to any programming language. The kits provide resources for tasks like text extraction, fine-tuning embeddings, prompt engineering, question-answering, image search, post-call analysis, and more.

codespin

CodeSpin.AI is a set of open-source code generation tools that leverage large language models (LLMs) to automate coding tasks. With CodeSpin, you can generate code in various programming languages, including Python, JavaScript, Java, and C++, by providing natural language prompts. CodeSpin offers a range of features to enhance code generation, such as custom templates, inline prompting, and the ability to use ChatGPT as an alternative to API keys. Additionally, CodeSpin provides options for regenerating code, executing code in prompt files, and piping data into the LLM for processing. By utilizing CodeSpin, developers can save time and effort in coding tasks, improve code quality, and explore new possibilities in code generation.

garak

Garak is a vulnerability scanner designed for LLMs (Large Language Models) that checks for various weaknesses such as hallucination, data leakage, prompt injection, misinformation, toxicity generation, and jailbreaks. It combines static, dynamic, and adaptive probes to explore vulnerabilities in LLMs. Garak is a free tool developed for red-teaming and assessment purposes, focusing on making LLMs or dialog systems fail. It supports various LLM models and can be used to assess their security and robustness.

1.5-Pints

1.5-Pints is a repository that provides a recipe to pre-train models in 9 days, aiming to create AI assistants comparable to Apple OpenELM and Microsoft Phi. It includes model architecture, training scripts, and utilities for 1.5-Pints and 0.12-Pint developed by Pints.AI. The initiative encourages replication, experimentation, and open-source development of Pint by sharing the model's codebase and architecture. The repository offers installation instructions, dataset preparation scripts, model training guidelines, and tools for model evaluation and usage. Users can also find information on finetuning models, converting lit models to HuggingFace models, and running Direct Preference Optimization (DPO) post-finetuning. Additionally, the repository includes tests to ensure code modifications do not disrupt the existing functionality.

For similar tasks

python-tutorial-notebooks

This repository contains Jupyter-based tutorials for NLP, ML, AI in Python for classes in Computational Linguistics, Natural Language Processing (NLP), Machine Learning (ML), and Artificial Intelligence (AI) at Indiana University.

open-parse

Open Parse is a Python library for visually discerning document layouts and chunking them effectively. It is designed to fill the gap in open-source libraries for handling complex documents. Unlike text splitting, which converts a file to raw text and slices it up, Open Parse visually analyzes documents for superior LLM input. It also supports basic markdown for parsing headings, bold, and italics, and has high-precision table support, extracting tables into clean Markdown formats with accuracy that surpasses traditional tools. Open Parse is extensible, allowing users to easily implement their own post-processing steps. It is also intuitive, with great editor support and completion everywhere, making it easy to use and learn.

MoonshotAI-Cookbook

The MoonshotAI-Cookbook provides example code and guides for accomplishing common tasks with the MoonshotAI API. To run these examples, you'll need an MoonshotAI account and associated API key. Most code examples are written in Python, though the concepts can be applied in any language.

AHU-AI-Repository

This repository is dedicated to the learning and exchange of resources for the School of Artificial Intelligence at Anhui University. Notes will be published on this website first: https://www.aoaoaoao.cn and will be synchronized to the repository regularly. You can also contact me at [email protected].

modern_ai_for_beginners

This repository provides a comprehensive guide to modern AI for beginners, covering both theoretical foundations and practical implementation. It emphasizes the importance of understanding both the mathematical principles and the code implementation of AI models. The repository includes resources on PyTorch, deep learning fundamentals, mathematical foundations, transformer-based LLMs, diffusion models, software engineering, and full-stack development. It also features tutorials on natural language processing with transformers, reinforcement learning, and practical deep learning for coders.

Building-AI-Applications-with-ChatGPT-APIs

This repository is for the book 'Building AI Applications with ChatGPT APIs' published by Packt. It provides code examples and instructions for mastering ChatGPT, Whisper, and DALL-E APIs through building innovative AI projects. Readers will learn to develop AI applications using ChatGPT APIs, integrate them with frameworks like Flask and Django, create AI-generated art with DALL-E APIs, and optimize ChatGPT models through fine-tuning.

examples

This repository contains a collection of sample applications and Jupyter Notebooks for hands-on experience with Pinecone vector databases and common AI patterns, tools, and algorithms. It includes production-ready examples for review and support, as well as learning-optimized examples for exploring AI techniques and building applications. Users can contribute, provide feedback, and collaborate to improve the resource.

lingoose

LinGoose is a modular Go framework designed for building AI/LLM applications. It offers the flexibility to import only the necessary modules, abstracts features for customization, and provides a comprehensive solution for developing AI/LLM applications from scratch. The framework simplifies the process of creating intelligent applications by allowing users to choose preferred implementations or create their own. LinGoose empowers developers to leverage its capabilities to streamline the development of cutting-edge AI and LLM projects.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.