Best AI tools for Deploy Project

20 - AI tool Sites

GPT Engineer

GPT Engineer is an AI tool designed to help users build web applications 10x faster by chatting with AI. Users can sync their projects with GitHub and deploy them with a single click. Example projects include a page displaying top stories from Hacker News, a startup landing page, a crypto portfolio tracker, and a tool for managing startup operations, with front ends built on React, Tailwind, and Vite. GPT Engineer is currently in beta and aims to streamline the web development process.

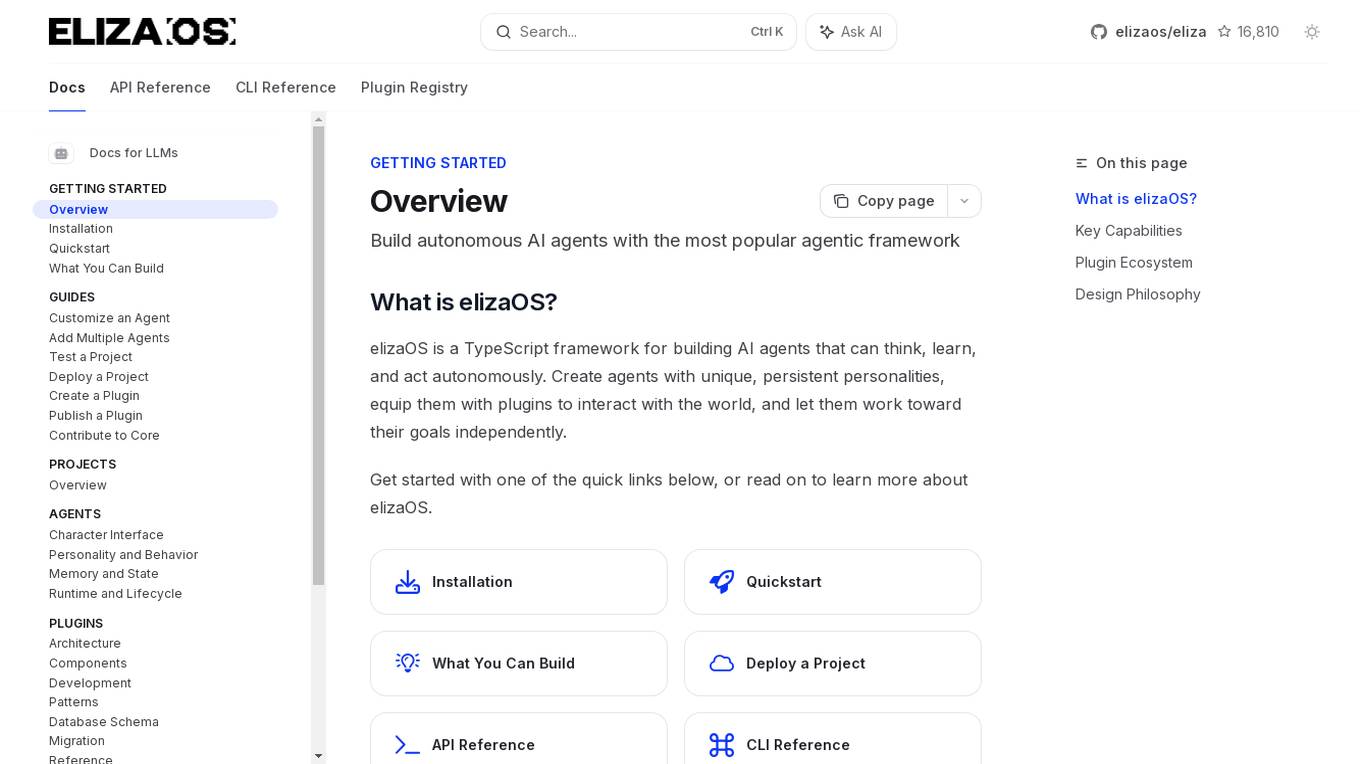

ElizaOS

ElizaOS is a TypeScript framework designed for building autonomous AI agents that can think, learn, and act independently. It offers a wide range of capabilities, including developing unique personalities, interacting with the real world through various plugins, executing complex action chains triggered by natural language, and remembering interactions with persistent memory. The framework comes with a rich plugin ecosystem, allowing users to mix and match capabilities without modifying core code. ElizaOS is ideal for builders who want to quickly ship projects, experiment freely, and contribute to the open-source community.

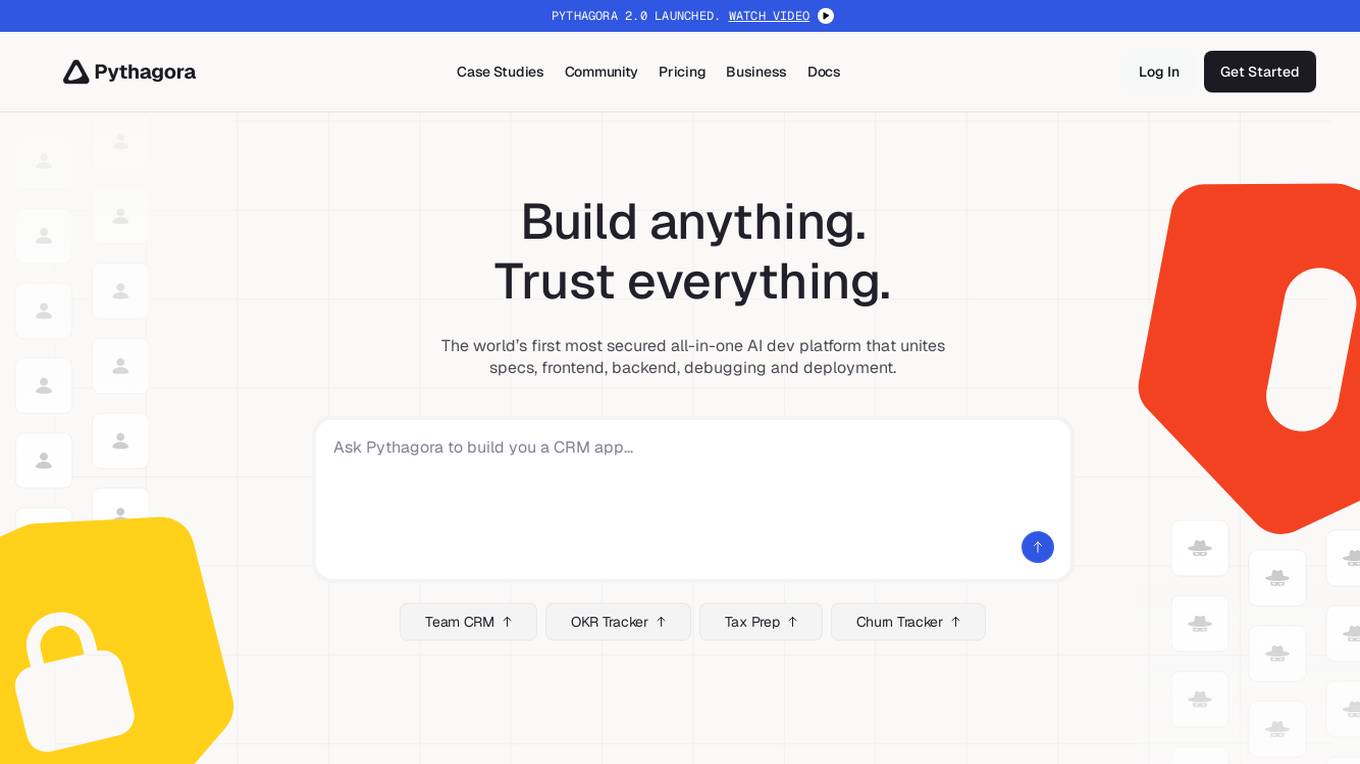

Pythagora

Pythagora is the world's first all-in-one AI development platform that offers a secure and comprehensive solution for building web applications. It combines frontend, backend, debugging, and deployment features in a single platform, enabling users to create apps without heavy coding requirements. Pythagora is powered by specialized AI agents and top-tier language models from OpenAI and Anthropic, providing users with tools for planning, writing, testing, and deploying full-stack web apps. The platform is designed to streamline the development process, offering enterprise-grade security, role-based authentication, and transparent control over projects.

Attri

Attri is a generative AI company specializing in custom AI solutions for enterprises. It uses generative AI and foundation models to drive innovation and accelerate digital transformation. Attri offers AI solutions for various industries, with a focus on responsible AI deployment and ethical innovation.

Replit

Replit is a software creation platform that provides an integrated development environment (IDE), artificial intelligence (AI) assistance, and deployment services. It allows users to build, test, and deploy software projects directly from their browser, without the need for local setup or configuration. Replit offers real-time collaboration, code generation, debugging, and autocompletion features powered by AI. It supports multiple programming languages and frameworks, making it suitable for a wide range of development projects.

ClawOneClick

ClawOneClick is an AI tool that allows users to deploy their own AI assistant in seconds without any technical setup. It offers one-click deployment of an always-on AI chatbot powered by the latest AI models. Users can choose from various AI models and messaging channels to customize their assistant. ClawOneClick handles all cloud infrastructure provisioning and management, and provides secure connections and end-to-end encryption. The assistant can help with tasks such as email summarization, quick replies, translation, proofreading, customer queries, report condensation, meeting reminders, voice memo transcription, deadline tracking, schedule organization, meeting action item capture, time zone coordination, task automation, expense logging, priority planning, content generation, idea brainstorming, fast topic research, book and article summarization, concept learning, creative suggestions, code explanation, document analysis, professional document drafting, project goal definition, team updates preparation, data trend interpretation, job posting writing, product and price comparison, meal plan suggestion, and more.

PixieBrix

PixieBrix is an AI engagement platform that allows users to build, deploy, and manage internal AI tools to drive team productivity. It unifies an organization's AI landscape with oversight and governance at enterprise scale. The platform is enterprise-ready, fully customizable, and can be deployed on any site, making it easy to integrate into existing systems. PixieBrix uses AI and automation to streamline workflows and raise productivity.

Trae

Trae is an adaptive AI IDE that aims to help users ship faster by transforming the way they work. It collaborates with users to enhance productivity and efficiency. The platform provides a range of features to streamline the development process and improve overall workflow.

DORA

DORA is a research program by Google Cloud that focuses on understanding the capabilities driving software delivery and operations performance. It helps teams apply these capabilities to enhance organizational performance. The program introduces the DORA AI Capabilities Model, identifying key technical and cultural practices that amplify the positive impacts of AI on performance. DORA offers resources, guides, and tools like the DORA Quick Check to help organizations improve their software delivery goals.

Strong Analytics

Strong Analytics is a data science consulting and machine learning engineering company that specializes in building bespoke data science, machine learning, and artificial intelligence solutions for various industries. They offer end-to-end services to design, engineer, and deploy custom AI products and solutions, leveraging a team of full-stack data scientists and engineers with cross-industry experience. Strong Analytics is known for its expertise in accelerating innovation, deploying state-of-the-art techniques, and empowering enterprises to unlock the transformative value of AI.

Team9

Team9 is an AI workspace application that allows users to hire AI Staff and collaborate with them as real teammates. It is built on OpenClaw and Moltbook, offering a zero-setup, managed OpenClaw experience. Users can assign tasks, share context, and coordinate work within the platform. Team9 aims to help users build an AI team, run AI-powered collaboration, and increase work efficiency with minimal overhead. The application connects users to the revolutionary AI agent ecosystem, providing instant deployment of OpenClaw without the need for complex setup or manual configuration.

Glide

Glide is an AI-powered no-code app builder that helps businesses create custom tools for a faster, more efficient way to work. It allows users to transform time-consuming processes into modern, interactive apps tailored to their needs within weeks. Glide aims to future-proof businesses by automating tasks and enhancing productivity with a modern mobile experience. The platform has been used by over 100,000 companies to create custom apps.

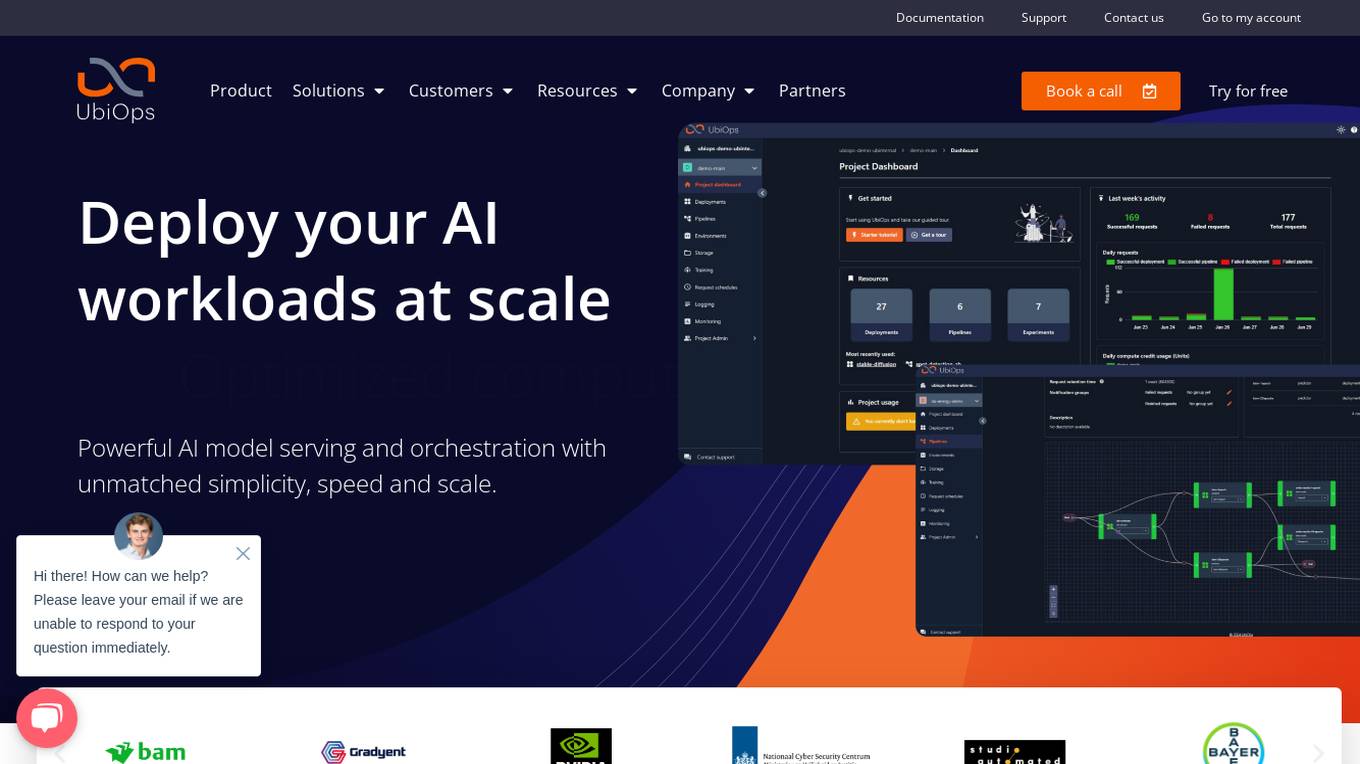

UbiOps

UbiOps is an AI infrastructure platform that helps teams quickly run their AI and ML workloads as reliable and secure microservices. It offers AI model serving and orchestration with an emphasis on simplicity, speed, and scale. UbiOps allows users to deploy models and functions in minutes, manage AI workloads from a single control plane, integrate easily with tools like PyTorch and TensorFlow, and ensure security and compliance by design. The platform supports hybrid and multi-cloud workload orchestration, rapid adaptive scaling, and modular applications built with its own workflow management system.

Denvr DataWorks AI Cloud

Denvr DataWorks AI Cloud is a cloud-based AI platform that provides end-to-end AI solutions for businesses. It offers a range of features including high-performance GPUs, scalable infrastructure, ultra-efficient workflows, and cost efficiency. Denvr DataWorks is an NVIDIA Elite Partner for Compute, and its platform is used by leading AI companies to develop and deploy innovative AI solutions.

Goptimise

Goptimise is a no-code, AI-powered backend builder that helps developers create scalable backend solutions. It provides dedicated infrastructure with built-in security and scalability. Goptimise simplifies software rollouts with one-click deployment that automates the release process. It also provides smart API suggestions, using AI algorithms to recommend API designs tailored to each project. Its visual interface and integrations make it easy to use, and its customizable workspaces allow for dynamic data management and a personalized development experience.

SwiftSora

SwiftSora is an open-source project that enables users to generate videos online from text prompts. The project uses OpenAI's Sora model to streamline video creation and includes a straightforward one-click website deployment feature. With SwiftSora, users can produce video assets, ranging from realistic scenes to imaginative visuals, by providing text instructions. The platform offers a user-friendly interface with customizable settings, making it accessible to both beginners and experienced video creators.

Domino Data Lab

Domino Data Lab is an enterprise AI platform that enables users to build, deploy, and manage AI models across any environment. It fosters collaboration, establishes best practices, and ensures governance while reducing costs. The platform provides access to a broad ecosystem of open source and commercial tools, and infrastructure, allowing users to accelerate and scale AI impact. Domino serves as a central hub for AI operations and knowledge, offering integrated workflows, automation, and hybrid multicloud capabilities. It helps users optimize compute utilization, enforce compliance, and centralize knowledge across teams.

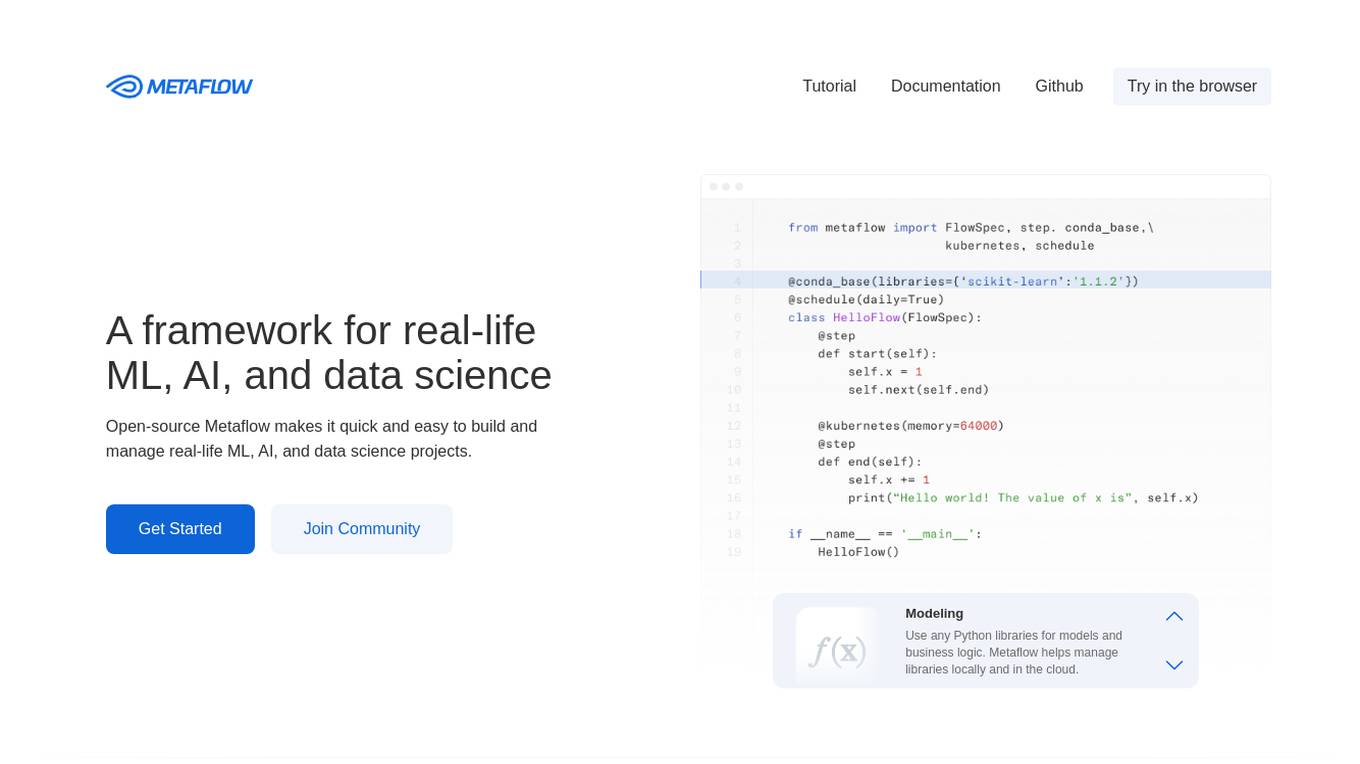

Metaflow

Metaflow is an open-source framework for building and managing real-life ML, AI, and data science projects. It makes it easy to use any Python libraries for models and business logic, deploy workflows to production with a single command, track and store variables inside the flow automatically for easy experiment tracking and debugging, and create robust workflows in plain Python. Metaflow is used by hundreds of companies, including Netflix, 23andMe, and Realtor.com.

Dynamiq

Dynamiq is an operating platform for GenAI applications that enables users to build compliant GenAI applications in their own infrastructure. It offers a comprehensive suite of features including rapid prototyping, testing, deployment, observability, and model fine-tuning. The platform helps streamline the development cycle of AI applications and provides tools for workflow automations, knowledge base management, and collaboration. Dynamiq is designed to optimize productivity, reduce AI adoption costs, and empower organizations to establish AI ahead of schedule.

JFrog ML

JFrog ML is an AI platform designed to streamline AI development from prototype to production. It offers a unified MLOps platform to build, train, deploy, and manage AI workflows at scale. With features like Feature Store, LLMOps, and model monitoring, JFrog ML empowers AI teams to collaborate efficiently and optimize AI & ML models in production.

5 - Open Source AI Tools

geti-sdk

The Intel® Geti™ SDK is a Python package that enables teams to rapidly develop AI models by easing the complexities of model development and enhancing collaboration between teams. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, setting project and model configuration, launching and monitoring training jobs, and media upload and prediction. The SDK also includes tutorial-style Jupyter notebooks demonstrating its usage.

aipan-netdisk-search

Aipan-Netdisk-Search is a free and open-source web project for searching netdisk resources. It relies on third-party APIs that restrict access by IP, so self-deployment is recommended. The project can be easily deployed on Vercel and provides instructions for manual deployment. Users can clone the project, install dependencies, run it locally, and access it in the browser at localhost:3001. The project also includes documentation for deploying on personal servers using Nuxt.js. Additionally, there are options for donations and communication via WeChat.

ChatGPT-API-Faucet

ChatGPT API Faucet is the frontend project for the public ChatGPT API Faucet platform, inspired by the crypto project MultiFaucet. It allows developers in the AI ecosystem to claim $1.00 for free every 24 hours. The program is developed using the Next.js framework and the React library, with key components like _app.tsx for initializing pages, index.tsx for main modifications, and Layout.tsx for defining layout components. Users can deploy the project by installing dependencies, building the project, starting it, and then configuring a reverse proxy or accessing it directly by IP:port; a development server is also available. The tool also supports token balance queries and is related to projects like one-api, ChatGPT-Cost-Calculator, and Poe.Monster. It is licensed under the MIT license.
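The "claim $1.00 every 24 hours" rule can be illustrated with a small cooldown check. This is only a sketch under stated assumptions, not the project's actual code: the `Faucet` class, its in-memory storage, and the `claim` method are hypothetical names introduced here, and a real deployment would persist claim times in a database.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, Optional

# Values taken from the description above: $1.00 per claim, once every 24 hours.
CLAIM_AMOUNT_USD = 1.00
COOLDOWN = timedelta(hours=24)


class Faucet:
    """Hypothetical in-memory faucet that enforces a per-user claim cooldown."""

    def __init__(self) -> None:
        # Maps a user id to the time of that user's last successful claim.
        self._last_claim: Dict[str, datetime] = {}

    def claim(self, user_id: str, now: Optional[datetime] = None) -> float:
        """Return the amount granted: CLAIM_AMOUNT_USD, or 0.0 while cooling down."""
        now = now or datetime.now(timezone.utc)
        last = self._last_claim.get(user_id)
        if last is not None and now - last < COOLDOWN:
            return 0.0  # still inside the 24-hour window, nothing granted
        self._last_claim[user_id] = now
        return CLAIM_AMOUNT_USD
```

Passing `now` explicitly keeps the check deterministic in tests; in normal use it can be omitted and the current UTC time is used.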

Yi-Ai

Yi-Ai is a project based on the development of nineai 2.4.2. It is for learning and reference purposes only, not for commercial use. The project includes updates to popular models like gpt-4o and claude3.5, as well as new features such as model image recognition. It also supports various functionalities like model sorting, file type extensions, and bug fixes. The project provides deployment tutorials for both integrated and compiled packages, with instructions for environment setup, configuration, dependency installation, and project startup. Additionally, it offers a management platform with different access levels and emphasizes the importance of following the steps for proper system operation.

geti-sdk

The Intel® Geti™ SDK is a Python package that enables teams to rapidly develop AI models by easing the complexities of model development and fostering collaboration. It provides tools to interact with an Intel® Geti™ server via the REST API, allowing for project creation, downloading, uploading, deploying for local inference with OpenVINO, configuration management, training job monitoring, media upload, and prediction. The repository also includes tutorial-style Jupyter notebooks demonstrating SDK usage.

20 - OpenAI Gpts

ML Engineer GPT

I'm a Python and PyTorch expert with knowledge of ML infrastructure requirements, ready to help you build and scale your ML projects.

Dependency Chat

Discuss a project with its dependencies in mind. Start by pasting in a GitHub repo URL.

Spring Master

Expert in Spring and Spring Boot projects, code, syntax, issues, optimizations and more.

Code Architect for Nuxt

Nuxt coding assistant with knowledge of the latest Nuxt documentation.

C# Expert

Hello, I'm your C# Backend Expert! Ready to solve all your C# and .NET Core queries.