hordelib

A wrapper around ComfyUI to allow use by the AI Horde.

Stars: 56

horde-engine is a wrapper around ComfyUI designed to run inference pipelines visually designed in the ComfyUI GUI. It enables users to design inference pipelines in ComfyUI and then call them programmatically, maintaining compatibility with the existing horde implementation. The library provides features for processing Horde payloads, initializing the library, downloading and validating models, and generating images based on input data. It also includes custom nodes for preprocessing and tasks such as face restoration and QR code generation. The project depends on various open source projects and bundles some dependencies within the library itself. Users can design ComfyUI pipelines, convert them to the backend format, and run them using the run_image_pipeline() method in hordelib.comfy.Comfy(). The project is actively developed and tested using git, tox, and a specific model directory structure.

README:

Note: This project was formerly known as

hordelib. The project namespace will be changed in the near future to reflect this change.

horde-engine is a wrapper around ComfyUI primarily to enable the AI Horde to run inference pipelines designed visually in the ComfyUI GUI.

The developers of horde-engine can be found in the AI Horde Discord server: https://discord.gg/3DxrhksKzn

Note that horde-engine (previously known as hordelib) has been the default inference backend library of the AI Horde since hordelib v1.0.0.

The goal here is to be able to design inference pipelines in the excellent ComfyUI, and then call those inference pipelines programmatically. Whilst providing features that maintain compatibility with the existing horde implementation.

If being installed from pypi, use a requirements file of the form:

--extra-index-url https://download.pytorch.org/whl/cu121

hordelib

...your other dependencies...

On Linux you will need to install the Nvidia CUDA Toolkit. Linux installers are provided by Nvidia at https://developer.nvidia.com/cuda-downloads

Note if you only have 16GB of RAM and a default /tmp on tmpfs, you will likely need to increase the size of your temporary space to install the CUDA Toolkit or it may fail to extract the archive. One way to do that is just before installing the CUDA Toolkit:

sudo mount -o remount,size=16G /tmpIf you only have 16GB of RAM you will also need swap space. So if you typically run without swap, add some. You won't be able to run this library without it.

Horde payloads can be processed simply with (for example):

# import os

# Wherever your models are

# os.environ["AIWORKER_CACHE_HOME"] = "f:/ai/models" # Defaults to `models/` in the current working directory

import hordelib

hordelib.initialise() # This must be called before any other hordelib functions

from hordelib.horde import HordeLib

from hordelib.shared_model_manager import SharedModelManager

generate = HordeLib()

if SharedModelManager.manager.compvis is None:

raise Exception("Failed to load compvis model manager")

SharedModelManager.manager.compvis.download_model("Deliberate")

SharedModelManager.manager.compvis.validate_model("Deliberate")

data = {

"sampler_name": "k_dpmpp_2m",

"cfg_scale": 7.5,

"denoising_strength": 1.0,

"seed": 123456789,

"height": 512,

"width": 512,

"karras": False,

"tiling": False,

"hires_fix": False,

"clip_skip": 1,

"control_type": None,

"image_is_control": False,

"return_control_map": False,

"prompt": "an ancient llamia monster",

"ddim_steps": 25,

"n_iter": 1,

"model": "Deliberate",

}

pil_image = generate.basic_inference_single_image(data).image

if pil_image is None:

raise Exception("Failed to generate image")

pil_image.save("test.png")Note that hordelib.initialise() will erase all command line arguments from argv. So make sure you parse them before you call that.

See tests/run_*.py for more standalone examples.

If you don't want hordelib to setup and control the logging configuration (we use loguru) initialise with:

import hordelib

hordelib.initialise(setup_logging=False)hordelib depends on a large number of open source projects, and most of these dependencies are automatically downloaded and installed when you install hordelib. Due to the nature and purpose of hordelib some dependencies are bundled directly inside hordelib itself.

A powerful and modular stable diffusion GUI with a graph/nodes interface. Licensed under the terms of the GNU General Public License v3.0.

The entire purpose of hordelib is to access the power of ComfyUI.

Custom nodes for ComfyUI providing Controlnet preprocessing capability. Licened under the terms of the Apache License 2.0.

Custom nodes for ComfyUI providing face restoration.

Nodes for generating QR codes

Requirements:

-

git(install git) -

tox(pip install tox) - Set the environmental variable

AIWORKER_CACHE_HOMEto point to a model directory.

Note the model directory must currently be in the original AI Horde directory structure:

<AIWORKER_CACHE_HOME>\

clip\

codeformer\

compvis\

Deliberate.ckpt

...etc...

controlnet\

embeds\

esrgan\

gfpgan\

safety_checker\

Simply execute: tox (or tox -q for less noisy output)

This will take a while the first time as it installs all the dependencies.

If the tests run successfully images will be produced in the images/ folder.

tox -- -k <filename> for example tox -- -k test_initialisation

tox list

This will list all groups of tests which are involved in either the development, build or CI proccess. Tests which have the word 'fix' in them will automatically apply changes when run, such as to linting or formatting. You can do this by running:

tox -e [test_suite_name_here]

hordelib/pipeline_designs/

Contains ComfyUI pipelines in a format that can be opened by the ComfyUI web app. These saved directly from the web app.

hordelib/pipelines/

Contains the above pipeline JSON files converted to the format required by the backend pipeline processor. These are converted from the web app, see Converting ComfyUI pipelines below.

hordelib/nodes/ These are the custom ComfyUI nodes we use for hordelib specific processing.

In this example we install the dependencies in the OS default environment. When using the git version of hordelib, from the project root:

pip install -r requirements.txt --extra-index-url https://download.pytorch.org/whl/cu118 --upgrade

Ensure ComfyUI is installed, one way is running the tests:

tox -- -k test_comfy_install

From then on to run ComfyUI:

cd ComfyUI

python main.py

Then open a browser at: http://127.0.0.1:8188/

Use the standard ComfyUI web app. Use the "title" attribute to name the nodes, these names become parameter names in the hordelib. For example, a KSampler with the "title" of "sampler2" would become a parameter sampler2.seed, sampler2.cfg, etc. Load the pipeline hordelib/pipeline_designs/pipeline_stable_diffusion.json in the ComfyUI web app for an example.

Save any new pipeline in hordelib/pipeline_designs using the naming convention "pipeline_<name>.json".

Convert the JSON for the model (see Converting ComfyUI pipelines below) and save the resulting JSON in hordelib/pipelines using the same filename as the previous JSON file.

That is all. This can then be called from hordelib using the run_image_pipeline() method in hordelib.comfy.Comfy()

In addition to the design file saved from the UI, we need to save the pipeline file in the backend format. This file is created in the hordelib project root named comfy-prompt.json automatically if you run a pipeline through the ComfyUI version embedded in hordelib. Running ComfyUI with tox -e comfyui automatically patches ComfyUI so this JSON file is saved.

The main config files for the project are: pyproject.toml, tox.ini and requirements.txt

Pypi publishing is automatic all from the GitHub website.

- Create a PR from

maintoreleases - Label the PR with "release:patch" (0.0.1) or "release:minor" (0.1.0)

- Merge the PR with a standard merge commit (not squash)

Here's an example:

Start in a new empty directory. Create requirements.txt:

--extra-index-url https://download.pytorch.org/whl/cu118

hordelib

Create the directory images/ and copy the test_db0.jpg into it.

Copy run_controlnet.py from the hordelib/tests/ directory.

Build a venv:

python -m venv venv

.\venv\Scripts\activate

pip install -r requirements.txt

Run the test we copied:

python run_controlnet.py

The `images/` directory should have our test images.

This is useful when testing new nodes via the horde-reGen-worker etc

python build_helper.py

python -m build --sdist --wheel --outdir dist/ .

python build_helper.py --fixOn the venv where you want to install th new version

python -m pip install /path/to/hordelib/dist/horde_engine-*.whl- Change the value in

consts.pyto the desired ComfyUI version. - Run the test suite via

tox

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for hordelib

Similar Open Source Tools

hordelib

horde-engine is a wrapper around ComfyUI designed to run inference pipelines visually designed in the ComfyUI GUI. It enables users to design inference pipelines in ComfyUI and then call them programmatically, maintaining compatibility with the existing horde implementation. The library provides features for processing Horde payloads, initializing the library, downloading and validating models, and generating images based on input data. It also includes custom nodes for preprocessing and tasks such as face restoration and QR code generation. The project depends on various open source projects and bundles some dependencies within the library itself. Users can design ComfyUI pipelines, convert them to the backend format, and run them using the run_image_pipeline() method in hordelib.comfy.Comfy(). The project is actively developed and tested using git, tox, and a specific model directory structure.

py-vectara-agentic

The `vectara-agentic` Python library is designed for developing powerful AI assistants using Vectara and Agentic-RAG. It supports various agent types, includes pre-built tools for domains like finance and legal, and enables easy creation of custom AI assistants and agents. The library provides tools for summarizing text, rephrasing text, legal tasks like summarizing legal text and critiquing as a judge, financial tasks like analyzing balance sheets and income statements, and database tools for inspecting and querying databases. It also supports observability via LlamaIndex and Arize Phoenix integration.

Hurley-AI

Hurley AI is a next-gen framework for developing intelligent agents through Retrieval-Augmented Generation. It enables easy creation of custom AI assistants and agents, supports various agent types, and includes pre-built tools for domains like finance and legal. Hurley AI integrates with LLM inference services and provides observability with Arize Phoenix. Users can create Hurley RAG tools with a single line of code and customize agents with specific instructions. The tool also offers various helper functions to connect with Hurley RAG and search tools, along with pre-built tools for tasks like summarizing text, rephrasing text, understanding memecoins, and querying databases.

llm-ollama

LLM-ollama is a plugin that provides access to models running on an Ollama server. It allows users to query the Ollama server for a list of models, register them with LLM, and use them for prompting, chatting, and embedding. The plugin supports image attachments, embeddings, JSON schemas, async models, model aliases, and model options. Users can interact with Ollama models through the plugin in a seamless and efficient manner.

desktop

ComfyUI Desktop is a packaged desktop application that allows users to easily use ComfyUI with bundled features like ComfyUI source code, ComfyUI-Manager, and uv. It automatically installs necessary Python dependencies and updates with stable releases. The app comes with Electron, Chromium binaries, and node modules. Users can store ComfyUI files in a specified location and manage model paths. The tool requires Python 3.12+ and Visual Studio with Desktop C++ workload for Windows. It uses nvm to manage node versions and yarn as the package manager. Users can install ComfyUI and dependencies using comfy-cli, download uv, and build/launch the code. Troubleshooting steps include rebuilding modules and installing missing libraries. The tool supports debugging in VSCode and provides utility scripts for cleanup. Crash reports can be sent to help debug issues, but no personal data is included.

trubrics-python

Trubrics is a Python client for event tracking and analyzing LLM interactions. It offers fast and non-blocking queuing system with automatic flushing to Trubrics API. Users can track events and LLM interactions, adjust logging verbosity, and configure flush intervals and batch sizes. The tool simplifies tracking user interactions and analyzing data for LLM applications.

magic-cli

Magic CLI is a command line utility that leverages Large Language Models (LLMs) to enhance command line efficiency. It is inspired by projects like Amazon Q and GitHub Copilot for CLI. The tool allows users to suggest commands, search across command history, and generate commands for specific tasks using local or remote LLM providers. Magic CLI also provides configuration options for LLM selection and response generation. The project is still in early development, so users should expect breaking changes and bugs.

hash

HASH is a self-building, open-source database which grows, structures and checks itself. With it, we're creating a platform for decision-making, which helps you integrate, understand and use data in a variety of different ways.

garak

Garak is a free tool that checks if a Large Language Model (LLM) can be made to fail in a way that is undesirable. It probes for hallucination, data leakage, prompt injection, misinformation, toxicity generation, jailbreaks, and many other weaknesses. Garak's a free tool. We love developing it and are always interested in adding functionality to support applications.

codespin

CodeSpin.AI is a set of open-source code generation tools that leverage large language models (LLMs) to automate coding tasks. With CodeSpin, you can generate code in various programming languages, including Python, JavaScript, Java, and C++, by providing natural language prompts. CodeSpin offers a range of features to enhance code generation, such as custom templates, inline prompting, and the ability to use ChatGPT as an alternative to API keys. Additionally, CodeSpin provides options for regenerating code, executing code in prompt files, and piping data into the LLM for processing. By utilizing CodeSpin, developers can save time and effort in coding tasks, improve code quality, and explore new possibilities in code generation.

HuggingFaceGuidedTourForMac

HuggingFaceGuidedTourForMac is a guided tour on how to install optimized pytorch and optionally Apple's new MLX, JAX, and TensorFlow on Apple Silicon Macs. The repository provides steps to install homebrew, pytorch with MPS support, MLX, JAX, TensorFlow, and Jupyter lab. It also includes instructions on running large language models using HuggingFace transformers. The repository aims to help users set up their Macs for deep learning experiments with optimized performance.

appworld

AppWorld is a high-fidelity execution environment of 9 day-to-day apps, operable via 457 APIs, populated with digital activities of ~100 people living in a simulated world. It provides a benchmark of natural, diverse, and challenging autonomous agent tasks requiring rich and interactive coding. The repository includes implementations of AppWorld apps and APIs, along with tests. It also introduces safety features for code execution and provides guides for building agents and extending the benchmark.

SpeziLLM

The Spezi LLM Swift Package includes modules that help integrate LLM-related functionality in applications. It provides tools for local LLM execution, usage of remote OpenAI-based LLMs, and LLMs running on Fog node resources within the local network. The package contains targets like SpeziLLM, SpeziLLMLocal, SpeziLLMLocalDownload, SpeziLLMOpenAI, and SpeziLLMFog for different LLM functionalities. Users can configure and interact with local LLMs, OpenAI LLMs, and Fog LLMs using the provided APIs and platforms within the Spezi ecosystem.

ai2-scholarqa-lib

Ai2 Scholar QA is a system for answering scientific queries and literature review by gathering evidence from multiple documents across a corpus and synthesizing an organized report with evidence for each claim. It consists of a retrieval component and a three-step generator pipeline. The retrieval component fetches relevant evidence passages using the Semantic Scholar public API and reranks them. The generator pipeline includes quote extraction, planning and clustering, and summary generation. The system is powered by the ScholarQA class, which includes components like PaperFinder and MultiStepQAPipeline. It requires environment variables for Semantic Scholar API and LLMs, and can be run as local docker containers or embedded into another application as a Python package.

tiledesk-dashboard

Tiledesk is an open-source live chat platform with integrated chatbots written in Node.js and Express. It is designed to be a multi-channel platform for web, Android, and iOS, and it can be used to increase sales or provide post-sales customer service. Tiledesk's chatbot technology allows for automation of conversations, and it also provides APIs and webhooks for connecting external applications. Additionally, it offers a marketplace for apps and features such as CRM, ticketing, and data export.

mflux

MFLUX is a line-by-line port of the FLUX implementation in the Huggingface Diffusers library to Apple MLX. It aims to run powerful FLUX models from Black Forest Labs locally on Mac machines. The codebase is minimal and explicit, prioritizing readability over generality and performance. Models are implemented from scratch in MLX, with tokenizers from the Huggingface Transformers library. Dependencies include Numpy and Pillow for image post-processing. Installation can be done using `uv tool` or classic virtual environment setup. Command-line arguments allow for image generation with specified models, prompts, and optional parameters. Quantization options for speed and memory reduction are available. LoRA adapters can be loaded for fine-tuning image generation. Controlnet support provides more control over image generation with reference images. Current limitations include generating images one by one, lack of support for negative prompts, and some LoRA adapters not working.

For similar tasks

iris_android

This repository contains an offline Android chat application based on llama.cpp example. Users can install, download models, and run the app completely offline and privately. To use the app, users need to go to the releases page, download and install the app. Building the app requires downloading Android Studio, cloning the repository, and importing it into Android Studio. The app can be run offline by following specific steps such as enabling developer options, wireless debugging, and downloading the stable LM model. The project is maintained by Nerve Sparks and contributions are welcome through creating feature branches and pull requests.

podman-desktop-extension-ai-lab

Podman AI Lab is an open source extension for Podman Desktop designed to work with Large Language Models (LLMs) on a local environment. It features a recipe catalog with common AI use cases, a curated set of open source models, and a playground for learning, prototyping, and experimentation. Users can quickly and easily get started bringing AI into their applications without depending on external infrastructure, ensuring data privacy and security.

llamafile-docker

This repository, llamafile-docker, automates the process of checking for new releases of Mozilla-Ocho/llamafile, building a Docker image with the latest version, and pushing it to Docker Hub. Users can download a pre-trained model in gguf format and use the Docker image to interact with the model via a server or CLI version. Contributions are welcome under the Apache 2.0 license.

hordelib

horde-engine is a wrapper around ComfyUI designed to run inference pipelines visually designed in the ComfyUI GUI. It enables users to design inference pipelines in ComfyUI and then call them programmatically, maintaining compatibility with the existing horde implementation. The library provides features for processing Horde payloads, initializing the library, downloading and validating models, and generating images based on input data. It also includes custom nodes for preprocessing and tasks such as face restoration and QR code generation. The project depends on various open source projects and bundles some dependencies within the library itself. Users can design ComfyUI pipelines, convert them to the backend format, and run them using the run_image_pipeline() method in hordelib.comfy.Comfy(). The project is actively developed and tested using git, tox, and a specific model directory structure.

HuggingFaceModelDownloader

The HuggingFace Model Downloader is a utility tool for downloading models and datasets from the HuggingFace website. It offers multithreaded downloading for LFS files and ensures the integrity of downloaded models with SHA256 checksum verification. The tool provides features such as nested file downloading, filter downloads for specific LFS model files, support for HuggingFace Access Token, and configuration file support. It can be used as a library or a single binary for easy model downloading and inference in projects.

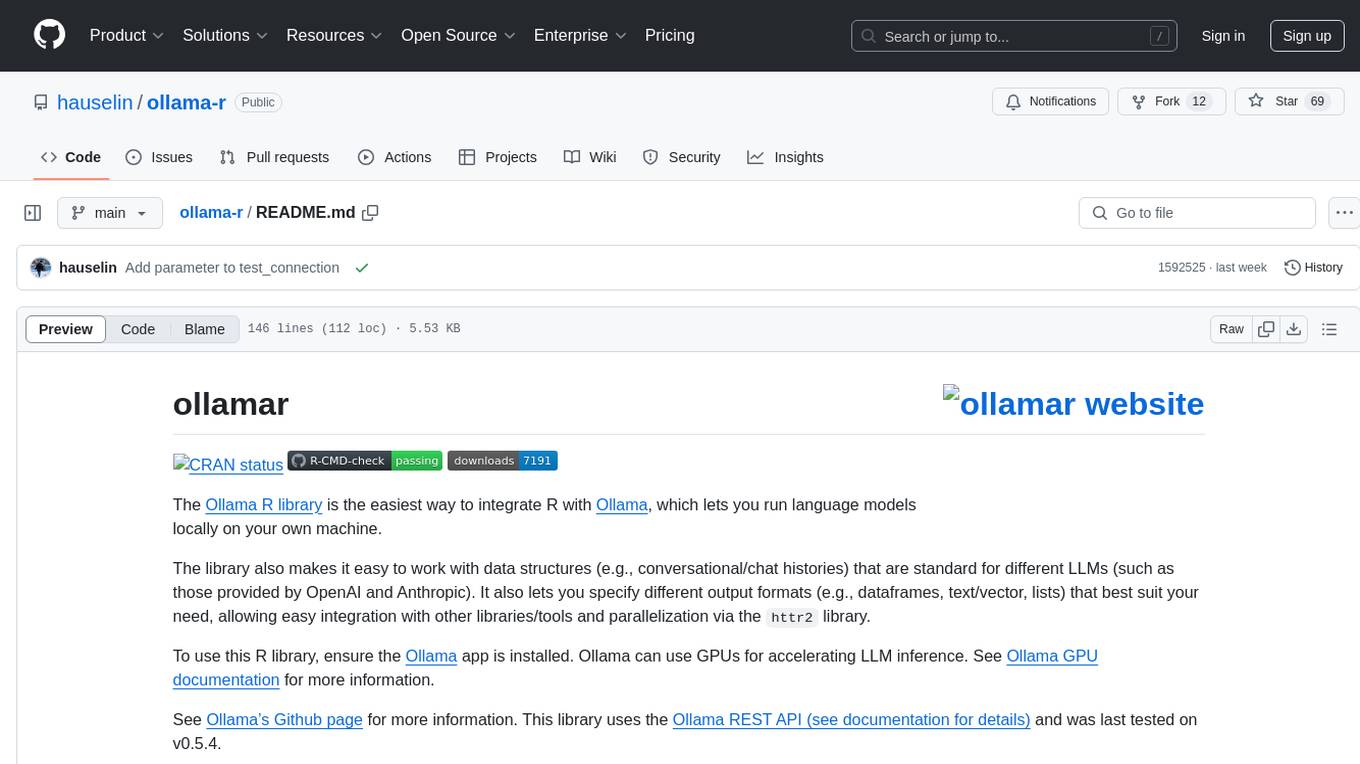

ollama-r

The Ollama R library provides an easy way to integrate R with Ollama for running language models locally on your machine. It supports working with standard data structures for different LLMs, offers various output formats, and enables integration with other libraries/tools. The library uses the Ollama REST API and requires the Ollama app to be installed, with GPU support for accelerating LLM inference. It is inspired by Ollama Python and JavaScript libraries, making it familiar for users of those languages. The installation process involves downloading the Ollama app, installing the 'ollamar' package, and starting the local server. Example usage includes testing connection, downloading models, generating responses, and listing available models.

DeepResearch

Tongyi DeepResearch is an agentic large language model with 30.5 billion total parameters, designed for long-horizon, deep information-seeking tasks. It demonstrates state-of-the-art performance across various search benchmarks. The model features a fully automated synthetic data generation pipeline, large-scale continual pre-training on agentic data, end-to-end reinforcement learning, and compatibility with two inference paradigms. Users can download the model directly from HuggingFace or ModelScope. The repository also provides benchmark evaluation scripts and information on the Deep Research Agent Family.

wenxin-starter

WenXin-Starter is a spring-boot-starter for Baidu's "Wenxin Qianfan WENXINWORKSHOP" large model, which can help you quickly access Baidu's AI capabilities. It fully integrates the official API documentation of Wenxin Qianfan. Supports text-to-image generation, built-in dialogue memory, and supports streaming return of dialogue. Supports QPS control of a single model and supports queuing mechanism. Plugins will be added soon.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.