heurist-agent-framework

A flexible multi-interface AI agent framework for building agents with reasoning, tool use, memory, deep research, blockchain interaction, MCP, and agents-as-a-service.

Stars: 764

Heurist Agent Framework is a flexible multi-interface AI agent framework that allows processing text and voice messages, generating images and videos, interacting across multiple platforms, fetching and storing information in a knowledge base, accessing external APIs and tools, and composing complex workflows using Mesh Agents. It supports various platforms like Telegram, Discord, Twitter, Farcaster, REST API, and MCP. The framework is built on a modular architecture and provides core components, tools, workflows, and tool integration with MCP support.

README:

A flexible multi-interface AI agent framework that can interact through various platforms including Telegram, Discord, Twitter, Farcaster, REST API, and MCP.

Grab a Heurist API Key instantly for free by using the code 'agent' while submitting the form on https://heurist.ai/dev-access

The Heurist Agent Framework is built on a modular architecture that allows an AI agent to:

- Process text and voice messages

- Generate images and videos

- Interact across multiple platforms with consistent behavior

- Fetch and store information in a knowledge base (Postgres and SQLite supported)

- Access external APIs, tools, and a wide range of Mesh Agents to compose complex workflows

- Features

- Heurist Mesh

- Heurist Agent Framework Architecture

- Development Setup

- How to Use GitHub Issues

- License

- Contributing

- Support

- Star History

- 🤖 Core Agent - Modular framework with advanced LLM integration

- 🧩 Component Architecture - Plug-and-play components for flexible agent or agentic application design

- 🔄 Workflow System - RAG, Chain of Thought, and Research workflows

- 🖼️ Media Generation - Image creation and processing capabilities

- 🎤 Voice Processing - Audio transcription and text-to-speech

- 💾 Vector Storage - Knowledge retrieval with PostgreSQL/SQLite support

- 🛠️ Tool Integration - Extensible tool framework with MCP support

- 🌐 Mesh Agent Access - Connect to community-contributed specialized agents via API or MCP

- 🔌 Multi-platform Support:

  - Telegram bot

  - Discord bot

  - Twitter automation

  - Farcaster integration

  - REST API

  - MCP integration

Heurist Mesh is the skills marketplace for AI agents - your gateway to Web3 intelligence. General-purpose AI models lack specialized knowledge about Web3 and often fail to deliver accurate results. Heurist Mesh solves this by providing 30+ specialized AI agents that are experts in crypto analytics, ready to give your applications or AI agents the Web3 expertise they need.

- Curated Web3 Tools: We curate the best Web3 data sources and APIs, constantly monitored and updated for reliable performance.

- Optimized for Agents: Input/output formats optimized for AI agents - 70% fewer tool calls, 30-50% less token usage vs simple API wrappers.

- Composable Architecture: Mix and match specialized agents to build powerful workflows.

- Flexible Access: REST API with API key, x402-enabled pay-per-use with USDC on Base, and MCP access.

- Trusted Agent Standard: Every agent MCP is registered on the ERC-8004 trusted agent standard on Ethereum.

| Category | Description | Example Agents |
|---|---|---|
| Aggregated Crypto Insights | Comprehensive token and market intelligence (recommended) | Token Resolver, Trending Tokens, Twitter Intelligence |
| Token Information | Price data, market metrics, token analytics | CoinGecko, DexScreener, Bitquery, aixbt |
| Social Media | Twitter/X analysis, influencer tracking, sentiment | Elfa, Moni, Twitter Info |
| Blockchain Data | On-chain analytics, address intelligence, forensics | Etherscan, ChainBase, Space and Time |
| Web Search | Web research with AI summarization | Exa, Firecrawl, Caesar |
| Crypto Products | Platform-specific tools for DeFi and NFTs | Pump.fun, LetsBonk, Zora, Aave |
| Wallet Analysis | Portfolio tracking and wallet behavior analysis | Pond AI, GoPlus, Zerion |

- Mesh Portal: mesh.heurist.ai - Browse agents and deploy dedicated MCP servers

- REST API: API Documentation

- X402 API: mesh.heurist.xyz/x402/agents - Pay-per-use with USDC on Base

- Full Agent List: View all agents
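
As a rough sketch of the REST access path listed above, the snippet below builds (but does not send) an authenticated JSON request using only the Python standard library. The endpoint URL, the payload field names (`agent_id`, `input`, `query`), the bearer-token header, and the agent name are all placeholders assumed for illustration; the real request schema is defined in the Mesh API documentation.

```python
import json
import urllib.request

# Hypothetical endpoint: replace with the URL from the Mesh API documentation.
MESH_ENDPOINT = "https://example.invalid/mesh_request"


def build_mesh_request(agent_id: str, query: str, api_key: str) -> urllib.request.Request:
    """Build a POST request for a Mesh agent without sending it."""
    payload = {"agent_id": agent_id, "input": {"query": query}}  # assumed schema
    return urllib.request.Request(
        MESH_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
        },
        method="POST",
    )


# "ExampleTokenAgent" is a made-up agent name used only for this sketch.
request = build_mesh_request("ExampleTokenAgent", "What is the price of ETH?", "YOUR_API_KEY")
```

Once the placeholders are replaced with real values, the request could be sent with `urllib.request.urlopen(request)`.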

All Heurist Mesh agents are accessible via MCP. This means you can access them from any AI client or framework that supports MCP, including Claude, ChatGPT, Cursor, LangChain, Google ADK, and n8n.

Visit the Heurist Mesh Console to view all MCP endpoints and to create private, dedicated MCP servers by mixing and matching the agents you need. The MCP server source code is available on GitHub: heurist-mesh-mcp-server.
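
For readers unfamiliar with what an MCP client sends over the wire, here is a minimal sketch. MCP messages are JSON-RPC 2.0, and a tool invocation is a `tools/call` request carrying the tool `name` and its `arguments`. The tool name and arguments below are hypothetical, and the helper `mcp_tool_call` is an illustration, not part of any Heurist package.

```python
import itertools
import json

_request_ids = itertools.count(1)  # each JSON-RPC request needs its own id


def mcp_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool name and arguments, for illustration only.
encoded = mcp_tool_call("example_token_search", {"query": "ETH"})
```

In practice an MCP client library handles the initialization handshake and the transport, so application code rarely builds these messages by hand.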


The framework follows a modular, component-based architecture:

- BaseAgent (Abstract Base Class)

  - Defines the interface and common functionality
  - Manages component initialization and lifecycle
  - Implements core messaging patterns

- CoreAgent (Concrete Implementation)

  - Implements BaseAgent functionality
  - Orchestrates components and workflows
  - Handles decision-making for workflow selection

Each interface inherits from BaseAgent and implements platform-specific handling:

- Telegram (interfaces/telegram_agent.py)

- Discord (interfaces/discord_agent.py)

- API (interfaces/flask_agent.py)

- Twitter (interfaces/twitter_agent.py)

- Farcaster (interfaces/farcaster_agent.py)
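
The layering described above can be sketched roughly as follows. This is a simplified illustration, not the framework's actual code: the method names (`handle_message`, `generate_reply`) and the `EchoCoreAgent` and `ConsoleInterface` classes are assumptions made up for the example.

```python
from abc import ABC, abstractmethod


class BaseAgent(ABC):
    """Shared interface; the method names here are illustrative assumptions."""

    def __init__(self, name: str) -> None:
        self.name = name

    @abstractmethod
    def generate_reply(self, message: str) -> str:
        """Produce a reply to an incoming message."""

    def handle_message(self, message: str) -> str:
        # Common messaging pattern: normalize input, then delegate to the subclass.
        return self.generate_reply(message.strip())


class EchoCoreAgent(BaseAgent):
    """Stand-in for a concrete agent; a real one would call an LLM and workflows."""

    def generate_reply(self, message: str) -> str:
        return f"[{self.name}] {message}"


class ConsoleInterface(BaseAgent):
    """Hypothetical platform interface that wraps a core agent."""

    def __init__(self, name: str, core: BaseAgent) -> None:
        super().__init__(name)
        self.core = core

    def generate_reply(self, message: str) -> str:
        # Platform-specific handling: prefix the core agent's reply for a console.
        return f"console> {self.core.handle_message(message)}"


interface = ConsoleInterface("console", EchoCoreAgent("core"))
reply = interface.handle_message("  hello  ")
```

Keeping the platform code in a thin subclass means the reasoning logic lives in one place, so every interface behaves consistently.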

Heurist Core provides a set of core components, tools, and workflows for building LLM-powered agents or agentic applications. It can be used as a standalone package or as part of the Heurist Agent Framework.

Read the Heurist Core documentation

The framework uses a modular component system:

- PersonalityProvider: Manages agent personality and system prompts

- KnowledgeProvider: Handles knowledge retrieval from vector database

- ConversationManager: Manages conversation history and context

- ValidationManager: Validates inputs and outputs

- MediaHandler: Processes images, audio, and other media

- LLMProvider: Interfaces with language models

- MessageStore: Stores and retrieves messages with vector search
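
To illustrate the plug-and-play idea, the sketch below composes three components into an agent. Only the component names `PersonalityProvider`, `ConversationManager`, and `LLMProvider` come from the list above; their fields and methods, the `Agent` container, and the `FakeLLM` stand-in are assumptions for illustration and do not reflect the actual Heurist Core API.

```python
from dataclasses import dataclass, field
from typing import Protocol


class LLMProvider(Protocol):
    """Anything with a matching `complete` method can be plugged in."""

    def complete(self, system_prompt: str, history: list[str], user_message: str) -> str: ...


@dataclass
class PersonalityProvider:
    system_prompt: str = "You are a helpful agent."


@dataclass
class ConversationManager:
    max_turns: int = 4
    history: list[str] = field(default_factory=list)

    def add(self, text: str) -> None:
        self.history.append(text)
        # Keep only the most recent turns as context.
        self.history = self.history[-self.max_turns:]


class FakeLLM:
    """Deterministic stand-in so the example runs without a model."""

    def complete(self, system_prompt: str, history: list[str], user_message: str) -> str:
        return f"({len(history)} turns of context) echo: {user_message}"


@dataclass
class Agent:
    personality: PersonalityProvider
    conversation: ConversationManager
    llm: LLMProvider

    def reply(self, user_message: str) -> str:
        answer = self.llm.complete(
            self.personality.system_prompt,
            list(self.conversation.history),
            user_message,
        )
        self.conversation.add(user_message)
        self.conversation.add(answer)
        return answer


agent = Agent(PersonalityProvider(), ConversationManager(max_turns=2), FakeLLM())
first = agent.reply("hi")
second = agent.reply("again")
```

Because `Agent` depends only on the `LLMProvider` protocol, swapping `FakeLLM` for a real model client would not require touching the other components.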

Workflows provide higher-level reasoning patterns:

- AugmentedLLMCall: Standard RAG + tools pattern for context-aware responses

- ChainOfThoughtReasoning: Multi-step reasoning with planning and execution phases

- ResearchWorkflow: Deep web search and analysis with hierarchical exploration

- ToolBox: Base framework for tool definition and registration

- Tools: Tool management and execution layer

- ToolsMCP: Integration with the Model Context Protocol (MCP) for tool execution

- SearchClient: Unified client for web search (Firecrawl/Exa)

- MCPClient: Client for local or remote MCP servers
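
The tool definition, registration, and execution layers listed above follow a common pattern that can be sketched as below. The `ToolRegistry` class, its methods, and the `add_numbers` tool are hypothetical names chosen for this example; they are not the framework's actual `ToolBox` or `Tools` API.

```python
import inspect
from typing import Any, Callable


class ToolRegistry:
    """Hypothetical registry; not the framework's actual ToolBox API."""

    def __init__(self) -> None:
        self._tools: dict[str, Callable[..., Any]] = {}

    def register(self, func: Callable[..., Any]) -> Callable[..., Any]:
        """Decorator that registers a function under its own name."""
        self._tools[func.__name__] = func
        return func

    def describe(self) -> list[dict[str, Any]]:
        """Return name, docstring, and parameter names for each tool."""
        return [
            {
                "name": name,
                "description": inspect.getdoc(func) or "",
                "parameters": list(inspect.signature(func).parameters),
            }
            for name, func in self._tools.items()
        ]

    def execute(self, name: str, **kwargs: Any) -> Any:
        """Look up a tool by name and call it with keyword arguments."""
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        return self._tools[name](**kwargs)


tools = ToolRegistry()


@tools.register
def add_numbers(a: float, b: float) -> float:
    """Add two numbers."""
    return a + b


result = tools.execute("add_numbers", a=2, b=3)
```

The output of `describe()` is the kind of metadata an LLM needs in order to pick a tool, while `execute()` is what runs once the model has chosen a tool name and arguments.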

Read the Agent Usage and Development Guide

To set up your development environment:

- Install dependencies using uv: `uv sync`

- Activate the virtual environment: `source .venv/bin/activate` (on Windows: `.venv\Scripts\activate`)

Note: To run a file, you can use either `python <filename>.py` or `uv run <filename>.py`.

We encourage the community to open GitHub issues whenever you have a new idea or find something that needs attention. When creating an issue, please use our Issue Template and select one of the following categories:

- Integration Request

  - For requests to integrate with a new data source (e.g., CoinGecko, arXiv) or a new AI use case.
  - Most important for the community, as these issues help drive the direction of our framework's evolution.
  - If you have an idea but aren't sure how to implement it, open an issue under this label so others can pick it up or offer suggestions.

- Bug

  - For reporting errors or unexpected behavior in the framework.
  - Provide as much detail as possible (logs, steps to reproduce, environment, etc.).

- Question

  - For inquiries about usage, best practices, or clarifications on existing features.

- Bounty

  - For tasks with a reward (e.g., tokens, NFTs, or other benefits).
  - The bounty label indicates that the Heurist team or another community member is offering a reward to whoever resolves the issue.
  - Bounty Rules:
    - Make sure to read the issue description carefully for scope and acceptance criteria.
    - Once your Pull Request addressing the bounty is merged, we'll follow up on fulfilling the reward.
    - Additional instructions (e.g., contact method) may be included in the issue itself.

- Look for Integration Requests or Bounty issues if you want to contribute new features or earn rewards.

- Feel free to discuss approaches in the comments. If you're ready to tackle it, mention "I'm working on this!" so others know it's in progress.

This process helps us stay organized, encourages community involvement, and keeps development transparent.

BSL 1.1 - See LICENSE file for details.

- Fork the repository

- Create a feature branch

- Commit your changes

- Push to the branch

- Create a Pull Request

For Heurist Mesh agents or to learn about contributing specialized community agents, please refer to the Mesh README

For support, please open an issue in the GitHub repository or contact the maintainers. You can also join the Heurist Ecosystem Builder Telegram group: https://t.me/heuristsupport


Alternative AI tools for heurist-agent-framework

Similar Open Source Tools


obsidian-llmsider

LLMSider is an AI assistant plugin for Obsidian that offers flexible multi-model support, deep workflow integration, privacy-first design, and a professional tool ecosystem. It provides comprehensive AI capabilities for personal knowledge management, from intelligent writing assistance to complex task automation, making AI a capable assistant for thinking and creating while ensuring data privacy.

aider-desk

AiderDesk is a desktop application that enhances coding workflow by leveraging AI capabilities. It offers an intuitive GUI, project management, IDE integration, MCP support, settings management, cost tracking, structured messages, visual file management, model switching, code diff viewer, one-click reverts, and easy sharing. Users can install it by downloading the latest release and running the executable. AiderDesk also supports Python version detection and auto update disabling. It includes features like multiple project management, context file management, model switching, chat mode selection, question answering, cost tracking, MCP server integration, and MCP support for external tools and context. Development setup involves cloning the repository, installing dependencies, running in development mode, and building executables for different platforms. Contributions from the community are welcome following specific guidelines.

beeai-platform

BeeAI is an open-source platform that simplifies the discovery, running, and sharing of AI agents across different frameworks. It addresses challenges such as framework fragmentation, deployment complexity, and discovery issues by providing a standardized platform for individuals and teams to access agents easily. With features like a centralized agent catalog, framework-agnostic interfaces, containerized agents, and consistent user experiences, BeeAI aims to streamline the process of working with AI agents for both developers and teams.

talkcody

TalkCody is a free, open-source AI coding agent designed for developers who value speed, cost, control, and privacy. It offers true freedom to use any AI model without vendor lock-in, maximum speed through unique four-level parallelism, and complete privacy as everything runs locally without leaving the user's machine. With professional-grade features like multimodal input support, MCP server compatibility, and a marketplace for agents and skills, TalkCody aims to enhance development productivity and flexibility.

JamAIBase

JamAI Base is an open-source platform integrating SQLite and LanceDB databases with managed memory and RAG capabilities. It offers built-in LLM, vector embeddings, and reranker orchestration accessible through a spreadsheet-like UI and REST API. Users can transform static tables into dynamic entities, facilitate real-time interactions, manage structured data, and simplify chatbot development. The tool focuses on ease of use, scalability, flexibility, declarative paradigm, and innovative RAG techniques, making complex data operations accessible to users with varying technical expertise.

EpicStaff

EpicStaff is a powerful project management tool designed to streamline team collaboration and task management. It provides a user-friendly interface for creating and assigning tasks, tracking progress, and communicating with team members in real-time. With features such as task prioritization, deadline reminders, and file sharing capabilities, EpicStaff helps teams stay organized and productive. Whether you're working on a small project or managing a large team, EpicStaff is the perfect solution to keep everyone on the same page and ensure project success.

kagent

Kagent is a Kubernetes native framework for building AI agents, designed to be easy to understand and use. It provides a flexible and powerful way to build, deploy, and manage AI agents in Kubernetes. The framework consists of agents, tools, and model configurations defined as Kubernetes custom resources, making them easy to manage and modify. Kagent is extensible, flexible, observable, declarative, testable, and has core components like a controller, UI, engine, and CLI.

TaskingAI

TaskingAI brings Firebase's simplicity to **AI-native app development**. The platform enables the creation of GPTs-like multi-tenant applications using a wide range of LLMs from various providers. It features distinct, modular functions such as Inference, Retrieval, Assistant, and Tool, seamlessly integrated to enhance the development process. TaskingAI’s cohesive design ensures an efficient, intelligent, and user-friendly experience in AI application development.

intellij-aicoder

AI Coding Assistant is a free and open-source IntelliJ plugin that leverages cutting-edge Language Model APIs to enhance developers' coding experience. It seamlessly integrates with various leading LLM APIs, offers an intuitive toolbar UI, and allows granular control over API requests. With features like Code & Patch Chat, Planning with AI Agents, Markdown visualization, and versatile text processing capabilities, this tool aims to streamline coding workflows and boost productivity.

ComfyUI-Copilot

ComfyUI-Copilot is an intelligent assistant built on the Comfy-UI framework that simplifies and enhances the AI algorithm debugging and deployment process through natural language interactions. It offers intuitive node recommendations, workflow building aids, and model querying services to streamline development processes. With features like interactive Q&A bot, natural language node suggestions, smart workflow assistance, and model querying, ComfyUI-Copilot aims to lower the barriers to entry for beginners, boost development efficiency with AI-driven suggestions, and provide real-time assistance for developers.

meeting-minutes

An open-source AI assistant for taking meeting notes that captures live meeting audio, transcribes it in real-time, and generates summaries while ensuring user privacy. Perfect for teams to focus on discussions while automatically capturing and organizing meeting content without external servers or complex infrastructure. Features include modern UI, real-time audio capture, speaker diarization, local processing for privacy, and more. The tool also offers a Rust-based implementation for better performance and native integration, with features like live transcription, speaker diarization, and a rich text editor for notes. Future plans include database connection for saving meeting minutes, improving summarization quality, and adding download options for meeting transcriptions and summaries. The backend supports multiple LLM providers through a unified interface, with configurations for Anthropic, Groq, and Ollama models. System architecture includes core components like audio capture service, transcription engine, LLM orchestrator, data services, and API layer. Prerequisites for setup include Node.js, Python, FFmpeg, and Rust. Development guidelines emphasize project structure, testing, documentation, type hints, and ESLint configuration. Contributions are welcome under the MIT License.

Riona-AI-Agent

Riona-AI-Agent is a versatile AI chatbot designed to assist users in various tasks. It utilizes natural language processing and machine learning algorithms to understand user queries and provide accurate responses. The chatbot can be integrated into websites, applications, and messaging platforms to enhance user experience and streamline communication. With its customizable features and easy deployment, Riona-AI-Agent is suitable for businesses, developers, and individuals looking to automate customer support, provide information, and engage with users in a conversational manner.

Mira

Mira is an agentic AI library designed for automating company research by gathering information from various sources like company websites, LinkedIn profiles, and Google Search. It utilizes a multi-agent architecture to collect and merge data points into a structured profile with confidence scores and clear source attribution. The core library is framework-agnostic and can be integrated into applications, pipelines, or custom workflows. Mira offers features such as real-time progress events, confidence scoring, company criteria matching, and built-in services for data gathering. The tool is suitable for users looking to streamline company research processes and enhance data collection efficiency.

lotti

Lotti is an open-source personal assistant that helps users capture, organize, and understand their work and life through AI-enhanced task management, audio recordings, and intelligent summaries. It ensures complete data ownership, configurable AI providers, privacy-first design, and no vendor lock-in. Users can pick up tasks, record voice notes, and ask for summaries. Core features include AI-powered intelligence, comprehensive tracking, and privacy & control. Lotti supports multiple AI providers, offers installation guides, beta testing options, and development instructions. It is built on Flutter with a focus on privacy, local AI, and user data ownership.

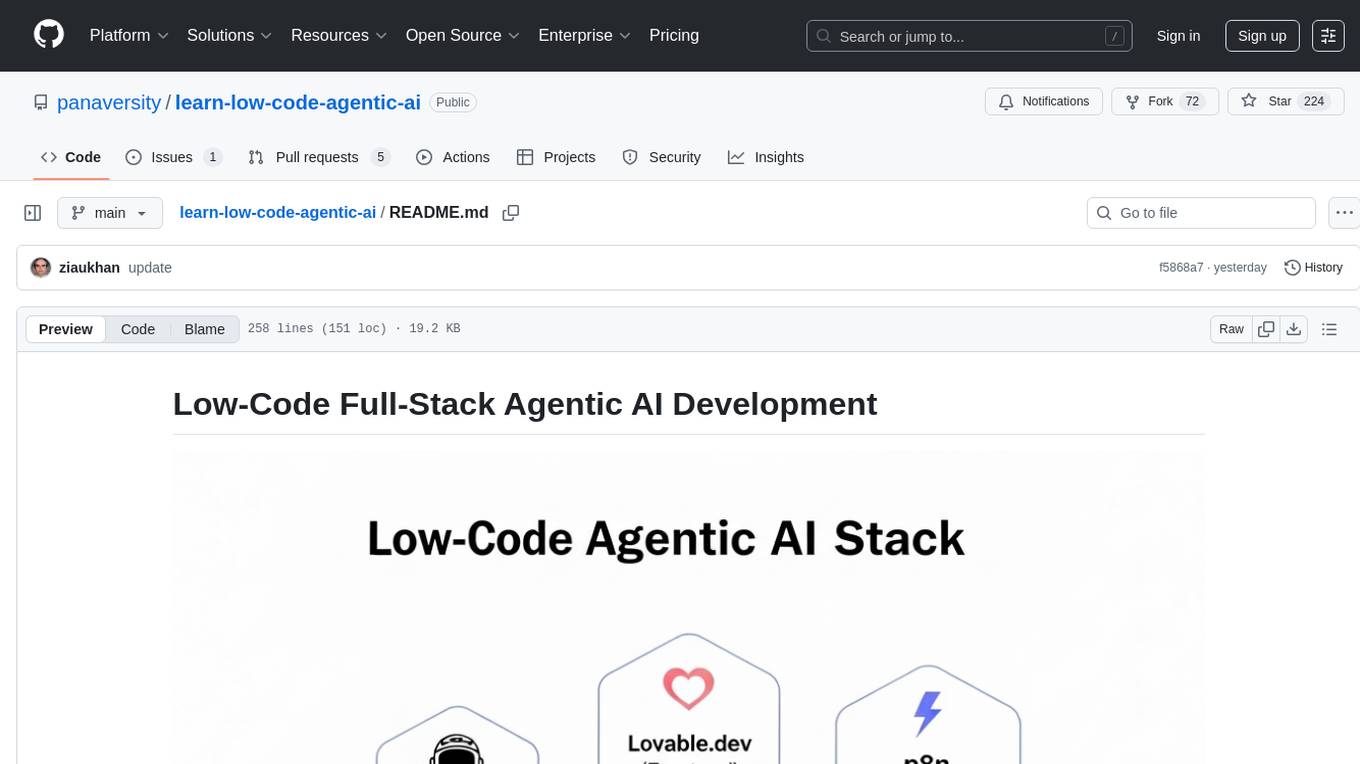

learn-low-code-agentic-ai

This repository is dedicated to learning about Low-Code Full-Stack Agentic AI Development. It provides material for building modern AI-powered applications using a low-code full-stack approach. The main tools covered are UXPilot for UI/UX mockups, Lovable.dev for frontend applications, n8n for AI agents and workflows, Supabase for backend data storage, authentication, and vector search, and Model Context Protocol (MCP) for integration. The focus is on prompt and context engineering as the foundation for working with AI systems, enabling users to design, develop, and deploy AI-driven full-stack applications faster, smarter, and more reliably.

For similar tasks


generative-ai

This repository contains notebooks, code samples, sample apps, and other resources that demonstrate how to use, develop and manage generative AI workflows using Generative AI on Google Cloud, powered by Vertex AI. For more Vertex AI samples, please visit the Vertex AI samples Github repository.

AISuperDomain

Aila Desktop Application is a powerful tool that integrates multiple leading AI models into a single desktop application. It allows users to interact with various AI models simultaneously, providing diverse responses and insights to their inquiries. With its user-friendly interface and customizable features, Aila empowers users to engage with AI seamlessly and efficiently. Whether you're a researcher, student, or professional, Aila can enhance your AI interactions and streamline your workflow.

generative-ai-for-beginners

This course has 18 lessons. Each lesson covers its own topic so start wherever you like! Lessons are labeled either "Learn" lessons explaining a Generative AI concept or "Build" lessons that explain a concept and code examples in both **Python** and **TypeScript** when possible. Each lesson also includes a "Keep Learning" section with additional learning tools.

**What You Need**

* Access to the Azure OpenAI Service **OR** OpenAI API - _Only required to complete coding lessons_
* Basic knowledge of Python or Typescript is helpful - For absolute beginners check out these Python and TypeScript courses.
* A Github account to fork this entire repo to your own GitHub account

We have created a **Course Setup** lesson to help you with setting up your development environment. Don't forget to star (🌟) this repo to find it easier later.

**🧠 Ready to Deploy?**

If you are looking for more advanced code samples, check out our collection of Generative AI Code Samples in both **Python** and **TypeScript**.

**🗣️ Meet Other Learners, Get Support**

Join our official AI Discord server to meet and network with other learners taking this course and get support.

**🚀 Building a Startup?**

Sign up for Microsoft for Startups Founders Hub to receive **free OpenAI credits** and up to **$150k towards Azure credits to access OpenAI models through Azure OpenAI Services**.

**🙏 Want to help?**

Do you have suggestions or found spelling or code errors? Raise an issue or Create a pull request

**📂 Each lesson includes:**

* A short video introduction to the topic
* A written lesson located in the README
* Python and TypeScript code samples supporting Azure OpenAI and OpenAI API
* Links to extra resources to continue your learning

**🗃️ Lessons**

| | Lesson Link | Description | Additional Learning |
| :-: | :-: | :-: | --- |
| 00 | Course Setup | **Learn:** How to Setup Your Development Environment | Learn More |
| 01 | Introduction to Generative AI and LLMs | **Learn:** Understanding what Generative AI is and how Large Language Models (LLMs) work. | Learn More |
| 02 | Exploring and comparing different LLMs | **Learn:** How to select the right model for your use case | Learn More |
| 03 | Using Generative AI Responsibly | **Learn:** How to build Generative AI Applications responsibly | Learn More |
| 04 | Understanding Prompt Engineering Fundamentals | **Learn:** Hands-on Prompt Engineering Best Practices | Learn More |
| 05 | Creating Advanced Prompts | **Learn:** How to apply prompt engineering techniques that improve the outcome of your prompts. | Learn More |
| 06 | Building Text Generation Applications | **Build:** A text generation app using Azure OpenAI | Learn More |
| 07 | Building Chat Applications | **Build:** Techniques for efficiently building and integrating chat applications. | Learn More |
| 08 | Building Search Apps Vector Databases | **Build:** A search application that uses Embeddings to search for data. | Learn More |
| 09 | Building Image Generation Applications | **Build:** A image generation application | Learn More |
| 10 | Building Low Code AI Applications | **Build:** A Generative AI application using Low Code tools | Learn More |
| 11 | Integrating External Applications with Function Calling | **Build:** What is function calling and its use cases for applications | Learn More |
| 12 | Designing UX for AI Applications | **Learn:** How to apply UX design principles when developing Generative AI Applications | Learn More |
| 13 | Securing Your Generative AI Applications | **Learn:** The threats and risks to AI systems and methods to secure these systems. | Learn More |
| 14 | The Generative AI Application Lifecycle | **Learn:** The tools and metrics to manage the LLM Lifecycle and LLMOps | Learn More |
| 15 | Retrieval Augmented Generation (RAG) and Vector Databases | **Build:** An application using a RAG Framework to retrieve embeddings from a Vector Databases | Learn More |
| 16 | Open Source Models and Hugging Face | **Build:** An application using open source models available on Hugging Face | Learn More |
| 17 | AI Agents | **Build:** An application using an AI Agent Framework | Learn More |
| 18 | Fine-Tuning LLMs | **Learn:** The what, why and how of fine-tuning LLMs | Learn More |

cog-comfyui

Cog-comfyui allows users to run ComfyUI workflows on Replicate. ComfyUI is a visual programming tool for creating and sharing generative art workflows. With cog-comfyui, users can access a variety of pre-trained models and custom nodes to create their own unique artworks. The tool is easy to use and does not require any coding experience. Users simply need to upload their API JSON file and any necessary input files, and then click the "Run" button. Cog-comfyui will then generate the output image or video file.

ai-notes

Notes on AI state of the art, with a focus on generative and large language models. These are the "raw materials" for the https://lspace.swyx.io/ newsletter. This repo used to be called https://github.com/sw-yx/prompt-eng, but was renamed because Prompt Engineering is Overhyped. This is now an AI Engineering notes repo.

llms-with-matlab

This repository contains example code to demonstrate how to connect MATLAB to the OpenAI™ Chat Completions API (which powers ChatGPT™) as well as OpenAI Images API (which powers DALL·E™). This allows you to leverage the natural language processing capabilities of large language models directly within your MATLAB environment.

xef

xef.ai is a one-stop library designed to bring the power of modern AI to applications and services. It offers integration with Large Language Models (LLM), image generation, and other AI services. The library is packaged in two layers: core libraries for basic AI services integration and integrations with other libraries. xef.ai aims to simplify the transition to modern AI for developers by providing an idiomatic interface, currently supporting Kotlin. Inspired by LangChain and Hugging Face, xef.ai may transmit source code and user input data to third-party services, so users should review privacy policies and take precautions. Libraries are available in Maven Central under the `com.xebia` group, with `xef-core` as the core library. Developers can add these libraries to their projects and explore examples to understand usage.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our survey of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual streamers (VTubers), targeting NVIDIA GPUs. It supports knowledge-base chat through fastgpt, with a complete LLM stack of [fastgpt] + [one-api] + [Xinference]. For live streaming, it replies to bilibili bullet comments and greets viewers who enter the stream. Speech synthesis is available through Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS. It controls expressions via Vtuber Studio, outputs stable-diffusion-webui drawings to the OBS live room, and screens generated images for NSFW content with public-NSFW-y-distinguish. Search and image search use duckduckgo (requires a VPN) or Baidu image search (no VPN required). Other features include an AI reply chat box [html plug-in], AI singing with Auto-Convert-Music, a playlist [html plug-in], dancing, expression video playback, head-patting and gift-smashing actions, automatic dancing when singing starts, automatic idle swaying during chat and singing, multi-scene switching, background music switching, automatic day/night scene switching, and open-ended singing and drawing where the AI decides on the content itself.