discord-llm-chatbot

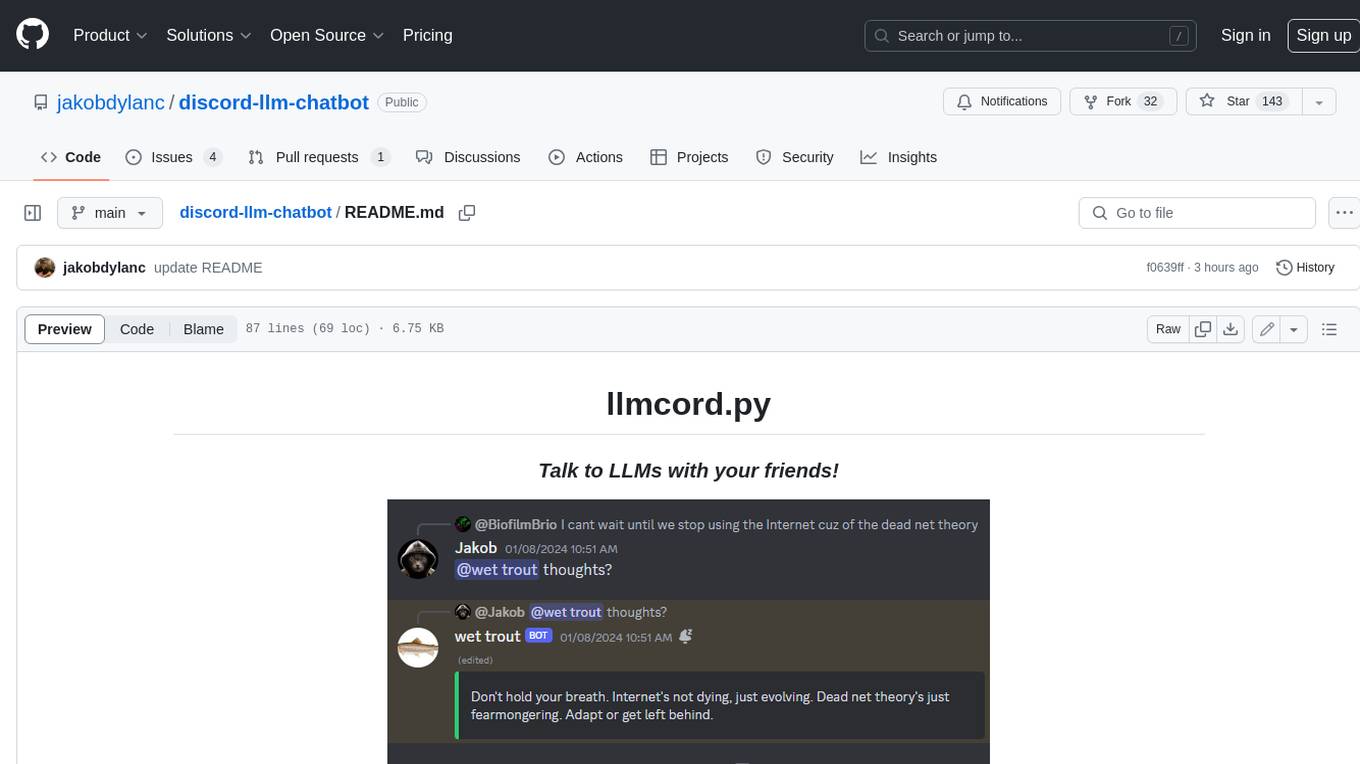

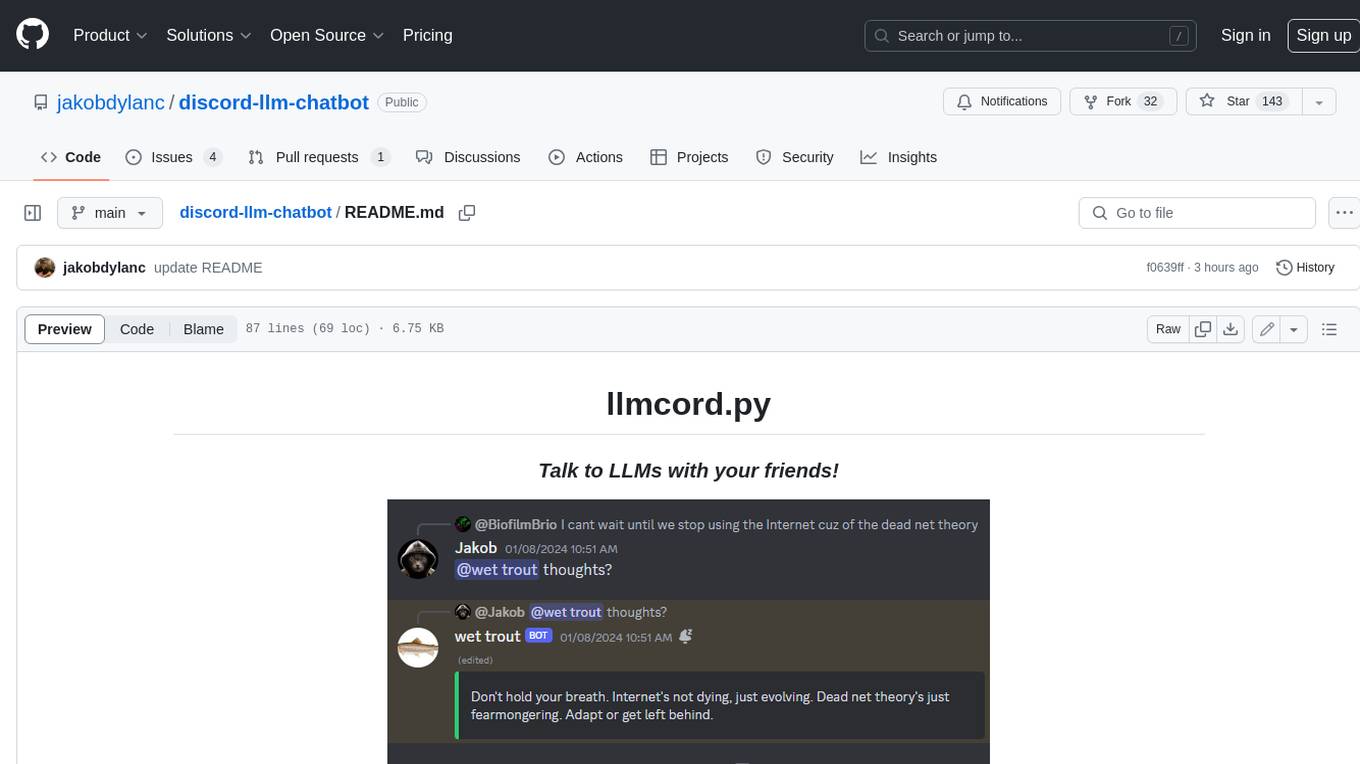

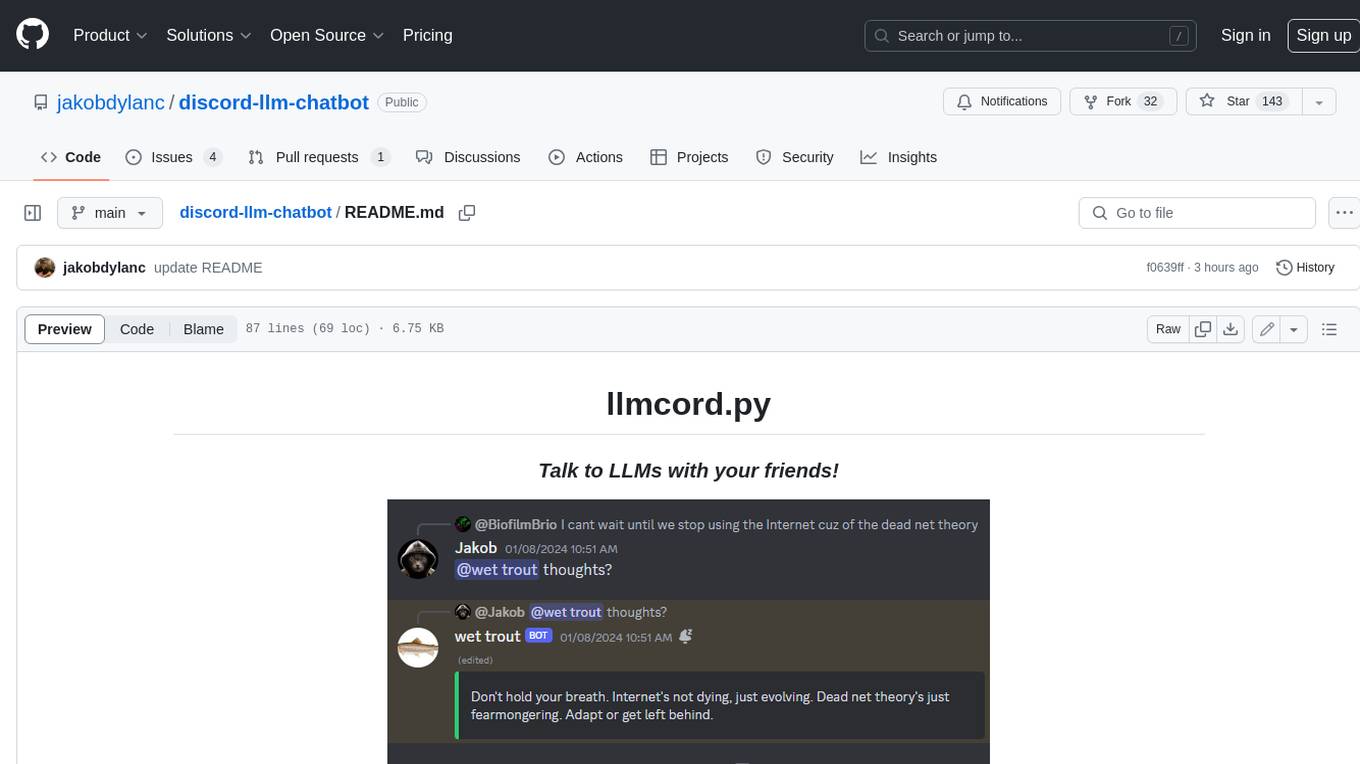

llmcord.py • Talk to LLMs with your friends!

Stars: 277

llmcord.py enables collaborative LLM prompting in your Discord server. It works with practically any LLM, remote or locally hosted. ### Features ### Reply-based chat system Just @ the bot to start a conversation and reply to continue. Build conversations with reply chains! You can do things like: - Build conversations together with your friends - "Rewind" a conversation simply by replying to an older message - @ the bot while replying to any message in your server to ask a question about it Additionally: - Back-to-back messages from the same user are automatically chained together. Just reply to the latest one and the bot will see all of them. - You can seamlessly move any conversation into a thread. Just create a thread from any message and @ the bot inside to continue. ### Choose any LLM Supports remote models from OpenAI API, Mistral API, Anthropic API and many more thanks to LiteLLM. Or run a local model with ollama, oobabooga, Jan, LM Studio or any other OpenAI compatible API server. ### And more: - Supports image attachments when using a vision model - Customizable system prompt - DM for private access (no @ required) - User identity aware (OpenAI API only) - Streamed responses (turns green when complete, automatically splits into separate messages when too long, throttled to prevent Discord ratelimiting) - Displays helpful user warnings when appropriate (like "Only using last 20 messages", "Max 5 images per message", etc.) - Caches message data in a size-managed (no memory leaks) and per-message mutex-protected (no race conditions) global dictionary to maximize efficiency and minimize Discord API calls - Fully asynchronous - 1 Python file, ~200 lines of code

README:

llmcord.py lets you (and your friends) chat with LLMs directly in Discord. It works with practically any LLM, remote or locally hosted.

Just @ the bot to start a conversation and reply to continue. Build conversations with reply chains!

You can do things like:

- Continue your own conversation or someone else's

- "Rewind" a conversation by simply replying to an older message

- @ the bot while replying to any message in your server to ask a question about it

Additionally:

- Back-to-back messages from the same user are automatically chained together. Just reply to the latest one and the bot will see all of them.

- You can seamlessly move any conversation into a thread. Just create a thread from any message and @ the bot inside to continue.

llmcord.py supports remote models from OpenAI API, Mistral API, Anthropic API and many more thanks to LiteLLM.

Or run a local model with ollama, oobabooga, Jan, LM Studio or any other OpenAI compatible API server.

- Supports image attachments when using a vision model (like gpt-4o, claude-3, llava, etc.)

- Supports text file attachments (.txt, .py, .c, etc.)

- Customizable system prompt

- DM for private access (no @ required)

- User identity aware (OpenAI API only)

- Streamed responses (turns green when complete, automatically splits into separate messages when too long)

- Displays helpful warning messages when appropriate (like "

⚠️ Only using last 25 messages" when the customizable message limit is exceeded) - Caches message data in a size-managed (no memory leaks) and mutex-protected (no race conditions) global dictionary to maximize efficiency and minimize Discord API calls

- Fully asynchronous

- 1 Python file, ~200 lines of code

Before you start, install Python and clone this git repo.

-

Install Python requirements:

pip install -U -r requirements.txt -

Create a copy of "config-example.json" named "config.json" and set it up (see below)

-

Create a copy of "example.env" named ".env" and add API keys as needed for your LiteLLM supported providers

-

Run the bot:

python llmcord.py(the invite URL will print to the console)

| Setting | Description |

|---|---|

| model | For LiteLLM supported providers (OpenAI API, Mistral API, Anthropic API, etc.), follow the LiteLLM instructions for its model name formatting. For local models (ollama, oobabooga, Jan, LM Studio, etc.), set to local/openai/<MODEL_NAME> where <MODEL_NAME> is the exact name of the model you're using. For some setups the model name doesn't matter, in which case set to local/openai/model or local/openai/vision-model if it's a vision model. |

| local_server_url | The URL of your local API server. Only applicable when "model" starts with local/.(Default: http://localhost:11434/v1) |

| extra_api_parameters | Extra API parameters for your LLM. Add more entries as needed. (Default: max_tokens=4096, temperature=1.0) |

| system_prompt | Write anything you want to customize the bot's behavior! |

| Setting | Description |

|---|---|

| bot_token | Create a new Discord bot at discord.com/developers/applications and generate a token under the "Bot" tab. Also enable "MESSAGE CONTENT INTENT". |

| client_id | Found under the "OAuth2" tab of the Discord bot you just made. |

| status_message | Set a custom message that displays on the bot's Discord profile. Max 128 characters. |

| allowed_channel_ids | A list of Discord channel IDs where the bot can be used. Leave empty to allow all channels. |

| allowed_role_ids | A list of Discord role IDs that can use the bot. Leave empty to allow everyone. Specifying at least one role also disables DMs. |

| max_text | The maximum amount of text allowed in a single message, including text from file attachments. (Default: 100,000) |

| max_images | The maximum number of image attachments allowed in a single message. Only applicable when using a vision model. (Default: 5) |

| max_messages | The maximum number of messages allowed in a reply chain. (Default: 25) |

| use_plain_responses | When set to true the bot's messages appear more like a regular user message. This disables embeds, streamed responses and warning messages.(Default: false) |

-

If you're having issues, try my suggestions here

-

Only models from OpenAI are "user identity aware" because only OpenAI API supports the message "name" property. Hopefully others support this in the future.

-

PRs are welcome :)

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for discord-llm-chatbot

Similar Open Source Tools

discord-llm-chatbot

llmcord.py enables collaborative LLM prompting in your Discord server. It works with practically any LLM, remote or locally hosted. ### Features ### Reply-based chat system Just @ the bot to start a conversation and reply to continue. Build conversations with reply chains! You can do things like: - Build conversations together with your friends - "Rewind" a conversation simply by replying to an older message - @ the bot while replying to any message in your server to ask a question about it Additionally: - Back-to-back messages from the same user are automatically chained together. Just reply to the latest one and the bot will see all of them. - You can seamlessly move any conversation into a thread. Just create a thread from any message and @ the bot inside to continue. ### Choose any LLM Supports remote models from OpenAI API, Mistral API, Anthropic API and many more thanks to LiteLLM. Or run a local model with ollama, oobabooga, Jan, LM Studio or any other OpenAI compatible API server. ### And more: - Supports image attachments when using a vision model - Customizable system prompt - DM for private access (no @ required) - User identity aware (OpenAI API only) - Streamed responses (turns green when complete, automatically splits into separate messages when too long, throttled to prevent Discord ratelimiting) - Displays helpful user warnings when appropriate (like "Only using last 20 messages", "Max 5 images per message", etc.) - Caches message data in a size-managed (no memory leaks) and per-message mutex-protected (no race conditions) global dictionary to maximize efficiency and minimize Discord API calls - Fully asynchronous - 1 Python file, ~200 lines of code

UFO

UFO is a UI-focused dual-agent framework to fulfill user requests on Windows OS by seamlessly navigating and operating within individual or spanning multiple applications.

obsidian-arcana

Arcana is a plugin for Obsidian that offers a collection of AI-powered tools inspired by famous historical figures to enhance creativity and productivity. It includes tools for conversation, text-to-speech transcription, speech-to-text replies, metadata markup, text generation, file moving, flashcard generation, auto tagging, and note naming. Users can interact with these tools using the command palette and sidebar views, with an OpenAI API key required for usage. The plugin aims to assist users in various note-taking and knowledge management tasks within the Obsidian vault environment.

DevDocs

DevDocs is a platform designed to simplify the process of digesting technical documentation for software engineers and developers. It automates the extraction and conversion of web content into markdown format, making it easier for users to access and understand the information. By crawling through child pages of a given URL, DevDocs provides a streamlined approach to gathering relevant data and integrating it into various tools for software development. The tool aims to save time and effort by eliminating the need for manual research and content extraction, ultimately enhancing productivity and efficiency in the development process.

deep-research

Deep Research is a lightning-fast tool that uses powerful AI models to generate comprehensive research reports in just a few minutes. It leverages advanced 'Thinking' and 'Task' models, combined with an internet connection, to provide fast and insightful analysis on various topics. The tool ensures privacy by processing and storing all data locally. It supports multi-platform deployment, offers support for various large language models, web search functionality, knowledge graph generation, research history preservation, local and server API support, PWA technology, multi-key payload support, multi-language support, and is built with modern technologies like Next.js and Shadcn UI. Deep Research is open-source under the MIT License.

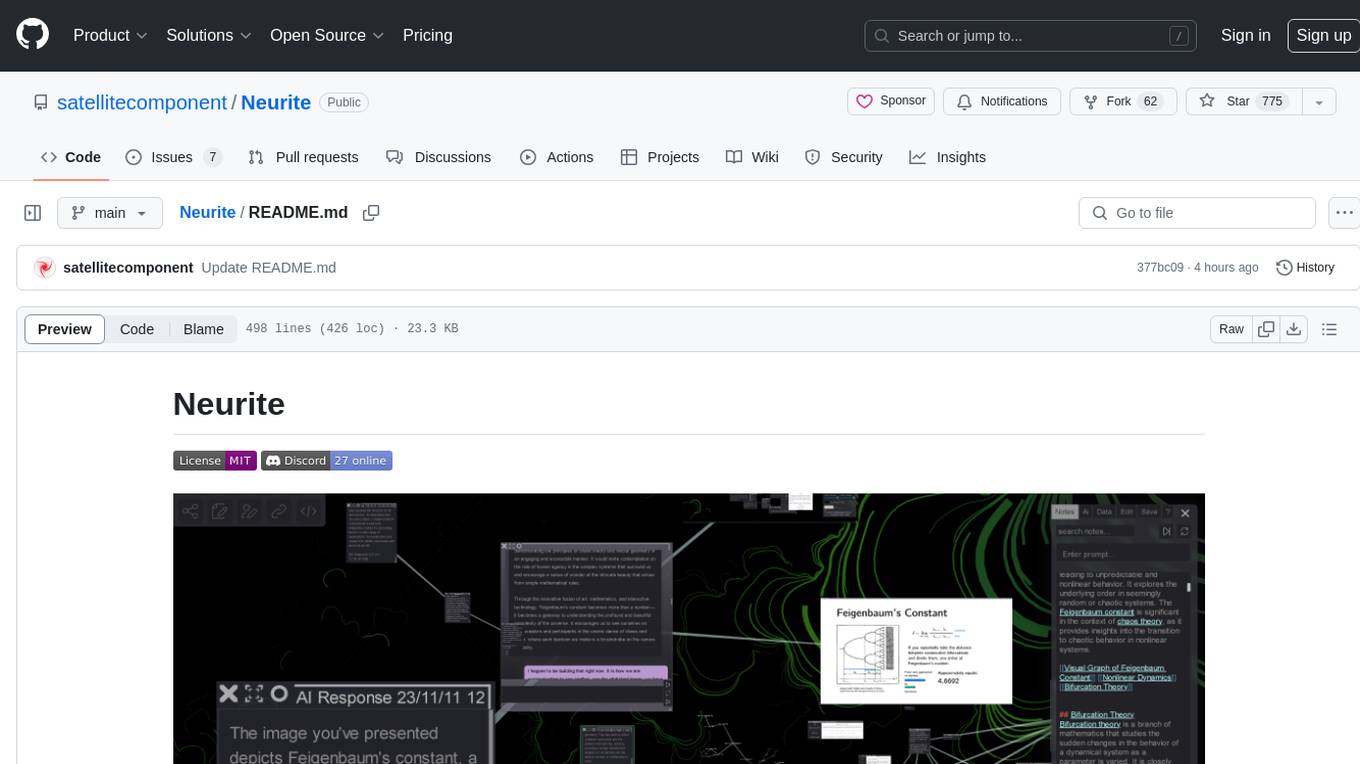

Neurite

Neurite is an innovative project that combines chaos theory and graph theory to create a digital interface that explores hidden patterns and connections for creative thinking. It offers a unique workspace blending fractals with mind mapping techniques, allowing users to navigate the Mandelbrot set in real-time. Nodes in Neurite represent various content types like text, images, videos, code, and AI agents, enabling users to create personalized microcosms of thoughts and inspirations. The tool supports synchronized knowledge management through bi-directional synchronization between mind-mapping and text-based hyperlinking. Neurite also features FractalGPT for modular conversation with AI, local AI capabilities for multi-agent chat networks, and a Neural API for executing code and sequencing animations. The project is actively developed with plans for deeper fractal zoom, advanced control over node placement, and experimental features.

sd-webui-agent-scheduler

AgentScheduler is an Automatic/Vladmandic Stable Diffusion Web UI extension designed to enhance image generation workflows. It allows users to enqueue prompts, settings, and controlnets, manage queued tasks, prioritize, pause, resume, and delete tasks, view generation results, and more. The extension offers hidden features like queuing checkpoints, editing queued tasks, and custom checkpoint selection. Users can access the functionality through HTTP APIs and API callbacks. Troubleshooting steps are provided for common errors. The extension is compatible with latest versions of A1111 and Vladmandic. It is licensed under Apache License 2.0.

midscene

Midscene.js is an AI-powered automation SDK that allows users to control web pages, perform assertions, and extract data in JSON format using natural language. It offers features such as natural language interaction, understanding UI and providing responses in JSON, intuitive assertion based on AI understanding, compatibility with public multimodal LLMs like GPT-4o, visualization tool for easy debugging, and a brand new experience in automation development.

arbigent

Arbigent (Arbiter-Agent) is an AI agent testing framework designed to make AI agent testing practical for modern applications. It addresses challenges faced by traditional UI testing frameworks and AI agents by breaking down complex tasks into smaller, dependent scenarios. The framework is customizable for various AI providers, operating systems, and form factors, empowering users with extensive customization capabilities. Arbigent offers an intuitive UI for scenario creation and a powerful code interface for seamless test execution. It supports multiple form factors, optimizes UI for AI interaction, and is cost-effective by utilizing models like GPT-4o mini. With a flexible code interface and open-source nature, Arbigent aims to revolutionize AI agent testing in modern applications.

llama-cpp-agent

The llama-cpp-agent framework is a tool designed for easy interaction with Large Language Models (LLMs). Allowing users to chat with LLM models, execute structured function calls and get structured output (objects). It provides a simple yet robust interface and supports llama-cpp-python and OpenAI endpoints with GBNF grammar support (like the llama-cpp-python server) and the llama.cpp backend server. It works by generating a formal GGML-BNF grammar of the user defined structures and functions, which is then used by llama.cpp to generate text valid to that grammar. In contrast to most GBNF grammar generators it also supports nested objects, dictionaries, enums and lists of them.

saga-reader

Saga Reader is an AI-driven think tank-style reader that automatically retrieves information from the internet based on user-specified topics and preferences. It uses cloud or local large models to summarize and provide guidance, and it includes an AI-driven interactive companion reading function, allowing you to discuss and exchange ideas with AI about the content you've read. Saga Reader is completely free and open-source, meaning all data is securely stored on your own computer and is not controlled by third-party service providers. Additionally, you can manage your subscription keywords based on your interests and preferences without being disturbed by advertisements and commercialized content.

dev3000

dev3000 captures your web app's complete development timeline including server logs, browser events, console messages, network requests, and automatic screenshots in a unified, timestamped feed for AI debugging. It creates a comprehensive log of your development session that AI assistants can easily understand, monitoring your app in a real browser and capturing server logs, console output, browser console messages and errors, network requests and responses, and automatic screenshots on navigation, errors, and key events. Logs are saved with timestamps and rotated to keep the 10 most recent per project, with the current session symlinked for easy access. The tool integrates with AI assistants for instant debugging and provides advanced querying options through the MCP server.

Srt-AI-Voice-Assistant

Srt-AI-Voice-Assistant is a convenient tool that generates audio from uploaded .srt subtitle files by calling APIs such as Bert-VITS2 (HiyoriUI), GPT-SoVITS, and Microsoft TTS (online). The code is currently not perfect, and feedback on bugs or suggestions can be provided at https://github.com/YYuX-1145/Srt-AI-Voice-Assistant/issues. Recent updates include adding custom API functionality with a focus on security, support for Microsoft online TTS (requires key configuration), error handling improvements, automatic project path detection, compatibility with API-v1 for limited functionality, and significant feature updates supporting card synthesis.

DevoxxGenieIDEAPlugin

Devoxx Genie is a Java-based IntelliJ IDEA plugin that integrates with local and cloud-based LLM providers to aid in reviewing, testing, and explaining project code. It supports features like code highlighting, chat conversations, and adding files/code snippets to context. Users can modify REST endpoints and LLM parameters in settings, including support for cloud-based LLMs. The plugin requires IntelliJ version 2023.3.4 and JDK 17. Building and publishing the plugin is done using Gradle tasks. Users can select an LLM provider, choose code, and use commands like review, explain, or generate unit tests for code analysis.

Director

Director is a framework to build video agents that can reason through complex video tasks like search, editing, compilation, generation, etc. It enables users to summarize videos, search for specific moments, create clips instantly, integrate GenAI projects and APIs, add overlays, generate thumbnails, and more. Built on VideoDB's 'video-as-data' infrastructure, Director is perfect for developers, creators, and teams looking to simplify media workflows and unlock new possibilities.

panda-etl

PandaETL is an open-source, no-code ETL tool designed to extract and parse data from various document types including PDFs, emails, websites, audio files, and more. With an intuitive interface and powerful backend, PandaETL simplifies the process of data extraction and transformation, making it accessible to users without programming skills.

For similar tasks

dbrx

DBRX is a large language model trained by Databricks and made available under an open license. It is a Mixture-of-Experts (MoE) model with 132B total parameters and 36B live parameters, using 16 experts, of which 4 are active during training or inference. DBRX was pre-trained for 12T tokens of text and has a context length of 32K tokens. The model is available in two versions: a base model and an Instruct model, which is finetuned for instruction following. DBRX can be used for a variety of tasks, including text generation, question answering, summarization, and translation.

Chinese-LLaMA-Alpaca

This project open sources the **Chinese LLaMA model and the Alpaca large model fine-tuned with instructions**, to further promote the open research of large models in the Chinese NLP community. These models **extend the Chinese vocabulary based on the original LLaMA** and use Chinese data for secondary pre-training, further enhancing the basic Chinese semantic understanding ability. At the same time, the Chinese Alpaca model further uses Chinese instruction data for fine-tuning, significantly improving the model's understanding and execution of instructions.

discord-llm-chatbot

llmcord.py enables collaborative LLM prompting in your Discord server. It works with practically any LLM, remote or locally hosted. ### Features ### Reply-based chat system Just @ the bot to start a conversation and reply to continue. Build conversations with reply chains! You can do things like: - Build conversations together with your friends - "Rewind" a conversation simply by replying to an older message - @ the bot while replying to any message in your server to ask a question about it Additionally: - Back-to-back messages from the same user are automatically chained together. Just reply to the latest one and the bot will see all of them. - You can seamlessly move any conversation into a thread. Just create a thread from any message and @ the bot inside to continue. ### Choose any LLM Supports remote models from OpenAI API, Mistral API, Anthropic API and many more thanks to LiteLLM. Or run a local model with ollama, oobabooga, Jan, LM Studio or any other OpenAI compatible API server. ### And more: - Supports image attachments when using a vision model - Customizable system prompt - DM for private access (no @ required) - User identity aware (OpenAI API only) - Streamed responses (turns green when complete, automatically splits into separate messages when too long, throttled to prevent Discord ratelimiting) - Displays helpful user warnings when appropriate (like "Only using last 20 messages", "Max 5 images per message", etc.) - Caches message data in a size-managed (no memory leaks) and per-message mutex-protected (no race conditions) global dictionary to maximize efficiency and minimize Discord API calls - Fully asynchronous - 1 Python file, ~200 lines of code

enchanted

Enchanted is an open-source, Ollama-compatible app for macOS and iOS that allows users to work with privately hosted models such as Llama 2, Mistral, Vicuna, Starling, and more. It provides a user-friendly interface for interacting with these models, making it easy to generate text, translate languages, write different kinds of creative content, and more. The app is designed to be secure and private, ensuring that user data is protected. It also offers a range of features such as dark/light mode, conversation history, markdown support, voice prompts, and image attachments.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

mistral.rs

Mistral.rs is a fast LLM inference platform written in Rust. We support inference on a variety of devices, quantization, and easy-to-use application with an Open-AI API compatible HTTP server and Python bindings.

generative-ai-python

The Google AI Python SDK is the easiest way for Python developers to build with the Gemini API. The Gemini API gives you access to Gemini models created by Google DeepMind. Gemini models are built from the ground up to be multimodal, so you can reason seamlessly across text, images, and code.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.