AlwaysReddy

AlwaysReddy is an LLM voice assistant that is always just a hotkey away.

Stars: 627

AlwaysReddy is a simple LLM assistant with no UI that you interact with entirely using hotkeys. It can easily read from or write to your clipboard, and voice chat with you via TTS and STT. Here are some of the things you can use AlwaysReddy for:

- Explain a new concept to AlwaysReddy and have it save the concept (in roughly your words) into a note.

- Ask AlwaysReddy "What is X called?" when you know how to roughly describe something but can't remember what it is called.

- Have AlwaysReddy proofread the text in your clipboard before you send it.

- Ask AlwaysReddy "From the comments in my clipboard, what do the r/LocalLLaMA users think of X?"

- Quickly list what you have done today and get AlwaysReddy to write a journal entry to your clipboard before you shut down the computer for the day.

README:

Hey, I'm Josh, the creator of AlwaysReddy. I am still a bit of a noob when it comes to programming, and I'm really trying to develop my skills over the next year. I'm treating this project as a way to do that, so I would really appreciate it if you could point out issues and bad practices in my code (of which I'm sure there will be plenty). I would also appreciate it if you made your own improvements to the project so I can learn from your changes. Twitter: https://twitter.com/MindofMachine1

Contact me: [email protected]

I'm looking for work; if you know of anyone needing a skillset like mine, please let me know! :)

The code base is a mess right now. I am in the middle of transforming AlwaysReddy from just a voice chatbot into something that lets users create their own chatbots and extensions. This transition will be a little messy until I find solutions that I like; then I will start cleaning things up.

- Meet AlwaysReddy

- Philosophy of the project

- Setup

- Known Issues

- How to add custom actions

- Troubleshooting

- How to

- Supported LLM servers

- Supported TTS systems

AlwaysReddy is a simple LLM assistant with the perfect amount of UI... None! You interact with it entirely using hotkeys, and it can easily read from or write to your clipboard. It's like having voice ChatGPT running on your computer at all times: you just press a hotkey and it will listen to any questions you have, with no need to swap windows or tabs. If you want to give it the context of some extra text, just copy the text and double tap the hotkey!

Future of AlwaysReddy

I would like to make AlwaysReddy an extensible interface where you can easily voice chat with a range of AIs. These AIs could be given the ability to access custom tools or applications so that they can do tasks for you on the fly, all with as little friction as possible.

Pull Requests Welcome!

Join the discord: https://discord.gg/su44drSBzb

Here is a demo video of me using it with Llama 3: https://www.reddit.com/r/LocalLLaMA/comments/1ca510h/voice_chatting_with_llama_3_8b/

- Friction is the enemy

- I am building this for myself first but sharing it in case other people get value from it too

- Practicality first, I want this system to help me be as effective as possible

- I will change directions freely: when I think of a more useful direction for the code base, I will start working in that direction, even if that makes things messy in the short term

- Help is always welcome! If you have an idea of how you could improve AlwaysReddy, jump in and get your hands dirty!

You interact with AlwaysReddy entirely with hotkeys. It has the ability to:

- Voice chat with you via TTS and STT

- Read from your clipboard (with `Ctrl + Alt + R + R`, i.e. rapidly double tapping R).

- Write text to your clipboard on request.

- Can be run 100% locally!!!

- Supports Windows, Mac (experimental), and Linux (super duper experimental, see Known Issues)

- You can create your own hotkeys to fire custom code using AlwaysReddy's inbuilt systems like TTS and STT

If you are willing to help, please consider looking at the Known Issues; I'm pretty stuck here!

I often use AlwaysReddy for the following things:

- When I have just learned a new concept I will often explain the concept aloud to AlwaysReddy and have it save the concept (in roughly my words) into a note.

- "What is X called?" Often I know how to roughly describe something but can't remember what it is called; AlwaysReddy is handy for quickly giving me the answer without having to open the browser.

- "Can you proofread the text in my clipboard before I send it?"

- "What do the r/LocalLLaMA users think of X, based on the comments in my clipboard?"

- Quick journal entries: I quickly list what I have done today and get it to write a journal entry to my clipboard before I shut down the computer for the day.

Supported LLM servers:

- OpenAI

- Anthropic

- TogetherAI

- LM Studio (local) - Setup Guide

- Ollama (local) - Setup Guide

- Perplexity

- TabbyAPI (local)

Supported TTS systems:

- Piper TTS (local and fast). See how to change voice model

- OpenAI TTS API

- Default mac TTS

GPU Setup Instructions

To use GPU acceleration with the faster-whisper API, follow these steps:

- Check if CUDA is already installed:

  - Open a terminal or command prompt.

  - Run the following command:

    nvcc --version

  - If CUDA is installed, you should see output similar to:

    nvcc: NVIDIA (R) Cuda compiler driver
    Copyright (c) 2005-2021 NVIDIA Corporation
    Built on Sun_Feb_14_21:12:58_PST_2021
    Cuda compilation tools, release 11.2, V11.2.152
    Build cuda_11.2.r11.2/compiler.29618528_0

  - Note down the CUDA version (e.g., 11.2 in the example above).

- If CUDA is not installed or you want to install a different version:

  - Visit the official NVIDIA CUDA Toolkit website: CUDA Toolkit

  - Download and install the appropriate CUDA Toolkit version for your system.

- Install PyTorch with CUDA support based on your system and CUDA version. Follow the instructions on the official PyTorch website: PyTorch Installation

  Example command for CUDA 11.6:

    pip install torch==1.12.0+cu116 -f https://download.pytorch.org/whl/torch_stable.html

- In the `config.py` file, set `USE_GPU = True` to enable GPU acceleration.
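If you want to script the version check above, the sketch below pulls the release number out of `nvcc --version` output and turns it into the `cuXY` suffix that appears in the PyTorch wheel name (as in `cu116`). The function names are hypothetical and not part of AlwaysReddy, and PyTorch only publishes wheels for some CUDA releases, so treat the tag as a naming hint and confirm it on the PyTorch installation page.

```python
import re
import shutil
import subprocess
from typing import Optional


def local_nvcc_output() -> Optional[str]:
    """Run `nvcc --version` if nvcc is on PATH; return its stdout, or None if not found."""
    if shutil.which("nvcc") is None:
        return None
    result = subprocess.run(["nvcc", "--version"], capture_output=True, text=True)
    return result.stdout


def parse_cuda_release(nvcc_output: str) -> Optional[str]:
    """Extract the CUDA release (e.g. "11.2") from `nvcc --version` output."""
    match = re.search(r"release (\d+)\.(\d+)", nvcc_output)
    if match is None:
        return None  # output not recognised (or CUDA not installed)
    return f"{match.group(1)}.{match.group(2)}"


def pytorch_wheel_tag(cuda_release: str) -> str:
    """Turn a CUDA release such as "11.6" into the wheel suffix used in pip commands ("cu116")."""
    major, minor = cuda_release.split(".")[:2]
    return f"cu{major}{minor}"


# The sample output from the step above
sample_output = """nvcc: NVIDIA (R) Cuda compiler driver
Copyright (c) 2005-2021 NVIDIA Corporation
Built on Sun_Feb_14_21:12:58_PST_2021
Cuda compilation tools, release 11.2, V11.2.152
Build cuda_11.2.r11.2/compiler.29618528_0"""

release = parse_cuda_release(sample_output)
if release is not None:
    print(release, pytorch_wheel_tag(release))  # 11.2 cu112
```

On your own machine you would pass `local_nvcc_output()` to `parse_cuda_release` instead of the sample text.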

Note for macOS: you are expected to have Homebrew (brew) installed on your system; look here for setup.

- Clone this repo with `git clone https://github.com/ILikeAI/AlwaysReddy`

- Navigate into the directory: `cd AlwaysReddy`

- Run the setup script with `python setup.py` on Windows or `python3 setup.py` on Mac and Linux.

- Open the `config.py` and `.env` files and update them with your settings and API keys.

If you get a "module 'requests' not found" error, run `pip install requests` (or `pip3 install requests`).

If you encounter any issues during the setup process, please refer to the Troubleshooting section below.

To run AlwaysReddy on Windows:

- Double-click the `run_AlwaysReddy.bat` file created during the setup process.

OR run `python main.py` from the command prompt or terminal.

- Activate the venv with `venv\Scripts\activate`, then run the main script directly with `python main.py`.

To run AlwaysReddy on Mac and Linux:

- Open a terminal, navigate to the AlwaysReddy directory, and run `./run_AlwaysReddy.sh`.

OR run `python3 main.py` from the command prompt or terminal.

- Activate the venv with `source venv/bin/activate`, then run the main script directly with `python3 main.py`.

- On Linux it only detects hotkey presses when the application is in focus. This is a major issue, as the whole point of the project is to have it run in the background; if you want to help out, this would be a great place to start poking around! This may only be an issue on systems using Wayland.

- Using AlwaysReddy in the terminal on Ubuntu does not work for me: when I press the hotkey it just prints the key in the terminal. Running it in my IDE works.

If you have issues, try deleting the venv folder and starting again. Set `VERBOSE = True` in the config to get more detailed logs and error traces.

There are currently only 2 main actions:

Voice chat:

- Press `Ctrl + Alt + R` to start dictating. You can talk for as long as you want, then press `Ctrl + Alt + R` again to stop recording; a few seconds later you will get a voice response from the AI.

- You can also hold `Ctrl + Alt + R` to record and release it when you're done to get the transcription.

Voice chat with context of your clipboard:

- Double tap `Ctrl + Alt + R` (or just hold `Ctrl + Alt` and quickly press `R` twice). This will give the AI the content of your clipboard so you can ask it to reference it, rewrite it, answer questions from its contents... whatever you like!

- Clear the assistant's memory with `Ctrl + Alt + W`.

- Cancel recording or TTS with `Ctrl + Alt + E`.

Get AlwaysReddy to output to your clipboard:

- Just ask it to! It is prompted to know how to save text to the clipboard instead of speaking it aloud.

Please let me know if you think of better hotkey defaults!

All hotkeys can be edited in config.py

To change the Piper TTS voice model:

- Go to https://huggingface.co/rhasspy/piper-voices/tree/main and navigate to your desired language.

- Click on the name of the voice you want to try. There are different sized models available; I suggest using the medium size as it's pretty fast but still sounds great (for a locally run model).

- Listen to the sample in the "sample" folder to ensure you like the voice.

- Download the `.onnx` and `.json` files for the chosen voice.

- Create a new folder in the `piper_tts\voices` directory and give it a descriptive name. You will need to enter the name of this folder into the `config.py` file. For example: `PIPER_VOICE = "default_female_voice"`.

- Move the two downloaded files (`.onnx` and `.json`) into your newly created folder within the `piper_tts\voices` directory.
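A quick way to sanity-check the voice folder before restarting AlwaysReddy is sketched below. It assumes the folder layout from the steps above (one `.onnx` model and one `.json` config per voice folder); `check_piper_voice` is a hypothetical helper, not something AlwaysReddy ships.

```python
from pathlib import Path
from typing import List


def check_piper_voice(voices_dir: str, voice_name: str) -> List[str]:
    """Return problems found in <voices_dir>/<voice_name>; an empty list means it looks OK."""
    folder = Path(voices_dir) / voice_name
    if not folder.is_dir():
        return [f"{folder} does not exist"]
    problems = []
    # A Piper voice is a model (.onnx) plus its config (.json, often named *.onnx.json).
    # "*.onnx" does not match "*.onnx.json", so the two globs never overlap.
    onnx_files = list(folder.glob("*.onnx"))
    json_files = list(folder.glob("*.json"))
    if len(onnx_files) != 1:
        problems.append(f"expected one .onnx file, found {len(onnx_files)}")
    if len(json_files) != 1:
        problems.append(f"expected one .json file, found {len(json_files)}")
    return problems


if __name__ == "__main__":
    # Hypothetical paths: adjust to your checkout and to the PIPER_VOICE value in config.py
    for problem in check_piper_voice("piper_tts/voices", "default_female_voice"):
        print(problem)
```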

To use local Whisper transcription (faster-whisper) instead of the OpenAI API:

- Open the `config.py` file.

- Locate the "Transcription API Settings" section.

- Comment out the line `TRANSCRIPTION_API = "openai"` by adding a `#` at the beginning of the line.

- Uncomment the line `TRANSCRIPTION_API = "FasterWhisper"` by removing the `#` at the beginning of the line.

- Adjust the `WHISPER_MODEL` and `TRANSCRIPTION_LANGUAGE` settings according to your preferences.

- Save the `config.py` file.

Available models with faster-whisper: tiny.en, tiny, base.en, base, small.en, small, medium.en, medium, large-v1, large-v2, large-v3, large, distil-large-v2, distil-medium.en, distil-small.en, distil-large-v3

Here's an example of how your `config.py` file should look for local Whisper transcription:

### Transcription API Settings ###

## OPENAI API TRANSCRIPTION EXAMPLE ##

# TRANSCRIPTION_API = "openai" # this will use the hosted openai api

## Faster Whisper local transcription ###

TRANSCRIPTION_API = "FasterWhisper" # this will use the local whisper model

# Supported models:

WHISPER_MODEL = "tiny.en" # If you prefer not to use english set it to "tiny", if the transcription quality is too low then set it to "base" but this will be a little slower

Note: The default Whisper model is English-only; try setting WHISPER_MODEL to 'tiny' or 'base' for other languages.
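The `.en` suffix in the model names marks English-only models, which is why `tiny` or `base` is suggested for other languages. The hypothetical helper below (not part of `config.py`) sketches that choice using the list of faster-whisper models given above; note that not every size has an `.en` variant.

```python
# Model names copied from the list of faster-whisper models above
SUPPORTED_WHISPER_MODELS = {
    "tiny.en", "tiny", "base.en", "base", "small.en", "small",
    "medium.en", "medium", "large-v1", "large-v2", "large-v3", "large",
    "distil-large-v2", "distil-medium.en", "distil-small.en", "distil-large-v3",
}


def is_english_only(model: str) -> bool:
    """Models with a ".en" suffix only transcribe English."""
    return model.endswith(".en")


def pick_whisper_model(size: str, english_only: bool) -> str:
    """Hypothetical helper: choose a WHISPER_MODEL value for a size and language need."""
    if english_only and f"{size}.en" in SUPPORTED_WHISPER_MODELS:
        return f"{size}.en"
    if size in SUPPORTED_WHISPER_MODELS:
        return size  # multilingual model (some sizes, e.g. "large", have no .en variant)
    raise ValueError(f"unsupported Whisper model size: {size!r}")


print(pick_whisper_model("tiny", english_only=True))   # tiny.en
print(pick_whisper_model("base", english_only=False))  # base
```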

To swap models, open the `config.py` file and uncomment the section for the API you want to use. For example, this is how you would use Claude 3 Sonnet; if you wanted to use LM Studio, you would comment out the Anthropic section and uncomment the LM Studio section.

### COMPLETIONS API SETTINGS ###

## LM Studio COMPLETIONS API EXAMPLE ##

# COMPLETIONS_API = "lm_studio"

# COMPLETION_MODEL = "local-model" #This stays as local-model no matter what model you are using

## ANTHROPIC COMPLETIONS API EXAMPLE ##

COMPLETIONS_API = "anthropic"

COMPLETION_MODEL = "claude-3-sonnet-20240229"

## TOGETHER COMPLETIONS API EXAMPLE ##

# COMPLETIONS_API = "together"

# COMPLETION_MODEL = "NousResearch/Nous-Hermes-2-Mixtral-8x7B-SFT"

## OPENAI COMPLETIONS API EXAMPLE ##

# COMPLETIONS_API = "openai"

# COMPLETION_MODEL = "gpt-4-0125-preview"

To use local TTS, just open the config file and set `TTS_ENGINE="piper"`.
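Because `config.py` is plain Python, leaving two of the completions sections above uncommented will not raise an error; the last assignment simply wins. The sketch below is a hypothetical checker (not part of AlwaysReddy) that scans the config text for uncommented `COMPLETIONS_API` lines, assuming each setting sits on one line in the `KEY = "value"` form shown in the example.

```python
import re
from typing import List


def active_values(config_text: str, key: str) -> List[str]:
    """Return the values of every uncommented `KEY = "value"` line in config_text."""
    pattern = re.compile(rf'^\s*{re.escape(key)}\s*=\s*"([^"]*)"')
    values = []
    for line in config_text.splitlines():
        match = pattern.match(line)  # lines starting with '#' never match
        if match:
            values.append(match.group(1))
    return values


# Trimmed copy of the example completions section
example = '''
# COMPLETIONS_API = "lm_studio"
# COMPLETION_MODEL = "local-model"
COMPLETIONS_API = "anthropic"
COMPLETION_MODEL = "claude-3-sonnet-20240229"
# COMPLETIONS_API = "openai"
# COMPLETION_MODEL = "gpt-4-0125-preview"
'''

apis = active_values(example, "COMPLETIONS_API")
if len(apis) == 1:
    print("Active completions API:", apis[0])  # Active completions API: anthropic
else:
    print("Expected exactly one active COMPLETIONS_API, found:", apis)
```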

To create a custom system prompt:

- Navigate to the `system_prompts` directory.

- Make a copy of an existing prompt file.

- Open the copy in a text or code editor and edit the prompt between the pair of `'''` triple quotes as you like.

- Edit your `config.py` file by setting the `ACTIVE_PROMPT` option to the name of your new prompt file (without the .py extension) as a string.

  - For example, if your new prompt file is `custom_prompt.py`, then set in `config.py`:

ACTIVE_PROMPT = "custom_prompt"
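`ACTIVE_PROMPT` omits the `.py` extension because a bare name like `custom_prompt` can be mapped to a file and imported as a module. The sketch below shows one way to do that with `importlib`; it is an assumption about the general pattern, not AlwaysReddy's actual loader, and any variable name you read from the loaded module (for example a hypothetical `prompt`) depends on what the existing prompt files define.

```python
import importlib.util
from pathlib import Path
from types import ModuleType


def load_prompt_module(prompts_dir: str, active_prompt: str) -> ModuleType:
    """Import <prompts_dir>/<active_prompt>.py, where active_prompt omits the .py extension."""
    path = Path(prompts_dir) / f"{active_prompt}.py"
    if not path.is_file():
        raise FileNotFoundError(f"no prompt file {path}")
    spec = importlib.util.spec_from_file_location(active_prompt, str(path))
    module = importlib.util.module_from_spec(spec)
    spec.loader.exec_module(module)  # runs the prompt file, defining its variables
    return module
```

Writing `ACTIVE_PROMPT = "custom_prompt.py"` by mistake would make a loader like this look for `custom_prompt.py.py` and fail.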

To add AlwaysReddy to your startup list so it starts automatically when your computer boots, follow these steps:

- Run `venv\Scripts\activate`.

- Run `python setup.py` and follow the prompts. It will ask whether you want to add AlwaysReddy to the startup list; press Y to confirm.

If you want to remove AlwaysReddy from the startup list, follow the same steps again, but answer no when asked if you want to add AlwaysReddy to the startup list; it will then ask if you would like to remove it, so press Y.

PLEASE NOTE: Custom actions are a very experimental feature that I am likely to change a lot. Any actions you make will in all likelihood need to be updated in some way as I update and change the actions system.

The action system allows you to easily define new functionality and bind it to a hotkey event. It lets you use the following functionality from the AlwaysReddy code base:

- Record audio

- Transcribe audio

- Run and play TTS

- Generate responses from any of the supported LLM servers

- Read and save to the clipboard

This video shows the process of making an action from scratch: https://youtu.be/X0Bd20EDxfQ

Example action: https://github.com/ILikeAI/alwaysreddy_add_to_md_note

The toggle_recording method starts or stops audio recording. When called the first time, it starts recording. The next call stops recording and returns the audio file path.

By default, if the recording times out, it's stopped and deleted. However, you can provide a callback function that will be executed on timeout instead. In the code example, transcription_action is passed as the callback. When the recording times out, transcription_action is called, which calls toggle_recording again, thereby stopping the recording and returning the audio file for transcription.

def transcription_action(self):
    """Handle the transcription process."""
    recording_filename = self.AR.toggle_recording(self.transcription_action)
    if recording_filename:
        transcript = self.AR.transcription_manager.transcribe_audio(recording_filename)
        to_clipboard(transcript)
        print("Transcription copied to clipboard.")

The `setup` method of your action will run when AlwaysReddy starts. This is where you use the `add_action_hotkey` method to bind your code to a hotkey press. Below is an example of binding hotkeys to the `transcription_action` method.

self.AR.add_action_hotkey("ctrl+alt+t",
                          pressed=self.transcription_action,
                          held_release=self.transcription_action)

Here we are binding the `pressed` and `held_release` hotkey events to our function.
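The toggle-with-timeout behaviour of `toggle_recording` can be modelled without any audio code. In this sketch `ToggleRecorder` is a hypothetical stand-in (not AlwaysReddy's class): the first `toggle()` starts a `threading.Timer`, a second call stops and returns a made-up file name, and on timeout it either discards the recording or calls the supplied callback, which is expected to call `toggle()` again to stop.

```python
import threading
from typing import Callable, Optional


class ToggleRecorder:
    """Hypothetical model of toggle-with-timeout recording (no real audio)."""

    def __init__(self, timeout_s: float) -> None:
        self.timeout_s = timeout_s
        self.recording = False
        self.discarded = False
        self._generation = 0  # identifies the current recording so stale timers are ignored
        self._timer: Optional[threading.Timer] = None
        self._lock = threading.Lock()

    def toggle(self, on_timeout: Optional[Callable[[], None]] = None) -> Optional[str]:
        """First call starts recording (returns None); the next call stops it and returns a path."""
        with self._lock:
            if not self.recording:
                self.recording = True
                self.discarded = False
                self._generation += 1
                self._timer = threading.Timer(
                    self.timeout_s, self._timed_out, args=(self._generation, on_timeout)
                )
                self._timer.daemon = True
                self._timer.start()
                return None
            self.recording = False
            if self._timer is not None:
                self._timer.cancel()
            return f"recording_{self._generation}.wav"  # hypothetical file name

    def _timed_out(self, generation: int, on_timeout: Optional[Callable[[], None]]) -> None:
        with self._lock:
            if not self.recording or generation != self._generation:
                return  # already stopped by hand
            if on_timeout is None:
                # Default behaviour: stop and throw the recording away
                self.recording = False
                self.discarded = True
                return
        # Called outside the lock, because the callback is expected to call toggle() itself
        on_timeout()
```

Passing the action itself as the callback, as `transcription_action` does, means a timed-out recording follows the same stop-and-transcribe path as a manual stop.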

Below are the arguments for add_action_hotkey:

hotkey (str): The hotkey combination.

pressed (callable, optional): Callback for when the hotkey is pressed.

released (callable, optional): Callback for when the hotkey is released.

held (callable, optional): Callback for when the hotkey is held.

held_release (callable, optional): Callback for when the hotkey is released after being held.

double_tap (callable, optional): Callback for when the hotkey is double-tapped.
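The argument list above names five hotkey events but does not say how they are told apart. The sketch below is one hypothetical way to derive them from raw key-down/key-up timestamps; the thresholds and exact rules are assumptions, not AlwaysReddy's implementation.

```python
from typing import List, Optional, Tuple

HOLD_THRESHOLD_S = 0.5     # hypothetical: a press at least this long counts as a hold
DOUBLE_TAP_WINDOW_S = 0.3  # hypothetical: max gap between a release and the next press


def classify(events: List[Tuple[str, float]]) -> List[str]:
    """Map ("down" | "up", timestamp) events for one hotkey to the callback names fired."""
    fired: List[str] = []
    pressed_at: Optional[float] = None
    last_tap_release: Optional[float] = None  # release time of a tap that may start a double tap
    in_double_tap = False
    for kind, t in events:
        if kind == "down" and pressed_at is None:
            if last_tap_release is not None and t - last_tap_release <= DOUBLE_TAP_WINDOW_S:
                fired.append("double_tap")
                in_double_tap = True
            else:
                fired.append("pressed")
                in_double_tap = False
            pressed_at = t
        elif kind == "up" and pressed_at is not None:
            if t - pressed_at >= HOLD_THRESHOLD_S:
                # A real listener would fire "held" from a timer while the key is down;
                # here it is emitted at release time to keep the sketch deterministic.
                fired.extend(["held", "held_release"])
                last_tap_release = None
            else:
                fired.append("released")
                # The second tap of a double tap does not arm another double tap
                last_tap_release = None if in_double_tap else t
            pressed_at = None
    return fired
```

Under these assumed rules, binding both `pressed` and `held_release` to `transcription_action` (as in the `add_action_hotkey` example) gives the two recording styles from the voice chat section: a quick tap fires only `pressed`, so two separate taps start and stop recording, while a hold fires `pressed` on the way down and `held_release` on the way up.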


Alternative AI tools for AlwaysReddy

Similar Open Source Tools


Open-LLM-VTuber

Open-LLM-VTuber is a project in early stages of development that allows users to interact with Large Language Models (LLM) using voice commands and receive responses through a Live2D talking face. The project aims to provide a minimum viable prototype for offline use on macOS, Linux, and Windows, with features like long-term memory using MemGPT, customizable LLM backends, speech recognition, and text-to-speech providers. Users can configure the project to chat with LLMs, choose different backend services, and utilize Live2D models for visual representation. The project supports perpetual chat, offline operation, and GPU acceleration on macOS, addressing limitations of existing solutions on macOS.

Discord-AI-Selfbot

Discord-AI-Selfbot is a Python-based Discord selfbot that uses the `discord.py-self` library to automatically respond to messages mentioning its trigger word using Groq API's Llama-3 model. It functions as a normal Discord bot on a real Discord account, enabling interactions in DMs, servers, and group chats without needing to invite a bot. The selfbot comes with features like custom AI instructions, free LLM model usage, mention and reply recognition, message handling, channel-specific responses, and a psychoanalysis command to analyze user messages for insights on personality.

kobold_assistant

Kobold-Assistant is a fully offline voice assistant interface to KoboldAI's large language model API. It can work online with the KoboldAI horde and online speech-to-text and text-to-speech models. The assistant, called Jenny by default, uses the latest coqui 'jenny' text to speech model and openAI's whisper speech recognition. Users can customize the assistant name, speech-to-text model, text-to-speech model, and prompts through configuration. The tool requires system packages like GCC, portaudio development libraries, and ffmpeg, along with Python >=3.7, <3.11, and runs on Ubuntu/Debian systems. Users can interact with the assistant through commands like 'serve' and 'list-mics'.

maxheadbox

Max Headbox is an open-source voice-activated LLM Agent designed to run on a Raspberry Pi. It can be configured to execute a variety of tools and perform actions. The project requires specific hardware and software setups, and provides detailed instructions for installation, configuration, and usage. Users can create custom tools by making JavaScript modules and backend API handlers. The project acknowledges the use of various open-source projects and resources in its development.

ai-voice-cloning

This repository provides a tool for AI voice cloning, allowing users to generate synthetic speech that closely resembles a target speaker's voice. The tool is designed to be user-friendly and accessible, with a graphical user interface that guides users through the process of training a voice model and generating synthetic speech. The tool also includes a variety of features that allow users to customize the generated speech, such as the pitch, volume, and speaking rate. Overall, this tool is a valuable resource for anyone interested in creating realistic and engaging synthetic speech.

AI-Horde-Worker

AI-Horde-Worker is a repository containing the original reference implementation for a worker that turns your graphics card(s) into a worker for the AI Horde. It allows users to generate or alchemize images for others. The repository provides instructions for setting up the worker on Windows and Linux, updating the worker code, running with multiple GPUs, and stopping the worker. Users can configure the worker using a WebUI to connect to the horde with their username and API key. The repository also includes information on model usage and running the Docker container with specified environment variables.

whisper_dictation

Whisper Dictation is a fast, offline, privacy-focused tool for voice typing, AI voice chat, voice control, and translation. It allows hands-free operation, launching and controlling apps, and communicating with OpenAI ChatGPT or a local chat server. The tool also offers the option to speak answers out loud and draw pictures. It includes client and server versions, inspired by the Star Trek series, and is designed to keep data off the internet and confidential. The project is optimized for dictation and translation tasks, with voice control capabilities and AI image generation using stable-diffusion API.

lovelaice

Lovelaice is an AI-powered assistant for your terminal and editor. It can run bash commands, search the Internet, answer general and technical questions, complete text files, chat casually, execute code in various languages, and more. Lovelaice is configurable with API keys and LLM models, and can be used for a wide range of tasks requiring bash commands or coding assistance. It is designed to be versatile, interactive, and helpful for daily tasks and projects.

llm.c

LLM training in simple, pure C/CUDA. There is no need for 245MB of PyTorch or 107MB of cPython. For example, training GPT-2 (CPU, fp32) is ~1,000 lines of clean code in a single file. It compiles and runs instantly, and exactly matches the PyTorch reference implementation. I chose GPT-2 as the first working example because it is the grand-daddy of LLMs, the first time the modern stack was put together.

claude.vim

Claude.vim is a Vim plugin that integrates Claude, an AI pair programmer, into your Vim workflow. It allows you to chat with Claude about what to build or how to debug problems, and Claude offers opinions, proposes modifications, or even writes code. The plugin provides a chat/instruction-centric interface optimized for human collaboration, with killer features like access to chat history and vimdiff interface. It can refactor code, modify or extend selected pieces of code, execute complex tasks by reading documentation, cloning git repositories, and more. Note that it is early alpha software and expected to rapidly evolve.

call-gpt

Call GPT is a voice application that utilizes Deepgram for Speech to Text, elevenlabs for Text to Speech, and OpenAI for GPT prompt completion. It allows users to chat with ChatGPT on the phone, providing better transcription, understanding, and speaking capabilities than traditional IVR systems. The app returns responses with low latency, allows user interruptions, maintains chat history, and enables GPT to call external tools. It coordinates data flow between Deepgram, OpenAI, ElevenLabs, and Twilio Media Streams, enhancing voice interactions.

ultravox

Ultravox is a fast multimodal Language Model (LLM) that can understand both text and human speech in real-time without the need for a separate Audio Speech Recognition (ASR) stage. By extending Meta's Llama 3 model with a multimodal projector, Ultravox converts audio directly into a high-dimensional space used by Llama 3, enabling quick responses and potential understanding of paralinguistic cues like timing and emotion in human speech. The current version (v0.3) has impressive speed metrics and aims for further enhancements. Ultravox currently converts audio to streaming text and plans to emit speech tokens for direct audio conversion. The tool is open for collaboration to enhance this functionality.

openui

OpenUI is a tool designed to simplify the process of building UI components by allowing users to describe UI using their imagination and see it rendered live. It supports converting HTML to React, Svelte, Web Components, etc. The tool is open source and aims to make UI development fun, fast, and flexible. It integrates with various AI services like OpenAI, Groq, Gemini, Anthropic, Cohere, and Mistral, providing users with the flexibility to use different models. OpenUI also supports LiteLLM for connecting to various LLM services and allows users to create custom proxy configs. The tool can be run locally using Docker or Python, and it offers a development environment for quick setup and testing.

gpt-subtrans

GPT-Subtrans is an open-source subtitle translator that utilizes large language models (LLMs) as translation services. It supports translation between any language pairs that the language model supports. Note that GPT-Subtrans requires an active internet connection, as subtitles are sent to the provider's servers for translation, and their privacy policy applies.

polis

Polis is an AI powered sentiment gathering platform that offers a more organic approach than surveys and requires less effort than focus groups. It provides a comprehensive wiki, main deployment at https://pol.is, discussions, issue tracking, and project board for users. Polis can be set up using Docker infrastructure and offers various commands for building and running containers. Users can test their instance, update the system, and deploy Polis for production. The tool also provides developer conveniences for code reloading, type checking, and database connections. Additionally, Polis supports end-to-end browser testing using Cypress and offers troubleshooting tips for common Docker and npm issues.

For similar tasks

AlwaysReddy

AlwaysReddy is a simple LLM assistant with no UI that you interact with entirely using hotkeys. It can easily read from or write to your clipboard, and voice chat with you via TTS and STT. Here are some of the things you can use AlwaysReddy for: - Explain a new concept to AlwaysReddy and have it save the concept (in roughly your words) into a note. - Ask AlwaysReddy "What is X called?" when you know how to roughly describe something but can't remember what it is called. - Have AlwaysReddy proofread the text in your clipboard before you send it. - Ask AlwaysReddy "From the comments in my clipboard, what do the r/LocalLLaMA users think of X?" - Quickly list what you have done today and get AlwaysReddy to write a journal entry to your clipboard before you shutdown the computer for the day.

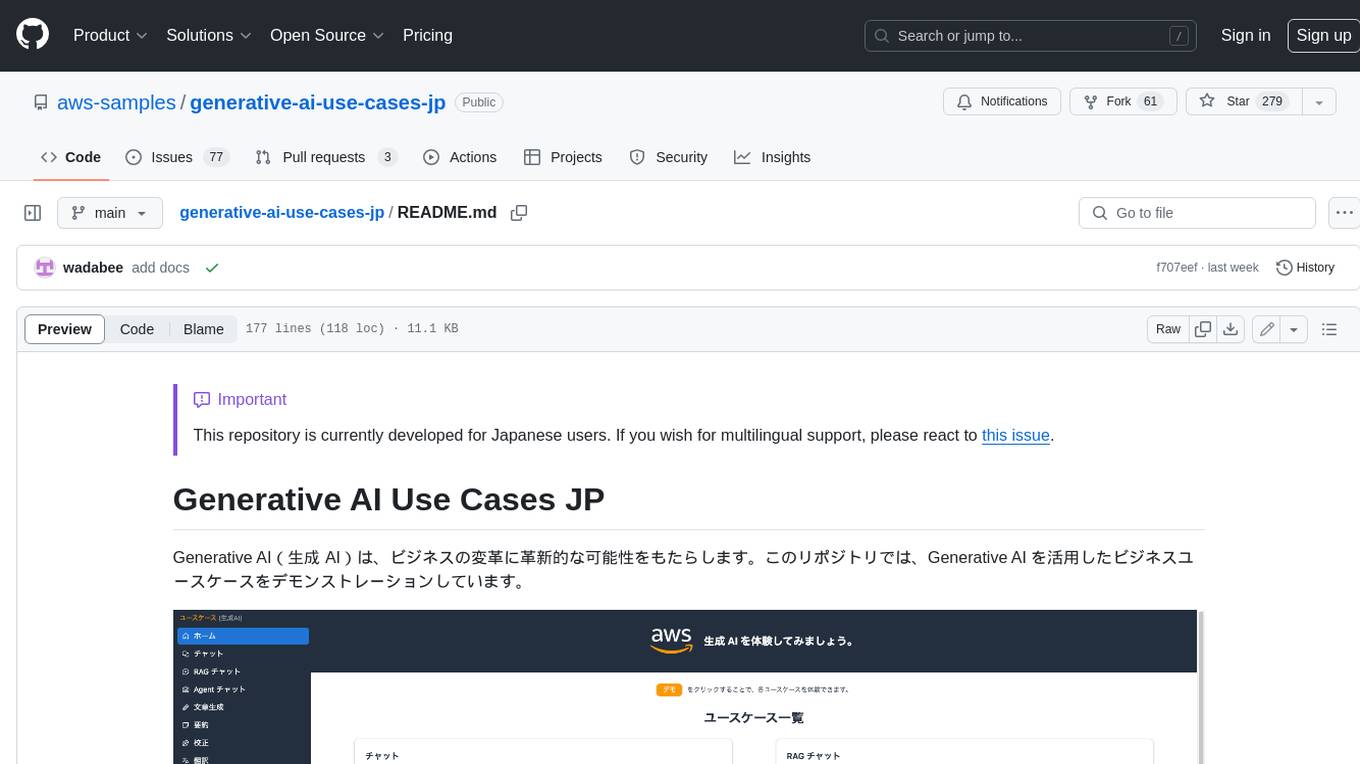

generative-ai-use-cases-jp

Generative AI (生成 AI) brings revolutionary potential to transform businesses. This repository demonstrates business use cases leveraging Generative AI.

WritingTools

Writing Tools is an Apple Intelligence-inspired application for Windows, Linux, and macOS that supercharges your writing with an AI LLM. It allows users to instantly proofread, optimize text, and summarize content from webpages, YouTube videos, documents, etc. The tool is privacy-focused, open-source, and supports multiple languages. It offers powerful features like grammar correction, content summarization, and LLM chat mode, making it a versatile writing assistant for various tasks.

payload-ai

The Payload AI Plugin is an advanced extension that integrates modern AI capabilities into your Payload CMS, streamlining content creation and management. It offers features like text generation, voice and image generation, field-level prompt customization, prompt editor, document analyzer, fact checking, automated content workflows, internationalization support, editor AI suggestions, and AI chat support. Users can personalize and configure the plugin by setting environment variables. The plugin is actively developed and tested with Payload version v3.2.1, with regular updates expected.

For similar jobs

ChatFAQ

ChatFAQ is an open-source comprehensive platform for creating a wide variety of chatbots: generic ones, business-trained, or even capable of redirecting requests to human operators. It includes a specialized NLP/NLG engine based on a RAG architecture and customized chat widgets, ensuring a tailored experience for users and avoiding vendor lock-in.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

mikupad

mikupad is a lightweight and efficient language model front-end powered by ReactJS, all packed into a single HTML file. Inspired by the likes of NovelAI, it provides a simple yet powerful interface for generating text with the help of various backends.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

firecrawl

Firecrawl is an API service that takes a URL, crawls it, and converts it into clean markdown. It crawls all accessible subpages and provides clean markdown for each, without requiring a sitemap. The API is easy to use and can be self-hosted. It also integrates with Langchain and Llama Index. The Python SDK makes it easy to crawl and scrape websites in Python code.