WritingTools

The world's smartest system-wide grammar assistant; a better version of the Apple Intelligence Writing Tools. Works on Windows, Linux, & macOS, with the free Gemini API, local LLMs, & more.

Stars: 2121

Writing Tools is an Apple Intelligence-inspired application for Windows, Linux, and macOS that supercharges your writing with an AI LLM. It allows users to instantly proofread, optimize text, and summarize content from webpages, YouTube videos, documents, etc. The tool is privacy-focused, open-source, and supports multiple languages. It offers powerful features like grammar correction, content summarization, and LLM chat mode, making it a versatile writing assistant for various tasks.

README:

🍎 Using a Mac? Jump to the macOS (Native Swift Port) section → macOS

https://github.com/user-attachments/assets/d3ce4694-b593-45ff-ae9a-892ce94b1dc8

https://github.com/user-attachments/assets/76d13eb9-168e-4459-ada4-62e0586ae58c

Writing Tools is an Apple Intelligence-inspired application for Windows, Linux, and macOS that supercharges your writing with an AI LLM (cloud-based or local).

With one hotkey press system-wide, it lets you fix grammar, optimize text according to your instructions, summarize content (webpages, YouTube videos, etc.), and more.

It's currently the world's most intelligent system-wide grammar assistant, works in almost any language, and has been featured in 🔥 28+ global publications (Beebom, XDA, How-To Geek, Neowin, Windows Central...).

Writing Tools was also among the 🔥 top 10 most trending AI programs in the world on GitHub in October 2024.

Hi! I'm Jesai, a high school student from Bangalore, and I created and maintain Writing Tools with help from our amazing contributors. I want to especially shout out momokrono, who's contributed extensively, and Arya Mirsepasi, who built the entire macOS port!

Writing Tools will always remain completely free and open-source.

If you find value in it, it would mean the world to me if you could support us as we continue to improve it. ❤️


- Select any text on your PC and invoke Writing Tools with `ctrl+space`.

- Choose Proofread, Rewrite, Friendly, Professional, Concise, or even enter custom instructions (e.g., "add comments to this code", "make it title case", "translate to French").

- Your text will instantly be replaced with the AI-optimized version. Use `ctrl+z` to revert.

- Select all text in any webpage, document, email, etc., with `ctrl+a`, or select the transcript of a YouTube video (from its description).

- Choose Summary, Key Points, or Table after invoking Writing Tools.

- Get a pop-up summary with clear and beautiful formatting (with Markdown rendering), saving you hours.

- Chat with the summary if you'd like to learn more or have questions.

- They're your own magic buttons. Dream, and it'll magically be done with AI.

- Press `ctrl+space` without selecting text to start a conversation with your LLM (for privacy, chat history is deleted when you close the window).

Aside from being the only Windows/Linux program like Apple's Writing Tools, and the only way to use them on an Intel Mac or in the EU:

- More intelligent than Apple's Writing Tools and Grammarly Premium: Apple uses a tiny 3B parameter model, while Writing Tools lets you use much more advanced models for free (e.g., Gemini 2.0 Flash [~30B]). Grammarly's rule-based NLP can't compete with LLMs.

- Completely free and open-source: No subscriptions or hidden costs. Bloat-free and uses ~0% of your CPU even when actively using it.

- Versatile AI LLM support: Jump in quickly with the free Gemini API & Gemini 2.0, or an extensive range of local LLMs (via Ollama [instructions], llama.cpp, KoboldCPP, TabbyAPI, vLLM, etc.) or cloud-based LLMs (ChatGPT, Mistral AI, etc.) through Writing Tools' OpenAI-API-compatibility.

- Does not mess with your clipboard, and works system-wide.

- Privacy-focused: Your API key and config files stay on your device. NO logging, diagnostic collection, tracking, or ads. Invoked only on your command. Local LLMs keep your data on your device & work without the internet.

- Supports multiple languages: Works with any language and translates text better than Google Translate (type "translate to [language]" in `Describe your change...`).

- Code support: Fix, improve, translate, or add comments to code with `Describe your change...`.

- Themes, Dark Mode, & Customization: Choose between 2 themes: a blurry gradient theme and a plain theme that resembles the Windows + V pop-up! Also has full dark mode support. Set your own hotkey for quick access.

- Go to the Releases page and download the latest `Writing.Tools.zip` file.

- Extract it to your desired location (recommended: `Documents` or `App Data/Local`), run `Writing Tools.exe`, and enjoy! :D

Note: Writing Tools is a portable app. If you extract it into a protected folder (e.g., Program Files), you must run it as administrator at least on first launch so it can create/edit its config files (in the same folder as its exe).
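If you are unsure whether a folder counts as "protected", a quick probe is to try creating a file in it. The snippet below is only an illustrative sketch, not part of Writing Tools; the function name `can_write_config` and the example folder are hypothetical.

```python
import tempfile
from pathlib import Path


def can_write_config(app_dir: Path) -> bool:
    """Return True if a file can be created inside app_dir."""
    try:
        # Creating (and automatically deleting) a temporary file is a more
        # reliable probe than inspecting permission bits, notably on Windows.
        with tempfile.TemporaryFile(dir=app_dir):
            return True
    except OSError:
        # Covers missing folders as well as permission errors.
        return False


if __name__ == "__main__":
    # Hypothetical extraction folder; replace it with your own.
    folder = Path.home() / "Documents" / "Writing Tools"
    print(f"{folder} writable: {can_write_config(folder)}")
```

If this prints `False` for the folder you extracted to, either move the app somewhere writable or run it as administrator on first launch, as described in the note above.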

PS: Go to Writing Tools' Settings (from its tray icon at the bottom right of the taskbar) to enable starting Writing Tools on boot.

Writing Tools works well on X11. On Wayland, there are a few caveats:

- it works on XWayland apps

- and it works if you disable Wayland for individual Flatpaks with Flatseal.
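To find out which display server your session uses, you can inspect the environment variables that desktop sessions conventionally set. This is a minimal sketch under that assumption, not how Writing Tools itself detects anything; `session_type` is a hypothetical helper name.

```python
import os


def session_type(env=None) -> str:
    """Best-effort guess of the display server: 'wayland', 'x11', or 'unknown'."""
    env = os.environ if env is None else env
    declared = env.get("XDG_SESSION_TYPE", "").lower()
    if declared in ("wayland", "x11"):
        return declared
    # Fall back to the display variables each server normally exports.
    # WAYLAND_DISPLAY is checked first because XWayland also sets DISPLAY.
    if env.get("WAYLAND_DISPLAY"):
        return "wayland"
    if env.get("DISPLAY"):
        return "x11"
    return "unknown"


if __name__ == "__main__":
    print("Session type:", session_type())
```

If the result is `wayland`, the caveats listed above apply.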

The macOS version is a native Swift port, developed by Arya Mirsepasi. View the README inside the macOS folder to learn more.

To install it:

- Go to the Releases page and download the latest macOS `.dmg` file.

- Open the `.dmg` file, also open a Finder window, and drag the `writing-tools.app` into the Applications folder. That's it!

Note: macOS 14 or later is required due to accessibility API requirements.

- Truly native: Built in Swift (SwiftUI + AppKit where needed) for a fast, polished Mac experience.

- Private & on-device: Run local LLMs with MLX on Apple Silicon — no internet required for on-device models.

- Rich-text aware: Proofread preserves RTF formatting (bold, italics, lists, links) so your documents keep their look while errors disappear.

- Your workflows, your way: Edit and add your own commands and assign custom shortcuts.

- Multilingual by design: App UI supports English, German, French, and Spanish, and commands work in many more languages.

- Choice of intelligence: Connect to top providers or go fully local — switch any time.

- Themes: Multiple themes (including dark mode) to match your desktop vibe.

- Cloud: OpenAI, Google (Gemini), Anthropic, Mistral, OpenRouter

- Local: Ollama (via OpenAI-compatible endpoint) and MLX on Apple Silicon for first-class, low-latency on-device inference

- You can mix & match: keep sensitive work on-device with MLX, use cloud models when you need the biggest brains.

- Works across most Mac apps — select text, invoke Writing Tools, and instantly Proofread, Rewrite, Change tone, or Summarize.

- Start a quick chat with your chosen model without selecting text.

Tip: If your shortcut clashes with Spotlight or Input Source switching, set a custom hotkey in Writing Tools and/or adjust macOS settings under System Settings → Keyboard → Keyboard Shortcuts (Spotlight / Input Sources).

For full functionality, macOS will prompt you to grant:

- Accessibility (to read/replace selected text)

- Screen Recording (for certain apps that restrict text access)

You can manage these under System Settings → Privacy & Security.

- Command editor: Create reusable buttons for your own prompts and assign shortcuts.

- Model flexibility: Bring your own API keys. Switch providers per task.

- Document-friendly: RTF-preserving Proofread keeps your formatting intact.

- Localization: UI in EN/DE/FR/ES; commands happily work with many languages.

- Theming: Choose from multiple themes, including dark mode.

- Hotkey not firing? Change the shortcut in Writing Tools and make sure nothing else uses the same combo (Spotlight / Input Sources).

- No text replacement in a specific app? Ensure Accessibility is enabled for Writing Tools; for some apps, Screen Recording is also required.

- Local model issues? Confirm your Ollama/MLX model is running and the base URL/model name are correct in Settings.

https://github.com/user-attachments/assets/dd4780d4-7cdb-4bdb-9a64-e93520ab61be

2️⃣ Make Writing Tools work better in MS Word: the ctrl+space keyboard shortcut is mapped to "Clear Formatting", making you lose paragraph indentation. Here's how to improve this:

P.S.: Word's rich-text formatting (bold, italics, underline, colours...) will be lost on using Writing Tools. A Markdown editor such as Obsidian has no such issue.

https://github.com/user-attachments/assets/42a3d8c7-18ac-4282-9478-16aab935f35e

I believe strongly in protecting your privacy. Writing Tools:

- Does not collect or store any of your writing data by itself. It doesn't even collect general logs, so it's super light and privacy-friendly.

- Lets you use local LLMs to process your text entirely on-device.

- Only sends text to the chosen AI provider (encrypted) when you explicitly use one of the options.

- Only stores your API key locally on your device.

Note: If you choose to use a cloud-based LLM, refer to the AI provider's privacy policy and terms of service.

- Proofread: The smartest grammar & spelling corrector. Sorry not sorry, Grammarly Premium.

- Rewrite: Improve the phrasing of your text.

- Make Friendly/Professional: Adjust the tone of your text.

- Custom Instructions: Tailor your request (e.g., "Translate to French") through `Describe your change...`.

The following options respond in a pop-up window (with markdown rendering, selectable text, and a zoom level that saves & applies on app restarts):

- Summarize: Create clear and concise summaries.

- Extract Key Points: Highlight the most important points.

- Create Tables: Convert text into a formatted table. PS: You can copy & paste the table into MS Word.

These instructions are for Writing Tools Windows/Linux v7+, using its native Ollama provider:

- Download and install Ollama.

- Choose an LLM from here. Recommended: `Llama 3.1 8B` (~8GB of RAM or VRAM required).

- Run `ollama pull llama3.1:8b` in your terminal to download it.

- Open Writing Tools Settings and simply select the Ollama AI Provider. The default model name is already `Llama 3.1 8B`.

- That's it! Enjoy Writing Tools with absolute privacy and no internet connection! 🎉 From now on, you'll simply need to launch Ollama and Writing Tools into the background for it to work.
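If Writing Tools cannot reach the model, it can help to check that Ollama is running and that the model was actually pulled. The sketch below queries Ollama's `/api/tags` endpoint on its default address (`http://localhost:11434`); the helper names `installed_models` and `fetch_tags` are hypothetical, and this is a standalone diagnostic rather than anything Writing Tools ships.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address


def installed_models(tags: dict) -> list[str]:
    """Extract model names from the JSON returned by GET /api/tags."""
    return [m["name"] for m in tags.get("models", [])]


def fetch_tags(base_url: str = OLLAMA_URL, timeout: float = 3.0) -> dict:
    """Ask the local Ollama server which models have been pulled."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return json.load(resp)


if __name__ == "__main__":
    try:
        names = installed_models(fetch_tags())
    except OSError as exc:  # URLError and timeouts are OSError subclasses
        print(f"Ollama does not seem to be running: {exc}")
    else:
        print("llama3.1:8b pulled:", "llama3.1:8b" in names)
```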

These instructions are for any Writing Tools version, using the OpenAI-Compatible provider:

- Download and install Ollama.

- Choose an LLM from here. Recommended: `Llama 3.1 8B` (~8GB of VRAM or RAM required).

- Run `ollama pull llama3.1:8b` in your terminal to download Llama 3.1.

- In Writing Tools, set the `OpenAI-Compatible` provider with:
  - API Key: `ollama` (PS: For most local LLM providers, any random string here will suffice.)
  - API Base URL: `http://localhost:11434/v1`
  - API Model: `llama3.1:8b`

- That's it! Enjoy Writing Tools with absolute privacy and no internet connection! 🎉 From now on, you'll simply need to launch Ollama and Writing Tools into the background for it to work.
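To see what those three settings mean in practice, here is a rough sketch of a chat-completions request sent to Ollama's OpenAI-compatible endpoint using only the Python standard library. It is not Writing Tools' own code: the function names and the "Proofread this text." instruction are placeholders chosen for illustration.

```python
import json
import urllib.request

BASE_URL = "http://localhost:11434/v1"  # API Base URL from the steps above
API_KEY = "ollama"                      # placeholder; local servers usually ignore it
MODEL = "llama3.1:8b"                   # API Model from the steps above


def build_request(text: str, instruction: str = "Proofread this text.") -> urllib.request.Request:
    """Build a chat-completions request in the OpenAI wire format."""
    payload = {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": instruction},  # hypothetical prompt
            {"role": "user", "content": text},
        ],
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


def extract_reply(response: dict) -> str:
    """Pull the assistant's text out of a chat-completions response."""
    return response["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_request("Their going to the store tomorow.")
    try:
        with urllib.request.urlopen(req, timeout=60) as resp:
            print(extract_reply(json.load(resp)))
    except OSError as exc:
        print(f"Request failed (is Ollama running?): {exc}")
```

Any other OpenAI-compatible server should accept the same request shape once `BASE_URL`, `API_KEY`, and `MODEL` are changed to match it.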

- (Being investigated) On some devices, Writing Tools does not work correctly with the default hotkey.

To fix it, simply change the hotkey to ctrl+` or ctrl+j and restart Writing Tools. PS: If a hotkey is already in use by a program or background process, Writing Tools may not be able to intercept it. The above hotkeys are usually unused.

- The initial launch of the `Writing Tools.exe` might take unusually long; this seems to be because AV software extensively scans this new executable before letting it run. Once it launches into the background in RAM, it works instantly as usual.

Writing Tools would not be where it is today without its amazing contributors:

1. momokrono:

Added Linux support, switched to the pynput API to improve Windows stability. Added Ollama API support, the core logic for customisable buttons, and localization. Fixed misc. bugs and added graceful termination support by handling SIGINT signal.

@momokrono has been incredibly kind and helpful, and I'm forever grateful to have him as a contributor. Not only has he provided extensive help with code, but he's also played a big role in managing GitHub issues. - Jesai

2. Cameron Redmore (CameronRedmore):

Extensively refactored Writing Tools and added OpenAI Compatible API support, streamed responses, and the chat mode when no text is selected.

Helped add dark mode, the plain theme, tray menu fixes, and UI improvements.

Helped improve the reliability of text selection.

5. raghavdhingra24:

Made the rounded corners anti-aliased & prettier.

6. ErrorCatDev:

Significantly improved the About window, making it scrollable and cleaning things up. Also improved our .gitignore & requirements.txt.

7. Vadim Karpenko:

Helped add the start-on-boot setting!

A native Swift port created entirely by Arya Mirsepasi! This was a big endeavour and he's done an incredible job.

Over so many emails, @Aryamirsepasi has been someone I truly look up to, and it's rare to find people as kind as him. We're incredibly grateful for all his contributions here! — Jesai

1. Joaov41:

Developed the amazing picture processing functionality in Gemini for WritingTools, allowing the app to now work with images in addition to text!

2. drankush:

Fixed an issue that caused the app to fail in completing requests when the OpenAI provider was configured with a custom Base URL (e.g., for Groq or other compatible services).

3. gdmka:

- Added the change that makes the ResponseView remember the user’s preferred text size across app launches.

- Implemented the ability to set a custom provider for each command.

I welcome contributions! :D

If you'd like to improve Writing Tools, please feel free to open a Pull Request or get in touch with me (email below).

If there are major changes on your mind, it may be a good idea to get in touch before working on it.

Email: [email protected]

Made with ❤️ by a high school student. Check out my other app, Bliss AI, a free AI tutor!

Distributed under the GNU General Public License v3.0.

Alternative AI tools for WritingTools

Similar Open Source Tools

WritingTools

Writing Tools is an Apple Intelligence-inspired application for Windows, Linux, and macOS that supercharges your writing with an AI LLM. It allows users to instantly proofread, optimize text, and summarize content from webpages, YouTube videos, documents, etc. The tool is privacy-focused, open-source, and supports multiple languages. It offers powerful features like grammar correction, content summarization, and LLM chat mode, making it a versatile writing assistant for various tasks.

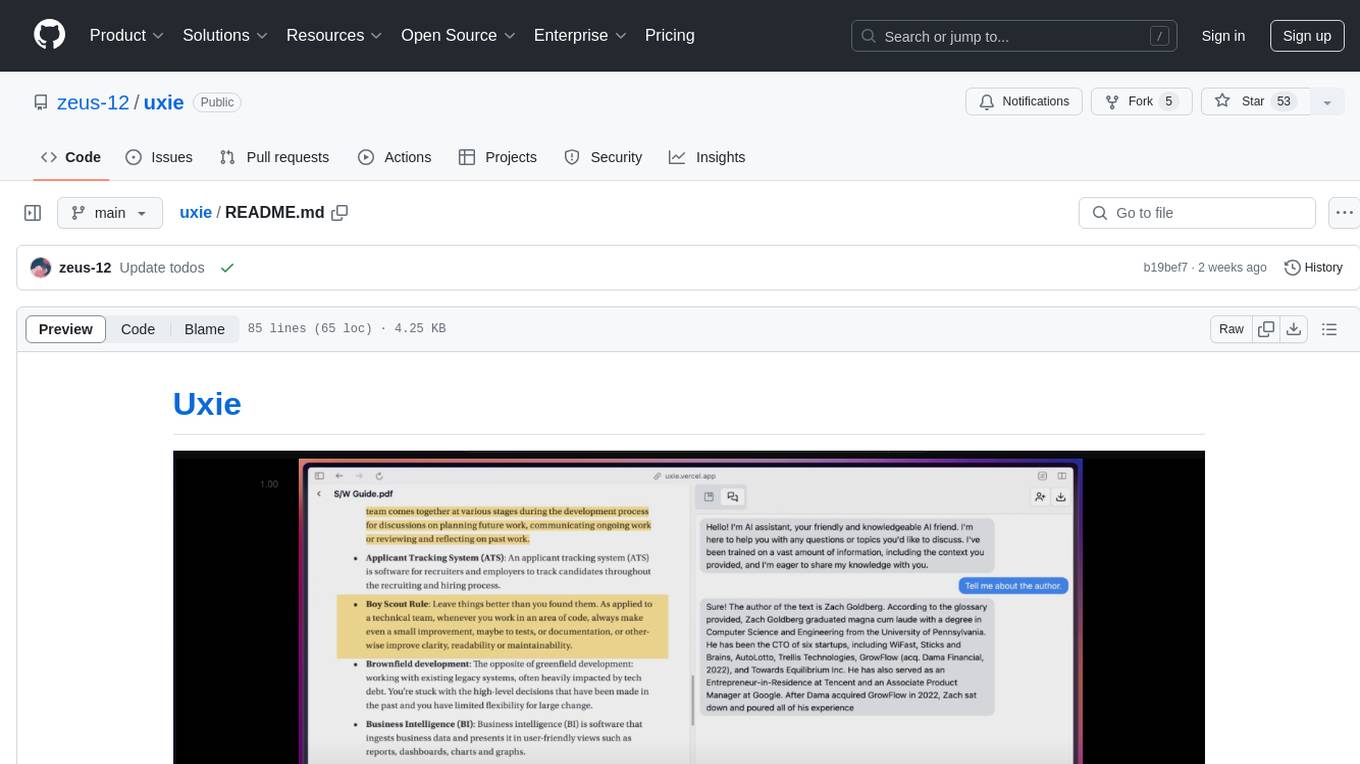

uxie

Uxie is a PDF reader app designed to revolutionize the learning experience. It offers features such as annotation, note-taking, collaboration tools, integration with LLM for enhanced learning, and flashcard generation with LLM feedback. Built using Nextjs, tRPC, Zod, TypeScript, Tailwind CSS, React Query, React Hook Form, Supabase, Prisma, and various other tools. Users can take notes, summarize PDFs, chat and collaborate with others, create custom blocks in the editor, and use AI-powered text autocompletion. The tool allows users to craft simple flashcards, test knowledge, answer questions, and receive instant feedback through AI evaluation.

AmigaGPT

AmigaGPT is a versatile ChatGPT client for AmigaOS 3.x, 4.1, and MorphOS. It brings the capabilities of OpenAI’s GPT to Amiga systems, enabling text generation, question answering, and creative exploration. AmigaGPT can generate images using DALL-E, supports speech output, and seamlessly integrates with AmigaOS. Users can customize the UI, choose fonts and colors, and enjoy a native user experience. The tool requires specific system requirements and offers features like state-of-the-art language models, AI image generation, speech capability, and UI customization.

plandex

Plandex is an open source, terminal-based AI coding engine designed for complex tasks. It uses long-running agents to break up large tasks into smaller subtasks, helping users work through backlogs, navigate unfamiliar technologies, and save time on repetitive tasks. Plandex supports various AI models, including OpenAI, Anthropic Claude, Google Gemini, and more. It allows users to manage context efficiently in the terminal, experiment with different approaches using branches, and review changes before applying them. The tool is platform-independent and runs from a single binary with no dependencies.

easydiffusion

Easy Diffusion 3.0 is a user-friendly tool for installing and using Stable Diffusion on your computer. It offers hassle-free installation, clutter-free UI, task queue, intelligent model detection, live preview, image modifiers, multiple prompts file, saving generated images, UI themes, searchable models dropdown, and supports various image generation tasks like 'Text to Image', 'Image to Image', and 'InPainting'. The tool also provides advanced features such as custom models, merge models, custom VAE models, multi-GPU support, auto-updater, developer console, and more. It is designed for both new users and advanced users looking for powerful AI image generation capabilities.

kollektiv

Kollektiv is a Retrieval-Augmented Generation (RAG) system designed to enable users to chat with their favorite documentation easily. It aims to provide LLMs with access to the most up-to-date knowledge, reducing inaccuracies and improving productivity. The system utilizes intelligent web crawling, advanced document processing, vector search, multi-query expansion, smart re-ranking, AI-powered responses, and dynamic system prompts. The technical stack includes Python/FastAPI for backend, Supabase, ChromaDB, and Redis for storage, OpenAI and Anthropic Claude 3.5 Sonnet for AI/ML, and Chainlit for UI. Kollektiv is licensed under a modified version of the Apache License 2.0, allowing free use for non-commercial purposes.

pocketpal-ai

PocketPal AI is a versatile virtual assistant tool designed to streamline daily tasks and enhance productivity. It leverages artificial intelligence technology to provide personalized assistance in managing schedules, organizing information, setting reminders, and more. With its intuitive interface and smart features, PocketPal AI aims to simplify users' lives by automating routine activities and offering proactive suggestions for optimal time management and task prioritization.

nanobrowser

Nanobrowser is an open-source AI web automation tool that runs in your browser. It is a free alternative to OpenAI Operator with flexible LLM options and a multi-agent system. Nanobrowser offers premium web automation capabilities while keeping users in complete control, with features like a multi-agent system, interactive side panel, task automation, follow-up questions, and multiple LLM support. Users can easily download and install Nanobrowser as a Chrome extension, configure agent models, and accomplish tasks such as news summary, GitHub research, and shopping research with just a sentence. The tool uses a specialized multi-agent system powered by large language models to understand and execute complex web tasks. Nanobrowser is actively developed with plans to expand LLM support, implement security measures, optimize memory usage, enable session replay, and develop specialized agents for domain-specific tasks. Contributions from the community are welcome to improve Nanobrowser and build the future of web automation.

AIWritingCompanion

AIWritingCompanion is a lightweight and versatile browser extension designed to translate text within input fields. It offers universal compatibility, multiple activation methods, and support for various translation providers like Gemini, OpenAI, and WebAI to API. Users can install it via CRX file or Git, set API key, and use it for automatic translation or via shortcut. The tool is suitable for writers, translators, students, researchers, and bloggers. AI keywords include writing assistant, translation tool, browser extension, language translation, and text translator. Users can use it for tasks like translate text, assist in writing, simplify content, check language accuracy, and enhance communication.

mikupad

mikupad is a lightweight and efficient language model front-end powered by ReactJS, all packed into a single HTML file. Inspired by the likes of NovelAI, it provides a simple yet powerful interface for generating text with the help of various backends.

gptme

Personal AI assistant/agent in your terminal, with tools for using the terminal, running code, editing files, browsing the web, using vision, and more. A great coding agent that is general-purpose to assist in all kinds of knowledge work, from a simple but powerful CLI. An unconstrained local alternative to ChatGPT with 'Code Interpreter', Cursor Agent, etc. Not limited by lack of software, internet access, timeouts, or privacy concerns if using local models.

AIOStreams

AIOStreams is a versatile tool that combines streams from various addons into one platform, offering extensive customization options. Users can change result formats, filter results by various criteria, remove duplicates, prioritize services, sort results, specify size limits, and more. The tool scrapes results from selected addons, applies user configurations, and presents the results in a unified manner. It simplifies the process of finding and accessing desired content from multiple sources, enhancing user experience and efficiency.

llm-answer-engine

This repository contains the code and instructions needed to build a sophisticated answer engine that leverages the capabilities of Groq, Mistral AI's Mixtral, Langchain.JS, Brave Search, Serper API, and OpenAI. Designed to efficiently return sources, answers, images, videos, and follow-up questions based on user queries, this project is an ideal starting point for developers interested in natural language processing and search technologies.

SAM

SAM is a native macOS AI assistant built with Swift and SwiftUI, designed for non-developers who want powerful tools in their everyday life. It provides real assistance, smart memory, voice control, image generation, and custom AI model training. SAM keeps your data on your Mac, supports multiple AI providers, and offers features for documents, creativity, writing, organization, learning, and more. It is privacy-focused, user-friendly, and accessible from various devices. SAM stands out with its privacy-first approach, intelligent memory, task execution capabilities, powerful tools, image generation features, custom AI model training, and flexible AI provider support.

agent-zero

Agent Zero is a personal and organic AI framework designed to be dynamic, organically growing, and learning as you use it. It is fully transparent, readable, comprehensible, customizable, and interactive. The framework uses the computer as a tool to accomplish tasks, with no single-purpose tools pre-programmed. It emphasizes multi-agent cooperation, complete customization, and extensibility. Communication is key in this framework, allowing users to give proper system prompts and instructions to achieve desired outcomes. Agent Zero is capable of dangerous actions and should be run in an isolated environment. The framework is prompt-based, highly customizable, and requires a specific environment to run effectively.

gurubase

Gurubase is an open-source RAG system that enables users to create AI-powered Q&A assistants ('Gurus') for various topics by integrating web pages, PDFs, YouTube videos, and GitHub repositories. It offers advanced LLM-based question answering, accurate context-aware responses through the RAG system, multiple data sources integration, easy website embedding, creation of custom AI assistants, real-time updates, personalized learning paths, and self-hosting options. Users can request Guru creation, manage existing Gurus, update datasources, and benefit from the system's features for enhancing user engagement and knowledge sharing.

For similar tasks

generative-ai-use-cases-jp

Generative AI (生成 AI) brings revolutionary potential to transform businesses. This repository demonstrates business use cases leveraging Generative AI.

AlwaysReddy

AlwaysReddy is a simple LLM assistant with no UI that you interact with entirely using hotkeys. It can easily read from or write to your clipboard, and voice chat with you via TTS and STT. Here are some of the things you can use AlwaysReddy for: - Explain a new concept to AlwaysReddy and have it save the concept (in roughly your words) into a note. - Ask AlwaysReddy "What is X called?" when you know how to roughly describe something but can't remember what it is called. - Have AlwaysReddy proofread the text in your clipboard before you send it. - Ask AlwaysReddy "From the comments in my clipboard, what do the r/LocalLLaMA users think of X?" - Quickly list what you have done today and get AlwaysReddy to write a journal entry to your clipboard before you shutdown the computer for the day.

payload-ai

The Payload AI Plugin is an advanced extension that integrates modern AI capabilities into your Payload CMS, streamlining content creation and management. It offers features like text generation, voice and image generation, field-level prompt customization, prompt editor, document analyzer, fact checking, automated content workflows, internationalization support, editor AI suggestions, and AI chat support. Users can personalize and configure the plugin by setting environment variables. The plugin is actively developed and tested with Payload version v3.2.1, with regular updates expected.

nlp-llms-resources

The 'nlp-llms-resources' repository is a comprehensive resource list for Natural Language Processing (NLP) and Large Language Models (LLMs). It covers a wide range of topics including traditional NLP datasets, data acquisition, libraries for NLP, neural networks, sentiment analysis, optical character recognition, information extraction, semantics, topic modeling, multilingual NLP, domain-specific LLMs, vector databases, ethics, costing, books, courses, surveys, aggregators, newsletters, papers, conferences, and societies. The repository provides valuable information and resources for individuals interested in NLP and LLMs.

Awesome-Segment-Anything

Awesome-Segment-Anything is a powerful tool for segmenting and extracting information from various types of data. It provides a user-friendly interface to easily define segmentation rules and apply them to text, images, and other data formats. The tool supports both supervised and unsupervised segmentation methods, allowing users to customize the segmentation process based on their specific needs. With its versatile functionality and intuitive design, Awesome-Segment-Anything is ideal for data analysts, researchers, content creators, and anyone looking to efficiently extract valuable insights from complex datasets.

fairseq

Fairseq is a sequence modeling toolkit that enables researchers and developers to train custom models for translation, summarization, language modeling, and other text generation tasks. It provides reference implementations of various sequence modeling papers covering CNN, LSTM networks, Transformer networks, LightConv, DynamicConv models, Non-autoregressive Transformers, Finetuning, and more. The toolkit supports multi-GPU training, fast generation on CPU and GPU, mixed precision training, extensibility, flexible configuration based on Hydra, and full parameter and optimizer state sharding. Pre-trained models are available for translation and language modeling with a torch.hub interface. Fairseq also offers pre-trained models and examples for tasks like XLS-R, cross-lingual retrieval, wav2vec 2.0, unsupervised quality estimation, and more.

transcriptionstream

Transcription Stream is a self-hosted diarization service that works offline, allowing users to easily transcribe and summarize audio files. It includes a web interface for file management, Ollama for complex operations on transcriptions, and Meilisearch for fast full-text search. Users can upload files via SSH or web interface, with output stored in named folders. The tool requires a NVIDIA GPU and provides various scripts for installation and running. Ports for SSH, HTTP, Ollama, and Meilisearch are specified, along with access details for SSH server and web interface. Customization options and troubleshooting tips are provided in the documentation.

For similar jobs

ChatFAQ

ChatFAQ is an open-source comprehensive platform for creating a wide variety of chatbots: generic ones, business-trained, or even capable of redirecting requests to human operators. It includes a specialized NLP/NLG engine based on a RAG architecture and customized chat widgets, ensuring a tailored experience for users and avoiding vendor lock-in.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

firecrawl

Firecrawl is an API service that takes a URL, crawls it, and converts it into clean markdown. It crawls all accessible subpages and provides clean markdown for each, without requiring a sitemap. The API is easy to use and can be self-hosted. It also integrates with Langchain and Llama Index. The Python SDK makes it easy to crawl and scrape websites in Python code.