generative-ai-use-cases-jp

Application implementation with business use cases for safely utilizing generative AI in business operations

Stars: 874

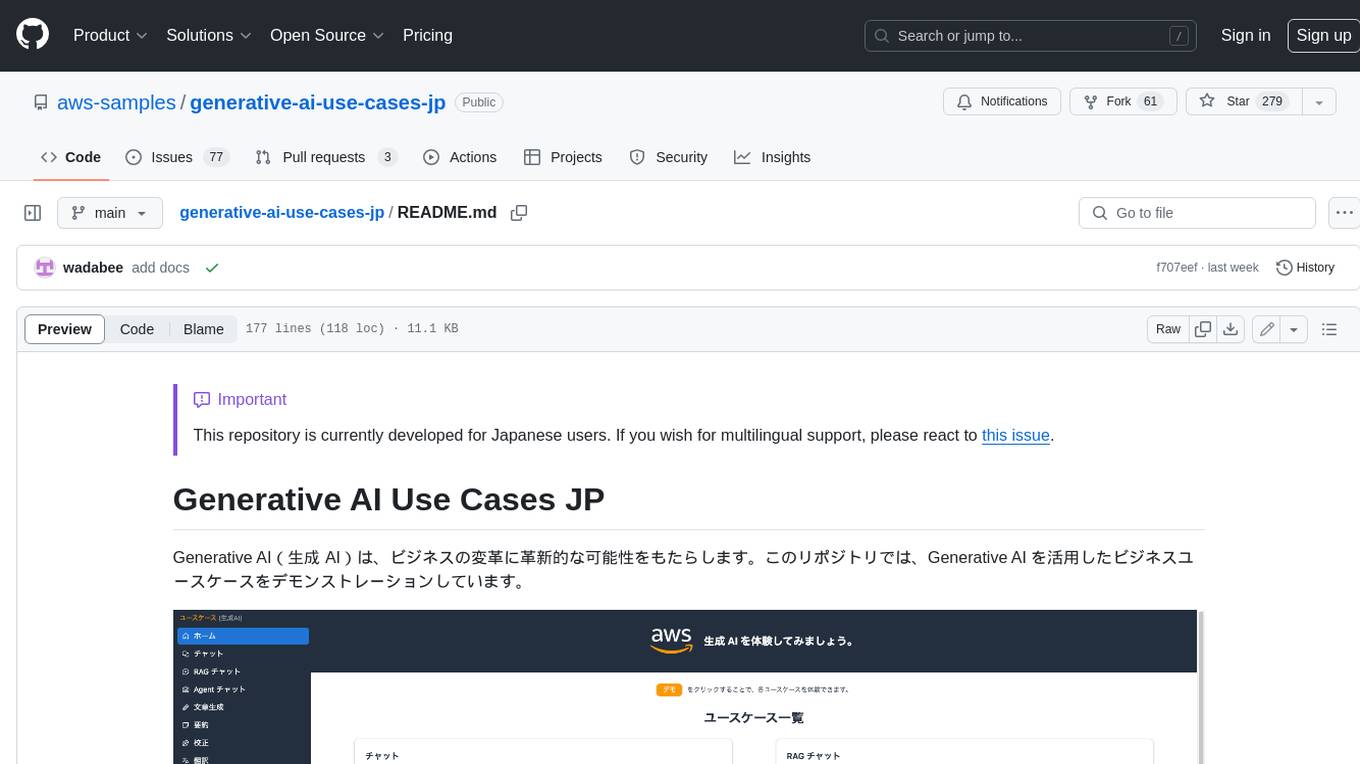

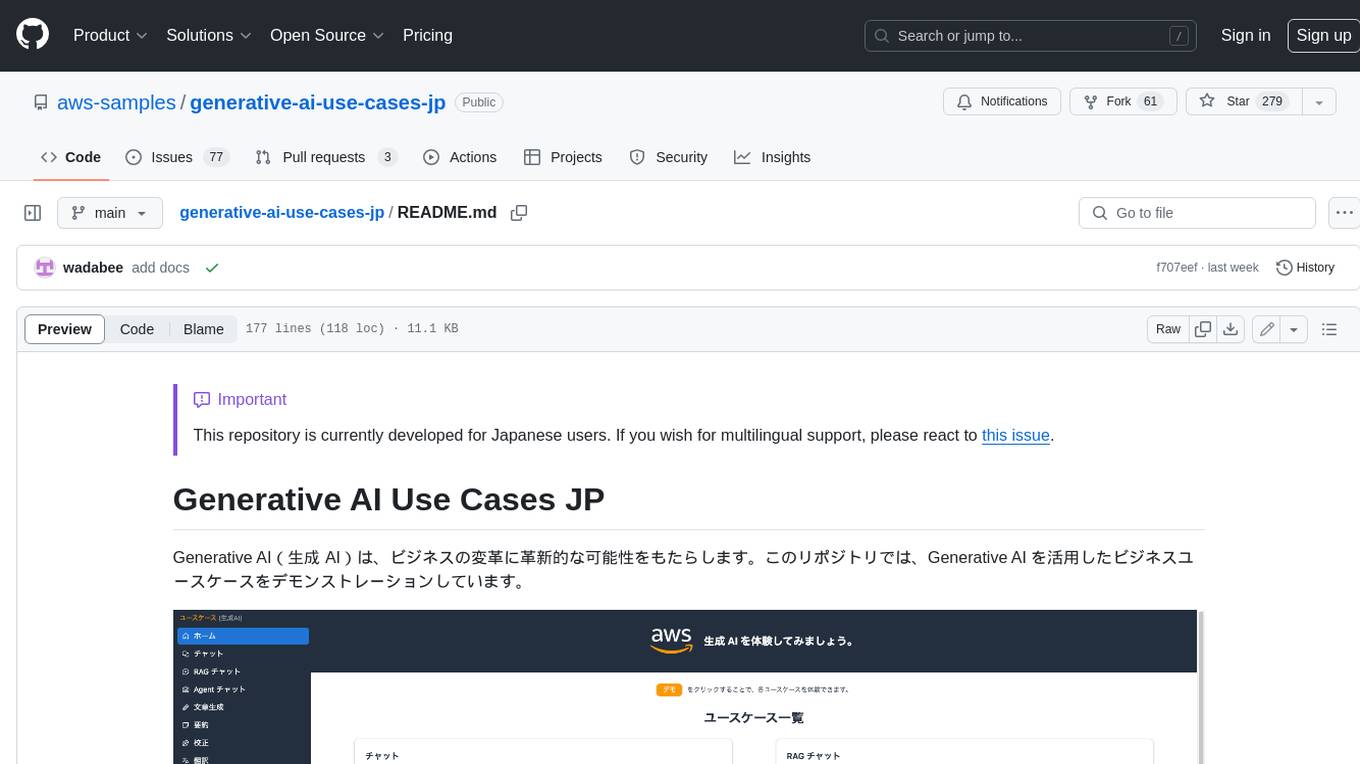

Generative AI (生成 AI) brings revolutionary potential to transform businesses. This repository demonstrates business use cases leveraging Generative AI.

README:

[!IMPORTANT] GenU は 2025/01 に v3 にアップグレードされました。いくつかの破壊的変更を伴いますので、アップグレード前に リリースノート をご確認ください。

GenU の機能やオプションを活用パターンごとに紹介いたします。網羅的なデプロイオプションに関しては こちら をご参照ください。

[!TIP] 活用パターンをクリックして詳細を確認してください

生成 AI のユースケースを体験したい

GenU は生成 AI を活用した多様なユースケースを標準で提供しています。それらのユースケースは、生成 AI を業務活用するためのアイデアの種となったり、そのまま業務で活用できるものなど、さまざまです。今後もさらにブラッシュアップされたユースケースを随時追加予定です。また、不要であれば 特定のユースケースを非表示にする オプションで非表示にすることもできます。デフォルトで提供しているユースケース一覧はこちらです。

| ユースケース | 説明 |

| チャット | 大規模言語モデル (LLM) とチャット形式で対話することができます。LLM と直接対話するプラットフォームが存在するおかげで、細かいユースケースや新しいユースケースに迅速に対応することができます。また、プロンプトエンジニアリングの検証用環境としても有効です。 |

| 文章生成 | あらゆるコンテキストで文章を生成することは LLM が最も得意とするタスクの 1 つです。記事・レポート・メールなど、あらゆる文章を生成します。 |

| 要約 | LLM は、大量の文章を要約するタスクを得意としています。ただ要約するだけでなく、文章をコンテキストとして与えた上で、必要な情報を対話形式で引き出すこともできます。例えば、契約書を読み込ませて「XXX の条件は?」「YYY の金額は?」といった情報を取得することが可能です。 |

| 執筆 | LLM は、誤字脱字のチェックだけでなく、文章の流れや内容を考慮したより客観的な視点から改善点を提案できます。人に見せる前に LLM に自分では気づかなかった点を客観的にチェックしてもらいクオリティを上げる効果が期待できます。 |

| 翻訳 | 多言語で学習した LLM は、翻訳を行うことも可能です。また、ただ翻訳するだけではなく、カジュアルさ・対象層など様々な指定されたコンテキスト情報を翻訳に反映させることが可能です。 |

| Web コンテンツ抽出 | ブログやドキュメントなどの Web コンテンツから必要な情報を抽出します。LLMによって不要な情報を除去し、整った文章として整形します。抽出したコンテンツは要約、翻訳などの別のユースケースで利用できます。 |

| 画像生成 | 画像生成 AI は、テキストや画像を元に新しい画像を生成できます。アイデアを即座に可視化することができ、デザイン作業などの効率化を期待できます。こちらの機能では、プロンプトの作成を LLM に支援してもらうことができます。 |

| 動画生成 | 動画生成 AI はテキストから短い動画を生成します。生成した動画は素材としてさまざまなシーンで活用できます。 |

| 映像分析 | マルチモーダルモデルによってテキストのみではなく、画像を入力することが可能になりました。こちらの機能では、映像の画像フレームとテキストを入力として LLM に分析を依頼します。 |

| ダイアグラム生成 | ダイアグラム生成は、あらゆるトピックに関する文章や内容を最適な図を用いて視覚化します。 テキストベースで簡単に図を生成でき、プログラマーやデザイナーでなくても効率的にフローチャートなどの図を作成できます。 |

RAG がしたい

RAG は LLM が苦手な最新の情報やドメイン知識を外部から伝えることで、本来なら回答できない内容にも答えられるようにする手法です。 社内に蓄積された PDF, Word, Excel などのファイルが情報ソースになります。 RAG は根拠に基づいた回答のみを許すため、LLM にありがちな「それっぽい間違った情報」を回答させないという効果もあります。

GenU は RAG チャットというユースケースを提供しています。 また RAG チャットの情報ソースとして Amazon Kendra と Knowledge Base の 2 種類が利用可能です。 Amazon Kendra を利用する場合は、手動で作成した S3 Bucket や Kendra Index をそのまま利用することが可能です。 Knowledge Base を利用する場合は、Advanced Parsing・チャンク戦略の選択・クエリ分解・リランキング など高度な RAG が利用可能です。 また Knowledge Base では、メタデータフィルターの設定 も可能です。 例えば「組織ごとにアクセス可能なデータソースを切り替えたい」や「UI からユーザーがフィルタを設定したい」といった要件を満たすことが可能です。

独自に作成した AI エージェントや Bedrock Flows などを社内で利用したい

GenU で エージェントを有効化すると Web 検索エージェントと Code Interpreter エージェントが作成されます。 Web 検索エージェントは、ユーザーの質問に回答するための情報を Web で検索し、回答します。例えば「AWS の GenU ってなに?」という質問に回答できます。 Code Interpreter エージェントは、ユーザーからのリクエストに応えるためにコードが実行できます。例えば「適当なダミーデータで散布図を描いて」といったリクエストに応えられます。

Web 検索エージェントと Code Interpreter エージェントはエージェントとしては基本的なものですので、中にはもっと業務に寄り添った実践的なエージェントを使いたいという要望もあると思います。 GenU では手動で作成したエージェントや別のアセットで作成したエージェントを インポートする機能 を提供しております。

GenU をエージェント活用のプラットフォームとして利用することで、GenU が提供する 豊富なセキュリティオプション や SAML認証 などを活用し、実践的なエージェントを社内に普及させることができます。 また、オプションで 不要な標準ユースケースを非表示 にしたり、エージェントをインライン表示 することで、よりエージェントに特化したプラットフォームとして GenU をご利用いただくことが可能です。

Bedrock Flows に関しても同様に インポート機能 がございますので、ぜひご活用ください。

独自のユースケースを作成したい

GenU はプロンプトテンプレートを自然言語で記述することで独自のユースケースを作成できる「ユースケースビルダー」という機能を提供しています。

プロンプトテンプレートだけで独自のユースケース画面が自動生成されるため、GenU 本体のコード変更は一切不要です。

作成したユースケースは、個人利用だけではなく、アプリケーションにログインできる全ユーザーに共有することもできます。

ユースケースビルダーは不要であれば無効化することも可能です。

ユースケースビルダーについての詳細は、ぜひこちらのブログをご覧ください。

ユースケースビルダーではフォームにテキストを入力したりファイルを添付するユースケースが作成できますが、要件によってはチャットの UI が良い場合もあると思います。

そのようなケースでは「チャット」ユースケースのシステムプロンプト保存機能をご活用ください。

システムプロンプトを保存しておくことで、ワンクリックで業務に必要な "ボット" が作成できます。

例えば「ソースコードを入力するとひたすらレビューしてくれるボット」や「入力した内容からひたすらメールアドレスを抽出してくれるボット」などが作成できます。

また、チャットの会話履歴はログインユーザーにシェアすることが可能で、シェアされた会話履歴からシステムプロンプトをインポートすることもできます。

GenU は OSS ですので、カスタマイズして独自のユースケースを追加するということも可能です。

その場合は GenU の main ブランチとのコンフリクトにお気をつけてください。

[!IMPORTANT]

/packages/cdk/cdk.jsonに記載されているmodelRegionリージョンのmodelIds(テキスト生成) 及びimageGenerationModelIds(画像生成) を有効化してください。(Amazon Bedrock の Model access 画面)

GenU のデプロイには AWS Cloud Development Kit(以降 CDK)を利用します。CDK の実行環境が用意できない場合は、以下のデプロイ方法を参照してください。

まず、以下のコマンドを実行してください。全てのコマンドはリポジトリのルートで実行してください。

npm ciCDK を利用したことがない場合、初回のみ Bootstrap 作業が必要です。すでに Bootstrap された環境では以下のコマンドは不要です。

npx -w packages/cdk cdk bootstrap続いて、以下のコマンドで AWS リソースをデプロイします。デプロイが完了するまで、お待ちください(20 分程度かかる場合があります)。

# 通常デプロイ

npm run cdk:deploy

# 高速デプロイ (作成されるリソースを事前確認せずに素早くデプロイ)

npm run cdk:deploy:quickGenU をご利用いただく際の、構成と料金試算例を公開しております。(従量課金制となっており、実際の料金はご利用内容により変動いたします。)

| Customer | Quote |

|---|---|

|

株式会社やさしい手 GenU のおかげで、利用者への付加価値提供と従業員の業務効率向上が実現できました。従業員にとって「いままでの仕事」が楽しい仕事に変化していく「サクサクからワクワクへ」更に進化を続けます! ・事例の詳細を見る ・事例のページを見る |

|

タキヒヨー株式会社 生成 AI を活用し社内業務効率化と 450 時間超の工数削減を実現。Amazon Bedrock を衣服デザイン等に適用、デジタル人材育成を推進。 ・事例のページを見る |

|

株式会社サルソニード ソリューションとして用意されている GenU を活用することで、生成 AI による業務プロセスの改善に素早く取り掛かることができました。 ・事例の詳細を見る ・適用サービス |

|

株式会社タムラ製作所 AWS が Github に公開しているアプリケーションサンプルは即テスト可能な機能が豊富で、そのまま利用することで自分たちにあった機能の選定が難なくでき、最終システムの開発時間を短縮することができました。 ・事例の詳細を見る |

|

株式会社JDSC Amazon Bedrock ではセキュアにデータを用い LLM が活用できます。また、用途により最適なモデルを切り替えて利用できるので、コストを抑えながら速度・精度を高めることができました。 ・事例の詳細を見る |

|

アイレット株式会社 株式会社バンダイナムコアミューズメントの生成 AI 活用に向けて社内のナレッジを蓄積・体系化すべく、AWS が提供している Generative AI Use Cases JP を活用したユースケースサイトを開発。アイレット株式会社が本プロジェクトの設計・構築・開発を支援。 ・株式会社バンダイナムコアミューズメント様のクラウドを活用した導入事例 |

|

株式会社アイデアログ M従来の生成 AI ツールよりもさらに業務効率化ができていると感じます。入出力データをモデルの学習に使わない Amazon Bedrock を使っているので、セキュリティ面も安心です。 ・事例の詳細を見る ・適用サービス |

|

株式会社エスタイル GenU を活用して短期間で生成 AI 環境を構築し、社内のナレッジシェアを促進することができました。 ・事例の詳細を見る |

|

株式会社明電舎 Amazon Bedrock や Amazon Kendra など AWS のサービスを利用することで、生成 AI の利用環境を迅速かつセキュアに構築することができました。議事録の自動生成や社内情報の検索など、従業員の業務効率化に貢献しています。 ・事例の詳細を見る |

|

三協立山株式会社 社内に埋もれていた情報が Amazon Kendra の活用で素早く探せるようになりました。GenU を参考にすることで求めていた議事録生成などの機能を迅速に提供できました。 ・事例の詳細を見る |

|

オイシックス・ラ・大地株式会社 GenU を活用したユースケースの開発プロジェクトを通して、必要なリソース、プロジェクト体制、外部からの支援、人材育成などを把握するきっかけとなり、生成 AI の社内展開に向けたイメージを明確につかむことができました。 ・事例のページを見る |

|

株式会社サンエー Amazon Bedrock を活用することでエンジニアの生産性が劇的に向上し、内製で構築してきた当社特有の環境のクラウドへの移行を加速できました。 ・事例の詳細を見る ・事例のページを見る |

活用事例を掲載させて頂ける場合は、Issueよりご連絡ください。

- ブログ: 生成 AI アプリをノーコードで作成・社内配布できる GenU ユースケースビルダー

- ブログ: RAG プロジェクトを成功させる方法 #1 ~ あるいは早く失敗しておく方法 ~

- ブログ: RAG チャットで精度向上のためのデバッグ方法

- ブログ: Amazon Q Developer CLI を利用してノーコーディングで GenU をカスタマイズ

- ブログ: Generative AI Use Cases JP をカスタマイズする方法

- ブログ: 無茶振りは生成 AI に断ってもらおう ~ ブラウザに生成 AI を組み込んでみた ~

- ブログ: Amazon Bedrock で Interpreter を開発!

- 動画: 生成 AI ユースケースを考え倒すための Generative AI Use Cases JP (GenU) の魅力と使い方

See CONTRIBUTING for more information.

This library is licensed under the MIT-0 License. See the LICENSE file.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for generative-ai-use-cases-jp

Similar Open Source Tools

generative-ai-use-cases-jp

Generative AI (生成 AI) brings revolutionary potential to transform businesses. This repository demonstrates business use cases leveraging Generative AI.

LLMOne

LLMOne is an open-source, lightweight enterprise-level platform for deploying and serving large language models. It aims to address pain points in traditional large model private deployment such as long cycles, complex configurations, performance challenges, and high operational costs. LLMOne simplifies the deployment process with highly automated workflows and optimized runtime environments, ensuring enterprise-level performance and stability. It caters to developers, manufacturers, and users of large language models, providing features like rapid deployment, professional inference performance, broad compatibility with AI hardware, flexible model and application management, visual operational monitoring, and an open application ecosystem.

LxgwZhenKai

LxgwZhenKai is a Chinese font derived from LXGW WenKai, manually adjusted for boldness and supplemented with AI assistance for character additions. The font aims to provide a comfortable reading experience on screens while also serving as a bold version of LXGW WenKai for temporary use. It contains over 13,000 characters, including common simplified and traditional Chinese characters, and is licensed under SIL Open Font License 1.1. Users are allowed to freely use, distribute, modify, and create derivative fonts based on LxgwZhenKai.

XianTu

XianTu is an AI-driven immersive cultivation text adventure game that features dynamic storytelling with multiple large models, a complete cultivation system including realm breakthroughs, cultivation of techniques, equipment refining, and NPC interactions, intelligent decision-making system based on multiple dimensions, multiple save file management with cloud sync support, open world exploration with character relationship networks, cross-platform compatibility with dual themes, and compatibility with SillyTavern embedded environment and standalone web version.

GoMaxAI-ChatGPT-Midjourney-Pro

GoMaxAI Pro is an AI-powered application for personal, team, and enterprise private operations. It supports various models like ChatGPT, Claude, Gemini, Kimi, Wenxin Yiyuan, Xunfei Xinghuo, Tsinghua Zhipu, Suno-v3.5, and Luma-video. The Pro version offers a new UI interface, member points system, management backend, homepage features, support for various content formats, AI video capabilities, SAAS multi-opening function, bug fixes, and more. It is built using web frontend with Vue3, mobile frontend with Uniapp, management frontend with Vue3, backend with Nodejs, and uses MySQL5.7(+) + Redis for data support. It can be deployed on Linux, Windows, or MacOS, with data storage options including local storage, Aliyun OSS, Tencent Cloud COS, and Chevereto image bed.

forksilly.doc

ForkSilly.doc is a repository mainly for storing documentation of ForkSilly, an Android project developed using React Native/Expo. It is suitable for users with experience in SillyTavern. The project is self-shared and may not accept feature requests. It is designed for pure text cards, illustration cards, and Stable Diffusion text-image. It is compatible with SillyTavern V2 character cards, world books, regex, presets, and chat records. Users can import and export at any time. The tool supports various customization options such as chat font, background image, and quick toggle of preset entries. It also allows the use of various OpenAI-compatible APIs and provides built-in storage management features. Users can utilize text-image functionality and access free text-image services like pollinations.ai. Additionally, it supports Stable Diffusion text-image features and integration with silicon-based flow and Gemini embedding models. The tool does not support TTS or connecting to NAI.

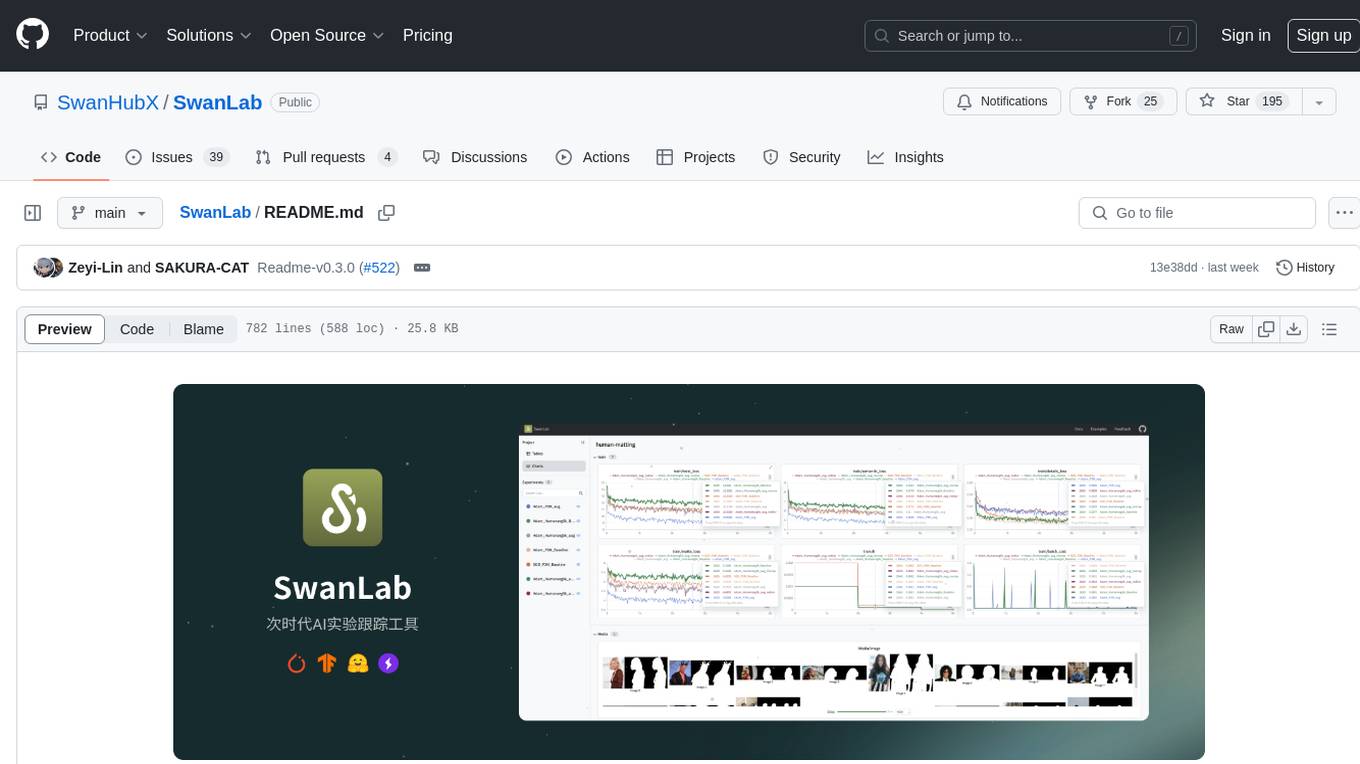

SwanLab

SwanLab is an open-source, lightweight AI experiment tracking tool that provides a platform for tracking, comparing, and collaborating on experiments, aiming to accelerate the research and development efficiency of AI teams by 100 times. It offers a friendly API and a beautiful interface, combining hyperparameter tracking, metric recording, online collaboration, experiment link sharing, real-time message notifications, and more. With SwanLab, researchers can document their training experiences, seamlessly communicate and collaborate with collaborators, and machine learning engineers can develop models for production faster.

goodsKill

The 'goodsKill' project aims to build a complete project framework integrating good technologies and development techniques, mainly focusing on backend technologies. It provides a simulated flash sale project with unified flash sale simulation request interface. The project uses SpringMVC + Mybatis for the overall technology stack, Dubbo3.x for service intercommunication, Nacos for service registration and discovery, and Spring State Machine for data state transitions. It also integrates Spring AI service for simulating flash sale actions.

AI-Vtuber

AI-VTuber is a highly customizable AI VTuber project that integrates with Bilibili live streaming, uses Zhifu API as the language base model, and includes intent recognition, short-term and long-term memory, cognitive library building, song library creation, and integration with various voice conversion, voice synthesis, image generation, and digital human projects. It provides a user-friendly client for operations. The project supports virtual VTuber template construction, multi-person device template management, real-time switching of virtual VTuber templates, and offers various practical tools such as video/audio crawlers, voice recognition, voice separation, voice synthesis, voice conversion, AI drawing, and image background removal.

Awesome-Chinese-LLM

Analyze the following text from a github repository (name and readme text at end) . Then, generate a JSON object with the following keys and provide the corresponding information for each key, ,'for_jobs' (List 5 jobs suitable for this tool,in lowercase letters), 'ai_keywords' (keywords of the tool,in lowercase letters), 'for_tasks' (list of 5 specific tasks user can use this tool to do,in less than 3 words,Verb + noun form,in daily spoken language,in lowercase letters).Answer in english languagesname:Awesome-Chinese-LLM readme:# Awesome Chinese LLM   An Awesome Collection for LLM in Chinese 收集和梳理中文LLM相关    自ChatGPT为代表的大语言模型(Large Language Model, LLM)出现以后,由于其惊人的类通用人工智能(AGI)的能力,掀起了新一轮自然语言处理领域的研究和应用的浪潮。尤其是以ChatGLM、LLaMA等平民玩家都能跑起来的较小规模的LLM开源之后,业界涌现了非常多基于LLM的二次微调或应用的案例。本项目旨在收集和梳理中文LLM相关的开源模型、应用、数据集及教程等资料,目前收录的资源已达100+个! 如果本项目能给您带来一点点帮助,麻烦点个⭐️吧~ 同时也欢迎大家贡献本项目未收录的开源模型、应用、数据集等。提供新的仓库信息请发起PR,并按照本项目的格式提供仓库链接、star数,简介等相关信息,感谢~

MaiBot

MaiBot is an interactive intelligent agent based on a large language model. It aims to be an 'entity' active in QQ group chats, focusing on human-like interactions. It features personification in language style, behavior planning, expression learning, plugin system for unlimited extensions, and emotion expression. The project's design philosophy emphasizes creating a 'life form' in group chats that feels real rather than perfect, with the goal of providing companionship through an AI that makes mistakes and has its own perceptions and thoughts. The code is open-source, but the runtime data of MaiBot is intended to remain closed to maintain its autonomy and conversational nature.

99AI

99AI is a commercializable AI web application based on NineAI 2.4.2 (no authorization, no backdoors, no piracy, integrated front-end and back-end integration packages, supports Docker rapid deployment). The uncompiled source code is temporarily closed. Compared with the stable version, the development version is faster.

aituber-kit

AITuber-Kit is a tool that enables users to interact with AI characters, conduct AITuber live streams, and engage in external integration modes. Users can easily converse with AI characters using various LLM APIs, stream on YouTube with AI character reactions, and send messages to server apps via WebSocket. The tool provides settings for API keys, character configurations, voice synthesis engines, and more. It supports multiple languages and allows customization of VRM models and background images. AITuber-Kit follows the MIT license and offers guidelines for adding new languages to the project.

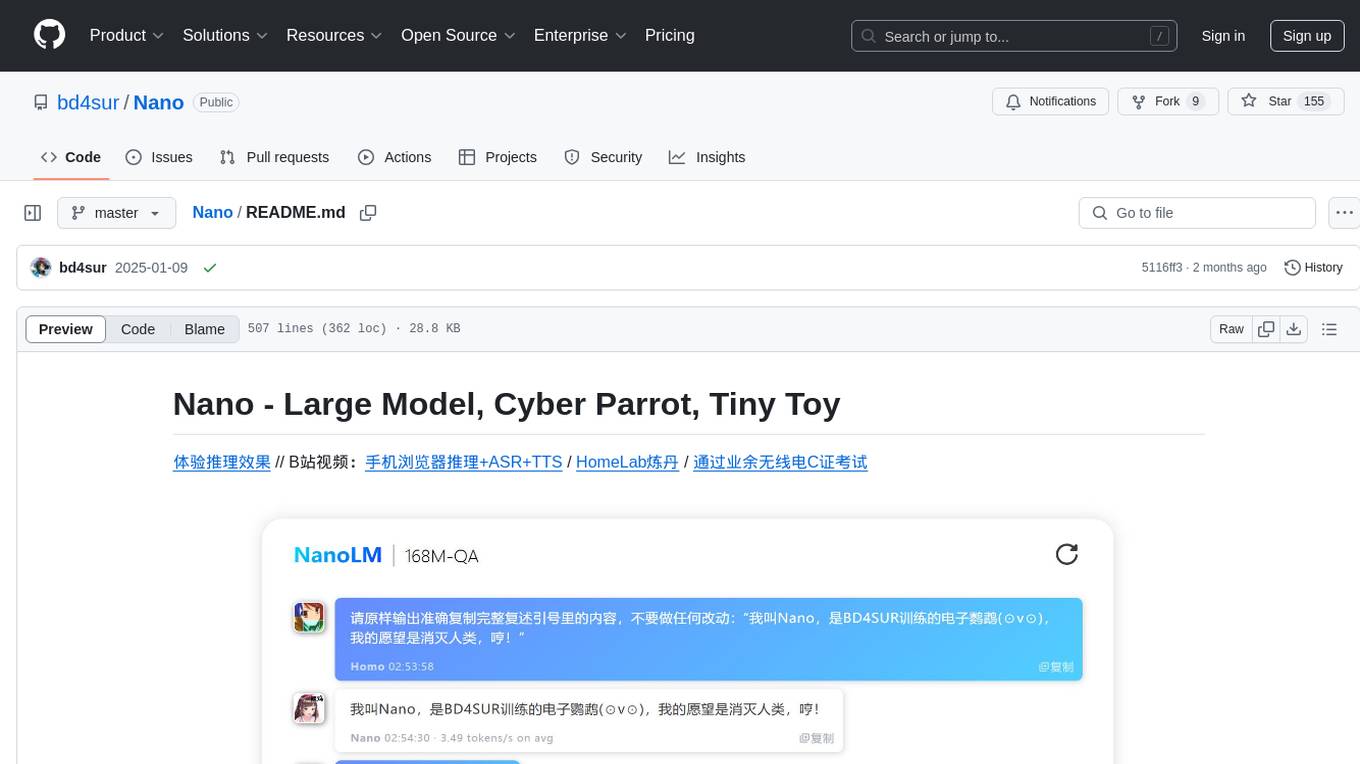

Nano

Nano is a Transformer-based autoregressive language model for personal enjoyment, research, modification, and alchemy. It aims to implement a specific and lightweight Transformer language model based on PyTorch, without relying on Hugging Face. Nano provides pre-training and supervised fine-tuning processes for models with 56M and 168M parameters, along with LoRA plugins. It supports inference on various computing devices and explores the potential of Transformer models in various non-NLP tasks. The repository also includes instructions for experiencing inference effects, installing dependencies, downloading and preprocessing data, pre-training, supervised fine-tuning, model conversion, and various other experiments.

Tianji

Tianji is a free, non-commercial artificial intelligence system developed by SocialAI for tasks involving worldly wisdom, such as etiquette, hospitality, gifting, wishes, communication, awkwardness resolution, and conflict handling. It includes four main technical routes: pure prompt, Agent architecture, knowledge base, and model training. Users can find corresponding source code for these routes in the tianji directory to replicate their own vertical domain AI applications. The project aims to accelerate the penetration of AI into various fields and enhance AI's core competencies.

HaE

HaE is a framework project in the field of network security (data security) that combines artificial intelligence (AI) large models to achieve highlighting and information extraction of HTTP messages (including WebSocket). It aims to reduce testing time, focus on valuable and meaningful messages, and improve vulnerability discovery efficiency. The project provides a clear and visual interface design, simple interface interaction, and centralized data panel for querying and extracting information. It also features built-in color upgrade algorithm, one-click export/import of data, and integration of AI large models API for optimized data processing.

For similar tasks

generative-ai-use-cases-jp

Generative AI (生成 AI) brings revolutionary potential to transform businesses. This repository demonstrates business use cases leveraging Generative AI.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

LocalAI

LocalAI is a free and open-source OpenAI alternative that acts as a drop-in replacement REST API compatible with OpenAI (Elevenlabs, Anthropic, etc.) API specifications for local AI inferencing. It allows users to run LLMs, generate images, audio, and more locally or on-premises with consumer-grade hardware, supporting multiple model families and not requiring a GPU. LocalAI offers features such as text generation with GPTs, text-to-audio, audio-to-text transcription, image generation with stable diffusion, OpenAI functions, embeddings generation for vector databases, constrained grammars, downloading models directly from Huggingface, and a Vision API. It provides a detailed step-by-step introduction in its Getting Started guide and supports community integrations such as custom containers, WebUIs, model galleries, and various bots for Discord, Slack, and Telegram. LocalAI also offers resources like an LLM fine-tuning guide, instructions for local building and Kubernetes installation, projects integrating LocalAI, and a how-tos section curated by the community. It encourages users to cite the repository when utilizing it in downstream projects and acknowledges the contributions of various software from the community.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers: * An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VSCode, etc.). * A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant. * Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI. * Local model support through GPT4All, enabling use of generative AI models on consumer grade machines with ease and privacy.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules: * **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers). * **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG). * **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task. The different components can be composed together using the LangChain Expression Language (LCEL).

infinity

Infinity is an AI-native database designed for LLM applications, providing incredibly fast full-text and vector search capabilities. It supports a wide range of data types, including vectors, full-text, and structured data, and offers a fused search feature that combines multiple embeddings and full text. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. It achieves high performance, with 0.1 milliseconds query latency on million-scale vector datasets and up to 15K QPS.

For similar jobs

ChatFAQ

ChatFAQ is an open-source comprehensive platform for creating a wide variety of chatbots: generic ones, business-trained, or even capable of redirecting requests to human operators. It includes a specialized NLP/NLG engine based on a RAG architecture and customized chat widgets, ensuring a tailored experience for users and avoiding vendor lock-in.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.

glide

Glide is a cloud-native LLM gateway that provides a unified REST API for accessing various large language models (LLMs) from different providers. It handles LLMOps tasks such as model failover, caching, key management, and more, making it easy to integrate LLMs into applications. Glide supports popular LLM providers like OpenAI, Anthropic, Azure OpenAI, AWS Bedrock (Titan), Cohere, Google Gemini, OctoML, and Ollama. It offers high availability, performance, and observability, and provides SDKs for Python and NodeJS to simplify integration.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.