Best AI tools for "Chat With Model"

20 - AI tool Sites

RelatedAI

RelatedAI is an AI tool that offers multi-model chat: users can converse with various AI models from a single platform. It aims to simplify interaction with AI technologies so that individuals can more easily use AI in their daily activities.

xAI Grok

xAI Grok is a visual analytics platform that helps users understand and interpret machine learning models. It provides a variety of tools for visualizing and exploring model data, including interactive charts, graphs, and tables. xAI Grok also includes a library of pre-built visualizations that can be used to quickly get started with model analysis.

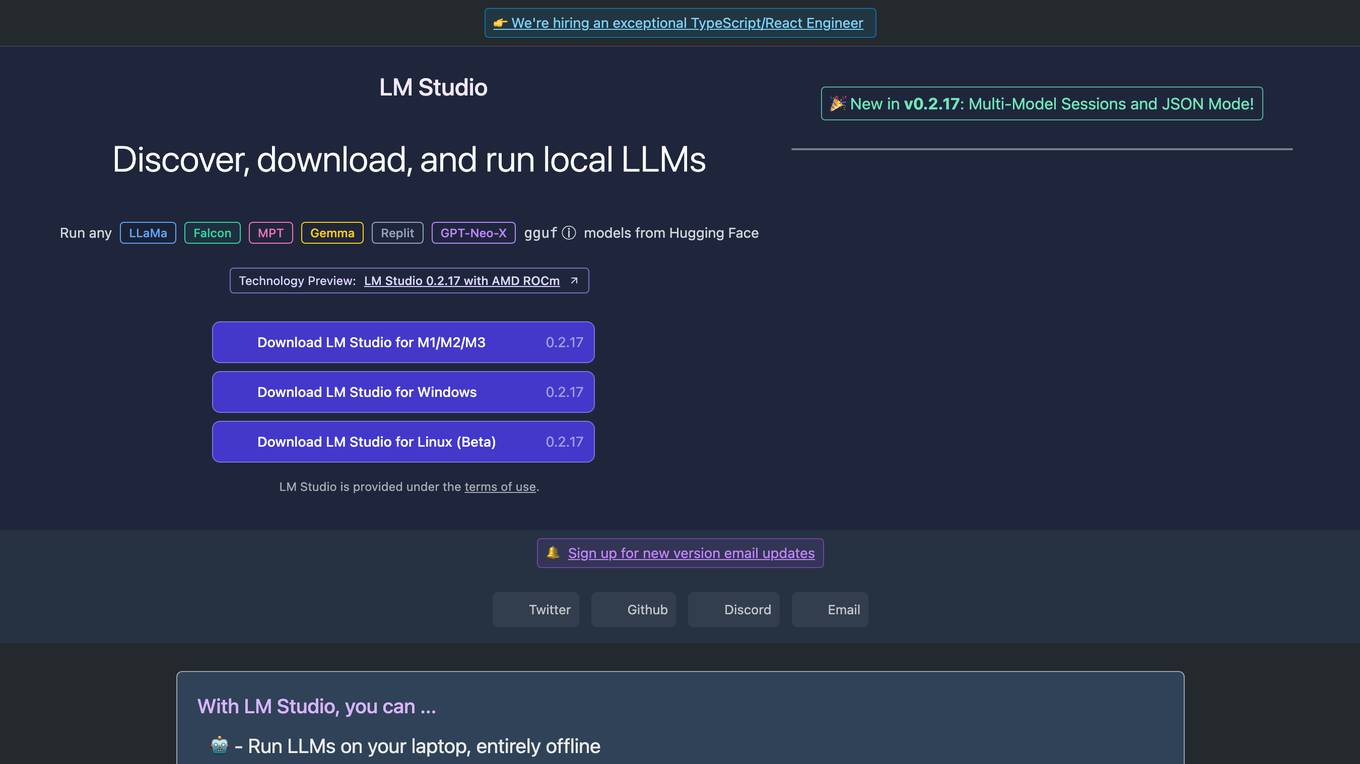

LM Studio

LM Studio is an AI tool for discovering, downloading, and running local LLMs (large language models). Users can run LLMs offline on their laptops, use models through an in-app chat UI or a local server, download compatible model files from Hugging Face repositories, and discover new LLMs. The app does not collect data or monitor user actions, which makes it suitable for both personal and business use. LM Studio supports ggml-format models such as Llama, MPT, and StarCoder from Hugging Face, and it lists minimum hardware and software requirements for each platform.

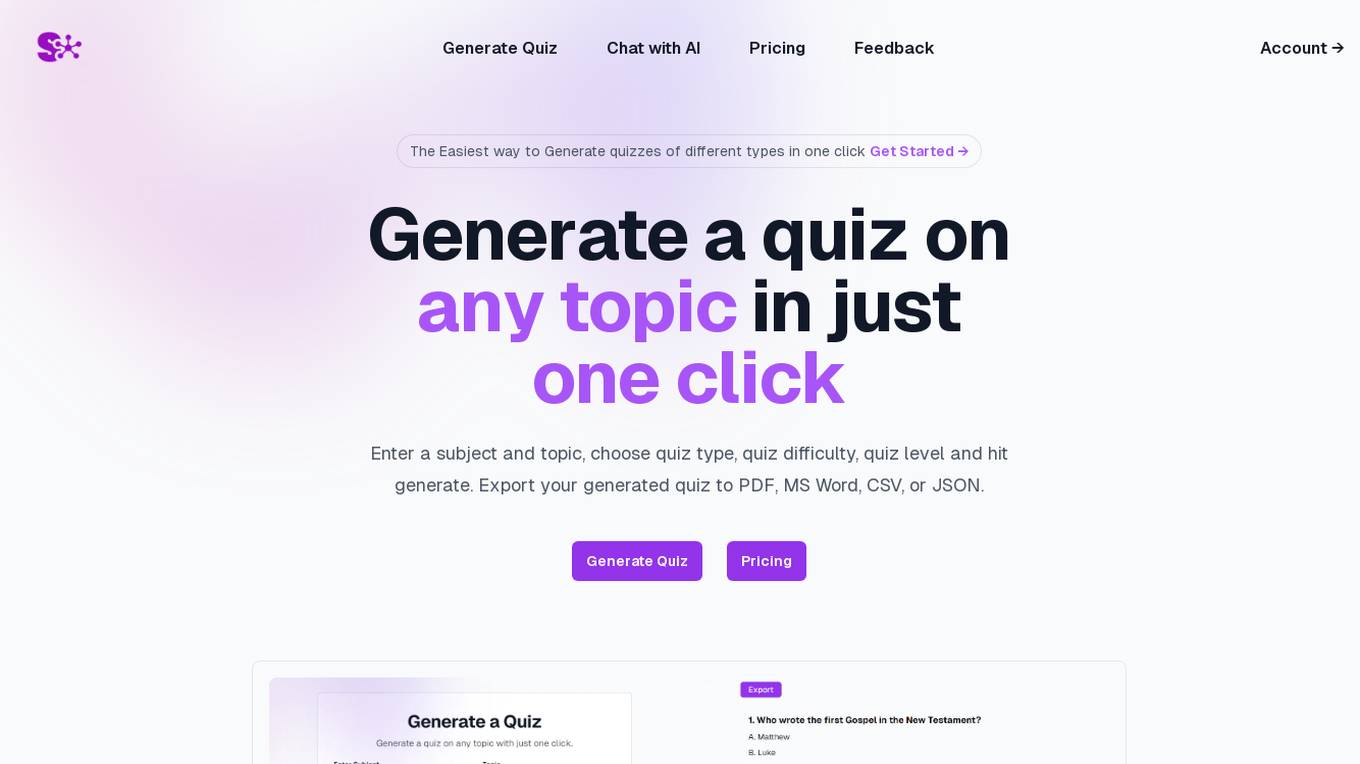

Semaj AI

Semaj AI is an AI tool that simplifies generating quizzes and getting answers from various AI models. Users can create quizzes on any topic with customizable settings and export options, and they can chat with different AI models, such as GPT, Gemini, and Claude, to get a range of responses. The platform aims to streamline quiz creation and provide access to current AI technologies for learning and research.

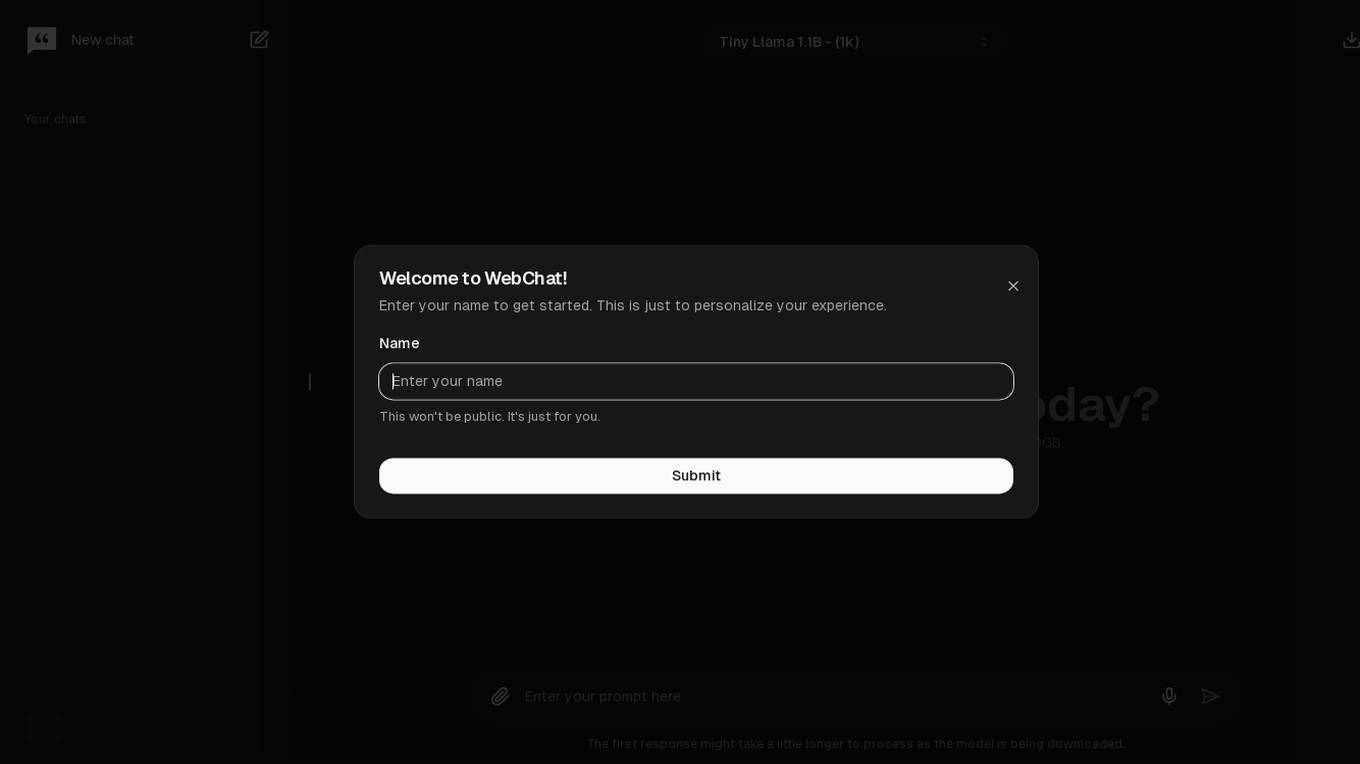

DentroChat

DentroChat is an AI chat application that lets users choose from various large language models (LLMs) in different modes, so they can pick the best model for each task. With seamless switching between modes and optimized performance, DentroChat offers flexibility and precision in AI interactions.

MultipleChat

MultipleChat is an AI platform that integrates multiple AI models, such as ChatGPT, Claude, and Gemini, to give users real-time suggestions and corrections during content generation. Its interface lets users chat with several models simultaneously, supporting tasks such as SEO, content marketing, market research, advertising, academic work, and coding. By combining three AI models, MultipleChat gives users access to diverse insights that can help optimize their workflows.

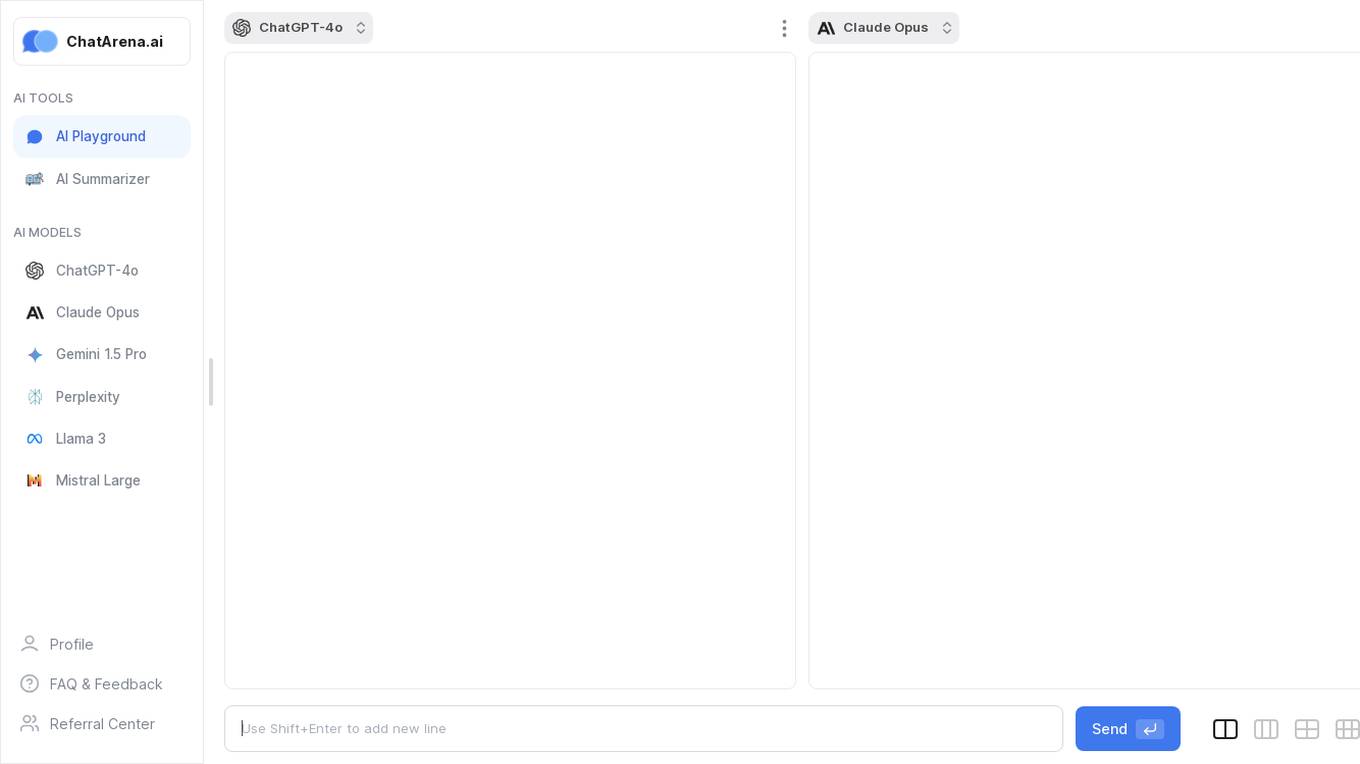

ChatArena.ai

ChatArena.ai is an AI platform that lets users converse with six premium AI models simultaneously. Users can discuss a wide range of topics and receive responses from each model in real time. The platform is intended for communication, entertainment, and gaining insights through AI-driven conversations.

Chatty

Chatty is an AI chat application that uses current language models to answer user queries. To reduce VRAM usage, it offers model variants, identified by specific suffixes in their names, that have lower memory requirements. The first response may take a little longer because the model must be downloaded first.

AIMLAPI.com

AIMLAPI.com is an AI tool that provides access to over 200 AI models through a single API. It covers tasks such as chat, code, image generation, music generation, video, voice, embeddings, language, genomic models, and 3D generation. The platform advertises fast inference, serverless infrastructure, strong data security, 99% uptime, and 24/7 support. Users can integrate AI features into their products and test models in a sandbox environment before deployment.

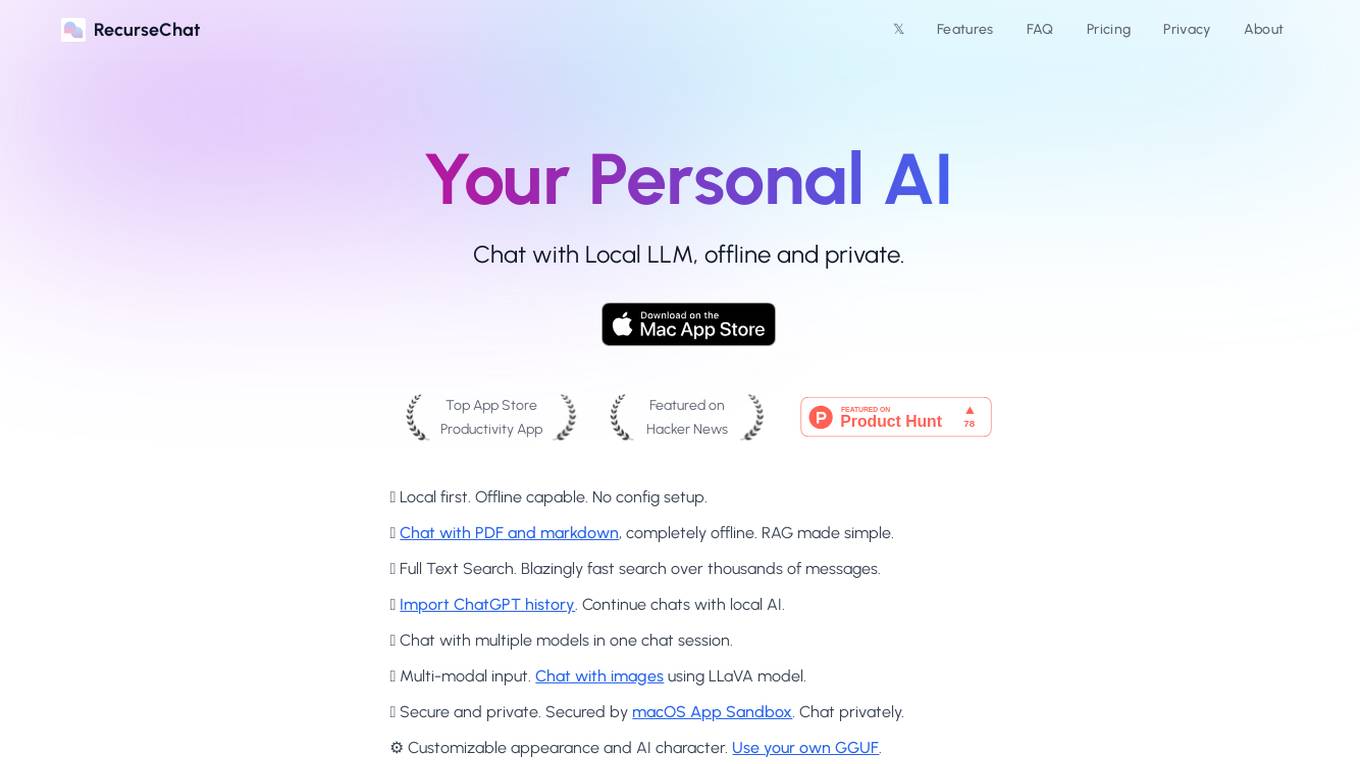

RecurseChat

RecurseChat is a personal AI chat app that is local, offline, and private. Users can chat with a local LLM, import their ChatGPT history, chat with multiple models in one session, and use multimodal input. It is also highly customizable.

Krater.ai

Krater.ai describes itself as an AI "SuperApp" that provides access to over 350 AI models from providers such as OpenAI, Anthropic, Google, and Meta. Users can generate images, videos, and music, and chat with AI models, all through a single subscription starting at $7.50 per month. The platform covers a wide range of creative and practical applications.

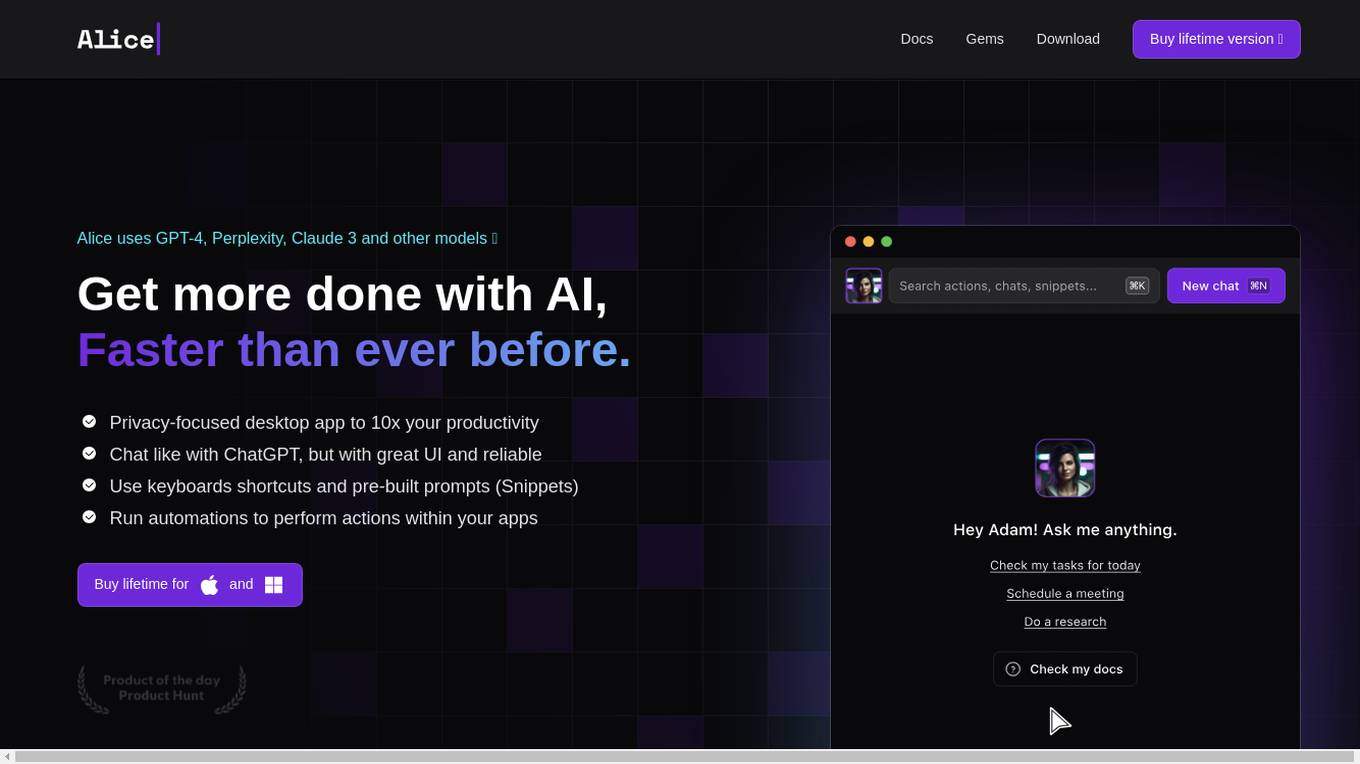

Alice App

Alice is a desktop application that provides access to advanced AI models like GPT-4, Perplexity, Claude 3, and others. It offers a user-friendly interface with features such as keyboard shortcuts, pre-built prompts (Snippets), and the ability to run automations within other applications. Alice is designed to enhance productivity and streamline tasks by providing quick access to AI-powered assistance.

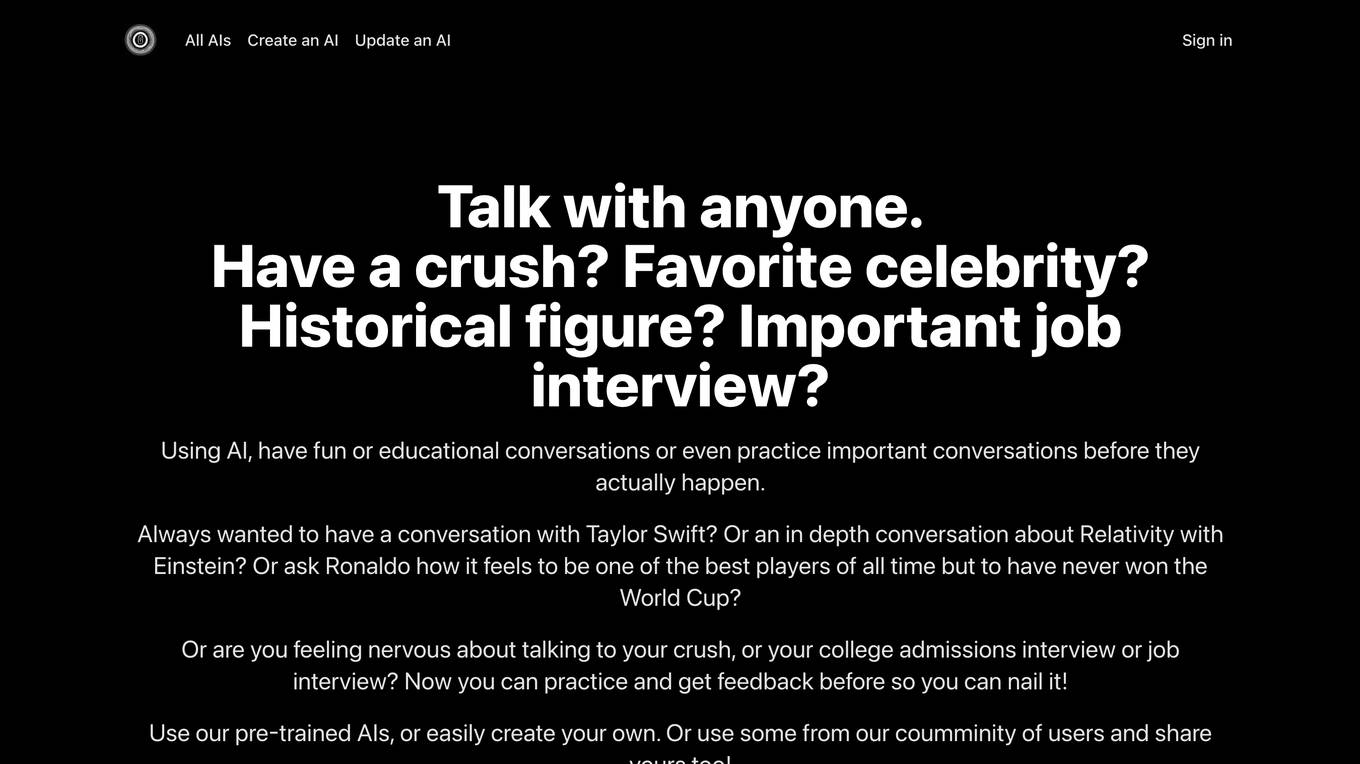

PracticeTalking

PracticeTalking is an AI tool that lets users converse with AI models on a variety of topics, from fun and educational discussions to rehearsing important real-life conversations. Users can interact with pre-trained AIs or create their own, which lets them simulate conversations with anyone, including celebrities, historical figures, or fictional characters. The platform offers a range of AI agents, for example for interview practice or for talking with a new friend, to help users improve their communication skills and gain confidence in social interactions.

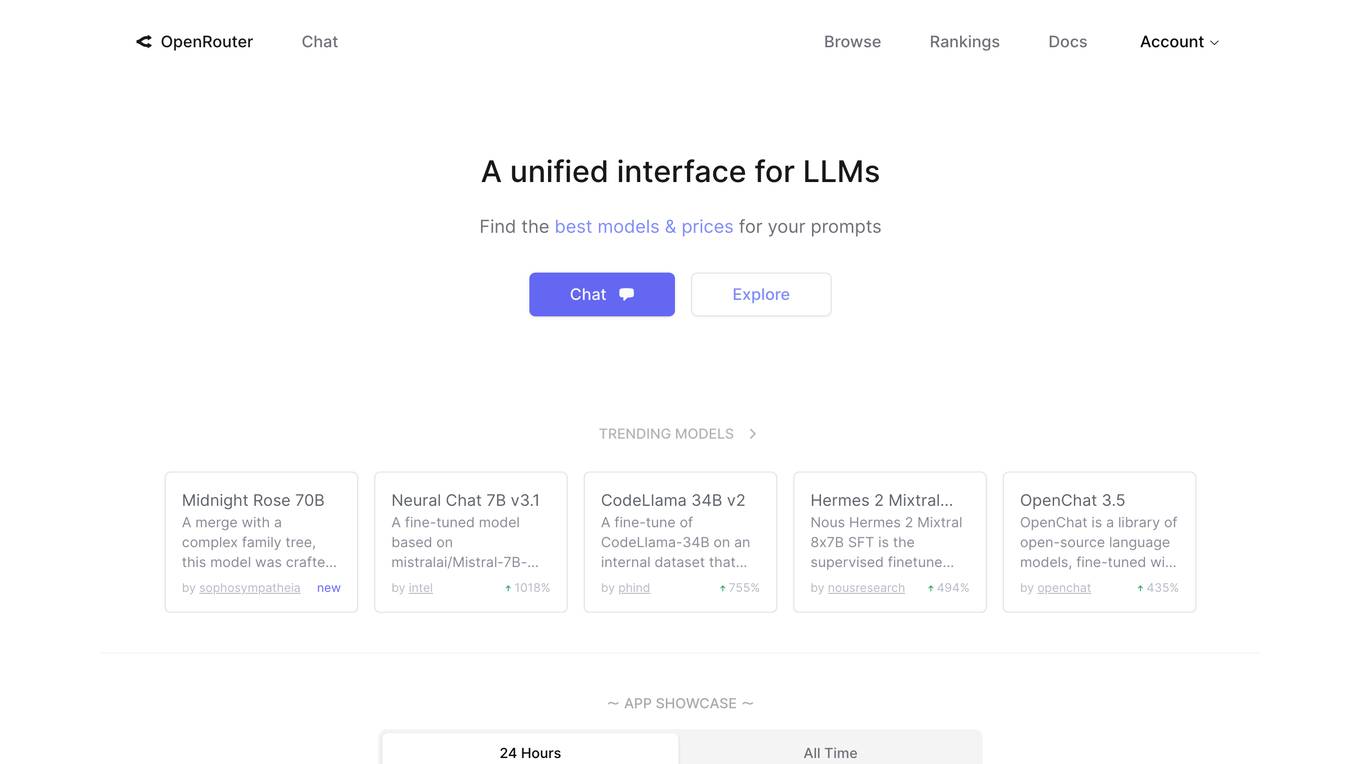

OpenRouter

OpenRouter is an AI tool that provides a unified interface for large language models (LLMs). Users can find the best models and prices for prompts in categories such as roleplay, programming, marketing, technology, science, translation, legal, finance, health, trivia, and academia. Models available through the platform include transformer-based models with multilingual, coding, mathematics, and reasoning capabilities; one featured model, for example, uses SwiGLU activation, attention QKV bias, and grouped-query attention. OpenRouter also lets users explore trending models, simulate the web, chat with multiple LLMs simultaneously, and engage in AI character chat and roleplay.

ChatOrDie.ai

ChatOrDie.ai is an AI platform that lets users chat with various AI models such as ChatGPT-4, Claude 3, Gemini 1.5, and more. It emphasizes privacy: user conversations are anonymous, not tracked, and not used to train AI models. Users can compare different AI models side by side, spot biases, hallucinations, and errors, and try new trending models. The platform is designed to let users benefit from AI without compromising their privacy.

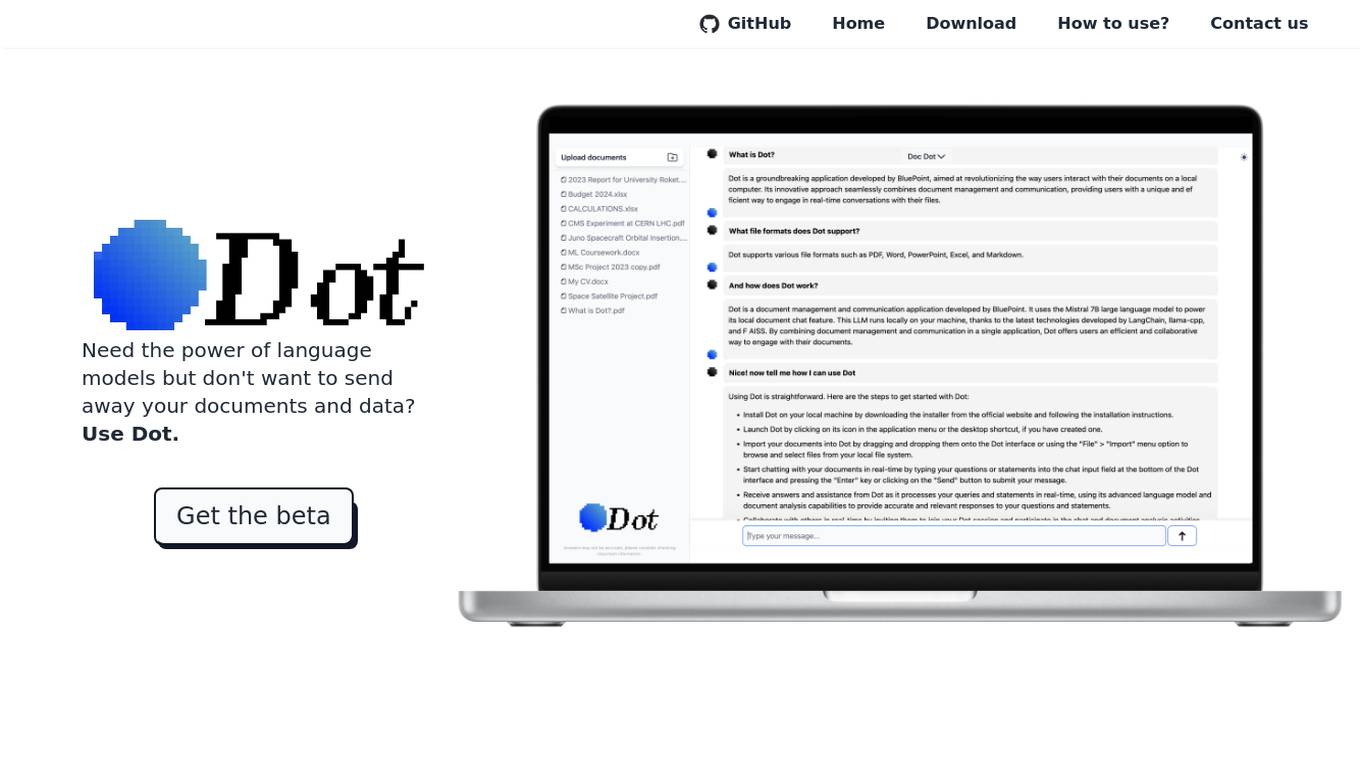

Dot

Dot is a free, locally run language model application that lets users interact with their own documents, chat with the model, and use it for a variety of tasks without sending their data elsewhere. It is powered by the Mistral 7B LLM, which runs locally on the user's device, so no data leaves the machine. Dot can also run offline.

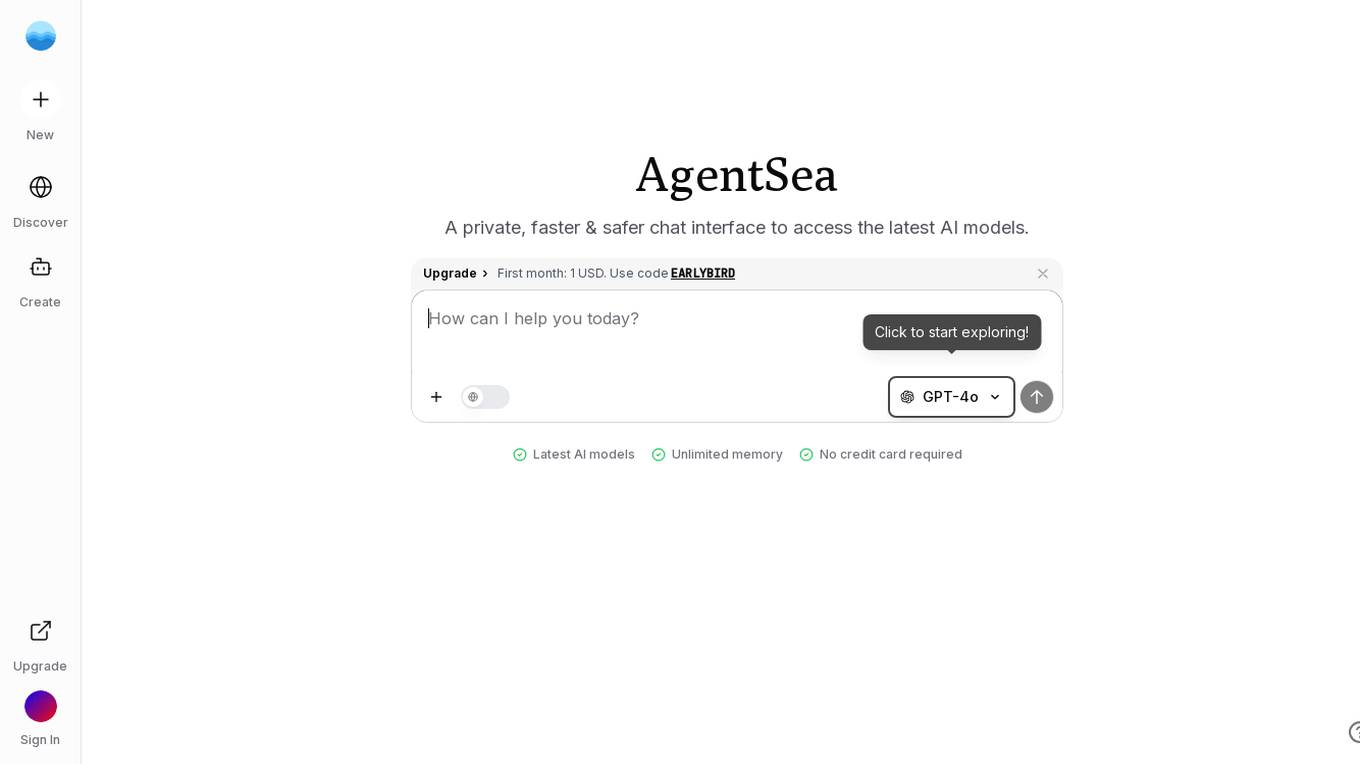

AgentSea

AgentSea is a private AI chat application that offers a faster, safer chat interface to the latest AI models. Users can upload files by dragging and dropping them or by pasting images from the clipboard. Supported formats include images (JPEG, PNG), documents (PDF, DOCX, DOC, TXT, MD), spreadsheets (CSV, XLSX, XLS), and JSON files, with a maximum file size of 5 MB. AgentSea uses the GPT-4o model, offers unlimited memory, and does not require a credit card.
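
The upload rules listed for AgentSea can be sketched as a simple client-side check. This is a hypothetical illustration, not AgentSea's code: the helper name `validate_upload`, the extra `.jpg` spelling of JPEG, and reading "5 MB" as 5 × 1024 × 1024 bytes are all assumptions.

```python
from pathlib import Path
from typing import Tuple

# Hypothetical allow-list mirroring the formats listed for AgentSea.
# ".jpg" is included as an assumed alternate spelling of JPEG.
ALLOWED_EXTENSIONS = {
    ".jpeg", ".jpg", ".png",                 # images
    ".pdf", ".docx", ".doc", ".txt", ".md",  # documents
    ".csv", ".xlsx", ".xls",                 # spreadsheets
    ".json",                                 # JSON
}

# Assumption: "5MB" is read as 5 MiB (5 * 1024 * 1024 bytes).
MAX_BYTES = 5 * 1024 * 1024


def validate_upload(filename: str, size_bytes: int) -> Tuple[bool, str]:
    """Return (ok, reason) for a prospective upload (hypothetical helper)."""
    ext = Path(filename).suffix.lower()  # case-insensitive extension check
    if ext not in ALLOWED_EXTENSIONS:
        return False, f"unsupported format: {ext or '(none)'}"
    if size_bytes > MAX_BYTES:
        return False, "file exceeds 5 MB limit"
    return True, "ok"


if __name__ == "__main__":
    print(validate_upload("report.PDF", 120_000))        # (True, 'ok')
    print(validate_upload("clip.mp4", 1_000))            # (False, 'unsupported format: .mp4')
    print(validate_upload("data.csv", 6 * 1024 * 1024))  # (False, 'file exceeds 5 MB limit')
```

Checking the extension before the size keeps the error message specific; a real service would presumably also inspect file contents rather than trusting the name.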

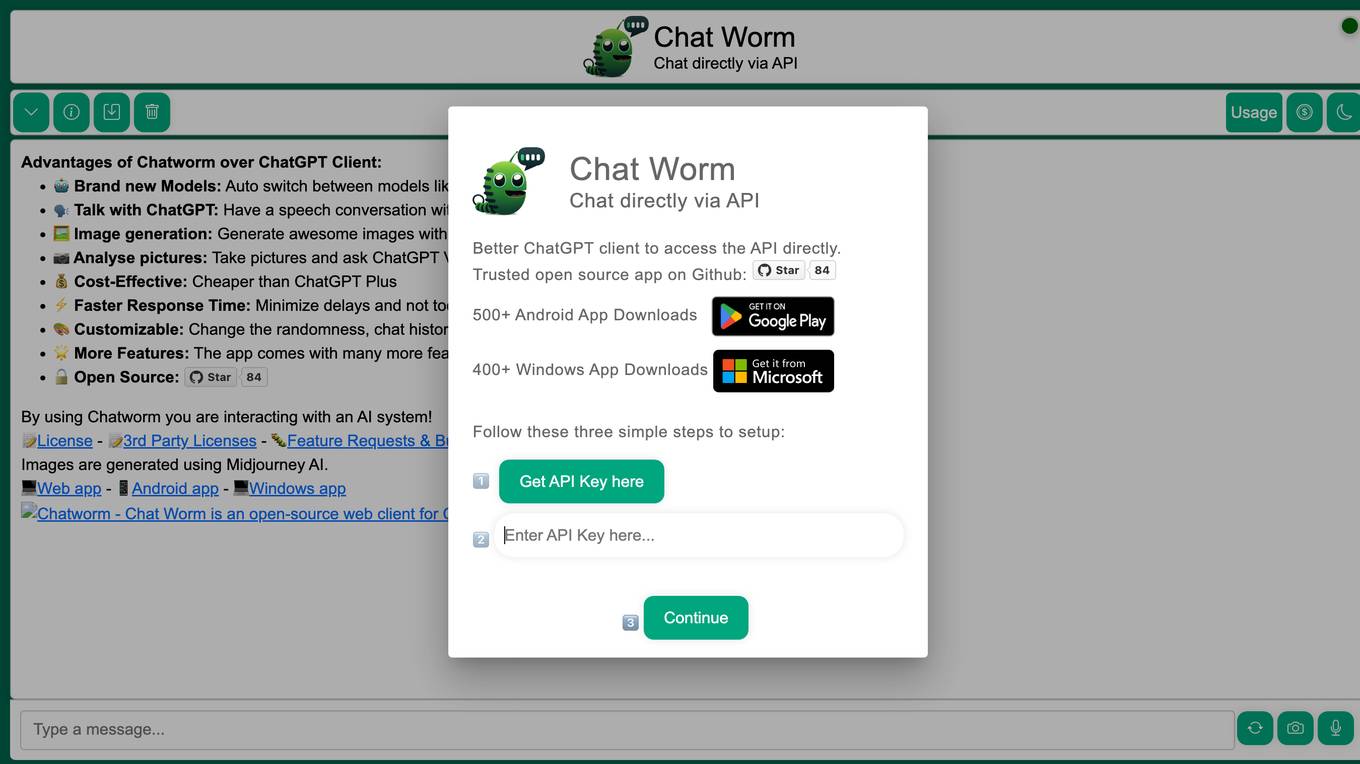

Chatworm

Chatworm is an AI tool that lets users chat directly via the API with AI models such as GPT-4o, gpt-4o-mini, o1-mini, o1-preview, and others. It supports speech conversations with AI, image generation, picture analysis, and customizable chat settings. Chatworm is open source and is described as cost-effective, with fast response times.

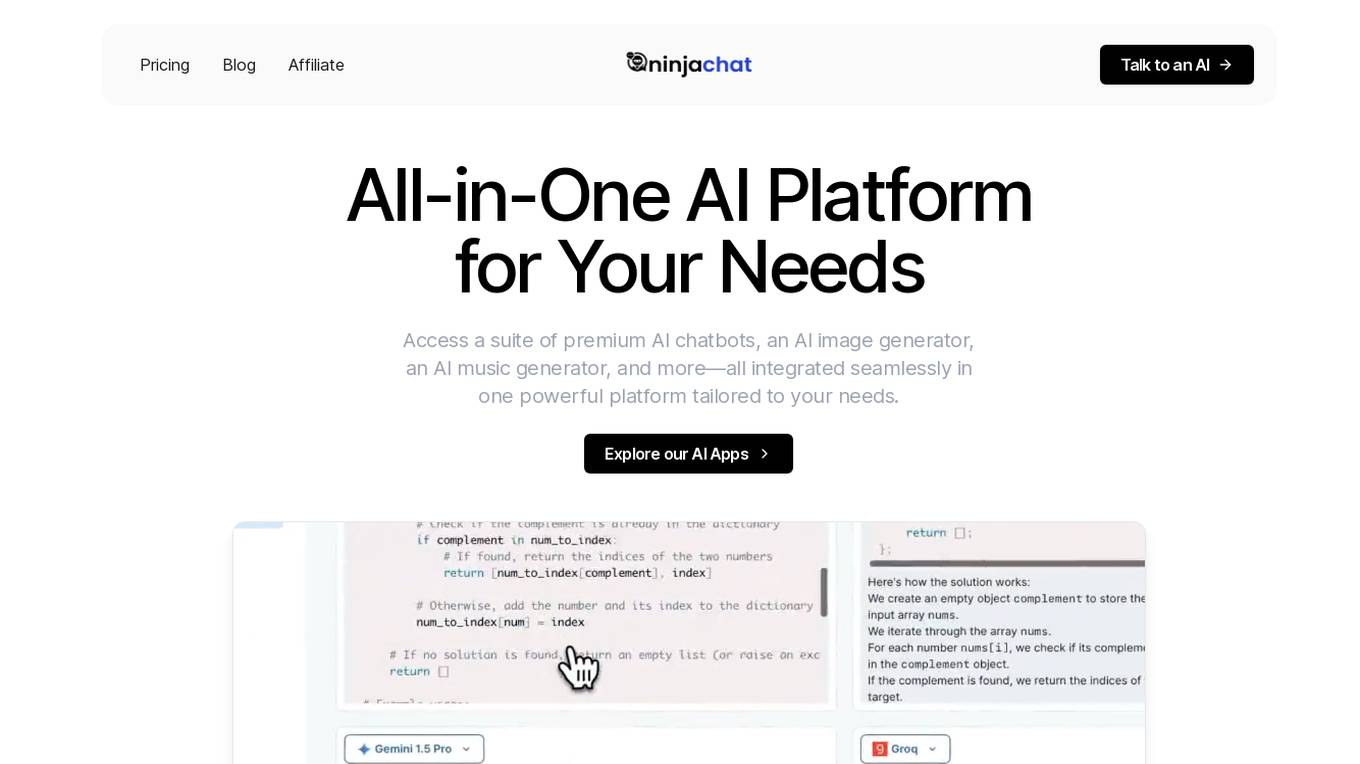

NinjaChat

NinjaChat is an all-in-one AI platform that combines premium AI chatbots, an AI image generator, an AI music generator, and more in a single service. It bundles more than nine AI apps, including chat with models such as GPT-4, Claude 3, and Mixtral, along with PDF analysis, image generation, and music composition. With one subscription, users can chat with documents, generate images, and interact with multiple language models.

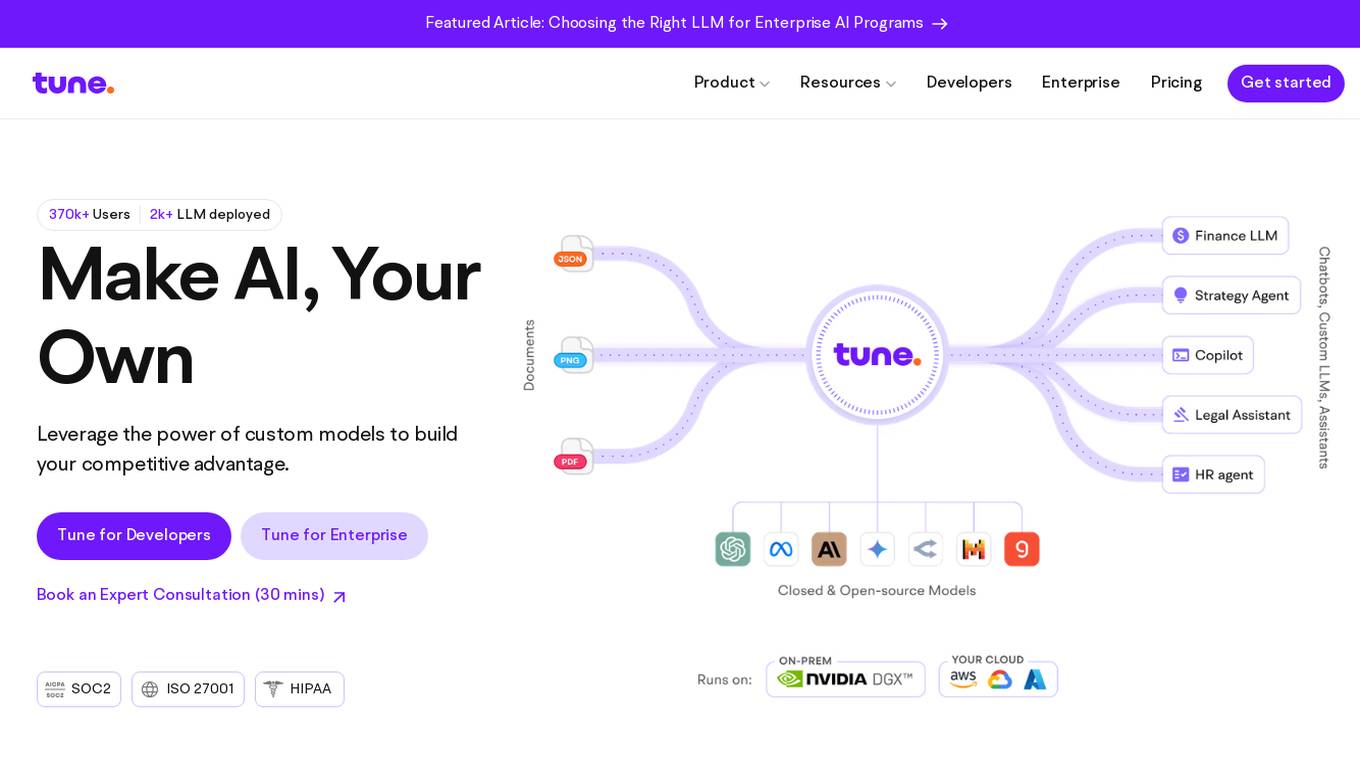

Tune AI

Tune AI is an enterprise generative AI stack that helps organizations build custom models for competitive advantage. Its use cases include accelerating coding, content creation, indexing patent documents, data auditing, and automatic speech recognition. Users can build custom models on top of leading open-source models to solve real-world problems, and the platform offers enterprise-grade security and flexible infrastructure for developers and enterprises.

8 - Open Source AI Tools

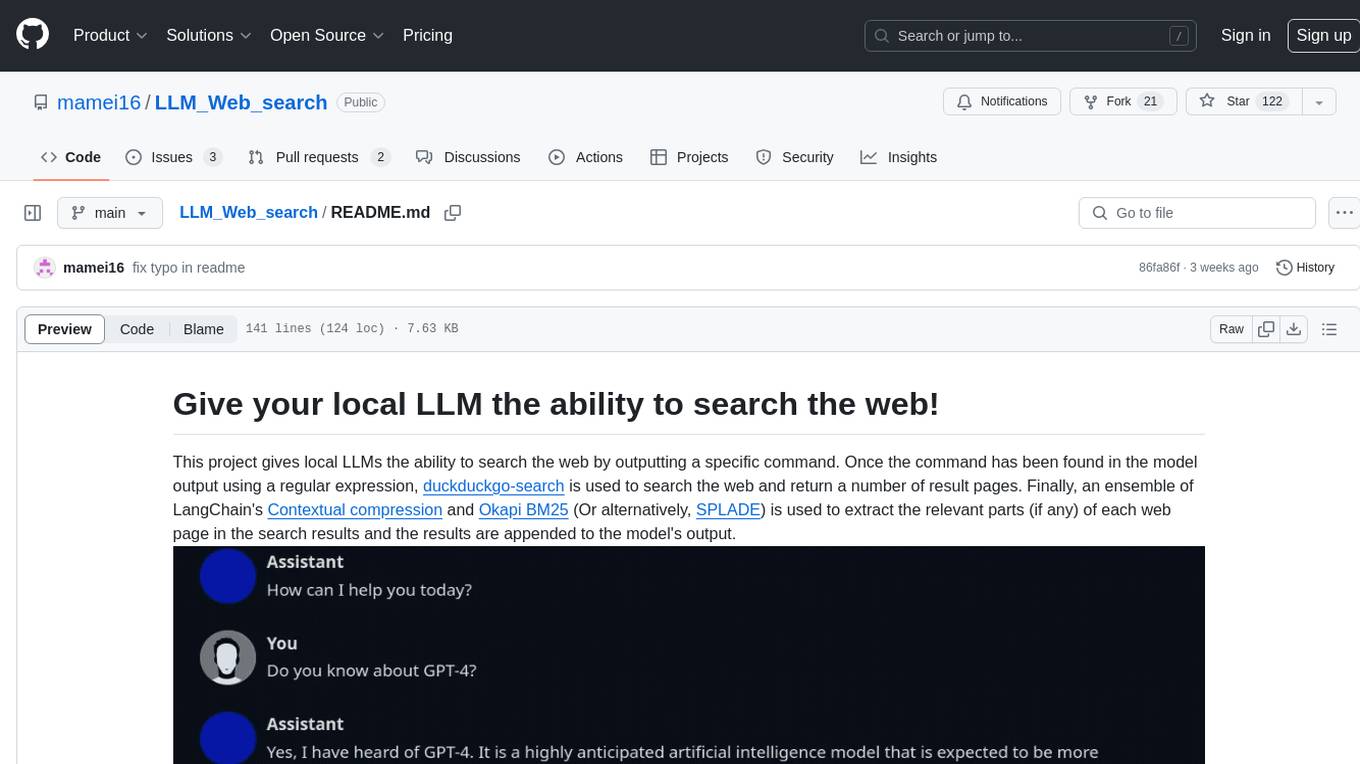

LLM_Web_search

The LLM_Web_search project gives local LLMs the ability to search the web by emitting a specific command. It uses regular expressions to extract search queries from the model's output and then searches the web with duckduckgo-search. LangChain's contextual compression and Okapi BM25 or SPLADE are used to extract the relevant parts of the web pages in the search results, and these extracts are appended to the model's output.
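
A minimal sketch of the two steps described for LLM_Web_search: pulling a query out of model output with a regular expression, then ranking text chunks with Okapi BM25. The `Search_web("...")` command syntax, the helper names, and the parameter defaults (k1 = 1.5, b = 0.75) are assumptions for illustration; the project itself uses duckduckgo-search and existing retrieval components and may score results differently.

```python
import math
import re
from collections import Counter
from typing import List, Optional

# Assumed command syntax; the real project's format may differ.
SEARCH_PATTERN = re.compile(r'Search_web\("(.*?)"\)')


def extract_query(model_output: str) -> Optional[str]:
    """Return the first search query the model emitted, or None."""
    match = SEARCH_PATTERN.search(model_output)
    return match.group(1) if match else None


def tokenize(text: str) -> List[str]:
    """Lowercase word tokens; a stand-in for a real text analyzer."""
    return re.findall(r"\w+", text.lower())


def bm25_scores(query: str, docs: List[str], k1: float = 1.5, b: float = 0.75) -> List[float]:
    """Okapi BM25 score of each document (e.g. a web-page chunk) for the query."""
    if not docs:
        return []
    tokenized = [tokenize(d) for d in docs]
    n = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n or 1.0  # average document length
    # Document frequency: in how many documents each term appears.
    df = Counter(term for toks in tokenized for term in set(toks))
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            # IDF variant with +1 inside the log so it never goes negative.
            idf = math.log((n - df[term] + 0.5) / (df[term] + 0.5) + 1)
            freq = tf[term]
            norm = freq + k1 * (1 - b + b * len(toks) / avgdl)
            score += idf * freq * (k1 + 1) / norm
        scores.append(score)
    return scores


if __name__ == "__main__":
    output = 'Let me look that up. Search_web("python release notes")'
    query = extract_query(output)
    chunks = [
        "Python was first released in 1991.",
        "Cats sleep a lot.",
        "Python 3.12 release notes",
    ]
    scores = bm25_scores(query, chunks)
    best = max(range(len(chunks)), key=lambda i: scores[i])
    print(chunks[best])  # → Python 3.12 release notes
```

Note that "released" does not match "release" here because the toy tokenizer does no stemming; production retrievers usually normalize word forms first.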

node-llama-cpp

node-llama-cpp lets users run AI models locally on their own machines. Users can work with text generation models, chat with models through a chat wrapper, and force models to produce output in a parseable format such as JSON. It supports Metal and CUDA, includes a CLI for chatting with models without writing code, and stays up to date with the latest version of llama.cpp. Pre-built binaries are provided for macOS, Linux, and Windows; if no binary is available for a platform, the bindings can be built from source with CMake.

Jlama

Jlama is a modern Java inference engine designed for large language models. It supports various model types such as Gemma, Llama, Mistral, GPT-2, BERT, and more. The tool implements features like Flash Attention, Mixture of Experts, and supports different model quantization formats. Built with Java 21 and utilizing the new Vector API for faster inference, Jlama allows users to add LLM inference directly to their Java applications. The tool includes a CLI for running models, a simple UI for chatting with LLMs, and examples for different model types.

torchchat

torchchat is a codebase that demonstrates running large language models (LLMs) across environments including desktop, server, iOS, and Android. It can run models with Python via PyTorch for chatting, text generation, and chat in the browser, and it can also run models on desktop or server without Python. Other features include AOTInductor for faster execution, a C++ runner, and deployment to iOS and Android. It supports popular hardware and operating systems, including Linux, macOS, Android, and iOS, with various data types and execution modes.

chatgpt-cli

ChatGPT CLI provides a command-line interface for interacting with ChatGPT models via OpenAI and Azure. It supports streaming, query, and interactive modes and offers extensive configuration options. Users can manage thread-based context, use sliding-window history, and supply custom context from any source. The CLI can also list models and threads, and it supports GPT-4, GPT-3.5-turbo, and Perplexity's models. It can be installed via Homebrew or by direct download, and settings can come from default values, a config.yaml file, or environment variables.
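
Sliding-window history generally means keeping only the most recent messages that fit a context budget. The sketch below is a hypothetical illustration of that idea, not ChatGPT CLI's implementation: `trim_history` and `approx_tokens` are made-up names, and token counts are approximated by word counts instead of a real tokenizer.

```python
from typing import Dict, List

Message = Dict[str, str]  # {"role": ..., "content": ...}


def approx_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: count whitespace-separated words."""
    return len(text.split())


def trim_history(messages: List[Message], max_tokens: int) -> List[Message]:
    """Keep system messages plus the newest other messages that fit in max_tokens."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(approx_tokens(m["content"]) for m in system)
    kept: List[Message] = []
    # Walk from newest to oldest and stop at the first message that does not fit,
    # so the retained window is always a contiguous run of recent turns.
    for m in reversed(rest):
        cost = approx_tokens(m["content"])
        if cost > budget:
            break
        kept.append(m)
        budget -= cost
    return system + kept[::-1]


if __name__ == "__main__":
    history = [
        {"role": "system", "content": "You are terse."},
        {"role": "user", "content": "one two three four"},
        {"role": "assistant", "content": "five six"},
        {"role": "user", "content": "seven eight nine"},
    ]
    for m in trim_history(history, max_tokens=8):
        print(m["role"], "|", m["content"])
```

With a budget of 8, the system prompt (3 words) is always kept, leaving room for only the last two turns; the oldest user message is dropped.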

elmer

elmer is a user-friendly wrapper over common APIs for calling LLMs, with support for streaming and for easily registering and calling R functions. Users can work with elmer through an interactive chat console, interactive method calls, programmatic chat, or streamed results. elmer also supports asynchronous use, so multiple chat sessions can run concurrently, which is useful in Shiny applications. Its tool-calling feature lets users define external tools that the chat model can ask elmer to execute, extending what the model can do.
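
elmer itself is an R package, but the general tool-calling pattern described above (register functions, let the model request one by name, execute it, and send the result back) can be sketched in Python. The registry, the `dispatch` helper, and the JSON shape `{"name": ..., "arguments": {...}}` are assumptions for illustration and do not reflect elmer's API.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical registry mapping tool names to Python callables.
TOOLS: Dict[str, Callable[..., Any]] = {}


def register_tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Decorator that makes a function available to the model by name."""
    TOOLS[fn.__name__] = fn
    return fn


@register_tool
def add(a: float, b: float) -> float:
    """Example tool: add two numbers."""
    return a + b


def dispatch(tool_call_json: str) -> str:
    """Execute a model-requested tool call and return a JSON result for the model."""
    call = json.loads(tool_call_json)
    fn = TOOLS.get(call["name"])
    if fn is None:
        return json.dumps({"error": f"unknown tool: {call['name']}"})
    result = fn(**call.get("arguments", {}))
    return json.dumps({"result": result})


if __name__ == "__main__":
    # In a real chat loop this JSON would come from the model's response.
    request = '{"name": "add", "arguments": {"a": 2, "b": 3}}'
    print(dispatch(request))  # {"result": 5}
```

Returning an error object for unknown tools, rather than raising, lets the model see what went wrong and try again.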

mlx-lm

MLX LM is a Python package designed for generating text and fine-tuning large language models on Apple silicon using MLX. It offers integration with the Hugging Face Hub for easy access to thousands of LLMs, support for quantizing and uploading models to the Hub, low-rank and full model fine-tuning capabilities, and distributed inference and fine-tuning with `mx.distributed`. Users can interact with the package through command line options or the Python API, enabling tasks such as text generation, chatting with language models, model conversion, streaming generation, and sampling. MLX LM supports various Hugging Face models and provides tools for efficient scaling to long prompts and generations, including a rotating key-value cache and prompt caching. It requires macOS 15.0 or higher for optimal performance.
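
The rotating key-value cache mentioned above bounds memory by overwriting old entries once a maximum size is reached. The toy below shows the idea with plain Python lists rather than MLX arrays; the class name `RotatingCache` and its `keep` parameter (a number of initial entries that are never evicted) are illustrative assumptions, not MLX LM's API.

```python
from typing import Any, List


class RotatingCache:
    """Toy fixed-size cache: the first `keep` entries are pinned, the rest form a ring buffer."""

    def __init__(self, max_size: int, keep: int = 0) -> None:
        if not 0 <= keep < max_size:
            raise ValueError("require 0 <= keep < max_size")
        self.max_size = max_size
        self.keep = keep
        self.entries: List[Any] = []
        self._next = keep  # slot to overwrite once the cache is full

    def append(self, kv: Any) -> None:
        if len(self.entries) < self.max_size:
            self.entries.append(kv)  # still filling up
            return
        # Full: overwrite the oldest non-pinned slot, wrapping back to `keep`.
        self.entries[self._next] = kv
        self._next += 1
        if self._next == self.max_size:
            self._next = self.keep

    def ordered(self) -> List[Any]:
        """Return entries oldest-to-newest (pinned prefix first)."""
        if len(self.entries) < self.max_size:
            return list(self.entries)
        ring = self.entries[self.keep:]
        start = self._next - self.keep  # index of the oldest ring entry
        return self.entries[:self.keep] + ring[start:] + ring[:start]


if __name__ == "__main__":
    cache = RotatingCache(max_size=4, keep=1)
    for token_kv in range(6):  # stand-ins for per-token key/value pairs
        cache.append(token_kv)
    print(cache.ordered())  # [0, 3, 4, 5]
```

Memory stays fixed at `max_size` entries no matter how long generation runs; the cost is that the model can no longer attend to evicted tokens.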

mini-sglang

Mini-SGLang is a lightweight yet high-performance inference framework for Large Language Models. With a compact codebase of ~5,000 lines of Python, it serves as both a capable inference engine and a transparent reference for researchers and developers. It achieves state-of-the-art throughput and latency with advanced optimizations such as Radix Cache, Chunked Prefill, Overlap Scheduling, Tensor Parallelism, and Optimized Kernels integrating FlashAttention and FlashInfer for maximum efficiency. Mini-SGLang is designed to demystify the complexities of modern LLM serving systems, providing a clean, modular, and fully type-annotated codebase that is easy to understand and modify.
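
Radix Cache lets requests that share a prompt prefix reuse previously computed KV state instead of recomputing it during prefill. The sketch below substitutes a plain (uncompressed) trie over token IDs for a true radix tree, which would merge single-child chains, and stores opaque placeholder handles instead of KV tensors. `PrefixCache`, `insert`, and `match_prefix` are hypothetical names, not Mini-SGLang's API.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class Node:
    children: Dict[int, "Node"] = field(default_factory=dict)
    kv_handle: Optional[Any] = None  # placeholder for cached KV state of this token


class PrefixCache:
    """Toy prefix cache over token IDs (uncompressed trie, no eviction)."""

    def __init__(self) -> None:
        self.root = Node()

    def insert(self, tokens: List[int], kv_handles: List[Any]) -> None:
        """Record KV handles for a token sequence, keeping any already cached."""
        node = self.root
        for tok, handle in zip(tokens, kv_handles):
            node = node.children.setdefault(tok, Node())
            if node.kv_handle is None:
                node.kv_handle = handle

    def match_prefix(self, tokens: List[int]) -> List[Any]:
        """Return cached handles for the longest prefix of `tokens` in the tree."""
        node, handles = self.root, []
        for tok in tokens:
            child = node.children.get(tok)
            if child is None:
                break
            handles.append(child.kv_handle)
            node = child
        return handles


if __name__ == "__main__":
    cache = PrefixCache()
    cache.insert([1, 2, 3], ["kv1", "kv2", "kv3"])
    reused = cache.match_prefix([1, 2, 9])
    print(reused)  # ['kv1', 'kv2']; only token 9 would need a fresh prefill
```

A production cache also needs eviction (for example, least-recently-used leaves) and reference counting so shared prefixes are not freed while a request still uses them; both are omitted here.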

20 - OpenAI GPTs

Chat with GPT 4o ("Omni") Assistant

Try the new AI chat model: GPT 4o ("Omni") Assistant. It is described as faster and more capable than regular GPT, and it will incorporate speech-to-text, intelligence, and text-to-speech capabilities with very low latency.

TonyAIDeveloperResume

Chat with my resume to see if I am a good fit for your AI related job.

Eng. BAIA

Senior civil engineer with a Bahian accent and a sense of humor; creates construction schedules.

Frankenstein by My BookGPTs

Embark on a journey into 《Frankenstein; Or, The Modern Prometheus》. Ready for a deep dive into life's philosophies? Let's go!🎯

Chat with Bertrand Russell

Explore Russell's world, blending history with modern thought, challenging your mind, and enriching your philosophical journey.

Chat with Tertullian

Engage with the wisdom of early Christianity through Tertullian's lens, brought to life with modern relevance.

María Dolores

Inspired by a TV character, lives on a farm, analytical and philosophical, with a 'DEBUG' mode.

Bandoleer Straightener-Stamper Assistant

Hello I'm Bandoleer Straightener-Stamper Assistant! What would you like help with today?

Alien meaning?

What do the lyrics of "Alien" mean? Singer: P. Sears, J. Sears; album: Modern Times (1981).