LLaMa2lang

Convenience scripts to finetune (chat-)LLaMa3 and other models for any language

Stars: 210

This repository contains convenience scripts to finetune LLaMa3-8B (or any other foundation model) for chat towards any language (that isn't English). The rationale behind this is that LLaMa3 is trained on primarily English data and while it works to some extent for other languages, its performance is poor compared to English.

README:

pip install -r requirements.txt

# Translate OASST1 to target language

python translate.py m2m target_lang checkpoint_location

# Combine the checkpoint files into a dataset

python combine_checkpoints.py input_folder output_location

# Finetune

python finetune.py tuned_model dataset_name instruction_prompt

# Optionally finetune with DPO (RLHF)

python finetune_dpo.py tuned_model dataset_name instruction_prompt

# Run inference

python run_inference.py model_name instruction_prompt input

The process we follow to tune a foundation model such as LLaMa3 for a specific language is as follows:

- Load a dataset that contains Q&A/instruction pairs.

- Translate the entire dataset to a given target language.

- Load the translated dataset and extract threads by recursively selecting prompts with their respective answers with the highest rank only, through to subsequent prompts, etc.

- Turn the threads into prompts following a given template (customizable).

- Use QLoRA and PEFT to finetune a base foundation model's instruct finetune on this dataset.

- Run inference using the newly trained model.
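The thread-extraction step above can be sketched in a few lines. This is a minimal illustration, not the repository's implementation: the field names (message_id, parent_id, rank) follow the defaults listed for finetune.py, and the sketch assumes that a lower rank value means a better answer and that unranked replies sort last. The function name extract_threads is hypothetical.

```python
from typing import Dict, List, Optional


def extract_threads(messages: List[dict]) -> List[List[dict]]:
    """Return one thread per root prompt, following only the best-ranked reply at each level."""
    # Index every message by its parent so replies can be looked up quickly.
    children: Dict[Optional[str], List[dict]] = {}
    for message in messages:
        children.setdefault(message["parent_id"], []).append(message)

    def best(replies: List[dict]) -> dict:
        # Assumption: rank 0 is the best answer; replies without a rank sort last.
        return min(replies, key=lambda m: (m["rank"] is None, m["rank"] or 0))

    threads = []
    for root in children.get(None, []):  # root prompts have no parent
        thread = [root]
        current = root
        while children.get(current["message_id"]):
            current = best(children[current["message_id"]])
            thread.append(current)
        threads.append(thread)
    return threads


if __name__ == "__main__":
    demo = [
        {"message_id": "p1", "parent_id": None, "rank": None, "text": "Question"},
        {"message_id": "a1", "parent_id": "p1", "rank": 1, "text": "Weaker answer"},
        {"message_id": "a2", "parent_id": "p1", "rank": 0, "text": "Best answer"},
    ]
    print([m["message_id"] for m in extract_threads(demo)[0]])
```

Each returned thread alternates prompts and answers, so it can be passed directly to whatever prompt template is used for finetuning.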

- OPUS

- M2M

- MADLAD

- mBART

- NLLB

- Seamless (Large only)

- Tower Instruct (Can correct spelling mistakes)

The following have been tested but potentially more will work

- OASST1

- OASST2

- LLaMa3

- LLaMa2

- Mistral

- (Unofficial) Mixtral 8x7B

- [L2L-6] Investigate interoperability with other libraries (Axolotl, llamacpp, unsloth)

- [L2L-7] Allow for different quantizations next to QLoRA (GGUF, GPTQ, AWQ)

- [L2L-10] Support extending the tokenizer and vocabulary

The above process can be run entirely on a free Google Colab T4 GPU. The last step, however, only runs successfully with short enough context windows and a batch size of at most 2. In addition, the translation in step 2 takes about 36 hours in total for any given language, so it should be split across multiple sessions if you want to stick with a free Google Colab GPU.

Our fine-tuned models for step 5 were trained on an A40 on vast.ai; each model cost us less than a dollar and completed in about 1.5 hours.

- Make sure pytorch is installed and working for your environment (use of CUDA preferable): https://pytorch.org/get-started/locally/

- Clone the repo and install the requirements.

pip install -r requirements.txt

- Translate your base dataset to your designated target language.

usage: translate.py [-h] [--quant8] [--quant4] [--base_dataset BASE_DATASET] [--base_dataset_text_field BASE_DATASET_TEXT_FIELD] [--base_dataset_lang_field BASE_DATASET_LANG_FIELD]

[--checkpoint_n CHECKPOINT_N] [--batch_size BATCH_SIZE] [--max_length MAX_LENGTH] [--cpu] [--source_lang SOURCE_LANG]

{opus,mbart,madlad,m2m,nllb,seamless_m4t_v2,towerinstruct} ... target_lang checkpoint_location

Translate an instruct/RLHF dataset to a given target language using a variety of translation models

positional arguments:

{opus,mbart,madlad,m2m,nllb,seamless_m4t_v2,towerinstruct}

The model/architecture used for translation.

opus Translate the dataset using HelsinkiNLP OPUS models.

mbart Translate the dataset using mBART.

madlad Translate the dataset using Google's MADLAD models.

m2m Translate the dataset using Facebook's M2M models.

nllb Translate the dataset using Facebook's NLLB models.

seamless_m4t_v2 Translate the dataset using Facebook's SeamlessM4T-v2 multimodal models.

towerinstruct Translate the dataset using Unbabel's Tower Instruct. Make sure your target language is in the 10 languages supported by the model.

target_lang The target language. Make sure you use language codes defined by the translation model you are using.

checkpoint_location The folder the script will write (JSONized) checkpoint files to. Folder will be created if it doesn't exist.

options:

-h, --help show this help message and exit

--quant8 Optional flag to load the translation model in 8 bits. Decreases memory usage, increases running time

--quant4 Optional flag to load the translation model in 4 bits. Decreases memory usage, increases running time

--base_dataset BASE_DATASET

The base dataset to translate, defaults to OpenAssistant/oasst1

--base_dataset_text_field BASE_DATASET_TEXT_FIELD

The base dataset's column name containing the actual text to translate. Defaults to text

--base_dataset_lang_field BASE_DATASET_LANG_FIELD

The base dataset's column name containing the language the source text was written in. Defaults to lang

--checkpoint_n CHECKPOINT_N

An integer representing how often a checkpoint file will be written out. To start off, 400 is a reasonable number.

--batch_size BATCH_SIZE

The batch size for a single translation model. Adjust based on your GPU capacity. Default is 10.

--max_length MAX_LENGTH

How much tokens to generate at most. More tokens might be more accurate for lengthy input but creates a risk of running out of memory. Default is unlimited.

--cpu Forces usage of CPU. By default GPU is taken if available.

--source_lang SOURCE_LANG

Source language to select from OASST based on lang property of dataset
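To make the interplay of --checkpoint_n and --batch_size concrete, here is a minimal sketch of a checkpointed translation loop. It is not the code of translate.py: the checkpoint file naming, the record layout and the translate_batch callable are all assumptions made for illustration. The point it shows is that records are translated in small batches, written out as a JSON array every checkpoint_n records, and skipped on a rerun when their checkpoint file already exists.

```python
import json
from pathlib import Path
from typing import Callable, List


def translate_with_checkpoints(
    texts: List[str],
    translate_batch: Callable[[List[str]], List[str]],  # stand-in for a real translation model
    checkpoint_location: str,
    checkpoint_n: int = 400,
    batch_size: int = 10,
) -> List[Path]:
    """Translate texts in batches and write one JSON checkpoint per checkpoint_n records."""
    out_dir = Path(checkpoint_location)
    out_dir.mkdir(parents=True, exist_ok=True)  # folder is created if it doesn't exist

    written = []
    for start in range(0, len(texts), checkpoint_n):
        path = out_dir / f"checkpoint_{start}.json"  # hypothetical naming scheme
        if path.exists():
            # Resume: this slice was already translated in an earlier run.
            written.append(path)
            continue
        chunk = texts[start:start + checkpoint_n]
        records = []
        for i in range(0, len(chunk), batch_size):
            batch = chunk[i:i + batch_size]
            records.extend({"text": t} for t in translate_batch(batch))
        path.write_text(json.dumps(records, ensure_ascii=False), encoding="utf-8")
        written.append(path)
    return written
```

A smaller checkpoint_n loses less work when a session is interrupted, at the cost of more files on disk.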

If you want more parameters for the different translation models, run:

python translate.py [MODEL] -h

Be sure to specify model-specific parameters first before you specify common parameters from the list above. Example calls:

# Using M2M with 4bit quantization and a different batch size to translate to Dutch

python translate.py m2m nl ./output_nl --quant4 --batch_size 20

# Using madlad 7B with 8bit quantization for German with different max_length

python translate.py madlad --model_size 7b de ./output_de --quant8 --batch_size 5 --max_length 512

# Be sure to use target language codes that the model you use understands

python translate.py mbart xh_ZA ./output_xhosa

python translate.py nllb nld_Latn ./output_nl

- Combine the JSON arrays from the checkpoints' files into a Huggingface Dataset and then either write it to disk or publish it to Huggingface. The script will try to write to disk by default and fall back to publishing to Huggingface if the folder doesn't exist on disk. For publishing to Huggingface, make sure you have your HF_TOKEN environment variable set up as per the documentation.

usage: combine_checkpoints.py [-h] input_folder output_location

Combine checkpoint files from translation.

positional arguments:

input_folder The checkpoint folder used in translation, with the target language appended.

Example: "./output_nl".

output_location Where to write the Huggingface Dataset. Can be a disk location or a Huggingface

Dataset repository.

options:

-h, --help show this help message and exit
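As a rough picture of what combining checkpoints involves, the sketch below merges the JSON arrays found in a checkpoint folder into one list of records. It uses only the standard library and stops short of building a Huggingface Dataset; the function name and the assumption that every checkpoint is a .json file holding an array are illustrative and not taken from combine_checkpoints.py.

```python
import json
from pathlib import Path
from typing import List


def combine_checkpoint_records(input_folder: str) -> List[dict]:
    """Concatenate the JSON arrays of all checkpoint files in a folder."""
    records: List[dict] = []
    # sorted() gives a stable, lexicographic file order (not necessarily numeric).
    for path in sorted(Path(input_folder).glob("*.json")):
        with path.open(encoding="utf-8") as handle:
            records.extend(json.load(handle))
    return records
```

If the datasets library is installed, a list like this can typically be turned into a Dataset with datasets.Dataset.from_list(records) before saving or publishing it.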

- Turn the translated messages into chat/instruct/prompt threads and finetune a foundation model's instruct finetune using (Q)LoRA and PEFT.

usage: finetune.py [-h] [--base_model BASE_MODEL] [--base_dataset_text_field BASE_DATASET_TEXT_FIELD] [--base_dataset_rank_field BASE_DATASET_RANK_FIELD] [--base_dataset_id_field BASE_DATASET_ID_FIELD] [--base_dataset_parent_field BASE_DATASET_PARENT_FIELD]

[--base_dataset_role_field BASE_DATASET_ROLE_FIELD] [--quant8] [--noquant] [--max_seq_length MAX_SEQ_LENGTH] [--num_train_epochs NUM_TRAIN_EPOCHS] [--batch_size BATCH_SIZE] [--threads_output_name THREADS_OUTPUT_NAME] [--thread_template THREAD_TEMPLATE]

[--padding PADDING]

tuned_model dataset_name instruction_prompt

Finetune a base instruct/chat model using (Q)LoRA and PEFT

positional arguments:

tuned_model The name of the resulting tuned model.

dataset_name The name of the dataset to use for fine-tuning. This should be the output of the combine_checkpoints script.

instruction_prompt An instruction message added to every prompt given to the chatbot to force it to answer in the target language. Example: "You are a generic chatbot that always answers in English."

options:

-h, --help show this help message and exit

--base_model BASE_MODEL

The base foundation model. Default is "NousResearch/Meta-Llama-3-8B-Instruct".

--base_dataset_text_field BASE_DATASET_TEXT_FIELD

The dataset's column name containing the actual text to translate. Defaults to text

--base_dataset_rank_field BASE_DATASET_RANK_FIELD

The dataset's column name containing the rank of an answer given to a prompt. Defaults to rank

--base_dataset_id_field BASE_DATASET_ID_FIELD

The dataset's column name containing the id of a text. Defaults to message_id

--base_dataset_parent_field BASE_DATASET_PARENT_FIELD

The dataset's column name containing the parent id of a text. Defaults to parent_id

--base_dataset_role_field BASE_DATASET_ROLE_FIELD

The dataset's column name containing the role of the author of the text (eg. prompter, assistant). Defaults to role

--quant8 Finetunes the model in 8 bits. Requires more memory than the default 4 bit.

--noquant Do not quantize the finetuning. Requires more memory than the default 4 bit and optional 8 bit.

--max_seq_length MAX_SEQ_LENGTH

The maximum sequence length to use in finetuning. Should most likely line up with your base model's default max_seq_length. Default is 512.

--num_train_epochs NUM_TRAIN_EPOCHS

Number of epochs to use. 2 is default and has been shown to work well.

--batch_size BATCH_SIZE

The batch size to use in finetuning. Adjust to fit in your GPU vRAM. Default is 4

--threads_output_name THREADS_OUTPUT_NAME

If specified, the threads created in this script for finetuning will also be saved to disk or HuggingFace Hub.

--thread_template THREAD_TEMPLATE

A file containing the thread template to use. Default is threads/template_fefault.txt

--padding PADDING What padding to use, can be either left or right.

6.1 [OPTIONAL] Finetune using DPO (similar to RLHF)

usage: finetune_dpo.py [-h] [--base_model BASE_MODEL] [--base_dataset_text_field BASE_DATASET_TEXT_FIELD] [--base_dataset_rank_field BASE_DATASET_RANK_FIELD] [--base_dataset_id_field BASE_DATASET_ID_FIELD] [--base_dataset_parent_field BASE_DATASET_PARENT_FIELD] [--quant8]

[--noquant] [--max_seq_length MAX_SEQ_LENGTH] [--max_prompt_length MAX_PROMPT_LENGTH] [--num_train_epochs NUM_TRAIN_EPOCHS] [--batch_size BATCH_SIZE] [--threads_output_name THREADS_OUTPUT_NAME] [--thread_template THREAD_TEMPLATE] [--max_steps MAX_STEPS]

[--padding PADDING]

tuned_model dataset_name instruction_prompt

Finetune a base instruct/chat model using (Q)LoRA and PEFT using DPO (RLHF)

positional arguments:

tuned_model The name of the resulting tuned model.

dataset_name The name of the dataset to use for fine-tuning. This should be the output of the combine_checkpoints script.

instruction_prompt An instruction message added to every prompt given to the chatbot to force it to answer in the target language. Example: "You are a generic chatbot that always answers in English."

options:

-h, --help show this help message and exit

--base_model BASE_MODEL

The base foundation model. Default is "NousResearch/Meta-Llama-3-8B-Instruct".

--base_dataset_text_field BASE_DATASET_TEXT_FIELD

The dataset's column name containing the actual text to translate. Defaults to text

--base_dataset_rank_field BASE_DATASET_RANK_FIELD

The dataset's column name containing the rank of an answer given to a prompt. Defaults to rank

--base_dataset_id_field BASE_DATASET_ID_FIELD

The dataset's column name containing the id of a text. Defaults to message_id

--base_dataset_parent_field BASE_DATASET_PARENT_FIELD

The dataset's column name containing the parent id of a text. Defaults to parent_id

--quant8 Finetunes the model in 8 bits. Requires more memory than the default 4 bit.

--noquant Do not quantize the finetuning. Requires more memory than the default 4 bit and optional 8 bit.

--max_seq_length MAX_SEQ_LENGTH

The maximum sequence length to use in finetuning. Should most likely line up with your base model's default max_seq_length. Default is 512.

--max_prompt_length MAX_PROMPT_LENGTH

The maximum length of the prompts to use. Default is 512.

--num_train_epochs NUM_TRAIN_EPOCHS

Number of epochs to use. 2 is default and has been shown to work well.

--batch_size BATCH_SIZE

The batch size to use in finetuning. Adjust to fit in your GPU vRAM. Default is 4

--threads_output_name THREADS_OUTPUT_NAME

If specified, the threads created in this script for finetuning will also be saved to disk or HuggingFace Hub.

--thread_template THREAD_TEMPLATE

A file containing the thread template to use. Default is threads/template_fefault.txt

--max_steps MAX_STEPS

The maximum number of steps to run DPO for. Default is -1 which will run the data through fully for the number of epochs but this will be very time-consuming.

--padding PADDING What padding to use, can be either left or right.
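Preference-based methods such as DPO train on triples of a prompt, a preferred answer and a rejected answer. The README does not say how finetune_dpo.py derives these from the ranked dataset, so the following is only one plausible sketch: it assumes that a lower rank is better, pairs the best-ranked reply against every worse-ranked reply, and uses a hypothetical function name and the key names prompt, chosen and rejected.

```python
from typing import Dict, List


def build_preference_pairs(prompt: str, replies: List[dict]) -> List[Dict[str, str]]:
    """Pair the best-ranked reply to a prompt with each lower-ranked reply."""
    # Replies without a rank carry no preference signal, so they are skipped.
    ranked = sorted(
        (reply for reply in replies if reply["rank"] is not None),
        key=lambda reply: reply["rank"],  # assumption: rank 0 is the best answer
    )
    if len(ranked) < 2:
        return []  # a preference pair needs at least two ranked answers
    chosen = ranked[0]
    return [
        {"prompt": prompt, "chosen": chosen["text"], "rejected": other["text"]}
        for other in ranked[1:]
    ]
```

Prompts with a single ranked answer yield no pairs, which is one reason a preference dataset can end up smaller than the threads dataset used for regular finetuning.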

6.2 [OPTIONAL] Finetune using ORPO (similar to RLHF)

usage: finetune_orpo.py [-h] [--base_model BASE_MODEL] [--base_dataset_text_field BASE_DATASET_TEXT_FIELD] [--base_dataset_rank_field BASE_DATASET_RANK_FIELD] [--base_dataset_id_field BASE_DATASET_ID_FIELD] [--base_dataset_parent_field BASE_DATASET_PARENT_FIELD] [--quant8]

[--noquant] [--max_seq_length MAX_SEQ_LENGTH] [--max_prompt_length MAX_PROMPT_LENGTH] [--num_train_epochs NUM_TRAIN_EPOCHS] [--batch_size BATCH_SIZE] [--threads_output_name THREADS_OUTPUT_NAME] [--thread_template THREAD_TEMPLATE] [--max_steps MAX_STEPS]

[--padding PADDING]

tuned_model dataset_name instruction_prompt

Finetune a base instruct/chat model using (Q)LoRA and PEFT using ORPO (RLHF)

positional arguments:

tuned_model The name of the resulting tuned model.

dataset_name The name of the dataset to use for fine-tuning. This should be the output of the combine_checkpoints script.

instruction_prompt An instruction message added to every prompt given to the chatbot to force it to answer in the target language. Example: "You are a generic chatbot that always answers in English."

options:

-h, --help show this help message and exit

--base_model BASE_MODEL

The base foundation model. Default is "NousResearch/Meta-Llama-3-8B-Instruct".

--base_dataset_text_field BASE_DATASET_TEXT_FIELD

The dataset's column name containing the actual text to translate. Defaults to text

--base_dataset_rank_field BASE_DATASET_RANK_FIELD

The dataset's column name containing the rank of an answer given to a prompt. Defaults to rank

--base_dataset_id_field BASE_DATASET_ID_FIELD

The dataset's column name containing the id of a text. Defaults to message_id

--base_dataset_parent_field BASE_DATASET_PARENT_FIELD

The dataset's column name containing the parent id of a text. Defaults to parent_id

--quant8 Finetunes the model in 8 bits. Requires more memory than the default 4 bit.

--noquant Do not quantize the finetuning. Requires more memory than the default 4 bit and optional 8 bit.

--max_seq_length MAX_SEQ_LENGTH

The maximum sequence length to use in finetuning. Should most likely line up with your base model's default max_seq_length. Default is 512.

--max_prompt_length MAX_PROMPT_LENGTH

The maximum length of the prompts to use. Default is 512.

--num_train_epochs NUM_TRAIN_EPOCHS

Number of epochs to use. 2 is default and has been shown to work well.

--batch_size BATCH_SIZE

The batch size to use in finetuning. Adjust to fit in your GPU vRAM. Default is 4

--threads_output_name THREADS_OUTPUT_NAME

If specified, the threads created in this script for finetuning will also be saved to disk or HuggingFace Hub.

--thread_template THREAD_TEMPLATE

A file containing the thread template to use. Default is threads/template_fefault.txt

--max_steps MAX_STEPS

The maximum number of steps to run ORPO for. Default is -1 which will run the data through fully for the number of epochs but this will be very time-consuming.

--padding PADDING What padding to use, can be either left or right.

- Run inference using the newly created QLoRA model.

usage: run_inference.py [-h] model_name instruction_prompt input

Script to run inference on a tuned model.

positional arguments:

model_name The name of the tuned model that you pushed to Huggingface in the previous

step.

instruction_prompt An instruction message added to every prompt given to the chatbot to force

it to answer in the target language.

input The actual chat input prompt. The script is only meant for testing purposes

and exits after answering.

options:

-h, --help show this help message and exit

How do I know which translation model to choose for my target language?

We've got you covered with our benchmark.py script, which helps you make a reasonable guess. Note that the benchmark dataset is the same data the OPUS models were trained on, so the outcomes always favor OPUS. For usage, see the help of this script below. Models are loaded in 4-bit quantization and run on a small sample of the OPUS books subset.

Be sure to use the most commonly occurring languages in your base dataset as source_language and your target translation language as target_language. For OASST1 for example, be sure to at least run en and es as source languages.
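One simple way to find the most common source languages is to count the values of the dataset's language column. The sketch below does this for a plain list of records; the default field name lang matches the default of --base_dataset_lang_field, while the function name and the sample data are made up for illustration.

```python
from collections import Counter
from typing import List


def top_source_languages(records: List[dict], lang_field: str = "lang", k: int = 2) -> List[str]:
    """Return the k most frequent language codes found in the records."""
    counts = Counter(record[lang_field] for record in records)
    return [language for language, _ in counts.most_common(k)]


if __name__ == "__main__":
    sample = [{"lang": "en"}, {"lang": "es"}, {"lang": "en"}, {"lang": "de"}, {"lang": "es"}, {"lang": "en"}]
    print(top_source_languages(sample))
```

The resulting codes are candidates for the source_language argument of benchmark.py.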

usage: benchmark.py [-h] [--cpu] [--start START] [--n N] [--max_length MAX_LENGTH] source_language target_language included_models

Benchmark all the different translation models for a specific source and target language to find out which performs best. This uses 4bit quantization to limit GPU usage. Note:

the outcomes are indicative - you cannot assume correctness of the BLEU and CHRF scores but you can compare models against each other relatively.

positional arguments:

source_language The source language you want to test for. Check your dataset to see which occur most prevalent or use English as a good start.

target_language The target language you want to test for. This should be the language you want to apply the translate script on. Note: in benchmark, we use 2-character

language codes, in contrast to translate.py where you need to specify whatever your model expects.

included_models Comma-separated list of models to include. Allowed values are: opus, m2m_418m, m2m_1.2b, madlad_3b, madlad_7b, madlad_10b, madlad_7bbt, mbart,

nllb_distilled600m, nllb_1.3b, nllb_distilled1.3b, nllb_3.3b, seamless

options:

-h, --help show this help message and exit

--cpu Forces usage of CPU. By default GPU is taken if available.

--start START The starting offset to include sentences from the OPUS books dataset from. Defaults to 0.

--n N The number of sentences to benchmark on. Defaults to 100.

--max_length MAX_LENGTH

How much tokens to generate at most. More tokens might be more accurate for lengthy input but creates a risk of running out of memory. Default is 512.
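To illustrate why such scores are useful for relative comparison only, here is a simplified character n-gram F-score in the spirit of chrF. It is a teaching sketch and not the metric implementation used by benchmark.py: it averages precision and recall over n-gram orders 1 to 6 and combines them with beta = 2, without the refinements a library implementation would apply. All function names are hypothetical.

```python
from collections import Counter
from typing import Dict


def char_ngrams(text: str, n: int) -> Counter:
    """Count all character n-grams of length n in text."""
    return Counter(text[i:i + n] for i in range(len(text) - n + 1))


def simple_chrf(hypothesis: str, reference: str, max_n: int = 6, beta: float = 2.0) -> float:
    """Simplified chrF-like score between 0.0 and 1.0."""
    precisions, recalls = [], []
    for n in range(1, max_n + 1):
        hyp, ref = char_ngrams(hypothesis, n), char_ngrams(reference, n)
        if not hyp or not ref:
            continue  # one of the strings is shorter than n characters
        overlap = sum((hyp & ref).values())  # clipped n-gram matches
        precisions.append(overlap / sum(hyp.values()))
        recalls.append(overlap / sum(ref.values()))
    if not precisions:
        return 0.0
    precision = sum(precisions) / len(precisions)
    recall = sum(recalls) / len(recalls)
    if precision + recall == 0:
        return 0.0
    return (1 + beta ** 2) * precision * recall / (beta ** 2 * precision + recall)


def rank_models(outputs: Dict[str, str], reference: str) -> list:
    """Order model names from best to worst score against one reference."""
    return sorted(outputs, key=lambda name: simple_chrf(outputs[name], reference), reverse=True)
```

Because the absolute values depend on the sample and on these simplifications, only the ordering produced by rank_models is meaningful.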

We have already created numerous datasets and models and will continue to create more. Want to help democratize LLMs? Clone the repo and create datasets and models for other languages, then create a PR.

| Dutch UnderstandLing/oasst1_nl | Spanish UnderstandLing/oasst1_es | French UnderstandLing/oasst1_fr | German UnderstandLing/oasst1_de |

| Catalan xaviviro/oasst1_ca | Portuguese UnderstandLing/oasst1_pt | Arabic HeshamHaroon/oasst-arabic | Italian UnderstandLing/oasst1_it |

| Russian UnderstandLing/oasst1_ru | Hindi UnderstandLing/oasst1_hi | Chinese UnderstandLing/oasst1_zh | Polish chrystians/oasst1_pl |

| Japanese UnderstandLing/oasst1_jap | Basque xezpeleta/oasst1_eu | Bengali UnderstandLing/oasst1_bn | Turkish UnderstandLing/oasst1_tr |

Make sure you have access to Meta's LLaMa3-8B model and set your HF_TOKEN before using these models.

| Dutch UnderstandLing/oasst1_nl_threads | Spanish UnderstandLing/oasst1_es_threads | French UnderstandLing/oasst1_fr_threads | German UnderstandLing/oasst1_de_threads |

| Catalan xaviviro/oasst1_ca_threads | Portuguese UnderstandLing/oasst1_pt_threads | Arabic HeshamHaroon/oasst-arabic_threads | Italian UnderstandLing/oasst1_it_threads |

| Russian UnderstandLing/oasst1_ru_threads | Hindi UnderstandLing/oasst1_hi_threads | Chinese UnderstandLing/oasst1_zh_threads | Polish chrystians/oasst1_pl_threads |

| Japanese UnderstandLing/oasst1_jap_threads | Basque xezpeleta/oasst1_eu_threads | Bengali UnderstandLing/oasst1_bn_threads | Turkish UnderstandLing/oasst1_tr_threads |

| UnderstandLing/llama-2-7b-chat-nl Dutch | UnderstandLing/llama-2-7b-chat-es Spanish | UnderstandLing/llama-2-7b-chat-fr French | UnderstandLing/llama-2-7b-chat-de German |

| xaviviro/llama-2-7b-chat-ca Catalan | UnderstandLing/llama-2-7b-chat-pt Portuguese | HeshamHaroon/llama-2-7b-chat-ar Arabic | UnderstandLing/llama-2-7b-chat-it Italian |

| UnderstandLing/llama-2-7b-chat-ru Russian | UnderstandLing/llama-2-7b-chat-hi Hindi | UnderstandLing/llama-2-7b-chat-zh Chinese | chrystians/llama-2-7b-chat-pl-polish-polski Polish |

| xezpeleta/llama-2-7b-chat-eu Basque | UnderstandLing/llama-2-7b-chat-bn Bengali | UnderstandLing/llama-2-7b-chat-tr Turkish |

| UnderstandLing/Mistral-7B-Instruct-v0.2-nl Dutch | UnderstandLing/Mistral-7B-Instruct-v0.2-es Spanish | UnderstandLing/Mistral-7B-Instruct-v0.2-de German |

| UnderstandLing/llama-2-13b-chat-nl Dutch | UnderstandLing/llama-2-13b-chat-es Spanish | UnderstandLing/llama-2-13b-chat-fr French |

| UnderstandLing/Mixtral-8x7B-Instruct-nl Dutch |

<s>[INST] <<SYS>> Je bent een generieke chatbot die altijd in het Nederlands antwoord geeft. <</SYS>> Wat is de hoofdstad van Nederland? [/INST] Amsterdam</s>

<s>[INST] <<SYS>> Je bent een generieke chatbot die altijd in het Nederlands antwoord geeft. <</SYS>> Wat is de hoofdstad van Nederland? [/INST] Amsterdam</s><s>[INST] Hoeveel inwoners heeft die stad? [/INST] 850 duizend inwoners (2023)</s>

<s>[INST] <<SYS>> Je bent een generieke chatbot die altijd in het Nederlands antwoord geeft. <</SYS>> Wat is de hoofdstad van Nederland? [/INST] Amsterdam</s><s>[INST] Hoeveel inwoners heeft die stad? [/INST] 850 duizend inwoners (2023)</s><s>[INST] In welke provincie ligt die stad? [/INST] In de provincie Noord-Holland</s>

<s>[INST] <<SYS>> Je bent een generieke chatbot die altijd in het Nederlands antwoord geeft. <</SYS>> Wie is de minister-president van Nederland? [/INST] Mark Rutte is sinds 2010 minister-president van Nederland. Hij is meerdere keren herkozen.</s>
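The prompts above follow the LLaMa2 chat layout: the system instruction is wrapped in <<SYS>> tags inside the first [INST] block, and every later turn gets its own <s>[INST] ... [/INST] ... </s> segment. The sketch below reproduces that layout for a list of question/answer turns. In the repository the layout comes from a customizable template file, so the hard-coded format strings and the function name here are assumptions for illustration only.

```python
from typing import List, Tuple


def build_llama2_prompt(instruction_prompt: str, turns: List[Tuple[str, str]]) -> str:
    """Render (question, answer) turns in the LLaMa2 chat layout shown above."""
    parts = []
    for index, (question, answer) in enumerate(turns):
        if index == 0:
            # Only the first turn carries the system instruction.
            parts.append(
                f"<s>[INST] <<SYS>> {instruction_prompt} <</SYS>> {question} [/INST] {answer}</s>"
            )
        else:
            parts.append(f"<s>[INST] {question} [/INST] {answer}</s>")
    return "".join(parts)


if __name__ == "__main__":
    system = "Je bent een generieke chatbot die altijd in het Nederlands antwoord geeft."
    print(build_llama2_prompt(system, [("Wat is de hoofdstad van Nederland?", "Amsterdam")]))
```

Other base models expect different chat layouts, which is why the template is kept configurable through --thread_template.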

- Q: Why do you translate the full OASST1/2 dataset first? Wouldn't it be faster to only translate highest ranked threads?

- A: While you can gain quite a lot in terms of throughput time by first creating the threads and then translating them, we provide full OASST1/2 translations to the community as we believe they can be useful on their own.

- Q: How well do the fine-tunes perform compared to vanilla LLaMa3?

- A: While we do not have formal benchmarks, getting LLaMa3 to consistently speak a language other than English to begin with is challenging if not impossible. The non-English language it does produce is often grammatically broken. Our fine-tunes do not show this behavior.

- Q: Can I use other frameworks for fine-tuning?

- A: Yes you can, we use Axolotl for training on multi-GPU setups.

- Q: Can I mix different translation models?

- A: Absolutely, we think it might even increase performance to have translation done by multiple models. You can achieve this by stopping a translation early and continuing from the checkpoints by rerunning the translate script with a different translation model.

We are actively looking for funding to democratize AI and advance its applications. Contact us at [email protected] if you want to invest.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for LLaMa2lang

Similar Open Source Tools

LLaMa2lang

This repository contains convenience scripts to finetune LLaMa3-8B (or any other foundation model) for chat towards any language (that isn't English). The rationale behind this is that LLaMa3 is trained on primarily English data and while it works to some extent for other languages, its performance is poor compared to English.

LLaMa2lang

LLaMa2lang is a repository containing convenience scripts to finetune LLaMa3-8B (or any other foundation model) for chat towards any language that isn't English. The repository aims to improve the performance of LLaMa3 for non-English languages by combining fine-tuning with RAG. Users can translate datasets, extract threads, turn threads into prompts, and finetune models using QLoRA and PEFT. Additionally, the repository supports translation models like OPUS, M2M, MADLAD, and base datasets like OASST1 and OASST2. The process involves loading datasets, translating them, combining checkpoints, and running inference using the newly trained model. The repository also provides benchmarking scripts to choose the right translation model for a target language.

olmocr

olmOCR is a toolkit designed for training language models to work with PDF documents in real-world scenarios. It includes various components such as a prompting strategy for natural text parsing, an evaluation toolkit for comparing pipeline versions, filtering by language and SEO spam removal, finetuning code for specific models, processing PDFs through a finetuned model, and viewing documents created from PDFs. The toolkit requires a recent NVIDIA GPU with at least 20 GB of RAM and 30GB of free disk space. Users can install dependencies, set up a conda environment, and utilize olmOCR for tasks like converting single or multiple PDFs, viewing extracted text, and running batch inference pipelines.

MARS5-TTS

MARS5 is a novel English speech model (TTS) developed by CAMB.AI, featuring a two-stage AR-NAR pipeline with a unique NAR component. The model can generate speech for various scenarios like sports commentary and anime with just 5 seconds of audio and a text snippet. It allows steering prosody using punctuation and capitalization in the transcript. Speaker identity is specified using an audio reference file, enabling 'deep clone' for improved quality. The model can be used via torch.hub or HuggingFace, supporting both shallow and deep cloning for inference. Checkpoints are provided for AR and NAR models, with hardware requirements of 750M+450M params on GPU. Contributions to improve model stability, performance, and reference audio selection are welcome.

searchGPT

searchGPT is an open-source project that aims to build a search engine based on Large Language Model (LLM) technology to provide natural language answers. It supports web search with real-time results, file content search, and semantic search from sources like the Internet. The tool integrates LLM technologies such as OpenAI and GooseAI, and offers an easy-to-use frontend user interface. The project is designed to provide grounded answers by referencing real-time factual information, addressing the limitations of LLM's training data. Contributions, especially from frontend developers, are welcome under the MIT License.

open-source-slack-ai

This repository provides a ready-to-run basic Slack AI solution that allows users to summarize threads and channels using OpenAI. Users can generate thread summaries, channel overviews, channel summaries since a specific time, and full channel summaries. The tool is powered by GPT-3.5-Turbo and an ensemble of NLP models. It requires Python 3.8 or higher, an OpenAI API key, Slack App with associated API tokens, Poetry package manager, and ngrok for local development. Users can customize channel and thread summaries, run tests with coverage using pytest, and contribute to the project for future enhancements.

Woodpecker

Woodpecker is a tool designed to correct hallucinations in Multimodal Large Language Models (MLLMs) by introducing a training-free method that picks out and corrects inconsistencies between generated text and image content. It consists of five stages: key concept extraction, question formulation, visual knowledge validation, visual claim generation, and hallucination correction. Woodpecker can be easily integrated with different MLLMs and provides interpretable results by accessing intermediate outputs of the stages. The tool has shown significant improvements in accuracy over baseline models like MiniGPT-4 and mPLUG-Owl.

LlamaEdge

The LlamaEdge project makes it easy to run LLM inference apps and create OpenAI-compatible API services for the Llama2 series of LLMs locally. It provides a Rust+Wasm stack for fast, portable, and secure LLM inference on heterogeneous edge devices. The project includes source code for text generation, chatbot, and API server applications, supporting all LLMs based on the llama2 framework in the GGUF format. LlamaEdge is committed to continuously testing and validating new open-source models and offers a list of supported models with download links and startup commands. It is cross-platform, supporting various OSes, CPUs, and GPUs, and provides troubleshooting tips for common errors.

project_alice

Alice is an agentic workflow framework that integrates task execution and intelligent chat capabilities. It provides a flexible environment for creating, managing, and deploying AI agents for various purposes, leveraging a microservices architecture with MongoDB for data persistence. The framework consists of components like APIs, agents, tasks, and chats that interact to produce outputs through files, messages, task results, and URL references. Users can create, test, and deploy agentic solutions in a human-language framework, making it easy to engage with by both users and agents. The tool offers an open-source option, user management, flexible model deployment, and programmatic access to tasks and chats.

air-script

AirScript is a domain-specific language for expressing AIR constraints for STARKs, with the goal of enabling writing and auditing constraints without the need to learn a specific programming language. It also aims to perform automated optimizations and output constraint evaluator code in multiple target languages. The project is organized into several crates including Parser, MIR, AIR, Winterfell code generator, ACE code generator, and AirScript CLI for transpiling AIRs to target languages.

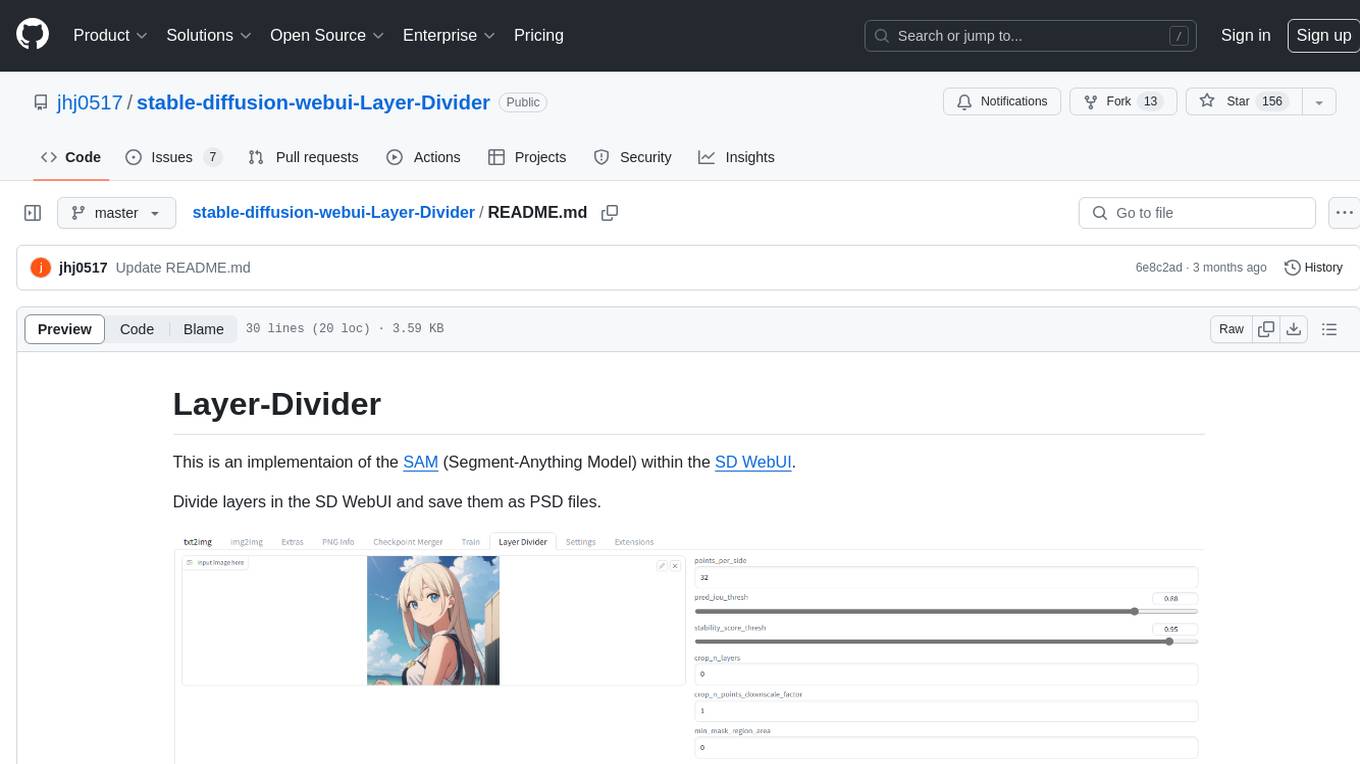

stable-diffusion-webui-Layer-Divider

This repository contains an implementation of the Segment-Anything Model (SAM) within the SD WebUI. It allows users to divide layers in the SD WebUI and save them as PSD files. Users can adjust parameters, click 'Generate', and view the output below. A PSD file will be saved in the designated folder. The tool provides various parameters for customization, such as points_per_side, pred_iou_thresh, stability_score_thresh, crops_n_layers, crop_n_points_downscale_factor, and min_mask_region_area.

burn

Burn is a new comprehensive dynamic Deep Learning Framework built using Rust with extreme flexibility, compute efficiency and portability as its primary goals.

kafka-ml

Kafka-ML is a framework designed to manage the pipeline of Tensorflow/Keras and PyTorch machine learning models on Kubernetes. It enables the design, training, and inference of ML models with datasets fed through Apache Kafka, connecting them directly to data streams like those from IoT devices. The Web UI allows easy definition of ML models without external libraries, catering to both experts and non-experts in ML/AI.

vscode-pddl

The vscode-pddl extension provides comprehensive support for Planning Domain Description Language (PDDL) in Visual Studio Code. It enables users to model planning domains, validate them, industrialize planning solutions, and run planners. The extension offers features like syntax highlighting, auto-completion, plan visualization, plan validation, plan happenings evaluation, search debugging, and integration with Planning.Domains. Users can create PDDL files, run planners, visualize plans, and debug search algorithms efficiently within VS Code.

Guardrails

Guardrails is a security tool designed to help developers identify and fix security vulnerabilities in their code. It provides automated scanning and analysis of code repositories to detect potential security issues, such as sensitive data exposure, injection attacks, and insecure configurations. By integrating Guardrails into the development workflow, teams can proactively address security concerns and reduce the risk of security breaches. The tool offers detailed reports and actionable recommendations to guide developers in remediation efforts, ultimately improving the overall security posture of the codebase. Guardrails supports multiple programming languages and frameworks, making it versatile and adaptable to different development environments. With its user-friendly interface and seamless integration with popular version control systems, Guardrails empowers developers to prioritize security without compromising productivity.

neutone_sdk

The Neutone SDK is a tool designed for researchers to wrap their own audio models and run them in a DAW using the Neutone Plugin. It simplifies the process by allowing models to be built using PyTorch and minimal Python code, eliminating the need for extensive C++ knowledge. The SDK provides support for buffering inputs and outputs, sample rate conversion, and profiling tools for model performance testing. It also offers examples, notebooks, and a submission process for sharing models with the community.

For similar tasks

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.
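
To make the sampling terms above concrete, here is a minimal sketch of top-k filtering, top-p (nucleus) filtering, and a repetition penalty applied to one step of logits. It is plain standard-library Python written for illustration only: it is not the onnxruntime-genai API, and every function name and default value below is a hypothetical choice, not something taken from the library.

```python
import math
import random


def softmax(logits):
    """Convert raw logits to probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def apply_repetition_penalty(logits, generated, penalty=1.2):
    """Lower the score of token ids that were already generated.

    Assumed rule (one common variant): positive logits are divided by the
    penalty, negative logits are multiplied by it.
    """
    out = list(logits)
    for tok in set(generated):
        out[tok] = out[tok] / penalty if out[tok] > 0 else out[tok] * penalty
    return out


def top_k_top_p_filter(logits, top_k=0, top_p=1.0):
    """Return {token_id: prob} for tokens surviving top-k, then top-p.

    top_k=0 disables the top-k cut; top_p=1.0 disables the nucleus cut.
    The surviving probabilities are renormalized to sum to 1.
    """
    probs = softmax(logits)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    if top_k > 0:
        ranked = ranked[:top_k]
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}


def sample_next_token(logits, generated, top_k=0, top_p=1.0, penalty=1.0, rng=random):
    """One step of the loop: penalize repeats, filter, then sample a token id."""
    logits = apply_repetition_penalty(logits, generated, penalty)
    dist = top_k_top_p_filter(logits, top_k, top_p)
    tokens = list(dist)
    return rng.choices(tokens, weights=[dist[t] for t in tokens], k=1)[0]
```

Setting `top_k=1` reduces the sampler to greedy search, since only the highest-probability token survives the filter. A real generation loop would call a function like `sample_next_token` once per step, appending the result to `generated` and feeding it back to the model.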

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers:

* An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VSCode, etc.).
* A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant.
* Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI.
* Local model support through GPT4All, enabling use of generative AI models on consumer grade machines with ease and privacy.

khoj

Khoj is an open-source, personal AI assistant that extends your capabilities by creating always-available AI agents. You can share your notes and documents to extend your digital brain, and your AI agents have access to the internet, allowing you to incorporate real-time information. Khoj is accessible on Desktop, Emacs, Obsidian, Web, and WhatsApp, and you can share PDF, Markdown, org-mode, and Notion files, as well as GitHub repositories. You'll get fast, accurate semantic search on top of your docs, and your agents can create deeply personal images and understand your speech. Khoj is self-hostable and always will be.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules:

* **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers).
* **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG).
* **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task.

The different components can be composed together using the LangChain Expression Language (LCEL).

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

infinity

Infinity is an AI-native database designed for LLM applications, providing incredibly fast full-text and vector search capabilities. It supports a wide range of data types, including vectors, full-text, and structured data, and offers a fused search feature that combines multiple embeddings and full text. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. It achieves high performance, with 0.1 milliseconds query latency on million-scale vector datasets and up to 15K QPS.

For similar jobs

R2R

R2R (RAG to Riches) is a fast and efficient framework for serving high-quality Retrieval-Augmented Generation (RAG) to end users. The framework is designed with customizable pipelines and a feature-rich FastAPI implementation, enabling developers to quickly deploy and scale RAG-based applications. R2R was conceived to bridge the gap between local LLM experimentation and scalable production solutions. **R2R is to LangChain/LlamaIndex what NextJS is to React**. A JavaScript client for R2R deployments can be found here.

Key features:

* **🚀 Deploy**: Instantly launch production-ready RAG pipelines with streaming capabilities.
* **🧩 Customize**: Tailor your pipeline with intuitive configuration files.
* **🔌 Extend**: Enhance your pipeline with custom code integrations.
* **⚖️ Autoscale**: Scale your pipeline effortlessly in the cloud using SciPhi.
* **🤖 OSS**: Benefit from a framework developed by the open-source community, designed to simplify RAG deployment.

intel-extension-for-transformers

Intel® Extension for Transformers is an innovative toolkit designed to accelerate GenAI/LLM everywhere with the optimal performance of Transformer-based models on various Intel platforms, including Intel Gaudi2, Intel CPU, and Intel GPU. The toolkit provides the following key features and examples:

* Seamless user experience of model compressions on Transformer-based models by extending [Hugging Face transformers](https://github.com/huggingface/transformers) APIs and leveraging [Intel® Neural Compressor](https://github.com/intel/neural-compressor)
* Advanced software optimizations and unique compression-aware runtime (released with NeurIPS 2022's paper [Fast Distilbert on CPUs](https://arxiv.org/abs/2211.07715) and [QuaLA-MiniLM: a Quantized Length Adaptive MiniLM](https://arxiv.org/abs/2210.17114), and NeurIPS 2021's paper [Prune Once for All: Sparse Pre-Trained Language Models](https://arxiv.org/abs/2111.05754))
* Optimized Transformer-based model packages such as [Stable Diffusion](examples/huggingface/pytorch/text-to-image/deployment/stable_diffusion), [GPT-J-6B](examples/huggingface/pytorch/text-generation/deployment), [GPT-NEOX](examples/huggingface/pytorch/language-modeling/quantization#2-validated-model-list), [BLOOM-176B](examples/huggingface/pytorch/language-modeling/inference#BLOOM-176B), [T5](examples/huggingface/pytorch/summarization/quantization#2-validated-model-list), [Flan-T5](examples/huggingface/pytorch/summarization/quantization#2-validated-model-list), and end-to-end workflows such as [SetFit-based text classification](docs/tutorials/pytorch/text-classification/SetFit_model_compression_AGNews.ipynb) and [document level sentiment analysis (DLSA)](workflows/dlsa)
* [NeuralChat](intel_extension_for_transformers/neural_chat), a customizable chatbot framework to create your own chatbot within minutes by leveraging a rich set of [plugins](https://github.com/intel/intel-extension-for-transformers/blob/main/intel_extension_for_transformers/neural_chat/docs/advanced_features.md) such as [Knowledge Retrieval](./intel_extension_for_transformers/neural_chat/pipeline/plugins/retrieval/README.md), [Speech Interaction](./intel_extension_for_transformers/neural_chat/pipeline/plugins/audio/README.md), [Query Caching](./intel_extension_for_transformers/neural_chat/pipeline/plugins/caching/README.md), and [Security Guardrail](./intel_extension_for_transformers/neural_chat/pipeline/plugins/security/README.md). This framework supports Intel Gaudi2/CPU/GPU.
* [Inference](https://github.com/intel/neural-speed/tree/main) of Large Language Models (LLMs) in pure C/C++ with weight-only quantization kernels for Intel CPU and Intel GPU (TBD), supporting [GPT-NEOX](https://github.com/intel/neural-speed/tree/main/neural_speed/models/gptneox), [LLAMA](https://github.com/intel/neural-speed/tree/main/neural_speed/models/llama), [MPT](https://github.com/intel/neural-speed/tree/main/neural_speed/models/mpt), [FALCON](https://github.com/intel/neural-speed/tree/main/neural_speed/models/falcon), [BLOOM-7B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/bloom), [OPT](https://github.com/intel/neural-speed/tree/main/neural_speed/models/opt), [ChatGLM2-6B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/chatglm), [GPT-J-6B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/gptj), and [Dolly-v2-3B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/gptneox). It supports the AMX, VNNI, AVX512F and AVX2 instruction sets. Performance has been boosted on Intel CPUs, with a particular focus on the 4th generation Intel Xeon Scalable processor, codenamed [Sapphire Rapids](https://www.intel.com/content/www/us/en/products/docs/processors/xeon-accelerated/4th-gen-xeon-scalable-processors.html).

opening-up-chatgpt.github.io

This repository provides a curated list of open-source projects that implement instruction-tuned large language models (LLMs) with reinforcement learning from human feedback (RLHF). The projects are evaluated in terms of their openness across a predefined set of criteria in the areas of Availability, Documentation, and Access. The goal of this repository is to promote transparency and accountability in the development and deployment of LLMs.

LLaMa2lang

This repository contains convenience scripts to finetune LLaMa3-8B (or any other foundation model) for chat towards any language (that isn't English). The rationale behind this is that LLaMa3 is trained on primarily English data and while it works to some extent for other languages, its performance is poor compared to English.

ChatGLM3

ChatGLM3 is a conversational pretrained model jointly released by Zhipu AI and THU's KEG Lab. ChatGLM3-6B is the open-sourced model in the ChatGLM3 series. It inherits the advantages of its predecessors, such as fluent conversation and low deployment threshold. In addition, ChatGLM3-6B introduces the following features:

1. A stronger foundation model: ChatGLM3-6B's foundation model ChatGLM3-6B-Base employs more diverse training data, more sufficient training steps, and more reasonable training strategies. Evaluation on datasets from different perspectives, such as semantics, mathematics, reasoning, code, and knowledge, shows that ChatGLM3-6B-Base has the strongest performance among foundation models below 10B parameters.
2. More complete functional support: ChatGLM3-6B adopts a newly designed prompt format, which supports not only normal multi-turn dialogue, but also complex scenarios such as tool invocation (Function Call), code execution (Code Interpreter), and Agent tasks.
3. A more comprehensive open-source sequence: In addition to the dialogue model ChatGLM3-6B, the foundation model ChatGLM3-6B-Base, the long-text dialogue model ChatGLM3-6B-32K, and ChatGLM3-6B-128K, which further enhances the long-text comprehension ability, are also open-sourced.

All the above weights are completely open to academic research and are also allowed for free commercial use after filling out a questionnaire.

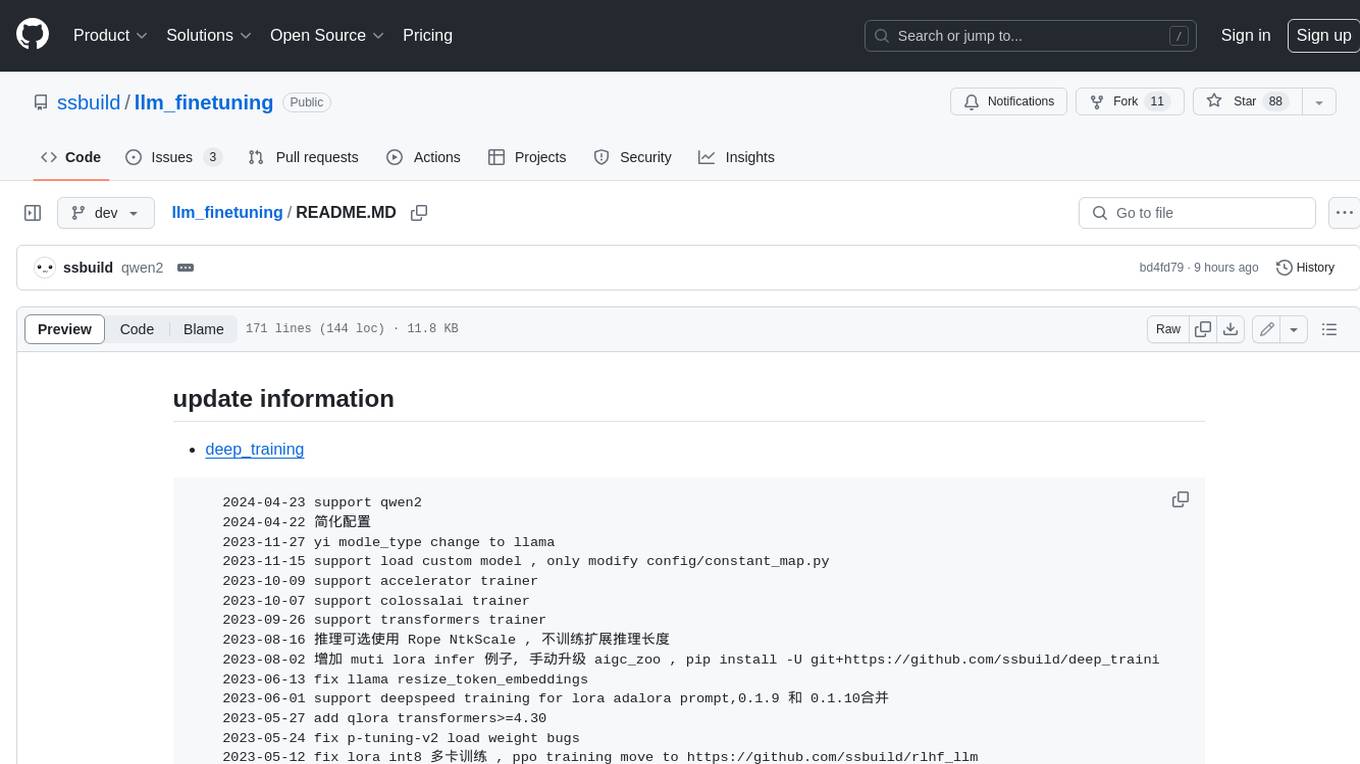

llm_finetuning

This repository provides a comprehensive set of tools for fine-tuning large language models (LLMs) using various techniques, including full parameter training, LoRA (Low-Rank Adaptation), and P-Tuning V2. It supports a wide range of LLM models, including Qwen, Yi, Llama, and others. The repository includes scripts for data preparation, training, and inference, making it easy for users to fine-tune LLMs for specific tasks. Additionally, it offers a collection of pre-trained models and provides detailed documentation and examples to guide users through the process.

ollama

Ollama is a lightweight, extensible framework for building and running language models on the local machine. It provides a simple API for creating, running, and managing models, as well as a library of pre-built models that can be easily used in a variety of applications. Ollama is designed to be easy to use and accessible to developers of all levels. It is open source and available for free on GitHub.

llama_ros

This repository provides a set of ROS 2 packages to integrate llama.cpp into ROS 2. By using the llama_ros packages, you can easily incorporate the powerful optimization capabilities of llama.cpp into your ROS 2 projects by running GGUF-based LLMs and VLMs.