Best AI tools for Text Summarization

20 - AI tool Sites

Long Summary

Long Summary is an AI tool that allows users to generate custom-length summaries from large texts with no length limits. It overcomes the constraints of regular AI models by providing accurate and coherent long summaries without losing essential details. The tool is ideal for businesses, professionals, and developers seeking detailed overviews without compromising critical information. Long Summary offers features such as handling large texts and files, generating custom-length summaries, and providing a developer API for integration into applications.

Tinq.ai

Tinq.ai is a natural language processing (NLP) tool that provides a range of text analysis capabilities through its API. It offers tools for tasks such as plagiarism checking, text summarization, sentiment analysis, named entity recognition, and article extraction. Tinq.ai's API can be integrated into applications to add NLP functionality, such as content moderation, sentiment analysis, and text rewriting.

Rytar

Rytar is an AI-powered writing platform that helps users generate unique, relevant, and high-quality content in seconds. It uses state-of-the-art AI writing models to generate articles, blog posts, website pages, and other types of content from just a headline or a few keywords. Rytar is designed to help users save time and effort in the content creation process, and to produce content that is optimized for SEO and readability.

AI Writer

This website provides a suite of AI-powered writing tools, including an AI writer, text summarizer, story writer, and headline generator. These tools can help you generate high-quality content quickly and easily.

Cognify Insights

Cognify Insights is an AI-powered research assistant that provides instant understanding of online content such as text, diagrams, tables, and graphs. With a simple drag and drop feature, users can quickly analyze any type of content without leaving their browsing tab. The tool offers valuable insights and helps users unlock information efficiently.

TLDR This

TLDR This is an online article summarizer tool that helps users quickly understand the essence of lengthy content. It uses AI to analyze any piece of text and summarize it automatically, in a way that makes it easy to read, understand, and act on. TLDR This also extracts essential metadata such as author and date information, related images, and the title. Additionally, it estimates the reading time for news articles and blog posts, ensuring users have all the necessary information consolidated in one place for efficient reading. TLDR This is designed for students, writers, teachers, institutions, journalists, and any internet user who needs to quickly understand the essence of lengthy content.
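
The reading-time estimate mentioned above can be approximated with a word count divided by a reading speed. This is a minimal sketch, not TLDR This's actual method: the function name `estimate_reading_minutes` and the 200 words-per-minute default are assumptions chosen for illustration.

```python
import math
import re

# Assumed average reading speed; not a value published by TLDR This.
WORDS_PER_MINUTE = 200


def estimate_reading_minutes(text: str, wpm: int = WORDS_PER_MINUTE) -> int:
    """Return an estimated reading time in whole minutes (never below 1)."""
    words = re.findall(r"\w+", text)
    return max(1, math.ceil(len(words) / wpm))


if __name__ == "__main__":
    article = "word " * 450
    print(estimate_reading_minutes(article), "min read")
```

Rounding up and clamping to one minute keeps very short articles from showing a zero-minute estimate.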

Text Summarizer

The website offers a free online text summarizer powered by AI, designed to condense lengthy texts efficiently. It caters to professionals, students, and researchers who need to extract key details from documents. The tool utilizes advanced AI algorithms to provide users with essential information quickly, enhancing learning and productivity. Users can easily summarize text by pasting it into the tool, generating clear and concise summaries for research or quick information retrieval. The AI-enhanced tool aims to improve efficiency in processing large volumes of text.

InfraNodus

InfraNodus is a text network visualization tool that helps users generate insights from any discourse by representing it as a network. It uses AI-powered algorithms to identify structural gaps in the text and suggest ways to bridge them. InfraNodus can be used for a variety of purposes, including research, creative writing, marketing, and SEO.
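
Representing a text as a network usually starts from word co-occurrence: words become nodes, and words that appear close together are linked by weighted edges. The sketch below shows that general idea only. It is not InfraNodus's algorithm, it does not attempt structural-gap detection, and the names `cooccurrence_graph` and `degree` and the `window` parameter are hypothetical.

```python
import re
from collections import Counter


def cooccurrence_graph(text: str, window: int = 2) -> Counter:
    """Count how often two different words appear within `window` tokens of each other."""
    tokens = re.findall(r"[a-z]+", text.lower())
    edges = Counter()
    for i, word in enumerate(tokens):
        # Look ahead at the next `window` tokens and link them to the current word.
        for other in tokens[i + 1 : i + 1 + window]:
            if other != word:
                edges[tuple(sorted((word, other)))] += 1
    return edges


def degree(edges: Counter) -> Counter:
    """Sum the edge weights attached to each word (weighted degree)."""
    totals = Counter()
    for (a, b), weight in edges.items():
        totals[a] += weight
        totals[b] += weight
    return totals


if __name__ == "__main__":
    graph = cooccurrence_graph("text network text graph", window=1)
    print(dict(graph))
    print(degree(graph).most_common(1))
```

Words with a high weighted degree are the hubs of the discourse; a real tool would add stop-word filtering and community detection on top of a graph like this.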

Summarizer AI

Summarizer AI is a free online tool that simplifies and condenses extensive text documents, articles, or any written content into concise, easily digestible summaries. This cutting-edge artificial intelligence (AI) technology aims to enhance productivity and comprehension by breaking down complex information into its most essential points, making it particularly useful for students, researchers, professionals, and anyone looking to quickly grasp the main ideas of lengthy texts. The platform is user-friendly, emphasizing privacy and security for its users. It enhances reading comprehension by highlighting key terms and facilitates efficient knowledge acquisition without compromising on data confidentiality. Summarizer AI stands out for its versatility, ease of use, and commitment to user privacy, making it an invaluable resource for efficient text analysis and summarization.

TLDRai

TLDRai.com is an AI tool designed to help users summarize any text into concise and easy-to-digest content, enabling them to free themselves from information overload. The tool utilizes AI technology to provide efficient text summarization services, making it a valuable resource for individuals seeking quick and accurate summaries of lengthy texts.

UpSum

UpSum is a text summarization tool that uses advanced AI technology to condense lengthy texts into concise summaries. It is designed to save users time and effort by extracting the key points and insights from documents, research papers, news articles, and other written content. UpSum's AI algorithm analyzes the text, identifies the most important sentences and phrases, and assembles them into a coherent summary that accurately represents the main ideas and key takeaways of the original text. The tool is easy to use: upload or paste your text, select the desired summary length, and click the summarize button. UpSum is available as a free web-based tool, as well as a premium subscription with additional features and capabilities.
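
Picking the most important sentences and reassembling them in order is the classic extractive approach to summarization. The following is a minimal sketch of that approach using word-frequency scoring. It is an assumption about the general technique, not UpSum's actual algorithm, and the `STOPWORDS` set and the function names are hypothetical.

```python
import re
from collections import Counter

# Tiny illustrative stop-word list; a real tool would use a much larger one.
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "is", "it",
             "that", "for", "on", "with", "as", "are", "was"}


def content_words(text: str) -> list:
    """Lowercase the text and keep only words that are not stop words."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def summarize(text: str, max_sentences: int = 2) -> str:
    """Keep the highest-scoring sentences, in their original order."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(content_words(text))

    def score(sentence: str) -> float:
        # Average document-wide frequency of the sentence's content words.
        tokens = content_words(sentence)
        return sum(freq[w] for w in tokens) / len(tokens) if tokens else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    chosen = sorted(ranked[:max_sentences])
    return " ".join(sentences[i] for i in chosen)


if __name__ == "__main__":
    sample = ("Solar power is growing fast. Solar panels convert sunlight into power. "
              "My cat likes naps. Cheaper solar panels make solar power popular.")
    print(summarize(sample, max_sentences=2))
```

The `max_sentences` argument plays the role of the "desired summary length" setting; off-topic sentences score low because their words are rare in the rest of the text.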

AI Summarizing Tool

The AI Summarizing Tool is a free online summary generator that uses advanced AI technology to quickly identify important points in text while maintaining the original context. Users can summarize various types of content such as articles, paragraphs, essays, and theses. The tool is developed with algorithms that ensure accuracy and efficiency in creating summaries without altering the original meaning of the content. It offers features like free usage, unlimited text summarization, support for multiple languages, integration with other writing tools, and precise summaries using AI technology.

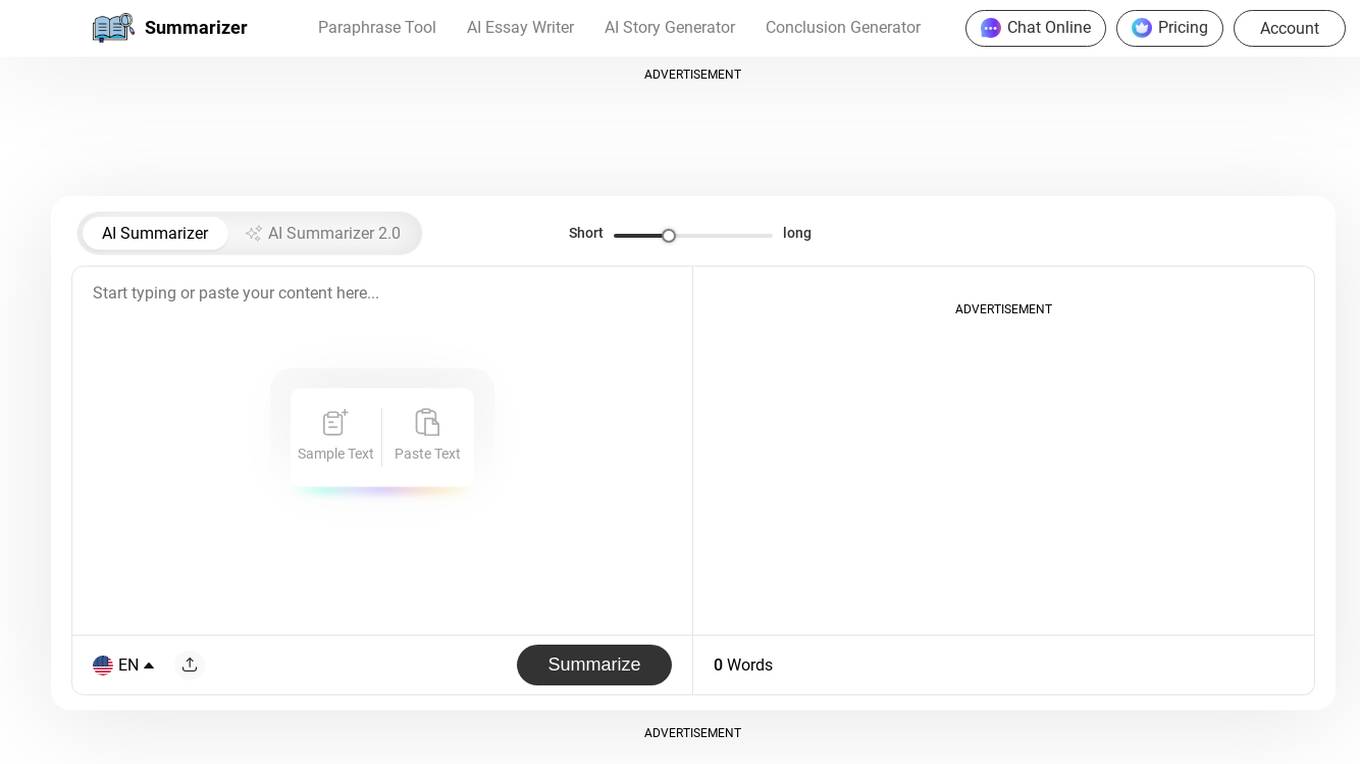

AI Summarizer

AI Summarizer is an online tool that uses state-of-the-art AI technology to shorten text while preserving all main points. It ensures accuracy and maintains original context, making it suitable for various types of content like essays or blog posts. Users can easily summarize text by typing or uploading content, with options to download, copy, or clear the summary. The tool offers features like setting summary length, showing bullets and best lines, and supporting multiple languages. It is known for its extensive word count limit, data safety, and readability-enhancing features.

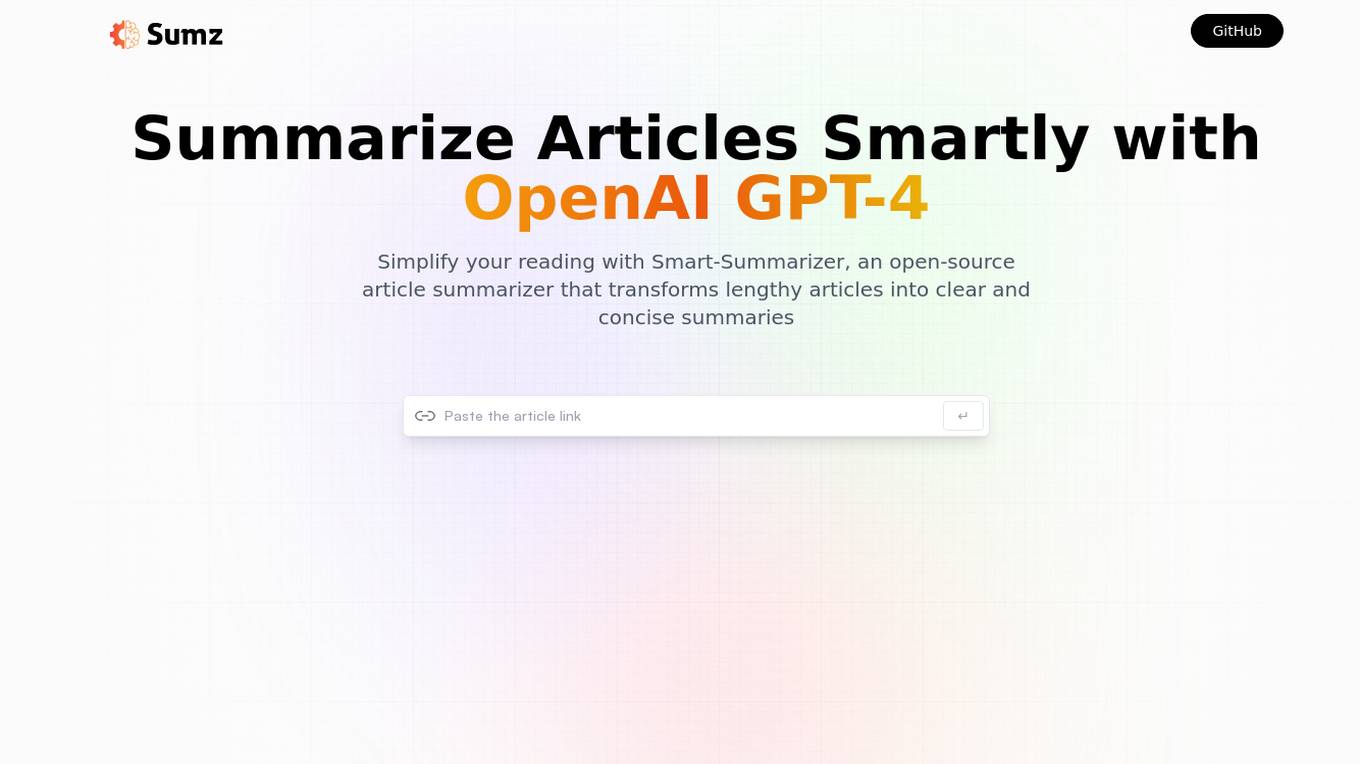

Smart-Summarizer

Smart-Summarizer is a powerful AI-powered tool that helps you summarize text quickly and easily. With its advanced algorithms, Smart-Summarizer can automatically extract the most important points from any piece of text, creating a concise and informative summary in seconds. Whether you're a student trying to condense your notes, a researcher needing to synthesize complex information, or a professional looking to save time on reading lengthy documents, Smart-Summarizer is the perfect tool for you.

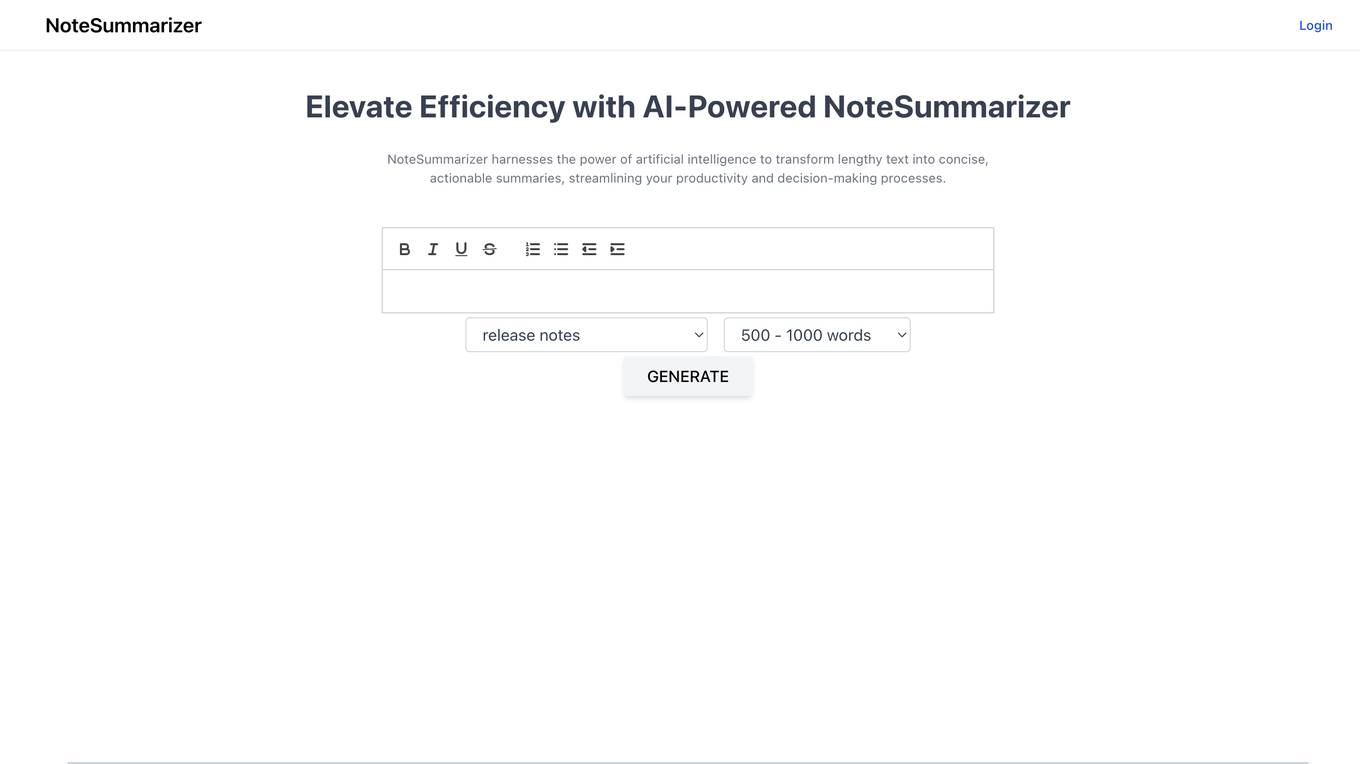

Note Summarizer

Note Summarizer is an AI-powered tool that helps you quickly and easily summarize large amounts of text. With its advanced natural language processing capabilities, Note Summarizer can extract the most important points from any document, article, or website, providing you with a concise and informative summary in seconds.

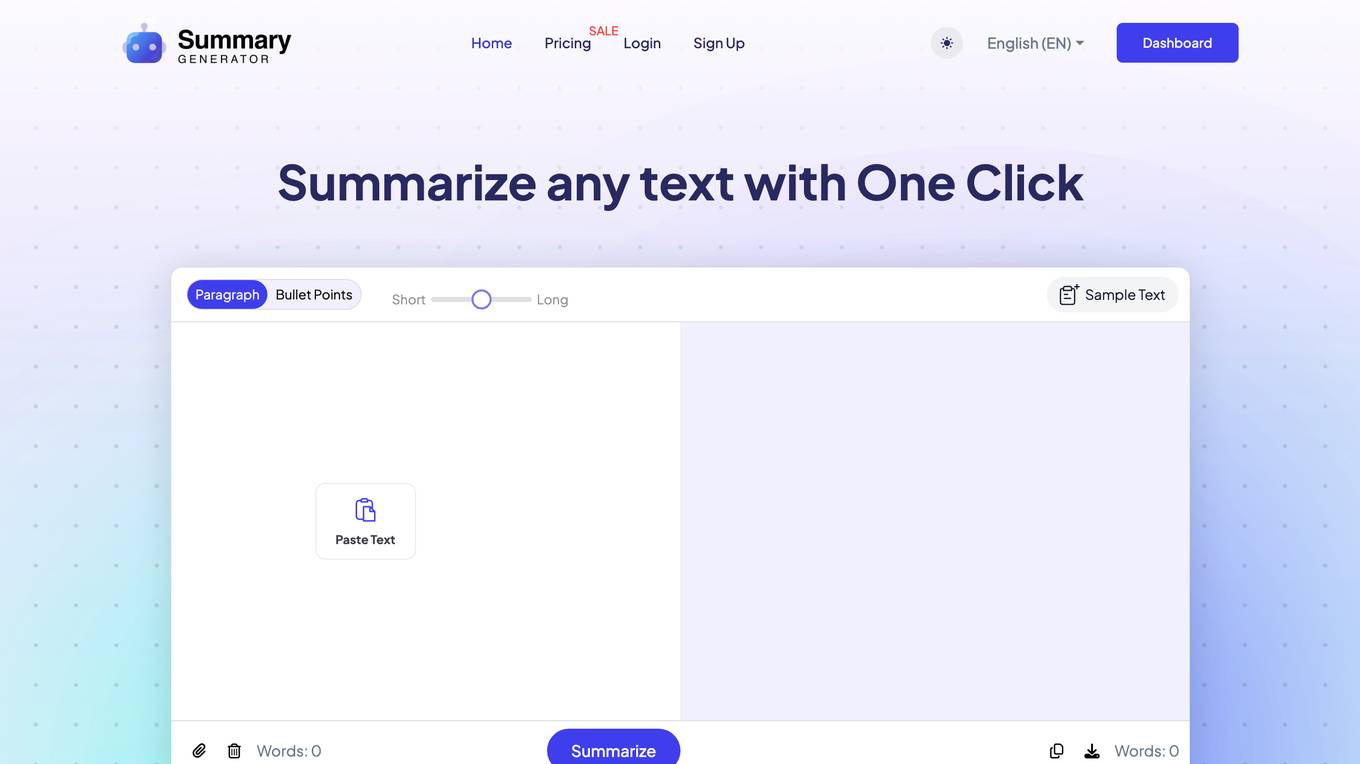

SummaryGenerator.io

SummaryGenerator.io is an AI-powered text summarizer that uses advanced algorithms and natural language processing to analyze the content and identify main ideas to generate relevant summaries. It generates summaries of varying lengths for any type of content.

Clarifai

Clarifai is a full-stack AI developer platform that provides a range of tools and services for building and deploying AI applications. The platform includes a variety of computer vision, natural language processing, and generative AI models, as well as tools for data preparation, model training, and model deployment. Clarifai is used by a variety of businesses and organizations, including Fortune 500 companies, startups, and government agencies.

ContextClue

ContextClue is an AI text analysis tool that offers enhanced document insights through features like text summarization, report generation, and LLM-driven semantic search. It helps users summarize multi-format content, automate document creation, and enhance research by understanding context and intent. ContextClue empowers users to efficiently analyze documents, extract insights, and generate content with unparalleled accuracy. The tool can be customized and integrated into existing workflows, making it suitable for various industries and tasks.

SumyAI

SumyAI is an AI-powered tool that helps users get 10x faster insights from YouTube videos. It condenses lengthy videos into key points for faster absorption, saving time and enhancing retention. SumyAI also provides summaries of events and conferences, podcasts and interviews, educational tutorials, product reviews, news reports, and entertainment.

Summate.it

Summate.it is a tool that uses OpenAI to quickly summarize web articles. It is simple and clean, and it can be used to summarize any web article by simply pasting the URL into the text box. Summate.it is a great way to quickly get the gist of an article without having to read the entire thing.

11 - Open Source Tools

llama_ros

This repository provides a set of ROS 2 packages to integrate llama.cpp into ROS 2. By using the llama_ros packages, you can easily incorporate the powerful optimization capabilities of llama.cpp into your ROS 2 projects by running GGUF-based LLMs and VLMs.

curated-transformers

Curated Transformers is a transformer library for PyTorch that provides state-of-the-art models composed of reusable components. It supports various transformer architectures, including encoders like ALBERT, BERT, and RoBERTa, and decoders like Falcon, Llama, and MPT. The library emphasizes consistent type annotations, minimal dependencies, and ease of use for education and research. It has been production-tested by Explosion and will be the default transformer implementation in spaCy 3.7.

intel-extension-for-transformers

Intel® Extension for Transformers is an innovative toolkit designed to accelerate GenAI/LLM everywhere with the optimal performance of Transformer-based models on various Intel platforms, including Intel Gaudi2, Intel CPU, and Intel GPU. The toolkit provides the following key features and examples:

* Seamless user experience of model compression on Transformer-based models by extending [Hugging Face transformers](https://github.com/huggingface/transformers) APIs and leveraging [Intel® Neural Compressor](https://github.com/intel/neural-compressor)
* Advanced software optimizations and a unique compression-aware runtime (released with the NeurIPS 2022 papers [Fast Distilbert on CPUs](https://arxiv.org/abs/2211.07715) and [QuaLA-MiniLM: a Quantized Length Adaptive MiniLM](https://arxiv.org/abs/2210.17114), and the NeurIPS 2021 paper [Prune Once for All: Sparse Pre-Trained Language Models](https://arxiv.org/abs/2111.05754))
* Optimized Transformer-based model packages such as [Stable Diffusion](examples/huggingface/pytorch/text-to-image/deployment/stable_diffusion), [GPT-J-6B](examples/huggingface/pytorch/text-generation/deployment), [GPT-NEOX](examples/huggingface/pytorch/language-modeling/quantization#2-validated-model-list), [BLOOM-176B](examples/huggingface/pytorch/language-modeling/inference#BLOOM-176B), [T5](examples/huggingface/pytorch/summarization/quantization#2-validated-model-list), [Flan-T5](examples/huggingface/pytorch/summarization/quantization#2-validated-model-list), and end-to-end workflows such as [SetFit-based text classification](docs/tutorials/pytorch/text-classification/SetFit_model_compression_AGNews.ipynb) and [document level sentiment analysis (DLSA)](workflows/dlsa)
* [NeuralChat](intel_extension_for_transformers/neural_chat), a customizable chatbot framework to create your own chatbot within minutes by leveraging a rich set of [plugins](https://github.com/intel/intel-extension-for-transformers/blob/main/intel_extension_for_transformers/neural_chat/docs/advanced_features.md) such as [Knowledge Retrieval](./intel_extension_for_transformers/neural_chat/pipeline/plugins/retrieval/README.md), [Speech Interaction](./intel_extension_for_transformers/neural_chat/pipeline/plugins/audio/README.md), [Query Caching](./intel_extension_for_transformers/neural_chat/pipeline/plugins/caching/README.md), and [Security Guardrail](./intel_extension_for_transformers/neural_chat/pipeline/plugins/security/README.md). This framework supports Intel Gaudi2/CPU/GPU.
* [Inference](https://github.com/intel/neural-speed/tree/main) of Large Language Models (LLMs) in pure C/C++ with weight-only quantization kernels for Intel CPU and Intel GPU (TBD), supporting [GPT-NEOX](https://github.com/intel/neural-speed/tree/main/neural_speed/models/gptneox), [LLAMA](https://github.com/intel/neural-speed/tree/main/neural_speed/models/llama), [MPT](https://github.com/intel/neural-speed/tree/main/neural_speed/models/mpt), [FALCON](https://github.com/intel/neural-speed/tree/main/neural_speed/models/falcon), [BLOOM-7B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/bloom), [OPT](https://github.com/intel/neural-speed/tree/main/neural_speed/models/opt), [ChatGLM2-6B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/chatglm), [GPT-J-6B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/gptj), and [Dolly-v2-3B](https://github.com/intel/neural-speed/tree/main/neural_speed/models/gptneox). It supports the AMX, VNNI, AVX512F and AVX2 instruction sets. We've boosted the performance of Intel CPUs, with a particular focus on the 4th generation Intel Xeon Scalable processor, codenamed [Sapphire Rapids](https://www.intel.com/content/www/us/en/products/docs/processors/xeon-accelerated/4th-gen-xeon-scalable-processors.html).

bert4torch

**bert4torch** is a high-level framework for training and deploying transformer models in PyTorch. It provides a simple and efficient API for building, training, and evaluating transformer models, and supports a wide range of pre-trained models, including BERT, RoBERTa, ALBERT, XLNet, and GPT-2. bert4torch also includes a number of useful features, such as data loading, tokenization, and model evaluation. It is a powerful and versatile tool for natural language processing tasks.

lmdeploy

LMDeploy is a toolkit for compressing, deploying, and serving LLMs, developed by the MMRazor and MMDeploy teams. It has the following core features:

* **Efficient Inference**: LMDeploy delivers up to 1.8x higher request throughput than vLLM by introducing key features such as persistent batch (a.k.a. continuous batching), blocked KV cache, dynamic split & fuse, tensor parallelism, and high-performance CUDA kernels.
* **Effective Quantization**: LMDeploy supports weight-only and k/v quantization, and the 4-bit inference performance is 2.4x higher than FP16. The quantization quality has been confirmed via OpenCompass evaluation.
* **Effortless Distribution Server**: Leveraging the request distribution service, LMDeploy facilitates an easy and efficient deployment of multi-model services across multiple machines and cards.
* **Interactive Inference Mode**: By caching the k/v of attention during multi-round dialogue processes, the engine remembers dialogue history, thus avoiding repetitive processing of historical sessions.

fastllm

FastLLM is a high-performance large model inference library implemented in pure C++ with no third-party dependencies. Models of 6-7B size can run smoothly on Android devices. Deployment communication QQ group: 831641348

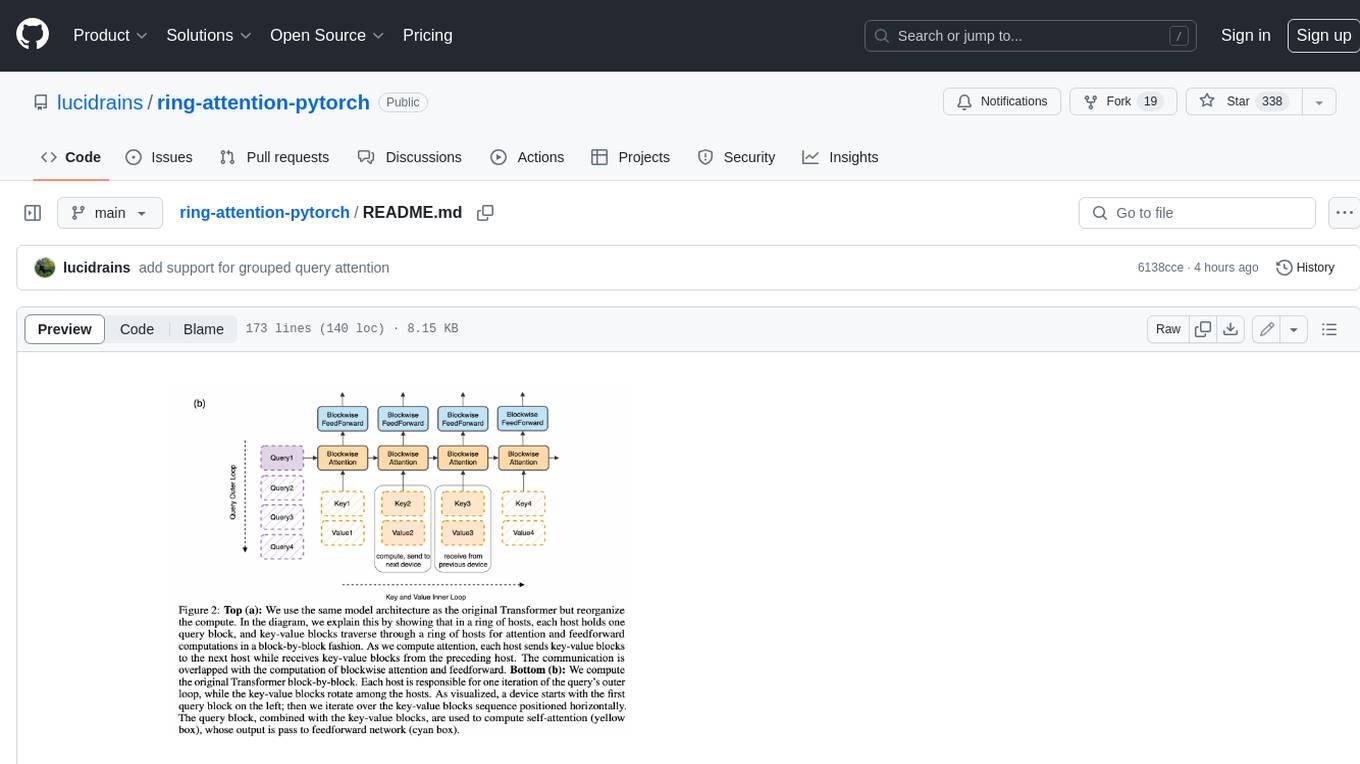

ring-attention-pytorch

This repository contains an implementation of Ring Attention, a technique for processing large sequences in transformers. Ring Attention splits the data across the sequence dimension and applies ring reduce to the processing of the tiles of the attention matrix, similar to flash attention. It also includes support for Striped Attention, a follow-up paper that permutes the sequence for better workload balancing for autoregressive transformers, and grouped query attention, which saves on communication costs during the ring reduce. The repository includes a CUDA version of the flash attention kernel, which is used for the forward and backward passes of the ring attention. It also includes logic for splitting the sequence evenly among ranks, either within the attention function or in the external ring transformer wrapper, and basic test cases with two processes to check for equivalent output and gradients.
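
Processing the attention matrix tile by tile relies on a running softmax that can be updated one key/value block at a time, which is the same accumulation trick used by flash attention. The sketch below illustrates only that accumulation for a single query on a single process, in plain Python. It is not code from the repository, it models no ring communication between ranks, and the function names are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def full_attention(q, keys, values):
    """Reference: softmax(q . K) applied to V, computed over all keys at once."""
    scores = [dot(q, k) for k in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [sum(w * v[d] for w, v in zip(weights, values)) / total
            for d in range(len(values[0]))]


def blockwise_attention(q, keys, values, block_size):
    """Same result, but keys/values are consumed one block at a time."""
    dim = len(values[0])
    running_max = float("-inf")  # largest score seen so far
    denom = 0.0                  # running softmax denominator
    acc = [0.0] * dim            # running weighted sum of values
    for start in range(0, len(keys), block_size):
        k_block = keys[start:start + block_size]
        v_block = values[start:start + block_size]
        scores = [dot(q, k) for k in k_block]
        new_max = max(running_max, max(scores))
        # Rescale what was accumulated under the old maximum (0.0 on the first block).
        scale = math.exp(running_max - new_max)
        denom *= scale
        acc = [a * scale for a in acc]
        for s, v in zip(scores, v_block):
            w = math.exp(s - new_max)
            denom += w
            for d in range(dim):
                acc[d] += w * v[d]
        running_max = new_max
    return [a / denom for a in acc]


if __name__ == "__main__":
    q = [1.0, 0.5]
    keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -1.0], [2.0, 0.3]]
    values = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0], [9.0, 10.0]]
    print(full_attention(q, keys, values))
    print(blockwise_attention(q, keys, values, block_size=2))
```

In a ring setup each rank would hold one block of keys and values and pass it to its neighbor after every step, so the loop over blocks becomes a loop over communication rounds while the accumulation stays the same.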

LLaMa2lang

This repository contains convenience scripts to finetune LLaMa3-8B (or any other foundation model) for chat towards any language (that isn't English). The rationale behind this is that LLaMa3 is trained on primarily English data and while it works to some extent for other languages, its performance is poor compared to English.

FlagEmbedding

FlagEmbedding focuses on retrieval-augmented LLMs, consisting of the following projects currently:

* **Long-Context LLM**: Activation Beacon
* **Fine-tuning of LM**: LM-Cocktail
* **Embedding Model**: Visualized-BGE, BGE-M3, LLM Embedder, BGE Embedding
* **Reranker Model**: llm rerankers, BGE Reranker
* **Benchmark**: C-MTEB

edenai-apis

Eden AI aims to simplify the use and deployment of AI technologies by providing a single API that connects to all the best AI engines. With the rise of **AI as a Service**, many companies provide off-the-shelf trained models that you can access directly through an API. These companies are either the tech giants (Google, Microsoft, Amazon) or smaller, more specialized companies. Some of the best known are DeepL (translation), OpenAI (text and image analysis), and AssemblyAI (speech analysis). There are **hundreds of companies** doing this. We're bringing the best ones together **in one place**!

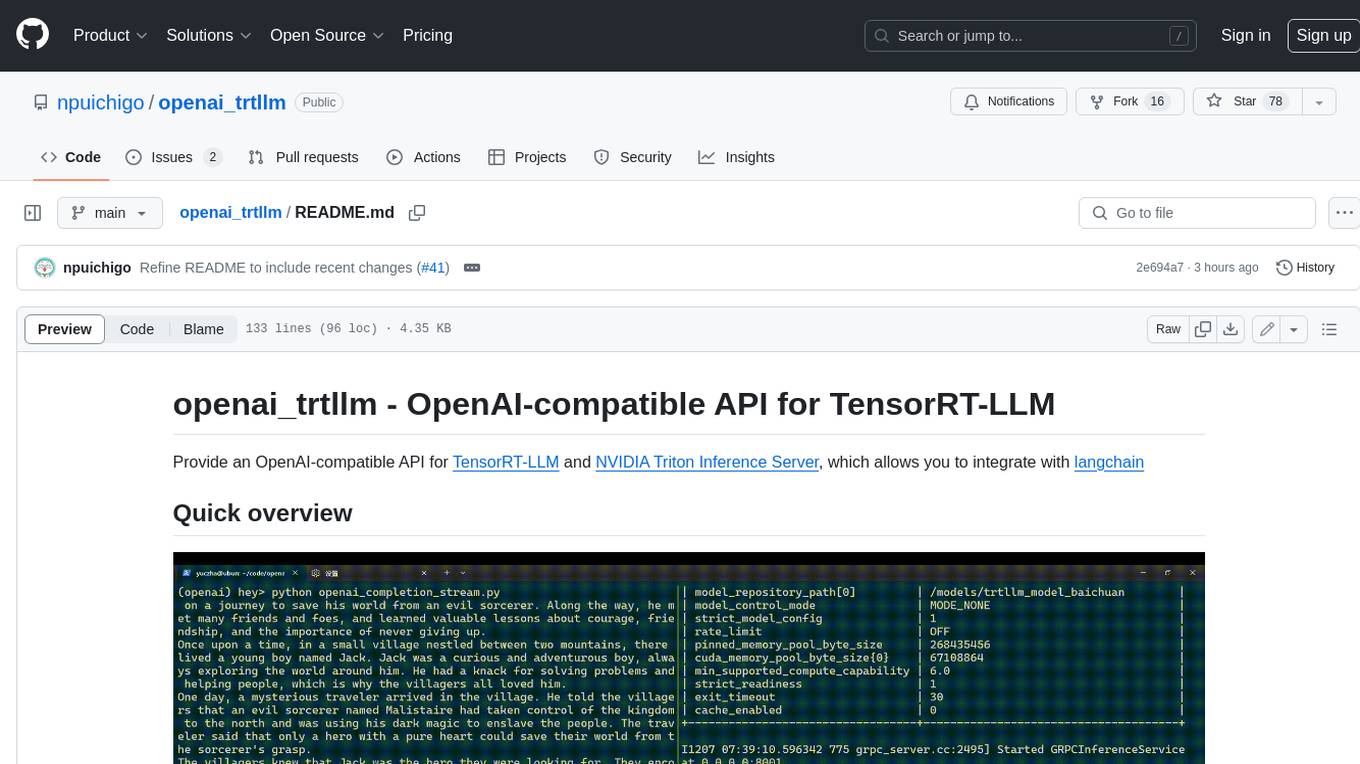

openai_trtllm

An OpenAI-compatible API for TensorRT-LLM and NVIDIA Triton Inference Server, which allows you to integrate with LangChain.

20 - OpenAI Gpts

Text Zusammenfassen

Summarizing text saves time and extracts the key messages. Use our service to summarize texts effectively.

Succinct Summarizer

Summarizes texts in various styles so they are effective for different stakeholders.

Blogsmith JP

Friendly copywriter for blogs, using provided text, with Japanese support and summaries.

Ringkesan

Summarizes and extracts key points from texts, articles, videos, documents, and more.

Résumeur GPT

This GPT will summarize your text to keep only the essentials. It also supports links (provided the site has not opted out).

Notes GPT

Paste your notes in here and I'll reorganize your hastily written notes, write you a summary, and give you actionable insights.

Tale Spinner

Curated content creator for language learning. Read what you want in ANY language.