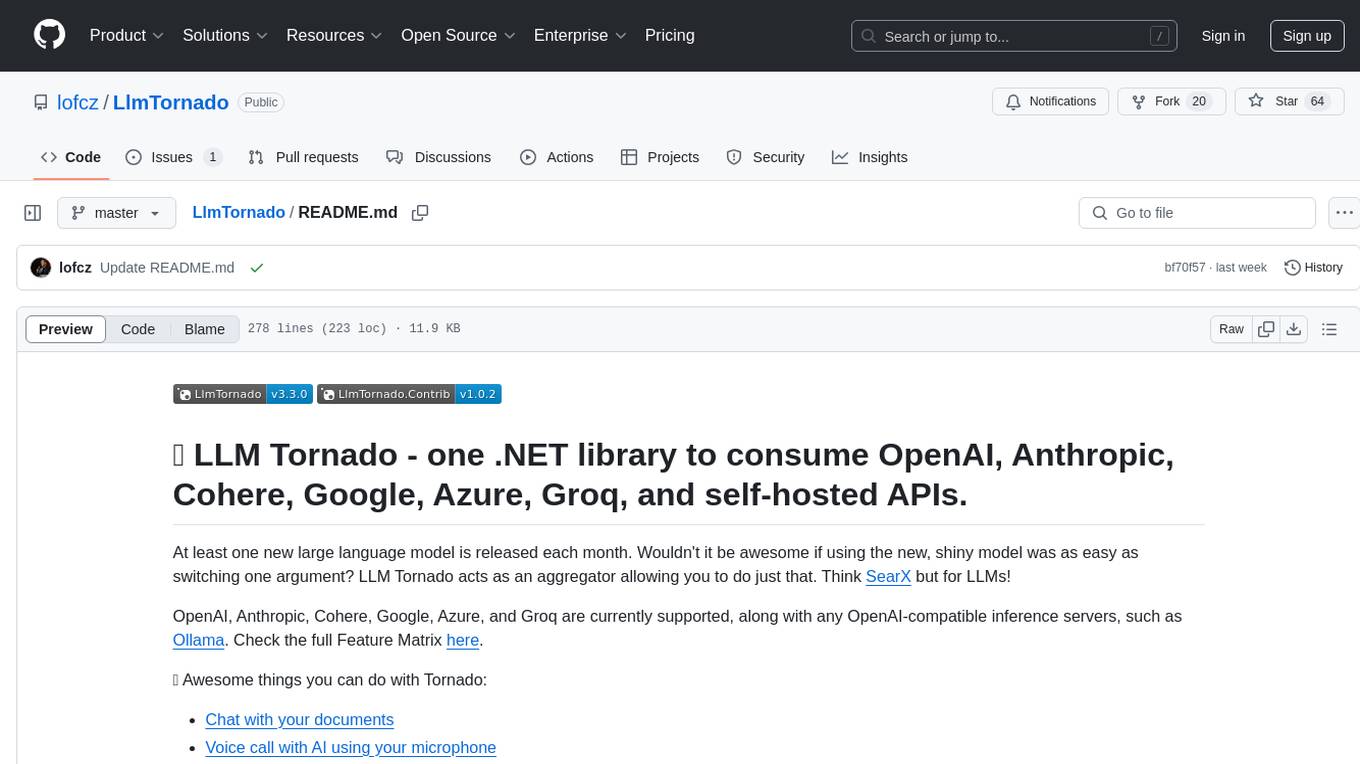

LlmTornado

The .NET library to build AI systems with 100+ LLM APIs: Anthropic, Azure, Cohere, DeepInfra, DeepSeek, Google, Groq, Mistral, Ollama, OpenAI, OpenRouter, Perplexity, vLLM, Voyage, xAI, and many more!


LLM Tornado is a .NET library designed to simplify the consumption of various large language models (LLMs) from providers like OpenAI, Anthropic, Cohere, Google, Azure, Groq, and self-hosted APIs. It acts as an aggregator, allowing users to switch between LLM providers by changing a single argument. Users can perform tasks such as chatting with documents, voice calling with AI, orchestrating assistants, generating images, and more. The library exposes provider-specific capabilities through vendor extensions, making it easy to integrate and use multiple LLM providers simultaneously.

README:

Build AI agents and multi-agent systems in minutes with one toolkit and the broadest Provider support.

- Use Any Provider: All you need to know is the model's name; we handle the rest. Built-in: Anthropic, Azure, Cohere, DeepInfra, DeepSeek, Google, Groq, Mistral, Ollama, OpenAI, OpenRouter, Perplexity, Voyage, xAI. Check the full Feature Matrix here.

- First-class Local Deployments: Run with vLLM, Ollama, or LocalAI with integrated support for request transformations.

- Multi-Agent Systems: Toolkit for the orchestration of multiple collaborating specialist agents.

- Maximize Request Success Rate: If enabled, we keep track of which parameters are supported by which models, how long the reasoning context can be, etc., and silently modify your requests to comply with rules enforced by a diverse set of Providers.

- Leverage Multiple APIs: Non-standard features from all major Providers are carefully mapped, documented, and ready to use via strongly-typed code.

- Fully Multimodal: Text, images, videos, documents, URLs, and audio inputs are supported.

- MCP Compatible: Seamlessly integrate Model Context Protocol using the official .NET SDK and the LlmTornado.Mcp adapter.

- Enterprise Ready: Preview any request before committing to it. Automatic redaction of secrets in outputs. Stable APIs.
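The "Maximize Request Success Rate" item describes how requests are adjusted to fit what each model accepts. The example below is a minimal sketch of that idea, assuming a hand-written rules table. The record types (`SketchRequest`, `ModelRules`), the model names, and the limits are all hypothetical. They do not reflect Tornado's internal rules or API.

```csharp
#nullable enable
using System;
using System.Collections.Generic;

// Hypothetical request shape for this sketch; not Tornado's ChatRequest.
public sealed record SketchRequest(string Model, double? Temperature, int? ReasoningBudget);

// Hypothetical per-model capabilities; the values below are illustrative only.
public sealed record ModelRules(bool SupportsTemperature, int MaxReasoningBudget);

public static class RequestSanitizer
{
    private static readonly Dictionary<string, ModelRules> Rules = new()
    {
        ["reasoning-model"] = new ModelRules(SupportsTemperature: false, MaxReasoningBudget: 32_000),
        // 0 means the model has no reasoning budget, so the parameter is dropped
        ["chat-model"] = new ModelRules(SupportsTemperature: true, MaxReasoningBudget: 0),
    };

    // Drops or clamps parameters the target model is known not to accept.
    public static SketchRequest Sanitize(SketchRequest request)
    {
        if (!Rules.TryGetValue(request.Model, out ModelRules? rules))
        {
            return request; // unknown model: send the request unchanged
        }

        double? temperature = rules.SupportsTemperature ? request.Temperature : null;

        int? budget = null;
        if (request.ReasoningBudget is int requested && rules.MaxReasoningBudget > 0)
        {
            budget = Math.Min(requested, rules.MaxReasoningBudget); // clamp to the model's limit
        }

        return request with { Temperature = temperature, ReasoningBudget = budget };
    }
}
```

The sketch returns a modified copy rather than throwing, which mirrors the "silently modify" behaviour described above. A real implementation would need an up-to-date capability table per provider.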

- Chat with your documents

- Make multiple-speaker podcasts

- Voice call with AI using your microphone

- Orchestrate Assistants

- Generate images

- Summarize a video (local file / YouTube)

- Turn text & images into high quality embeddings

- Transcribe audio in real time

... and a lot more! Now, instead of relying on one LLM provider, you can combine the unique strengths of many.

Install LLM Tornado via NuGet:

```bash
dotnet add package LlmTornado.Toolkit
```

Optional addons:

```bash
dotnet add package LlmTornado.Mcp # Model Context Protocol (MCP) integration
dotnet add package LlmTornado.Contrib # productivity, quality of life enhancements
```

Inferencing across multiple providers is as easy as changing the ChatModel argument. A Tornado instance can be constructed with multiple API keys; the correct key is then used automatically based on the model:

```csharp
TornadoApi api = new TornadoApi([
    // note: delete lines with providers you won't be using
    new (LLmProviders.OpenAi, "OPEN_AI_KEY"),
    new (LLmProviders.Anthropic, "ANTHROPIC_KEY"),
    new (LLmProviders.Cohere, "COHERE_KEY"),
    new (LLmProviders.Google, "GOOGLE_KEY"),
    new (LLmProviders.Groq, "GROQ_KEY"),
    new (LLmProviders.DeepSeek, "DEEP_SEEK_KEY"),
    new (LLmProviders.Mistral, "MISTRAL_KEY"),
    new (LLmProviders.XAi, "XAI_KEY"),
    new (LLmProviders.Perplexity, "PERPLEXITY_KEY"),
    new (LLmProviders.Voyage, "VOYAGE_KEY"),
    new (LLmProviders.DeepInfra, "DEEP_INFRA_KEY"),
    new (LLmProviders.OpenRouter, "OPEN_ROUTER_KEY")
]);

// this sample iterates a bunch of models, gives each the same task, and prints results.
List<ChatModel> models = [
    ChatModel.OpenAi.O3.Mini, ChatModel.Anthropic.Claude37.Sonnet,
    ChatModel.Cohere.Command.RPlus, ChatModel.Google.Gemini.Gemini2Flash001,
    ChatModel.Groq.Meta.Llama370B, ChatModel.DeepSeek.Models.Chat,
    ChatModel.Mistral.Premier.MistralLarge, ChatModel.XAi.Grok.Grok2241212,
    ChatModel.Perplexity.Sonar.Default
];

foreach (ChatModel model in models)
{
    string? response = await api.Chat.CreateConversation(model)
        .AppendSystemMessage("You are a fortune teller.")
        .AppendUserInput("What will my future bring?")
        .GetResponse();

    Console.WriteLine(response);
}
```

💡 Instead of passing in a strongly typed model, you can pass a string, for example await api.Chat.CreateConversation("gpt-4o"), and Tornado will automatically resolve the provider.
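Both the multi-key constructor and the string overload depend on mapping a model to its provider. The example below is a minimal sketch of how such a lookup could work, assuming a simple name-prefix table. `SketchProvider`, `ModelNameResolver`, and the prefixes are hypothetical. They are not Tornado's actual resolution logic.

```csharp
#nullable enable
using System;
using System.Collections.Generic;

// Hypothetical provider enum for this sketch; Tornado's real enum is LLmProviders.
public enum SketchProvider { OpenAi, Anthropic, Google, Mistral, DeepSeek, XAi }

public static class ModelNameResolver
{
    // Illustrative prefix table (an assumption, not Tornado's actual rules).
    private static readonly (string Prefix, SketchProvider Provider)[] Prefixes =
    {
        ("gpt-", SketchProvider.OpenAi),
        ("o3", SketchProvider.OpenAi),
        ("claude-", SketchProvider.Anthropic),
        ("gemini-", SketchProvider.Google),
        ("mistral-", SketchProvider.Mistral),
        ("deepseek-", SketchProvider.DeepSeek),
        ("grok-", SketchProvider.XAi),
    };

    // Returns the provider for a model name, or null when no prefix matches.
    public static SketchProvider? Resolve(string model)
    {
        foreach ((string prefix, SketchProvider provider) in Prefixes)
        {
            if (model.StartsWith(prefix, StringComparison.OrdinalIgnoreCase))
            {
                return provider;
            }
        }
        return null;
    }

    // Picks the API key registered for the resolved provider, if any.
    public static string? KeyFor(string model, IReadOnlyDictionary<SketchProvider, string> keys)
    {
        SketchProvider? provider = Resolve(model);
        return provider is { } p && keys.TryGetValue(p, out string? key) ? key : null;
    }
}
```

A prefix table is the simplest scheme that supports "know only the model's name". A catalog of exact model identifiers would avoid ambiguous prefixes at the cost of more maintenance.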

Tornado has a powerful concept of VendorExtensions which can be applied to various endpoints and are strongly typed. Many Providers offer unique/niche APIs, often enabling use cases otherwise unavailable. For example, let's set a reasoning budget for Anthropic's Claude 3.7:

```csharp
public static async Task AnthropicSonnet37Thinking()
{
    // Program.Connect: helper from the repository's demo project that returns a configured TornadoApi
    Conversation chat = Program.Connect(LLmProviders.Anthropic).Chat.CreateConversation(new ChatRequest
    {
        Model = ChatModel.Anthropic.Claude37.Sonnet,
        VendorExtensions = new ChatRequestVendorExtensions(new ChatRequestVendorAnthropicExtensions
        {
            Thinking = new AnthropicThinkingSettings
            {
                BudgetTokens = 2_000,
                Enabled = true
            }
        })
    });

    chat.AppendUserInput("Explain how to solve differential equations.");

    ChatRichResponse blocks = await chat.GetResponseRich();

    if (blocks.Blocks is not null)
    {
        // print the reasoning blocks first, dimmed
        foreach (ChatRichResponseBlock reasoning in blocks.Blocks.Where(x => x.Type is ChatRichResponseBlockTypes.Reasoning))
        {
            Console.ForegroundColor = ConsoleColor.DarkGray;
            Console.WriteLine(reasoning.Reasoning?.Content);
            Console.ResetColor();
        }

        // then print the final answer
        foreach (ChatRichResponseBlock message in blocks.Blocks.Where(x => x.Type is ChatRichResponseBlockTypes.Message))
        {
            Console.WriteLine(message.Message);
        }
    }
}
```

Instead of consuming commercial APIs, you can easily run your own inference server using a plethora of available tools. Here is a simple demo of a streaming response with Ollama, but the same approach can be used for any custom provider:

```csharp
public static async Task OllamaStreaming()
{
    TornadoApi api = new TornadoApi(new Uri("http://localhost:11434")); // default Ollama port, API key can be passed in the second argument if needed

    await api.Chat.CreateConversation(new ChatModel("falcon3:1b")) // <-- replace with your model
        .AppendUserInput("Why is the sky blue?")
        .StreamResponse(Console.Write);
}
```

If you need more control over requests, for example custom headers, you can create an instance of a built-in Provider. This is useful for custom deployments like Amazon Bedrock, Vertex AI, etc.

```csharp
TornadoApi tornadoApi = new TornadoApi(new AnthropicEndpointProvider
{
    Auth = new ProviderAuthentication("ANTHROPIC_API_KEY"),
    // {0} = endpoint, {1} = action, {2} = model's name
    UrlResolver = (endpoint, url, ctx) => "https://api.anthropic.com/v1/{0}{1}",
    RequestResolver = (request, data, streaming) =>
    {
        // by default, providing a custom request resolver omits beta headers
        // request is HttpRequestMessage, data contains the payload
    },
    RequestSerializer = (data, ctx) =>
    {
        // data is JObject, which can be modified before
        // being serialized into a string.
    }
});
```

https://github.com/user-attachments/assets/de62f0fe-93e0-448c-81d0-8ab7447ad780
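The UrlResolver template above uses numbered placeholders ({0} = endpoint, {1} = action, {2} = model's name). The example below shows how such a template expands, assuming the placeholders are filled the same way as .NET composite formatting with `string.Format`. `UrlTemplateSketch` and the gateway host are hypothetical.

```csharp
using System;

public static class UrlTemplateSketch
{
    // Assumption: placeholders follow composite formatting,
    // {0} = endpoint, {1} = action, {2} = model name.
    public static string Expand(string template, string endpoint, string action, string model) =>
        string.Format(template, endpoint, action, model);
}
```

For example, expanding "https://api.anthropic.com/v1/{0}{1}" with endpoint "messages" and an empty action yields "https://api.anthropic.com/v1/messages". A template that needs the model in the path, such as "https://gateway.example.com/models/{2}/{0}", places it wherever {2} appears. Under composite formatting, any literal braces in a template would have to be doubled ({{ and }}).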

Tornado offers three levels of abstraction, each exposing more detail at the cost of more complexity. Simple use cases where only plaintext is needed can be expressed in a terse format:

```csharp
await api.Chat.CreateConversation(ChatModel.Anthropic.Claude3.Sonnet)
    .AppendSystemMessage("You are a fortune teller.")
    .AppendUserInput("What will my future bring?")
    .StreamResponse(Console.Write);
```

The levels of abstraction are:

- Response (string for chat, float[] for embeddings, etc.)

- ResponseRich (tools, modalities, metadata such as usage)

- ResponseRichSafe (same as ResponseRich, but guaranteed not to throw on the network level, for example, if the provider returns an internal error or doesn't respond at all)

When plaintext is insufficient, switch to StreamResponseRich or GetResponseRich() APIs. Tools requested by the model can be resolved later and never returned to the model. This is useful in scenarios where we use the tools without intending to continue the conversation:

```csharp
// Ask the model to generate two images, and stream the result:
public static async Task GoogleStreamImages()
{
    Conversation chat = api.Chat.CreateConversation(new ChatRequest
    {
        Model = ChatModel.Google.GeminiExperimental.Gemini2FlashImageGeneration,
        Modalities = [ ChatModelModalities.Text, ChatModelModalities.Image ]
    });

    chat.AppendUserInput([
        new ChatMessagePart("Generate two images: a lion and a squirrel")
    ]);

    await chat.StreamResponseRich(new ChatStreamEventHandler
    {
        MessagePartHandler = async (part) =>
        {
            if (part.Text is not null)
            {
                Console.Write(part.Text);
                return;
            }

            if (part.Image is not null)
            {
                // In our tests this executes Chafa to turn the raw base64 data into Sixels
                await DisplayImage(part.Image.Url);
            }
        },
        BlockFinishedHandler = (block) =>
        {
            Console.WriteLine();
            return ValueTask.CompletedTask;
        },
        OnUsageReceived = (usage) =>
        {
            Console.WriteLine();
            Console.WriteLine(usage);
            return ValueTask.CompletedTask;
        }
    });
}
```

Tools requested by the model can be resolved and the results returned immediately. This has the benefit of automatically continuing the conversation:

```csharp
Conversation chat = api.Chat.CreateConversation(new ChatRequest
{
    Model = ChatModel.OpenAi.Gpt4.O,
    Tools =
    [
        new Tool(new ToolFunction("get_weather", "gets the current weather", new
        {
            type = "object",
            properties = new
            {
                location = new
                {
                    type = "string",
                    description = "The location for which the weather information is required."
                }
            },
            required = new List<string> { "location" }
        }))
    ]
})
.AppendSystemMessage("You are a helpful assistant")
.AppendUserInput("What is the weather like today in Prague?");

ChatStreamEventHandler handler = new ChatStreamEventHandler
{
    MessageTokenHandler = (x) =>
    {
        Console.Write(x);
        return Task.CompletedTask;
    },
    FunctionCallHandler = (calls) =>
    {
        // resolve every requested call with a canned result
        calls.ForEach(x => x.Result = new FunctionResult(x, "A mild rain is expected around noon.", null));
        return Task.CompletedTask;
    },
    // once the results are attached, send them back to the model and keep streaming
    AfterFunctionCallsResolvedHandler = async (results, handler) => { await chat.StreamResponseRich(handler); }
};

await chat.StreamResponseRich(handler);
```

Instead of resolving the tool call, we can postpone or end the conversation. This is useful for extractive tasks, where we care only about the tool call:

```csharp
Conversation chat = api.Chat.CreateConversation(new ChatRequest
{
    Model = ChatModel.OpenAi.Gpt4.Turbo,
    Tools = new List<Tool>
    {
        new Tool
        {
            Function = new ToolFunction("get_weather", "gets the current weather")
        }
    },
    ToolChoice = new OutboundToolChoice(OutboundToolChoiceModes.Required)
});

chat.AppendUserInput("Who are you?"); // user asks something unrelated, but we force the model to use the tool
ChatRichResponse response = await chat.GetResponseRich(); // the response contains one block of type Function
```

A GetResponseRichSafe() API is also available, which is guaranteed not to throw on the network level. Its response is wrapped in a network-level wrapper containing additional information. For production use cases, either wrap all HTTP request-producing Tornado APIs in try {} catch {}, or use the safe APIs.
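The example below is a minimal sketch of the "safe" pattern described above: it runs a request-producing call and turns network-level failures into a value instead of an exception. `SafeResult<T>` and `Safe.Run` are hypothetical names. Tornado's safe APIs use their own wrapper type, so this only illustrates the idea in plain .NET.

```csharp
#nullable enable
using System;
using System.Net.Http;
using System.Threading.Tasks;

// Hypothetical result type; not the wrapper returned by Tornado's *Safe APIs.
public sealed record SafeResult<T>(T? Data, Exception? Error)
{
    public bool Ok => Error is null;
}

public static class Safe
{
    // Runs the call and converts network-level failures into a SafeResult
    // instead of letting the exception escape to the caller.
    public static async Task<SafeResult<T>> Run<T>(Func<Task<T>> call)
    {
        try
        {
            return new SafeResult<T>(await call(), null);
        }
        catch (HttpRequestException ex)
        {
            // e.g. connection refused, DNS failure, or a non-success status surfaced by EnsureSuccessStatusCode
            return new SafeResult<T>(default, ex);
        }
        catch (TaskCanceledException ex)
        {
            // HttpClient reports request timeouts as TaskCanceledException
            return new SafeResult<T>(default, ex);
        }
    }
}
```

Only network-related exceptions are captured here. Programming errors such as an InvalidOperationException still propagate, so they are not silently hidden.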

To use the Model Context Protocol, install the LlmTornado.Mcp adapter. After that, new interop methods will become available on the ModelContextProtocol types. The following example uses the GetForecast tool defined on an example MCP server:

```csharp
[McpServerToolType]
public sealed class WeatherTools
{
    [McpServerTool, Description("Get weather forecast for a location.")]
    public static async Task<string> GetForecast(
        HttpClient client,
        [Description("Latitude of the location.")] double latitude,
        [Description("Longitude of the location.")] double longitude)
    {
        // InvariantCulture keeps '.' as the decimal separator regardless of the server's locale
        var pointUrl = string.Create(CultureInfo.InvariantCulture, $"/points/{latitude},{longitude}");
        // ReadJsonDocumentAsync is an HttpClient extension helper defined in the MCP C# SDK weather sample, not a BCL method
        using var jsonDocument = await client.ReadJsonDocumentAsync(pointUrl);
        var forecastUrl = jsonDocument.RootElement.GetProperty("properties").GetProperty("forecast").GetString()
            ?? throw new Exception($"No forecast URL provided by {client.BaseAddress}points/{latitude},{longitude}");

        using var forecastDocument = await client.ReadJsonDocumentAsync(forecastUrl);
        var periods = forecastDocument.RootElement.GetProperty("properties").GetProperty("periods").EnumerateArray();

        return string.Join("\n---\n", periods.Select(period => $"""
            {period.GetProperty("name").GetString()}
            Temperature: {period.GetProperty("temperature").GetInt32()}°F
            Wind: {period.GetProperty("windSpeed").GetString()} {period.GetProperty("windDirection").GetString()}
            Forecast: {period.GetProperty("detailedForecast").GetString()}
            """));
    }
}
```

The following is done by the client:

```csharp
// your clientTransport, for example StdioClientTransport
await using IMcpClient mcpClient = await McpClientFactory.CreateAsync(clientTransport);

// 1. fetch tools
List<Tool> tools = await mcpClient.ListTornadoToolsAsync();

// 2. create a conversation, pass available tools
TornadoApi api = new TornadoApi(LLmProviders.OpenAi, apiKeys.OpenAi);
Conversation conversation = api.Chat.CreateConversation(new ChatRequest
{
    Model = ChatModel.OpenAi.Gpt41.V41,
    Tools = tools,
    // force any of the available tools to be used (use new OutboundToolChoice("toolName") to specify which if needed)
    ToolChoice = OutboundToolChoice.Required
});

// 3. let the model call the tool and infer arguments
await conversation
    .AddSystemMessage("You are a helpful assistant")
    .AddUserMessage("What is the weather like in Dallas?")
    .GetResponseRich(async calls =>
    {
        foreach (FunctionCall call in calls)
        {
            // retrieve arguments inferred by the model
            double latitude = call.GetOrDefault<double>("latitude");
            double longitude = call.GetOrDefault<double>("longitude");

            // call the tool on the MCP server, pass args
            await call.ResolveRemote(new
            {
                latitude = latitude,
                longitude = longitude
            });

            // extract the tool result and pass it back to the model
            if (call.Result?.RemoteContent is McpContent mcpContent)
            {
                foreach (IMcpContentBlock block in mcpContent.McpContentBlocks)
                {
                    if (block is McpContentBlockText textBlock)
                    {
                        // note: each text block overwrites Content, so only the last one is kept
                        call.Result.Content = textBlock.Text;
                    }
                }
            }
        }
    });

// stop forcing the model to call the tool
conversation.RequestParameters.ToolChoice = null;

// 4. stream final response
await conversation.StreamResponse(Console.Write);
```

A complete example is available here: client, server.
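The server's GetForecast tool builds its URL with string.Create(CultureInfo.InvariantCulture, ...). The example below shows why that matters. It sketches what happens to the same interpolation under a culture that uses a comma as the decimal separator. `PointsUrl` is a hypothetical helper. The comma culture is built by cloning the invariant culture, so the example does not depend on OS locale data.

```csharp
using System.Globalization;

public static class PointsUrl
{
    // Same pattern as GetForecast: the interpolated doubles are formatted with the given culture.
    public static string Build(double latitude, double longitude, CultureInfo culture) =>
        string.Create(culture, $"/points/{latitude},{longitude}");

    // A culture whose decimal separator is ',' (as in many European locales).
    public static CultureInfo CommaDecimalCulture()
    {
        var culture = (CultureInfo)CultureInfo.InvariantCulture.Clone(); // clones are writable
        culture.NumberFormat.NumberDecimalSeparator = ",";
        return culture;
    }
}
```

With the invariant culture, Dallas (32.7767, -96.797) becomes "/points/32.7767,-96.797". Under the comma culture, it becomes "/points/32,7767,-96,797", which the weather API could no longer split into latitude and longitude.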

Tornado includes powerful abstractions in the LlmTornado.Toolkit package, allowing rapid development of applications, while avoiding many design pitfalls. Scalability and tuning-friendly code design are at the core of these abstractions.

ToolkitChat is a primitive for graph-based workflows, where edges move data and nodes execute functions. ToolkitChat supports streaming, rich responses, and chaining tool calls. Tool calls are provided via ChatFunction or ChatPlugin (an envelope with multiple tools). Many overloads accept a primary and a secondary model acting as a backup; this zig-zag strategy overcomes temporary API downtime better than simply retrying the same model. All tool calls are strongly typed and strict by default. For providers where a strict JSON schema is not supported (Anthropic, for example), prefilling the response with { is used as a fallback. A call can be marked as non-strict by changing a single parameter.
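The zig-zag fallback above can be sketched without any Tornado types. The example below is a minimal illustration, assuming a hypothetical `call` delegate that sends the request to a named model. `ZigZag.Run` and its parameters are not part of the Toolkit, whose overloads take ChatModel values directly. Attempts alternate primary, secondary, primary, and so on, so a transient outage of one API is bridged by the other.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

public static class ZigZag
{
    // Alternates between two models until one succeeds or attempts run out.
    public static async Task<string> Run(
        string primary,
        string secondary,
        Func<string, Task<string>> call,
        int maxAttempts = 4)
    {
        List<Exception> errors = new();

        for (int attempt = 0; attempt < maxAttempts; attempt++)
        {
            // even attempts use the primary model, odd attempts the secondary one
            string model = attempt % 2 == 0 ? primary : secondary;
            try
            {
                return await call(model);
            }
            catch (Exception ex)
            {
                errors.Add(ex); // remember the failure and switch to the other model
            }
        }

        throw new AggregateException("All attempts failed.", errors);
    }
}
```

Catching every exception keeps the sketch short. A production version would likely retry only transient failures (timeouts, rate limits, 5xx responses) and might add a delay between attempts.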

```csharp
class DemoAggregatedItem
{
    public string Name { get; set; }
    public string KnownName { get; set; }
    public int Quantity { get; set; }
}

string sysPrompt = "aggregate items by type";
string userPrompt = "three apples, one cherry, two apples, one orange, one orange";

await ToolkitChat.GetSingleResponse(Program.Connect(), ChatModel.Google.Gemini.Gemini25Flash, ChatModel.OpenAi.Gpt41.V41Mini, sysPrompt, new ChatFunction([
    new ToolParam("items", new ToolParamList("aggregated items", [
        new ToolParam("name", "name of the item", ToolParamAtomicTypes.String),
        new ToolParam("quantity", "aggregated quantity", ToolParamAtomicTypes.Int),
        new ToolParam("known_name", new ToolParamEnum("known name of the item", [ "apple", "cherry", "orange", "other" ]))
    ]))
], async (args, ctx) =>
{
    if (!args.ParamTryGet("items", out List<DemoAggregatedItem>? items) || items is null)
    {
        return new ChatFunctionCallResult(ChatFunctionCallResultParameterErrors.MissingRequiredParameter, "items");
    }

    Console.WriteLine("Aggregated items:");

    foreach (DemoAggregatedItem item in items)
    {
        Console.WriteLine($"{item.Name}: {item.Quantity}");
    }

    return new ChatFunctionCallResult();
}), userPrompt); // temp defaults to 0, output length to 8k

/*
Aggregated items:
apple: 5
cherry: 1
orange: 2
*/
```

Why Tornado?

- 50,000+ installs on NuGet (previous names: Lofcz.Forks.OpenAI, OpenAiNg; currently LlmTornado).

- Used in award-winning commercial projects, processing more than 100B tokens monthly.

- Covered by 250+ tests.

- Great performance.

- The license will never change.

Projects built with Tornado:

- ScioBot - AI For Educators, 100k+ users

- LombdaAgentSDK - A lightweight C# SDK designed to create and run modular agents

- NotT3Chat - The C# Answer to the T3 Stack

- ClaudeCodeProxy - Provider multiplexing proxy

- Semantic Search - AI semantic search where a query is matched by context and meaning

- Monster Collector - A database of AI-generated monsters

Have you built something with Tornado? Let us know about it in the issues to get a spotlight!

PRs are welcome! We are accepting new Provider implementations, contributions towards a 100% green Feature Matrix, and, after public discussion, new abstractions.

This library is licensed under the MIT license. 💜


Alternative AI tools for LlmTornado

Similar Open Source Tools


whetstone.chatgpt

Whetstone.ChatGPT is a simple light-weight library that wraps the Open AI API with support for dependency injection. It supports features like GPT 4, GPT 3.5 Turbo, chat completions, audio transcription and translation, vision completions, files, fine tunes, images, embeddings, moderations, and response streaming. The library provides a video walkthrough of a Blazor web app built on it and includes examples such as a command line bot. It offers quickstarts for dependency injection, chat completions, completions, file handling, fine tuning, image generation, and audio transcription.

azure-functions-openai-extension

Azure Functions OpenAI Extension is a project that adds support for OpenAI LLM (GPT-3.5-turbo, GPT-4) bindings in Azure Functions. It provides NuGet packages for various functionalities like text completions, chat completions, assistants, embeddings generators, and semantic search. The project requires .NET 6 SDK or greater, Azure Functions Core Tools v4.x, and specific settings in Azure Function or local settings for development. It offers features like text completions, chat completion, assistants with custom skills, embeddings generators for text relatedness, and semantic search using vector databases. The project also includes examples in C# and Python for different functionalities.

UniChat

UniChat is a pipeline tool for creating online and offline chat-bots in Unity. It leverages Unity.Sentis and text vector embedding technology to enable offline mode text content search based on vector databases. The tool includes a chain toolkit for embedding LLM and Agent in games, along with middleware components for Text to Speech, Speech to Text, and Sub-classifier functionalities. UniChat also offers a tool for invoking tools based on ReActAgent workflow, allowing users to create personalized chat scenarios and character cards. The tool provides a comprehensive solution for designing flexible conversations in games while maintaining developer's ideas.

Ollama

Ollama SDK for .NET is a fully generated C# SDK based on OpenAPI specification using OpenApiGenerator. It supports automatic releases of new preview versions, source generator for defining tools natively through C# interfaces, and all modern .NET features. The SDK provides support for all Ollama API endpoints including chats, embeddings, listing models, pulling and creating new models, and more. It also offers tools for interacting with weather data and providing weather-related information to users.

instructor-go

Instructor Go is a library that simplifies working with structured outputs from large language models (LLMs). Built on top of `invopop/jsonschema` and utilizing `jsonschema` Go struct tags, it provides a user-friendly API for managing validation, retries, and streaming responses without changing code logic. The library supports LLM provider APIs such as OpenAI, Anthropic, Cohere, and Google, capturing and returning usage data in responses. Users can easily add metadata to struct fields using `jsonschema` tags to enhance model awareness and streamline workflows.

mcpdotnet

mcpdotnet is a .NET implementation of the Model Context Protocol (MCP), facilitating connections and interactions between .NET applications and MCP clients and servers. It aims to provide a clean, specification-compliant implementation with support for various MCP capabilities and transport types. The library includes features such as async/await pattern, logging support, and compatibility with .NET 8.0 and later. Users can create clients to use tools from configured servers and also create servers to register tools and interact with clients. The project roadmap includes expanding documentation, increasing test coverage, adding samples, performance optimization, SSE server support, and authentication.

simple-openai

Simple-OpenAI is a Java library that provides a simple way to interact with the OpenAI API. It offers consistent interfaces for various OpenAI services like Audio, Chat Completion, Image Generation, and more. The library uses CleverClient for HTTP communication, Jackson for JSON parsing, and Lombok to reduce boilerplate code. It supports asynchronous requests and provides methods for synchronous calls as well. Users can easily create objects to communicate with the OpenAI API and perform tasks like text-to-speech, transcription, image generation, and chat completions.

LightRAG

LightRAG is a PyTorch library designed for building and optimizing Retriever-Agent-Generator (RAG) pipelines. It follows principles of simplicity, quality, and optimization, offering developers maximum customizability with minimal abstraction. The library includes components for model interaction, output parsing, and structured data generation. LightRAG facilitates tasks like providing explanations and examples for concepts through a question-answering pipeline.

go-utcp

The Universal Tool Calling Protocol (UTCP) is a modern, flexible, and scalable standard for defining and interacting with tools across various communication protocols. It emphasizes scalability, interoperability, and ease of use. It provides built-in transports for HTTP, CLI, Server-Sent Events, streaming HTTP, GraphQL, MCP, and UDP. Users can use the library to construct a client and call tools using the available transports. The library also includes utilities for variable substitution, in-memory repository for storing providers and tools, and OpenAPI conversion to UTCP manuals.

Jlama

Jlama is a modern Java inference engine designed for large language models. It supports various model types such as Gemma, Llama, Mistral, GPT-2, BERT, and more. The tool implements features like Flash Attention, Mixture of Experts, and supports different model quantization formats. Built with Java 21 and utilizing the new Vector API for faster inference, Jlama allows users to add LLM inference directly to their Java applications. The tool includes a CLI for running models, a simple UI for chatting with LLMs, and examples for different model types.

gollm

gollm is a Go package designed to simplify interactions with Large Language Models (LLMs) for AI engineers and developers. It offers a unified API for multiple LLM providers, easy provider and model switching, flexible configuration options, advanced prompt engineering, prompt optimization, memory retention, structured output and validation, provider comparison tools, high-level AI functions, robust error handling and retries, and extensible architecture. The package enables users to create AI-powered golems for tasks like content creation workflows, complex reasoning tasks, structured data generation, model performance analysis, prompt optimization, and creating a mixture of agents.

swarmgo

SwarmGo is a Go package designed to create AI agents capable of interacting, coordinating, and executing tasks. It focuses on lightweight agent coordination and execution, offering powerful primitives like Agents and handoffs. SwarmGo enables building scalable solutions with rich dynamics between tools and networks of agents, all while keeping the learning curve low. It supports features like memory management, streaming support, concurrent agent execution, LLM interface, and structured workflows for organizing and coordinating multiple agents.

instructor-js

Instructor is a Typescript library for structured extraction in Typescript, powered by llms, designed for simplicity, transparency, and control. It stands out for its simplicity, transparency, and user-centric design. Whether you're a seasoned developer or just starting out, you'll find Instructor's approach intuitive and steerable.

ChatRex

ChatRex is a Multimodal Large Language Model (MLLM) designed to seamlessly integrate fine-grained object perception and robust language understanding. By adopting a decoupled architecture with a retrieval-based approach for object detection and leveraging high-resolution visual inputs, ChatRex addresses key challenges in perception tasks. It is powered by the Rexverse-2M dataset with diverse image-region-text annotations. ChatRex can be applied to various scenarios requiring fine-grained perception, such as object detection, grounded conversation, grounded image captioning, and region understanding.

dive

Dive is an AI toolkit for Go that enables the creation of specialized teams of AI agents and seamless integration with leading LLMs. It offers a CLI and APIs for easy integration, with features like creating specialized agents, hierarchical agent systems, declarative configuration, multiple LLM support, extended reasoning, model context protocol, advanced model settings, tools for agent capabilities, tool annotations, streaming, CLI functionalities, thread management, confirmation system, deep research, and semantic diff. Dive also provides semantic diff analysis, unified interface for LLM providers, tool system with annotations, custom tool creation, and support for various verified models. The toolkit is designed for developers to build AI-powered applications with rich agent capabilities and tool integrations.

For similar tasks

anything-llm

AnythingLLM is a full-stack application that enables you to turn any document, resource, or piece of content into context that any LLM can use as references during chatting. This application allows you to pick and choose which LLM or Vector Database you want to use as well as supporting multi-user management and permissions.

DistiLlama

DistiLlama is a Chrome extension that leverages a locally running Large Language Model (LLM) to perform various tasks, including text summarization, chat, and document analysis. It utilizes Ollama as the locally running LLM instance and LangChain for text summarization. DistiLlama provides a user-friendly interface for interacting with the LLM, allowing users to summarize web pages, chat with documents (including PDFs), and engage in text-based conversations. The extension is easy to install and use, requiring only the installation of Ollama and a few simple steps to set up the environment. DistiLlama offers a range of customization options, including the choice of LLM model and the ability to configure the summarization chain. It also supports multimodal capabilities, allowing users to interact with the LLM through text, voice, and images. DistiLlama is a valuable tool for researchers, students, and professionals who seek to leverage the power of LLMs for various tasks without compromising data privacy.

SecureAI-Tools

SecureAI Tools is a private and secure AI tool that allows users to chat with AI models, chat with documents (PDFs), and run AI models locally. It comes with built-in authentication and user management, making it suitable for family members or coworkers. The tool is self-hosting optimized and provides necessary scripts and docker-compose files for easy setup in under 5 minutes. Users can customize the tool by editing the .env file and enabling GPU support for faster inference. SecureAI Tools also supports remote OpenAI-compatible APIs, with lower hardware requirements for using remote APIs only. The tool's features wishlist includes chat sharing, mobile-friendly UI, and support for more file types and markdown rendering.

serverless-pdf-chat

The serverless-pdf-chat repository contains a sample application that allows users to ask natural language questions of any PDF document they upload. It leverages serverless services like Amazon Bedrock, AWS Lambda, and Amazon DynamoDB to provide text generation and analysis capabilities. The application architecture involves uploading a PDF document to an S3 bucket, extracting metadata, converting text to vectors, and using a LangChain to search for information related to user prompts. The application is not intended for production use and serves as a demonstration and educational tool.

chat-your-doc

Chat Your Doc is an experimental project exploring various applications based on LLM technology. It goes beyond being just a chatbot project, focusing on researching LLM applications using tools like LangChain and LlamaIndex. The project delves into UX, computer vision, and offers a range of examples in the 'Lab Apps' section. It includes links to different apps, descriptions, launch commands, and demos, aiming to showcase the versatility and potential of LLM applications.

witsy

Witsy is a generative AI desktop application that supports various models like OpenAI, Ollama, Anthropic, MistralAI, Google, Groq, and Cerebras. It offers features such as chat completion, image generation, scratchpad for content creation, prompt anywhere functionality, AI commands for productivity, expert prompts for specialization, LLM plugins for additional functionalities, read aloud capabilities, chat with local files, transcription/dictation, Anthropic Computer Use support, local history of conversations, code formatting, image copy/download, and more. Users can interact with the application to generate content, boost productivity, and perform various AI-related tasks.

docs-ai

Docs AI is a platform that allows users to train their documents, chat with their documents, and create chatbots to solve queries. It is built using NextJS, Tailwind, tRPC, ShadcnUI, Prisma, Postgres, NextAuth, Pinecone, and Cloudflare R2. The platform requires Node.js (Version: >=18.x), PostgreSQL, and Redis for setup. Users can utilize Docker for development by using the provided `docker-compose.yml` file in the `/app` directory.


For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.