human

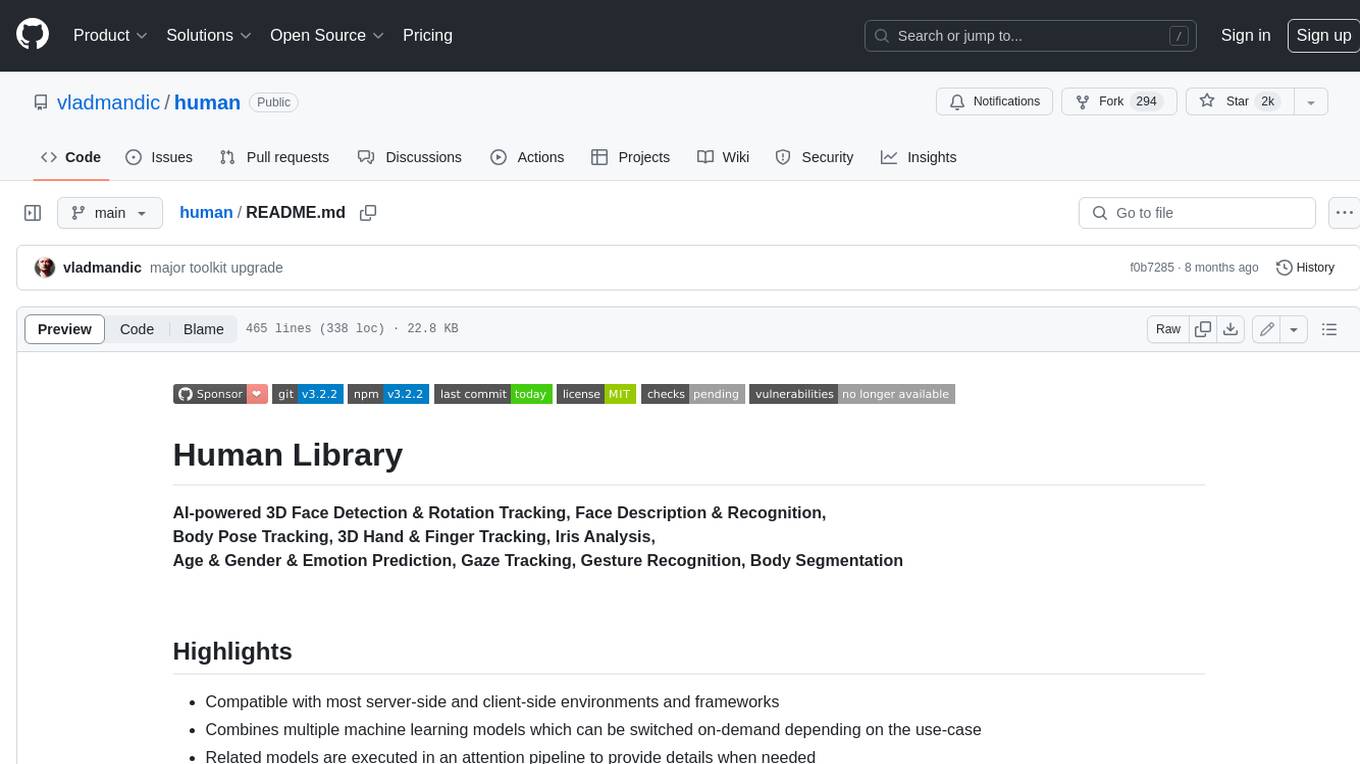

Human: AI-powered 3D Face Detection & Rotation Tracking, Face Description & Recognition, Body Pose Tracking, 3D Hand & Finger Tracking, Iris Analysis, Age & Gender & Emotion Prediction, Gaze Tracking, Gesture Recognition

Stars: 2005

README:

AI-powered 3D Face Detection & Rotation Tracking, Face Description & Recognition,

Body Pose Tracking, 3D Hand & Finger Tracking, Iris Analysis,

Age & Gender & Emotion Prediction, Gaze Tracking, Gesture Recognition, Body Segmentation

- Compatible with most server-side and client-side environments and frameworks

- Combines multiple machine learning models which can be switched on-demand depending on the use-case

- Related models are executed in an attention pipeline to provide details when needed

- Optimized input pre-processing that can enhance image quality of any type of inputs

- Detection of frame changes to trigger only required models for improved performance

- Intelligent temporal interpolation to provide smooth results regardless of processing performance

- Simple unified API

- Built-in Image, Video and WebCam handling

- Browser:

  - Compatible with both desktop and mobile platforms

  - Compatible with CPU, WebGL, WASM backends

  - Compatible with WebWorker execution

  - Compatible with WebView

- NodeJS:

  - Compatible with WASM backend for execution on architectures where tensorflow binaries are not available

  - Compatible with tfjs-node using software execution via tensorflow shared libraries

  - Compatible with tfjs-node using GPU-accelerated execution via tensorflow shared libraries and nVidia CUDA

Check out Simple Live Demo, a fully annotated app, as a good starting point (html)(code)

Check out Main Live Demo app for advanced processing of webcam, video stream or static images with all possible tunable options

- To start video detection, simply press Play

- To process images, simply drag & drop in your Browser window

- Note: For optimal performance, select only models you'd like to use

- Note: If you have a modern GPU, the WebGL (default) backend is preferred; otherwise select the WASM backend
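The two notes above map naturally onto a configuration object. The following is a minimal sketch, assuming the `backend` key shown in the usage examples and per-module `enabled` flags as described in Configuration Details; `buildConfig`, `hasModernGpu` and `modules` are hypothetical names used only for illustration and are not part of Human.

```javascript
// Hypothetical helper: builds a configuration that enables only the requested
// modules and picks a backend based on GPU availability.
// The key names (backend, face, body, hand, object, gesture, enabled) are
// assumed to match Human's configuration; verify against Configuration Details.
function buildConfig({ hasModernGpu, modules }) {
  const all = ['face', 'body', 'hand', 'object', 'gesture'];
  const config = { backend: hasModernGpu ? 'webgl' : 'wasm' };
  for (const name of all) {
    config[name] = { enabled: modules.includes(name) };
  }
  return config;
}

// Example: no modern GPU, only face and hand processing wanted
const config = buildConfig({ hasModernGpu: false, modules: ['face', 'hand'] });
console.log(JSON.stringify(config));
```

The resulting object could then be passed to the `Human` constructor in the same way as the `config` objects in the usage examples.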

All browser demos are self-contained without any external dependencies

- Full [Live] [Details]: Main browser demo app that showcases all Human capabilities

- Simple [Live] [Details]: Simple WebCam processing demo in TypeScript

- Embedded [Live] [Details]: Even simpler demo with tiny code embedded in HTML file

- Face Detect [Live] [Details]: Extracts faces from images and processes details

- Face Match [Live] [Details]: Extracts faces from images, calculates face descriptors and similarities and matches them to a known database

- Face ID [Live] [Details]: Runs multiple checks to validate webcam input before performing face match to faces in IndexedDB

- Multi-thread [Live] [Details]: Runs each Human module in a separate web worker for highest possible performance

- NextJS [Live] [Details]: Use Human with TypeScript, NextJS and ReactJS

- ElectronJS [Details]: Use Human with TypeScript and ElectronJS to create standalone cross-platform apps

- 3D Analysis with BabylonJS [Live] [Details]: 3D tracking and visualization of head, face, eye, body and hand

- VRM Virtual Model Tracking with Three.JS [Live] [Details]: VR model with head, face, eye, body and hand tracking

- VRM Virtual Model Tracking with BabylonJS [Live] [Details]: VR model with head, face, eye, body and hand tracking

NodeJS demos may require extra dependencies which are used to decode inputs

See header of each demo to see its dependencies as they are not automatically installed with Human

- Main [Details]: Process images from files, folders or URLs using native methods

- Canvas [Details]: Process image from file or URL and draw results to a new image file using node-canvas

- Video [Details]: Processing of video input using ffmpeg

- WebCam [Details]: Processing of webcam screenshots using fswebcam

- Events [Details]: Showcases usage of Human eventing to get notifications on processing

- Similarity [Details]: Compares two input images for similarity of detected faces

- Face Match [Details]: Parallel processing of face match in multiple child worker threads

- Multiple Workers [Details]: Runs multiple parallel human instances by dispatching them to a pool of pre-created worker processes

- Dynamic Load [Details]: Loads Human dynamically with multiple different desired backends

- Code Repository

- NPM Package

- Issues Tracker

- TypeDoc API Specification - Main class

- TypeDoc API Specification - Full

- Change Log

- Current To-do List

- Home

- Installation

- Usage & Functions

- Configuration Details

- Result Details

- Customizing Draw Methods

- Caching & Smoothing

- Input Processing

- Face Recognition & Face Description

- Gesture Recognition

- Common Issues

- Background and Benchmarks

- Comparing Backends

- Development Server

- Build Process

- Adding Custom Modules

- Performance Notes

- Performance Profiling

- Platform Support

- Diagnostic and Performance trace information

- Dockerize Human applications

- List of Models & Credits

- Models Download Repository

- Security & Privacy Policy

- License & Usage Restrictions

See issues and discussions for list of known limitations and planned enhancements

Suggestions are welcome!

Visit Examples gallery for more examples

All options as presented in the demo application...

demo/index.html

Results Browser:

[ Demo -> Display -> Show Results ]

- Face Similarity Matching:

  Extracts all faces from provided input images, sorts them by similarity to the selected face and optionally matches the detected face with a database of known people to guess their names

- Face Detect:

  Extracts all detected faces from loaded images on-demand and highlights face details on a selected face

- Face ID:

  Performs validation check on a webcam input to detect a real face and matches it to known faces stored in database
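The matching step used by the Face Similarity Matching and Face ID demos can be pictured as a nearest-neighbour search over face descriptors. The sketch below is an illustrative stand-in and not Human's own matching code: it assumes descriptors are plain numeric arrays of equal length, and `similarity`, `findBestMatch`, the threshold and the sample database are all hypothetical.

```javascript
// Compares two descriptors and returns a score in (0, 1], where 1 means identical.
// Uses Euclidean distance mapped to a similarity score (an assumption for this sketch).
function similarity(a, b) {
  if (a.length !== b.length) throw new Error('descriptor length mismatch');
  let sum = 0;
  for (let i = 0; i < a.length; i++) sum += (a[i] - b[i]) ** 2;
  return 1 / (1 + Math.sqrt(sum));
}

// Finds the best match in a database of { name, descriptor } records.
// Returns null when no record reaches the threshold.
function findBestMatch(descriptor, database, threshold = 0.5) {
  let best = null;
  for (const entry of database) {
    const score = similarity(descriptor, entry.descriptor);
    if (score >= threshold && (!best || score > best.score)) {
      best = { name: entry.name, score };
    }
  }
  return best;
}

// Made-up three-value descriptors; real face descriptors are much longer
const db = [
  { name: 'alice', descriptor: [0.1, 0.2, 0.3] },
  { name: 'bob', descriptor: [0.9, 0.8, 0.7] },
];
const match = findBestMatch([0.1, 0.2, 0.35], db);
console.log(match ? match.name : 'unknown'); // alice
```

Sorting a set of faces by similarity to a selected face, as the demo does, amounts to computing the same score for every face and ordering by it.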

- 3D Rendering:

- VR Model Tracking:

- Human as OS native application:

468-Point Face Mesh Details:

(view in full resolution to see keypoints)

Simply load Human (IIFE version) directly from a cloud CDN in your HTML file:

(pick one: jsdelivr, unpkg or cdnjs)

<!DOCTYPE HTML>

<script src="https://cdn.jsdelivr.net/npm/@vladmandic/human/dist/human.js"></script>

<script src="https://unpkg.dev/@vladmandic/human/dist/human.js"></script>

<script src="https://cdnjs.cloudflare.com/ajax/libs/human/3.0.0/human.js"></script>

For details, including how to use Browser ESM version or NodeJS version of Human, see Installation

Simple app that uses Human to process video input and

draw output on screen using internal draw helper functions

// create instance of human with simple configuration using default values

const config = { backend: 'webgl' };

const human = new Human.Human(config);

// select input HTMLVideoElement and output HTMLCanvasElement from page

const inputVideo = document.getElementById('video-id');

const outputCanvas = document.getElementById('canvas-id');

function detectVideo() {

// perform processing using default configuration

human.detect(inputVideo).then((result) => {

// result object will contain detected details

// as well as the processed canvas itself

// so lets first draw processed frame on canvas

human.draw.canvas(result.canvas, outputCanvas);

// then draw results on the same canvas

human.draw.face(outputCanvas, result.face);

human.draw.body(outputCanvas, result.body);

human.draw.hand(outputCanvas, result.hand);

human.draw.gesture(outputCanvas, result.gesture);

// and loop immediately to the next frame

requestAnimationFrame(detectVideo);

return result;

});

}

detectVideo();

or using async/await:

// create instance of human with simple configuration using default values

const config = { backend: 'webgl' };

const human = new Human(config); // create instance of Human

const inputVideo = document.getElementById('video-id');

const outputCanvas = document.getElementById('canvas-id');

async function detectVideo() {

const result = await human.detect(inputVideo); // run detection

human.draw.all(outputCanvas, result); // draw all results

requestAnimationFrame(detectVideo); // run loop

}

detectVideo(); // start loop

or using Events:

// create instance of human with simple configuration using default values

const config = { backend: 'webgl' };

const human = new Human(config); // create instance of Human

const inputVideo = document.getElementById('video-id');

const outputCanvas = document.getElementById('canvas-id');

human.events.addEventListener('detect', () => { // event gets triggered when detect is complete

human.draw.all(outputCanvas, human.result); // draw all results

});

function detectVideo() {

human.detect(inputVideo) // run detection

.then(() => requestAnimationFrame(detectVideo)); // upon detect complete start processing of the next frame

}

detectVideo(); // start loop

or using interpolated results for smooth video processing by separating detection and drawing loops:

const human = new Human(); // create instance of Human

const inputVideo = document.getElementById('video-id');

const outputCanvas = document.getElementById('canvas-id');

let result;

async function detectVideo() {

result = await human.detect(inputVideo); // run detection

requestAnimationFrame(detectVideo); // run detect loop

}

async function drawVideo() {

if (result) { // check if result is available

const interpolated = human.next(result); // get smoothened result using last-known results

human.draw.all(outputCanvas, interpolated); // draw the frame

}

requestAnimationFrame(drawVideo); // run draw loop

}

detectVideo(); // start detection loop

drawVideo(); // start draw loop

or same, but using built-in full video processing instead of running manual frame-by-frame loop:

const human = new Human(); // create instance of Human

const inputVideo = document.getElementById('video-id');

const outputCanvas = document.getElementById('canvas-id');

async function drawResults() {

const interpolated = human.next(); // get smoothened result using last-known results

human.draw.all(outputCanvas, interpolated); // draw the frame

requestAnimationFrame(drawResults); // run draw loop

}

human.video(inputVideo); // start detection loop which continuously updates results

drawResults(); // start draw loop

or using built-in webcam helper methods that take care of video handling completely:

const human = new Human(); // create instance of Human

const outputCanvas = document.getElementById('canvas-id');

async function drawResults() {

const interpolated = human.next(); // get smoothened result using last-known results

human.draw.canvas(human.webcam.element, outputCanvas); // draw current webcam frame (input first, then output, as in the first example)

human.draw.all(outputCanvas, interpolated); // draw the frame detection results

requestAnimationFrame(drawResults); // run draw loop

}

await human.webcam.start({ crop: true });

human.video(human.webcam.element); // start detection loop which continuously updates results

drawResults(); // start draw loop

And for even better results, you can run detection in a separate web worker thread
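To see why separating the detection and draw loops gives smoother output, it helps to picture what an interpolation step does between two detections. The sketch below is illustrative only and makes no claim about how `human.next()` is implemented: `interpolateBox`, the `factor` parameter and the box values are hypothetical.

```javascript
// Moves the previously drawn box a fraction of the way toward the latest
// detected box, so the drawn box changes gradually between detections.
// Boxes are [x, y, width, height] arrays (an assumption for this sketch).
function interpolateBox(previous, latest, factor = 0.5) {
  if (!previous) return latest.slice(); // nothing to smooth against yet
  return latest.map((value, i) => previous[i] + (value - previous[i]) * factor);
}

// Two consecutive detections where the face moved 20 pixels to the right
let drawn = null;
const detected = [[100, 100, 50, 50], [120, 100, 50, 50]];
for (const box of detected) {
  drawn = interpolateBox(drawn, box);
}
console.log(drawn); // [ 110, 100, 50, 50 ]
```

Because the draw loop runs on every animation frame while detection runs only as fast as the models allow, a step like this lets the drawn result keep moving toward the last-known detection instead of jumping.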

Human library can process all known input types:

- Image, ImageData, ImageBitmap, Canvas, OffscreenCanvas, Tensor

- HTMLImageElement, HTMLCanvasElement, HTMLVideoElement, HTMLMediaElement

Additionally, HTMLVideoElement and HTMLMediaElement can be a standard <video> tag that links to:

- WebCam on user's system

- Any supported video type, e.g. .mp4, .avi, etc.

- Additional video types supported via HTML5 Media Source Extensions, e.g. HLS (HTTP Live Streaming) using hls.js or DASH (Dynamic Adaptive Streaming over HTTP) using dash.js

- WebRTC media track using built-in support

Human is written using TypeScript strong typing and ships with full TypeDefs for all classes defined by the library bundled in types/human.d.ts and enabled by default

Note: This does not include embedded tfjs

If you want to use embedded tfjs inside Human (human.tf namespace) and still get full typedefs, add this code:

import type * as tfjs from '@vladmandic/human/dist/tfjs.esm';

const tf = human.tf as typeof tfjs;

This is not enabled by default as Human does not ship with full TFJS TypeDefs due to size considerations

Enabling tfjs TypeDefs as above creates additional project (dev-only as only types are required) dependencies as defined in @vladmandic/human/dist/tfjs.esm.d.ts:

@tensorflow/tfjs-core, @tensorflow/tfjs-converter, @tensorflow/tfjs-backend-wasm, @tensorflow/tfjs-backend-webgl

Default models in Human library are:

- Face Detection: MediaPipe BlazeFace Back variation

- Face Mesh: MediaPipe FaceMesh

- Face Iris Analysis: MediaPipe Iris

- Face Description: HSE FaceRes

- Emotion Detection: Oarriaga Emotion

- Body Analysis: MoveNet Lightning variation

- Hand Analysis: HandTrack & MediaPipe HandLandmarks

- Body Segmentation: Google Selfie

- Object Detection: CenterNet with MobileNet v3

Note that alternative models are provided and can be enabled via configuration

For example, body pose detection by default uses MoveNet Lightning, but can be switched to MoveNet Thunder for higher precision or MoveNet MultiPose for multi-person detection or even PoseNet, BlazePose or EfficientPose depending on the use case

For more info, see Configuration Details and List of Models
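As a rough illustration of switching the body model via configuration, the sketch below returns a configuration fragment for a chosen MoveNet variant. The `body.modelPath` key and the model file names are assumptions, not confirmed by this document; `BODY_MODELS` and `bodyModelConfig` are hypothetical names. Check Configuration Details and List of Models for the actual values.

```javascript
// Assumed model file names for the MoveNet variants mentioned above
const BODY_MODELS = {
  lightning: 'movenet-lightning.json',
  thunder: 'movenet-thunder.json',
  multipose: 'movenet-multipose.json',
};

// Hypothetical helper: returns a configuration fragment that enables body
// detection with the chosen variant; throws on an unknown variant name.
function bodyModelConfig(variant = 'lightning') {
  const modelPath = BODY_MODELS[variant];
  if (!modelPath) throw new Error(`unknown body model variant: ${variant}`);
  return { body: { enabled: true, modelPath } };
}

// Example: prefer precision over speed
console.log(JSON.stringify(bodyModelConfig('thunder')));
```

A fragment like this could be merged with other options, such as `backend`, before creating the `Human` instance.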

Human library is written in TypeScript 5.1 using TensorFlow/JS 4.10 and conforming to latest JavaScript ECMAScript version 2022 standard

Build target for distributables is JavaScript ECMAScript version 2018

For details see Wiki Pages

and API Specification

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for human

Similar Open Source Tools

human

AI-powered 3D Face Detection & Rotation Tracking, Face Description & Recognition, Body Pose Tracking, 3D Hand & Finger Tracking, Iris Analysis, Age & Gender & Emotion Prediction, Gaze Tracking, Gesture Recognition, Body Segmentation

lighteval

LightEval is a lightweight LLM evaluation suite that Hugging Face has been using internally with the recently released LLM data processing library datatrove and LLM training library nanotron. We're releasing it with the community in the spirit of building in the open. Note that it is still very much early so don't expect 100% stability ^^' In case of problems or question, feel free to open an issue!

nanolang

NanoLang is a minimal, LLM-friendly programming language that transpiles to C for native performance. It features mandatory testing, unambiguous syntax, automatic memory management, LLM-powered autonomous optimization, dual notation for operators, static typing, C interop, and native performance. The language supports variables, functions with mandatory tests, control flow, structs, enums, generic types, and provides a clean, modern syntax optimized for both human readability and AI code generation.

asktube

AskTube is an AI-powered YouTube video summarizer and QA assistant that utilizes Retrieval Augmented Generation (RAG) technology. It offers a comprehensive solution with Q&A functionality and aims to provide a user-friendly experience for local machine usage. The project integrates various technologies including Python, JS, Sanic, Peewee, Pytubefix, Sentence Transformers, Sqlite, Chroma, and NuxtJs/DaisyUI. AskTube supports multiple providers for analysis, AI services, and speech-to-text conversion. The tool is designed to extract data from YouTube URLs, store embedding chapter subtitles, and facilitate interactive Q&A sessions with enriched questions. It is not intended for production use but rather for end-users on their local machines.

ort

Ort is an unofficial ONNX Runtime 1.17 wrapper for Rust based on the now inactive onnxruntime-rs. ONNX Runtime accelerates ML inference on both CPU and GPU.

tappas

Hailo TAPPAS is a set of full application examples that implement pipeline elements and pre-trained AI tasks. It demonstrates Hailo's system integration scenarios on predefined systems, aiming to accelerate time to market, simplify integration with Hailo's runtime SW stack, and provide a starting point for customers to fine-tune their applications. The tool supports both Hailo-15 and Hailo-8, offering various example applications optimized for different common hosts. TAPPAS includes pipelines for single network, two network, and multi-stream processing, as well as high-resolution processing via tiling. It also provides example use case pipelines like License Plate Recognition and Multi-Person Multi-Camera Tracking. The tool is regularly updated with new features, bug fixes, and platform support.

leetcode-py

A Python package to generate professional LeetCode practice environments. Features automated problem generation from LeetCode URLs, beautiful data structure visualizations (TreeNode, ListNode, GraphNode), and comprehensive testing with 10+ test cases per problem. Built with professional development practices including CI/CD, type hints, and quality gates. The tool provides a modern Python development environment with production-grade features such as linting, test coverage, logging, and CI/CD pipeline. It also offers enhanced data structure visualization for debugging complex structures, flexible notebook support, and a powerful CLI for generating problems anywhere.

SINQ

SINQ (Sinkhorn-Normalized Quantization) is a novel, fast, and high-quality quantization method designed to make any Large Language Models smaller while keeping their accuracy almost intact. It offers a model-agnostic quantization technique that delivers state-of-the-art performance for Large Language Models without sacrificing accuracy. With SINQ, users can deploy models that would otherwise be too big, drastically reducing memory usage while preserving LLM quality. The tool quantizes models using dual scaling for better quantization and achieves a more even error distribution, leading to stable behavior across layers and consistently higher accuracy even at very low bit-widths.

stable-diffusion.cpp

The stable-diffusion.cpp repository provides an implementation for inferring stable diffusion in pure C/C++. It offers features such as support for different versions of stable diffusion, lightweight and dependency-free implementation, various quantization support, memory-efficient CPU inference, GPU acceleration, and more. Users can download the built executable program or build it manually. The repository also includes instructions for downloading weights, building from scratch, using different acceleration methods, running the tool, converting weights, and utilizing various features like Flash Attention, ESRGAN upscaling, PhotoMaker support, and more. Additionally, it mentions future TODOs and provides information on memory requirements, bindings, UIs, contributors, and references.

ChordMiniApp

ChordMini is an advanced music analysis platform with AI-powered chord recognition, beat detection, and synchronized lyrics. It features a clean and intuitive interface for YouTube search, chord progression visualization, interactive guitar diagrams with accurate fingering patterns, lead sheet with AI assistant for synchronized lyrics transcription, and various add-on features like Roman Numeral Analysis, Key Modulation Signals, Simplified Chord Notation, and Enhanced Chord Correction. The tool requires Node.js, Python 3.9+, and a Firebase account for setup. It offers a hybrid backend architecture for local development and production deployments, with features like beat detection, chord recognition, lyrics processing, rate limiting, and audio processing supporting MP3, WAV, and FLAC formats. ChordMini provides a comprehensive music analysis workflow from user input to visualization, including dual input support, environment-aware processing, intelligent caching, advanced ML pipeline, and rich visualization options.

airflow-client-python

The Apache Airflow Python Client provides a range of REST API endpoints for managing Airflow metadata objects. It supports CRUD operations for resources, with endpoints accepting and returning JSON. Users can create, read, update, and delete resources. The API design follows conventions with consistent naming and field formats. Update mask is available for patch endpoints to specify fields for update. API versioning is not synchronized with Airflow releases, and changes go through a deprecation phase. The tool supports various authentication methods and error responses follow RFC 7807 format.

botserver

General Bots is a self-hosted AI automation platform and LLM conversational platform focused on convention over configuration and code-less approaches. It serves as the core API server handling LLM orchestration, business logic, database operations, and multi-channel communication. The platform offers features like multi-vendor LLM API, MCP + LLM Tools Generation, Semantic Caching, Web Automation Engine, Enterprise Data Connectors, and Git-like Version Control. It enforces a ZERO TOLERANCE POLICY for code quality and security, with strict guidelines for error handling, performance optimization, and code patterns. The project structure includes modules for core functionalities like Rhai BASIC interpreter, security, shared types, tasks, auto task system, file operations, learning system, and LLM assistance.

agentfield

AgentField is an open-source control plane designed for autonomous AI agents, providing infrastructure for agents to make decisions beyond chatbots. It offers features like scaling infrastructure, routing & discovery, async execution, durable state, observability, trust infrastructure with cryptographic identity, verifiable credentials, and policy enforcement. Users can write agents in Python, Go, TypeScript, or interact via REST APIs. The tool enables the creation of AI backends that reason autonomously within defined boundaries, offering predictability and flexibility. AgentField aims to bridge the gap between AI frameworks and production-ready infrastructure for AI agents.

ComfyUI-Ollama-Describer

ComfyUI-Ollama-Describer is an extension for ComfyUI that enables the use of LLM models provided by Ollama, such as Gemma, Llava (multimodal), Llama2, Llama3, or Mistral. It requires the Ollama library for interacting with large-scale language models, supporting GPUs using CUDA and AMD GPUs on Windows, Linux, and Mac. The extension allows users to run Ollama through Docker and utilize NVIDIA GPUs for faster processing. It provides nodes for image description, text description, image captioning, and text transformation, with various customizable parameters for model selection, API communication, response generation, and model memory management.

AutoAgents

AutoAgents is a cutting-edge multi-agent framework built in Rust that enables the creation of intelligent, autonomous agents powered by Large Language Models (LLMs) and Ractor. Designed for performance, safety, and scalability. AutoAgents provides a robust foundation for building complex AI systems that can reason, act, and collaborate. With AutoAgents you can create Cloud Native Agents, Edge Native Agents and Hybrid Models as well. It is so extensible that other ML Models can be used to create complex pipelines using Actor Framework.

For similar tasks

human

AI-powered 3D Face Detection & Rotation Tracking, Face Description & Recognition, Body Pose Tracking, 3D Hand & Finger Tracking, Iris Analysis, Age & Gender & Emotion Prediction, Gaze Tracking, Gesture Recognition, Body Segmentation

Fay

Fay is an open-source digital human framework that offers different versions for various purposes. The '带货完整版' is suitable for online and offline salespersons. The '助理完整版' serves as a human-machine interactive digital assistant that can also control devices upon command. The 'agent版' is designed to be an autonomous agent capable of making decisions and contacting its owner. The framework provides updates and improvements across its different versions, including features like emotion analysis integration, model optimizations, and compatibility enhancements. Users can access detailed documentation for each version through the provided links.

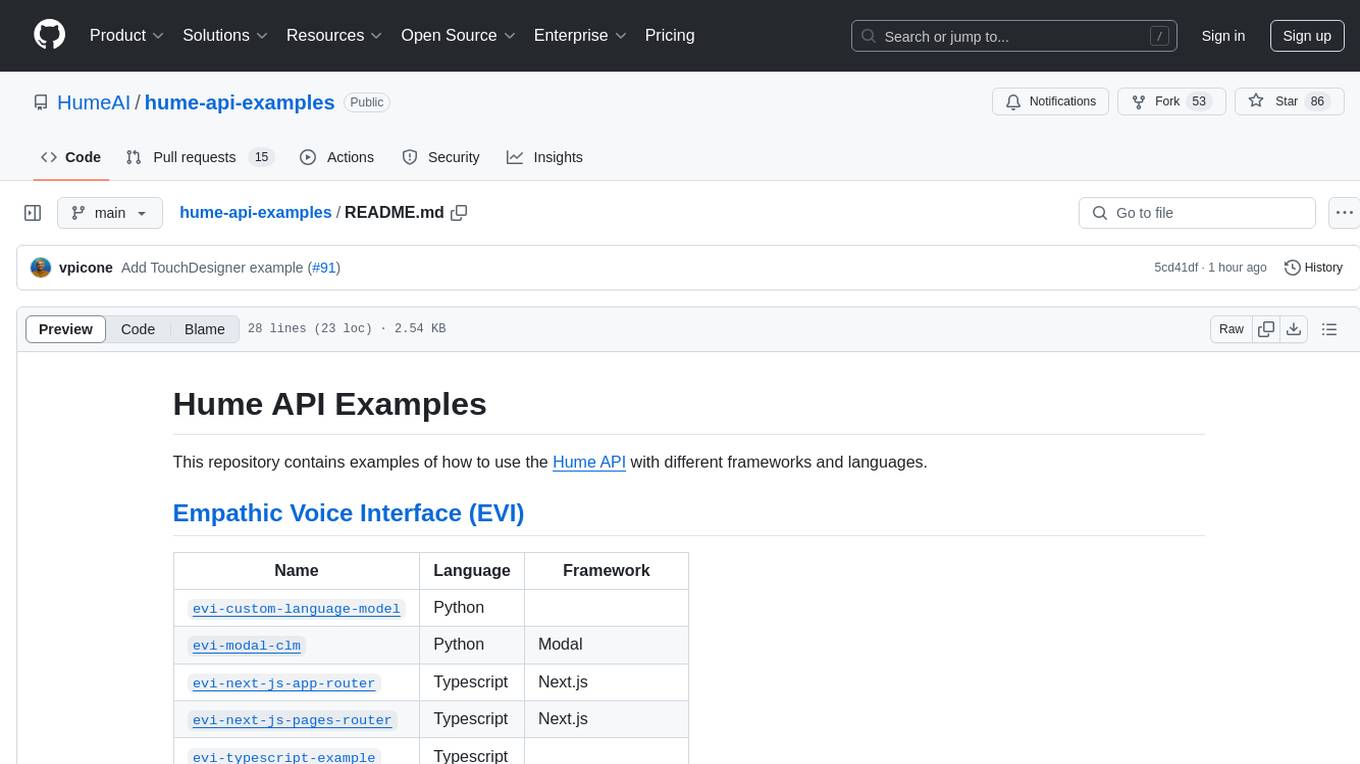

hume-api-examples

This repository contains examples of how to use the Hume API with different frameworks and languages. It includes examples for Empathic Voice Interface (EVI) and Expression Measurement API. The EVI examples cover custom language models, modal, Next.js integration, Vue integration, Hume Python SDK, and React integration. The Expression Measurement API examples include models for face, language, burst, and speech, with implementations in Python and Typescript using frameworks like Next.js.

Starmoon

Starmoon is an affordable, compact AI-enabled device that can understand and respond to your emotions with empathy. It offers supportive conversations and personalized learning assistance. The device is cost-effective, voice-enabled, open-source, compact, and aims to reduce screen time. Users can assemble the device themselves using off-the-shelf components and deploy it locally for data privacy. Starmoon integrates various APIs for AI language models, speech-to-text, text-to-speech, and emotion intelligence. The hardware setup involves components like ESP32S3, microphone, amplifier, speaker, LED light, and button, along with software setup instructions for developers. The project also includes a web app, backend API, and background task dashboard for monitoring and management.

gabber

Gabber is a real-time AI engine that supports graph-based apps with multiple participants and simultaneous media streams. It allows developers to build powerful and developer-friendly AI applications across voice, text, video, and more. The engine consists of frontend and backend services including an editor, engine, and repository. Gabber provides SDKs for JavaScript/TypeScript, React, Python, Unity, and upcoming support for iOS, Android, React Native, and Flutter. The roadmap includes adding more nodes and examples, such as computer use nodes, Unity SDK with robotics simulation, SIP nodes, and multi-participant turn-taking. Users can create apps using nodes, pads, subgraphs, and state machines to define application flow and logic.

MiniAI-Face-Recognition-LivenessDetection-WindowsSDK

This repository contains a C++ application that demonstrates face recognition capabilities using computer vision techniques. The demo utilizes OpenCV and dlib libraries for efficient face detection and recognition with 3D passive face liveness detection (face anti-spoofing). Key Features: Face detection: The SDK utilizes advanced computer vision techniques to detect faces in images or video frames, enabling a wide range of applications. Face recognition: It can recognize known faces by comparing them with a pre-defined database of individuals. Age estimation: It can estimate the age of detected faces. Gender detection: It can determine the gender of detected faces. Liveness detection: It can detect whether a face is from a live person or a static image.

face-api

FaceAPI is an AI-powered tool for face detection, rotation tracking, face description, recognition, age, gender, and emotion prediction. It can be used in both browser and NodeJS environments using TensorFlow/JS. The tool provides live demos for processing images and webcam feeds, along with NodeJS examples for various tasks such as face similarity comparison and multiprocessing. FaceAPI offers different pre-built versions for client-side browser execution and server-side NodeJS execution, with or without TFJS pre-bundled. It is compatible with TFJS 2.0+ and TFJS 3.0+.

MiKaPo

MiKaPo is a web-based tool that allows users to pose MMD models in real-time using video input. It utilizes technologies such as Mediapipe for 3D key points detection, Babylon.js for 3D scene rendering, babylon-mmd for MMD model viewing, and Vite+React for the web framework. Users can upload videos and images, select different environments, and choose models for posing. MiKaPo also supports camera input and Ollama (electron version). The tool is open to feature requests and pull requests, with ongoing development to add VMD export functionality.

For similar jobs

human

AI-powered 3D Face Detection & Rotation Tracking, Face Description & Recognition, Body Pose Tracking, 3D Hand & Finger Tracking, Iris Analysis, Age & Gender & Emotion Prediction, Gaze Tracking, Gesture Recognition, Body Segmentation

ailia-models

The collection of pre-trained, state-of-the-art AI models. ailia SDK is a self-contained, cross-platform, high-speed inference SDK for AI. The ailia SDK provides a consistent C++ API across Windows, Mac, Linux, iOS, Android, Jetson, and Raspberry Pi platforms. It also supports Unity (C#), Python, Rust, Flutter(Dart) and JNI for efficient AI implementation. The ailia SDK makes extensive use of the GPU through Vulkan and Metal to enable accelerated computing. # Supported models 323 models as of April 8th, 2024