xtuner

A Next-Generation Training Engine Built for Ultra-Large MoE Models

Stars: 4829

XTuner is an efficient, flexible, and full-featured toolkit for fine-tuning large models. It supports various LLMs (InternLM, Mixtral-8x7B, Llama 2, ChatGLM, Qwen, Baichuan, ...), VLMs (LLaVA), and various training algorithms (QLoRA, LoRA, full-parameter fine-tune). XTuner also provides tools for chatting with pretrained / fine-tuned LLMs and deploying fine-tuned LLMs with any other framework, such as LMDeploy.

README:

- [2025/09] XTuner V1 Released! A Next-Generation Training Engine Built for Ultra-Large MoE Models

XTuner V1 is a next-generation LLM training engine specifically designed for ultra-large-scale MoE models. Unlike traditional 3D parallel training architectures, XTuner V1 is optimized for the mainstream MoE training scenarios prevalent in today's academic research.

📊 Dropless Training

- Scalable without complexity: Train 200B-scale MoE models without expert parallelism; 600B models require only intra-node expert parallelism

- Optimized parallelism strategy: Smaller expert parallelism dimension compared to traditional 3D approaches, enabling more efficient Dropless training

📝 Long Sequence Support

- Memory-efficient design: Train 200B MoE models on 64k sequence lengths without sequence parallelism through advanced memory optimization techniques

- Flexible scaling: Full support for DeepSpeed Ulysses sequence parallelism with linearly scalable maximum sequence length

- Robust performance: Maintains stability despite expert load imbalance during long sequence training

⚡ Superior Efficiency

- Massive scale: Supports MoE training up to 1T parameters

- Breakthrough performance: First to achieve FSDP training throughput that surpasses traditional 3D parallel schemes for MoE models above 200B scale

- Hardware optimization: Achieves training efficiency on Ascend A3 Supernode that exceeds NVIDIA H800

XTuner V1 is committed to continuously improving training efficiency for pre-training, instruction fine-tuning, and reinforcement learning of ultra-large MoE models, with special focus on Ascend NPU optimization.

Our vision is to establish XTuner V1 as a versatile training backend that seamlessly integrates with the broader open-source ecosystem.

| Model | GPU(FP8) | GPU(BF16) | NPU(BF16) |

|---|---|---|---|

| Intern S1 | ✅ | ✅ | ✅ |

| Intern VL | ✅ | ✅ | ✅ |

| Qwen3 Dense | ✅ | ✅ | ✅ |

| Qwen3 MoE | ✅ | ✅ | ✅ |

| GPT OSS | ✅ | ✅ | 🚧 |

| Deepseek V3 | ✅ | ✅ | 🚧 |

| KIMI K2 | ✅ | ✅ | 🚧 |

The algorithm component is actively evolving. We welcome community contributions - with XTuner V1, scale your algorithms to unprecedented sizes!

Implemented

- ✅ Multimodal Pre-training - Full support for vision-language model training

- ✅ Multimodal Supervised Fine-tuning - Optimized for instruction following

- ✅ GRPO - Group Relative Policy Optimization

Coming Soon

- 🔄 MPO - Mixed Preference Optimization

- 🔄 DAPO - Dynamic Sampling Policy Optimization

- 🔄 Multi-turn Agentic RL - Advanced agent training capabilities

Seamless deployment with leading inference frameworks:

- [x] LMDeploy

- [ ] vLLM

- [ ] SGLang

- You can use GraphGen to create synthetic data for fine-tuning.

We appreciate all contributions to XTuner. Please refer to CONTRIBUTING.md for the contributing guideline.

The development of XTuner V1's training engine has been greatly inspired by and built upon the excellent work of the open-source community. We extend our sincere gratitude to the following pioneering projects:

Training Engine:

- Torchtitan - A PyTorch native platform for training generative AI models

- Deepspeed - Microsoft's deep learning optimization library

- MindSpeed - Ascend's high-performance training acceleration library

- Megatron - NVIDIA's large-scale transformer training framework

Reinforcement Learning:

XTuner V1's reinforcement learning capabilities have been enhanced through insights and best practices from:

- veRL - Volcano Engine Reinforcement Learning for LLMs

- SLIME - THU's scalable RLHF implementation

- AReal - Ant Reasoning Reinforcement Learning for LLMs

- OpenRLHF - An Easy-to-use, Scalable and High-performance RLHF Framework based on Ray

We are deeply grateful to all contributors and maintainers of these projects for advancing the field of large-scale model training.

@misc{2023xtuner,

title={XTuner: A Toolkit for Efficiently Fine-tuning LLM},

author={XTuner Contributors},

howpublished = {\url{https://github.com/InternLM/xtuner}},

year={2023}

}This project is released under the Apache License 2.0. Please also adhere to the Licenses of models and datasets being used.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for xtuner

Similar Open Source Tools

xtuner

XTuner is an efficient, flexible, and full-featured toolkit for fine-tuning large models. It supports various LLMs (InternLM, Mixtral-8x7B, Llama 2, ChatGLM, Qwen, Baichuan, ...), VLMs (LLaVA), and various training algorithms (QLoRA, LoRA, full-parameter fine-tune). XTuner also provides tools for chatting with pretrained / fine-tuned LLMs and deploying fine-tuned LLMs with any other framework, such as LMDeploy.

big-AGI

big-AGI is an AI suite designed for professionals seeking function, form, simplicity, and speed. It offers best-in-class Chats, Beams, and Calls with AI personas, visualizations, coding, drawing, side-by-side chatting, and more, all wrapped in a polished UX. The tool is powered by the latest models from 12 vendors and open-source servers, providing users with advanced AI capabilities and a seamless user experience. With continuous updates and enhancements, big-AGI aims to stay ahead of the curve in the AI landscape, catering to the needs of both developers and AI enthusiasts.

computer

Cua is a tool for creating and running high-performance macOS and Linux VMs on Apple Silicon, with built-in support for AI agents. It provides libraries like Lume for running VMs with near-native performance, Computer for interacting with sandboxes, and Agent for running agentic workflows. Users can refer to the documentation for onboarding and explore demos showcasing the tool's capabilities. Additionally, accessory libraries like Core, PyLume, Computer Server, and SOM offer additional functionality. Contributions to Cua are welcome, and the tool is open-sourced under the MIT License.

retinify

Retinify is an advanced AI-powered stereo vision library designed for robotics, enabling real-time, high-precision 3D perception by leveraging GPU and NPU acceleration. It is open source under Apache-2.0 license, offers high precision 3D mapping and object recognition, runs computations on GPU for fast performance, accepts stereo images from any rectified camera setup, is cost-efficient using minimal hardware, and has minimal dependencies on CUDA Toolkit, cuDNN, and TensorRT. The tool provides a pipeline for stereo matching and supports various image data types independently of OpenCV.

anx-reader

Anx Reader is a meticulously designed e-book reader tailored for book enthusiasts. It boasts powerful AI functionalities and supports various e-book formats, enhancing the reading experience. With a modern interface, the tool aims to provide a seamless and enjoyable reading journey. It offers rich format support, seamless sync across devices, smart AI assistance, personalized reading experiences, professional reading analytics, a powerful note system, practical tools, and cross-platform support. The tool is continuously evolving with features like UI adaptation for tablets, page-turning animation, TTS voice reading, reading fonts, translation, and more in the pipeline.

RLinf

RLinf is a flexible and scalable open-source infrastructure designed for post-training foundation models via reinforcement learning. It provides a robust backbone for next-generation training, supporting open-ended learning, continuous generalization, and limitless possibilities in intelligence development. The tool offers unique features like Macro-to-Micro Flow, flexible execution modes, auto-scheduling strategy, embodied agent support, and fast adaptation for mainstream VLA models. RLinf is fast with hybrid mode and automatic online scaling strategy, achieving significant throughput improvement and efficiency. It is also flexible and easy to use with multiple backend integrations, adaptive communication, and built-in support for popular RL methods. The roadmap includes system-level enhancements and application-level extensions to support various training scenarios and models. Users can get started with complete documentation, quickstart guides, key design principles, example gallery, advanced features, and guidelines for extending the framework. Contributions are welcome, and users are encouraged to cite the GitHub repository and acknowledge the broader open-source community.

sdnext

SD.Next is an Image Diffusion implementation with advanced features. It offers multiple UI options, diffusion models, and built-in controls for text, image, batch, and video processing. The tool is multiplatform, supporting Windows, Linux, MacOS, nVidia, AMD, IntelArc/IPEX, DirectML, OpenVINO, ONNX+Olive, and ZLUDA. It provides optimized processing with the latest torch developments, including model compile, quantize, and compress functionalities. SD.Next also features Interrogate/Captioning with various models, queue management, automatic updates, and mobile compatibility.

nuitrack-sdk

Nuitrack™ is an ultimate 3D body tracking solution developed by 3DiVi Inc. It enables body motion analytics applications for virtually any widespread depth sensors and hardware platforms, supporting a wide range of applications from real-time gesture recognition on embedded platforms to large-scale multisensor analytical systems. Nuitrack provides highly-sophisticated 3D skeletal tracking, basic facial analysis, hand tracking, and gesture recognition APIs for UI control. It offers two skeletal tracking engines: classical for embedded hardware and AI for complex poses, providing a human-centric spatial understanding tool for natural and intelligent user engagement.

automatic

Automatic is an Image Diffusion implementation with advanced features. It supports multiple diffusion models, built-in control for text, image, batch, and video processing, and is compatible with various platforms and backends. The tool offers optimized processing with the latest torch developments, built-in support for torch.compile, and multiple compile backends. It also features platform-specific autodetection, queue management, enterprise-level logging, and a built-in installer with automatic updates and dependency management. Automatic is mobile compatible and provides a main interface using StandardUI and ModernUI.

Awesome-Lists-and-CheatSheets

Awesome-Lists is a curated index of selected resources spanning various fields including programming languages and theories, web and frontend development, server-side development and infrastructure, cloud computing and big data, data science and artificial intelligence, product design, etc. It includes articles, books, courses, examples, open-source projects, and more. The repository categorizes resources according to the knowledge system of different domains, aiming to provide valuable and concise material indexes for readers. Users can explore and learn from a wide range of high-quality resources in a systematic way.

MATLAB-Simulink-Challenge-Project-Hub

MATLAB-Simulink-Challenge-Project-Hub is a repository aimed at contributing to the progress of engineering and science by providing challenge projects with real industry relevance and societal impact. The repository offers a wide range of projects covering various technology trends such as Artificial Intelligence, Autonomous Vehicles, Big Data, Computer Vision, and Sustainability. Participants can gain practical skills with MATLAB and Simulink while making a significant contribution to science and engineering. The projects are designed to enhance expertise in areas like Sustainability and Renewable Energy, Control, Modeling and Simulation, Machine Learning, and Robotics. By participating in these projects, individuals can receive official recognition for their problem-solving skills from technology leaders at MathWorks and earn rewards upon project completion.

supabase

Supabase is an open source Firebase alternative that provides a wide range of features including a hosted Postgres database, authentication and authorization, auto-generated APIs, REST and GraphQL support, realtime subscriptions, functions, file storage, AI and vector/embeddings toolkit, and a dashboard. It aims to offer developers a Firebase-like experience using enterprise-grade open source tools.

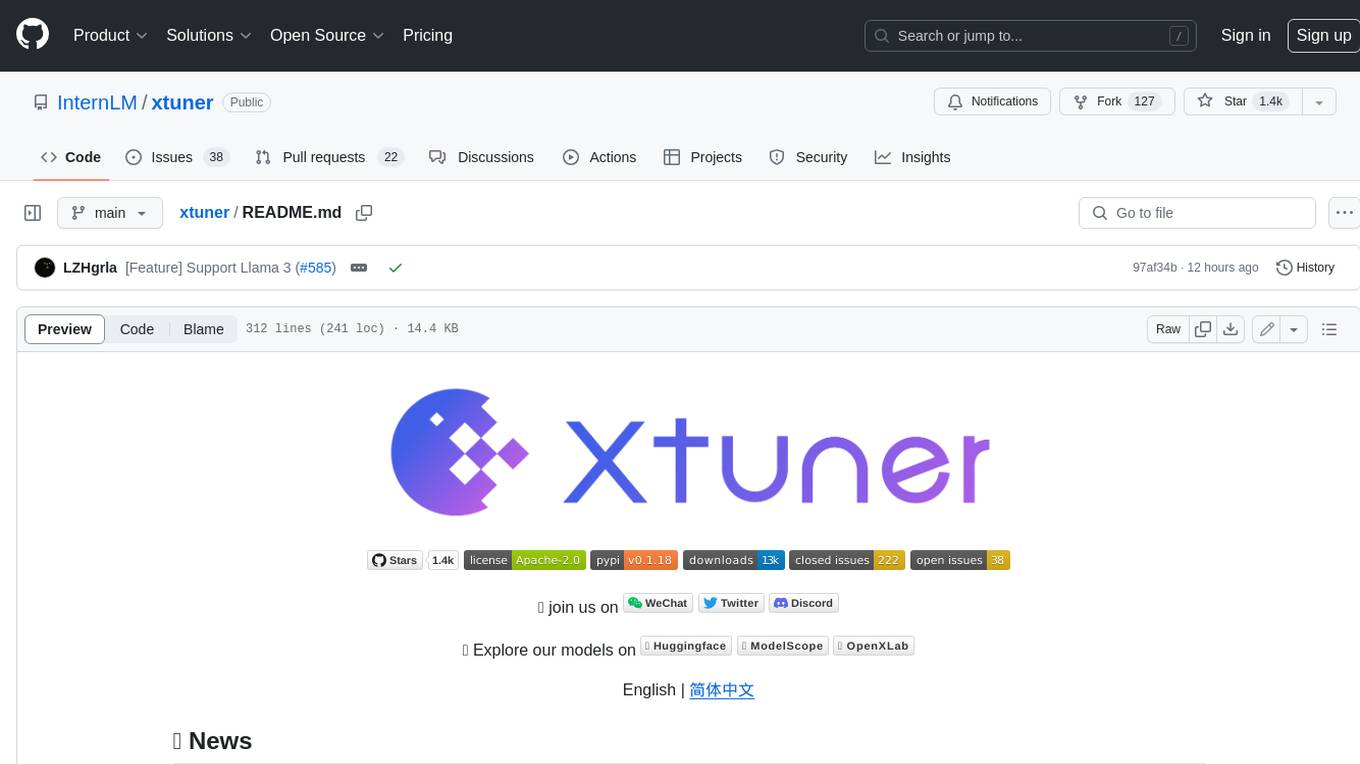

comfyui-photoshop

ComfyUI for Photoshop is a plugin that integrates with an AI-powered image generation system to enhance the Photoshop experience with features like unlimited generative fill, customizable back-end, AI-powered artistry, and one-click transformation. The plugin requires a minimum of 6GB graphics memory and 12GB RAM. Users can install the plugin and set up the ComfyUI workflow using provided links and files. Additionally, specific files like Check points, Loras, and Detailer Lora are required for different functionalities. Support and contributions are encouraged through GitHub.

superagentx

SuperAgentX is a lightweight open-source AI framework designed for multi-agent applications with Artificial General Intelligence (AGI) capabilities. It offers goal-oriented multi-agents with retry mechanisms, easy deployment through WebSocket, RESTful API, and IO console interfaces, streamlined architecture with no major dependencies, contextual memory using SQL + Vector databases, flexible LLM configuration supporting various Gen AI models, and extendable handlers for integration with diverse APIs and data sources. It aims to accelerate the development of AGI by providing a powerful platform for building autonomous AI agents capable of executing complex tasks with minimal human intervention.

Open-Sora-Plan

Open-Sora-Plan is a project that aims to create a simple and scalable repo to reproduce Sora (OpenAI, but we prefer to call it "ClosedAI"). The project is still in its early stages, but the team is working hard to improve it and make it more accessible to the open-source community. The project is currently focused on training an unconditional model on a landscape dataset, but the team plans to expand the scope of the project in the future to include text2video experiments, training on video2text datasets, and controlling the model with more conditions.

For similar tasks

xtuner

XTuner is an efficient, flexible, and full-featured toolkit for fine-tuning large models. It supports various LLMs (InternLM, Mixtral-8x7B, Llama 2, ChatGLM, Qwen, Baichuan, ...), VLMs (LLaVA), and various training algorithms (QLoRA, LoRA, full-parameter fine-tune). XTuner also provides tools for chatting with pretrained / fine-tuned LLMs and deploying fine-tuned LLMs with any other framework, such as LMDeploy.

llm-hosting-container

The LLM Hosting Container repository provides Dockerfile and associated resources for building and hosting containers for large language models, specifically the HuggingFace Text Generation Inference (TGI) container. This tool allows users to easily deploy and manage large language models in a containerized environment, enabling efficient inference and deployment of language-based applications.

wingman

The LLM Platform, also known as Inference Hub, is an open-source tool designed to simplify the development and deployment of large language model applications at scale. It provides a unified framework for integrating and managing multiple LLM vendors, models, and related services through a flexible approach. The platform supports various LLM providers, document processing, RAG, advanced AI workflows, infrastructure operations, and flexible configuration using YAML files. Its modular and extensible architecture allows developers to plug in different providers and services as needed. Key components include completers, embedders, renderers, synthesizers, transcribers, document processors, segmenters, retrievers, summarizers, translators, AI workflows, tools, and infrastructure components. Use cases range from enterprise AI applications to scalable LLM deployment and custom AI pipelines. Integrations with LLM providers like OpenAI, Azure OpenAI, Anthropic, Google Gemini, AWS Bedrock, Groq, Mistral AI, xAI, Hugging Face, and more are supported.

Awesome-local-LLM

Awesome-local-LLM is a curated list of platforms, tools, practices, and resources that help run Large Language Models (LLMs) locally. It includes sections on inference platforms, engines, user interfaces, specific models for general purpose, coding, vision, audio, and miscellaneous tasks. The repository also covers tools for coding agents, agent frameworks, retrieval-augmented generation, computer use, browser automation, memory management, testing, evaluation, research, training, and fine-tuning. Additionally, there are tutorials on models, prompt engineering, context engineering, inference, agents, retrieval-augmented generation, and miscellaneous topics, along with a section on communities for LLM enthusiasts.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.