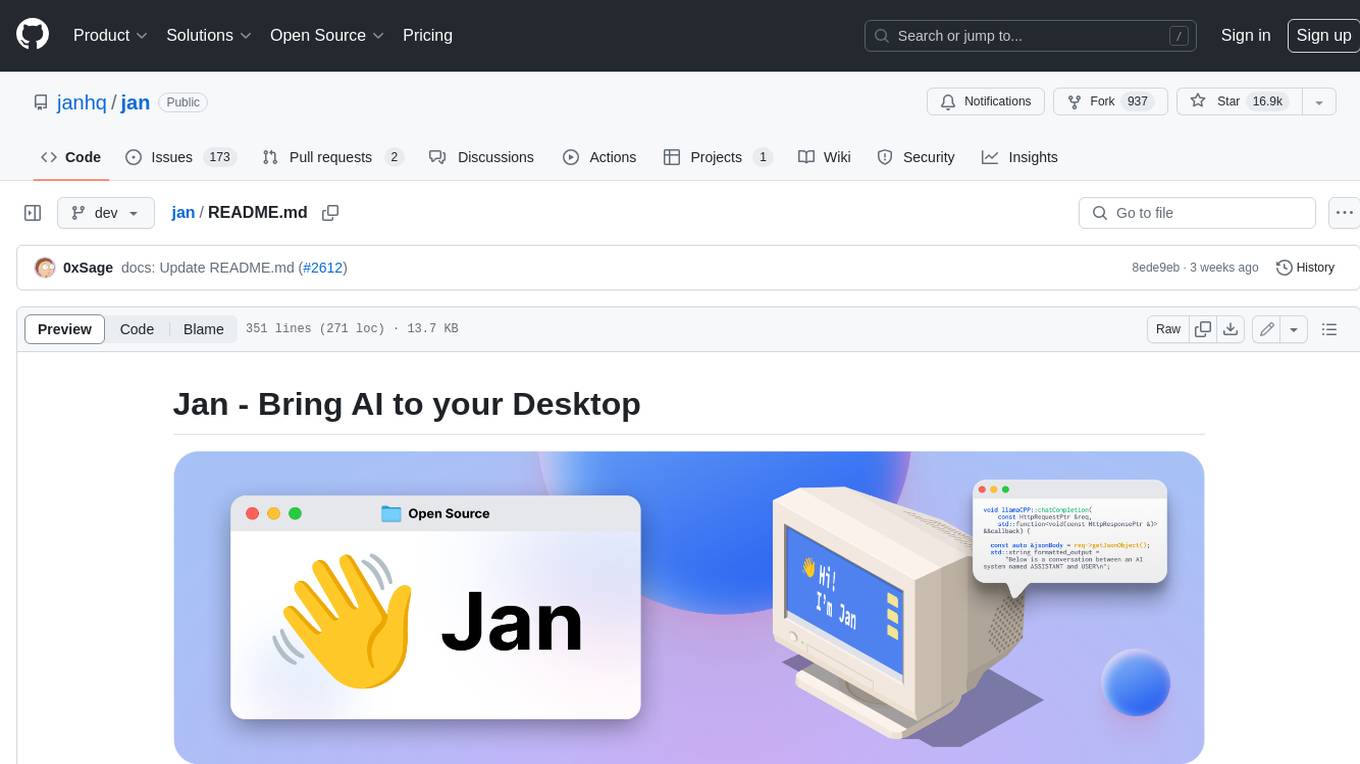

jan

Jan is an open source alternative to ChatGPT that runs 100% offline on your computer.

Stars: 40374

Jan is an open-source ChatGPT alternative that runs 100% offline on your computer. It supports universal architectures, including Nvidia GPUs, Apple M-series, Apple Intel, Linux Debian, and Windows x64. Jan is currently in development, so expect breaking changes and bugs. It is lightweight and embeddable, and can be used on its own within your own projects.

README:

Getting Started - Community - Changelog - Bug reports

Jan is bringing the best of open-source AI in an easy-to-use product. Download and run LLMs with full control and privacy.

|

|

The easiest way to get started is by downloading one of the following versions for your respective operating system:

| Platform | Download |

| Windows | jan.exe |

| macOS | jan.dmg |

| Linux (deb) | jan.deb |

| Linux (AppImage) | jan.AppImage |

| Linux (Arm64) | How-to |

Download from jan.ai or GitHub Releases.

- Local AI Models: Download and run LLMs (Llama, Gemma, Qwen, GPT-oss etc.) from HuggingFace

- Cloud Integration: Connect to GPT models via OpenAI, Claude models via Anthropic, Mistral, Groq, and others

- Custom Assistants: Create specialized AI assistants for your tasks

-

OpenAI-Compatible API: Local server at

localhost:1337for other applications - Model Context Protocol: MCP integration for agentic capabilities

- Privacy First: Everything runs locally when you want it to

For those who enjoy the scenic route:

- Node.js ≥ 20.0.0

- Yarn ≥ 1.22.0

- Make ≥ 3.81

- Rust (for Tauri)

git clone https://github.com/janhq/jan

cd jan

make devThis handles everything: installs dependencies, builds core components, and launches the app.

Available make targets:

-

make dev- Full development setup and launch -

make build- Production build -

make test- Run tests and linting -

make clean- Delete everything and start fresh

yarn install

yarn build:tauri:plugin:api

yarn build:core

yarn build:extensions

yarn devMinimum specs for a decent experience:

- macOS: 13.6+ (8GB RAM for 3B models, 16GB for 7B, 32GB for 13B)

- Windows: 10+ with GPU support for NVIDIA/AMD/Intel Arc

- Linux: Most distributions work, GPU acceleration available

For detailed compatibility, check our installation guides.

If things go sideways:

- Check our troubleshooting docs

- Copy your error logs and system specs

- Ask for help in our Discord

#🆘|jan-helpchannel

Contributions welcome. See CONTRIBUTING.md for the full spiel.

- Documentation - The manual you should read

- API Reference - For the technically inclined

- Changelog - What we broke and fixed

- Discord - Where the community lives

- Bugs: GitHub Issues

- Business: [email protected]

- Jobs: [email protected]

- General Discussion: Discord

Apache 2.0 - Because sharing is caring.

Built on the shoulders of giants:

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for jan

Similar Open Source Tools

jan

Jan is an open-source ChatGPT alternative that runs 100% offline on your computer. It supports universal architectures, including Nvidia GPUs, Apple M-series, Apple Intel, Linux Debian, and Windows x64. Jan is currently in development, so expect breaking changes and bugs. It is lightweight and embeddable, and can be used on its own within your own projects.

transformerlab-app

Transformer Lab is an app that allows users to experiment with Large Language Models by providing features such as one-click download of popular models, finetuning across different hardware, RLHF and Preference Optimization, working with LLMs across different operating systems, chatting with models, using different inference engines, evaluating models, building datasets for training, calculating embeddings, providing a full REST API, running in the cloud, converting models across platforms, supporting plugins, embedded Monaco code editor, prompt editing, inference logs, all through a simple cross-platform GUI.

nodetool

NodeTool is a platform designed for AI enthusiasts, developers, and creators, providing a visual interface to access a variety of AI tools and models. It simplifies access to advanced AI technologies, offering resources for content creation, data analysis, automation, and more. With features like a visual editor, seamless integration with leading AI platforms, model manager, and API integration, NodeTool caters to both newcomers and experienced users in the AI field.

AionUi

AionUi is a user interface library for building modern and responsive web applications. It provides a set of customizable components and styles to create visually appealing user interfaces. With AionUi, developers can easily design and implement interactive web interfaces that are both functional and aesthetically pleasing. The library is built using the latest web technologies and follows best practices for performance and accessibility. Whether you are working on a personal project or a professional application, AionUi can help you streamline the UI development process and deliver a seamless user experience.

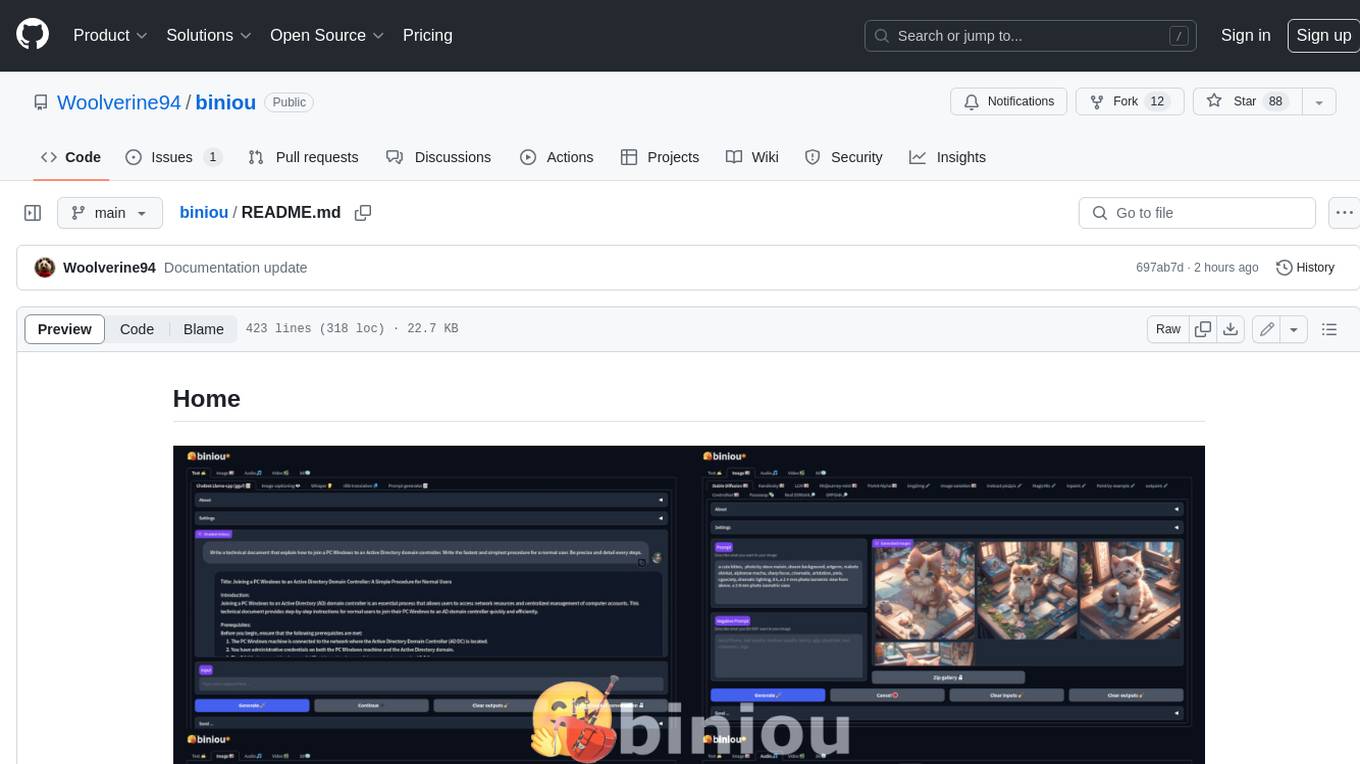

biniou

biniou is a self-hosted webui for various GenAI (generative artificial intelligence) tasks. It allows users to generate multimedia content using AI models and chatbots on their own computer, even without a dedicated GPU. The tool can work offline once deployed and required models are downloaded. It offers a wide range of features for text, image, audio, video, and 3D object generation and modification. Users can easily manage the tool through a control panel within the webui, with support for various operating systems and CUDA optimization. biniou is powered by Huggingface and Gradio, providing a cross-platform solution for AI content generation.

handit.ai

Handit.ai is an autonomous engineer tool designed to fix AI failures 24/7. It catches failures, writes fixes, tests them, and ships PRs automatically. It monitors AI applications, detects issues, generates fixes, tests them against real data, and ships them as pull requests—all automatically. Users can write JavaScript, TypeScript, Python, and more, and the tool automates what used to require manual debugging and firefighting.

gemini-voyager

Gemini Voyager is a browser extension designed to enhance the user experience of Google Gemini by providing features such as folder organization, prompt vault, cloud sync, timeline navigation, chat export, Mermaid rendering, markdown fixing, and various power tools. It aims to help users keep their AI conversations organized, accessible, and productive. The extension is available on multiple browsers and supports manual installation and development builds. Users can also support the project by buying the developer a coffee or sponsoring via different platforms.

llamafarm

LlamaFarm is a comprehensive AI framework that empowers users to build powerful AI applications locally, with full control over costs and deployment options. It provides modular components for RAG systems, vector databases, model management, prompt engineering, and fine-tuning. Users can create differentiated AI products without needing extensive ML expertise, using simple CLI commands and YAML configs. The framework supports local-first development, production-ready components, strategy-based configuration, and deployment anywhere from laptops to the cloud.

claude-code-plugins-plus-skills

Claude Code Skills & Plugins Hub is a comprehensive marketplace for agent skills and plugins, offering 1537 production-ready agent skills and 270 total plugins. It provides a learning lab with guides, diagrams, and examples for building production agent workflows. The package manager CLI allows users to discover, install, and manage plugins from their terminal, with features like searching, listing, installing, updating, and validating plugins. The marketplace is not on GitHub Marketplace and does not support built-in monetization. It is community-driven, actively maintained, and focuses on quality over quantity, aiming to be the definitive resource for Claude Code plugins.

neuropilot

NeuroPilot is an open-source AI-powered education platform that transforms study materials into interactive learning resources. It provides tools like contextual chat, smart notes, flashcards, quizzes, and AI podcasts. Supported by various AI models and embedding providers, it offers features like WebSocket streaming, JSON or vector database support, file-based storage, and configurable multi-provider setup for LLMs and TTS engines. The technology stack includes Node.js, TypeScript, Vite, React, TailwindCSS, JSON database, multiple LLM providers, and Docker for deployment. Users can contribute to the project by integrating AI models, adding mobile app support, improving performance, enhancing accessibility features, and creating documentation and tutorials.

CBbot

CBbot is an AI-powered coding assistant for macOS that helps users write code more efficiently, process documents, and automate tasks. It offers easy installation, built-in AI coding capabilities, auto configuration, and smart tools. Users can download CBbot for macOS 10.15 or higher, with Apple Silicon or Intel chip, and at least 6GB memory and 10GB disk space. The tool requires an internet connection for AI features. CBbot assists users in installing Docker Desktop, binding keys, troubleshooting, and using various skills for document processing and automation tasks. It also provides community support, billing based on usage, and network tips for using overseas AI models.

RSTGameTranslation

RSTGameTranslation is a tool designed for translating game text into multiple languages efficiently. It provides a user-friendly interface for game developers to easily manage and localize their game content. With RSTGameTranslation, developers can streamline the translation process, ensuring consistency and accuracy across different language versions of their games. The tool supports various file formats commonly used in game development, making it versatile and adaptable to different project requirements. Whether you are working on a small indie game or a large-scale production, RSTGameTranslation can help you reach a global audience by making localization a seamless and hassle-free experience.

Lumina-Note

Lumina Note is a local-first AI note-taking app designed to help users write, connect, and evolve knowledge with AI capabilities while ensuring data ownership. It offers a knowledge-centered workflow with features like Markdown editor, WikiLinks, and graph view. The app includes AI workspace modes such as Chat, Agent, Deep Research, and Codex, along with support for multiple model providers. Users can benefit from bidirectional links, LaTeX support, graph visualization, PDF reader with annotations, real-time voice input, and plugin ecosystem for extended functionalities. Lumina Note is built on Tauri v2 framework with a tech stack including React 18, TypeScript, Tailwind CSS, and SQLite for vector storage.

natively-cluely-ai-assistant

Natively is a free, open-source, privacy-first AI assistant designed to help users in real time during meetings, interviews, presentations, and conversations. Unlike traditional AI tools that work after the conversation, Natively operates while the conversation is happening. It runs as an invisible, always-on-top desktop overlay, listens when prompted, observes the screen content, and provides instant, context-aware assistance. The tool is fully transparent, customizable, and grants users complete control over local vs cloud AI, data, and credentials.

tingly-box

Tingly Box is a tool that helps in deciding which model to call, compressing context, and routing requests efficiently. It offers secure, reliable, and customizable functional extensions. With features like unified API, smart routing, context compression, auto API translation, blazing fast performance, flexible authentication, visual control panel, and client-side usage stats, Tingly Box provides a comprehensive solution for managing AI models and tokens. It supports integration with various IDEs, CLI tools, SDKs, and AI applications, making it versatile and easy to use. The tool also allows seamless integration with OAuth providers like Claude Code, enabling users to utilize existing quotas in OpenAI-compatible tools. Tingly Box aims to simplify AI model management and usage by providing a single endpoint for multiple providers with minimal configuration, promoting seamless integration with SDKs and CLI tools.

opcode

opcode is a powerful desktop application built with Tauri 2 that serves as a command center for interacting with Claude Code. It offers a visual GUI for managing Claude Code sessions, creating custom agents, tracking usage, and more. Users can navigate projects, create specialized AI agents, monitor usage analytics, manage MCP servers, create session checkpoints, edit CLAUDE.md files, and more. The tool bridges the gap between command-line tools and visual experiences, making AI-assisted development more intuitive and productive.

For similar tasks

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

onnxruntime-genai

ONNX Runtime Generative AI is a library that provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. Users can call a high level `generate()` method, or run each iteration of the model in a loop. It supports greedy/beam search and TopP, TopK sampling to generate token sequences, has built in logits processing like repetition penalties, and allows for easy custom scoring.

jupyter-ai

Jupyter AI connects generative AI with Jupyter notebooks. It provides a user-friendly and powerful way to explore generative AI models in notebooks and improve your productivity in JupyterLab and the Jupyter Notebook. Specifically, Jupyter AI offers: * An `%%ai` magic that turns the Jupyter notebook into a reproducible generative AI playground. This works anywhere the IPython kernel runs (JupyterLab, Jupyter Notebook, Google Colab, Kaggle, VSCode, etc.). * A native chat UI in JupyterLab that enables you to work with generative AI as a conversational assistant. * Support for a wide range of generative model providers, including AI21, Anthropic, AWS, Cohere, Gemini, Hugging Face, NVIDIA, and OpenAI. * Local model support through GPT4All, enabling use of generative AI models on consumer grade machines with ease and privacy.

khoj

Khoj is an open-source, personal AI assistant that extends your capabilities by creating always-available AI agents. You can share your notes and documents to extend your digital brain, and your AI agents have access to the internet, allowing you to incorporate real-time information. Khoj is accessible on Desktop, Emacs, Obsidian, Web, and Whatsapp, and you can share PDF, markdown, org-mode, notion files, and GitHub repositories. You'll get fast, accurate semantic search on top of your docs, and your agents can create deeply personal images and understand your speech. Khoj is self-hostable and always will be.

langchain_dart

LangChain.dart is a Dart port of the popular LangChain Python framework created by Harrison Chase. LangChain provides a set of ready-to-use components for working with language models and a standard interface for chaining them together to formulate more advanced use cases (e.g. chatbots, Q&A with RAG, agents, summarization, extraction, etc.). The components can be grouped into a few core modules: * **Model I/O:** LangChain offers a unified API for interacting with various LLM providers (e.g. OpenAI, Google, Mistral, Ollama, etc.), allowing developers to switch between them with ease. Additionally, it provides tools for managing model inputs (prompt templates and example selectors) and parsing the resulting model outputs (output parsers). * **Retrieval:** assists in loading user data (via document loaders), transforming it (with text splitters), extracting its meaning (using embedding models), storing (in vector stores) and retrieving it (through retrievers) so that it can be used to ground the model's responses (i.e. Retrieval-Augmented Generation or RAG). * **Agents:** "bots" that leverage LLMs to make informed decisions about which available tools (such as web search, calculators, database lookup, etc.) to use to accomplish the designated task. The different components can be composed together using the LangChain Expression Language (LCEL).

danswer

Danswer is an open-source Gen-AI Chat and Unified Search tool that connects to your company's docs, apps, and people. It provides a Chat interface and plugs into any LLM of your choice. Danswer can be deployed anywhere and for any scale - on a laptop, on-premise, or to cloud. Since you own the deployment, your user data and chats are fully in your own control. Danswer is MIT licensed and designed to be modular and easily extensible. The system also comes fully ready for production usage with user authentication, role management (admin/basic users), chat persistence, and a UI for configuring Personas (AI Assistants) and their Prompts. Danswer also serves as a Unified Search across all common workplace tools such as Slack, Google Drive, Confluence, etc. By combining LLMs and team specific knowledge, Danswer becomes a subject matter expert for the team. Imagine ChatGPT if it had access to your team's unique knowledge! It enables questions such as "A customer wants feature X, is this already supported?" or "Where's the pull request for feature Y?"

infinity

Infinity is an AI-native database designed for LLM applications, providing incredibly fast full-text and vector search capabilities. It supports a wide range of data types, including vectors, full-text, and structured data, and offers a fused search feature that combines multiple embeddings and full text. Infinity is easy to use, with an intuitive Python API and a single-binary architecture that simplifies deployment. It achieves high performance, with 0.1 milliseconds query latency on million-scale vector datasets and up to 15K QPS.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.