worker-vllm

The RunPod worker template for serving our large language model endpoints. Powered by vLLM.


The worker-vLLM repository provides a serverless endpoint for deploying OpenAI-compatible vLLM models with blazing-fast performance. It supports deploying various model architectures, such as Aquila, Baichuan, BLOOM, ChatGLM, Command-R, DBRX, DeciLM, Falcon, Gemma, GPT-2, GPT BigCode, GPT-J, GPT-NeoX, InternLM, Jais, LLaMA, MiniCPM, Mistral, Mixtral, MPT, OLMo, OPT, Orion, Phi, Phi-3, Qwen, Qwen2, Qwen2MoE, StableLM, Starcoder2, Xverse, and Yi. Users can deploy models using pre-built Docker images or build custom images with specified arguments. The repository also supports OpenAI compatibility for chat completions, completions, and models, with customizable input parameters. Users can modify their OpenAI codebase to use the deployed vLLM worker and access a list of available models for deployment.


Deploy OpenAI-Compatible Blazing-Fast LLM Endpoints powered by the vLLM Inference Engine on RunPod Serverless with just a few clicks.

Update v2.2.0 is now available; use the image tag `runpod/worker-v1-vllm:v2.2.0stable-cuda12.1.0`.

OpenAI-Compatible Embedding Worker Released

Deploy your own OpenAI-compatible Serverless Endpoint on RunPod with multiple embedding models and fast inference for RAG and more!

Worker vLLM is now cached on all RunPod machines, resulting in near-instant deployment! Previously, downloading and extracting the image took 3-5 minutes on average.

- Setting up the Serverless Worker

- Usage: OpenAI Compatibility

- Usage: standard

- Worker Config

[!NOTE] You can now deploy from the dedicated UI on the RunPod console, with all of the settings and choices listed. Try it now from the Explore or Serverless pages on the RunPod console!

We now offer a pre-built Docker Image for the vLLM Worker that you can configure entirely with Environment Variables when creating the RunPod Serverless Endpoint:

Below is a summary of the available RunPod Worker images, categorized by image stability and CUDA version compatibility.

| CUDA Version | Stable Image Tag | Development Image Tag | Note |
|---|---|---|---|
| 12.1.0 | `runpod/worker-v1-vllm:v2.2.0stable-cuda12.1.0` | `runpod/worker-v1-vllm:v2.2.0dev-cuda12.1.0` | When creating an Endpoint, select CUDA Version 12.3, 12.2 and 12.1 in the filter. |

- RunPod Account

Note: `0` is equivalent to `False` and `1` is equivalent to `True` for boolean as int values.
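If you script your endpoint configuration, it can help to read these flags the same way. The helper below is a hypothetical sketch (`env_bool` is not part of the worker): it maps `1`/`0` to `True`/`False` as described above, and accepting `true`/`false` as well is an assumption of this sketch; the worker's own parsing may accept a different set of spellings.

```python
import os


def env_bool(name: str, default: bool = False) -> bool:
    """Read a "boolean as int" environment variable.

    "1" maps to True and "0" to False, as described above. Accepting
    "true"/"false" (any case) as well is an assumption of this sketch.
    """
    raw = os.environ.get(name, "").strip().lower()
    if raw == "":
        return default  # unset or empty: fall back to the default
    if raw in ("1", "true"):
        return True
    if raw in ("0", "false"):
        return False
    raise ValueError(f"{name}={raw!r} is not a recognized boolean value")


# Usage: env_bool("TRUST_REMOTE_CODE") returns True when the variable is "1".
```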

| Name | Default | Type/Choices | Description |
|---|---|---|---|
| `MODEL_NAME` | `'facebook/opt-125m'` | `str` | Name or path of the Hugging Face model to use. |
| `TOKENIZER` | `None` | `str` | Name or path of the Hugging Face tokenizer to use. |
| `SKIP_TOKENIZER_INIT` | `False` | `bool` | Skip initialization of tokenizer and detokenizer. |
| `TOKENIZER_MODE` | `'auto'` | `['auto', 'slow']` | The tokenizer mode. |
| `TRUST_REMOTE_CODE` | `False` | `bool` | Trust remote code from Hugging Face. |
| `DOWNLOAD_DIR` | `None` | `str` | Directory to download and load the weights. |
| `LOAD_FORMAT` | `'auto'` | `str` | The format of the model weights to load. |
| `HF_TOKEN` | - | `str` | Hugging Face token for private and gated models. |
| `DTYPE` | `'auto'` | `['auto', 'half', 'float16', 'bfloat16', 'float', 'float32']` | Data type for model weights and activations. |
| `KV_CACHE_DTYPE` | `'auto'` | `['auto', 'fp8']` | Data type for KV cache storage. |
| `QUANTIZATION_PARAM_PATH` | `None` | `str` | Path to the JSON file containing the KV cache scaling factors. |
| `MAX_MODEL_LEN` | `None` | `int` | Model context length. |
| `GUIDED_DECODING_BACKEND` | `'outlines'` | `['outlines', 'lm-format-enforcer']` | Which engine will be used for guided decoding by default. |
| `DISTRIBUTED_EXECUTOR_BACKEND` | `None` | `['ray', 'mp']` | Backend to use for distributed serving. |
| `WORKER_USE_RAY` | `False` | `bool` | Deprecated; use `--distributed-executor-backend=ray` instead. |
| `PIPELINE_PARALLEL_SIZE` | `1` | `int` | Number of pipeline stages. |
| `TENSOR_PARALLEL_SIZE` | `1` | `int` | Number of tensor parallel replicas. |
| `MAX_PARALLEL_LOADING_WORKERS` | `None` | `int` | Load model sequentially in multiple batches. |
| `RAY_WORKERS_USE_NSIGHT` | `False` | `bool` | If specified, use nsight to profile Ray workers. |
| `ENABLE_PREFIX_CACHING` | `False` | `bool` | Enables automatic prefix caching. |
| `DISABLE_SLIDING_WINDOW` | `False` | `bool` | Disables sliding window, capping to sliding window size. |
| `USE_V2_BLOCK_MANAGER` | `False` | `bool` | Use BlockSpaceManagerV2. |
| `NUM_LOOKAHEAD_SLOTS` | `0` | `int` | Experimental scheduling config necessary for speculative decoding. |
| `SEED` | `0` | `int` | Random seed for operations. |
| `NUM_GPU_BLOCKS_OVERRIDE` | `None` | `int` | If specified, ignore GPU profiling result and use this number of GPU blocks. |
| `MAX_NUM_BATCHED_TOKENS` | `None` | `int` | Maximum number of batched tokens per iteration. |
| `MAX_NUM_SEQS` | `256` | `int` | Maximum number of sequences per iteration. |
| `MAX_LOGPROBS` | `20` | `int` | Max number of log probs to return when `logprobs` is specified in `SamplingParams`. |
| `DISABLE_LOG_STATS` | `False` | `bool` | Disable logging statistics. |
| `QUANTIZATION` | `None` | `['awq', 'squeezellm', 'gptq']` | Method used to quantize the weights. |
| `ROPE_SCALING` | `None` | `dict` | RoPE scaling configuration in JSON format. |
| `ROPE_THETA` | `None` | `float` | RoPE theta. Use with `rope_scaling`. |
| `TOKENIZER_POOL_SIZE` | `0` | `int` | Size of tokenizer pool to use for asynchronous tokenization. |
| `TOKENIZER_POOL_TYPE` | `'ray'` | `str` | Type of tokenizer pool to use for asynchronous tokenization. |
| `TOKENIZER_POOL_EXTRA_CONFIG` | `None` | `dict` | Extra config for tokenizer pool. |
| `ENABLE_LORA` | `False` | `bool` | If True, enable handling of LoRA adapters. |
| `MAX_LORAS` | `1` | `int` | Max number of LoRAs in a single batch. |
| `MAX_LORA_RANK` | `16` | `int` | Max LoRA rank. |
| `LORA_EXTRA_VOCAB_SIZE` | `256` | `int` | Maximum size of extra vocabulary for LoRA adapters. |
| `LORA_DTYPE` | `'auto'` | `['auto', 'float16', 'bfloat16', 'float32']` | Data type for LoRA. |
| `LONG_LORA_SCALING_FACTORS` | `None` | `tuple` | Specify multiple scaling factors for LoRA adapters. |
| `MAX_CPU_LORAS` | `None` | `int` | Maximum number of LoRAs to store in CPU memory. |
| `FULLY_SHARDED_LORAS` | `False` | `bool` | Enable fully sharded LoRA layers. |
| `SCHEDULER_DELAY_FACTOR` | `0.0` | `float` | Apply a delay before scheduling next prompt. |
| `ENABLE_CHUNKED_PREFILL` | `False` | `bool` | Enable chunked prefill requests. |
| `SPECULATIVE_MODEL` | `None` | `str` | The name of the draft model to be used in speculative decoding. |
| `NUM_SPECULATIVE_TOKENS` | `None` | `int` | The number of speculative tokens to sample from the draft model. |
| `SPECULATIVE_DRAFT_TENSOR_PARALLEL_SIZE` | `None` | `int` | Number of tensor parallel replicas for the draft model. |
| `SPECULATIVE_MAX_MODEL_LEN` | `None` | `int` | The maximum sequence length supported by the draft model. |
| `SPECULATIVE_DISABLE_BY_BATCH_SIZE` | `None` | `int` | Disable speculative decoding if the number of enqueued requests is larger than this value. |
| `NGRAM_PROMPT_LOOKUP_MAX` | `None` | `int` | Max size of window for ngram prompt lookup in speculative decoding. |
| `NGRAM_PROMPT_LOOKUP_MIN` | `None` | `int` | Min size of window for ngram prompt lookup in speculative decoding. |
| `SPEC_DECODING_ACCEPTANCE_METHOD` | `'rejection_sampler'` | `['rejection_sampler', 'typical_acceptance_sampler']` | Specify the acceptance method for draft token verification in speculative decoding. |
| `TYPICAL_ACCEPTANCE_SAMPLER_POSTERIOR_THRESHOLD` | `None` | `float` | Set the lower bound threshold for the posterior probability of a token to be accepted. |
| `TYPICAL_ACCEPTANCE_SAMPLER_POSTERIOR_ALPHA` | `None` | `float` | A scaling factor for the entropy-based threshold for token acceptance. |
| `MODEL_LOADER_EXTRA_CONFIG` | `None` | `dict` | Extra config for model loader. |
| `PREEMPTION_MODE` | `None` | `str` | If `'recompute'`, the engine performs preemption-aware recomputation. If `'save'`, the engine saves activations into the CPU memory as preemption happens. |
| `PREEMPTION_CHECK_PERIOD` | `1.0` | `float` | How frequently the engine checks if a preemption happens. |
| `PREEMPTION_CPU_CAPACITY` | `2` | `float` | The percentage of CPU memory used for the saved activations. |
| `DISABLE_LOGGING_REQUEST` | `False` | `bool` | Disable logging requests. |
| `MAX_LOG_LEN` | `None` | `int` | Max number of prompt characters or prompt ID numbers being printed in log. |
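Settings typed as `dict` or `tuple` above (for example `ROPE_SCALING`, `TOKENIZER_POOL_EXTRA_CONFIG`, `MODEL_LOADER_EXTRA_CONFIG`) still have to be passed as strings, because environment variables are strings. A minimal sketch, assuming you prepare the endpoint's environment from a Python script: `to_env_value` is a hypothetical helper that serializes containers with `json.dumps` and booleans as `1`/`0`. The `{"type": "dynamic", "factor": 2.0}` value is only an illustration in the style of vLLM's `--rope-scaling` flag; check the vLLM documentation for the keys your model supports.

```python
import json


def to_env_value(value) -> str:
    """Convert a Python setting into the string form an env var needs.

    dicts/lists/tuples become compact JSON, bools become "1"/"0"
    (boolean as int), and everything else is converted with str().
    """
    if isinstance(value, bool):  # check bool before int: bool subclasses int
        return "1" if value else "0"
    if isinstance(value, (dict, list, tuple)):
        return json.dumps(value, separators=(",", ":"))
    return str(value)


# Illustrative settings only, not recommendations for any particular model
settings = {
    "MAX_MODEL_LEN": 8192,
    "ENABLE_PREFIX_CACHING": True,
    "ROPE_SCALING": {"type": "dynamic", "factor": 2.0},
}

env = {name: to_env_value(value) for name, value in settings.items()}
print(env["ROPE_SCALING"])  # {"type":"dynamic","factor":2.0}
```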

**Tokenizer Settings**

| Name | Default | Type/Choices | Description |
|---|---|---|---|
| `TOKENIZER_NAME` | `None` | `str` | Tokenizer repository to use a different tokenizer than the model's default. |
| `TOKENIZER_REVISION` | `None` | `str` | Tokenizer revision to load. |
| `CUSTOM_CHAT_TEMPLATE` | `None` | `str` of single-line Jinja template | Custom chat Jinja template. |

**System, GPU, and Tensor Parallelism (Multi-GPU) Settings**

| Name | Default | Type/Choices | Description |
|---|---|---|---|
| `GPU_MEMORY_UTILIZATION` | `0.95` | `float` | Sets GPU VRAM utilization. |
| `MAX_PARALLEL_LOADING_WORKERS` | `None` | `int` | Load model sequentially in multiple batches, to avoid RAM OOM when using tensor parallel and large models. |
| `BLOCK_SIZE` | `16` | `8`, `16`, `32` | Token block size for contiguous chunks of tokens. |
| `SWAP_SPACE` | `4` | `int` | CPU swap space size (GiB) per GPU. |
| `ENFORCE_EAGER` | `False` | `bool` | Always use eager-mode PyTorch. If `False` (`0`), eager mode and CUDA graphs are used in hybrid for maximal performance and flexibility. |
| `MAX_SEQ_LEN_TO_CAPTURE` | `8192` | `int` | Maximum context length covered by CUDA graphs. When a sequence has a context length larger than this, vLLM falls back to eager mode. |
| `DISABLE_CUSTOM_ALL_REDUCE` | `0` | `int` | Enables or disables custom all reduce. |

**Streaming Batch Size Settings**

| Name | Default | Type/Choices | Description |
|---|---|---|---|
| `DEFAULT_BATCH_SIZE` | `50` | `int` | Default and maximum batch size for token streaming, to reduce HTTP calls. |
| `DEFAULT_MIN_BATCH_SIZE` | `1` | `int` | Batch size for the first request, which will be multiplied by the growth factor for every subsequent request. |
| `DEFAULT_BATCH_SIZE_GROWTH_FACTOR` | `3` | `float` | Growth factor for dynamic batch size. |

The first request has a batch size of `DEFAULT_MIN_BATCH_SIZE`, and each subsequent request has a batch size of `previous_batch_size * DEFAULT_BATCH_SIZE_GROWTH_FACTOR`. This continues until the batch size reaches `DEFAULT_BATCH_SIZE`. For example, with the default values the batch sizes will be 1, 3, 9, 27, 50, 50, 50, and so on. You can also specify this per request with the inputs `max_batch_size`, `min_batch_size`, and `batch_size_growth_factor`. This has nothing to do with vLLM's internal batching; it only controls the number of tokens sent in each HTTP request from the worker.
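The batch-size schedule described above can be reproduced in a few lines, which is handy for choosing per-request values. This is a sketch of the documented behavior, not the worker's actual code: `batch_size_schedule` and `chunk_stream` are hypothetical helpers, and clamping the size to at least 1 is an added assumption to guard against growth factors below 1.

```python
def batch_size_schedule(min_batch_size: int = 1,
                        growth_factor: float = 3.0,
                        max_batch_size: int = 50,
                        num_batches: int = 7) -> list[int]:
    """Return the first `num_batches` streaming batch sizes.

    Starts at min_batch_size, multiplies by growth_factor after every
    batch, and never exceeds max_batch_size.
    """
    sizes = []
    size = max(1, min(min_batch_size, max_batch_size))
    for _ in range(num_batches):
        sizes.append(size)
        size = max(1, min(int(size * growth_factor), max_batch_size))
    return sizes


def chunk_stream(tokens, min_batch_size=1, growth_factor=3.0, max_batch_size=50):
    """Group a token sequence into HTTP-sized batches following the schedule."""
    batch = []
    size = max(1, min(min_batch_size, max_batch_size))
    for token in tokens:
        batch.append(token)
        if len(batch) >= size:
            yield batch
            batch = []
            size = max(1, min(int(size * growth_factor), max_batch_size))
    if batch:
        yield batch  # flush whatever is left when generation ends


print(batch_size_schedule())  # [1, 3, 9, 27, 50, 50, 50]
```

With 45 generated tokens and the defaults, `chunk_stream` yields batches of 1, 3, 9, 27 and a final 5, so the client receives five HTTP chunks instead of 45.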

**OpenAI Settings**

| Name | Default | Type/Choices | Description |
|---|---|---|---|
| `RAW_OPENAI_OUTPUT` | `1` | boolean as int | Enables raw OpenAI SSE format string output when streaming. Required to be enabled (which it is by default) for OpenAI compatibility. |
| `OPENAI_SERVED_MODEL_NAME_OVERRIDE` | `None` | `str` | Overrides the name of the served model (by default, the model repo/path) with the specified name, which you can then use as the `model` parameter when making OpenAI requests. |
| `OPENAI_RESPONSE_ROLE` | `assistant` | `str` | Role of the LLM's response in OpenAI Chat Completions. |

**Serverless Settings**

| Name | Default | Type/Choices | Description |
|---|---|---|---|
| `MAX_CONCURRENCY` | `300` | `int` | Max concurrent requests per worker. vLLM has an internal queue, so you don't have to worry about limiting by VRAM; this setting is for improving scaling/load-balancing efficiency. |
| `DISABLE_LOG_STATS` | `False` | `bool` | Enables or disables vLLM stats logging. |
| `DISABLE_LOG_REQUESTS` | `False` | `bool` | Enables or disables vLLM request logging. |

[!TIP] If you are facing issues when using Mixtral 8x7B, quantized models, or unusual models/architectures, try setting `TRUST_REMOTE_CODE` to `1`.

To build an image with the model baked in, you must specify the following Docker build arguments when building the image.

- RunPod Account

- Docker

Required:

- `MODEL_NAME`

Optional:

- `MODEL_REVISION`: Model revision to load (default: `main`).
- `BASE_PATH`: Storage directory where the Hugging Face cache and model will be located (default: `/runpod-volume`, which will utilize network storage if you attach it or create a local directory within the image if you don't. If your intention is to bake the model into the image, you should set this to something like `/models` to make sure there are no issues if you were to accidentally attach network storage).
- `QUANTIZATION`
- `WORKER_CUDA_VERSION`: `12.1.0` (`12.1.0` is recommended for optimal performance).
- `TOKENIZER_NAME`: Tokenizer repository if you would like to use a different tokenizer than the one that comes with the model (default: `None`, which uses the model's tokenizer).
- `TOKENIZER_REVISION`: Tokenizer revision to load (default: `main`).

For the remaining settings, you may apply them as environment variables when running the container. Supported environment variables are listed in the Environment Variables section.
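If you script image builds, the required and optional build arguments listed above can be assembled into an argument list and passed to `subprocess.run(cmd, check=True)`, which avoids shell-quoting problems. `docker_build_command` is a hypothetical helper (not part of this repository); it only emits the standard `docker build -t ... --build-arg NAME=value .` form used in the example command, and skips optional arguments left empty.

```python
import shlex


def docker_build_command(tag: str, build_args: dict[str, str],
                         context: str = ".") -> list[str]:
    """Return a `docker build` argv with one --build-arg per non-empty entry."""
    if not build_args.get("MODEL_NAME"):
        raise ValueError("MODEL_NAME is a required build argument")
    cmd = ["docker", "build", "-t", tag]
    for name, value in build_args.items():
        if value:  # skip optional arguments that were left empty
            cmd += ["--build-arg", f"{name}={value}"]
    cmd.append(context)
    return cmd


cmd = docker_build_command(
    "username/image:tag",
    {"MODEL_NAME": "openchat/openchat_3.5", "BASE_PATH": "/models"},
)
# Printed as a shell command for review before running it
print(shlex.join(cmd))
```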

```bash
sudo docker build -t username/image:tag --build-arg MODEL_NAME="openchat/openchat_3.5" --build-arg BASE_PATH="/models" .
```

If the model you would like to deploy is private or gated, you will need to include your Hugging Face token during build time as a Docker secret, which will protect it from being exposed in the image and on Docker Hub.

- Enable Docker BuildKit (required for secrets).

  ```bash
  export DOCKER_BUILDKIT=1
  ```

- Export your Hugging Face token as an environment variable.

  ```bash
  export HF_TOKEN="your_token_here"
  ```

- Add the token as a secret when building.

  ```bash
  docker build -t username/image:tag --secret id=HF_TOKEN --build-arg MODEL_NAME="openchat/openchat_3.5" .
  ```

Below are all supported model architectures (and examples of each) that you can deploy using the vLLM Worker. You can deploy any model on Hugging Face, as long as its base architecture is one of the following:

- Aquila & Aquila2 (`BAAI/AquilaChat2-7B`, `BAAI/AquilaChat2-34B`, `BAAI/Aquila-7B`, `BAAI/AquilaChat-7B`, etc.)
- Baichuan & Baichuan2 (`baichuan-inc/Baichuan2-13B-Chat`, `baichuan-inc/Baichuan-7B`, etc.)
- BLOOM (`bigscience/bloom`, `bigscience/bloomz`, etc.)
- ChatGLM (`THUDM/chatglm2-6b`, `THUDM/chatglm3-6b`, etc.)
- Command-R (`CohereForAI/c4ai-command-r-v01`, etc.)
- DBRX (`databricks/dbrx-base`, `databricks/dbrx-instruct`, etc.)
- DeciLM (`Deci/DeciLM-7B`, `Deci/DeciLM-7B-instruct`, etc.)
- Falcon (`tiiuae/falcon-7b`, `tiiuae/falcon-40b`, `tiiuae/falcon-rw-7b`, etc.)
- Gemma (`google/gemma-2b`, `google/gemma-7b`, etc.)
- GPT-2 (`gpt2`, `gpt2-xl`, etc.)
- GPT BigCode (`bigcode/starcoder`, `bigcode/gpt_bigcode-santacoder`, etc.)
- GPT-J (`EleutherAI/gpt-j-6b`, `nomic-ai/gpt4all-j`, etc.)
- GPT-NeoX (`EleutherAI/gpt-neox-20b`, `databricks/dolly-v2-12b`, `stabilityai/stablelm-tuned-alpha-7b`, etc.)
- InternLM (`internlm/internlm-7b`, `internlm/internlm-chat-7b`, etc.)
- InternLM2 (`internlm/internlm2-7b`, `internlm/internlm2-chat-7b`, etc.)
- Jais (`core42/jais-13b`, `core42/jais-13b-chat`, `core42/jais-30b-v3`, `core42/jais-30b-chat-v3`, etc.)
- LLaMA, Llama 2, and Meta Llama 3 (`meta-llama/Meta-Llama-3-8B-Instruct`, `meta-llama/Meta-Llama-3-70B-Instruct`, `meta-llama/Llama-2-70b-hf`, `lmsys/vicuna-13b-v1.3`, `young-geng/koala`, `openlm-research/open_llama_13b`, etc.)
- MiniCPM (`openbmb/MiniCPM-2B-sft-bf16`, `openbmb/MiniCPM-2B-dpo-bf16`, etc.)
- Mistral (`mistralai/Mistral-7B-v0.1`, `mistralai/Mistral-7B-Instruct-v0.1`, etc.)
- Mixtral (`mistralai/Mixtral-8x7B-v0.1`, `mistralai/Mixtral-8x7B-Instruct-v0.1`, `mistral-community/Mixtral-8x22B-v0.1`, etc.)
- MPT (`mosaicml/mpt-7b`, `mosaicml/mpt-30b`, etc.)
- OLMo (`allenai/OLMo-1B-hf`, `allenai/OLMo-7B-hf`, etc.)
- OPT (`facebook/opt-66b`, `facebook/opt-iml-max-30b`, etc.)
- Orion (`OrionStarAI/Orion-14B-Base`, `OrionStarAI/Orion-14B-Chat`, etc.)
- Phi (`microsoft/phi-1_5`, `microsoft/phi-2`, etc.)
- Phi-3 (`microsoft/Phi-3-mini-4k-instruct`, `microsoft/Phi-3-mini-128k-instruct`, etc.)
- Qwen (`Qwen/Qwen-7B`, `Qwen/Qwen-7B-Chat`, etc.)
- Qwen2 (`Qwen/Qwen1.5-7B`, `Qwen/Qwen1.5-7B-Chat`, etc.)
- Qwen2MoE (`Qwen/Qwen1.5-MoE-A2.7B`, `Qwen/Qwen1.5-MoE-A2.7B-Chat`, etc.)
- StableLM (`stabilityai/stablelm-3b-4e1t`, `stabilityai/stablelm-base-alpha-7b-v2`, etc.)
- Starcoder2 (`bigcode/starcoder2-3b`, `bigcode/starcoder2-7b`, `bigcode/starcoder2-15b`, etc.)
- Xverse (`xverse/XVERSE-7B-Chat`, `xverse/XVERSE-13B-Chat`, `xverse/XVERSE-65B-Chat`, etc.)
- Yi (`01-ai/Yi-6B`, `01-ai/Yi-34B`, etc.)

The vLLM Worker is fully compatible with OpenAI's API, and you can use it with any OpenAI codebase by changing only 3 lines in total. The supported routes are Chat Completions and Models, with both streaming and non-streaming.

Python (similar to Node.js, etc.):

1. When initializing the OpenAI Client in your code, change the `api_key` to your RunPod API Key and the `base_url` to your RunPod Serverless Endpoint URL in the following format: `https://api.runpod.ai/v2/<YOUR ENDPOINT ID>/openai/v1`, filling in your deployed endpoint ID. For example, if your Endpoint ID is `abc1234`, the URL would be `https://api.runpod.ai/v2/abc1234/openai/v1`.

   Before:

   ```python
   import os
   from openai import OpenAI

   client = OpenAI(api_key=os.environ.get("OPENAI_API_KEY"))
   ```

   After:

   ```python
   import os
   from openai import OpenAI

   client = OpenAI(
       api_key=os.environ.get("RUNPOD_API_KEY"),
       base_url="https://api.runpod.ai/v2/<YOUR ENDPOINT ID>/openai/v1",
   )
   ```

2. Change the `model` parameter to your deployed model's name whenever using Completions or Chat Completions.

   Before:

   ```python
   response = client.chat.completions.create(
       model="gpt-3.5-turbo",
       messages=[{"role": "user", "content": "Why is RunPod the best platform?"}],
       temperature=0,
       max_tokens=100,
   )
   ```

   After:

   ```python
   response = client.chat.completions.create(
       model="<YOUR DEPLOYED MODEL REPO/NAME>",
       messages=[{"role": "user", "content": "Why is RunPod the best platform?"}],
       temperature=0,
       max_tokens=100,
   )
   ```
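Since only the API key, the base URL, and the model name change, it can be convenient to derive the base URL from the endpoint ID in one place. A small sketch using the URL format given above; `runpod_openai_base_url` and `client_kwargs` are hypothetical helpers, and `RUNPOD_ENDPOINT_ID` is a hypothetical environment variable name chosen for this example.

```python
import os


def runpod_openai_base_url(endpoint_id: str) -> str:
    """Build the OpenAI-compatible base URL for a RunPod Serverless endpoint."""
    endpoint_id = endpoint_id.strip()
    if not endpoint_id or "/" in endpoint_id:
        raise ValueError(f"invalid endpoint ID: {endpoint_id!r}")
    return f"https://api.runpod.ai/v2/{endpoint_id}/openai/v1"


def client_kwargs() -> dict:
    """Keyword arguments for openai.OpenAI(...), read from the environment."""
    return {
        "api_key": os.environ["RUNPOD_API_KEY"],
        # RUNPOD_ENDPOINT_ID is a hypothetical variable name for this sketch
        "base_url": runpod_openai_base_url(os.environ["RUNPOD_ENDPOINT_ID"]),
    }


print(runpod_openai_base_url("abc1234"))  # https://api.runpod.ai/v2/abc1234/openai/v1
```

With both variables set, `client = OpenAI(**client_kwargs())` replaces the hard-coded values in the "After" snippet.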

Using HTTP requests:

- Change the `Authorization` header to your RunPod API Key and the `url` to your RunPod Serverless Endpoint URL in the following format: `https://api.runpod.ai/v2/<YOUR ENDPOINT ID>/openai/v1`.

  Before:

  ```bash
  curl https://api.openai.com/v1/chat/completions \
    -H "Content-Type: application/json" \
    -H "Authorization: Bearer $OPENAI_API_KEY" \
    -d '{
      "model": "gpt-4",
      "messages": [
        {
          "role": "user",
          "content": "Why is RunPod the best platform?"
        }
      ],
      "temperature": 0,
      "max_tokens": 100
    }'
  ```

  After:

  ```bash
  curl https://api.runpod.ai/v2/<YOUR ENDPOINT ID>/openai/v1/chat/completions \
    -H "Content-Type: application/json" \
    -H "Authorization: Bearer <YOUR RUNPOD API KEY>" \
    -d '{
      "model": "<YOUR DEPLOYED MODEL REPO/NAME>",
      "messages": [
        {
          "role": "user",
          "content": "Why is RunPod the best platform?"
        }
      ],
      "temperature": 0,
      "max_tokens": 100
    }'
  ```
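The same request can be sent without the OpenAI SDK. The sketch below uses only Python's standard library (`urllib.request`) to build the POST from the curl example; `build_chat_request` is a hypothetical helper, and nothing is sent until you pass the request to `urlopen`.

```python
import json
import urllib.request


def build_chat_request(endpoint_id: str, api_key: str, model: str,
                       prompt: str) -> urllib.request.Request:
    """Build (but do not send) a Chat Completions request for a RunPod endpoint."""
    url = f"https://api.runpod.ai/v2/{endpoint_id}/openai/v1/chat/completions"
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0,
        "max_tokens": 100,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # RunPod API key, not OpenAI's
        },
        method="POST",
    )


# Usage (sends a real request, so it needs a valid endpoint ID and key):
#   req = build_chat_request("abc1234", os.environ["RUNPOD_API_KEY"],
#                            "<YOUR DEPLOYED MODEL REPO/NAME>", "Why is RunPod the best platform?")
#   with urllib.request.urlopen(req) as resp:
#       print(json.load(resp)["choices"][0]["message"]["content"])
```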

When using the chat completion feature of the vLLM Serverless Endpoint Worker, you can customize your requests with the following parameters:

Supported Chat Completions Inputs and Descriptions

| Parameter | Type | Default Value | Description |
|---|---|---|---|
| `messages` | `Union[str, List[Dict[str, str]]]` | | List of messages, where each message is a dictionary with a `role` and `content`. The model's chat template will be applied to the messages automatically, so the model must have one, or one must be specified with the `CUSTOM_CHAT_TEMPLATE` env var. |
| `model` | `str` | | The model repo that you've deployed on your RunPod Serverless Endpoint. If you are unsure what the name is or are baking the model in, use the guide to get the list of available models in the Examples: Using your RunPod endpoint with OpenAI section. |
| `temperature` | `Optional[float]` | `0.7` | Float that controls the randomness of the sampling. Lower values make the model more deterministic, while higher values make the model more random. Zero means greedy sampling. |
| `top_p` | `Optional[float]` | `1.0` | Float that controls the cumulative probability of the top tokens to consider. Must be in (0, 1]. Set to 1 to consider all tokens. |
| `n` | `Optional[int]` | `1` | Number of output sequences to return for the given prompt. |
| `max_tokens` | `Optional[int]` | `None` | Maximum number of tokens to generate per output sequence. |
| `seed` | `Optional[int]` | `None` | Random seed to use for the generation. |
| `stop` | `Optional[Union[str, List[str]]]` | `list` | List of strings that stop the generation when they are generated. The returned output will not contain the stop strings. |
| `stream` | `Optional[bool]` | `False` | Whether to stream the response. |
| `presence_penalty` | `Optional[float]` | `0.0` | Float that penalizes new tokens based on whether they appear in the generated text so far. Values > 0 encourage the model to use new tokens, while values < 0 encourage the model to repeat tokens. |
| `frequency_penalty` | `Optional[float]` | `0.0` | Float that penalizes new tokens based on their frequency in the generated text so far. Values > 0 encourage the model to use new tokens, while values < 0 encourage the model to repeat tokens. |
| `logit_bias` | `Optional[Dict[str, float]]` | `None` | Unsupported by vLLM. |
| `user` | `Optional[str]` | `None` | Unsupported by vLLM. |

**Additional parameters supported by vLLM:**

| Parameter | Type | Default Value | Description |
|---|---|---|---|
| `best_of` | `Optional[int]` | `None` | Number of output sequences that are generated from the prompt. From these `best_of` sequences, the top `n` sequences are returned. `best_of` must be greater than or equal to `n`. This is treated as the beam width when `use_beam_search` is `True`. By default, `best_of` is set to `n`. |
| `top_k` | `Optional[int]` | `-1` | Integer that controls the number of top tokens to consider. Set to -1 to consider all tokens. |
| `ignore_eos` | `Optional[bool]` | `False` | Whether to ignore the EOS token and continue generating tokens after the EOS token is generated. |
| `use_beam_search` | `Optional[bool]` | `False` | Whether to use beam search instead of sampling. |
| `stop_token_ids` | `Optional[List[int]]` | `list` | List of tokens that stop the generation when they are generated. The returned output will contain the stop tokens unless the stop tokens are special tokens. |
| `skip_special_tokens` | `Optional[bool]` | `True` | Whether to skip special tokens in the output. |
| `spaces_between_special_tokens` | `Optional[bool]` | `True` | Whether to add spaces between special tokens in the output. |
| `add_generation_prompt` | `Optional[bool]` | `True` | Whether to append the generation prompt (the tokens that begin an assistant turn) when applying the model's chat template. |
| `echo` | `Optional[bool]` | `False` | Echo back the prompt in addition to the completion. |
| `repetition_penalty` | `Optional[float]` | `1.0` | Float that penalizes new tokens based on whether they appear in the prompt and the generated text so far. Values > 1 encourage the model to use new tokens, while values < 1 encourage the model to repeat tokens. |
| `min_p` | `Optional[float]` | `0.0` | Float that represents the minimum probability for a token to be considered, relative to the probability of the most likely token. Must be in [0, 1]. Set to 0 to disable. |
| `length_penalty` | `Optional[float]` | `1.0` | Float that penalizes sequences based on their length. Used in beam search. |
| `include_stop_str_in_output` | `Optional[bool]` | `False` | Whether to include the stop strings in the output text. |
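The OpenAI Python SDK's `create()` method accepts only the standard OpenAI arguments as keyword parameters, so vLLM-specific parameters such as `top_k`, `min_p`, or `repetition_penalty` are usually passed through the SDK's `extra_body` argument, which merges extra fields into the JSON request body. A minimal sketch under that assumption; `chat_kwargs` is a hypothetical helper, and you should confirm that your SDK version supports `extra_body`.

```python
def chat_kwargs(model: str, prompt: str, **vllm_params) -> dict:
    """Arguments for client.chat.completions.create(**kwargs).

    Standard OpenAI fields are top-level keys; vLLM-only fields
    (top_k, min_p, repetition_penalty, ...) go into extra_body.
    """
    kwargs = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
        "max_tokens": 100,
    }
    if vllm_params:
        kwargs["extra_body"] = dict(vllm_params)
    return kwargs


kwargs = chat_kwargs(
    "<YOUR DEPLOYED MODEL REPO/NAME>",
    "Why is RunPod the best platform?",
    top_k=40,
    repetition_penalty=1.1,
)
# With an OpenAI `client` configured for your endpoint:
# response = client.chat.completions.create(**kwargs)
print(kwargs["extra_body"])  # {'top_k': 40, 'repetition_penalty': 1.1}
```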

First, initialize the OpenAI Client with your RunPod API Key and Endpoint URL:

```python
import os

from openai import OpenAI

# Initialize the OpenAI Client with your RunPod API Key and Endpoint URL
client = OpenAI(
    api_key=os.environ.get("RUNPOD_API_KEY"),
    base_url="https://api.runpod.ai/v2/<YOUR ENDPOINT ID>/openai/v1",
)
```

Chat Completions is the format used for GPT-4 and is focused on instruction-following and chat. Examples of open-source chat/instruct models include `meta-llama/Llama-2-7b-chat-hf`, `mistralai/Mixtral-8x7B-Instruct-v0.1`, `openchat/openchat-3.5-0106`, `NousResearch/Nous-Hermes-2-Mistral-7B-DPO`, and more. However, if your model is a completion-style model with no chat/instruct fine-tune and/or does not have a chat template, you can still use this format if you provide a chat template with the environment variable `CUSTOM_CHAT_TEMPLATE`.

Streaming:

```python
# Create a chat completion stream
response_stream = client.chat.completions.create(
    model="<YOUR DEPLOYED MODEL REPO/NAME>",
    messages=[{"role": "user", "content": "Why is RunPod the best platform?"}],
    temperature=0,
    max_tokens=100,
    stream=True,
)
# Stream the response, printing each text delta as it arrives
for chunk in response_stream:
    print(chunk.choices[0].delta.content or "", end="", flush=True)
```

Non-Streaming:

```python
# Create a chat completion
response = client.chat.completions.create(
    model="<YOUR DEPLOYED MODEL REPO/NAME>",
    messages=[{"role": "user", "content": "Why is RunPod the best platform?"}],
    temperature=0,
    max_tokens=100,
)
# Print the response
print(response.choices[0].message.content)
```

If you baked the model into the image, the repo name may sometimes not be accepted as the `model` in the request. In this case, you can list the available models as shown below and use that name.

```python
# List the models served by the endpoint and print their IDs
models_response = client.models.list()
list_of_models = [model.id for model in models_response]
print(list_of_models)
```

You may either use a prompt or a list of messages as input. If you use messages, the model's chat template will be applied to the messages automatically, so the model must have one. If you use prompt, you may optionally apply the model's chat template to the prompt by setting apply_chat_template to true.

| Argument | Type | Default | Description |
|---|---|---|---|
| `prompt` | `str` | | Prompt string to generate text based on. |
| `messages` | `list[dict[str, str]]` | | List of messages, which will automatically have the model's chat template applied. Overrides `prompt`. |
| `apply_chat_template` | `bool` | `False` | Whether to apply the model's chat template to the `prompt`. |
| `sampling_params` | `dict` | `{}` | Sampling parameters to control the generation, like `temperature`, `top_p`, etc. You can find all available parameters in the Sampling Parameters section below. |
| `stream` | `bool` | `False` | Whether to enable streaming of output. If `True`, responses are streamed as they are generated. |
| `max_batch_size` | `int` | env var `DEFAULT_BATCH_SIZE` | The maximum number of tokens to stream every HTTP POST call. |
| `min_batch_size` | `int` | env var `DEFAULT_MIN_BATCH_SIZE` | The minimum number of tokens to stream every HTTP POST call. |
| `batch_size_growth_factor` | `int` | env var `DEFAULT_BATCH_SIZE_GROWTH_FACTOR` | The growth factor by which `min_batch_size` will be multiplied for each call until `max_batch_size` is reached. |
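The `input` object of a standard (non-OpenAI) request combines the fields in this table. A sketch of a payload builder, assuming you construct requests in Python; `build_input` is hypothetical, and it is deliberately stricter than the worker: it rejects requests that set both `prompt` and `messages`, whereas the worker lets `messages` override `prompt`.

```python
import json
from typing import Optional

BATCH_SETTINGS = {"max_batch_size", "min_batch_size", "batch_size_growth_factor"}


def build_input(prompt: Optional[str] = None,
                messages: Optional[list[dict[str, str]]] = None,
                sampling_params: Optional[dict] = None,
                stream: bool = False,
                apply_chat_template: bool = False,
                **batch_settings) -> dict:
    """Build the JSON body for a standard worker request."""
    if (prompt is None) == (messages is None):
        raise ValueError("provide exactly one of `prompt` or `messages`")
    unknown = set(batch_settings) - BATCH_SETTINGS
    if unknown:
        raise ValueError(f"unknown batch settings: {sorted(unknown)}")

    payload: dict = {"sampling_params": sampling_params or {}, "stream": stream}
    if messages is not None:
        payload["messages"] = messages  # chat template is applied by the worker
    else:
        payload["prompt"] = prompt
        payload["apply_chat_template"] = apply_chat_template
    payload.update(batch_settings)  # optional per-request streaming batch sizes
    return {"input": payload}


print(json.dumps(build_input(prompt="why sky is blue?",
                             sampling_params={"temperature": 0.7, "max_tokens": 100}),
                 indent=2))
```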

Below are all available sampling parameters that you can specify in the sampling_params dictionary. If you do not specify any of these parameters, the default values will be used.


| Argument | Type | Default | Description |
|---|---|---|---|
| `n` | `int` | `1` | Number of output sequences generated from the prompt. The top `n` sequences are returned. |
| `best_of` | `Optional[int]` | `n` | Number of output sequences generated from the prompt. The top `n` sequences are returned from these `best_of` sequences. Must be ≥ `n`. Treated as beam width in beam search. Default is `n`. |
| `presence_penalty` | `float` | `0.0` | Penalizes new tokens based on their presence in the generated text so far. Values > 0 encourage new tokens, values < 0 encourage repetition. |
| `frequency_penalty` | `float` | `0.0` | Penalizes new tokens based on their frequency in the generated text so far. Values > 0 encourage new tokens, values < 0 encourage repetition. |
| `repetition_penalty` | `float` | `1.0` | Penalizes new tokens based on their appearance in the prompt and generated text. Values > 1 encourage new tokens, values < 1 encourage repetition. |
| `temperature` | `float` | `1.0` | Controls the randomness of sampling. Lower values make it more deterministic, higher values make it more random. Zero means greedy sampling. |
| `top_p` | `float` | `1.0` | Controls the cumulative probability of top tokens to consider. Must be in (0, 1]. Set to 1 to consider all tokens. |
| `top_k` | `int` | `-1` | Controls the number of top tokens to consider. Set to -1 to consider all tokens. |
| `min_p` | `float` | `0.0` | Represents the minimum probability for a token to be considered, relative to the most likely token. Must be in [0, 1]. Set to 0 to disable. |
| `use_beam_search` | `bool` | `False` | Whether to use beam search instead of sampling. |
| `length_penalty` | `float` | `1.0` | Penalizes sequences based on their length. Used in beam search. |
| `early_stopping` | `Union[bool, str]` | `False` | Controls stopping condition in beam search. Can be `True`, `False`, or `"never"`. |
| `stop` | `Union[None, str, List[str]]` | `None` | List of strings that stop generation when produced. The output will not contain these strings. |
| `stop_token_ids` | `Optional[List[int]]` | `None` | List of token IDs that stop generation when produced. Output contains these tokens unless they are special tokens. |
| `ignore_eos` | `bool` | `False` | Whether to ignore the End-Of-Sequence token and continue generating tokens after its generation. |
| `max_tokens` | `int` | `16` | Maximum number of tokens to generate per output sequence. |
| `skip_special_tokens` | `bool` | `True` | Whether to skip special tokens in the output. |
| `spaces_between_special_tokens` | `bool` | `True` | Whether to add spaces between special tokens in the output. |
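The ranges in this table can be checked client-side before a request is sent, which surfaces mistakes faster than a failed job. A sketch based only on the constraints and defaults listed above; `validate_sampling_params` is hypothetical, and vLLM performs its own validation, which may differ in edge cases.

```python
def validate_sampling_params(params: dict) -> dict:
    """Check sampling_params against the documented ranges.

    Returns a copy with the table's defaults filled in, or raises ValueError
    listing every violated constraint.
    """
    defaults = {"n": 1, "temperature": 1.0, "top_p": 1.0, "top_k": -1,
                "min_p": 0.0, "max_tokens": 16}
    merged = {**defaults, **params}
    n = merged["n"]
    best_of = merged.get("best_of") or n  # best_of defaults to n

    errors = []
    if not 0 < merged["top_p"] <= 1:
        errors.append("top_p must be in (0, 1]")
    if not 0 <= merged["min_p"] <= 1:
        errors.append("min_p must be in [0, 1]")
    if merged["top_k"] != -1 and merged["top_k"] < 1:
        errors.append("top_k must be -1 (all tokens) or a positive integer")
    if merged["temperature"] < 0:
        errors.append("temperature must be >= 0 (0 means greedy sampling)")
    if best_of < n:
        errors.append("best_of must be >= n")
    if errors:
        raise ValueError("; ".join(errors))
    return merged


print(validate_sampling_params({"temperature": 0.7, "max_tokens": 100}))
```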

You may either use a prompt or a list of messages as input.

- `prompt`: The prompt string can be any string, and the model's chat template will not be applied to it unless `apply_chat_template` is set to `true`, in which case it will be treated as a user message.

  Example:

  ```json
  {
    "input": {
      "prompt": "why sky is blue?",
      "sampling_params": {
        "temperature": 0.7,
        "max_tokens": 100
      }
    }
  }
  ```

- `messages`: Your list can contain any number of messages, and each message can usually have any role from the following list: `user`, `assistant`, `system`.

  However, some models may have different roles, so you should check the model's chat template to see which roles are required.

  The model's chat template will be applied to the messages automatically, so the model must have one.

  Example:

  ```json
  {
    "input": {
      "messages": [
        {
          "role": "system",
          "content": "You are a helpful AI assistant that provides clear and concise responses."
        },
        {
          "role": "user",
          "content": "Can you explain the difference between supervised and unsupervised learning?"
        },
        {
          "role": "assistant",
          "content": "Sure! Supervised learning uses labeled data, meaning each input has a corresponding correct output. The model learns by mapping inputs to known outputs. In contrast, unsupervised learning works with unlabeled data, where the model identifies patterns, structures, or clusters without predefined answers."
        }
      ],
      "sampling_params": {
        "temperature": 0.7,
        "max_tokens": 100
      }
    }
  }
  ```
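To send one of these payloads, post it to your endpoint. A sketch using only the standard library, assuming RunPod's synchronous `https://api.runpod.ai/v2/<YOUR ENDPOINT ID>/runsync` route and Bearer-token authentication; that route is not described in this README, so check RunPod's Serverless API documentation before relying on it. `build_runsync_request` is a hypothetical helper, and nothing is sent until you call `urlopen`.

```python
import json
import urllib.request

# Assumed RunPod route for synchronous jobs (not documented in this README)
RUNSYNC_URL = "https://api.runpod.ai/v2/{endpoint_id}/runsync"


def build_runsync_request(endpoint_id: str, api_key: str,
                          payload: dict) -> urllib.request.Request:
    """Build (but do not send) a POST of a standard {"input": {...}} payload."""
    if "input" not in payload:
        raise ValueError('payload must be wrapped in an "input" object')
    return urllib.request.Request(
        RUNSYNC_URL.format(endpoint_id=endpoint_id),
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


payload = {
    "input": {
        "messages": [
            {"role": "system", "content": "You are a helpful AI assistant."},
            {"role": "user", "content": "Can you explain supervised learning?"},
        ],
        "sampling_params": {"temperature": 0.7, "max_tokens": 100},
    }
}
# abc1234 mirrors the example endpoint ID used earlier in this README
request = build_runsync_request("abc1234", "<YOUR RUNPOD API KEY>", payload)
# Sending it requires a real endpoint ID and key:
# with urllib.request.urlopen(request) as resp:
#     print(json.load(resp))
```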

The worker config is a JSON file that is used to build the form that helps users configure their serverless endpoint on the RunPod Web Interface.

Note: This is a new feature and only works for workers that use one model.

The JSON consists of two main parts, `schema` and `versions`.

- `schema`: Here you specify the form fields that will be displayed to the user.
  - `env_var_name`: The name of the environment variable that is being set using the form field.
  - `value`: The default value of the form field. It will be shown in the UI as such unless the user changes it.
  - `title`: The title of the form field in the UI.
  - `description`: The description of the form field in the UI.
  - `required`: A boolean that specifies whether the form field is required.
  - `type`: The type of the form field. Options are:
    - `text`: The environment variable is a string, so the user inputs text in the form field.
    - `select`: The user selects one option from the dropdown. You must provide the `options` key-value pair after `type` if using this.
    - `toggle`: The user toggles between true and false.
    - `number`: The user inputs a number in the form field.
  - `options`: Specify the options the user can select from if the type is `select`. DO NOT include this unless the `type` is `select`.
- `versions`: This is where you call the form fields specified in `schema` and organize them into categories.
  - `imageName`: The name of the Docker image that will be used to run the serverless endpoint.
  - `minimumCudaVersion`: The minimum CUDA version required to run the serverless endpoint.
  - `categories`: This is where you call the keys of the form fields specified in `schema` and organize them into categories. Each category is a toggle list of forms on the Web UI.
    - `title`: The title of the category in the UI.
    - `settings`: The array of settings schemas specified in `schema` associated with the category.

```json
{
  "schema": {
    "TOKENIZER": {
      "env_var_name": "TOKENIZER",
      "value": "",
      "title": "Tokenizer",
      "description": "Name or path of the Hugging Face tokenizer to use.",
      "required": false,
      "type": "text"
    },
    "TOKENIZER_MODE": {
      "env_var_name": "TOKENIZER_MODE",
      "value": "auto",
      "title": "Tokenizer Mode",
      "description": "The tokenizer mode.",
      "required": false,
      "type": "select",
      "options": [
        { "value": "auto", "label": "auto" },
        { "value": "slow", "label": "slow" }
      ]
    },
    ...
  }
}
```

```json
{
  "versions": {
    "0.5.4": {
      "imageName": "runpod/worker-v1-vllm:v1.2.0stable-cuda12.1.0",
      "minimumCudaVersion": "12.1",
      "categories": [
        {
          "title": "LLM Settings",
          "settings": [
            "TOKENIZER", "TOKENIZER_MODE", "OTHER_SETTINGS_SCHEMA_KEYS_YOU_HAVE_SPECIFIED_0", ...
          ]
        },
        {
          "title": "Tokenizer Settings",
          "settings": [
            "OTHER_SETTINGS_SCHEMA_KEYS_0", "OTHER_SETTINGS_SCHEMA_KEYS_1", ...
          ]
        },
        ...
      ]
    }
  }
}
```
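The examples above do not spell out how a filled-in form becomes the endpoint's environment. The Python sketch below shows one plausible mapping, assuming each field's `env_var_name` receives either the user's input or the field's default `value`. `resolve_env` is hypothetical, and skipping empty optional fields and writing toggles as lowercase `true`/`false` are assumptions, not documented RunPod behavior.

```python
def resolve_env(schema: dict, user_inputs: dict) -> dict[str, str]:
    """Turn form input into environment variables (hypothetical helper)."""
    env: dict[str, str] = {}
    for key, field in schema.items():
        # Use the user's input if given, otherwise the field's default `value`.
        value = user_inputs.get(key, field.get("value"))
        if value is None or value == "":
            if field.get("required"):
                raise ValueError(f"{key} is required")
            continue  # assumption: empty optional fields are left unset
        if field.get("type") == "select":
            # A select value must be one of the declared option values.
            allowed = {opt["value"] for opt in field.get("options", [])}
            if value not in allowed:
                raise ValueError(f"{key}: {value!r} is not one of {sorted(allowed)}")
        if isinstance(value, bool):
            value = "true" if value else "false"  # assumption: toggles serialized lowercase
        env[field["env_var_name"]] = str(value)
    return env


if __name__ == "__main__":
    # Trimmed copy of the TOKENIZER / TOKENIZER_MODE fields from the schema example.
    schema = {
        "TOKENIZER": {"env_var_name": "TOKENIZER", "value": "", "required": False, "type": "text"},
        "TOKENIZER_MODE": {
            "env_var_name": "TOKENIZER_MODE", "value": "auto", "required": False, "type": "select",
            "options": [{"value": "auto", "label": "auto"}, {"value": "slow", "label": "slow"}],
        },
    }
    print(resolve_env(schema, {}))  # {'TOKENIZER_MODE': 'auto'}
    # "gpt2" is only an illustrative tokenizer name.
    print(resolve_env(schema, {"TOKENIZER": "gpt2", "TOKENIZER_MODE": "slow"}))
```

Validating `select` values against `options` mirrors the dropdown in the UI, so a value the form could never produce is rejected rather than silently passed to the worker.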


Alternative AI tools for worker-vllm

Similar Open Source Tools


chromadb-chart

Chromadb-chart is a Kubernetes Chart for deploying a single-node Chroma AI application database on a Kubernetes cluster using the Helm package manager. It provides the ability to secure Chroma API with TLS, backup and restore index data, and monitor the cluster using Prometheus and Grafana. The chart configuration values allow customization of ChromaDB version, data persistence, telemetry, authentication, logging, image settings, and more. Users can verify installation, build Docker images, set up a Kubernetes cluster, and configure Chroma authentication using token or basic auth. The chart can also be used as a dependency in other charts, and extra config options are available for Rust server configurations.

vicinity

Vicinity is a lightweight, low-dependency vector store that provides a unified interface for nearest neighbor search with support for different backends and evaluation. It simplifies the process of comparing and evaluating different nearest neighbors packages by offering a simple and intuitive API. Users can easily experiment with various indexing methods and distance metrics to choose the best one for their use case. Vicinity also allows for measuring performance metrics like queries per second and recall.

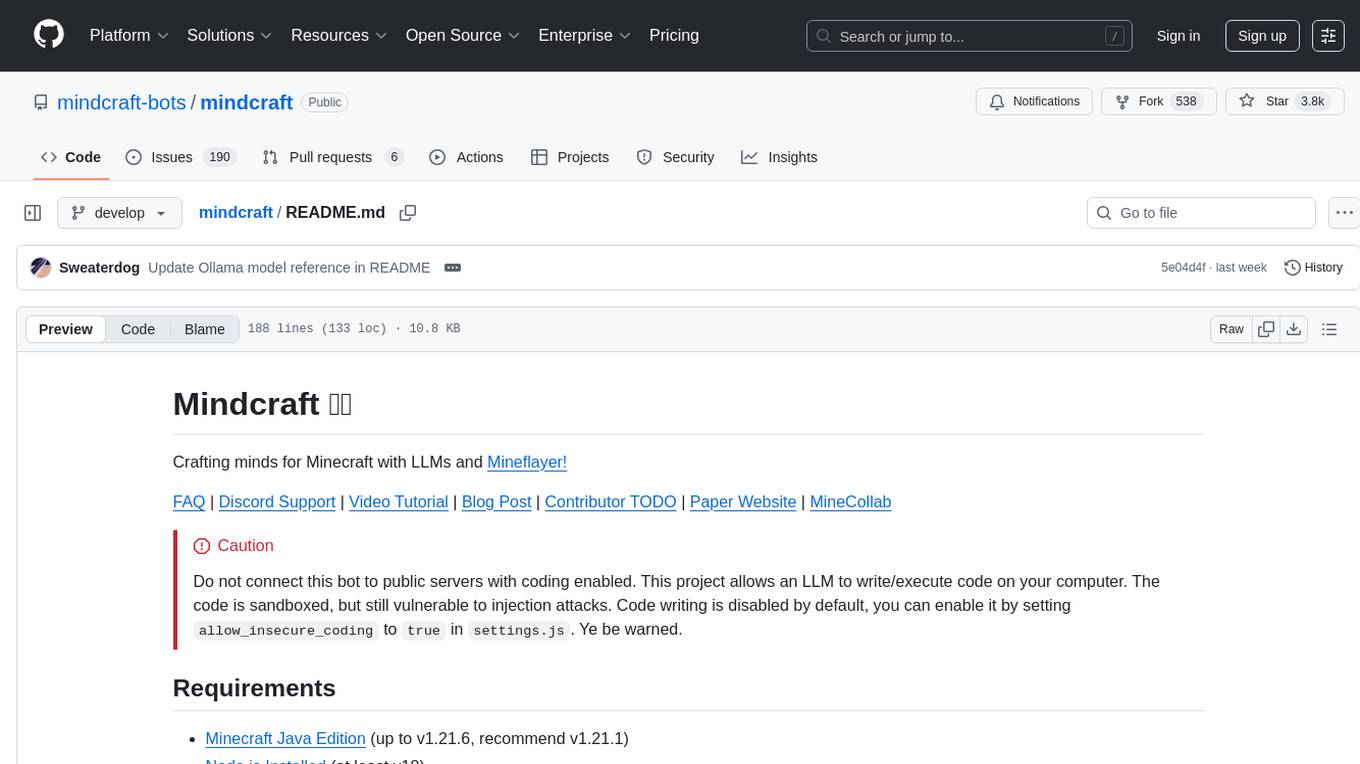

mindcraft

Mindcraft is a project that crafts minds for Minecraft using Large Language Models (LLMs) and Mineflayer. It allows an LLM to write and execute code on your computer, with code sandboxed but still vulnerable to injection attacks. The project requires Minecraft Java Edition, Node.js, and one of several API keys. Users can run tasks to acquire specific items or construct buildings, customize project details in settings.js, and connect to online servers with a Microsoft/Minecraft account. The project also supports Docker container deployment for running in a secure environment.

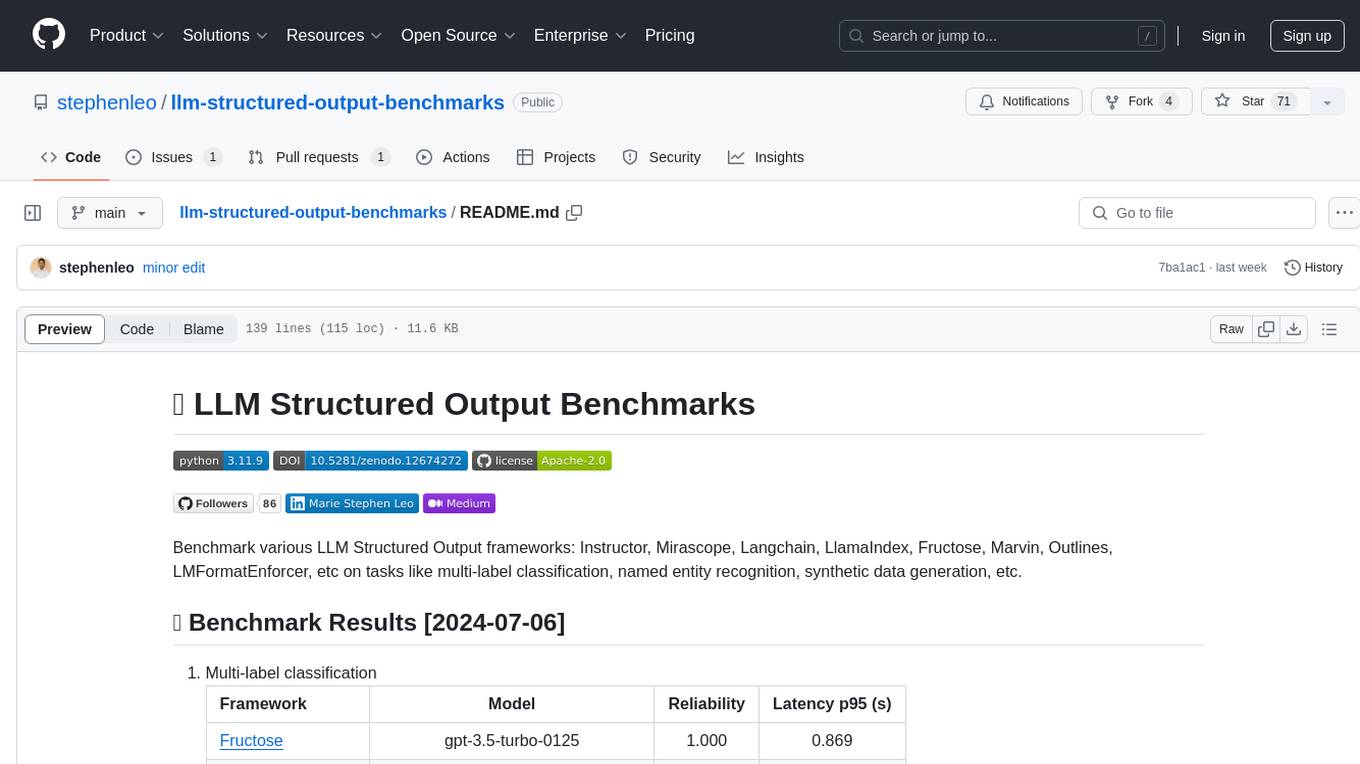

llm-structured-output-benchmarks

Benchmarks various LLM structured-output frameworks, such as Instructor, Mirascope, Langchain, LlamaIndex, Fructose, Marvin, Outlines, and LMFormatEnforcer, on tasks like multi-label classification, named entity recognition, and synthetic data generation. The tool provides benchmark results, methodology, and instructions to run the benchmark, add new data, and add a new framework. It also includes a roadmap for framework-related tasks, contribution guidelines, citation information, and a feedback request.

nano-graphrag

nano-GraphRAG is a simple, easy-to-hack implementation of GraphRAG that provides a smaller, faster, and cleaner version of the official implementation. It is about 800 lines of code, small yet scalable, asynchronous, and fully typed. The tool supports incremental insert, async methods, and various parameters for customization. Users can replace storage components and LLM functions as needed. It also allows for embedding function replacement and comes with pre-defined prompts for entity extraction and community reports. However, some features, such as covariates and the global search implementation, differ from the original GraphRAG. Future versions aim to address issues related to data source IDs and community description truncation, and to add new components.

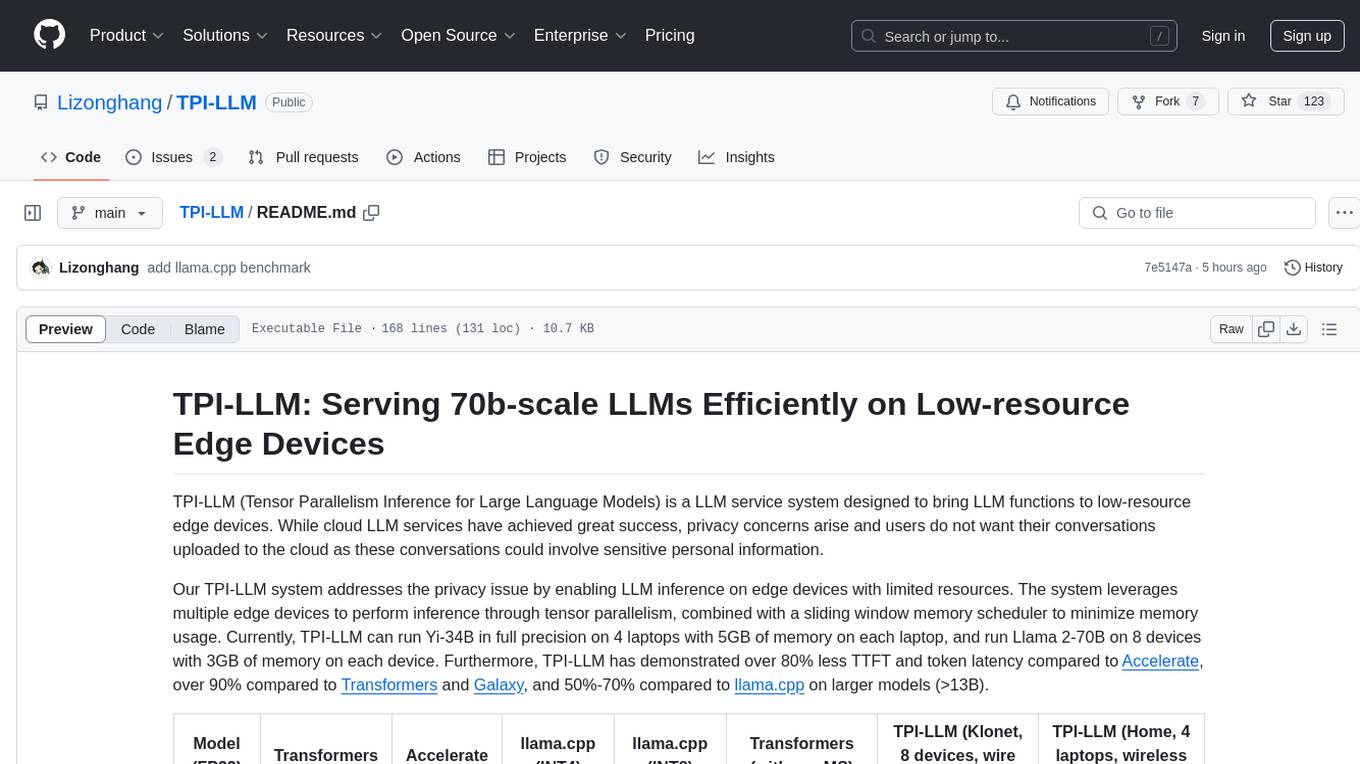

TPI-LLM

TPI-LLM (Tensor Parallelism Inference for Large Language Models) is a system that brings LLM inference to low-resource edge devices, addressing privacy concerns by running inference on those devices. It leverages multiple edge devices for inference through tensor parallelism and uses a sliding window memory scheduler to minimize memory usage. TPI-LLM demonstrates significant improvements in time to first token (TTFT) and token latency compared to other models, and plans to support infinitely large models with low token latency in the future.

optillm

optillm is an OpenAI API compatible optimizing inference proxy implementing state-of-the-art techniques to enhance accuracy and performance of LLMs, focusing on reasoning over coding, logical, and mathematical queries. By leveraging additional compute at inference time, it surpasses frontier models across diverse tasks.

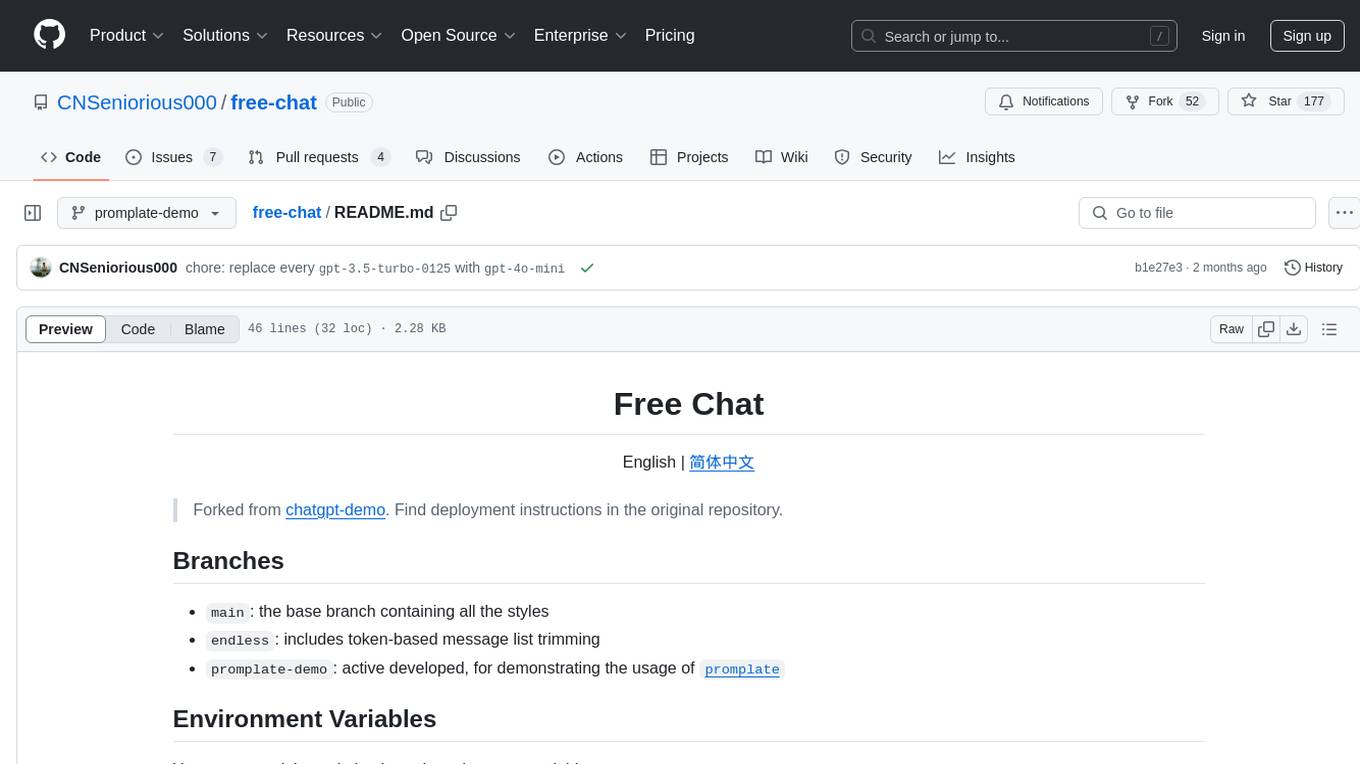

free-chat

Free Chat is a fork of chatgpt-demo that allows users to deploy a chat application with various features. It provides branches for different functionality, such as token-based message-list trimming and a demonstration of 'promplate' usage. Users can control the website through environment variables, including the OpenAI API key, temperature parameter, proxy, base URL, and more. The project welcomes contributions and acknowledges its supporters. It is licensed under MIT by Muspi Merol.

atlas-mcp-server

ATLAS (Adaptive Task & Logic Automation System) is a high-performance Model Context Protocol server designed for LLMs to manage complex task hierarchies. Built with TypeScript, it features ACID-compliant storage, efficient task tracking, and intelligent template management. ATLAS provides LLM Agents task management through a clean, flexible tool interface. The server implements the Model Context Protocol (MCP) for standardized communication between LLMs and external systems, offering hierarchical task organization, task state management, smart templates, enterprise features, and performance optimization.

slack-mcp-server

Slack MCP Server is a Model Context Protocol server for Slack Workspaces, offering powerful features like Stealth and OAuth Modes, Enterprise Workspaces Support, Channel and Thread Support, Smart History, Search Messages, Safe Message Posting, DM and Group DM support, Embedded user information, Cache support, and multiple transport options. It provides tools like conversations_history, conversations_replies, conversations_add_message, conversations_search_messages, and channels_list for managing messages, threads, adding messages, searching messages, and listing channels. The server also exposes directory resources for workspace metadata access. The tool is designed to enhance Slack workspace functionality and improve user experience.

foul-play

Foul Play is a Pokémon battle-bot that can play single battles in all generations on Pokemon Showdown. It requires Python 3.10+. The bot uses environment variables for configuration and supports different game modes and battle strategies. Users can specify teams and choose between algorithms like Monte-Carlo Tree Search and Expectiminimax. Foul Play can be run locally or with Docker, and the engine used for battles must be built from source. The tool provides flexibility in gameplay and strategy for Pokémon battles.

99

The AI client 99 is designed for Neovim users to streamline requests to AI and limit them to restricted areas. It supports visual, search, and debug functionality. Users must have a supported AI CLI installed, such as opencode, claude, or cursor-agent. The tool allows configuration of completions and lets users reference rules and files to add context to requests. 99 supports multiple AI CLI backends and providers. Users can report bugs by providing full debug logs from a run and are advised not to request features directly. Known usability issues include problems with long function definitions, duplicated comment definitions in Lua and JSDoc, visual selection sending the whole file, occasional auto-complete issues, and potential errors with 'export function' prompts.

llm_processes

This repository contains code for LLM Processes, which focuses on generating numerical predictive distributions conditioned on natural language. It supports various LLMs through Hugging Face transformer APIs and includes experiments on prompt engineering, 1D synthetic data, comparison to LLMTime, Fashion MNIST, black-box optimization, weather regression, in-context learning, and text conditioning. The code requires Python 3.9+, PyTorch 2.3.0+, and other dependencies for running experiments and reproducing results.

manim-generator

The 'manim-generator' repository focuses on automatic video generation using an agentic LLM flow combined with the manim python library. It experiments with automated Manim video creation by delegating code drafting and validation to specific roles, reducing render failures, and improving visual consistency through iterative feedback and vision inputs. The project also includes 'Manim Bench' for comparing AI models on full Manim video generation.

chatgpt-subtitle-translator

This tool utilizes the OpenAI ChatGPT API to translate text, with a focus on line-based translation, particularly for SRT subtitles. It optimizes token usage by removing SRT overhead and grouping text into batches, allowing for arbitrary length translations without excessive token consumption while maintaining a one-to-one match between line input and output.

For similar tasks


ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE), for running optimized AI/ML workloads with GKE's platform orchestration capabilities. A robust AI/ML platform considers the following layers: infrastructure orchestration that supports GPUs and TPUs for training and serving workloads at scale; flexible integration with distributed computing and data processing frameworks; and support for multiple teams on the same infrastructure to maximize resource utilization.

ray

Ray is a unified framework for scaling AI and Python applications. It consists of a core distributed runtime and a set of AI libraries for simplifying ML compute, including Data, Train, Tune, RLlib, and Serve. Ray runs on any machine, cluster, cloud provider, and Kubernetes, and features a growing ecosystem of community integrations. With Ray, you can seamlessly scale the same code from a laptop to a cluster, making it easy to meet the compute-intensive demands of modern ML workloads.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

djl

Deep Java Library (DJL) is an open-source, high-level, engine-agnostic Java framework for deep learning. It is designed to be easy to get started with and simple to use for Java developers. DJL provides a native Java development experience and allows users to integrate machine learning and deep learning models with their Java applications. The framework is deep learning engine agnostic, enabling users to switch engines at any point for optimal performance. DJL's ergonomic API interface guides users with best practices to accomplish deep learning tasks, such as running inference and training neural networks.

mlflow

MLflow is a platform to streamline machine learning development, including tracking experiments, packaging code into reproducible runs, and sharing and deploying models. MLflow offers a set of lightweight APIs that can be used with any existing machine learning application or library (TensorFlow, PyTorch, XGBoost, etc.), wherever you currently run ML code (e.g., in notebooks, standalone applications, or the cloud). MLflow's current components are:

* `MLflow Tracking

tt-metal

TT-NN is a Python and C++ neural network op library. The repository also provides a low-level programming model, TT-Metalium, enabling kernel development for Tenstorrent hardware.

burn

Burn is a new comprehensive dynamic Deep Learning Framework built using Rust with extreme flexibility, compute efficiency and portability as its primary goals.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, covering everything from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

* Self-contained, with no need for a DBMS or cloud service.
* OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
* Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.