crssnt

🥐 Open-source LLM-friendly Markdown/JSON generator

Stars: 93

crssnt is a tool that converts RSS/Atom feeds into LLM-friendly Markdown or JSON, simplifying integration of feed content into AI workflows. It supports LLM-optimized conversion, multiple output formats, feed aggregation, and Google Sheet support. Users can access various endpoints for feed conversion and Google Sheet processing, with query parameters for customization. The tool processes user-provided URLs transiently without storing feed data, and can be self-hosted as Firebase Cloud Functions. Contributions are welcome under the MIT License.

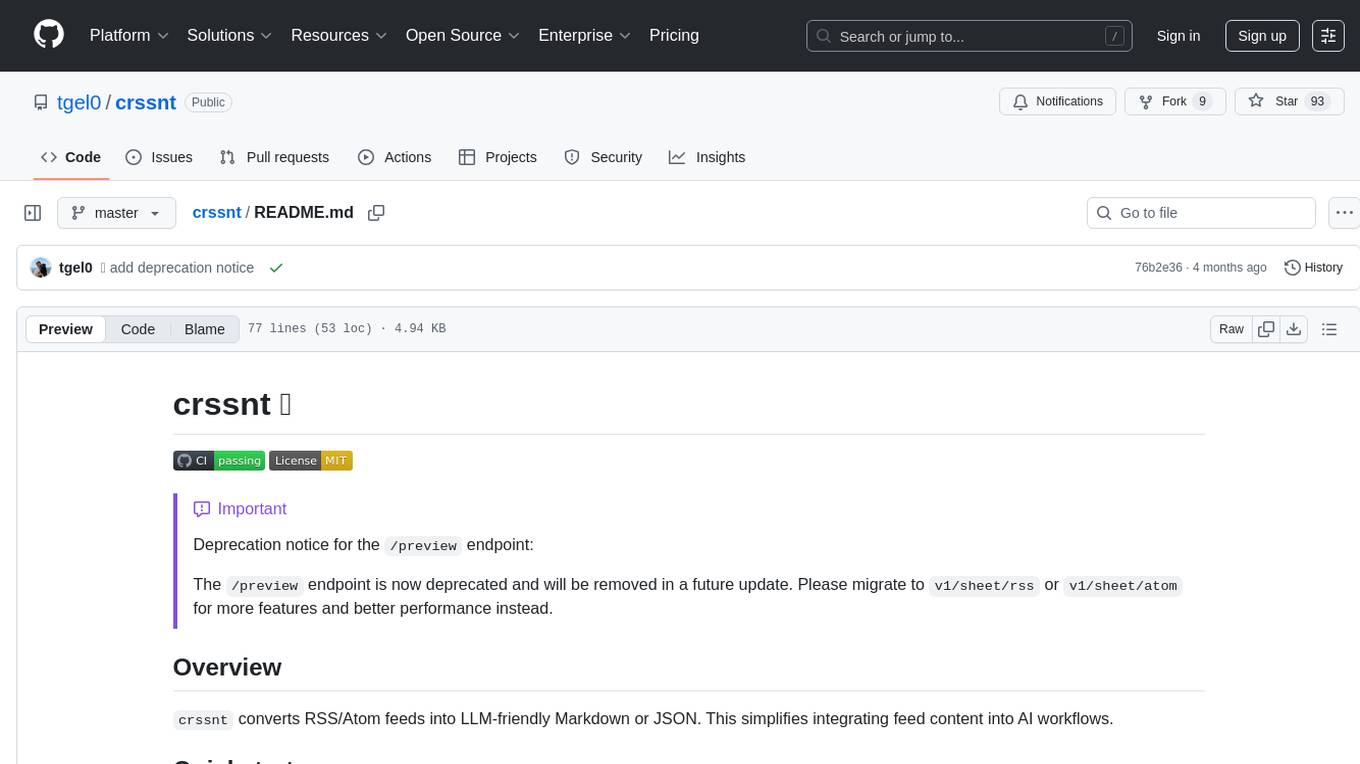

README:

[!IMPORTANT] Deprecation notice for the

/previewendpoint:The

/previewendpoint is now deprecated and will be removed in a future update. Please migrate tov1/sheet/rssorv1/sheet/atomfor more features and better performance instead.

crssnt converts RSS/Atom feeds into LLM-friendly Markdown or JSON. This simplifies integrating feed content into AI workflows.

This fetches the BBC News RSS feed and returns its content as Markdown optimized for language models., with the &llm_compact=true parameter:

https://crssnt.com/v1/feed/md/?url=http://feeds.bbci.co.uk/news/rss.xml&llm_compact=true

This uses the group_by_feed=true parameter to fetch and group items from BBC News and The Guardian and return a combined LLM-optimized Markdown output.

https://crssnt.com/v1/feed/md/?url=http://feeds.bbci.co.uk/news/rss.xml&url=https://www.theguardian.com/world/rss&llm_compact=true&group_by_feed=true

-

LLM-Optimized Conversion: Transforms RSS/Atom feeds into structured Markdown or JSON, with an

llm_compactoption for conciseness. - Multiple Output Formats: Supports Markdown, JSON, and Atom for converted feeds.

- Feed Aggregation: Combines (and auto-sorts by date) items from multiple source feeds.

- Google Sheet Support: Can also generate feeds (RSS, Atom, JSON, Markdown) from public Google Sheets.

Access via https://crssnt.com/ followed by these endpoint paths:

Feed Conversion:

/v1/feed/md//v1/feed/json//v1/feed/atom/

Google Sheet Processing:

/v1/sheet/md//v1/sheet/json//v1/sheet/rss//v1/sheet/atom/

| Parameter | Description | Supported Endpoints | Example Values/Notes |

|---|---|---|---|

url |

URL of the source RSS/Atom feed. Stack up to 10 URLs together using &url=

|

/v1/feed/md/, /v1/feed/json/, /v1/feed/atom/

|

url=http://example.com/feed.xml |

llm_compact |

If true, produces compact JSON or Markdown output for LLMs. |

/v1/feed/md/, /v1/feed/json/, /v1/sheet/md/, /v1/sheet/json/

|

true, false

|

group_by_feed |

If true and multiple urls are provided, items in JSON/Markdown are grouped by original feed title. |

/v1/feed/md/, /v1/feed/json/

|

true, false

|

max_items |

Limits the number of items returned. | All data-returning functions |

1, 10

|

id |

Google Sheet ID (from its URL). | /v1/sheet/* |

your-sheet-id |

name |

Name of a specific sheet/tab in Google Spreadsheet. Multiple name params for multiple sheets. Defaults to first. |

/v1/sheet/* |

Sheet1, name=MyData&name=Sheet2

|

use_manual_mode |

If true, uses specific column headers (title, link, etc.) for mapping. Default false (auto-detection). |

/v1/sheet/* |

true, false

|

crssnt processes user-provided URLs to fetch data. It's a transient processor and doesn't store feed data. Standard logging may occur. See Privacy Policy.

crssnt can be self-hosted as Firebase Cloud Functions. Refer to the Firebase documentation for deploying functions. Use the Firebase Emulator Suite for local testing. The https://crssnt.com/ service is recommended for most users.

Contributions are welcome. Please fork the repository, make your changes on a new branch, and submit a pull request.

This project is licensed under the MIT License. See the LICENSE file for details.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for crssnt

Similar Open Source Tools

crssnt

crssnt is a tool that converts RSS/Atom feeds into LLM-friendly Markdown or JSON, simplifying integration of feed content into AI workflows. It supports LLM-optimized conversion, multiple output formats, feed aggregation, and Google Sheet support. Users can access various endpoints for feed conversion and Google Sheet processing, with query parameters for customization. The tool processes user-provided URLs transiently without storing feed data, and can be self-hosted as Firebase Cloud Functions. Contributions are welcome under the MIT License.

receipt-scanner

The receipt-scanner repository is an AI-Powered Receipt and Invoice Scanner for Laravel that allows users to easily extract structured receipt data from images, PDFs, and emails within their Laravel application using OpenAI. It provides a light wrapper around OpenAI Chat and Completion endpoints, supports various input formats, and integrates with Textract for OCR functionality. Users can install the package via composer, publish configuration files, and use it to extract data from plain text, PDFs, images, Word documents, and web content. The scanned receipt data is parsed into a DTO structure with main classes like Receipt, Merchant, and LineItem.

vue-markdown-render

vue-renderer-markdown is a high-performance tool designed for streaming and rendering Markdown content in real-time. It is optimized for handling incomplete or rapidly changing Markdown blocks, making it ideal for scenarios like AI model responses, live content updates, and real-time Markdown rendering. The tool offers features such as ultra-high performance, streaming-first design, Monaco integration, progressive Mermaid rendering, custom components integration, complete Markdown support, real-time updates, TypeScript support, and zero configuration setup. It solves challenges like incomplete syntax blocks, rapid content changes, cursor positioning complexities, and graceful handling of partial tokens with a streaming-optimized architecture.

nano-graphrag

nano-GraphRAG is a simple, easy-to-hack implementation of GraphRAG that provides a smaller, faster, and cleaner version of the official implementation. It is about 800 lines of code, small yet scalable, asynchronous, and fully typed. The tool supports incremental insert, async methods, and various parameters for customization. Users can replace storage components and LLM functions as needed. It also allows for embedding function replacement and comes with pre-defined prompts for entity extraction and community reports. However, some features like covariates and global search implementation differ from the original GraphRAG. Future versions aim to address issues related to data source ID, community description truncation, and add new components.

slack-mcp-server

Slack MCP Server is a Model Context Protocol server for Slack Workspaces, offering powerful features like Stealth and OAuth Modes, Enterprise Workspaces Support, Channel and Thread Support, Smart History, Search Messages, Safe Message Posting, DM and Group DM support, Embedded user information, Cache support, and multiple transport options. It provides tools like conversations_history, conversations_replies, conversations_add_message, conversations_search_messages, and channels_list for managing messages, threads, adding messages, searching messages, and listing channels. The server also exposes directory resources for workspace metadata access. The tool is designed to enhance Slack workspace functionality and improve user experience.

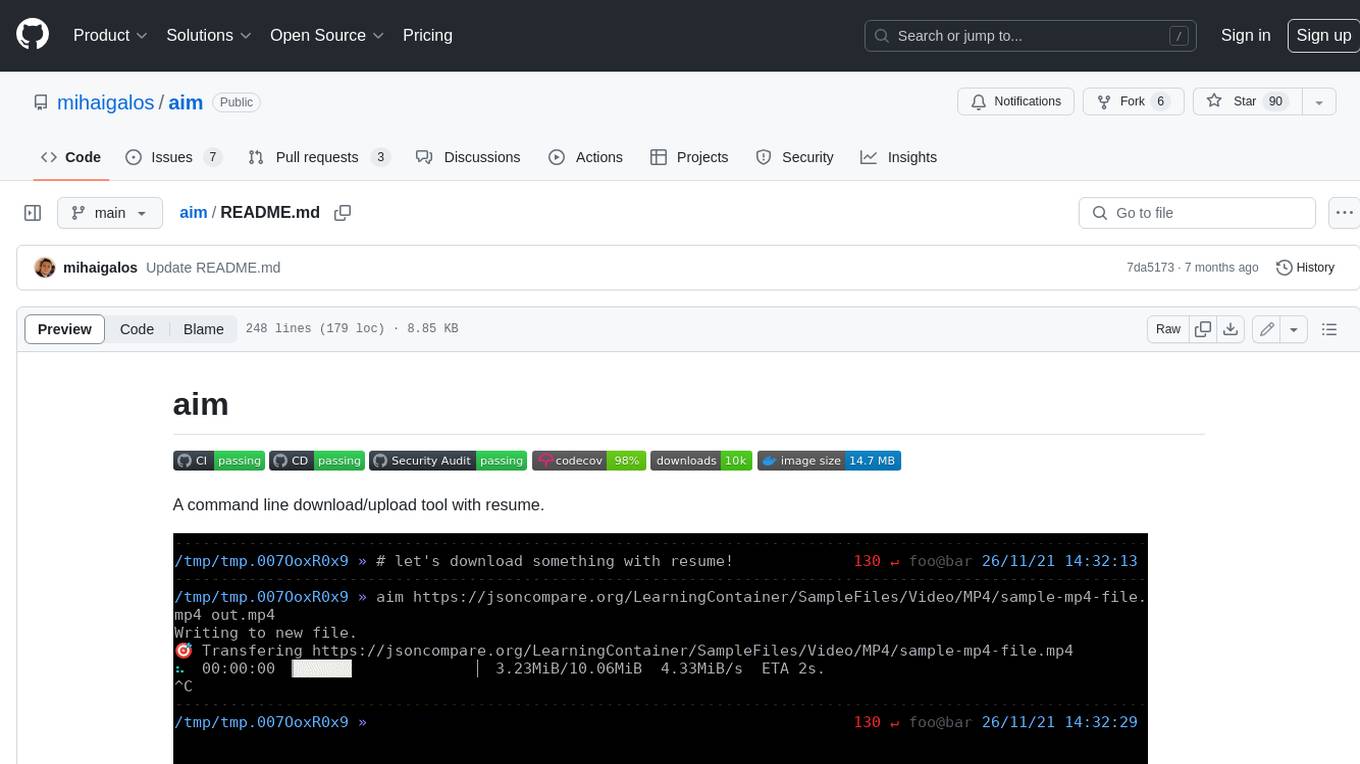

aim

Aim is a command-line tool for downloading and uploading files with resume support. It supports various protocols including HTTP, FTP, SFTP, SSH, and S3. Aim features an interactive mode for easy navigation and selection of files, as well as the ability to share folders over HTTP for easy access from other devices. Additionally, it offers customizable progress indicators and output formats, and can be integrated with other commands through piping. Aim can be installed via pre-built binaries or by compiling from source, and is also available as a Docker image for platform-independent usage.

repomix

Repomix is a powerful tool that packs your entire repository into a single, AI-friendly file. It is designed to format your codebase for easy understanding by AI tools like Large Language Models (LLMs), Claude, ChatGPT, and Gemini. Repomix offers features such as AI optimization, token counting, simplicity in usage, customization options, Git awareness, and security-focused checks using Secretlint. It allows users to pack their entire repository or specific directories/files using glob patterns, and even supports processing remote Git repositories. The tool generates output in plain text, XML, or Markdown formats, with options for including/excluding files, removing comments, and performing security checks. Repomix also provides a global configuration option, custom instructions for AI context, and a security check feature to detect sensitive information in files.

k8sgpt

K8sGPT is a tool for scanning your Kubernetes clusters, diagnosing, and triaging issues in simple English. It has SRE experience codified into its analyzers and helps to pull out the most relevant information to enrich it with AI.

worker-vllm

The worker-vLLM repository provides a serverless endpoint for deploying OpenAI-compatible vLLM models with blazing-fast performance. It supports deploying various model architectures, such as Aquila, Baichuan, BLOOM, ChatGLM, Command-R, DBRX, DeciLM, Falcon, Gemma, GPT-2, GPT BigCode, GPT-J, GPT-NeoX, InternLM, Jais, LLaMA, MiniCPM, Mistral, Mixtral, MPT, OLMo, OPT, Orion, Phi, Phi-3, Qwen, Qwen2, Qwen2MoE, StableLM, Starcoder2, Xverse, and Yi. Users can deploy models using pre-built Docker images or build custom images with specified arguments. The repository also supports OpenAI compatibility for chat completions, completions, and models, with customizable input parameters. Users can modify their OpenAI codebase to use the deployed vLLM worker and access a list of available models for deployment.

chatgpt-subtitle-translator

This tool utilizes the OpenAI ChatGPT API to translate text, with a focus on line-based translation, particularly for SRT subtitles. It optimizes token usage by removing SRT overhead and grouping text into batches, allowing for arbitrary length translations without excessive token consumption while maintaining a one-to-one match between line input and output.

orbiton

Orbiton is a text editor and simple IDE designed with minimal annoyance in mind, not highly configurable to help users stay focused, and supports rapid edit-format-compile cycles. It is suitable for writing git commit messages, editing README.md and TODO.md files, writing Markdown and exporting to HTML or PDF, learning programming languages, editing files within larger projects, solving Advent of Code tasks, and providing a distraction-free environment for writing. The tool offers unique features like smart cursor movement, paste and copy shortcuts, portal for copying lines across files, code building and formatting shortcuts, and more.

laravel-crod

Laravel Crod is a package designed to facilitate the implementation of CRUD operations in Laravel projects. It allows users to quickly generate controllers, models, migrations, services, repositories, views, and requests with various customization options. The package simplifies tasks such as creating resource controllers, making models fillable, querying repositories and services, and generating additional files like seeders and factories. Laravel Crod aims to streamline the process of building CRUD functionalities in Laravel applications by providing a set of commands and tools for developers.

python-tgpt

Python-tgpt is a Python package that enables seamless interaction with over 45 free LLM providers without requiring an API key. It also provides image generation capabilities. The name _python-tgpt_ draws inspiration from its parent project tgpt, which operates on Golang. Through this Python adaptation, users can effortlessly engage with a number of free LLMs available, fostering a smoother AI interaction experience.

ruler

Ruler is a tool designed to centralize AI coding assistant instructions, providing a single source of truth for managing instructions across multiple AI coding tools. It helps in avoiding inconsistent guidance, duplicated effort, context drift, onboarding friction, and complex project structures by automatically distributing instructions to the right configuration files. With support for nested rule loading, Ruler can handle complex project structures with context-specific instructions for different components. It offers features like centralised rule management, nested rule loading, automatic distribution, targeted agent configuration, MCP server propagation, .gitignore automation, and a simple CLI for easy configuration management.

mindcraft

Mindcraft is a project that crafts minds for Minecraft using Large Language Models (LLMs) and Mineflayer. It allows an LLM to write and execute code on your computer, with code sandboxed but still vulnerable to injection attacks. The project requires Minecraft Java Edition, Node.js, and one of several API keys. Users can run tasks to acquire specific items or construct buildings, customize project details in settings.js, and connect to online servers with a Microsoft/Minecraft account. The project also supports Docker container deployment for running in a secure environment.

RepairAgent

RepairAgent is an autonomous LLM-based agent for automated program repair targeting the Defects4J benchmark. It uses an LLM-driven loop to localize, analyze, and fix Java bugs. The tool requires Docker, VS Code with Dev Containers extension, OpenAI API key, disk space of ~40 GB, and internet access. Users can get started with RepairAgent using either VS Code Dev Container or Docker Image. Running RepairAgent involves checking out the buggy project version, autonomous bug analysis, fix candidate generation, and testing against the project's test suite. Users can configure hyperparameters for budget control, repetition handling, commands limit, and external fix strategy. The tool provides output structure, experiment overview, individual analysis scripts, and data on fixed bugs from the Defects4J dataset.

For similar tasks

Revornix

Revornix is an information management tool designed for the AI era. It allows users to conveniently integrate all visible information and generates comprehensive reports at specific times. The tool offers cross-platform availability, all-in-one content aggregation, document transformation & vectorized storage, native multi-tenancy, localization & open-source features, smart assistant & built-in MCP, seamless LLM integration, and multilingual & responsive experience for users.

crssnt

crssnt is a tool that converts RSS/Atom feeds into LLM-friendly Markdown or JSON, simplifying integration of feed content into AI workflows. It supports LLM-optimized conversion, multiple output formats, feed aggregation, and Google Sheet support. Users can access various endpoints for feed conversion and Google Sheet processing, with query parameters for customization. The tool processes user-provided URLs transiently without storing feed data, and can be self-hosted as Firebase Cloud Functions. Contributions are welcome under the MIT License.

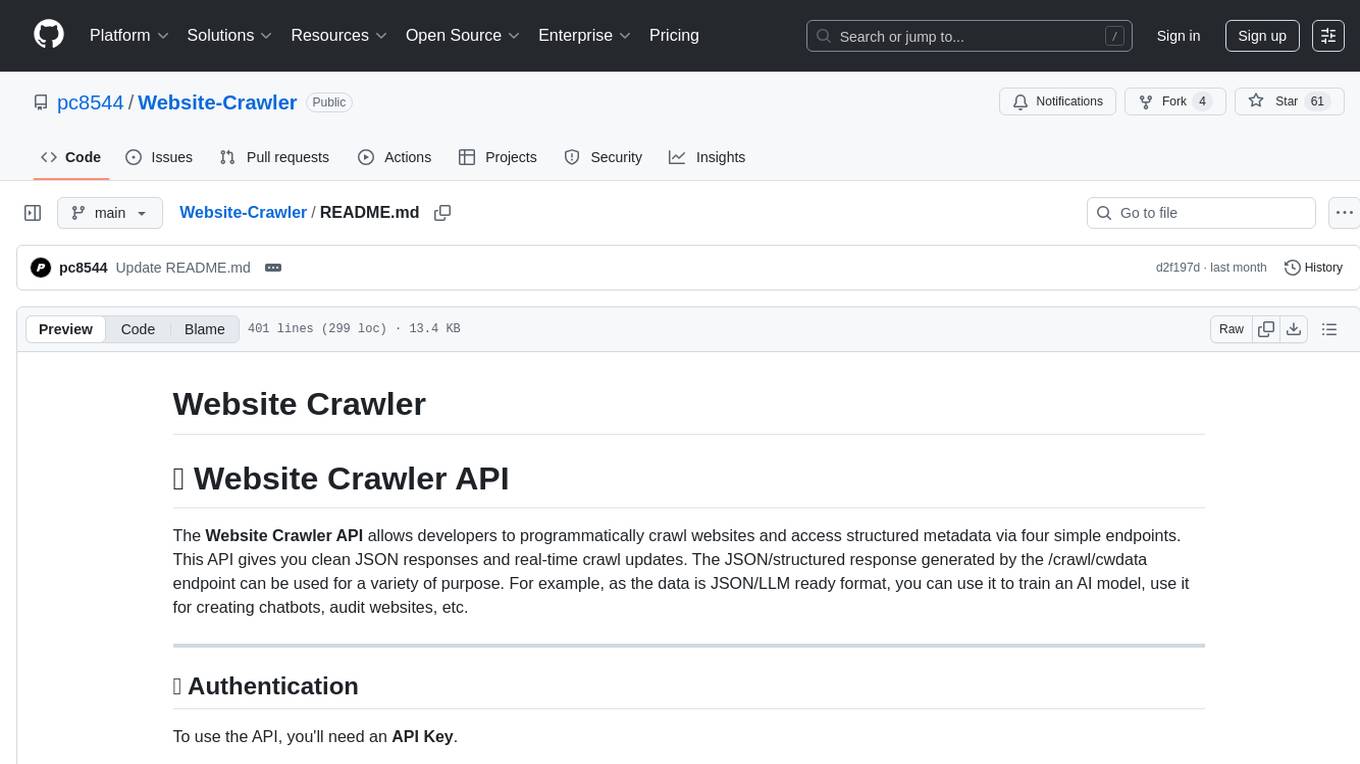

Website-Crawler

Website-Crawler is a tool designed to extract data from websites in an automated manner. It allows users to scrape information such as text, images, links, and more from web pages. The tool provides functionalities to navigate through websites, handle different types of content, and store extracted data for further analysis. Website-Crawler is useful for tasks like web scraping, data collection, content aggregation, and competitive analysis. It can be customized to extract specific data elements based on user requirements, making it a versatile tool for various web data extraction needs.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.