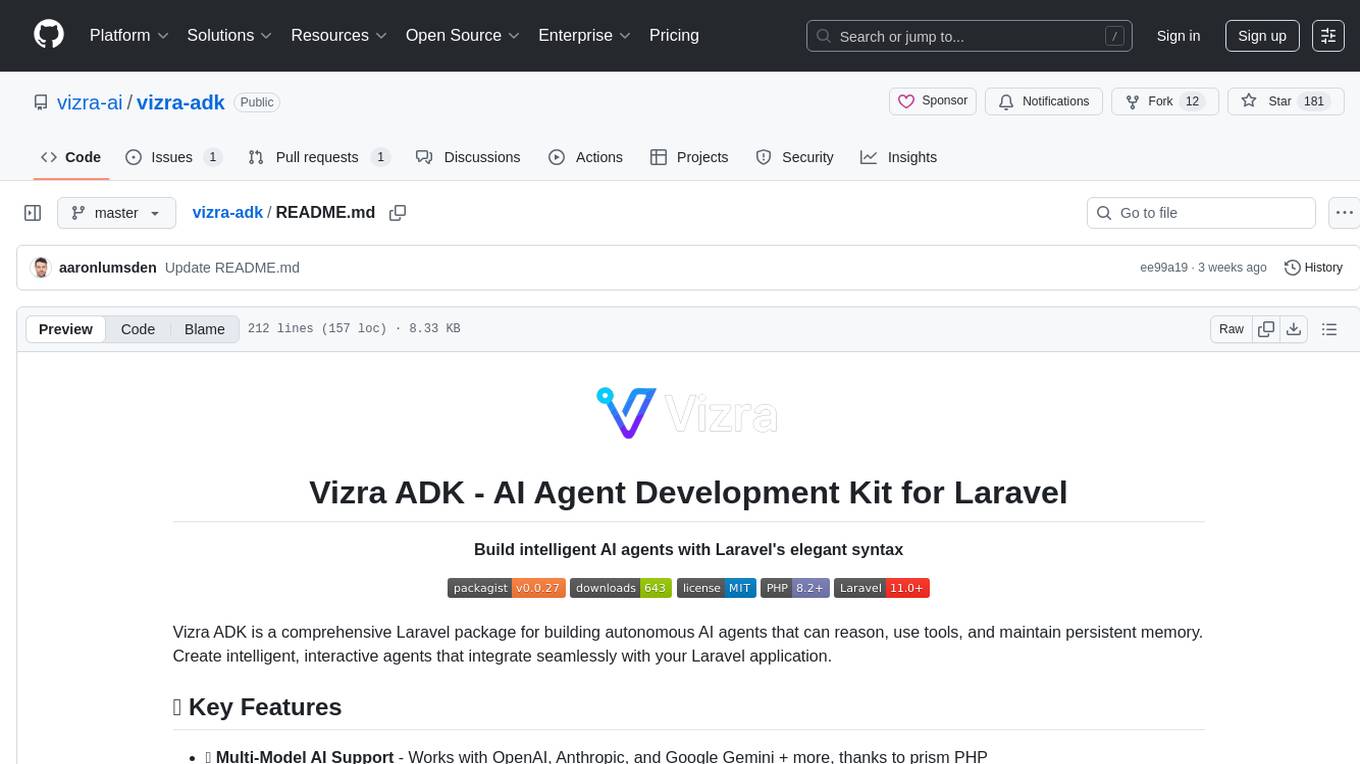

Website-Crawler

Extract data from websites in LLM-ready JSON or CSV format. Crawl or scrape an entire website with Website Crawler.

Stars: 61

Website-Crawler is a tool designed to extract data from websites in an automated manner. It allows users to scrape information such as text, images, links, and more from web pages. The tool provides functionalities to navigate through websites, handle different types of content, and store extracted data for further analysis. Website-Crawler is useful for tasks like web scraping, data collection, content aggregation, and competitive analysis. It can be customized to extract specific data elements based on user requirements, making it a versatile tool for various web data extraction needs.

README:

The Website Crawler API allows developers to programmatically crawl websites and access structured metadata via four simple endpoints. It returns clean JSON responses and real-time crawl updates. The structured JSON response generated by the /crawl/cwdata endpoint can be used for a variety of purposes: because the data is in an LLM-ready JSON format, you can use it to train an AI model, build chatbots, audit websites, and more.
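Because the cwdata output is flat JSON, turning it into CSV takes only a few lines. A minimal sketch, assuming the response shape shown in the /crawl/cwdata sample response (a `status` list of per-page records with string values); the helper `records_to_csv` is hypothetical and not part of the SDK, and the column names are simply keys copied from that sample.

```python
import csv
import io
import json
from typing import Sequence


def records_to_csv(cwdata_json: str, fields: Sequence[str]) -> str:
    """Flatten the per-page records of a /crawl/cwdata response into CSV text.

    Assumes the README sample's shape: a JSON object whose "status" key
    holds a list of flat records with string values.
    """
    records = json.loads(cwdata_json)["status"]
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fields)
    writer.writeheader()
    for record in records:
        # Keep only the requested columns; missing keys become empty cells.
        writer.writerow({name: record.get(name, "") for name in fields})
    return buffer.getvalue()


if __name__ == "__main__":
    sample = '{"status": [{"url": "https://wptls.com", "sc": "200", "nw": "534"}]}'
    print(records_to_csv(sample, ["url", "sc", "nw"]), end="")
```

The `csv` module quotes values that contain commas (such as meta descriptions), so the output stays loadable in spreadsheets and dataframes.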

To use the API, you'll need an API Key.

How to get one:

- Visit websitecrawler.org

- Create an account or log in

- Go to the Settings page to generate your API key

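Base URL: https://www.websitecrawler.org/api

Authenticate (`POST /crawl/authenticate`): obtain an access token through the API. This token must be included in all subsequent requests as a Bearer token in the `Authorization` header.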

Key required in the JSON payload:

- key (string): Your API key

Sample request to get the token:

```shell
curl -X POST https://www.websitecrawler.org/api/crawl/authenticate \
  -H "Content-Type: application/json" \
  -d '{"apiKey": "your_api_key"}'
```

Sample response:

```json
{
  "token": "api_generated_token"
}
```
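A minimal sketch of the same authentication call using only Python's standard library. It assumes the `apiKey` field name from the curl sample (the key list above calls it `key`, so confirm which one the API accepts). The helpers `build_auth_request`, `extract_token` and `auth_header` are hypothetical; the request is built but not sent, so the example runs offline.

```python
import json
import urllib.request

BASE_URL = "https://www.websitecrawler.org/api"


def build_auth_request(api_key: str) -> urllib.request.Request:
    """Build (but do not send) the POST /crawl/authenticate request."""
    body = json.dumps({"apiKey": api_key}).encode("utf-8")
    return urllib.request.Request(
        f"{BASE_URL}/crawl/authenticate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_token(response_body: str) -> str:
    """Read the token from a response shaped like {"token": "..."}."""
    return json.loads(response_body)["token"]


def auth_header(token: str) -> dict:
    """Header the other endpoints expect, per the curl samples."""
    return {"Authorization": f"Bearer {token}"}
```

To actually send the request, pass it to `urllib.request.urlopen()`, decode the body, and hand it to `extract_token()`; keep the token for the `Authorization` header of later calls.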

Start crawling (`POST /crawl/start`): initiate a new crawl for a given domain.

Keys required in the JSON payload:

- url (string, required): Target website (e.g. example.com), i.e. the non-redirecting main URL of the website.
- limit (integer, required): Maximum number of pages to crawl (the free tier is restricted to 100).

Sample request to initiate crawling:

```shell
curl -X POST https://www.websitecrawler.org/api/crawl/start \
  -H "Authorization: Bearer api_generated_token" \
  -H "Content-Type: application/json" \
  -d '{"url": "your_url","limit":"your_limit"}'
```
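Because a crawl reports "Crawling" until it reports "Completed!" (see the sample responses for this endpoint), a client has to poll until the second value appears. A minimal, transport-agnostic sketch: `fetch_status` is a hypothetical stand-in for whatever returns the status string (for example, a wrapper around the SDK's `get_crawl_status()` used in the demos), and `max_polls` is an added safeguard, not an API parameter.

```python
import time
from typing import Callable


def wait_for_completion(
    fetch_status: Callable[[], str],
    poll_seconds: float = 3.0,
    max_polls: int = 200,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll until the crawl reports "Completed!"; return False on timeout.

    fetch_status is a hypothetical callable returning the current status
    string, e.g. "Crawling" or "Completed!".
    """
    for _ in range(max_polls):
        if fetch_status() == "Completed!":  # note the exclamation mark
            return True
        sleep(poll_seconds)
    return False
```

Injecting `sleep` keeps the loop testable without real delays; in production the default `time.sleep` applies.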

Sample response 1 (crawl in progress):

```json
{
  "status": "Crawling"
}
```

Sample response 2 (crawl finished):

```json
{
  "status": "Completed!"
}
```

Get crawl data (`POST /crawl/cwdata`): retrieve the structured crawl output once crawling has completed.

Required key in the JSON payload:

- url (string, required): Target website (e.g. example.com)

Sample request to get data:

```shell
curl -X POST https://www.websitecrawler.org/api/crawl/cwdata \
  -H "Authorization: Bearer api_generated_token" \
  -H "Content-Type: application/json" \
  -d '{"url": "your_url"}'
```
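In the sample cwdata response, link fields such as `internal_links` and `image_links` are single strings with URLs separated by " | ", and duplicates can occur (the logo appears twice in `image_links`). A minimal parsing sketch, assuming that separator and the `status` list shape from the sample; `split_links` and `parse_cwdata` are hypothetical helpers, and other field abbreviations are passed through untouched because their meanings are not documented here.

```python
import json

# Link fields in the sample response hold " | "-separated URLs.
LINK_FIELDS = ("internal_links", "external_links", "image_links", "nofollow_links")


def split_links(value: str) -> list:
    """Split a " | "-separated field into unique URLs, keeping order."""
    parts = [part.strip() for part in value.split(" | ")]
    return list(dict.fromkeys(part for part in parts if part))


def parse_cwdata(response_body: str) -> list:
    """Return one dict per crawled page, with link fields turned into lists."""
    pages = []
    for record in json.loads(response_body)["status"]:
        page = dict(record)
        for field in LINK_FIELDS:
            # Missing or empty fields become empty lists.
            page[field] = split_links(record.get(field, ""))
        pages.append(page)
    return pages
```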

Sample response:

```json
{
  "status": [
    {
      "tt": "WPTLS - WordPress Plugins, themes and related services",
      "np": "12",
      "h1": "",
      "nw": "534",
      "h2": "Why learn HTML when there is WordPress?",
      "h3": "",
      "h4": "",
      "h5": "",
      "atb": "Why learn HTML when there is WordPress?",
      "sc": "200",
      "md": "Reviews, comparison, and collection of top WordPress themes, plugins, related services, and useful WP tips.",
      "elsc": "",
      "textCN": "Websitedata.",
      "d": "",
      "mr": "follow, index",
      "pname": "wptls.com",
      "al": "",
      "cn": "https://wptls.com/",
      "kw": "",
      "url": "https://wptls.com",
      "at": "",
      "external_links": "https://www.facebook.com/wptls",
      "tm": "96",
      "image_links": "https://wptls.com/wp-content/uploads/2021/12/cropped-wptls-logo.png | https://wptls.com/wp-content/uploads/2021/12/cropped-wptls-logo.png | https://wptls.com/wp-content/uploads/2024/02/Spaceship-768x378.jpg | https://wptls.com/wp-content/uploads/2023/12/AdSense-768x612.png | https://wptls.com/wp-content/uploads/2023/12/Exabytes-768x375.jpg | https://wptls.com/wp-content/uploads/2023/10/HTML-768x112.jpg | https://wptls.com/wp-content/uploads/2023/10/Cloudflare-add-site-768x363.png | https://wptls.com/wp-content/uploads/2023/01/Google-Trends-768x363.webp | https://wptls.com/wp-content/uploads/2022/11/Twenty-Twenty-Three-768x351.webp | https://wptls.com/wp-content/uploads/2022/11/Broken-Link-Checker-768x223.webp | https://wptls.com/wp-content/uploads/2022/11/wordpress_logo.webp | https://wptls.com/wp-content/uploads/2022/11/footer-css-768x327.webp",
      "internal_links": "https://wptls.com/why-learn-html-when-there-is-wordpress/ | https://wptls.com/customize-footer-wordpress/",
      "nofollow_links": ""
    }
  ]
}
```

Get current URL (`POST /crawl/currentURL`): get the last crawled/processed URL.

Required key in the JSON payload:

- url (string, required): Target website (e.g. example.com), i.e. the non-redirecting main URL of the website.

Sample request to get the last crawled/processed URL:

```shell
curl -X POST https://www.websitecrawler.org/api/crawl/currentURL \
  -H "Authorization: Bearer api_generated_token" \
  -H "Content-Type: application/json" \
  -d '{"url": "your_url"}'
```
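The cwdata, currentURL and clear endpoints all take the same payload (`{"url": ...}`) plus the Bearer token, so one builder can serve all three. A minimal standard-library sketch based on the curl samples in this README; `build_url_request` and `URL_ONLY_ENDPOINTS` are hypothetical names, and the request is only constructed, not sent.

```python
import json
import urllib.request

BASE_URL = "https://www.websitecrawler.org/api"
# Endpoints from this README whose JSON payload is just {"url": ...}.
URL_ONLY_ENDPOINTS = {"cwdata", "currentURL", "clear"}


def build_url_request(endpoint: str, site_url: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a POST /crawl/<endpoint> request."""
    if endpoint not in URL_ONLY_ENDPOINTS:
        raise ValueError(f"unsupported endpoint: {endpoint}")
    return urllib.request.Request(
        f"{BASE_URL}/crawl/{endpoint}",
        data=json.dumps({"url": site_url}).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

The `start` endpoint is deliberately rejected because it also needs a `limit` key.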

Sample response:

```json
{
  "currentURL": "https://wptls.com"
}
```

Clear job (`POST /crawl/clear`): clear the previous job if you want to rerun the crawler.

Required key in the JSON payload:

- url (string, required): Target website (e.g. example.com), i.e. the non-redirecting main URL of the website.

Sample request to clear the job:

```shell
curl -X POST https://www.websitecrawler.org/api/crawl/clear \
  -H "Authorization: Bearer api_generated_token" \
  -H "Content-Type: application/json" \
  -d '{"url": "your_url"}'
```
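The sample clear response ("Job cannot be cleared as the URL of the entered website is being crawled.") shows that a job cannot be cleared mid-crawl. A small client-side guard can skip the pointless request. A sketch only: `clear_if_idle`, `get_status` and `clear` are hypothetical callables wrapping the status lookup and the `/crawl/clear` call.

```python
from typing import Callable


def clear_if_idle(get_status: Callable[[], str], clear: Callable[[], str]) -> str:
    """Call clear() only when the site is not currently being crawled.

    get_status and clear are hypothetical callables; clear() is assumed
    to return the "clearStatus" message from the API.
    """
    if get_status() == "Crawling":
        # The API refuses to clear a running job, so skip the request.
        return "skipped: crawl in progress"
    return clear()
```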

Sample response (the site is still being crawled):

```json
{
  "clearStatus": "Job cannot be cleared as the URL of the entered website is being crawled."
}
```

The Python, Java, and Node.js demos show how to use the WebsiteCrawlerSDK to interact with websitecrawler.org, enabling automated URL submission, status tracking, and retrieval of crawl data via its API.

Python: install the Website Crawler SDK:

```shell
pip install website-crawler-sdk
```

Change YOUR_API_KEY, YOUR_LIMIT and YOUR_URL in the following demo script and run it. The script submits a URL to websitecrawler.org, reports the crawl status and the URL currently being processed in real time, and retrieves the structured data once crawling has finished.

```python
import time

from website_crawler_sdk import WebsiteCrawlerConfig, WebsiteCrawlerClient

"""
Author: Pramod Choudhary (websitecrawler.org)
Version: 1.1
Date: July 10, 2025
"""

# Replace with your actual API key, target URL, and limit
YOUR_API_KEY = "YOUR_API_KEY"  # Your API key goes here
URL = "YOUR_URL"  # Enter a non-redirecting URL/domain with https or http
LIMIT = YOUR_LIMIT  # Change YOUR_LIMIT to the maximum number of pages (an integer)


def main():
    cfg = WebsiteCrawlerConfig(YOUR_API_KEY)
    client = WebsiteCrawlerClient(cfg)

    # Submit the URL and limit to websitecrawler.org for crawling
    client.submit_url_to_website_crawler(URL, LIMIT)

    while True:
        task_status = client.get_task_status()  # Data is retrieved only while task_status is true
        print(f"{task_status} << task status")
        time.sleep(2)  # Wait for 2 seconds

        if not task_status:
            break

        if task_status:
            status = client.get_crawl_status()  # Live crawl status
            currenturl = client.get_current_url()  # URL currently being processed
            data = client.get_crawl_data()  # Structured data, available once crawling has completed

            if status:
                print(f"Current Status:: {status}")

                if status == "Crawling":  # "Crawling" is one of the statuses
                    print(f"Current URL:: {currenturl}")

                if status == "Completed!":  # "Completed!" (with the exclamation mark) is another
                    print("Task has been completed... closing the loop and getting the data...")

                    if data:
                        print(f"JSON Data:: {data}")
                        time.sleep(20)  # Give extra time for a large JSON response
                        break

    print("Job over")


if __name__ == "__main__":
    main()
```
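The demo's `while True` loop never gives up if a crawl stalls. A sketch of the same logic with an upper bound, assuming only the SDK method names the demo already calls; `run_crawl`, `max_polls` and the injectable `sleep` are hypothetical additions, and passing a stub object as `client` lets the function run without network access.

```python
import time


def run_crawl(client, url, limit, poll_seconds=2.0, max_polls=300, sleep=time.sleep):
    """Submit a crawl and poll it with an upper bound on the number of polls.

    `client` must expose the method names used in the SDK demo.
    Returns the crawl data, or None if the task stops or max_polls runs out.
    """
    client.submit_url_to_website_crawler(url, limit)
    for _ in range(max_polls):
        if not client.get_task_status():
            return None  # same exit condition as the demo's `if not task_status`
        status = client.get_crawl_status()
        if status == "Crawling":
            print(f"Current URL:: {client.get_current_url()}")
        elif status == "Completed!":
            data = client.get_crawl_data()
            if data:  # like the demo, keep polling until data is non-empty
                return data
        sleep(poll_seconds)
    return None
```

With a real `WebsiteCrawlerClient`, call `run_crawl(client, URL, LIMIT)` in place of the demo's loop.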

Features:

- Submit any website URL to be crawled

- Track crawl status in real-time

- View current URL being crawled

- Retrieve JSON-formatted crawl data on completion

Requirements (Java demo):

- Java 11 or higher

- Maven build system

- API key from WebsiteCrawler.org

Download the jar file WebsiteCrawlerSDK-Java-1.0.jar and add it as a dependency in your Java project. Create a WebsiteCrawlerConfig object as shown in the following code, pass it to WebsiteCrawlerClient, and use the WebsiteCrawlerClient object to call the methods.

```java
WebsiteCrawlerConfig config = new WebsiteCrawlerConfig("YOUR_API_KEY");
WebsiteCrawlerClient crawler = new WebsiteCrawlerClient(config);
```

Full demo:

```java
package wc.WebsiteCrawlerAPIUsageDemo;

import wc.websitecrawlersdk.WebsiteCrawlerClient;
import wc.websitecrawlersdk.WebsiteCrawlerConfig;

/**
 *
 * @author Pramod
 */
public class WebsiteCrawlerAPIUsageDemo {

    public static void main(String[] args) throws InterruptedException {
        String status;
        String currenturl;
        String data;

        WebsiteCrawlerConfig cfg = new WebsiteCrawlerConfig(YOUR_API_KEY); // replace YOUR_API_KEY with your API key
        WebsiteCrawlerClient client = new WebsiteCrawlerClient(cfg);
        client.submitUrlToWebsiteCrawler(URL, LIMIT); // replace URL with the site to crawl and LIMIT with the maximum number of pages

        boolean taskStatus;
        while (true) {
            taskStatus = client.getTaskStatus(); // getTaskStatus() should be true before you call any other methods
            System.out.println(taskStatus + "<<task status");
            Thread.sleep(9000);

            if (taskStatus) {
                status = client.getCrawlStatus(); // returns the live crawl status
                currenturl = client.getCurrentURL(); // returns the URL being processed by WebsiteCrawler.org
                data = client.getcwData(); // returns the JSON array of the website data

                System.out.println("Crawl status::");
                if (status != null) {
                    System.out.println(status);
                }
                if (status != null && status.equals("Crawling")) { // Crawling: the crawl job is in progress
                    System.out.println("Current URL::" + currenturl);
                }
                if (status != null && status.equals("Completed!")) { // Completed!: the crawl job has completed successfully
                    System.out.println("Task has been completed.. closing the while loop");
                    if (data != null) {
                        System.out.println("Json Data::" + data);
                        Thread.sleep(20000); // JSON data might be huge; give extra time before exiting
                        break; // exits the while (true) loop
                    }
                }
            }
        }
        System.out.println("job over");
    }
}
```

Node.js: install the Website Crawler SDK:

```shell
npm i website-crawler-sdk
```

Change YOUR_API_KEY, YOUR_LIMIT and YOUR_URL in the following demo script and run it. The script submits a URL to websitecrawler.org, reports the crawl status and the URL currently being processed in real time, and retrieves the structured data once crawling has finished.

```javascript
const { WebsiteCrawlerConfig, WebsiteCrawlerClient } = require('website-crawler-sdk');

const config = new WebsiteCrawlerConfig('YOUR_API_KEY');
const client = new WebsiteCrawlerClient(config);

client.submitUrlToWebsiteCrawler('YOUR_URL', 'YOUR_LIMIT');

// Poll every 3 seconds until the crawl reports "Completed!"
const intervalId = setInterval(() => {
  const status = client.getCrawlStatus();
  console.log('Status:', status);
  console.log('Current URL:', client.getCurrentURL());

  if (status === 'Completed!') {
    console.log('Crawl Data:', client.getCrawlData());
    console.log('Job completed...');
    clearInterval(intervalId);
  }
}, 3000);
```

This section highlights how the XML-Sitemap-Generator project uses the websitecrawler.org API to automate XML sitemap generation.

The following steps outline the flow between the Website Crawler API and the sitemap generation logic:

- Start crawling
  - Use the `crawl/start` endpoint to initiate crawling of your website: https://www.websitecrawler.org/api/crawl/start?url=example.com&limit=100&key=YOUR_API_KEY
- Fetch crawled data
  - Once crawling is complete, retrieve the data using: https://www.websitecrawler.org/api/crawl/cwdata?url=example.com&key=YOUR_API_KEY
  - The response includes structured metadata (titles, links, status codes, etc.) in JSON format.
- Process and transform
  - The XML Sitemap Generator parses the response and extracts valid URLs.
- Generate sitemap
  - The extracted URLs are then converted into a compliant `sitemap.xml` for SEO and better search engine indexing.
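The "process and transform" and "generate sitemap" steps can be sketched in a few lines. This is not the XML-Sitemap-Generator's actual code; it is a minimal illustration that assumes page records shaped like the cwdata sample and assumes (not documented here) that `sc` holds the HTTP status code and `mr` the meta robots value. `build_sitemap` is a hypothetical helper; the namespace is the standard sitemaps.org one.

```python
from xml.sax.saxutils import escape

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages) -> str:
    """Build sitemap.xml text from crawled page records (dicts).

    Assumptions, not documented API semantics: "sc" holds the HTTP status
    code and "mr" the meta robots value, as the sample response suggests.
    """
    lines = [
        '<?xml version="1.0" encoding="UTF-8"?>',
        f'<urlset xmlns="{SITEMAP_NS}">',
    ]
    seen = set()
    for page in pages:
        url = page.get("url", "")
        if not url or url in seen:
            continue  # skip empty and duplicate URLs
        if page.get("sc") != "200":
            continue  # skip redirects, errors, etc.
        if "noindex" in page.get("mr", "").lower():
            continue  # skip pages that ask not to be indexed
        seen.add(url)
        # escape() turns "&" into "&amp;", as XML requires inside <loc>.
        lines.append(f"  <url><loc>{escape(url)}</loc></url>")
    lines.append("</urlset>")
    return "\n".join(lines)
```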

Check out the full implementation here:

🔗 XML-Sitemap-Generator

For best results, ensure your API key is valid and your domain permits crawling.

## 👋 Feedback & Support

Found a bug or need help? Open an issue or connect via websitecrawler.org.


Alternative AI tools for Website-Crawler

Similar Open Source Tools


waidrin

Waidrin is a powerful web scraping tool that allows users to easily extract data from websites. It provides a user-friendly interface for creating custom web scraping scripts and supports various data formats for exporting the extracted data. With Waidrin, users can automate the process of collecting information from multiple websites, saving time and effort. The tool is designed to be flexible and scalable, making it suitable for both beginners and advanced users in the field of web scraping.

onlook

Onlook is a web scraping tool that allows users to extract data from websites easily and efficiently. It provides a user-friendly interface for creating web scraping scripts and supports various data formats for exporting the extracted data. With Onlook, users can automate the process of collecting information from multiple websites, saving time and effort. The tool is designed to be flexible and customizable, making it suitable for a wide range of web scraping tasks.

HyperAgent

HyperAgent is a powerful tool for automating repetitive tasks in web scraping and data extraction. It provides a user-friendly interface to create custom web scraping scripts without the need for extensive coding knowledge. With HyperAgent, users can easily extract data from websites, transform it into structured formats, and save it for further analysis. The tool supports various data formats and offers scheduling options for automated data extraction at regular intervals. HyperAgent is suitable for individuals and businesses looking to streamline their data collection processes and improve efficiency in extracting information from the web.

trafilatura

Trafilatura is a Python package and command-line tool for gathering text on the Web and simplifying the process of turning raw HTML into structured, meaningful data. It includes components for web crawling, downloads, scraping, and extraction of main texts, metadata, and comments. The tool aims to focus on actual content, avoid noise, and make sense of data and metadata. It is robust, fast, and widely used by companies and institutions. Trafilatura outperforms other libraries in text extraction benchmarks and offers various features like support for sitemaps, parallel processing, configurable extraction of key elements, multiple output formats, and optional add-ons. The tool is actively maintained with regular updates and comprehensive documentation.

crawlee-python

Crawlee-python is a web scraping and browser automation library that covers crawling and scraping end-to-end, helping users build reliable scrapers fast. It allows users to crawl the web for links, scrape data, and store it in machine-readable formats without worrying about technical details. With rich configuration options, users can customize almost any aspect of Crawlee to suit their project's needs.

proxyless-llm-websearch

Proxyless-LLM-WebSearch is a tool that enables users to perform large language model-based web search without the need for proxies. It leverages state-of-the-art language models to provide accurate and efficient web search results. The tool is designed to be user-friendly and accessible for individuals looking to conduct web searches at scale. With Proxyless-LLM-WebSearch, users can easily search the web using natural language queries and receive relevant results in a timely manner. This tool is particularly useful for researchers, data analysts, content creators, and anyone interested in leveraging advanced language models for web search tasks.

cellm

Cellm is an Excel extension that allows users to leverage Large Language Models (LLMs) like ChatGPT within cell formulas. It enables users to extract AI responses to text ranges, making it useful for automating repetitive tasks that involve data processing and analysis. Cellm supports various models from Anthropic, Mistral, OpenAI, and Google, as well as locally hosted models via Llamafiles, Ollama, or vLLM. The tool is designed to simplify the integration of AI capabilities into Excel for tasks such as text classification, data cleaning, content summarization, entity extraction, and more.

LLM_Web_search

LLM_Web_search project gives local LLMs the ability to search the web by outputting a specific command. It uses regular expressions to extract search queries from model output and then utilizes duckduckgo-search to search the web. LangChain's Contextual compression and Okapi BM25 or SPLADE are used to extract relevant parts of web pages in search results. The extracted results are appended to the model's output.

uBlockOrigin-HUGE-AI-Blocklist

A huge blocklist of sites containing AI generated content (~950 sites) for cleaning image search engines with uBlock Origin or uBlacklist. Includes hosts file for pi-hole/adguard. Provides instructions for importing blocklists and additional lists for specific content. Allows users to create allowlists and customize filtering based on keywords. Offers tips and tricks for advanced filtering and comparison between uBlock Origin and uBlacklist implementations.

databerry

Chaindesk is a no-code platform that allows users to easily set up a semantic search system for personal data without technical knowledge. It supports loading data from various sources such as raw text, web pages, and files (Word, Excel, PowerPoint, PDF, Markdown, Plain Text), with support for websites, Notion, and Airtable upcoming. The platform offers a user-friendly interface for managing datastores, querying data via a secure API endpoint, and auto-generating ChatGPT Plugins for each datastore. Chaindesk utilizes a Vector Database (Qdrant), OpenAI's text-embedding-ada-002 for embeddings, and has a chunk size of 1024 tokens. The technology stack includes Next.js, Joy UI, LangchainJS, PostgreSQL, Prisma, and Qdrant, inspired by the ChatGPT Retrieval Plugin.

ag2

Ag2 is a lightweight and efficient tool for generating automated reports from data sources. It simplifies the process of creating reports by allowing users to define templates and automate the data extraction and formatting. With Ag2, users can easily generate reports in various formats such as PDF, Excel, and CSV, saving time and effort in manual report generation tasks.

oramacore

OramaCore is a database designed for AI projects, answer engines, copilots, and search functionalities. It offers features such as a full-text search engine, vector database, LLM interface, and various utilities. The tool is currently under active development and not recommended for production use due to potential API changes. OramaCore aims to provide a comprehensive solution for managing data and enabling advanced search capabilities in AI applications.

atlas

Atlas is a powerful data visualization tool that allows users to create interactive charts and graphs from their datasets. It provides a user-friendly interface for exploring and analyzing data, making it ideal for both beginners and experienced data analysts. With Atlas, users can easily customize the appearance of their visualizations, add filters and drill-down capabilities, and share their insights with others. The tool supports a wide range of data formats and offers various chart types to suit different data visualization needs. Whether you are looking to create simple bar charts or complex interactive dashboards, Atlas has you covered.

vizra-adk

Vizra-ADK is a data visualization tool that allows users to create interactive and customizable visualizations for their data. With a user-friendly interface and a wide range of customization options, Vizra-ADK makes it easy for users to explore and analyze their data in a visually appealing way. Whether you're a data scientist looking to create informative charts and graphs, or a business analyst wanting to present your findings in a compelling way, Vizra-ADK has you covered. The tool supports various data formats and provides features like filtering, sorting, and grouping to help users make sense of their data quickly and efficiently.

udm14

udm14 is a basic website designed to facilitate easy searches on Google with the &udm=14 parameter, ensuring AI-free results without knowledge panels. The tool simplifies access to these specific search results buried within Google's interface, providing a straightforward solution for users seeking this functionality.

For similar tasks

Revornix

Revornix is an information management tool designed for the AI era. It allows users to conveniently integrate all visible information and generates comprehensive reports at specific times. The tool offers cross-platform availability, all-in-one content aggregation, document transformation & vectorized storage, native multi-tenancy, localization & open-source features, smart assistant & built-in MCP, seamless LLM integration, and multilingual & responsive experience for users.

crssnt

crssnt is a tool that converts RSS/Atom feeds into LLM-friendly Markdown or JSON, simplifying integration of feed content into AI workflows. It supports LLM-optimized conversion, multiple output formats, feed aggregation, and Google Sheet support. Users can access various endpoints for feed conversion and Google Sheet processing, with query parameters for customization. The tool processes user-provided URLs transiently without storing feed data, and can be self-hosted as Firebase Cloud Functions. Contributions are welcome under the MIT License.


temporal-ai-agent

Temporal AI Agent is a demo showcasing a multi-turn conversation with an AI agent running inside a Temporal workflow. The agent collects information towards a goal using a simple DSL input. It is currently set up to search for events, book flights around those events, and create an invoice for those flights. The AI agent responds with clarifications and prompts for missing information. Users can configure the agent to use ChatGPT 4o or a local LLM via Ollama. The tool requires Rapidapi key for sky-scrapper to find flights and a Stripe key for creating invoices. Users can customize the agent by modifying tool and goal definitions in the codebase.

skyvern

Skyvern automates browser-based workflows using LLMs and computer vision. It provides a simple API endpoint to fully automate manual workflows, replacing brittle or unreliable automation solutions. Traditional approaches to browser automations required writing custom scripts for websites, often relying on DOM parsing and XPath-based interactions which would break whenever the website layouts changed. Instead of only relying on code-defined XPath interactions, Skyvern adds computer vision and LLMs to the mix to parse items in the viewport in real-time, create a plan for interaction and interact with them. This approach gives us a few advantages: 1. Skyvern can operate on websites it’s never seen before, as it’s able to map visual elements to actions necessary to complete a workflow, without any customized code 2. Skyvern is resistant to website layout changes, as there are no pre-determined XPaths or other selectors our system is looking for while trying to navigate 3. Skyvern leverages LLMs to reason through interactions to ensure we can cover complex situations. Examples include: 1. If you wanted to get an auto insurance quote from Geico, the answer to a common question “Were you eligible to drive at 18?” could be inferred from the driver receiving their license at age 16 2. If you were doing competitor analysis, it’s understanding that an Arnold Palmer 22 oz can at 7/11 is almost definitely the same product as a 23 oz can at Gopuff (even though the sizes are slightly different, which could be a rounding error!)

airbyte-connectors

This repository contains Airbyte connectors used in Faros and Faros Community Edition platforms as well as Airbyte Connector Development Kit (CDK) for JavaScript/TypeScript.

open-parse

Open Parse is a Python library for visually discerning document layouts and chunking them effectively. It is designed to fill the gap in open-source libraries for handling complex documents. Unlike text splitting, which converts a file to raw text and slices it up, Open Parse visually analyzes documents for superior LLM input. It also supports basic markdown for parsing headings, bold, and italics, and has high-precision table support, extracting tables into clean Markdown formats with accuracy that surpasses traditional tools. Open Parse is extensible, allowing users to easily implement their own post-processing steps. It is also intuitive, with great editor support and completion everywhere, making it easy to use and learn.

unstract

Unstract is a no-code platform that enables users to launch APIs and ETL pipelines to structure unstructured documents. With Unstract, users can go beyond co-pilots by enabling machine-to-machine automation. Unstract's Prompt Studio provides a simple, no-code approach to creating prompts for LLMs, vector databases, embedding models, and text extractors. Users can then configure Prompt Studio projects as API deployments or ETL pipelines to automate critical business processes that involve complex documents. Unstract supports a wide range of LLM providers, vector databases, embeddings, text extractors, ETL sources, and ETL destinations, providing users with the flexibility to choose the best tools for their needs.

For similar jobs


OAD

OAD is a powerful open-source tool for analyzing and visualizing data. It provides a user-friendly interface for exploring datasets, generating insights, and creating interactive visualizations. With OAD, users can easily import data from various sources, clean and preprocess data, perform statistical analysis, and create customizable visualizations to communicate findings effectively. Whether you are a data scientist, analyst, or researcher, OAD can help you streamline your data analysis workflow and uncover valuable insights from your data.

sqlcoder

Defog's SQLCoder is a family of state-of-the-art large language models (LLMs) designed for converting natural language questions into SQL queries. It outperforms popular models such as gpt-4 and gpt-4-turbo on SQL generation tasks. SQLCoder has been trained on more than 20,000 human-curated questions based on 10 different schemas, and the model weights are licensed under CC BY-SA 4.0. Users can interact with SQLCoder through the 'transformers' library and run queries using the 'sqlcoder launch' command in the terminal. The tool has been tested on NVIDIA GPUs with more than 16GB VRAM and Apple Silicon devices with some limitations. SQLCoder offers a demo on their website and supports quantized versions of the model for consumer GPUs with sufficient memory.

TableLLM

TableLLM is a large language model designed for efficient tabular data manipulation tasks in real office scenarios. It can generate code solutions or direct text answers for tasks like insert, delete, update, query, merge, and chart operations on tables embedded in spreadsheets or documents. The model has been fine-tuned based on CodeLlama-7B and 13B, offering two scales: TableLLM-7B and TableLLM-13B. Evaluation results show its performance on benchmarks like WikiSQL, Spider, and self-created table operation benchmark. Users can use TableLLM for code and text generation tasks on tabular data.

mlcraft

Synmetrix (prev. MLCraft) is an open source data engineering platform and semantic layer for centralized metrics management. It provides a complete framework for modeling, integrating, transforming, aggregating, and distributing metrics data at scale. Key features include data modeling and transformations, semantic layer for unified data model, scheduled reports and alerts, versioning, role-based access control, data exploration, caching, and collaboration on metrics modeling. Synmetrix leverages Cube (Cube.js) for flexible data models that consolidate metrics from various sources, enabling downstream distribution via a SQL API for integration into BI tools, reporting, dashboards, and data science. Use cases include data democratization, business intelligence, embedded analytics, and enhancing accuracy in data handling and queries. The tool speeds up data-driven workflows from metrics definition to consumption by combining data engineering best practices with self-service analytics capabilities.

data-scientist-roadmap2024

The Data Scientist Roadmap2024 provides a comprehensive guide to mastering essential tools for data science success. It includes programming languages, machine learning libraries, cloud platforms, and concepts categorized by difficulty. The roadmap covers a wide range of topics from programming languages to machine learning techniques, data visualization tools, and DevOps/MLOps tools. It also includes web development frameworks and specific concepts like supervised and unsupervised learning, NLP, deep learning, reinforcement learning, and statistics. Additionally, it delves into DevOps tools like Airflow and MLFlow, data visualization tools like Tableau and Matplotlib, and other topics such as ETL processes, optimization algorithms, and financial modeling.

VMind

VMind is an open-source solution for intelligent visualization, providing an intelligent chart component based on LLM by VisActor. It allows users to create chart narrative works with natural language interaction, edit charts through dialogue, and export narratives as videos or GIFs. The tool is easy to use, scalable, supports various chart types, and offers one-click export functionality. Users can customize chart styles, specify themes, and aggregate data using LLM models. VMind aims to enhance efficiency in creating data visualization works through dialogue-based editing and natural language interaction.

quadratic

Quadratic is a modern multiplayer spreadsheet application that integrates Python, AI, and SQL functionalities. It aims to streamline team collaboration and data analysis by enabling users to pull data from various sources and utilize popular data science tools. The application supports building dashboards, creating internal tools, mixing data from different sources, exploring data for insights, visualizing Python workflows, and facilitating collaboration between technical and non-technical team members. Quadratic is built with Rust + WASM + WebGL to ensure seamless performance in the browser, and it offers features like WebGL Grid, local file management, Python and Pandas support, Excel formula support, multiplayer capabilities, charts and graphs, and team support. The tool is currently in Beta with ongoing development for additional features like JS support, SQL database support, and AI auto-complete.